Professional Documents

Culture Documents

In This Usecase We Will Try To Answer Another Interesting Business Question: Are The Most Viewed

Uploaded by

Sqaure PodOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

In This Usecase We Will Try To Answer Another Interesting Business Question: Are The Most Viewed

Uploaded by

Sqaure PodCopyright:

Available Formats

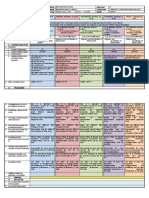

Note: You need CDH for this exercise.

In this usecase we will try to answer another interesting business question: are the most viewed

products also the most sold?

Since Hadoop can store unstructured and semi-structured data alongside structured data without

remodeling an entire database, you can just as well ingest, store, and process web log events. Let's

find out what site visitors have viewed the most.

For this, you need the web clickstream data. The most common way to ingest web clickstream is to

use Apache Flume. Flume is a scalable real-time ingest framework that allows you to route, filter,

aggregate, and do "mini-operations" on data on its way in to the scalable processing platform.

But for this usecase, we will use sample access log data which is @

/opt/examples/log_data/access.log.2

1. Let's move this data from the local filesystem, into HDFS

(/user/hive/warehouse/original_access_logs).

2. Now let’s build an intermediate table in Hive. Create an external table with name

: intermediate_access_logs, fields (ip STRING,

date STRING, method STRING, url STRING, http_version STRING, code1 STRING, code2

STRING, dash STRING, user_agent STRING),use serde as

(org.apache.hadoop.hive.contrib.serde2.RegexSerDe) with (([^ ]*) - - \\[([^\\]]*)\\] "([^\ ]*) ([^\ ]*)

([^\ ]*)" (\\d*) (\\d*) "([^"]*)" "([^"]*)") as input regex and (%1$$s %2$$s %3$$s %4$$s %5$$s

%6$$s %7$$s %8$$s %9$$s) as output string format and

use /user/hive/warehouse/original_access_logs as the location. I know there's lot going on here,

let me explain. Here we are creating a hive table to load data from an unstructured log file. So

how do we parse an unstructured file, by using Regex (short for Regular expressions). So, we

need to tell hive that we are going to parse it using a regex and thats where RegexSerDe and

input regex comes into picture. Well then why do we need output string format? you should use

this whenever you have regex to tell hive which columns you want to use from the regex. hope

this helps :-) If not watch the video.

3. Now let’s create another table and load the data from the table we created in step 2. Name

it tokenized_access_logs, fields as (ip STRING,

date STRING, method STRING, url STRING, http_version STRING, code1 STRING, code2

STRING, dash STRING, user_agent STRING), and if you notice data in previous table are

delimited by ",", so use that and location would be

(/user/hive/warehouse/tokenized_access_logs). Once you create this, load the data.

4. Final step is to query from the table we created to find out answer to our question. (hint: All you

must do is group by on url and find the count. Make sure url contains the word product) and then

compare these results with the previous usecase where we queried the most sold product and

check if you find anything odd.

You might also like

- Python Advanced Programming: The Guide to Learn Python Programming. Reference with Exercises and Samples About Dynamical Programming, Multithreading, Multiprocessing, Debugging, Testing and MoreFrom EverandPython Advanced Programming: The Guide to Learn Python Programming. Reference with Exercises and Samples About Dynamical Programming, Multithreading, Multiprocessing, Debugging, Testing and MoreNo ratings yet

- Lab 5 Correlate Structured W Unstructured DataDocument5 pagesLab 5 Correlate Structured W Unstructured DataVinNo ratings yet

- Hive Lecture NotesDocument17 pagesHive Lecture NotesYuvaraj V, Assistant Professor, BCA100% (1)

- Trắc Nghiệm Big dataDocument69 pagesTrắc Nghiệm Big dataMinhNo ratings yet

- Big DataDocument17 pagesBig DatagtfhbmnvhNo ratings yet

- BDA Lab Assignment 4 PDFDocument21 pagesBDA Lab Assignment 4 PDFparth shahNo ratings yet

- Big Data TestingDocument34 pagesBig Data Testingabhi16101100% (1)

- Hive InterviewDocument17 pagesHive Interviewmihirhota75% (4)

- Apache Hive Optimization Techniques - 1 - Towards Data ScienceDocument8 pagesApache Hive Optimization Techniques - 1 - Towards Data SciencemydummNo ratings yet

- Real Time Hadoop Interview Questions From Various InterviewsDocument6 pagesReal Time Hadoop Interview Questions From Various InterviewsSaurabh GuptaNo ratings yet

- HiveDocument65 pagesHiveApurvaNo ratings yet

- Data Operations HiveDocument26 pagesData Operations Hivedamannaughty1No ratings yet

- Kick-Starter Kit For BigData DevelopersDocument7 pagesKick-Starter Kit For BigData Developerskim jong unNo ratings yet

- Cloudera Msazure Hadoop Deployment GuideDocument39 pagesCloudera Msazure Hadoop Deployment GuideKristofNo ratings yet

- Big Data Analytics: Essential Hadoop ToolsDocument41 pagesBig Data Analytics: Essential Hadoop ToolsVISHNUNo ratings yet

- Ab InitioFAQ2Document14 pagesAb InitioFAQ2Sravya ReddyNo ratings yet

- Ab Initio Interview QuestionsDocument6 pagesAb Initio Interview Questionskamnagarg87No ratings yet

- BDA Assignment I and IIDocument8 pagesBDA Assignment I and IIAshok Mane ManeNo ratings yet

- Hadoop ClusterDocument23 pagesHadoop ClusterAnoushka RaoNo ratings yet

- SME PrepDocument5 pagesSME PrepGuruprasad VijayakumarNo ratings yet

- New 9Document3 pagesNew 9Raj PradeepNo ratings yet

- ACA BigData Consolidated DumpDocument29 pagesACA BigData Consolidated DumpAhimed Habib HusenNo ratings yet

- Sem 7 - COMP - BDADocument16 pagesSem 7 - COMP - BDARaja RajgondaNo ratings yet

- An Experimental Approach Towards Big Data For Analyzing Memory Utilization On A Hadoop Cluster Using Hdfs and MapreduceDocument6 pagesAn Experimental Approach Towards Big Data For Analyzing Memory Utilization On A Hadoop Cluster Using Hdfs and MapreducePradip KumarNo ratings yet

- Apache Hive Interview QuestionsDocument6 pagesApache Hive Interview Questionssourashree100% (1)

- Bda Unit 1Document13 pagesBda Unit 1CrazyYT Gaming channelNo ratings yet

- BDA - II Sem - II MidDocument4 pagesBDA - II Sem - II MidPolikanti Goutham100% (1)

- Pre COBOL TestDocument5 pagesPre COBOL TestjsmaniNo ratings yet

- Database Communication Using 3-Tier Architecture PDFDocument40 pagesDatabase Communication Using 3-Tier Architecture PDFThànhTháiNguyễnNo ratings yet

- WarehousingDocument100 pagesWarehousingKarthik SakaraboyinaNo ratings yet

- Practical-1: Aim: Hadoop Configuration and Single Node Cluster Setup and Perform File Management Task inDocument61 pagesPractical-1: Aim: Hadoop Configuration and Single Node Cluster Setup and Perform File Management Task inParthNo ratings yet

- UntitledDocument39 pagesUntitledSai HareenNo ratings yet

- DSBDSAssingment 11Document20 pagesDSBDSAssingment 11403 Chaudhari Sanika SagarNo ratings yet

- Ab Initio MeansDocument19 pagesAb Initio MeansVenkat PvkNo ratings yet

- SampleDocument30 pagesSampleSoya BeanNo ratings yet

- Laz DB DesktopDocument12 pagesLaz DB DesktopBocage Elmano SadinoNo ratings yet

- Week 2 Project - Search Algorithms - CSMMDocument8 pagesWeek 2 Project - Search Algorithms - CSMMRaj kumar manepallyNo ratings yet

- BigData Module 2Document41 pagesBigData Module 2R SANJAY CSNo ratings yet

- PySpark QuestionsDocument5 pagesPySpark QuestionsSai KrishnaNo ratings yet

- Case Studies C++Document5 pagesCase Studies C++Vaibhav ChitranshNo ratings yet

- BDA Experiment 14 PDFDocument77 pagesBDA Experiment 14 PDFNikita IchaleNo ratings yet

- Production Issues: in Beginning Almost Every Time!Document8 pagesProduction Issues: in Beginning Almost Every Time!cortland99No ratings yet

- Bda Lab ManualDocument20 pagesBda Lab ManualRAKSHIT AYACHITNo ratings yet

- Hive2 PDFDocument8 pagesHive2 PDFRavi MistryNo ratings yet

- Date Warehouse, Social Networking Analysis, User Profile,: - Project - , - Solution - , - Business FlowDocument5 pagesDate Warehouse, Social Networking Analysis, User Profile,: - Project - , - Solution - , - Business FlowABHISHEK KUMARNo ratings yet

- Akash Mavle Links To Lot of Scalable Big Data ArchitecturesDocument57 pagesAkash Mavle Links To Lot of Scalable Big Data ArchitecturesakashmavleNo ratings yet

- Apache Hive: Prashant GuptaDocument61 pagesApache Hive: Prashant GuptaNaveen ReddyNo ratings yet

- Compare Hadoop & Spark Criteria Hadoop SparkDocument18 pagesCompare Hadoop & Spark Criteria Hadoop Sparkdasari ramyaNo ratings yet

- Best Hadoop Online TrainingDocument41 pagesBest Hadoop Online TrainingHarika583No ratings yet

- SparkDocument7 pagesSparkchetanruparel07awsNo ratings yet

- BigData Hadoop Online Training by ExpertsDocument41 pagesBigData Hadoop Online Training by ExpertsHarika583No ratings yet

- HOL Hive PDFDocument23 pagesHOL Hive PDFKishore KumarNo ratings yet

- Compiler Construction: BY Ahsan Khan Email: Ahsan@Cuiatd - Edu.PkDocument37 pagesCompiler Construction: BY Ahsan Khan Email: Ahsan@Cuiatd - Edu.Pksardar BityaanNo ratings yet

- Assignment 2 - Data Structure ComparisonDocument5 pagesAssignment 2 - Data Structure ComparisonAnonymous aiVnyoJbNo ratings yet

- Ab Initio Faqs - v03Document8 pagesAb Initio Faqs - v03giridhar_007No ratings yet

- WordCount Program Hadoop Task 2Document7 pagesWordCount Program Hadoop Task 220261A6757 VIJAYAGIRI ANIL KUMARNo ratings yet

- Abinitio QuestionsDocument2 pagesAbinitio Questionstirupatirao pasupulatiNo ratings yet

- HADOOP and PYTHON For BEGINNERS - 2 BOOKS in 1 - Learn Coding Fast! HADOOP and PYTHON Crash Course, A QuickStart Guide, Tutorial Book by Program Examples, in Easy Steps!Document89 pagesHADOOP and PYTHON For BEGINNERS - 2 BOOKS in 1 - Learn Coding Fast! HADOOP and PYTHON Crash Course, A QuickStart Guide, Tutorial Book by Program Examples, in Easy Steps!Antony George SahayarajNo ratings yet

- Guntur Municipal Corporation: ReceiptDocument1 pageGuntur Municipal Corporation: ReceiptSqaure PodNo ratings yet

- 6 Real-World Case Studies: Data Science For BusinessDocument18 pages6 Real-World Case Studies: Data Science For BusinessSqaure PodNo ratings yet

- 1 For Course AWS Machine Learning 1Document9 pages1 For Course AWS Machine Learning 1Sqaure PodNo ratings yet

- Variable Assignment and Strings 4. Tuples: PrintDocument2 pagesVariable Assignment and Strings 4. Tuples: PrintSqaure PodNo ratings yet

- SRM GuideDocument8 pagesSRM GuideROHIT CHUGHNo ratings yet

- Exchange 2013 High Availability and Site ResilienceDocument4 pagesExchange 2013 High Availability and Site ResilienceKhodor AkoumNo ratings yet

- DLL Ict 10 Week 3RD Quarter Feb 18-22, 2019Document3 pagesDLL Ict 10 Week 3RD Quarter Feb 18-22, 2019Bernadeth Irma Sawal Caballa67% (3)

- Barangay Accounting System of Barangay HalayhayinDocument2 pagesBarangay Accounting System of Barangay Halayhayinramilfleco100% (2)

- NXT Shop DocumentationDocument79 pagesNXT Shop DocumentationhgdhjqdgqwjkNo ratings yet

- Dorks CamerasDocument9 pagesDorks Camerasydrthr0% (1)

- En TS 8.1.1 TSCSTA BookDocument260 pagesEn TS 8.1.1 TSCSTA BookSandor VargaNo ratings yet

- More Than 100 Keyboard Shortcuts Must ReadDocument4 pagesMore Than 100 Keyboard Shortcuts Must ReadJoe A. CagasNo ratings yet

- Computer All Shortcuts 2Document3 pagesComputer All Shortcuts 2Keerthana MNo ratings yet

- Activity Using Paint: LessonDocument15 pagesActivity Using Paint: LessonJOVITA SARAOSNo ratings yet

- Loaders: Loader Is A Program Which Accepts Object Program, Prepares These Program For ExecutionDocument21 pagesLoaders: Loader Is A Program Which Accepts Object Program, Prepares These Program For Executionneetu kalra100% (1)

- Prerequisites For Oracle FLEXCUBE Installer Oracle FLEXCUBE Universal Banking Release 12.2.0.0.0 (May) (2016)Document11 pagesPrerequisites For Oracle FLEXCUBE Installer Oracle FLEXCUBE Universal Banking Release 12.2.0.0.0 (May) (2016)MulualemNo ratings yet

- Coding VBADocument16 pagesCoding VBAPurna Cliquer'sNo ratings yet

- OS X Lion Artifacts v1.0Document39 pagesOS X Lion Artifacts v1.0opexxxNo ratings yet

- Configuring Lifebeat Monitoring For An OS ClientDocument12 pagesConfiguring Lifebeat Monitoring For An OS Clientanon-957947100% (4)

- Chart-Graph in ExcelDocument11 pagesChart-Graph in ExcelAkhilesh YadavNo ratings yet

- BR100 TemplateDocument6 pagesBR100 Templatejoeb00gieNo ratings yet

- Simple Excel Sheet To Mysql Conversion Using JavaDocument6 pagesSimple Excel Sheet To Mysql Conversion Using Javaravibecks2300No ratings yet

- Using Impact To Integrate The Active Event List and Charting Portlets in TheTivoliIntegratedPortalDocument14 pagesUsing Impact To Integrate The Active Event List and Charting Portlets in TheTivoliIntegratedPortaltiti2006No ratings yet

- Assignment No 11 Study of Mongodb Command - 181021004Document20 pagesAssignment No 11 Study of Mongodb Command - 181021004Shivpriya AmaleNo ratings yet

- BMA - ENP How To Get E-NP DataDocument4 pagesBMA - ENP How To Get E-NP DataCosminNo ratings yet

- Sybase - ASE - PerformanceDocument12 pagesSybase - ASE - PerformanceGilberto Dias Soares Jr.No ratings yet

- Detailed Lesson Plan in Technology Productivity Software ApplicationsDocument14 pagesDetailed Lesson Plan in Technology Productivity Software ApplicationsNyca Pacis100% (1)

- Imagemodeler Userguide 31-40Document10 pagesImagemodeler Userguide 31-40Jose L. B.S.No ratings yet

- (Document 2) GCP - Exam Registration Steps - v1Document12 pages(Document 2) GCP - Exam Registration Steps - v1Joydip MukhopadhyayNo ratings yet

- Certification Objectives: Q&A Self-TestDocument32 pagesCertification Objectives: Q&A Self-Testdongsongquengoai4829No ratings yet

- FLOW 3D v12 0 Install InstructionsDocument31 pagesFLOW 3D v12 0 Install InstructionsYayang SaputraNo ratings yet

- Introducting Perforce - HelixDocument30 pagesIntroducting Perforce - Helixpankaj@23No ratings yet

- 2.6.1.3 Packet Tracer - Configure Cisco Routers For Syslog, NTP, and SSH OperationsDocument7 pages2.6.1.3 Packet Tracer - Configure Cisco Routers For Syslog, NTP, and SSH Operationsاحمد الجناحيNo ratings yet

- XX Plane Com Manuals DesktopDocument337 pagesXX Plane Com Manuals DesktopPapp AttilaNo ratings yet