
The Academy for Teaching and Learning Excellence (ATLE)

“Office hours for faculty.”

(813) 974-1841 | atle.usf.edu | atle@usf.edu

Multiple Choice Questions:

Best Practices

Introduction to Multiple Choice Questions

Advantages

1. Easy and quick to grade

2. Reduce some of the burden of large classes

3. Can assess all levels of Bloom’s Taxonomy – knowledge, understanding, application, analysis, synthesis, and evaluation

4. Useful as a diagnostic tool because wrong choices can indicate weaknesses and misconceptions

5. Familiar to students

Disadvantages

6. Difficult and time-consuming to construct quality questions

7. Can be ambiguous to students

8. Encourage students to find the correct answer by process of elimination

9. Limited creativity

10. Good test takers can guess

11. Not applicable for all course objectives

Anatomy of a Multiple Choice Question

12. Stem = the sentence-like portion of the question

13. Alternatives = all the possible choices in the question

14. Distractors = the wrong answers

Purpose of Guidelines

15. Use of the guidelines will help you write quality questions

16. Quality questions…

• foil good test takers

• don’t hinder poor test takers

• discriminate between those who know the material and those who don’t

Developing Multiple Choice Questions

Guidelines

17. Stem Specific Guidelines

• The stem should be clear, brief (but comprehensive), and easy to read.

• Do not include irrelevant information in the stem.

• The stem should pose a problem.

• Include repeated or redundant phrases in the stem instead of the alternatives.

• State the stem in positive form, avoiding words like except, but, and not.

• Write the stem as a complete sentence.

USF 2013 | Sommer Mitchell | atle@usf.edu


18. Alternative Specific Guidelines

• Make sure the grammar and syntax of the stem and the alternatives are consistent.

• Your alternatives should be worded in a similar way, avoiding clues and key words.

• Whether a question asks for the correct alternative or the best response, there should be only one clear answer. If you are looking for the best response, you should explain that clearly in the directions.

• All alternatives should be plausible.

• Keep alternatives parallel in form and length so that the answer does not stand out from the distractors.

• Avoid the use of specific determiners like always, never, and only in the alternatives.

• Alternatives “all of the above” and “none of the above” should be avoided because they reduce the effectiveness of a question.

• The alternatives should be presented in a logical order, such as alphabetical or numerical.

19. Distractor Specific Guidelines

• Use as many functional distractors as possible – functional distractors are those chosen by students who have not achieved the objective or concept.

• Use plausible distractors.

• Use familiar, yet incorrect phrases as distractors.

• Avoid the use of humor when developing distractors.

20. General Guidelines

• You should avoid using textbook, verbatim phrasing.

• The position of each correct answer in the list of alternatives should be as random as possible. If possible, have each position be the correct answer an equal number of times.

• The layout of the multiple choice questions should be clear and consistent.

• Use proper grammar, spelling, and punctuation.

• Avoid using unnecessarily difficult vocabulary.

• Analyze the effectiveness of each question after each administration of a test. A well-constructed test contains only a few questions that more than 90 percent or less than 30 percent of students answer correctly. Difficult items are those that about 50-75 percent of students answer correctly, and moderately difficult questions fall between 70-85 percent (Davis 1993, 269).

• You should expect each question to take approximately 1 minute to answer.

• Have a colleague review your test before you give it to students.

• In order to produce a well-constructed test, you should begin writing questions well in advance, rather than the day before the test.

• Directions should be stated clearly at the beginning of each section of the test.
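
The item analysis and answer-position guidelines above can be sketched in a few lines of Python. This is only an illustrative sketch, not a method taken from the handout or from Davis: the function names (difficulty_index, flag_item, balanced_key) are hypothetical, responses are assumed to be recorded as one letter per student, and the only values taken from the text are the 90 percent and 30 percent thresholds.

```python
import random
from collections import Counter


def difficulty_index(responses, key):
    """Return the fraction of students (0.0 to 1.0) who chose the keyed answer."""
    if not responses:
        raise ValueError("responses must not be empty")
    return sum(1 for r in responses if r == key) / len(responses)


def flag_item(p):
    """Label an item using the thresholds quoted in the guideline above.

    Assumption: items answered correctly by more than 90 percent or fewer
    than 30 percent of students are the ones worth reviewing.
    """
    if p > 0.90:
        return "too easy"
    if p < 0.30:
        return "too hard"
    return "ok"


def balanced_key(num_questions, positions="ABCD", seed=None):
    """Build an answer key where each position is correct a near-equal
    number of times, then shuffle the order so no pattern is visible."""
    rng = random.Random(seed)
    key = [positions[i % len(positions)] for i in range(num_questions)]
    rng.shuffle(key)
    return key


if __name__ == "__main__":
    # Hypothetical responses from ten students to one item keyed "B".
    responses = list("BBABBCBBDB")
    p = difficulty_index(responses, "B")
    print(f"difficulty index: {p:.2f} ({flag_item(p)})")

    # Hypothetical 20-question test with four alternatives per question.
    key = balanced_key(20, seed=2013)
    print("answer key:", "".join(key))
    print("position counts:", dict(Counter(key)))
```

Flagged items are candidates for review, not automatic deletions; an item nearly everyone answers correctly may still be worth keeping if it covers an essential objective.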

21. Resources

• Davis, B. Tools for Teaching, San Francisco: Jossey-Bass Publishers, 1993.


• The Virginia Commonwealth University Center for Teaching Excellence has several resources on their website found at: http://www.vcu.edu/cte/resources/nfrg/12_03_writing_MCQs.htm.

• Burton, S., Merrill, P., Sudweeks, R., Wood, B. How to Prepare Better Multiple Choice Test Items: Guidelines for University Faculty, Provo: Brigham Young University Testing Services and The Department of Instructional Science, 1991. A PDF can be found at: http://testing.byu.edu/info/handbooks/betteritems.pdf.

• Vanderbilt University Center for Teaching, “Writing Good Multiple Choice Test Questions,” found at: http://cft.vanderbilt.edu/teaching-guides/assessment/writing-good-multiple-choice-test-questions/.

Critical Thinking & Multiple Choice Questions

Though writing multiple choice questions that require higher-order thinking is difficult, it is possible when using Bloom’s taxonomy.

22. Knowledge – questions written at this level ask students to remember factual knowledge. The outcome is for students to identify the meaning of a term or the order of events. Example questions are:

• What is…?

• Who were the main…? Can you list three?

• Which one…?

• Why did…?

• Can you select…?

23. Understanding (Comprehension) – questions written at this level require students to do more than memorize information to answer correctly. This level asks for basic knowledge and its use in context. The outcome is for students to identify an example of a term, concept, or principle, or to interpret the meaning of an idea. Example questions are:

• Which of the following is an example of…?

• What is the main idea of…?

• How can you summarize…?

• If A happens, then ______ happens.

• Which is the best answer?

24. Application – questions written at this level require students to recognize a problem or discrepancy. The outcome is for students to distinguish between two items or identify how concepts and principles are related. Example questions are:

• What can result if…?

• How can you organize ___ to show ___?

• How can you use…?

• What is the best approach to…?


25. Analysis – questions written at this level require students to break down material into its component parts, including identifying the parts, the relationships between them, and the principles involved. The outcome is for students to recognize unstated assumptions or determine whether a word fits with an accepted definition or principle. Example questions are:

• How is ___ related to ___?

• What conclusions can be drawn from…?

• What is the distinction between ___ and ___?

• What ideas justify…?

• What is the function of…?

26. Synthesis – questions written at this level require students to create new connections, generalizations, patterns, or perspectives. The outcome is for students to originate, integrate, or combine ideas into a product, plan, or proposal. Example questions are:

• What can be done to minimize/maximize…?

• How can you test…?

• How can you adapt ___ to create a different…?

• Which of the following can you combine to improve/change…?

27. Evaluation – questions written at this level require students to appraise, assess, or critique on the basis of specific standards. The outcome is for students to judge the value of material for a given purpose. Example questions are:

• How can you prove/disprove…?

• What data was used to make the conclusion…?

• Which of the following support the view…?

• How can you assess the value or importance of…?

• Which of the following can you cite to defend the actions of…?

28. Resources

• The University of Arizona has a handout with example multiple choice questions at each level found at: http://omse.medicine.arizona.edu/sites/omse.medicine.arizona.edu/files/images/Blooms.pdf.

• University of Texas Instructional Assessment Resources has example questions at: http://omse.medicine.arizona.edu/sites/omse.medicine.arizona.edu/files/images/Blooms.pdf

USF 2013 | Sommer Mitchell |atle@usf.edu 4

You might also like

- Vedic Maths - India's Approach To Calculating!Document4 pagesVedic Maths - India's Approach To Calculating!padmanaban_cse100% (2)

- Teacher Made TestDocument52 pagesTeacher Made TestJason Jullado67% (3)

- 50 Ways to Be a Better Teacher: Professional Development TechniquesFrom Everand50 Ways to Be a Better Teacher: Professional Development TechniquesRating: 4 out of 5 stars4/5 (9)

- Lesson Plan SETS 2 PDFDocument10 pagesLesson Plan SETS 2 PDFHelmi Tarmizi83% (6)

- Validity and ReliabilityDocument39 pagesValidity and ReliabilityE-dlord M-alabanan100% (1)

- Cosmos Carl SaganDocument18 pagesCosmos Carl SaganRabia AbdullahNo ratings yet

- Facilitating Learning Issues On Learner-Centered TeachingDocument23 pagesFacilitating Learning Issues On Learner-Centered TeachingDexter Jimenez Resullar88% (8)

- Educ 6 Development of Assessment ToolsDocument26 pagesEduc 6 Development of Assessment ToolsRizel Durango-Barrios60% (5)

- Objective Test Types 50 SlidesDocument50 pagesObjective Test Types 50 Slidesr. balakrishnanNo ratings yet

- Planning and Construction of Objective TestsDocument3 pagesPlanning and Construction of Objective TestsGina Oblima60% (10)

- 14 Rules For Writing MultipleDocument18 pages14 Rules For Writing MultipleAngelica100% (1)

- Test Construction PresentationDocument65 pagesTest Construction PresentationRita Rutsate Njonjo100% (1)

- Effective TeachingDocument32 pagesEffective TeachingPeter GeorgeNo ratings yet

- Different Formats of Classroom Assessment ToolsDocument6 pagesDifferent Formats of Classroom Assessment ToolsAya MaduraNo ratings yet

- Lesson 5 - Construction of Written TestDocument33 pagesLesson 5 - Construction of Written TestAndrea Lastaman100% (8)

- Pearson Knowledge Management An Integrated Approach 2nd Edition 0273726854Document377 pagesPearson Knowledge Management An Integrated Approach 2nd Edition 0273726854karel de klerkNo ratings yet

- Linking Classroom Assessment With Student LearningDocument18 pagesLinking Classroom Assessment With Student LearningArturo GallardoNo ratings yet

- SABS Standards and Their Relevance to Conveyor SpecificationsDocument17 pagesSABS Standards and Their Relevance to Conveyor SpecificationsRobert Nicodemus Pelupessy0% (1)

- Laser PsicosegundoDocument14 pagesLaser PsicosegundoCristiane RalloNo ratings yet

- Uop Teuop-Tech-And-More-Air-Separation-Adsorbents-Articlech and More Air Separation Adsorbents ArticleDocument8 pagesUop Teuop-Tech-And-More-Air-Separation-Adsorbents-Articlech and More Air Separation Adsorbents ArticleRoo FaNo ratings yet

- Test Strategies - Test Construction (Olivares)Document53 pagesTest Strategies - Test Construction (Olivares)Vinia A. Villanueva100% (1)

- Educ.222 - Information Technology in Education (TOPIC-CONSTRUCTING TEST) Prepared by Rothy Star Moon S. CasimeroDocument4 pagesEduc.222 - Information Technology in Education (TOPIC-CONSTRUCTING TEST) Prepared by Rothy Star Moon S. CasimeroPEARL JOY CASIMERONo ratings yet

- Test Construction SDO QC AP HUMSS 2023Document45 pagesTest Construction SDO QC AP HUMSS 2023Mc Kenneth BaluyotNo ratings yet

- Assess NotesDocument25 pagesAssess NotesJessie DesabilleNo ratings yet

- EDUP3063 Assessment in Education: Topic 6 Designing, Constructing and Administrating TestDocument43 pagesEDUP3063 Assessment in Education: Topic 6 Designing, Constructing and Administrating TestshalynNo ratings yet

- Construct Test ItemDocument39 pagesConstruct Test ItemKhalif Haqim 's AdriannaNo ratings yet

- Guidelines in Writing Test Items PDFDocument23 pagesGuidelines in Writing Test Items PDFMarielleAnneAguilarNo ratings yet

- Designing Multiple Choice Questions: Getting StartedDocument3 pagesDesigning Multiple Choice Questions: Getting StartedAhmed BassemNo ratings yet

- Final GaniDocument59 pagesFinal GaniCristine joy OligoNo ratings yet

- Developing Effective Multiple-Choice TestsDocument2 pagesDeveloping Effective Multiple-Choice TestsArif YuniarNo ratings yet

- Unit No. 4 Types of TestsDocument24 pagesUnit No. 4 Types of TestsilyasNo ratings yet

- Chapter 14 HandoutsDocument10 pagesChapter 14 HandoutsNecesario BanaagNo ratings yet

- You Have Questions They Have AnswersDocument1 pageYou Have Questions They Have AnswersSusilo WatiNo ratings yet

- Nadela Activity ADocument4 pagesNadela Activity ADorothy Joy NadelaNo ratings yet

- Al1 Chapt 2 L5 HandoutDocument5 pagesAl1 Chapt 2 L5 Handoutcraasha12No ratings yet

- Constructing Tests and Tables of SpecificationDocument9 pagesConstructing Tests and Tables of SpecificationkolNo ratings yet

- Constructing Traditional Forms of AssessmentDocument40 pagesConstructing Traditional Forms of Assessmentlizyl5ruoisze5dalidaNo ratings yet

- Test Construction GuideDocument103 pagesTest Construction Guidesharmaine casanovaNo ratings yet

- Test AssessingDocument43 pagesTest AssessingAsylzhan MusabekNo ratings yet

- Constructing TestsDocument18 pagesConstructing TestsElma AisyahNo ratings yet

- Marikina Polytechnic College Graduate Curriculum Development Table of Specification GuideDocument10 pagesMarikina Polytechnic College Graduate Curriculum Development Table of Specification GuideMaestro MotovlogNo ratings yet

- Question Types:: Dichotomous Question Close-Ended QuestionDocument21 pagesQuestion Types:: Dichotomous Question Close-Ended QuestionamyNo ratings yet

- Multiple ChoiceDocument31 pagesMultiple ChoiceMARY ROSE RECIBENo ratings yet

- HowtoassesstDocument6 pagesHowtoassesstJaime CNo ratings yet

- Lesson 5 G5 - 082520Document27 pagesLesson 5 G5 - 082520alharemahamodNo ratings yet

- Role of Teacher in Classroom ManagementDocument28 pagesRole of Teacher in Classroom ManagementNfmy KyraNo ratings yet

- Questioning StylesDocument24 pagesQuestioning Stylescherryl mae pajulas-banlatNo ratings yet

- Chapter 3 Profed 201Document7 pagesChapter 3 Profed 201Floyd LawtonNo ratings yet

- Week 15 (Cont'd) : Assessing Student LearningDocument22 pagesWeek 15 (Cont'd) : Assessing Student LearningSarah FosterNo ratings yet

- Ielts Reading Test 1 - Teacher'S Notes Written by Sam MccarterDocument6 pagesIelts Reading Test 1 - Teacher'S Notes Written by Sam MccarternguyentrongnghiaNo ratings yet

- Types Paper Pencil TestDocument18 pagesTypes Paper Pencil TestclamaresjaninaNo ratings yet

- PSC 352 Handout Planning A TestDocument14 pagesPSC 352 Handout Planning A TestWalt MensNo ratings yet

- Evaluation and Assessment: Group 6Document23 pagesEvaluation and Assessment: Group 6Paulene EncinaresNo ratings yet

- Advantages and Disadvantages of Different Types of Test QuestionsDocument5 pagesAdvantages and Disadvantages of Different Types of Test QuestionsAnandhi BalajiNo ratings yet

- Traditional AssessmentDocument29 pagesTraditional AssessmentKrystelle Joy ZipaganNo ratings yet

- Group A Assessment Learning Group ActivityDocument4 pagesGroup A Assessment Learning Group ActivityAndrew RichNo ratings yet

- Group 2 Completion TestDocument7 pagesGroup 2 Completion TestRhod Uriel FabularNo ratings yet

- AL1 Week 6Document8 pagesAL1 Week 6Reynante Louis DedaceNo ratings yet

- Are You The Kind of Teacher Who Ask The Following Questions?Document61 pagesAre You The Kind of Teacher Who Ask The Following Questions?Winter BacalsoNo ratings yet

- Ta HB Ch4 Teaching StrategyDocument19 pagesTa HB Ch4 Teaching StrategyvicenteferrerNo ratings yet

- Types of Test Items SummaryDocument4 pagesTypes of Test Items SummaryvasundharaNo ratings yet

- Item Writing Guidelines SummaryDocument1 pageItem Writing Guidelines SummarynamavayaNo ratings yet

- Ss20-Banta-Ao-Activity 4Document8 pagesSs20-Banta-Ao-Activity 4Lenssie Banta-aoNo ratings yet

- Classroom Assessment TechniquesDocument47 pagesClassroom Assessment Techniquessara wilsonNo ratings yet

- DCT 2 Test Format - 13 July 2015Document37 pagesDCT 2 Test Format - 13 July 2015azizafkar100% (1)

- How to Practice Before Exams: A Comprehensive Guide to Mastering Study Techniques, Time Management, and Stress Relief for Exam SuccessFrom EverandHow to Practice Before Exams: A Comprehensive Guide to Mastering Study Techniques, Time Management, and Stress Relief for Exam SuccessNo ratings yet

- CST Students with Disabilities: New York State Teacher CertificationFrom EverandCST Students with Disabilities: New York State Teacher CertificationNo ratings yet

- Active Passive Reading and Language Activity G6Document2 pagesActive Passive Reading and Language Activity G6Czarlin AdrianoNo ratings yet

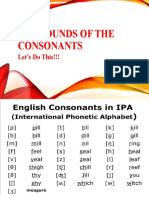

- The Sounds of The Consonants (SPEECH 5)Document2 pagesThe Sounds of The Consonants (SPEECH 5)Czarlin AdrianoNo ratings yet

- IPA and The English Word Pronunciation Gr. 4Document9 pagesIPA and The English Word Pronunciation Gr. 4Czarlin AdrianoNo ratings yet

- Intonation and Word Stress (SPEECH 7)Document9 pagesIntonation and Word Stress (SPEECH 7)Czarlin AdrianoNo ratings yet

- Figurative Language: Read It, Write It, Tell It Episode: "Johnny Appleseed"Document8 pagesFigurative Language: Read It, Write It, Tell It Episode: "Johnny Appleseed"Czarlin AdrianoNo ratings yet

- 511 Sentence Completion Test Exercises Multiple Choice Questions With Answers Advanced Level 12Document6 pages511 Sentence Completion Test Exercises Multiple Choice Questions With Answers Advanced Level 12Czarlin AdrianoNo ratings yet

- Sentence and Non Sentence Worksheet 1Document2 pagesSentence and Non Sentence Worksheet 1Czarlin AdrianoNo ratings yet

- Sentence and FragmentDocument2 pagesSentence and FragmentCzarlin AdrianoNo ratings yet

- Subject-Verb AgreementDocument11 pagesSubject-Verb AgreementCzarlin AdrianoNo ratings yet

- Mind Your Tone: A Lesson On IntonationDocument3 pagesMind Your Tone: A Lesson On IntonationCzarlin AdrianoNo ratings yet

- CPC-COM-SU-4743-G External CoatingsDocument24 pagesCPC-COM-SU-4743-G External Coatingsaslam.amb100% (1)

- Six Sigma Statistical Methods Using Minitab 13 Manual4754Document95 pagesSix Sigma Statistical Methods Using Minitab 13 Manual4754vinaytoshchoudharyNo ratings yet

- IAS Mains Electrical Engineering 1994Document10 pagesIAS Mains Electrical Engineering 1994rameshaarya99No ratings yet

- I/O Buffer Megafunction (ALTIOBUF) User GuideDocument54 pagesI/O Buffer Megafunction (ALTIOBUF) User GuideSergeyNo ratings yet

- Accident Avoiding Bumper SystemDocument3 pagesAccident Avoiding Bumper SystemDeepak DaineNo ratings yet

- CSR of DABUR Company..Document7 pagesCSR of DABUR Company..Rupesh kumar mishraNo ratings yet

- Electroencephalography (EEG) : Dr. Altaf Qadir KhanDocument65 pagesElectroencephalography (EEG) : Dr. Altaf Qadir KhanAalia RanaNo ratings yet

- COT English 3rd PrepositionDocument14 pagesCOT English 3rd PrepositionGanie Mae Talde Casuncad100% (1)

- PD083 05Document1 pagePD083 05Christian Linares AbreuNo ratings yet

- Filipinism 3Document3 pagesFilipinism 3Shahani Cel MananayNo ratings yet

- Toyota Camry ANCAP PDFDocument2 pagesToyota Camry ANCAP PDFcarbasemyNo ratings yet

- Find Out Real Root of Equation 3x-Cosx-1 0 by Newton's Raphson Method. 2. Solve Upto Four Decimal Places by Newton Raphson. 3Document3 pagesFind Out Real Root of Equation 3x-Cosx-1 0 by Newton's Raphson Method. 2. Solve Upto Four Decimal Places by Newton Raphson. 3Gopal AggarwalNo ratings yet

- Executive CommitteeDocument7 pagesExecutive CommitteeMansur ShaikhNo ratings yet

- Quadratic SDocument22 pagesQuadratic SShawn ShibuNo ratings yet

- Final Portfolio Cover LetterDocument2 pagesFinal Portfolio Cover Letterapi-321017157No ratings yet

- PDFDocument42 pagesPDFDanh MolivNo ratings yet

- RallinAneil 2019 CHAPTER2TamingQueer DreadsAndOpenMouthsLiDocument10 pagesRallinAneil 2019 CHAPTER2TamingQueer DreadsAndOpenMouthsLiyulianseguraNo ratings yet

- Ac and DC MeasurementsDocument29 pagesAc and DC MeasurementsRudra ChauhanNo ratings yet

- BrochureDocument2 pagesBrochureNarayanaNo ratings yet

- Iso 19108Document56 pagesIso 19108AzzahraNo ratings yet

- jrc122457 Dts Survey Deliverable Ver. 5.0-3Document46 pagesjrc122457 Dts Survey Deliverable Ver. 5.0-3Boris Van CyrulnikNo ratings yet

- How to Critique a Work in 40 StepsDocument16 pagesHow to Critique a Work in 40 StepsGavrie TalabocNo ratings yet

- Ett 531 Motion Visual AnalysisDocument4 pagesEtt 531 Motion Visual Analysisapi-266466498No ratings yet