MIC2005: The 6th Metaheuristics International Conference

Variable Neighborhood Search for the Probabilistic Satisfiability Problem

Dejan Jovanović (1), Nenad Mladenović (2), Zoran Ognjanović (1)

(1) Mathematical Institute, Kneza Mihaila 35, 11000 Belgrade, Serbia and Montenegro, {dejan,zorano}@mi.sanu.ac.yu

(2) School of Mathematics, University of Birmingham, Edgbaston, Birmingham, United Kingdom, N.Mladenovic@bham.ac.uk

1 Introduction

In the field of artificial intelligence, researchers have studied uncertain reasoning using different tools. Some of the formalisms for representing and reasoning with uncertain knowledge are based on probabilistic logic [1, 9]. This logic extends the classical propositional language with expressions that speak about probability, while formulas remain true or false. The satisfiability problem for a probabilistic formula (PSAT) is NP-complete [1]. Although PSAT can be reduced to a linear programming problem, solving it with a standard linear system solver is impractical, because the number of variables in the linear system obtained by the reduction grows exponentially. Nevertheless, more efficient numerical methods can still be used, e.g., the column generation procedure of linear programming. In a more recent article [8] we presented a genetic algorithm (GA) for PSAT. In this paper we suggest a Variable Neighborhood Search (VNS) based heuristic for solving PSAT.

2 Probabilistic Logic and PSAT

In this section we introduce the PSAT problem formally (for a more detailed description please see [9]). Let $L = \{x, y, z, \ldots\}$ be the set of propositional letters. A weight term is an expression of the form $a_1 w(\alpha_1) + \ldots + a_n w(\alpha_n)$, where the $a_i$ are rational numbers and the $\alpha_i$ are classical propositional formulas with propositional letters from $L$. The intended meaning of $w(\alpha)$ is the probability of $\alpha$ being true. A basic weight formula has the form $t \ge b$, where $t$ is a weight term and $b$ is a rational number. The formula $(t < b)$ denotes $\neg(t \ge b)$. A weight literal is an expression of the form $t \ge b$ or $t < b$. The set of all weight formulas is the minimal set that contains all basic weight formulas and is closed under Boolean operations.

Repeat the following steps until the stopping condition is met:
1. Set $k \leftarrow 1$;
2. Until $k = k_{\max}$, repeat the following steps:
   (a) Shaking. Generate a point $x'$ at random from the $k$th neighborhood of $x$ ($x' \in N_k(x)$);
   (b) Local Search. Apply some local search method with $x'$ as the initial solution; denote by $x''$ the local optimum so obtained;
   (c) Move or not. If this optimum is better than the incumbent, move there ($x \leftarrow x''$) and continue the search with $N_1$ ($k \leftarrow 1$); otherwise, set $k \leftarrow k + 1$;

Figure 1: Main steps of the basic VNS metaheuristic

Let $\alpha$ be a classical propositional formula and $\{x_1, x_2, \ldots, x_k\}$ be the set of all propositional letters that appear in $\alpha$. An atom of $\alpha$ is defined as a formula $at = \pm x_1 \wedge \ldots \wedge \pm x_k$, where $\pm x_i \in \{x_i, \neg x_i\}$. Let $At(\alpha)$ denote the set $\{at_1, \ldots, at_{2^k}\}$ of all atoms of $\alpha$. Every classical propositional formula $\alpha$ is equivalent to a formula $CDNF(\alpha) = \bigvee_{j=1}^{m} at_{i_j}$, called the complete disjunctive normal form of $\alpha$. We use $at \in CDNF(\alpha)$ to denote that the atom $at$ appears in $CDNF(\alpha)$. A formula $f$ is in the weight conjunctive form (WCF) if it is a conjunction of weight literals. Every weight formula $f$ can be transformed to a disjunctive normal form

$$DNF(f) = \bigvee_{i=1}^{m} \bigwedge_{j=1}^{k_i} \left( a^{i,j}_1 w(\alpha^{i,j}_1) + \ldots + a^{i,j}_{n_{i,j}} w(\alpha^{i,j}_{n_{i,j}}) \;\rho_{i,j}\; b_{i,j} \right),$$

where the disjuncts are formulas in WCF and each $\rho_{i,j}$ can be either $<$ or $\ge$. Since a disjunction is satisfiable iff at least one disjunct is satisfiable, we will consider only WCF formulas. Formally, PSAT is the following problem: given a WCF formula $f$, is there any probability function $\mu$ defined on $At(f)$ such that $f$ is satisfiable? Note that a WCF formula $f$ is satisfied by $\mu$ iff for every weight literal $a^i_1 w(\alpha^i_1) + \ldots + a^i_{n_i} w(\alpha^i_{n_i}) \;\rho_i\; b_i$ in $f$

$$a^i_1 \sum_{at \in CDNF(\alpha^i_1)} \mu(at) + \ldots + a^i_{n_i} \sum_{at \in CDNF(\alpha^i_{n_i})} \mu(at) \;\rho_i\; b_i, \qquad (1)$$

while the probabilities $\mu(at)$ assigned to atoms are nonnegative and sum up to 1. This system contains a row for each weight literal of $f$, and columns that correspond to atoms $at \in At(f)$ that belong to $CDNF(\alpha)$ for at least one propositional formula $\alpha$ from $f$.
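To make the meaning of system (1) concrete, the following minimal Python sketch evaluates a single weight literal under an explicitly given probability function on atoms. The representation is an assumption made for illustration only: atoms are tuples of truth values, propositional formulas are Python callables, and the names `atoms` and `literal_holds` are hypothetical and do not come from the paper.

```python
from itertools import product

def atoms(k):
    """All 2**k atoms over k letters, each a tuple of truth values."""
    return list(product([False, True], repeat=k))

def literal_holds(coeffs, formulas, rel, b, mu):
    """Evaluate one weight literal  sum_i a_i * w(alpha_i)  rel  b  under mu.

    mu maps each atom to its probability. w(alpha) is the total probability
    of the atoms on which alpha is true, i.e. the atoms in CDNF(alpha).
    """
    lhs = sum(
        a * sum(prob for at, prob in mu.items() if alpha(at))
        for a, alpha in zip(coeffs, formulas)
    )
    return lhs >= b if rel == ">=" else lhs < b

# Two letters x, y with the uniform distribution over the four atoms.
mu = {at: 0.25 for at in atoms(2)}
x_or_y = lambda at: at[0] or at[1]
x_and_y = lambda at: at[0] and at[1]

# w(x or y) = 0.75, so  w(x or y) >= 0.7  holds.
print(literal_holds([1], [x_or_y], ">=", 0.7, mu))
# w(x or y) - 2 w(x and y) = 0.25, so the literal with "< 0.2" fails.
print(literal_holds([1, -2], [x_or_y, x_and_y], "<", 0.2, mu))
```

Enumerating all atoms as done here is only feasible for a handful of letters, which is exactly the exponential growth that motivates a heuristic search over small sets of atoms.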

3 VNS for the PSAT

VNS is a recent metaheuristic for solving combinatorial and global optimization problems (e.g., see [3, 4, 6]). It is a simple yet very effective metaheuristic that has proven robust on a variety of practical NP-hard problems. The basic idea behind VNS is to change neighborhood structures in the search for a better solution. In an initialization phase, a set of $k_{\max}$ (a parameter) neighborhoods $N_i$, $i = 1, \ldots, k_{\max}$, is preselected, a stopping condition is determined, and an initial local solution is found. Then the main loop of the method has the steps described in Figure 1. Therefore, to construct different neighborhood structures and to perform a systematic search, one needs to supply the solution space with some metric (or quasi-metric) and then induce neighborhoods from it. In the next sections, we answer this problem-specific question for the PSAT problem.


The Solution Space. As the PSAT problem reduces to a linear program over probabilities, the number of atoms with nonzero probabilities necessary to guarantee that a solution will be found, if one exists, is equal to $L + 1$. Here $L$ is the number of weight literals in the WCF formula. A solution is therefore an array of $L + 1$ atoms $x = [A_1, A_2, \ldots, A_{L+1}]$, where $A_i$, $i = 1, \ldots, L + 1$, are atoms from $At(f)$, with the assigned probabilities $p = [p_1, p_2, \ldots, p_{L+1}]$. Atoms are represented as bit strings, with the $i$th bit of the string equal to 1 iff the $i$th variable is positive in the atom. A solution (an array of atoms) is the bit string obtained by concatenating the atom bit strings. Observe that if the array $x$ is known, the probabilities of atoms not in $x$ are 0, and system (1) can be rewritten compactly as

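$$\sum_{j=1}^{L+1} c_{ij}\, p_j \;\rho_i\; b_i, \qquad i = 1, \ldots, L. \qquad (2)$$

Here $\rho_i$ is the relational symbol ($<$ or $\ge$) of the $i$th weight literal and $c_{ij} = \sum_k a^i_k$, with the sum going over all $k$ from (1) such that $A_j \in CDNF(\alpha^i_k)$. Solving (2) gives us a vector of probabilities $p$.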
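The construction of the coefficients in system (2) can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: a weight literal is represented as a hypothetical tuple `(coeffs, formulas, rel, b)`, atoms are tuples of 0/1 bits, and formulas are callables that return whether an atom belongs to their CDNF.

```python
def coefficient_matrix(literals, solution):
    """c[i][j] = sum of a^i_k over all k such that atom A_j is in CDNF(alpha^i_k)."""
    return [
        [sum(a for a, alpha in zip(coeffs, formulas) if alpha(atom))
         for atom in solution]
        for coeffs, formulas, _rel, _b in literals
    ]

def rows_satisfied(literals, c, p):
    """For each row i, test whether  sum_j c[i][j] * p[j]  rho_i  b_i  holds."""
    result = []
    for (_coeffs, _formulas, rel, b), row in zip(literals, c):
        lhs = sum(cij * pj for cij, pj in zip(row, p))
        result.append(lhs >= b if rel == ">=" else lhs < b)
    return result

# Letters x (bit 0) and y (bit 1); two weight literals, so L = 2.
x = lambda at: at[0] == 1
y = lambda at: at[1] == 1
x_and_y = lambda at: at[0] == 1 and at[1] == 1
literals = [
    ([1, 1], [x, y], ">=", 1.0),   # w(x) + w(y) >= 1
    ([1], [x_and_y], "<", 0.5),    # w(x and y) < 0.5
]

solution = [(1, 1), (1, 0), (0, 1)]   # L + 1 = 3 atoms
p = [0.25, 0.5, 0.25]                 # their probabilities

c = coefficient_matrix(literals, solution)
print(c)                               # one row per literal, one column per atom
print(rows_satisfied(literals, c, p))
```

In this toy instance both rows hold, so the three atoms with these probabilities witness satisfiability of the two-literal formula.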

Initial Solution. The initial solution is obtained as follows. First, $10 \times (L + 1)$ atoms are randomly generated, and then the $L + 1$ atoms with the best grades are selected from them to form the initial solution. The grade of an atom is computed as the sum of the atom's contributions to the satisfiability of the conjuncts in the WCF formula. An atom corresponds to a column of the linear system (2). If a coefficient in the column is positive and located in a row with $\ge$ as the relational symbol, it can be used to push toward the satisfiability of the row. In such a case we add its value to the grade. In the $<$ case a positive coefficient works against satisfying the row, so we subtract it from the grade. Similar reasoning is applied when the coefficient is negative. After the initial atoms are selected as described, they are all assigned equal probabilities.
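The grading rule can be illustrated with a short Python sketch. It assumes one particular reading of "similar reasoning is applied when the coefficient is negative", namely that a coefficient $c$ contributes $+c$ in a $\ge$ row and $-c$ in a $<$ row regardless of its sign. The names `atom_grade`, `initial_solution`, and the callback `columns_of` are hypothetical, and duplicate random atoms are not filtered out because the text does not say how they are handled.

```python
import random

def atom_grade(column, relations):
    """Grade of one atom: +c in a '>=' row, -c in a '<' row (c may be negative)."""
    return sum(c if rel == ">=" else -c for c, rel in zip(column, relations))

def initial_solution(columns_of, relations, n_letters, rng):
    """Pick the L + 1 best-graded atoms out of 10 * (L + 1) random ones.

    columns_of(atom) is a hypothetical callback returning the atom's column
    of system (2), one coefficient per weight literal.
    """
    size = len(relations) + 1                      # L + 1 atoms in a solution
    candidates = [tuple(rng.randint(0, 1) for _ in range(n_letters))
                  for _ in range(10 * size)]
    candidates.sort(key=lambda at: atom_grade(columns_of(at), relations),
                    reverse=True)
    chosen = candidates[:size]
    probabilities = [1.0 / size] * size            # equal initial probabilities
    return chosen, probabilities

# Toy instance with two weight literals over letters x, y:
#   w(x) >= 0.6   and   w(y) < 0.3
relations = [">=", "<"]
columns_of = lambda at: [at[0], at[1]]   # coefficient 1 where the letter is true
chosen, p = initial_solution(columns_of, relations, n_letters=2,
                             rng=random.Random(1))
print(chosen, p)
```

Under this reading, an atom that makes x true and y false receives the highest grade in the toy instance, since it helps the $\ge$ row and does not hurt the $<$ row.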

Neighborhood Structures. The neighborhood structures are those induced by the Hamming distance on the solution bit strings. The distance between two solutions is the number of corresponding bits that differ in their bit string representations. With this selection of neighborhood structures, a shake in the $k$th neighborhood of a solution is obtained by inverting $k$ bits in the solution's bit string.
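A shake of this kind is easy to sketch in Python. The example assumes that the $k$ inverted positions are chosen uniformly at random and are pairwise distinct, so that the result lies at Hamming distance exactly $k$ from the input; the function names are illustrative.

```python
import random

def hamming(a, b):
    """Number of positions at which two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))

def shake(bits, k, rng):
    """Return a copy of `bits` with k distinct, randomly chosen bits inverted."""
    flipped = list(bits)
    for pos in rng.sample(range(len(flipped)), k):
        flipped[pos] ^= 1
    return flipped

rng = random.Random(0)
solution = [0] * 12                 # e.g. 3 atoms over 4 letters, concatenated
neighbor = shake(solution, 3, rng)
print(neighbor, hamming(solution, neighbor))
```

Returning a copy rather than modifying the input keeps the incumbent solution intact, which is convenient when the shaken point is later rejected.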

3.1 Finding Probabilities by VND

When we change some atoms, there may be a better probability assignment for the solution, i.e., one that is closer to satisfying the PSAT problem. At this point, we suggest three procedures within the Variable Neighborhood Descent (VND) framework: two fast heuristics and the Nelder-Mead nonlinear programming method.

Nonlinear Optimization Approach. To find a possibly better probability assignment, a nonlinear program is defined with the objective function being the distance of the left side from the right side of the linear system (2). Let $x$ be the current solution; we define an unconstrained objective function $z$ to be

$$z(p) = \sum_{i=1}^{L} d_i(p) + g(p),$$

where $d_i$ is the distance between the left and the corresponding right-hand side of the $i$th row, defined as

$$d_i(p) = \begin{cases} \left( \sum_{j=1}^{L+1} c_{ij} p_j - b_i \right)^2 & \text{if the $i$th row is not satisfied,} \\ 0 & \text{otherwise,} \end{cases}$$

and $g(p)$ is the penalty function

$$g(p) = \lambda_1 \Big( \sum_{p_i < 0} p_i^2 \Big) + \lambda_2 \Big( 1 - \sum_{i=1}^{L+1} p_i \Big)^2$$

with penalty parameters $\lambda_1$ and $\lambda_2$. The penalty function is used to transform the constrained nonlinear problem into an unconstrained one. Since the unconstrained objective function $z(p)$ is not differentiable, a minimization method that does not use derivatives is needed. We applied the downhill simplex method of Nelder and Mead [7]. However, in order to speed up the search, this optimization procedure is used only when the VNS algorithm reaches $k_{\max}$.

Heuristic Approach. Nonlinear optimization has proven too time-demanding, so we resorted to a heuristic approach that is used for the majority of the solution optimizations. The heuristic optimization consists of the following two independent heuristics.

Worst Unsatisfied Projection. The 5 most unsatisfied rows are selected, i.e., the rows with the largest values $d_i(p)$. The equations of these rows define corresponding hyperplanes that border the solution space. In an attempt to reach the solution space, consecutive projections of the probabilities onto these hyperplanes are performed. Using normal projection, the probabilities are changed toward satisfiability of each worst row in the fastest possible manner, i.e., in the direction normal to that hyperplane. This procedure is repeated at most 10 times or until no improvement has been achieved, each time selecting the current 5 worst rows.

Greedy Giveaway. Again, the worst unsatisfied row is selected (the $i$th), but also the most satisfied row is selected (the $j$th). Nonzero coefficients are found in these two rows, but only those that have zero coefficients at the same position in the other row. For example, if $c_{i,k}$ and $c_{j,l}$ are such coefficients, then $p_k$ can be changed so that the most unsatisfied row moves toward satisfiability, and $p_l$ can be changed the opposite way, reducing the satisfiability of the most satisfied row. This procedure is repeated for all suitable pairs of coefficients from the two rows.
These two heuristics together (first one, then the other) yield a great improvement of the objective function value. The improvements obtained are comparable to those obtained by nonlinear optimization, but they are much faster. This is the reason why the nonlinear optimization is used only after the $N_{k_{\max}}$ neighborhood has been unsuccessfully explored.

Local Search. The local search part of the algorithm scans through the first neighborhood of a solution in pursuit of a solution better than the current best. Note that, since the $N_1$ neighborhood is huge, it would be very inefficient to recompute the probabilities at every point. Therefore, throughout the local search, the probabilities are fixed. Solutions are compared only by the value of the objective function $z$. This value is kept for the solutions that are in use. As for the newly generated solutions, the objective value $z$ is computed using the current probability assignment, but since only one bit of one atom is changed, the update takes $O(L)$ time. The VNS implementation used is stated in Algorithm 1.

Algorithm 1: Basic VNS for PSAT

    x ← initialSolution(); improve(x, heuristic)
    while (not done()) do
        k ← 1
        while (k ≤ kmax) do
            x' ← shake(x, k); improve(x', heuristic); x' ← localSearch(x')
            if (x' better than x) then
                x ← x'; improve(x, heuristic); k ← 1
            else
                k ← k + 1
            end if
        end while
        improve(x, nelder-mead)
    end while
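The control flow of Algorithm 1 can be sketched in Python with all problem-specific parts passed in as callables. This is a generic skeleton under stated assumptions, not the PSAT implementation: `improve` and `polish` stand in for the heuristic and Nelder-Mead improvements and return new solutions instead of modifying them in place, `max_rounds` together with a zero-cost check stands in for `done()`, and the demonstration runs on a toy bit-string problem invented for this example.

```python
import random

def vns(x0, cost, shake, local_search, improve, polish, k_max, max_rounds, rng):
    """Basic VNS loop; lower cost is better and cost 0 means 'solved'."""
    x = improve(x0)
    for _ in range(max_rounds):             # stands in for "while not done()"
        if cost(x) == 0:
            break
        k = 1
        while k <= k_max:
            y = local_search(improve(shake(x, k, rng)))
            if cost(y) < cost(x):
                x, k = improve(y), 1        # move and restart from N_1
            else:
                k += 1                      # try a larger neighborhood
        x = polish(x)                       # slow improvement step, once per round
    return x

def make_toy(target):
    """Toy problem: cost is the Hamming distance to a hidden target string."""
    def cost(bits):
        return sum(b != t for b, t in zip(bits, target))

    def shake(bits, k, rng):
        out = list(bits)
        for pos in rng.sample(range(len(out)), k):
            out[pos] ^= 1
        return out

    def local_search(bits):
        best, improved = list(bits), True
        while improved:                     # single-bit-flip descent
            improved = False
            for pos in range(len(best)):
                cand = best[:pos] + [best[pos] ^ 1] + best[pos + 1:]
                if cost(cand) < cost(best):
                    best, improved = cand, True
        return best

    return cost, shake, local_search

target = [1, 0, 1, 1, 0, 0, 1, 0]
cost, shake, local_search = make_toy(target)
identity = lambda x: x
result = vns([0] * 8, cost, shake, local_search, identity, identity,
             k_max=3, max_rounds=5, rng=random.Random(0))
print(result, cost(result))
```

Keeping the improvement procedures as parameters mirrors the structure of Algorithm 1, where the cheap heuristics run inside the inner loop and the expensive optimizer runs only after all $k_{\max}$ neighborhoods have failed.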

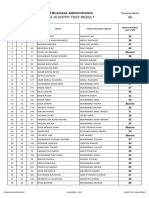

4 Computational Results

For testing purposes, a set of 27 random satisfiable probability WCF formulas was generated. The maximal number of summands in weight terms (S) and the maximal number of disjuncts (D) in the DNFs of propositional formulas were both set to 5. Three problem instances were generated for each of the following (N, L) pairs, where N is the number of propositional letters and L the number of weight literals: (50, 100), (50, 250), (100, 100), (100, 200), (100, 500), (200, 200), (200, 400), (200, 1000). The VNS algorithm was run with $k_{\max}$ set to 30, and we exit if a feasible solution is found or the method does not advance in 10 consecutive iterations. The results are shown in Table 1. It can be seen that the VNS approach outperforms our previous checker based on the GA approach [8] on most problem instances, with both an increase in the solving success rate and a decrease in the execution time. We are not aware of any larger PSAT problem instances reported in the literature. For example, L is up to 500 in [2], and N = 200, L = 800 in [5]. Also, the instances considered in the mentioned papers have a simpler form than the ones used here, with weight terms containing the probability of only one clause with up to 4 literals.

5 Conclusion

In this paper we suggest a VNS-based heuristic for solving the PSAT problem. This approach allows us to solve large problem instances more effectively and more efficiently than the recent GA-based heuristic. There are many directions for further research: (i) improve the efficiency of our VNS-based heuristic by reducing the large neighborhood or by introducing new ones to be used within the local search; (ii) apply our approach to the so-called interval PSAT [2], in which basic weight formulas are of the form $c_1 \le t \le c_2$; (iii) try to conjecture some relation between the parameters and the hardness of a PSAT problem instance (similar to the phase transition phenomenon for SAT). Thus, an exhaustive empirical study should give better insight into this phenomenon.

Table 1: Computational results of the VNS method compared to the previous GA approach.

| N, L, instance | VNS solved (cpu time) | GA solved (cpu time) |
|---|---|---|
| 50, 100, 1 | 26/30 (29.46) | 23/30 (188.07) |
| 50, 100, 2 | 30/30 (0.23) | 27/30 (21.98) |
| 50, 100, 3 | 29/30 (17.72) | 13/30 (194.04) |
| 50, 250, 1 | 30/30 (32.60) | 25/30 (504.58) |
| 50, 250, 2 | 15/30 (14.60) | 25/30 (2515.88) |
| 50, 250, 3 | 30/30 (11.37) | 25/30 (664.7) |
| 100, 100, 1 | 30/30 (0.03) | 30/30 (6.19) |
| 100, 100, 2 | 30/30 (0.13) | 30/30 (13.28) |
| 100, 100, 3 | 30/30 (0.03) | 30/30 (66.85) |
| 100, 200, 1 | 30/30 (4.23) | 27/30 (858.85) |
| 100, 200, 2 | 27/30 (79.07) | 10/30 (1395.62) |
| 100, 200, 3 | 25/30 (77.04) | 10/30 (1235.31) |
| 100, 500, 1 | 30/30 (230.97) | 21/30 (11369.29) |
| 100, 500, 2 | 23/30 (1930.52) | 19/30 (18932.76) |
| 100, 500, 3 | 2/30 (30460.00) | 12/30 (17907.2) |
| 200, 200, 1 | 0/30 (-) | 0/30 (-) |
| 200, 200, 2 | 30/30 (2.30) | 27/30 (253.82) |
| 200, 200, 3 | 30/30 (0.27) | 28/30 (48.36) |
| 200, 400, 1 | 30/30 (9.73) | 28/30 (524.63) |
| 200, 400, 2 | 12/30 (6082.67) | 16/30 (12167.64) |
| 200, 400, 3 | 2/30 (5531.50) | 15/30 (11938.51) |
| 200, 1000, 1 | 30/30 (966.47) | 22/30 (68437.78) |
| 200, 1000, 2 | 0/30 (-) | 0/30 (-) |
| 200, 1000, 3 | 30/30 (1942.93) | 21/30 (56081.48) |

References

[1] R. Fagin, J. Halpern, and N. Megiddo. A logic for reasoning about probabilities. Information and Computation, 87(1/2):78-128, 1990.

[2] P. Hansen and B. Jaumard. Probabilistic satisfiability. In Algorithms for uncertainty and defeasible reasoning, volume 5 of Handbook of Defeasible Reasoning and Uncertainty Management Systems, pages 321-367. Kluwer Academic Publishers, 2001.

[3] P. Hansen and N. Mladenović. Variable neighborhood search: Principles and applications. European Journal of Operational Research, 130(3):449-467, May 2001.

[4] P. Hansen and N. Mladenović. Variable neighborhood search. In Handbook of Metaheuristics, volume 57 of International Series in Operations Research and Management Science, pages 145-184. Springer, 2003.

[5] P. Hansen and S. Perron. Merging the local and global approaches to probabilistic satisfiability. Technical report, GERAD, 2004.

[6] N. Mladenović and P. Hansen. Variable neighborhood search. Computers and Operations Research, 24(11):1097-1100, 1997.

[7] J. A. Nelder and R. Mead. A simplex method for function minimization. The Computer Journal, 7(4):308-313, 1965.

[8] Z. Ognjanović, U. Midić, and J. Kratica. A genetic algorithm for probabilistic SAT problem. In Artificial Intelligence and Soft Computing, volume 3070 of Lecture Notes in Computer Science, pages 462-467. Springer, 2004.

[9] Z. Ognjanović and M. Rašković. Some first-order probability logics. Theoretical Computer Science, 247(1-2):191-212, 2000.

Vienna, Austria, August 22-26, 2005
