NLA V.sundrapandian Chapter 11,12,13

Chapter 11

Givens Matrices and

Their Applications

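A Givens matrix is a matrix of the form

\[
J(i,j,\theta) =
\begin{bmatrix}
1 & \cdots & 0 & \cdots & 0 & \cdots & 0 \\
\vdots & \ddots & \vdots & & \vdots & & \vdots \\
0 & \cdots & c & \cdots & s & \cdots & 0 \\
\vdots & & \vdots & \ddots & \vdots & & \vdots \\
0 & \cdots & -s & \cdots & c & \cdots & 0 \\
\vdots & & \vdots & & \vdots & \ddots & \vdots \\
0 & \cdots & 0 & \cdots & 0 & \cdots & 1
\end{bmatrix}
\]

where $c^2 + s^2 = 1$. The entries $c$ occupy the positions $(i,i)$ and $(j,j)$, the entry $s$ occupies the position $(i,j)$, and the entry $-s$ occupies the position $(j,i)$.

The Givens matrix is named after the American numerical analyst Wallace Givens (1910-1993).

Since we can choose $c = \cos\theta$ and $s = \sin\theta$ for some $\theta$, a Givens matrix can be conveniently denoted by $J(i,j,\theta)$. Geometrically, the matrix $J(i,j,\theta)$ rotates a pair of coordinate axes (with the $i$th unit vector as the $x$-axis and the $j$th unit vector as the $y$-axis) through the given angle $\theta$ in the $(i,j)$ plane. This is why the Givens matrix is commonly known as a Givens rotation or plane rotation in the $(i,j)$ plane. Thus, when an $n$-vector $x = (x_1, x_2, \ldots, x_n)^T$ is premultiplied by a Givens rotation $J(i,j,\theta)$, only the $i$th and $j$th components of $x$ are affected.

Givens matrices are useful for creating zeros in specified positions of a vector or a matrix. Hence, Givens matrices have several applications, such as the QR factorization of a given matrix $A$ (square or rectangular), reducing $A$ to an upper Hessenberg form, finding the eigenvalues of $A$ (QR method), etc.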


Next, we establish that the Givens matrix $J(i,j,\theta)$ is orthogonal and hence well-conditioned. (Orthogonal matrices have condition number equal to unity under the 2-norm.)

Lemma 11.1 The Givens matrix $J(i,j,\theta)$ is orthogonal.

Proof: By definition, $c^2 + s^2 = 1$. Thus, it follows immediately that

\[ J(i,j,\theta)\, J(i,j,\theta)^T = I \]

Hence, $J(i,j,\theta)$ is an orthogonal matrix.
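The orthogonality claim can be checked by hand on the only nontrivial part of the product, the $2 \times 2$ block in rows and columns $i$ and $j$. The following worked step is a sketch added for illustration; outside this block the matrix coincides with the identity.

```latex
\[
\begin{bmatrix} c & s \\ -s & c \end{bmatrix}
\begin{bmatrix} c & -s \\ s & c \end{bmatrix}
=
\begin{bmatrix} c^2 + s^2 & -cs + sc \\ -sc + cs & s^2 + c^2 \end{bmatrix}
=
\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}
\]
```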

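In the next lemma, we present a simple algorithm for constructing a zero below the first coordinate of any 2-vector via premultiplication by a Givens matrix.

Lemma 11.2 If $x = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$ is a nonzero 2-vector, then the Givens rotation

\[ J(1,2,\theta) = \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \]

where

\[ c = \frac{x_1}{\sqrt{x_1^2 + x_2^2}} \quad \text{and} \quad s = \frac{x_2}{\sqrt{x_1^2 + x_2^2}} \qquad (11.1) \]

is such that

\[ J(1,2,\theta)\, x = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Proof: A direct calculation yields

\[ J(1,2,\theta)\, x = \begin{bmatrix} c x_1 + s x_2 \\ -s x_1 + c x_2 \end{bmatrix} \]

Note that

\[ c x_1 + s x_2 = \frac{x_1^2 + x_2^2}{\sqrt{x_1^2 + x_2^2}} = \sqrt{x_1^2 + x_2^2} \]

and

\[ -s x_1 + c x_2 = \frac{-x_2 x_1 + x_1 x_2}{\sqrt{x_1^2 + x_2^2}} = 0 \]

Thus,

\[ J(1,2,\theta)\, x = \begin{bmatrix} \sqrt{x_1^2 + x_2^2} \\ 0 \end{bmatrix} \]

This completes the proof.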

The procedure as stated in Lemma 11.2 for computing c and s might yield some overflow or underflow. However, this possibility can be quite easily overcome by the following simple rearrangement of the formula (11.1).

Algorithm 11.1 (Computing the Givens Parameters) Given a 2-vector $x = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$, the following algorithm computes the Givens parameters c and s for introducing a zero in the second component of x.

Case 1: When $|x_2| \ge |x_1|$, we proceed as follows:

\[ t = \frac{x_1}{x_2}, \qquad s = \frac{1}{\sqrt{1 + t^2}}, \qquad c = st \]

Case 2: When $|x_2| < |x_1|$, we proceed as follows:

\[ t = \frac{x_2}{x_1}, \qquad c = \frac{1}{\sqrt{1 + t^2}}, \qquad s = ct \]

It may be noted that the algorithm requires about four flops and one square root.
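The rearranged formulas can be sketched in a few lines of Python. This is a minimal illustration, not the book's own code: the function name `givens_params` and the convention of returning c = 1, s = 0 when the second component is already zero are assumptions added here.

```python
import math

def givens_params(x1, x2):
    """Return (c, s) with c*c + s*s = 1 such that -s*x1 + c*x2 = 0."""
    if x2 == 0.0:
        # Assumed convention: nothing to annihilate, use the identity rotation.
        return 1.0, 0.0
    if abs(x2) >= abs(x1):
        t = x1 / x2                       # |t| <= 1, so 1 + t*t cannot overflow
        s = 1.0 / math.sqrt(1.0 + t * t)
        c = s * t
    else:
        t = x2 / x1
        c = 1.0 / math.sqrt(1.0 + t * t)
        s = c * t
    return c, s

# The naive formula (11.1) would overflow when squaring 1e200; this does not.
c_big, s_big = givens_params(1e200, 1e200)
```

Because the ratio t never exceeds 1 in modulus, the squares formed inside the square root stay bounded, which is the point of the rearrangement.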

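Next, we illustrate Algorithm 11.1 with some examples.

EXAMPLE 11.1 Let x be the given 2-vector [entries illegible in the scan]. Since $|x_2| < |x_1|$, we proceed as follows:

\[ t = \frac{x_2}{x_1}, \qquad c = \frac{1}{\sqrt{1 + t^2}}, \qquad s = ct \]

[The numerical values of t, c, s and the resulting matrix $J(1,2,\theta)$ are illegible in the scan.]

EXAMPLE 11.2 Let x be the given 2-vector [entries illegible in the scan]. Since $|x_2| \ge |x_1|$, we proceed as follows:

\[ t = \frac{x_1}{x_2}, \qquad s = \frac{1}{\sqrt{1 + t^2}}, \qquad c = st \]

[The numerical values of t, c, s and the resulting matrix $J(1,2,\theta)$ are illegible in the scan.]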


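Next, we show how the premultiplication of a matrix A by a Givens matrix can be carried out without computing the Givens matrix explicitly. Similar remarks hold for postmultiplication of a matrix A by a Givens matrix.

Algorithm 11.2 (Computation of Premultiplying by a Givens Matrix) Given a matrix A, Givens parameters c and s, and the indices i and j, where $i < j$, the product $J(i,j,\theta)A$ differs from A only in rows i and j. For each column k, the two affected entries are overwritten as

\[ a_{ik} \leftarrow c\, a_{ik} + s\, a_{jk}, \qquad a_{jk} \leftarrow -s\, a_{ik} + c\, a_{jk} \]

where the right-hand sides use the old values of $a_{ik}$ and $a_{jk}$.

[Part of the text following Algorithm 11.2 is missing from the scan.]

To create a zero in the (j,i) position of a matrix A, where $j > i$, one way to do this is to construct the Givens rotation matrix $J(i,j,\theta)$ affecting the ith and jth rows only, such that $J(i,j,\theta)A$ will have a zero in the (j,i) position. Explicitly, the required procedure is as follows: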

Algorithm 11.3 (Creating Zeros in a Specified Position of a Matrix Using Givens Rotations) Suppose we are given an $n \times n$ real matrix A, and we wish to create a zero in the (j,i)th position of A, where $j > i$. The following algorithm overwrites A by $J(i,j,\theta)A$ such that the latter has a zero in the (j,i)th position.

Step 1: Find $c = \cos\theta$, $s = \sin\theta$ such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{ii} \\ a_{ji} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Step 2: Overwrite A with $J(i,j,\theta)A$ by forming the product implicitly as specified in Algorithm 11.2.

Next, we illustrate the above procedure with a few examples.
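The two steps of Algorithm 11.3 can be sketched as follows. This is an illustrative Python version under stated assumptions: matrices are lists of lists with 0-based indices, and the names `givens_params`, `apply_givens_rows` and `zero_entry` are hypothetical helpers introduced here, not names from the text.

```python
import math

def givens_params(x1, x2):
    """Givens parameters (c, s) that annihilate x2 against x1."""
    if x2 == 0.0:
        return 1.0, 0.0                   # assumed convention: identity rotation
    if abs(x2) >= abs(x1):
        t = x1 / x2
        s = 1.0 / math.sqrt(1.0 + t * t)
        return s * t, s
    t = x2 / x1
    c = 1.0 / math.sqrt(1.0 + t * t)
    return c, c * t

def apply_givens_rows(A, i, j, c, s):
    """Overwrite A with J(i,j,theta) A; only rows i and j are touched."""
    for k in range(len(A[i])):
        a_ik, a_jk = A[i][k], A[j][k]     # keep the old values
        A[i][k] = c * a_ik + s * a_jk
        A[j][k] = -s * a_ik + c * a_jk

def zero_entry(A, i, j):
    """Create a zero in position (j, i), j > i, by rotating rows i and j."""
    c, s = givens_params(A[i][i], A[j][i])
    apply_givens_rows(A, i, j, c, s)
    A[j][i] = 0.0                         # remove the rounding residue
    return c, s

A = [[1.0, 2.0, -1.0],
     [2.0, 1.0, 3.0],
     [1.0, 5.0, 4.0]]
c, s = zero_entry(A, 0, 1)                # zero in the (2,1) position, 1-based
```

The rotation matrix itself is never formed; each call costs a constant number of operations per column of A.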

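EXAMPLE 11.7 Let A be the given $3 \times 3$ matrix [entries illegible in the scan]. In this example, we create a zero in the (2,1)th position of A using $J(1,2,\theta)$.

Step 1: Find c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{11} \\ a_{21} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Step 2: Form

\[ J(1,2,\theta) = \begin{bmatrix} c & s & 0 \\ -s & c & 0 \\ 0 & 0 & 1 \end{bmatrix} \]

and the product $J(1,2,\theta)A$, which has a zero in the (2,1)th position. [The numerical entries are illegible in the scan.]

EXAMPLE 11.8 Let A be the given $3 \times 3$ matrix [entries illegible in the scan]. In this example, we create a zero in the (3,2)th position of A using $J(2,3,\theta)$.

Step 1: Find c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{22} \\ a_{32} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Step 2: Form

\[ J(2,3,\theta) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & c & s \\ 0 & -s & c \end{bmatrix} \]

and the product $J(2,3,\theta)A$, which has a zero in the (3,2)th position. [The numerical entries are illegible in the scan.]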

11.2 GIVENS QR FACTORIZATION

From the algorithms presented in Section 11.1 for creating zeros in specified positions of a given vector or a matrix, it should be clear that Givens rotation matrices can also be applied, like Householder matrices, for finding the QR factorization of a given matrix. Since both Householder and Givens matrices are orthogonal, both methods can be shown to be numerically stable. The Givens method, however, is almost twice as expensive as the Householder method in finding the QR factorization of a given matrix. Despite this cost, QR factorization using Givens rotations appears to be particularly useful in QR iterations for eigenvalue computations as well as in the solution of linear systems with structured coefficient matrices such as Toeplitz matrices.

In this section, we present the basic algorithm for constructing the QR factorization of an $m \times n$ real matrix A, where $m \ge n$, using the Givens method.

Theorem 11.1 (Givens QR Factorization Theorem) Let A be an $m \times n$ real matrix with $m \ge n$, and let $p = \min(n, m-1)$. Then there exist p orthogonal matrices $Q_1, Q_2, \ldots, Q_p$, each a product of Givens matrices, such that if

\[ Q = Q_1^T Q_2^T \cdots Q_p^T \]

then we have

\[ A = QR \]

where R is a matrix of the same order as A with zeros below the diagonal.

Outline of the Proof: The basic idea of this construction is analogous to the Householder QR factorization outlined in the proof of Theorem 10.4.

Let $A^{(0)} = A$.

We compute the matrices $Q_1, Q_2, \ldots, Q_p$ using Givens rotations such that

\[ A^{(1)} = Q_1 A^{(0)} \]

has zeros below the (1,1) entry in the first column,

\[ A^{(2)} = Q_2 A^{(1)} \]

has zeros below the (2,2) entry in the second column, and so on. Each $Q_i$ is generated as a product of Givens rotations. One way of defining $Q_i$ is as follows:

\[ Q_1 = J(1,m,\theta)\, J(1,m-1,\theta) \cdots J(1,2,\theta) \]

\[ Q_2 = J(2,m,\theta)\, J(2,m-1,\theta) \cdots J(2,3,\theta) \]

and so on. By construction,

\[ R = A^{(p)} = Q_p A^{(p-1)} = Q_p Q_{p-1} A^{(p-2)} = \cdots = Q_p Q_{p-1} \cdots Q_1 A = Q^T A \]

where $p = \min(n, m-1)$.

We now have

\[ A = QR \]

with $Q^T = Q_p Q_{p-1} \cdots Q_2 Q_1$ and R having the same order as A with zeros below the main diagonal. This completes the proof.

Next, we state the algorithm for QR factorization of matrices using Givens rotations.

Algorithm 11.4 (Givens QR Factorization of Matrices) Suppose we are given an $m \times n$ real matrix A with $m \ge n$. Define $p = \min(n, m-1)$. The following algorithm computes the Givens matrices defining an orthogonal matrix Q and a matrix R as stated in Theorem 11.1. The algorithm overwrites A with R.

For k = 1, 2, ..., p do
  For l = k+1, ..., m do
    (1) Find c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{kk} \\ a_{lk} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

    (2) Save the indices k and l and the numbers c and s.
    (3) Overwrite A with $J(k,l,\theta)A$ (using Algorithm 11.2).
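Algorithm 11.4 can be sketched in Python as below. This is a hedged illustration: instead of saving the indices and parameters as in step (2), the sketch accumulates $Q^T$ explicitly, which is a design choice made here for easy checking. The names `givens_params` and `givens_qr` are assumptions, and matrices are plain lists of lists with 0-based indices.

```python
import math

def givens_params(x1, x2):
    """Givens parameters (c, s) that annihilate x2 against x1."""
    if x2 == 0.0:
        return 1.0, 0.0                   # assumed convention: identity rotation
    if abs(x2) >= abs(x1):
        t = x1 / x2
        s = 1.0 / math.sqrt(1.0 + t * t)
        return s * t, s
    t = x2 / x1
    c = 1.0 / math.sqrt(1.0 + t * t)
    return c, c * t

def givens_qr(A):
    """Return (Q, R) with A = Q R, Q orthogonal (m x m), R zero below the diagonal."""
    m, n = len(A), len(A[0])
    R = [row[:] for row in A]
    # Qt accumulates the product of all rotations, i.e. Q^T.
    Qt = [[1.0 if r == q else 0.0 for q in range(m)] for r in range(m)]
    for k in range(min(n, m - 1)):
        for l in range(k + 1, m):
            c, s = givens_params(R[k][k], R[l][k])
            for M in (R, Qt):             # rotate rows k and l of both matrices
                for col in range(len(M[k])):
                    u, v = M[k][col], M[l][col]
                    M[k][col] = c * u + s * v
                    M[l][col] = -s * u + c * v
            R[l][k] = 0.0                 # remove the rounding residue
    Q = [[Qt[q][r] for q in range(m)] for r in range(m)]   # transpose of Qt
    return Q, R

A = [[1.0, 2.0, -1.0],
     [2.0, 1.0, 3.0],
     [1.0, 5.0, 4.0]]
Q, R = givens_qr(A)
```

Note that rotations alone do not force the diagonal of R to be positive; the sign of each diagonal entry depends on the data.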

Remark 11.2 It can be easily seen that the Givens QR factorization of matrices defined in the above algorithm requires $2n^2(m - n/3)$ flops. This count does not include the computation of Q. Thus, it is clear that the Givens QR factorization algorithm is computationally about twice as expensive as the Householder QR factorization algorithm.

Remark 11.3 The Givens QR factorization algorithm is numerically stable (Wilkinson 1965). In the notation of backward stability of numerical algorithms, it can be shown that the computed matrices $\hat{Q}$ and $\hat{R}$ satisfy

\[ \hat{R} = \hat{Q}^T (A + E) \]

where $\|E\|_F$ is bounded by a multiple of $\mu \|A\|_F$ that depends only on n (11.2) [the explicit constant in the bound is illegible in the scan], and $\mu$ is the machine precision. The bound for the round-off error given in (11.2) essentially establishes that the Givens QR factorization algorithm is numerically stable.

By the Givens and Householder QR Factorization Theorems, we know that the QR factorization of a matrix always exists and that this factorization may be obtained in different ways. It is, therefore, natural to wonder whether the QR factorization of a matrix is unique. We discuss the uniqueness of QR factorization of matrices for square and, in general, rectangular matrices.

Theorem 11.2 (QR Uniqueness Theorem for Square Matrices) Let A be an $n \times n$ nonsingular matrix. Then there exists a unique $n \times n$ orthogonal matrix Q and a unique $n \times n$ upper triangular matrix R with positive diagonal entries such that

\[ A = QR \]

Proof: Let

\[ A = QR = \tilde{Q}\tilde{R} \qquad (11.3) \]

be two QR factorizations of A, where $Q$ and $\tilde{Q}$ are orthogonal matrices and $R$ and $\tilde{R}$ are upper triangular matrices having positive diagonal entries.

We recall that an orthogonal matrix O is always nonsingular because $\det(O) = \pm 1$. (This follows because $O^{-1} = O^T$ and so $OO^T = I$, which gives $[\det(O)]^2 = 1$.) Because $A$, $Q$ and $\tilde{Q}$ are nonsingular matrices, it is immediate that the upper triangular matrices $R$ and $\tilde{R}$ are nonsingular matrices. In fact, we can express $R$ and $\tilde{R}$ as

\[ R = Q^T A \quad \text{and} \quad \tilde{R} = \tilde{Q}^T A \]

and we know that a product of nonsingular matrices is nonsingular.

From (11.3), it follows that

\[ V = \tilde{Q}^T Q = \tilde{R} R^{-1} \qquad (11.4) \]

so that

\[ V^T V = Q^T \tilde{Q} \tilde{Q}^T Q = I \]

Hence, V is an orthogonal matrix. Note also that V is upper triangular because both $\tilde{R}$ and $R^{-1}$ are upper triangular matrices. Since $V^T V = I$, equating entries on both sides, V must be a diagonal matrix with $\pm 1$ as its diagonal entries. Thus,

\[ \tilde{R} R^{-1} = \begin{bmatrix} d_1 & & \\ & \ddots & \\ & & d_n \end{bmatrix} \quad \text{with } d_i = \pm 1,\ i = 1, 2, \ldots, n \]

Since $R$ and $\tilde{R}$ are upper triangular matrices with positive diagonal entries, it is immediate that $d_i = 1$ for each $i = 1, 2, \ldots, n$. Hence, it follows that

\[ \tilde{R} R^{-1} = I \]

or equivalently that

\[ \tilde{R} = R \]

Substituting the above into (11.4), it follows immediately that

\[ \tilde{Q}^T Q = I \]

or equivalently that

\[ Q = \tilde{Q} \]

This completes the proof.
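The role of the positive diagonal in the uniqueness statement can be illustrated with a short sketch. Any factorization A = QR with nonsingular upper triangular R can be brought to the normalized form by multiplying a row of R and the matching column of Q by -1, which inserts a diagonal matrix D with $D^2 = I$ between the factors. The function name `normalize_qr` and the $2 \times 2$ data are assumptions made for illustration only.

```python
def normalize_qr(Q, R):
    """Return (Q', R') with Q' R' = Q R and every diagonal entry of R' positive.

    Assumes R is upper triangular with nonzero diagonal entries.
    """
    Qn = [row[:] for row in Q]
    Rn = [row[:] for row in R]
    for i in range(len(Rn[0])):
        if Rn[i][i] < 0:
            Rn[i] = [-v for v in Rn[i]]   # scale row i of R by -1
            for r in range(len(Qn)):
                Qn[r][i] = -Qn[r][i]      # scale column i of Q by -1
    return Qn, Rn

# Illustrative data: Q is orthogonal, R has a negative diagonal entry.
Q = [[0.6, 0.8],
     [0.8, -0.6]]
R = [[5.0, 1.0],
     [0.0, -2.0]]
Qn, Rn = normalize_qr(Q, R)
```

Two different routes to a QR factorization (for instance Givens and Householder) should therefore agree after this normalization whenever A is nonsingular.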

Next, we generalize the QR uniqueness theorem to overdetermined matrices having full column rank.

Theorem 11.3 (QR Uniqueness Theorem - General Case) Let A be an $m \times n$ ($m \ge n$) matrix with full column rank. Then there exists a unique $m \times n$ matrix Q with orthonormal columns and a unique $n \times n$ upper triangular matrix R with positive diagonal entries such that

\[ A = QR \]

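Proof: The proof is carried out using an important result (Theorem 1.53) for positive definite matrices. First, we establish that $A^T A$ is a positive definite matrix.

We note that $A^T A$ is positive semi-definite because for any $x \in \mathbb{R}^n$,

\[ x^T A^T A x = (Ax)^T Ax = \|Ax\|_2^2 \ge 0 \]

Since the columns of A are linearly independent, $Ax = 0$ only if $x = 0$, so that $x^T A^T A x > 0$ for every $x \ne 0$. Hence, $A^T A$ is positive definite. [Part of the argument is missing from the scan; from the use made of it below, the result quoted here is that a positive definite matrix has a unique factorization of the following form.] Therefore, we have

\[ A^T A = R^T R \qquad (11.5) \]

where R is a uniquely determined upper triangular matrix with positive diagonal entries.

Suppose that

\[ A = QR = \tilde{Q}\tilde{R} \qquad (11.6) \]

where $Q$ and $\tilde{Q}$ are $m \times n$ matrices having orthonormal columns, and $R$ and $\tilde{R}$ are $n \times n$ upper triangular matrices with positive diagonal entries.

Note that

\[ A^T A = R^T Q^T Q R = R^T R \]

and similarly,

\[ A^T A = \tilde{R}^T \tilde{Q}^T \tilde{Q} \tilde{R} = \tilde{R}^T \tilde{R} \]

From the uniqueness result expressed in (11.5), it is now immediate that

\[ R^T = \tilde{R}^T \]

or equivalently that

\[ R = \tilde{R} \]

Now, as R is nonsingular, it is immediate from (11.6) that

\[ Q = \tilde{Q} \]

This completes the proof.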

Next, we illustrate the Givens QR factorization of a matrix with an example.

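EXAMPLE 11.9: Using Givens rotations, find the QR factorization of the matrix

\[ A = \begin{bmatrix} 1 & 2 & -1 \\ 2 & 1 & 3 \\ 1 & 5 & 4 \end{bmatrix} \]

Solution: Let $A^{(0)}$ be A.

Step 1: We find the matrix $Q_1$ using Givens rotations such that

\[ A^{(1)} = Q_1 A \]

has zeros below the (1,1) entry in the first column.

By the Givens QR factorization algorithm, we know that

\[ Q_1 = J(1,3,\theta)\, J(1,2,\theta) \]

To find $J(1,2,\theta)$, we must determine c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{11} \\ a_{21} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Here, $a_{11} = 1$ and $a_{21} = 2$. Since $|a_{21}| \ge |a_{11}|$, Algorithm 11.1 gives

\[ t = \frac{a_{11}}{a_{21}} = 0.5, \qquad s = \frac{1}{\sqrt{1 + t^2}} = 0.8944 \quad \text{and} \quad c = st = 0.4472 \]

Therefore,

\[ J(1,2,\theta) = \begin{bmatrix} c & s & 0 \\ -s & c & 0 \\ 0 & 0 & 1 \end{bmatrix} = \begin{bmatrix} 0.4472 & 0.8944 & 0 \\ -0.8944 & 0.4472 & 0 \\ 0 & 0 & 1 \end{bmatrix} \]

Note that

\[ J(1,2,\theta) A = \begin{bmatrix} 2.2361 & 1.7889 & 2.2361 \\ 0 & -1.3416 & 2.2361 \\ 1 & 5 & 4 \end{bmatrix} \]

(For programming purposes, $J(1,2,\theta)A$ may be written over A.)

Next, we must find $J(1,3,\theta)$. For this purpose, we find c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{11} \\ a_{31} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Here, $a_{11} = 2.2361$ and $a_{31} = 1$, so that

\[ t = \frac{a_{31}}{a_{11}} = 0.4472, \qquad c = \frac{1}{\sqrt{1 + t^2}} = 0.9129 \quad \text{and} \quad s = ct = 0.4082 \]

Therefore,

\[ J(1,3,\theta) = \begin{bmatrix} c & 0 & s \\ 0 & 1 & 0 \\ -s & 0 & c \end{bmatrix} = \begin{bmatrix} 0.9129 & 0 & 0.4082 \\ 0 & 1 & 0 \\ -0.4082 & 0 & 0.9129 \end{bmatrix} \]

Thus, we obtain

\[ Q_1 = J(1,3,\theta)\, J(1,2,\theta) = \begin{bmatrix} 0.4082 & 0.8165 & 0.4082 \\ -0.8944 & 0.4472 & 0 \\ -0.1826 & -0.3651 & 0.9129 \end{bmatrix} \]

and

\[ A^{(1)} = Q_1 A = \begin{bmatrix} 2.4495 & 3.6742 & 3.6742 \\ 0 & -1.3416 & 2.2361 \\ 0 & 3.8341 & 2.7386 \end{bmatrix} \]

(Note that $A^{(1)}$ has zeros below the (1,1) entry in the first column.)

Step 2: Here, we find the matrix $Q_2$ using a Givens rotation so that

\[ A^{(2)} = Q_2 A^{(1)} \]

has zeros below the (2,2) entry in the second column.

Here, $a_{22} = -1.3416$ and $a_{32} = 3.8341$. Since $|a_{32}| \ge |a_{22}|$, we have

\[ t = \frac{a_{22}}{a_{32}} = -0.3499, \qquad s = \frac{1}{\sqrt{1 + t^2}} = 0.9439 \quad \text{and} \quad c = st = -0.3303 \]

Therefore, we have

\[ Q_2 = J(2,3,\theta) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & c & s \\ 0 & -s & c \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & -0.3303 & 0.9439 \\ 0 & -0.9439 & -0.3303 \end{bmatrix} \]

We find that

\[ R = A^{(2)} = Q_2 A^{(1)} = \begin{bmatrix} 2.4495 & 3.6742 & 3.6742 \\ 0 & 4.0620 & 1.8464 \\ 0 & 0 & -3.0151 \end{bmatrix} \]

and also that

\[ Q = Q_1^T Q_2^T = \begin{bmatrix} 0.4082 & 0.1231 & 0.9045 \\ 0.8165 & -0.4924 & -0.3015 \\ 0.4082 & 0.8615 & -0.3015 \end{bmatrix} \]

Thus,

\[ A = QR \]

where the matrices Q and R are as described above.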

11.3 GIVENS REDUCTION TO HESSENBERG FORM

Using the algorithms presented in Section 11.1 for creating zeros in specified positions of a given vector or a matrix, we now apply Givens rotation matrices, like Householder matrices, to reduce an arbitrary $n \times n$ matrix to its upper Hessenberg form. Since both Householder and Givens matrices are orthogonal, both methods can be shown to be numerically stable. The Givens method, however, is almost twice as expensive as the Householder method in reducing a given square matrix to its upper Hessenberg form. Despite this cost, Hessenberg reduction using Givens rotations appears to be particularly useful in the Hessenberg QR iteration method for eigenvalue computations, signal processing applications and also in control theory.

In this section, we present the basic algorithm for reducing an arbitrary $n \times n$ real matrix to upper Hessenberg form using the Givens method.

Theorem 11.4 (Givens Reduction to Hessenberg Form) Let A be any $n \times n$ real matrix. Then there exists an orthogonal similarity transformation P, which reduces A to an upper Hessenberg matrix

\[ H_u = P A P^T \]

where P is a product of Givens matrices.


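Outline of the Proof:

Step 1: Here, Givens rotations $J(2,3,\theta), J(2,4,\theta), \ldots, J(2,n,\theta)$ are computed successively so that with

\[ P_1 = J(2,n,\theta)\, J(2,n-1,\theta) \cdots J(2,4,\theta)\, J(2,3,\theta) \]

we have

\[ P_1 A P_1^T = A^{(1)} = \begin{bmatrix} * & * & * & \cdots & * \\ * & * & * & \cdots & * \\ 0 & * & * & \cdots & * \\ \vdots & \vdots & \vdots & & \vdots \\ 0 & * & * & \cdots & * \end{bmatrix} \]

By construction, the matrix $A^{(1)}$ has zeros below the subdiagonal entry in the first column.

Step 2: Here, Givens rotations $J(3,4,\theta), J(3,5,\theta), \ldots, J(3,n,\theta)$ are computed successively so that with

\[ P_2 = J(3,n,\theta)\, J(3,n-1,\theta) \cdots J(3,4,\theta) \]

we have

\[ P_2 A^{(1)} P_2^T = A^{(2)} = \begin{bmatrix} * & * & * & \cdots & * \\ * & * & * & \cdots & * \\ 0 & * & * & \cdots & * \\ 0 & 0 & * & \cdots & * \\ \vdots & \vdots & \vdots & & \vdots \\ 0 & 0 & * & \cdots & * \end{bmatrix} \]

By construction, the matrix $A^{(2)}$ has zeros below the subdiagonal entries in the first and second columns.

Continuing in this manner, the matrix $A^{(i)}$ will have zeros below the subdiagonal entries in the first i columns.

At the end of the $(n-2)$th step, the matrix $A^{(n-2)}$ is the upper Hessenberg matrix $H_u$. We note that the orthogonal similarity matrix P is a product of Givens matrices given by

\[ P = P_{n-2} P_{n-3} \cdots P_2 P_1 \]

where

\[ P_i = J(i+1,n,\theta)\, J(i+1,n-1,\theta) \cdots J(i+1,i+2,\theta) \]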

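Algorithm 11.5 (Givens Hessenberg Reduction) Let A be any $n \times n$ real matrix. The following algorithm overwrites A with $PAP^T = H_u$, where $H_u$ is an upper Hessenberg matrix. [The steps of the algorithm are missing from the scan.]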
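The reduction described in the outline of the proof can be sketched in Python as follows. This is an illustrative reconstruction from that outline, not the book's printed algorithm: each rotation is applied to two rows (premultiplication by J) and then to the same two columns (postmultiplication by $J^T$), so the result is similar to A. The function names and the $3 \times 3$ test matrix are assumptions chosen for illustration.

```python
import math

def givens_params(x1, x2):
    """Givens parameters (c, s) that annihilate x2 against x1."""
    if x2 == 0.0:
        return 1.0, 0.0                   # assumed convention: identity rotation
    if abs(x2) >= abs(x1):
        t = x1 / x2
        s = 1.0 / math.sqrt(1.0 + t * t)
        return s * t, s
    t = x2 / x1
    c = 1.0 / math.sqrt(1.0 + t * t)
    return c, c * t

def givens_hessenberg(A):
    """Return H = P A P^T in upper Hessenberg form (0-based indices)."""
    n = len(A)
    H = [row[:] for row in A]
    for i in range(n - 2):                # column i gets zeros below its subdiagonal
        p = i + 1                         # pivot row (the subdiagonal entry)
        for q in range(i + 2, n):
            c, s = givens_params(H[p][i], H[q][i])
            for k in range(n):            # H <- J H: rotate rows p and q
                u, v = H[p][k], H[q][k]
                H[p][k] = c * u + s * v
                H[q][k] = -s * u + c * v
            for k in range(n):            # H <- H J^T: rotate columns p and q
                u, v = H[k][p], H[k][q]
                H[k][p] = c * u + s * v
                H[k][q] = -s * u + c * v
            H[q][i] = 0.0                 # remove the rounding residue
    return H

A = [[1.0, 5.0, 2.0],
     [4.0, -3.0, -1.0],
     [2.0, -1.0, 3.0]]
H = givens_hessenberg(A)
```

The column rotation does not disturb the zero just created, because column i is not among the two rotated columns.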

Remark 11.4 The Hessenberg reduction of matrices using the Givens method requires about [coefficient illegible in the scan] $n^3$ flops, while the Hessenberg reduction of matrices using Householder's method requires about [coefficient illegible in the scan] $n^3$ flops. Thus, it is clear that the Givens reduction to Hessenberg form is about twice as expensive as the Householder reduction to Hessenberg form.

Remark 11.5 It can be shown that the Givens reduction to Hessenberg form is a numerically stable algorithm. This is clear because the similarity transformation used is orthogonal, being just a product of Givens (orthogonal) matrices.

[The statement of Example 11.10 is missing from the scan; its solution continues below.]

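Here, $a_{21} = 4$ and $a_{31} = 2$, so that

\[ t = \frac{a_{31}}{a_{21}} = 0.5, \qquad c = \frac{1}{\sqrt{1 + t^2}} = 0.8944 \quad \text{and} \quad s = ct = 0.4472 \]

Therefore,

\[ P = P_1 = J(2,3,\theta) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & c & s \\ 0 & -s & c \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 0.8944 & 0.4472 \\ 0 & -0.4472 & 0.8944 \end{bmatrix} \]

Note that

\[ H_u = PAP^T = \begin{bmatrix} 1 & 5.3666 & 0.4472 \\ 4.4721 & -2.6000 & 1.8000 \\ 0 & 1.8000 & 2.6000 \end{bmatrix} \]

which is in upper Hessenberg form.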

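EXAMPLE 11.11 Using Givens method, reduce the following matrix to upper Hessenberg form:

[The entries of the $4 \times 4$ matrix A are illegible in the scan.]

Step 1: Here, we find $P_1$ so that $P_1 A P_1^T = A^{(1)}$ has zeros below the subdiagonal entry in the first column. Note that

\[ P_1 = J(2,4,\theta)\, J(2,3,\theta) \]

First, we find $J(2,3,\theta)$. For this, we must determine c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{21} \\ a_{31} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Here, $a_{21} = 3$ and $a_{31} = 4$, so that $t = a_{21}/a_{31} = 0.75$, $s = 0.8$ and $c = st = 0.6$. Hence,

\[ J(2,3,\theta) = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & c & s & 0 \\ 0 & -s & c & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 0.6 & 0.8 & 0 \\ 0 & -0.8 & 0.6 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \]

[The entries of the product $J(2,3,\theta)\, A\, J(2,3,\theta)^T$ are illegible in the scan.]

Next, we find $J(2,4,\theta)$. For this, we find c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{21} \\ a_{41} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Here, $a_{21} = 5$ and $a_{41} = 3$. Therefore, $t = a_{41}/a_{21} = 0.6$, $c = 0.8575$ and $s = ct = 0.5145$, so that

\[ J(2,4,\theta) = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & c & 0 & s \\ 0 & 0 & 1 & 0 \\ 0 & -s & 0 & c \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 0.8575 & 0 & 0.5145 \\ 0 & 0 & 1 & 0 \\ 0 & -0.5145 & 0 & 0.8575 \end{bmatrix} \]

[The entries of $A^{(1)} = P_1 A P_1^T$ are only partly legible in the scan; its first column is $(1.0000,\ 5.8310,\ 0,\ 0)^T$.]

Step 2: In this last step, we find $P_2 = J(3,4,\theta)$. For this, we must find c and s such that

\[ \begin{bmatrix} c & s \\ -s & c \end{bmatrix} \begin{bmatrix} a_{32} \\ a_{42} \end{bmatrix} = \begin{bmatrix} * \\ 0 \end{bmatrix} \]

Here, $t = 0.7709$, $c = 0.7920$ and $s = ct = 0.6105$. Therefore, we have

\[ P_2 = J(3,4,\theta) = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & c & s \\ 0 & 0 & -s & c \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 0.7920 & 0.6105 \\ 0 & 0 & -0.6105 & 0.7920 \end{bmatrix} \]

and

\[ P = P_2 P_1 = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 0.5145 & 0.6860 & 0.5145 \\ 0 & -0.8221 & 0.2239 & 0.5235 \\ 0 & 0.2439 & -0.6923 & 0.6791 \end{bmatrix} \]

The matrix $H_u = PAP^T$ is in upper Hessenberg form. [Its entries are only partly legible in the scan.]

PROBLEM SET

1. Let x be the given vector [entries illegible in the scan]. Find a Givens matrix G such that Gx has the prescribed zero pattern [illegible in the scan].

2. Let x be the given vector [entries illegible in the scan]. Find a Givens matrix G such that Gx has the prescribed zero pattern [illegible in the scan].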

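3. Let x be the given vector [entries illegible in the scan]. Find a Givens matrix G such that Gx has the prescribed zero pattern [illegible in the scan].

4. Let x be the given vector [entries illegible in the scan]. Find a Givens matrix G such that Gx has the prescribed zero pattern [illegible in the scan].

5. Let $x = (1, 2, -3)^T$. Find a Givens matrix G such that Gx has the prescribed zero pattern [illegible in the scan].

6. Let $x = (2, 3, 1)^T$. Find a Givens matrix G such that Gx has the prescribed zero pattern [illegible in the scan].

7. Using Givens rotations, find the Givens QR factorization of the matrix [entries illegible in the scan].

8. Using Givens rotations, find the Givens QR factorization of the matrix [entries illegible in the scan].

9. Using Givens rotations, reduce the following matrix to Hessenberg form: [entries illegible in the scan].

10. Using Givens rotations, reduce the following matrix to Hessenberg form: [the first row is only partly legible in the scan; the second and third rows are $(-2, 3, 4)$ and $(1, -4, 2)$].

11. Using Givens rotations, reduce the following matrix to Hessenberg form: [entries of the $4 \times 4$ matrix are only partly legible in the scan; the last row is $(4, -2, 3, 1)$].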

Chapter 12

Numerical Methods for Matrix

Eigenvalue Problem

In this chapter, we study various numerical methods for finding all or selected eigenvalues of real matrices. We note that the matrix eigenvalue problem is one of the very important problems in various disciplines of science and engineering. The numerical methods for solving this problem are important for the following reason. The elementary definition of a matrix eigenvalue $\lambda$ is simply that $\lambda$ be a root of the characteristic equation of the associated matrix A:

\[ \det(A - \lambda I) = 0 \]

We note that the above definition should be taken only as a definition, and that it is not a practical approach by any means whatsoever.

A standard practical approach for solving the eigenvalue problem is the QR method. For many applications, we do not need to know the full set of eigenvalues of A, and we merely need the dominant and subdominant eigenvalues and the associated eigenvectors of A. For this type of problem, we use the power method and inverse iteration algorithms.

This chapter is organized in seven sections. In Section 12.1, we state the basic theorems on eigenvalues and eigenvectors, which are important in applications. We need many of the results from this section for the numerical methods presented in the subsequent sections. In Section 12.2, we discuss the power method, a classical numerical method for finding the dominant eigenvalue and the corresponding dominant eigenvector of a real diagonalizable matrix A. The power method has a lot of importance in linear control systems theory, as the dominant eigenvalue and the corresponding eigenvector of the system matrix of a given autonomous linear system completely characterize the long-term or steady-state behaviour of all solutions.

In Section 12.3, we discuss the inverse iteration, which is a very efficient numerical method owing to Wilkinson (1965) for computing the eigenvector x of a real diagonalizable matrix A when a good approximation $\sigma$ is available for the associated eigenvalue $\lambda$ of A. In Section 12.4, we discuss an efficient numerical method called the Rayleigh quotient method to compute an eigenvalue $\lambda$ of a real symmetric matrix A when a good approximation to the associated eigenvector is available. In Section 12.5, we discuss the computation of the subdominant eigenvalue and associated eigenvector of a real diagonalizable matrix A using a numerically stable procedure called the Householder deflation. In Section 12.6, we detail Jacobi's method for finding the eigenvalues and eigenvectors of a real symmetric matrix. Jacobi's method is suitable for symmetric matrices of order $n < 20$. Jacobi's method tends to be very slow for matrices of order $n > 20$. Jacobi's method uses orthogonal similarity transformations involving rotation matrices. Finally, in Section 12.7, we discuss the QR method and related methods such as the Hessenberg QR method, which are very useful in applications for finding all the eigenvalues of a matrix.

12.1 BASIC THEOREMS ON EIGENVALUES AND EIGENVECTORS

In this section, we discuss the basic theorems on eigenvalues and eigenvectors, which are used in the analysis of the various numerical methods in this chapter for estimating the eigenvalues and eigenvectors of real, square matrices.

First, we recall the definition of eigenvalues and eigenvectors.

Definition 12.1 If A is a real $n \times n$ matrix, then we say that $\lambda$ is an eigenvalue of A if there exists a nonzero n-vector x such that the following equation is satisfied:

\[ Ax = \lambda x \]

In this case, x is called a right eigenvector or simply an eigenvector of A corresponding to the eigenvalue $\lambda$, and the pair $(\lambda, x)$ is called an eigenpair of A. The set of all eigenvalues of A is called the spectrum of A, and is denoted by eig(A).

We say that a nonzero vector $y \in \mathbb{C}^n$ is a left eigenvector of A corresponding to $\lambda$ if

\[ y^H A = \lambda y^H \]

where $y^H = \bar{y}^T$ is the conjugate transpose of y.

Remark 12.1 If $\lambda$ is a real eigenvalue, then the corresponding eigenvector x can be chosen to be real. If $\lambda$ is a complex eigenvalue, then:

(a) $\bar{\lambda}$, the conjugate of $\lambda$, is also an eigenvalue of A;

(b) the eigenvector x corresponding to $\lambda$ is complex; and

(c) $\bar{x}$, the conjugate of x, is an eigenvector of A corresponding to $\bar{\lambda}$.

Remark 12.2 From Definition 12.1, it is immediate that the eigenvalues of A are the roots of its characteristic polynomial, denoted by $p_A(\lambda)$, and defined by

\[ p_A(\lambda) = \det(\lambda I - A) \]

With the variable s in place of $\lambda$, the characteristic equation reads

\[ p_A(s) = \det(sI - A) = 0 \]

Note that this is not a practical method for determining the eigenvalues of A, for obvious reasons.

Remark 12.3 If x is an eigenvector of A corresponding to an eigenvalue $\lambda$, then so is $\alpha x$ for any $\alpha \ne 0$, because

\[ A(\alpha x) = \alpha (Ax) = \lambda (\alpha x) \]

Also, if $x_1$ and $x_2$ are eigenvectors of A corresponding to the same eigenvalue $\lambda$, then so is $x_1 + x_2$ (provided it is nonzero), because

\[ A(x_1 + x_2) = Ax_1 + Ax_2 = \lambda x_1 + \lambda x_2 = \lambda (x_1 + x_2) \]

Thus, if we define

\[ E_\lambda = \{ x \in \mathbb{C}^n : Ax = \lambda x \} \]

then $E_\lambda$ is a subspace of $\mathbb{C}^n$, called the eigenspace corresponding to the eigenvalue $\lambda$.

Symmetric matrices are important in applications. The following theorem states an important fact about symmetric matrices.

Theorem 12.1 Let A be any $n \times n$ real symmetric matrix. Then the eigenvalues of A are real.

Next, we recall the definition of similar matrices.

Definition 12.2 Let A be a real $n \times n$ matrix. Any transformation of A of the form $X^{-1}AX$, where X is nonsingular, is called a similarity transformation of A, and the matrices A and $X^{-1}AX$ are called similar.

Remark 12.4 Let A and B be similar matrices, i.e. suppose that

\[ B = X^{-1}AX \]

where X is nonsingular. Since

\[ p_B(\lambda) = \det(\lambda I - B) = \det(\lambda I - X^{-1}AX) = \det\!\big(X^{-1}(\lambda I - A)X\big) = \det(\lambda I - A) = p_A(\lambda) \]

it is immediate that A and B have the same eigenvalues. Thus, we may hope that by a suitable similarity transformation, we can reduce A to a simple form such as an upper Hessenberg form and thus compute the eigenvalues of A. However, note that this calculation is ill-conditioned if $\kappa_2(X)$ is large. Indeed, if

\[ fl(X^{-1}AX) = X^{-1}AX + E \]

then it can be established that

\[ \|E\|_2 \approx \mu\, \kappa_2(X)\, \|A\|_2 \]

where $\mu$ is the machine precision. Thus, if $\kappa_2(X)$ is large, then we will have significant round-off errors. If X is orthogonal, then $\kappa_2(X) = 1$, and so we have

\[ \|E\|_2 \approx \mu \|A\|_2 \]

Thus, the error is not amplified by the condition number if we work with an orthogonal similarity transformation. Hence, to minimize the round-off errors in eigenvalue calculations, we use orthogonal similarity transformations whenever possible.

Definition 12.3 We say that a square matrix A is diagonalizable if there exists a nonsingular matrix X such that

\[ X^{-1}AX = \begin{bmatrix} \lambda_1 & & & \\ & \lambda_2 & & \\ & & \ddots & \\ & & & \lambda_n \end{bmatrix} \]

We say that a square matrix A is orthogonally diagonalizable if there exists an orthogonal matrix X such that $X^T A X$ is a diagonal matrix.

Next, we state the conditions for diagonalization and orthogonal diagonalization of square matrices.

Theorem 12.2 Let A be a real $n \times n$ matrix. Then:

(a) A is diagonalizable if and only if A has a set of n linearly independent eigenvectors.

(b) A is orthogonally diagonalizable if and only if A is a symmetric matrix.

Remark 12.5 Let A be a real $n \times n$ matrix. Then A need not be diagonalizable. Even if A is diagonalizable, it is not easy to determine the eigenvalues of A by finding a similarity transformation $X^{-1}AX$ that brings A to a diagonal form, because finding such an X really amounts to finding a set of n linearly independent eigenvectors of A.

The next theorem, known as the Real Schur Form Theorem, is very important in applications. This theorem provides the most nearly triangular form that we can achieve for a given square matrix by orthogonal similarity.

Theorem 12.3 (Real Schur Form Theorem) If A is a real $n \times n$ matrix, then there is an $n \times n$ real orthogonal matrix Q such that

\[ Q^T A Q = R = \begin{bmatrix} R_{11} & R_{12} & \cdots & R_{1k} \\ 0 & R_{22} & \cdots & R_{2k} \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & R_{kk} \end{bmatrix} \qquad (12.1) \]

where each diagonal block $R_{ii}$ is either a real $1 \times 1$ matrix or a real $2 \times 2$ matrix with a pair of complex conjugate eigenvalues. The diagonal blocks $R_{ii}$ may be arranged in any prescribed order. (The matrix R in (12.1) is called the real Schur form (RSF) of A.)

Remark 12.6 The proof of Theorem 12.3 assumes a knowledge of the eigenvalues and eigenvectors of A. Thus, the proof of Theorem 12.3 is not useful in practice to determine the eigenvalues of A. Nevertheless, we can obtain the real Schur form of A (and thus the eigenvalues of A) by an iterative procedure known as the QR algorithm.

For a real square matrix A, the MATLAB command '[Q, R] = schur(A)' gives the matrices Q and R, where Q and R are as described in Theorem 12.3.

12.2 POWER METHOD

In this section, we discuss the power method, a classical numerical method for finding the dominant eigenvalue and the corresponding eigenvector of a real diagonalizable matrix A. This method has a lot of importance in linear control systems theory, as the dominant eigenvalue and the corresponding eigenvector of the system matrix of a given autonomous linear system completely characterize the steady-state behaviour of all solutions.

First, we define the dominant eigenvalue of a matrix.

Definition 12.4 Let A be any real $n \times n$ matrix. Then an eigenvalue $\lambda_1$ of A is called a dominant eigenvalue of A if the eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_n$ of A satisfy

\[ |\lambda_1| > |\lambda_2| \ge |\lambda_3| \ge \cdots \ge |\lambda_n| \]

Remark 12.7 Suppose that $\lambda_1$ is a dominant eigenvalue of a given real matrix A. Then it is obvious that $\lambda_1$ must have algebraic multiplicity equal to 1. Similarly, it is also obvious that $\lambda_1$ cannot be complex. For if $\lambda_1$ is complex, then $\bar{\lambda}_1 \ne \lambda_1$ will also be an eigenvalue of A, and $|\bar{\lambda}_1| = |\lambda_1|$, so that $\lambda_1$ is not a dominant eigenvalue of A. Hence, the dominant eigenvalue of a matrix is necessarily real and has multiplicity equal to one. In view of this, if $x_1$ is an eigenvector of A corresponding to $\lambda_1$, then $x_1$ can be chosen to be a real n-vector, i.e. $x_1 \in \mathbb{R}^n$. We call $(\lambda_1, x_1)$ the dominant eigenpair of A.

EXAMPLE 12.1 If A is a $5 \times 5$ real matrix with the eigenvalues $-7,\ 1 \pm i,\ 6 \pm i$, then $\lambda_1 = -7$ is the dominant eigenvalue of A. If A is a $5 \times 5$ real matrix with the eigenvalues $3,\ -3,\ 2 \pm i,\ -2$, then A does not have a dominant eigenvalue. If A is a $5 \times 5$ real matrix with the eigenvalues $-3 \pm i,\ 2 \pm i,\ 1$, then A does not have a dominant eigenvalue.

The dominant eigenpair plays an important role in autonomous linear control systems, as the long-term behaviour of the solutions is governed by the dominant eigenpair. This can be easily seen as follows:

Consider a linear autonomous discrete-time system given by

\[ x(k+1) = A x(k) \qquad (12.2) \]

where A is a real $n \times n$ diagonalizable matrix. If we define the initial condition as

\[ x(0) = x_0 \qquad (12.3) \]

then it is immediate that the unique solution of the initial-value problem (12.2)-(12.3) is given by

\[ x(k) = A^k x_0, \quad (k \in \mathbb{Z}_+) \qquad (12.4) \]

where $\mathbb{Z}_+$ denotes the set of all positive integers. Suppose that $(\lambda_1, x_1)$ is a dominant eigenpair of A. As A is diagonalizable (by assumption), A has a set of n linearly independent eigenvectors, say $x_1, x_2, \ldots, x_n$, corresponding to its eigenvalues, say $\lambda_1, \lambda_2, \ldots, \lambda_n$.

Given any initial state $x_0 \in \mathbb{R}^n$, there exist uniquely defined scalars

\[ \alpha_1, \alpha_2, \ldots, \alpha_n \]

such that

\[ x_0 = \alpha_1 x_1 + \alpha_2 x_2 + \cdots + \alpha_n x_n \]

Using (12.4) and the fact that $A^k x_i = \lambda_i^k x_i$ for $i = 1, 2, \ldots, n$, we get

\[ x(k) = \alpha_1 \lambda_1^k x_1 + \alpha_2 \lambda_2^k x_2 + \cdots + \alpha_n \lambda_n^k x_n \qquad (12.5) \]

i.e.

\[ x(k) = \lambda_1^k \left[ \alpha_1 x_1 + \alpha_2 \left( \frac{\lambda_2}{\lambda_1} \right)^k x_2 + \cdots + \alpha_n \left( \frac{\lambda_n}{\lambda_1} \right)^k x_n \right] \qquad (12.6) \]

Since $\lambda_1$ is the dominant eigenvalue of A, it is immediate that

\[ \lim_{k \to \infty} \left| \frac{\lambda_j}{\lambda_1} \right|^k = 0, \quad \forall j = 2, 3, \ldots, n \qquad (12.7) \]

Hence, from (12.6) and (12.7), it follows that

\[ \lim_{k \to \infty} \frac{x(k)}{\lambda_1^k} = \mu x_1 \qquad (12.8) \]

where $\mu = \alpha_1$ is a constant.

Also, since $\lambda_1$ is the dominant eigenvalue of A, it is immediate that

\[ \left| \frac{\lambda_j}{\lambda_1} \right| \le \left| \frac{\lambda_2}{\lambda_1} \right| < 1, \quad j = 2, 3, \ldots, n \]

Thus, the speed of convergence in (12.8) is governed by $\left| \lambda_2 / \lambda_1 \right|$.

If $\alpha_1 \ne 0$, then it is immediate that $\mu \ne 0$, so that $\mu x_1$ is an eigenvector corresponding to the dominant eigenvalue $\lambda_1$. Thus, if we choose any initial condition $x_0$ such that $x_0$ has a nonzero component along the eigenvector $x_1$, then the corresponding solution $x(k) = A^k x_0$ approaches the direction of the dominant eigenvector $x_1$ as $k \to \infty$, with the speed of convergence given by $\left| \lambda_2 / \lambda_1 \right|$.

If $\alpha_1 = 0$, i.e. if $x_0$ has a zero component along $x_1$, then we suppose that A has a second dominant eigenvalue $\lambda_2$ with $x_2$ as the associated eigenvector, i.e. we assume that the eigenvalues of A are ordered so that

\[ |\lambda_1| > |\lambda_2| > |\lambda_3| \ge \cdots \ge |\lambda_n| \]

In this case, we call $(\lambda_2, x_2)$ the subdominant eigenpair of A.

Since $\alpha_1 = 0$, Eq. (12.5) reduces to

\[ x(k) = \alpha_2 \lambda_2^k x_2 + \alpha_3 \lambda_3^k x_3 + \cdots + \alpha_n \lambda_n^k x_n \]

i.e.

\[ x(k) = \lambda_2^k \left[ \alpha_2 x_2 + \alpha_3 \left( \frac{\lambda_3}{\lambda_2} \right)^k x_3 + \cdots + \alpha_n \left( \frac{\lambda_n}{\lambda_2} \right)^k x_n \right] \]

A similar calculation, as before, then shows that

\[ \lim_{k \to \infty} \frac{x(k)}{\lambda_2^k} = \tilde{\mu} x_2 \qquad (12.9) \]

where $\tilde{\mu} = \alpha_2$ is a constant. Since $\lambda_2$ is the subdominant eigenvalue of A, it can also be easily seen that the speed of convergence in (12.9) is governed by $\left| \lambda_3 / \lambda_2 \right|$.

Note again that if $\alpha_2 \ne 0$, then $\tilde{\mu} \ne 0$, so that $\tilde{\mu} x_2$ is an eigenvector of A corresponding to the subdominant eigenvalue $\lambda_2$ of A. Thus, if $\alpha_1 = 0$ and $\alpha_2 \ne 0$, i.e. if $x_0$ is an initial state with zero component along $x_1$ but with nonzero component along $x_2$, then the corresponding solution $x(k) = A^k x_0$ of the linear dynamical system (12.2) converges to the direction of the subdominant eigenvector $x_2$ of A, with the speed of convergence governed by $\left| \lambda_3 / \lambda_2 \right|$.

Since, in general, the initial state $x_0$ will have a nonzero component along at least one of the two eigenvectors $x_1$ and $x_2$, it is immediate that the dominant and subdominant eigenpairs of A completely determine the long-term (or steady-state) behaviour of the solutions of the linear dynamical system (12.2).

Next, we describe the power method for finding the dominant eigenpair of a real diagonalizable matrix $A$. This is an efficient classical algorithm for finding the dominant eigenpair of $A$ and is particularly suitable for large sparse matrices (note that a matrix is called sparse if it contains a large number of zero entries). For stating the power method algorithm, we need the following definition:

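Definition 12.5 Let $y \in \mathbb{R}^n$. We define $\max(y)$ to be the entry of $y$ having the maximum modulus. It may happen that there are many entries of $y$ having the maximum modulus value. In such a case, we take $\max(y)$ to be the first entry of $y$ having the maximum modulus value. Thus, $\max(\cdot)$ is a well-defined function on $\mathbb{R}^n$.

Remark 12.8 If $y \in \mathbb{R}^n$, then we note that $\max(y)$ and $\|y\|_\infty$ are defined differently. The relation connecting $\max(y)$ and $\|y\|_\infty$ can be stated as follows:

$$|\max(y)| = \|y\|_\infty$$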


EXAMPLE 12.2 Let

$$y = \begin{bmatrix} 2 \\ -3 \\ 1 \\ 3 \end{bmatrix}$$

From Definition 12.5, it is immediate that $\max(y) = -3$.

Also, if

then it is immediate that max(z)
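The rule in Definition 12.5 can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `max_entry` and the sample vectors are assumptions made here, not part of the text.

```python
def max_entry(y):
    """Return the first entry of y with the largest absolute value (sign kept)."""
    best = y[0]
    for value in y[1:]:
        # Strict '>' keeps the earliest entry when several share the maximum modulus.
        if abs(value) > abs(best):
            best = value
    return best


# Both -3 and 3 have modulus 3; the first of them is returned.
print(max_entry([2, -3, 1, 3]))   # -3
print(max_entry([1, -5, 4]))      # -5
```

Note that `abs(max_entry(y))` always equals the infinity norm of `y`, while `max_entry(y)` itself also carries the sign of the selected entry.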

The following theorem is important in applications:

Theorem 12.4

(a) If $y \in \mathbb{R}^n$ and $c \in \mathbb{R}$, then

$$\max(cy) = c \max(y)$$

(b) $\max(\cdot)$ is a continuous function on $\mathbb{R}^n$.

Proof:

(a) Let $\max(y) = y_i$. Then, by definition, $i$ is the first index from 1 to $n$ such that

$$|y_i| = \max\{|y_1|, |y_2|, \ldots, |y_n|\}$$

It is easy to deduce that

$$\max(cy) = c y_i = c \max(y)$$

(b) Consider any two vectors $y, z \in \mathbb{R}^n$. Then we know that

$$\max(y) \leq \|y\|_\infty \quad \text{and} \quad \max(z) \leq \|z\|_\infty$$

Hence,

$$|\max(y) - \max(z)| \leq \big|\, \|y\|_\infty - \|z\|_\infty \big| \leq \|y - z\|_\infty = |\max(y - z)| \tag{12.10}$$

From (12.10), it is immediate that $\max(\cdot)$ is a continuous function on $\mathbb{R}^n$. This completes the proof.


12.2.1 Power Method Algorithm

Now, we state the power method algorithm for computing the dominant eigenpair of a real diagonalizable matrix and establish the convergence of the power method algorithm. The method is called the power method because its algorithm involves the powers of the matrix $A$.

Theorem 12.5 (Power Method for Diagonalizable Matrices) Let $A$ be a real, diagonalizable, $n \times n$ matrix with a dominant eigenvalue. Suppose that $A$ has the eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_n$ ordered so that

$$|\lambda_1| > |\lambda_2| \geq |\lambda_3| \geq \cdots \geq |\lambda_n|$$

Since $A$ is diagonalizable, $A$ has a set of $n$ linearly independent eigenvectors $x_1, x_2, \ldots, x_n$ corresponding to the eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_n$ respectively. Since $\lambda_1$ is real, we can take $x_1$ to be a real $n$-vector. Suppose $y_0 \in \mathbb{R}^n$ is any vector (initial guess) such that if we write

$$y_0 = \alpha_1 x_1 + \alpha_2 x_2 + \cdots + \alpha_n x_n$$

then $\alpha_1 \neq 0$. For $k = 1, 2, 3, \ldots$, we define vectors $y_k$ and $z_k$ by

$$z_k = A y_{k-1}, \qquad y_k = \frac{z_k}{\max(z_k)} \tag{12.11}$$

As $k \to \infty$, $\{\max(z_k)\} \to \lambda_1$ and $\{y_k\} \to c x_1$, where $c$ is a real constant.

Proof: First, we claim that

$$y_k = \frac{A^k y_0}{\max(A^k y_0)} \quad \text{for } k \in \mathbb{Z}_+ \tag{12.12}$$

where $\mathbb{Z}_+$ denotes the set of positive integers.

We prove this claim using induction. By definition,

$$z_1 = A y_0 \quad \text{and} \quad y_1 = \frac{z_1}{\max(z_1)}$$

so that

$$y_1 = \frac{A y_0}{\max(A y_0)}$$

Hence, Eq. (12.12) holds for $k = 1$. Next, suppose that Eq. (12.12) holds for $k = m$, where $m \in \mathbb{Z}_+$. Then we have

$$y_m = \frac{A^m y_0}{\max(A^m y_0)}$$

By definition,

$$z_{m+1} = A y_m \quad \text{and} \quad y_{m+1} = \frac{z_{m+1}}{\max(z_{m+1})}$$

so that

$$z_{m+1} = \frac{A^{m+1} y_0}{\max(A^m y_0)} \tag{12.13}$$

Thus, by Theorem 12.4 (a),

$$\max(z_{m+1}) = \frac{\max(A^{m+1} y_0)}{\max(A^m y_0)}$$

so that

$$y_{m+1} = \frac{z_{m+1}}{\max(z_{m+1})} = \frac{A^{m+1} y_0}{\max(A^{m+1} y_0)}$$

Thus, we have shown that Eq. (12.12) also holds for $k = m + 1$. Hence, by induction, Eq. (12.12) holds for all $k$ in $\mathbb{Z}_+$.

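The initial guess $y_0$ is chosen so that

$$y_0 = \alpha_1 x_1 + \alpha_2 x_2 + \cdots + \alpha_n x_n$$

Hence,

$$A^k y_0 = A^k (\alpha_1 x_1 + \alpha_2 x_2 + \cdots + \alpha_n x_n) = \alpha_1 \lambda_1^k x_1 + \alpha_2 \lambda_2^k x_2 + \cdots + \alpha_n \lambda_n^k x_n$$

Hence, we have

$$A^k y_0 = \lambda_1^k \left[ \alpha_1 x_1 + \alpha_2 \left( \frac{\lambda_2}{\lambda_1} \right)^k x_2 + \cdots + \alpha_n \left( \frac{\lambda_n}{\lambda_1} \right)^k x_n \right] \tag{12.14}$$

Since $\lambda_1$ is the dominant eigenvalue, it follows that

$$\left| \frac{\lambda_j}{\lambda_1} \right| < 1 \quad \text{for } j = 2, 3, \ldots, n$$

Hence, it is immediate that

$$\left( \frac{\lambda_j}{\lambda_1} \right)^k \to 0 \quad \text{as } k \to \infty \quad \text{for } j = 2, 3, \ldots, n$$

From (12.12) and (12.14), it is now immediate that

$$y_k = \frac{A^k y_0}{\max(A^k y_0)} \to c x_1 \quad \text{as } k \to \infty$$

where $c$ is a constant.

Note that $\max(y_k) = 1$ for all $k \in \mathbb{Z}_+$. By the continuity of the function $\max(\cdot)$, it follows that

$$\max(c x_1) = 1 \tag{12.16}$$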


Since $y_k \to c x_1$ as $k \to \infty$, it follows from (12.16) that

$$\lim_{k \to \infty} z_k = A \left( \lim_{k \to \infty} y_{k-1} \right) = A(c x_1) = c (A x_1) = c \lambda_1 x_1 \tag{12.17}$$

Using the continuity of the function $\max(\cdot)$, we conclude from (12.17) and (12.16) that

$$\lim_{k \to \infty} \max(z_k) = \max(c \lambda_1 x_1) = \lambda_1 \max(c x_1) = \lambda_1$$

This completes the proof.
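The iteration (12.11) can be sketched in plain Python as follows. This is a minimal sketch, not a definitive implementation: the names `max_entry`, `mat_vec` and `power_method`, the fixed iteration count, and the sample $3 \times 3$ matrix are all assumptions introduced for illustration.

```python
def max_entry(y):
    """First entry of y with the largest absolute value (sign kept)."""
    best = y[0]
    for value in y[1:]:
        if abs(value) > abs(best):
            best = value
    return best


def mat_vec(A, y):
    """Product of a matrix (list of rows) with a vector."""
    return [sum(a * v for a, v in zip(row, y)) for row in A]


def power_method(A, y0, iterations):
    """Run z_k = A y_{k-1}, y_k = z_k / max(z_k) and return (max(z_k), y_k)."""
    y = list(y0)
    estimate = None
    for _ in range(iterations):
        z = mat_vec(A, y)
        estimate = max_entry(z)
        if estimate == 0:
            raise ValueError("A maps the current iterate to the zero vector")
        y = [v / estimate for v in z]
    return estimate, y


# Illustrative matrix (an assumption), started from the all-ones vector.
A = [[-1.0, 1.0, 2.0], [3.0, -5.0, 2.0], [5.0, 2.0, -10.0]]
eigenvalue, eigenvector = power_method(A, [1.0, 1.0, 1.0], 60)
print(eigenvalue, eigenvector)
```

A fixed number of iterations keeps the sketch short; in practice one would usually stop when successive values of $\max(z_k)$ agree to a chosen tolerance.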

Remark 12.9 In the statement of the power method, it is assumed that the initial guess $y_0$ is such that if we write $y_0$ as

$$y_0 = \alpha_1 x_1 + \alpha_2 x_2 + \cdots + \alpha_n x_n$$

then $\alpha_1 \neq 0$, i.e. an assumption is made so that $y_0$ has a nonzero projection on the eigenspace spanned by the dominant eigenvector $x_1$. This assumption is needed to ensure the convergence of $y_k$ to the direction of the dominant eigenvector $x_1$. An objection to this assumption may be made on the grounds that we do not know the dominant eigenvector $x_1$ a priori. However, we remark that this assumption may be made without loss of generality because even if $\alpha_1 = 0$, the roundoff errors in the subsequent iterations will, in practice, ensure that $y_k$ has a nonzero projection on the eigenspace spanned by the dominant eigenvector $x_1$.

Remark 12.10 The power method is an iterative procedure to compute the dominant eigenpair of a real diagonalizable matrix $A$. Now, suppose that $A$ has a tie-dominant eigenvalue, i.e. the eigenvalues of $A$ are such that $\lambda_1 = \lambda_2 = \cdots = \lambda_r$ and

$$|\lambda_r| > |\lambda_{r+1}| \geq \cdots \geq |\lambda_n|$$

and also that $A$ has a set of $r$ linearly independent real eigenvectors corresponding to the tie-dominant eigenvalue $\lambda_1$. Then a simple calculation shows that the power method sequences $\{y_k\}$ and $\{z_k\}$ defined in Theorem 12.5 are such that as $k \to \infty$, $\max(z_k) \to \lambda_1$, the tie-dominant eigenvalue of $A$, and $y_k$ converges to some vector in the $r$-dimensional subspace of $\mathbb{R}^n$ spanned by $x_1, x_2, \ldots, x_r$.

Remark 12.11 For implementation of the power method algorithm given in Theorem 12.5, we need to choose the initial guess $y_0$. It is conventional to take $y_0$ as

$$y_0 = \begin{bmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{bmatrix}$$


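EXAMPLE 12.3 Let

$$A = \begin{bmatrix} -1 & 1 & 2 \\ 3 & -5 & 2 \\ 5 & 2 & -10 \end{bmatrix}$$

Then the eigenvalues of $A$ are $0.8887$, $-5.5638$, and $-11.3249$. Thus, the dominant eigenvalue of $A$ is $\lambda_1 = -11.3249$.

Next, we apply the power method algorithm contained in Theorem 12.5 for the given matrix $A$.

Take

$$y_0 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$$

k = 1:

$$z_1 = A y_0 = \begin{bmatrix} 2 \\ 0 \\ -3 \end{bmatrix} \quad \text{and} \quad \max(z_1) = -3$$

$$y_1 = \frac{z_1}{\max(z_1)} = \begin{bmatrix} -0.6667 \\ 0 \\ 1.0000 \end{bmatrix}$$

k = 2:

$$z_2 = A y_1 = \begin{bmatrix} 2.6667 \\ 0 \\ -13.3333 \end{bmatrix} \quad \text{and} \quad \max(z_2) = -13.3333$$

$$y_2 = \frac{z_2}{\max(z_2)} = \begin{bmatrix} -0.2000 \\ 0 \\ 1.0000 \end{bmatrix}$$

k = 3:

$$z_3 = A y_2 = \begin{bmatrix} 2.2000 \\ 1.4000 \\ -11.0000 \end{bmatrix} \quad \text{and} \quad \max(z_3) = -11.0000$$

$$y_3 = \frac{z_3}{\max(z_3)} = \begin{bmatrix} -0.2000 \\ -0.1273 \\ 1.0000 \end{bmatrix}$$

Proceeding like this, we will get

$$z_{11} = \begin{bmatrix} 1.9368 \\ 2.6585 \\ -11.3243 \end{bmatrix}, \quad \max(z_{11}) = -11.3243 \quad \text{and} \quad y_{11} = \begin{bmatrix} -0.1710 \\ -0.2348 \\ 1.0000 \end{bmatrix}$$

Note that $\max(z_{11}) = -11.3243$ is a good approximation for the dominant eigenvalue $\lambda_1 = -11.3249$. It is also easy to verify that $y_{11}$ is close to the direction of the dominant eigenvector $x_1$. Indeed, we see that

$$A y_{11} - \lambda_1 y_{11} = \begin{bmatrix} 0.0006 \\ 0.0003 \\ 0.0021 \end{bmatrix}$$

which is small. Thus, we see that $\max(z_k) \to \lambda_1$ and $y_k \to c x_1$ as $k$ gets large.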

EXAMPLE 12.4 Let

9 53

8 38

“| 7 -6 6

4 67

°

Then the eigenvalues of $A$ are $20.4530$, $1.3114 \pm 6.1068i$, and $3.9242$. Thus, the dominant eigenvalue of $A$ is $\lambda_1 = 20.4530$.

Next, we apply the power method algorithm given in Theorem 12.5 for the given matrix $A$.

Take

$$y_0 = \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix}$$

k = 1: $z_1 = A y_0$ and $\max(z_1) = 26$.

k = 2: $z_2 = A y_1$ and $\max(z_2) = 20.7692$.


Proceeding like this, we get

: iat 1.0000

ano = | 184272 cide tate Eloy

10 =| Ganzg |» maxlei0) = 02612

19.2649 iii

Note that $\max(z_{10}) = 20.4530$ is the same as the dominant eigenvalue $\lambda_1 = 20.4530$. Note also that $y_{10}$ is (approximately) along the direction of the dominant eigenvector $x_1$ of $A$. Indeed, we see that

$$A y_{10} - \lambda_1 y_{10} = 10^{-4} \begin{bmatrix} 0.0863 \\ 0.5301 \\ -0.5480 \\ 0.0964 \end{bmatrix}$$

12.2.2 Rate of Convergence

Next, we prove a theorem which says that the power method algorithm given in Theorem 12.5 has a rate of convergence given by $\left| \frac{\lambda_2}{\lambda_1} \right|$, where $\lambda_2$ is the subdominant eigenvalue of $A$ and $\lambda_1$ is the dominant eigenvalue of $A$.

Theorem 12.6 Consider the power method algorithm given in Theorem 12.5. The rate of convergence of this method is given by

$$\left| \frac{\lambda_2}{\lambda_1} \right|$$

where $\lambda_2$ is the subdominant eigenvalue of $A$, and $\lambda_1$ is the dominant eigenvalue of $A$.

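Proof: As we showed in the proof of Theorem 12.5, the vectors $y_k$ have the property that

$$y_k = \frac{A^k y_0}{\max(A^k y_0)} \quad \text{for } k \in \mathbb{Z}_+$$

where $\mathbb{Z}_+$ is the set of all positive integers. Therefore, if the initial guess $y_0$ is chosen so that

$$y_0 = \alpha_1 x_1 + \alpha_2 x_2 + \cdots + \alpha_n x_n \quad \text{with } \alpha_1 \neq 0$$

then

$$A^k y_0 = \alpha_1 \lambda_1^k x_1 + \alpha_2 \lambda_2^k x_2 + \cdots + \alpha_n \lambda_n^k x_n$$

Since $\lambda_1$ is the dominant eigenvalue, we know that

$$\left| \frac{\lambda_j}{\lambda_1} \right| < 1 \quad \text{for } j = 2, 3, \ldots, n$$

Hence, for large values of $k$,

$$\max(A^k y_0) \approx \lambda_1^k \max(\alpha_1 x_1) = \lambda_1^k \mu$$

where $\mu = \max(\alpha_1 x_1)$. Note that $\mu \neq 0$ because $\alpha_1 \neq 0$ and $x_1$ is nonzero, being an eigenvector. Set

$$\beta_j = \frac{\alpha_j}{\mu} \quad \text{for } j = 1, 2, \ldots, n$$

Since

$$y_k = \frac{A^k y_0}{\max(A^k y_0)}$$

it follows that for large values of $k$

$$y_k \approx \beta_1 x_1 + \beta_2 \left( \frac{\lambda_2}{\lambda_1} \right)^k x_2 + \cdots + \beta_n \left( \frac{\lambda_n}{\lambda_1} \right)^k x_n$$

Hence, it is immediate that for large values of $k$

$$\|y_k - \beta_1 x_1\| \leq \left\| \beta_2 \left( \frac{\lambda_2}{\lambda_1} \right)^k x_2 + \beta_3 \left( \frac{\lambda_3}{\lambda_1} \right)^k x_3 + \cdots + \beta_n \left( \frac{\lambda_n}{\lambda_1} \right)^k x_n \right\|$$

$$\leq |\beta_2| \left| \frac{\lambda_2}{\lambda_1} \right|^k \|x_2\| + |\beta_3| \left| \frac{\lambda_3}{\lambda_1} \right|^k \|x_3\| + \cdots + |\beta_n| \left| \frac{\lambda_n}{\lambda_1} \right|^k \|x_n\|$$

Since $\lambda_2$ is the subdominant eigenvalue of $A$, it is immediate that

$$\left| \frac{\lambda_j}{\lambda_1} \right| \leq \left| \frac{\lambda_2}{\lambda_1} \right| \quad \text{for } j = 3, 4, \ldots, n$$

Hence,

$$\|y_k - \beta_1 x_1\| \leq \left| \frac{\lambda_2}{\lambda_1} \right|^k \left( |\beta_2| \|x_2\| + |\beta_3| \|x_3\| + \cdots + |\beta_n| \|x_n\| \right) \tag{12.18}$$

If we set

$$\theta = |\beta_2| \|x_2\| + |\beta_3| \|x_3\| + \cdots + |\beta_n| \|x_n\|$$

then (12.18) reduces to

$$\|y_k - \beta_1 x_1\| \leq \theta \left| \frac{\lambda_2}{\lambda_1} \right|^k$$

showing that the rate of convergence of the power method is given by $\left| \frac{\lambda_2}{\lambda_1} \right|$. This completes the proof.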
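The bound above suggests that the error shrinks roughly by the factor $|\lambda_2 / \lambda_1|$ at each step, so the number of steps needed to reduce the error by a factor `tol` is about $\log(\text{tol}) / \log |\lambda_2 / \lambda_1|$. The following Python sketch turns that rule of thumb into a function; the name `iterations_for_tolerance` and the sample eigenvalues are assumptions made for illustration, and the constant $\theta$ is ignored.

```python
import math


def iterations_for_tolerance(lambda1, lambda2, tol):
    """Estimate k such that |lambda2 / lambda1|**k <= tol (the constant theta is ignored)."""
    ratio = abs(lambda2 / lambda1)
    if not 0.0 < ratio < 1.0:
        raise ValueError("need 0 < |lambda2 / lambda1| < 1")
    return math.ceil(math.log(tol) / math.log(ratio))


# A ratio close to one needs far more steps than a small ratio.
slow = iterations_for_tolerance(32.0, 30.0, 1e-6)   # ratio 0.9375
fast = iterations_for_tolerance(6.0, 4.0, 1e-6)     # ratio 0.6667
print(slow, fast)
```

This is only an order-of-magnitude guide, since the true error also depends on the starting vector through the coefficients $\beta_j$.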

Since the rate of convergence of the power method algorithm is given by

$$\left| \frac{\lambda_2}{\lambda_1} \right|$$

it is evident that the convergence will be fast if

$$\left| \frac{\lambda_2}{\lambda_1} \right| \ll 1$$

and that the convergence will be very slow if

$$\left| \frac{\lambda_2}{\lambda_1} \right| \approx 1$$

In the second case, the convergence of the power method can be improved by making a shift of origin, discussed below.

12.2.3 Power Method with Shift

Suppose that $A$ is a real, diagonalizable $n \times n$ matrix with eigenvalues ordered so that

$$|\lambda_1| > |\lambda_2| \geq \cdots \geq |\lambda_n|$$

Let $x_i$ denote the eigenvector of $A$ corresponding to the eigenvalue $\lambda_i$ for $i = 1, 2, \ldots, n$. Since $\lambda_1$ is the dominant eigenvalue of $A$, we can take $x_1$ to be real.

Note that the eigenvalues of the matrix $A - pI$ are

$$\lambda_1 - p, \ \lambda_2 - p, \ \ldots, \ \lambda_n - p$$

with the corresponding eigenvectors

$$x_1, x_2, \ldots, x_n$$

If $\lambda_1 - p$ is the dominant eigenvalue and $\lambda_2 - p$ the subdominant eigenvalue of $A - pI$, then the power method algorithm applied to the new matrix $A - pI$ has a rate of convergence given by

$$\left| \frac{\lambda_2 - p}{\lambda_1 - p} \right|$$

For a suitable value of $p$, this ratio can be made much smaller than $\left| \frac{\lambda_2}{\lambda_1} \right|$. This technique is usually known as the power method with shift. It is very useful in applications as it yields a faster convergence.

As an illustration, consider

ooeeae so

A=| 3 2 -1

2-2 30

Then it is easy to see that $A$ has the eigenvalues given by $\lambda_1 = 32$, $\lambda_2 = 30$, and $\lambda_3 = 24$. Thus, the rate of convergence for the power method applied to $A$ is governed by

$$\left| \frac{\lambda_2}{\lambda_1} \right| = \frac{30}{32} = 0.9375 \approx 1$$


On the other hand, if we consider $B = A - pI$, where $p = 26$, then

| Bile 55

I (Sr=a| ote 1

2-2 4

has the eigenvalues $\mu_1 = 6$, $\mu_2 = 4$ and $\mu_3 = -2$.

Thus, the rate of convergence for the power method applied to $B$ is governed by

$$\left| \frac{\mu_2}{\mu_1} \right| = \frac{4}{6} = 0.6667$$

which is smaller than $0.9375$. Hence, the power method applied to the shifted matrix $B$ yields a faster convergence than the original matrix $A$.

If we assume that $A$ has all real eigenvalues, then we may choose $p$ using ad hoc techniques. Wilkinson (1965) has shown that if $A$ is a real $n \times n$ matrix with eigenvalues ordered so that

$$\lambda_1 > \lambda_2 \geq \lambda_3 \geq \cdots \geq \lambda_n$$

then the best choice for $p$ is given by

$$p = \frac{\lambda_2 + \lambda_n}{2} \tag{12.19}$$

However, it is easy to construct simple examples to show that the convergence of the power method for the shifted matrix $A - pI$ can still be slow for the choice of the shift $p$ given by (12.19).

As an illustration, consider a $30 \times 30$ real matrix $A$ with eigenvalues $30, 29, 28, \ldots, 2, 1$. Then the rate of convergence for the power method applied to $A$ is governed by

$$\left| \frac{\lambda_2}{\lambda_1} \right| = \frac{29}{30} = 0.9667$$

Applying the formula (12.19), we get the shift as

$$p = \frac{29 + 1}{2} = 15$$

Hence, the shifted matrix $B = A - pI$ will have the eigenvalues

$$15, 14, \ldots, 2, 1, 0, -1, -2, \ldots, -14$$

The rate of convergence for the power method applied to $B = A - pI$ is governed by

$$\left| \frac{\lambda_2 - p}{\lambda_1 - p} \right| = \frac{14}{15} = 0.9333$$

Thus, the power method algorithm yields a slow convergence for the shifted matrix $B = A - pI$ as well. Thus, the formula (12.19) does not always result in fast convergence. Note also that one needs the knowledge of $\lambda_2$ and $\lambda_n$, the subdominant and the least dominant eigenvalues of $A$, to calculate the shift $p$ given in the formula (12.19). Since these eigenvalues are not available a priori, the formula (12.19) is not useful in practice. So, we may try ad hoc techniques for obtaining a good choice of the shift $p$ yielding faster convergence of the power method algorithm.
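The effect of the shift (12.19) on the convergence ratio can be checked numerically when the eigenvalues are known. The sketch below is illustrative only: the function names `convergence_ratio` and `shift_12_19` are assumptions, and the eigenvalue list reproduces the $30 \times 30$ illustration with real, distinct eigenvalues.

```python
def convergence_ratio(eigenvalues):
    """Second-largest modulus divided by the largest modulus."""
    moduli = sorted((abs(v) for v in eigenvalues), reverse=True)
    return moduli[1] / moduli[0]


def shift_12_19(eigenvalues):
    """Shift p = (lambda_2 + lambda_n) / 2 for real eigenvalues sorted in decreasing order."""
    ordered = sorted(eigenvalues, reverse=True)
    return (ordered[1] + ordered[-1]) / 2


eigenvalues = [float(v) for v in range(30, 0, -1)]   # 30, 29, ..., 1
p = shift_12_19(eigenvalues)
shifted = [v - p for v in eigenvalues]

print(p)                                # shift from (12.19)
print(convergence_ratio(eigenvalues))   # ratio before the shift
print(convergence_ratio(shifted))       # ratio after the shift
```

Both ratios stay close to one here, which matches the observation that (12.19) does not always give fast convergence.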

The power method with shift is illustrated in the following example:

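EXAMPLE 12.5 Let

$$A = \begin{bmatrix} 91 & -23 & 25 \\ 10 & 58 & 36 \\ 9 & -9 & 61 \end{bmatrix}$$

Then $A$ has the eigenvalues $\lambda_1 = 72$, $\lambda_2 = 70$ and $\lambda_3 = 68$.

We apply the power method algorithm to $A$ with the initial guess

$$y_0 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$$

k = 1:

$$z_1 = A y_0 = \begin{bmatrix} 93 \\ 104 \\ 61 \end{bmatrix} \quad \text{and} \quad \max(z_1) = 104$$

$$y_1 = \frac{z_1}{\max(z_1)} = \begin{bmatrix} 0.8942 \\ 1.0000 \\ 0.5865 \end{bmatrix}$$

k = 2:

$$z_2 = A y_1 = \begin{bmatrix} 73.0385 \\ 88.0577 \\ 34.8269 \end{bmatrix} \quad \text{and} \quad \max(z_2) = 88.0577$$

$$y_2 = \frac{z_2}{\max(z_2)} = \begin{bmatrix} 0.8294 \\ 1.0000 \\ 0.3955 \end{bmatrix}$$

Proceeding like this, we get

$$z_{10} = \begin{bmatrix} -21.0335 \\ 52.3963 \\ -19.0489 \end{bmatrix} \quad \text{and} \quad \max(z_{10}) = 52.3963$$

$$y_{10} = \frac{z_{10}}{\max(z_{10})} = \begin{bmatrix} -0.4014 \\ 1.0000 \\ -0.3636 \end{bmatrix}$$

Thus, $\max(z_{10}) = 52.3963$ is not a good approximation for the dominant eigenvalue $\lambda_1 = 72$. Also,

$$A y_{10} - \max(z_{10}) \, y_{10} \approx \begin{bmatrix} -47.59 \\ -11.50 \\ -15.74 \end{bmatrix}$$

is not small. The poor convergence exhibited above should not be surprising. Indeed, the rate of convergence of the power method algorithm is governed by the ratio

$$\left| \frac{\lambda_2}{\lambda_1} \right|$$

and for this example, this ratio reduces to

$$\frac{70}{72} = 0.9722$$

which is close to one.

Next, we introduce a shift of origin given by

$$p = 69$$

Note that the eigenvalues of the shifted matrix

$$B = A - pI = \begin{bmatrix} 22 & -23 & 25 \\ 10 & -11 & 36 \\ 9 & -9 & -8 \end{bmatrix}$$

are given by

$$\mu_1 = \lambda_1 - p = 3, \quad \mu_2 = \lambda_2 - p = 1 \quad \text{and} \quad \mu_3 = \lambda_3 - p = -1$$

Thus, if we apply the power method algorithm to the shifted matrix $B = A - pI$, then the method has a rate of convergence governed by

$$\left| \frac{\mu_2}{\mu_1} \right| = \frac{1}{3} = 0.3333$$

Thus, we have a faster convergence for the power method with the shift. We illustrate this as follows:

Take the same initial guess $y_0$ and apply the power method algorithm for the shifted matrix $B$.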


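k = 1:

$$z_1 = B y_0 = \begin{bmatrix} 24 \\ 35 \\ -8 \end{bmatrix} \quad \text{and} \quad \max(z_1) = 35$$

$$y_1 = \frac{z_1}{\max(z_1)} = \begin{bmatrix} 0.6857 \\ 1.0000 \\ -0.2286 \end{bmatrix}$$

k = 2:

$$z_2 = B y_1 = \begin{bmatrix} -13.6286 \\ -12.3714 \\ -1.0000 \end{bmatrix} \quad \text{and} \quad \max(z_2) = -13.6286$$

$$y_2 = \frac{z_2}{\max(z_2)} = \begin{bmatrix} 1.0000 \\ 0.9078 \\ 0.0734 \end{bmatrix}$$

Proceeding like this, we get

$$z_{11} = \begin{bmatrix} 3.0000 \\ 2.7239 \\ 0.2259 \end{bmatrix} \quad \text{and} \quad \max(z_{11}) = 3.0000$$

$$y_{11} = \frac{z_{11}}{\max(z_{11})} = \begin{bmatrix} 1.0000 \\ 0.9080 \\ 0.0753 \end{bmatrix}$$

Note that $\max(z_{11})$ is the same as the dominant eigenvalue $\mu_1$ of $B$. Also, we have

$$B y_{11} - 3 y_{11} = 10^{-4} \begin{bmatrix} 0.5187 \\ 0.5083 \\ 0.0104 \end{bmatrix}$$

so that $y_{11}$ is a good approximation for the dominant eigenvector $x_1$ of $B$.

This illustrates the faster convergence for the power method algorithm with shift.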
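The comparison between the plain and the shifted iteration can be reproduced with a short Python sketch. Everything below is an assumption made for illustration: the helper names, the iteration count of 11, and the matrix entries, which are chosen so that the eigenvalues are 72, 70 and 68 and the shift is $p = 69$.

```python
def max_entry(y):
    """First entry of y with the largest absolute value (sign kept)."""
    best = y[0]
    for value in y[1:]:
        if abs(value) > abs(best):
            best = value
    return best


def power_estimate(A, y0, iterations):
    """Return max(z_k) after the given number of power method steps."""
    y = list(y0)
    estimate = 0.0
    for _ in range(iterations):
        z = [sum(a * v for a, v in zip(row, y)) for row in A]
        estimate = max_entry(z)
        y = [v / estimate for v in z]
    return estimate


def shift_matrix(A, p):
    """Return A - p I."""
    return [[a - p if i == j else a for j, a in enumerate(row)]
            for i, row in enumerate(A)]


A = [[91.0, -23.0, 25.0], [10.0, 58.0, 36.0], [9.0, -9.0, 61.0]]
p = 69.0
y0 = [1.0, 1.0, 1.0]

plain = power_estimate(A, y0, 11)
with_shift = power_estimate(shift_matrix(A, p), y0, 11) + p   # add the shift back

print(plain)        # still far from the dominant eigenvalue 72
print(with_shift)   # already very close to 72
```

Adding $p$ back to the estimate for $A - pI$ recovers an estimate for the eigenvalue of $A$ itself, because the two matrices share eigenvectors.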

12.2.4 Simple Applications of Power Method with Shift

If $A$ is any $3 \times 3$ real matrix having a dominant eigenvalue, we can easily compute all the eigenvalues using the power method, followed by the power method with shift, and the fact that the sum of the eigenvalues of $A$ equals the trace of $A$. We illustrate this with some examples.

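EXAMPLE 12.6 Using the power method, find the dominant eigenvalue and dominant eigenvector for the matrix

$$A = \begin{bmatrix} 5 & 0 & 1 \\ 0 & -2 & 0 \\ 1 & 0 & 5 \end{bmatrix}$$

Hence, find all the eigenvalues of $A$.

Solution: By inspection, it is easy to note that $\lambda = -2$ is an eigenvalue of $A$.

First, we find the dominant eigenvalue of $A$ using the power method.

Take $y_0 = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}$.

k = 1:

$$z_1 = A y_0 = \begin{bmatrix} 5 \\ 0 \\ 1 \end{bmatrix}, \quad \max(z_1) = 5 \quad \text{and} \quad y_1 = \frac{z_1}{\max(z_1)} = \begin{bmatrix} 1.0000 \\ 0.0000 \\ 0.2000 \end{bmatrix}$$

k = 2:

$$z_2 = A y_1 = \begin{bmatrix} 5.2000 \\ 0.0000 \\ 2.0000 \end{bmatrix}, \quad \max(z_2) = 5.2 \quad \text{and} \quad y_2 = \frac{z_2}{\max(z_2)} = \begin{bmatrix} 1.0000 \\ 0.0000 \\ 0.3846 \end{bmatrix}$$

Proceeding like this, we get

$$z_{20} = \begin{bmatrix} 5.9991 \\ 0.0000 \\ 5.9955 \end{bmatrix}, \quad \max(z_{20}) = 5.9991 \quad \text{and} \quad y_{20} = \frac{z_{20}}{\max(z_{20})} = \begin{bmatrix} 1.0000 \\ 0.0000 \\ 0.9994 \end{bmatrix}$$

Hence, it is clear that the dominant eigenvalue is $\lambda_1 = 6$ and the dominant eigenvector is $x_1 = \begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix}$. This can be easily checked by noting that $A x_1 = \lambda_1 x_1$.

Now, we set $B = A - \lambda_1 I = A - 6I$. Hence,

$$B = \begin{bmatrix} -1 & 0 & 1 \\ 0 & -8 & 0 \\ 1 & 0 & -1 \end{bmatrix}$$

By inspection, it is clear that $B$ has an eigenvalue $-8$.

Now, we take $y_0 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$.

k = 1:

$$z_1 = B y_0 = \begin{bmatrix} 0 \\ -8 \\ 0 \end{bmatrix}, \quad \max(z_1) = -8 \quad \text{and} \quad y_1 = \frac{z_1}{\max(z_1)} = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}$$

k = 2:

$$z_2 = B y_1 = \begin{bmatrix} 0 \\ -8 \\ 0 \end{bmatrix}, \quad \max(z_2) = -8 \quad \text{and} \quad y_2 = \frac{z_2}{\max(z_2)} = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}$$

Hence, the dominant eigenvalue of $B$ is $\mu = -8$ and the dominant eigenvector of $B$ is $v = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}$.

Hence, the second eigenvalue of $A$ is $\lambda_2 = \mu + \text{shift} = -8 + 6 = -2$, and the associated eigenvector is $v$. Note that $\text{Trace}(A) = 5 + (-2) + 5 = 8$. Since the sum of the eigenvalues of $A$ is equal to the trace of $A$, it follows that

$$\lambda_3 = \text{Trace}(A) - (\lambda_1 + \lambda_2) = 8 - (6 - 2) = 4$$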


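EXAMPLE 12.7 Using the power method, find the dominant eigenvalue and dominant eigenvector for the matrix

$$A = \begin{bmatrix} 8 & -6 & 2 \\ -6 & 7 & -4 \\ 2 & -4 & 3 \end{bmatrix}$$

Hence, find all the eigenvalues of $A$.

Solution: First, we find the dominant eigenvalue and dominant eigenvector of $A$ using the power method.

Take $y_0 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$.

k = 1:

$$z_1 = A y_0 = \begin{bmatrix} 4 \\ -3 \\ 1 \end{bmatrix}, \quad \max(z_1) = 4 \quad \text{and} \quad y_1 = \frac{z_1}{\max(z_1)} = \begin{bmatrix} 1.0000 \\ -0.7500 \\ 0.2500 \end{bmatrix}$$

k = 2:

$$z_2 = A y_1 = \begin{bmatrix} 13.0000 \\ -12.2500 \\ 5.7500 \end{bmatrix}, \quad \max(z_2) = 13.0000 \quad \text{and} \quad y_2 = \frac{z_2}{\max(z_2)} = \begin{bmatrix} 1.0000 \\ -0.9423 \\ 0.4423 \end{bmatrix}$$

k = 3:

$$z_3 = A y_2 = \begin{bmatrix} 14.5385 \\ -14.3654 \\ 7.0962 \end{bmatrix}, \quad \max(z_3) = 14.5385 \quad \text{and} \quad y_3 = \frac{z_3}{\max(z_3)} = \begin{bmatrix} 1.0000 \\ -0.9881 \\ 0.4881 \end{bmatrix}$$

Proceeding like this, we get

$$z_8 = \begin{bmatrix} 14.9999 \\ -14.9998 \\ 7.4999 \end{bmatrix}, \quad \max(z_8) = 14.9999 \quad \text{and} \quad y_8 = \frac{z_8}{\max(z_8)} = \begin{bmatrix} 1.0000 \\ -1.0000 \\ 0.5000 \end{bmatrix}$$

Hence, it is clear that the dominant eigenvalue is $\lambda_1 = 15$ and the dominant eigenvector is $x_1 = \begin{bmatrix} 1.0 \\ -1.0 \\ 0.5 \end{bmatrix}$.

Now, we set $B = A - \lambda_1 I = A - 15I$. Hence,

$$B = \begin{bmatrix} -7 & -6 & 2 \\ -6 & -8 & -4 \\ 2 & -4 & -12 \end{bmatrix}$$

Now, we take $y_0 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$.

k = 1:

$$z_1 = B y_0 = \begin{bmatrix} -11 \\ -18 \\ -14 \end{bmatrix}, \quad \max(z_1) = -18 \quad \text{and} \quad y_1 = \frac{z_1}{\max(z_1)} = \begin{bmatrix} 0.6111 \\ 1.0000 \\ 0.7778 \end{bmatrix}$$

k = 2:

$$z_2 = B y_1 = \begin{bmatrix} -8.7222 \\ -14.7778 \\ -12.1111 \end{bmatrix}, \quad \max(z_2) = -14.7778 \quad \text{and} \quad y_2 = \frac{z_2}{\max(z_2)} = \begin{bmatrix} 0.5902 \\ 1.0000 \\ 0.8195 \end{bmatrix}$$

Proceeding like this, we obtain

$$z_{23} = \begin{bmatrix} -7.5152 \\ -14.9973 \\ -14.9640 \end{bmatrix}, \quad \max(z_{23}) = -14.9973 \quad \text{and} \quad y_{23} = \frac{z_{23}}{\max(z_{23})} = \begin{bmatrix} 0.5011 \\ 1.0000 \\ 0.9978 \end{bmatrix}$$

Hence, it is clear that the dominant eigenvalue of $B$ is $\mu = -15$ and the dominant eigenvector of $B$ is $v = \begin{bmatrix} 0.5 \\ 1.0 \\ 1.0 \end{bmatrix}$.

Hence, the second eigenvalue of $A$ is $\lambda_2 = \mu + \text{shift} = -15 + 15 = 0$, and the corresponding eigenvector is $v$. Note that $\text{Trace}(A) = 8 + 7 + 3 = 18$. Since the sum of the eigenvalues of $A$ is equal to the trace of $A$, the third eigenvalue of $A$ is given by

$$\lambda_3 = \text{Trace}(A) - (\lambda_1 + \lambda_2) = 18 - (15 + 0) = 3$$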
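The three-step procedure used in these examples (power method, power method on $A - \lambda_1 I$, then the trace) can be sketched in Python as follows. This is a hedged sketch: the function names, the all-ones starting vector and the fixed iteration count are assumptions, and the approach only works when both $A$ and $A - \lambda_1 I$ have a dominant eigenvalue.

```python
def max_entry(y):
    """First entry of y with the largest absolute value (sign kept)."""
    best = y[0]
    for value in y[1:]:
        if abs(value) > abs(best):
            best = value
    return best


def power_estimate(A, y0, iterations):
    """Return max(z_k) after the given number of power method steps."""
    y = list(y0)
    estimate = 0.0
    for _ in range(iterations):
        z = [sum(a * v for a, v in zip(row, y)) for row in A]
        estimate = max_entry(z)
        y = [v / estimate for v in z]
    return estimate


def eigenvalues_3x3(A, iterations=200):
    """Eigenvalues of a 3 x 3 matrix via power method, shift by lambda_1, and trace."""
    y0 = [1.0, 1.0, 1.0]
    lam1 = power_estimate(A, y0, iterations)
    # Shift by lambda_1: the dominant eigenvalue mu of B gives lambda_2 = mu + lambda_1.
    B = [[a - lam1 if i == j else a for j, a in enumerate(row)]
         for i, row in enumerate(A)]
    lam2 = power_estimate(B, y0, iterations) + lam1
    trace = sum(A[i][i] for i in range(3))
    lam3 = trace - (lam1 + lam2)
    return lam1, lam2, lam3


print(eigenvalues_3x3([[5.0, 0.0, 1.0], [0.0, -2.0, 0.0], [1.0, 0.0, 5.0]]))
print(eigenvalues_3x3([[8.0, -6.0, 2.0], [-6.0, 7.0, -4.0], [2.0, -4.0, 3.0]]))
```

The trace identity supplies the third eigenvalue for free, so only two runs of the power method are needed.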

12.2.5 Calculating the Least Dominant Eigenpair

First, we define the least dominant eigenpair for a real $n \times n$ matrix $A$.

Definition 12.6 Let $A$ be a real $n \times n$ matrix with eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_n$. The eigenvalue $\lambda_n$ of $A$ is called the least dominant eigenvalue of $A$ if the eigenvalues of $A$ can be ordered so that

$$|\lambda_1| \geq |\lambda_2| \geq \cdots \geq |\lambda_{n-1}| > |\lambda_n| \tag{12.20}$$

From (12.20), it is clear that $\lambda_n$ is a real eigenvalue of $A$ with multiplicity one. The associated eigenvector $x_n$ of $A$ is called the least dominant eigenvector of $A$. The pair $(\lambda_n, x_n)$ is called the least dominant eigenpair of $A$.

If we assume that $A$ is, in addition, invertible, then there is an obvious relation connecting the eigenvalues of $A$ and $A^{-1}$. Indeed, we know that the eigenvalues of $A^{-1}$ are $\frac{1}{\lambda_1}, \frac{1}{\lambda_2}, \ldots, \frac{1}{\lambda_n}$ with the associated eigenvectors $x_1, x_2, \ldots, x_n$, respectively.

Thus, if $\lambda_n$ is the least dominant eigenvalue of $A$, then it is immediate that $\frac{1}{\lambda_n}$ is the dominant eigenvalue of $A^{-1}$ with the associated eigenvector $x_n$. Thus, we can use the power method for $A^{-1}$ to compute the eigenpair $\left( \frac{1}{\lambda_n}, x_n \right)$, using which we can easily determine the least dominant eigenpair $(\lambda_n, x_n)$ of $A$. This is stated in the following theorem.
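The idea of applying the power method to $A^{-1}$ can be sketched in Python as below. Note the swapped-in technique: instead of forming $A^{-1}$ explicitly, the sketch solves $A z_k = y_{k-1}$ by Gaussian elimination with partial pivoting at each step, which yields the same $z_k = A^{-1} y_{k-1}$. The function names, the starting vector and the sample matrix are assumptions made for illustration.

```python
def max_entry(y):
    """First entry of y with the largest absolute value (sign kept)."""
    best = y[0]
    for value in y[1:]:
        if abs(value) > abs(best):
            best = value
    return best


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0.0:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def least_dominant_eigenpair(A, y0, iterations):
    """Power method on the inverse of A; returns (lambda_n, y) with max(y) = 1."""
    y = list(y0)
    mu = 1.0
    for _ in range(iterations):
        z = solve(A, y)            # z = A^{-1} y
        mu = max_entry(z)          # tends to 1 / lambda_n
        y = [v / mu for v in z]
    return 1.0 / mu, y


A = [[5.0, 0.0, 1.0], [0.0, -2.0, 0.0], [1.0, 0.0, 5.0]]   # eigenvalues 6, 4, -2
print(least_dominant_eigenpair(A, [1.0, 1.0, 1.0], 60))
```

Solving a linear system per step avoids computing the inverse, and for a fixed matrix the elimination could be factored once and reused.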

Scanned with CamScanner

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5819)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (845)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- DSA by Shradha Didi & Aman Bhaiya - Bonus DSA QuestionsDocument2 pagesDSA by Shradha Didi & Aman Bhaiya - Bonus DSA QuestionsHimanshu YadavNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Untitled Document PDFDocument1 pageUntitled Document PDFHimanshu YadavNo ratings yet

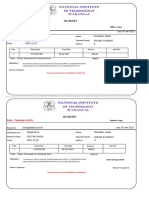

- FeePaymentReceiptForStudent PDFDocument1 pageFeePaymentReceiptForStudent PDFHimanshu YadavNo ratings yet

- Untitled DocumentDocument1 pageUntitled DocumentHimanshu YadavNo ratings yet

- Untitled Document PDFDocument1 pageUntitled Document PDFHimanshu YadavNo ratings yet

- Payment SlipDocument1 pagePayment SlipHimanshu YadavNo ratings yet

- MCQ On Simplex Method 5eea6a0e39140f30f369e4e8Document24 pagesMCQ On Simplex Method 5eea6a0e39140f30f369e4e8Himanshu YadavNo ratings yet

- File InputDocument1 pageFile InputHimanshu YadavNo ratings yet

- Object Oriented Programmings PDFDocument145 pagesObject Oriented Programmings PDFHimanshu YadavNo ratings yet

- Himanshu Yadav: ContactDocument1 pageHimanshu Yadav: ContactHimanshu YadavNo ratings yet

- File InputDocument10 pagesFile InputHimanshu YadavNo ratings yet

- HimanshuYadav 22mac2r14 Mathematics PDFDocument1 pageHimanshuYadav 22mac2r14 Mathematics PDFHimanshu YadavNo ratings yet

- Himanshu Yadav: ContactDocument1 pageHimanshu Yadav: ContactHimanshu YadavNo ratings yet

- OutputDocument1 pageOutputHimanshu YadavNo ratings yet

- Himanshu Yadav: ContactDocument1 pageHimanshu Yadav: ContactHimanshu YadavNo ratings yet

- Code OutputDocument1 pageCode OutputHimanshu YadavNo ratings yet

- BC FP Lidar Pres MoskalDocument35 pagesBC FP Lidar Pres MoskalHimanshu YadavNo ratings yet

- Code OutputDocument1 pageCode OutputHimanshu YadavNo ratings yet

- File InputDocument72 pagesFile InputHimanshu YadavNo ratings yet

- File InputDocument1 pageFile InputHimanshu YadavNo ratings yet

- File InputDocument1 pageFile InputHimanshu YadavNo ratings yet

- Airbornelidar PDFDocument61 pagesAirbornelidar PDFHimanshu YadavNo ratings yet

- File OutputDocument1 pageFile OutputHimanshu YadavNo ratings yet

- 12 .DMA - Stack & HeapsDocument1 page12 .DMA - Stack & HeapsHimanshu YadavNo ratings yet

- File InputDocument1 pageFile InputHimanshu YadavNo ratings yet

- Python Assignment-11Document1 pagePython Assignment-11Himanshu YadavNo ratings yet

- File OutputDocument1 pageFile OutputHimanshu YadavNo ratings yet

- Python Assignment-6Document1 pagePython Assignment-6Himanshu YadavNo ratings yet

- ProbabilityproblemlistDocument24 pagesProbabilityproblemlistHimanshu YadavNo ratings yet

- Python Assignment-13Document2 pagesPython Assignment-13Himanshu YadavNo ratings yet