Does Emotional Matching Between Video Ads and Content Lead to Better Engagement: Evidence from a Large-Scale Field Experiment∗

Anuj Kapoor, Sridhar Narayanan, Amitt Sharma

June 9, 2022

Abstract

Modern digital advertising platforms allow ads to be targeted in a variety of ways, and generally aim to match the ad being shown with either the user or the content being shown. In this study, we examine the effect of matching the emotional content of ads and of the videos on which they are shown on consumers’ engagement with the ad. On the one hand, ads that are emotionally matched with the content video could lead to greater engagement with the ad because consumers may wish to extend the emotion they are experiencing. On the other hand, an emotional mismatch between the ad and the content video can create greater perceptual contrast, thereby drawing more attention to the ad. Additionally, consumers viewing a video with negative emotions might prefer positive emotions in the advertising to get themselves to a happier state. Thus, whether emotional matching is more effective in driving ad engagement, and thereby potentially consumers’ evaluation of, affect towards, and purchase of the advertised good, is an empirical question. We study this question through a field experiment run in collaboration with VDO.AI, a video and ad serving platform. In this experiment, we manipulate the video/ad combination consumers see, with the experimental conditions varying in the emotional content of the ads and videos. We find that in our setting, incongruence, where the emotional content of videos and ads is mismatched, leads to greater ad engagement. We find suggestive evidence that attention is the mechanism through which incongruence leads to better outcomes. Our findings contribute to the literature on advertising and provide an important targeting variable for firms in the AdTech space.

Keywords: Video Advertising, Emotions, Contextual Advertising, Congruence, Consumer Engagement, Field Experiment

∗ Kapoor: Indian Institute of Management, Ahmedabad; Narayanan: Stanford University; Sharma: vdo.ai. The views discussed here represent those of the authors and not of the Indian Institute of Management, Ahmedabad, Stanford University, or vdo.ai. The usual disclaimer applies. The authors declare they have no commercial relationship with vdo.ai, and the research is conducted with no restrictions on the publishability of the findings from the field experiment. The authors thank Ayush Garg and Vedang Asgaonkar for excellent research assistance. Please contact Kapoor (anujk@iima.ac.in) or Narayanan (sridharn@stanford.edu) for correspondence.

Electronic copy available at: https://ssrn.com/abstract=4131151

1 Introduction

In this paper, we empirically study the effect of matching, or congruence, between ads and the videos in which they are placed in terms of their emotional content. Digital video and ad serving platforms allow for targeted advertising using a variety of targeting variables. These include consumer characteristics, the content of the video in which the ads are placed, and contextual variables such as time of day. An important distinguishing factor of video advertising, as opposed to forms of advertising relying on text or even static images, is the potential for rich emotional content in these ads. Thus, an important potential variable for targeting ads could be the emotional content of the content video in which the ad is placed and how well it matches the emotional content of the ad. Targeting on this variable requires an understanding of the impact of emotional matching between videos and ads. In this study, we aim to gain such an understanding through a field experiment in which we exogenously vary the degree of emotional matching and examine its impact on consumers’ engagement with the ad.

There has been a growing literature on matching in persuasive messaging, including advertising. In particular, the role of targeting or tailoring, or what has been referred to as ‘personalized matching’, has received a lot of attention in the marketing and psychology literatures (see, for instance, Teeny et al. 2021 for a recent review). The focus in this literature has typically been on the match between the advertising message and the recipient of the message. On the other hand, more limited attention has been paid to ‘non-personalized matching’, particularly in the advertising context. Non-personalized matching refers to the match between the situation or context of the message and the message itself. Past studies have examined matching between the type of product being written about (hedonic vs. utilitarian) and the type of message (emotional vs. cognitive) (Rocklage and Fazio, 2020), or between the characteristics of the message source and the message itself (Karmarkar and Tormala, 2010). Additionally, past studies have examined the effect of matching valence (Coulter, 1998; Kamins et al., 1991), arousal (Puccinelli et al., 2015), mood (Kamins et al., 1991) and theme (Dahlén et al., 2008) on the effectiveness of ads. A recent study (Fong, 2021) uses the emotional content of music in videos and ads to indirectly examine the impact of matching. There is, however, little direct evidence on the effect of matching between the emotional content of a video ad and that of the content video in which the ad is placed. We attempt to fill this gap by directly examining matching in the emotional content of ads and the videos they are placed in. Further, in contrast to previous studies that have examined consumers’ attitudes towards the subject of the ad, we focus on actions that consumers can take to engage or disengage with the ad, including skipping the ad altogether. This is highly relevant in the online video ad context, where consumers often have the option to skip an ad.

We design and implement a large-scale field experiment in collaboration with the video/ad serving platform VDO.AI to directly study the effect of matching emotions between video ads and the content videos in which they are placed. VDO.AI provides both video and ad content for playing in designated locations on third-party websites. In collaboration with the firm, we select a set of videos and ads that span the range of emotions from happy to sad. We focus on these specific emotions since they are commonly studied in the literature (e.g. Kamins et al. 1991; Lee et al. 2013) and are commonly employed by the advertising industry, for instance by Facebook (Kramer et al., 2014) and Google.¹ We then use a set of research assistants to rate these videos/ads on a happiness/sadness scale. Next, we set up a randomized controlled experiment on the VDO.AI platform, with users randomly assigned to different combinations of videos and ads, such that we have conditions in which content videos and ads are either matched or unmatched in their emotional content. In our setting, the ad is always a post-roll ad, i.e. it is played only after the content video has played completely, which ensures that the consumer has had the opportunity to experience the emotion that the content video aims to evoke. We observe the extent to which consumers view the ad and develop measures of consumer engagement with the ad based on this observation. We examine the impact of emotional matching/congruence vs. non-matching/incongruence of the content video and the ad on these measures of consumer engagement.

Our main finding is that when ads are incongruent, or emotionally mismatched, with the content videos, there is greater consumer engagement than when the ads are congruent, or matched. These results contrast with one of the key findings of prior research, that matching leads to better advertising outcomes (Kamins et al., 1991; Lee et al., 2013; Fong, 2021). Our experimental design differs from prior work in three key respects. First, unlike prior studies, which manipulate valence, arousal or mood, we directly manipulate the emotional content of both the ad and the content video in which the ad is inserted. Second, our experiment is conducted in the field, unlike prior work, which has typically been conducted in the lab and therefore suffers from the usual limitations in establishing external validity. Third, we examine consumer behavior directly through revealed-preference measures of engagement, as opposed to the stated-preference measures, such as attitudes, used in prior literature. Overall, we find that in our context of video advertising, congruence between the content video and the ad leads to lower engagement than incongruence. These effects are both statistically and economically significant.

We further examine the mechanism by which incongruence might lead to greater user engagement with the ad. In particular, we examine two different mechanisms through which emotional (in)congruence between the content video and the ad can affect engagement: attention and emotion regulation. The first mechanism rests on the idea that when the video and ad are incongruent, there is more perceptual contrast between them, leading to greater attention from the user and thereby greater engagement with the ad (Biswas et al., 1994; Andrade, 2005). The second mechanism, emotion regulation, is based on consumers wanting to extend the emotion they are experiencing (Tamir, 2009, 2016). When they are watching a happy video, they might want to extend this emotional state and hence not wish to view a sad ad. A happy ad would extend their happy emotional state, and therefore they might be more likely to view and engage with a happy ad following a happy video. Under this mechanism, consumers might be more willing to engage with the ad when the emotional content of the ad and the content video are matched than when they are mismatched. Our research design allows us to examine the different combinations of emotions in videos/ads, and thereby indirectly examine these mechanisms as well. The evidence from our analysis is consistent with attention being the main mechanism by which incongruent videos/ads lead to greater user engagement with the ad, and we do not find support for the emotion regulation mechanism.

¹ https://www.forbes.com/sites/kashmirhill/2014/06/28/facebook-manipulated-689003-users-emotions-for-science/?sh=2c8c20bb197c

The paper contributes to the literature on advertising, emotions and matching. In the advertising literature, a number of recent studies have examined the value of targeting display ads (Bleier and Eisenbeiss, 2015), retargeted ads (Lambrecht and Tucker, 2013) and email marketing campaigns (Sahni et al., 2018), among others. Our study contributes to this literature by examining the role of targeting based on advertising content in the context of video advertising. It contributes to the rich literature on context effects (applied specifically to advertising, see for instance Kirmani and Yi 1991 and Kwon et al. 2019) by examining a highly relevant part of the ad’s context, namely the content video in which the ad is placed. It contributes to the literature on matching in advertising (recent examples include Belanche et al. 2017; Furnham et al. 2002; Janssens et al. 2012; Kononova and Yuan 2015) by considering an important type of non-personalized matching: matching on emotional content. In addition, it provides valuable insights for practitioners, since both advertisers and advertising platforms can benefit from the opportunities for targeting based on emotional content. Finally, because it is based on a field experiment, the study yields causal estimates that are also externally valid.

In the rest of the paper, we first provide background on the empirical context, then describe the experimental design, present the main results and explore the underlying mechanisms, before concluding.

2 Background

Our empirical setting is video advertising on online platforms. The most ubiquitous of these platforms is YouTube, where viewers watch content videos and ads are inserted at various points in the video. Compared to other forms of online advertising, such as search advertising or even most forms of display advertising, video advertising has the potential to engage consumers emotionally. It is therefore the primary form of online advertising used for brand advertising.² Additionally, it allows for a high degree of targeting and subsequent tracking. Video advertising had grown into an 81.9 billion US dollar industry by 2021.³

Video advertising differs in its form across platforms. On YouTube, for instance, ads can be shown before the content video is played (pre-roll) and mid-roll (i.e. during the course of the video). On other platforms, ads are shown only mid-roll or post-roll (i.e. after the content video has finished playing). Our empirical context involves post-roll ads, where the viewer has to view the content video completely before the ad video is shown. Next, we describe the features of our specific platform.

Our study is conducted in collaboration with a video advertising platform called VDO.AI. VDO.AI, based in India and the US, collaborates with publishers worldwide to provide video content for their web pages. Essentially, it collates interesting content videos that are played on third-party publishers’ websites. Figure 1 shows a screen grab of one such video playing on the website of the Indian newspaper The Hindu. The platform also allows advertisers to use this space to place their ads, specifically as post-roll ads that play after the content video has finished playing completely.

The platform allows users to engage or disengage with the video. The content video and ad can be muted at any time, so that they play without audio. Additionally, ads can typically be skipped after 5 seconds of viewing. The user can, of course, exit the webpage where the video is playing at any time. The platform records whether the viewer muted the video, skipped the ad, and the extent to which the ad was viewed before the page was exited. This allows us to build various measures of engagement with the ad.

² https://www.forbes.com/sites/forbescommunicationscouncil/2022/04/13/why-every-company-needs-a-chief-video-officer/?sh=d08238065295

³

https://www.forbes.com/sites/bradadgate/2021/12/08/agencies-agree-2021-was-a-record-year-for-ad-spendi

?sh=127b8b417bc6

Figure 1. In-stream & Post-Roll Video Ad Placement in the Experiment

Notes: The figure depicts a sample “In-stream” and “Post-roll” video ad placement deployed in the experiment.

Typically, which ad is shown in a given content video is determined by a second-price auction, in which advertisers bid on the ad slot and the winning bidder pays the amount bid by the next-highest bidder. For the purpose of our experiment, VDO.AI drew on a separate inventory of ads to determine the ad to be shown; in other words, the ad placement was not determined by an auction. The specific videos and ads shown during our experiment were pre-specified rather than selected through any algorithmic or human-directed targeting process.
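For readers unfamiliar with the standard auction that the experiment bypassed, the following is a minimal sketch of a sealed-bid second-price auction. It is an illustration only, not VDO.AI's serving logic: the bidder names, the bid values and the `reserve` floor price are all hypothetical, and ties are broken arbitrarily by name.

```python
def second_price_auction(bids, reserve=0.0):
    """Sealed-bid second-price auction (illustrative sketch, not VDO.AI's code).

    bids: dict mapping a hypothetical bidder name to its bid.
    reserve: hypothetical floor price; bids below it are ignored.
    Returns (winner, price), or (None, None) if no bid clears the reserve.
    """
    # Keep eligible bids and sort from highest to lowest (ties broken by name).
    eligible = sorted(
        ((bid, name) for name, bid in bids.items() if bid >= reserve),
        reverse=True,
    )
    if not eligible:
        return None, None
    _top_bid, winner = eligible[0]
    # The winner pays the runner-up's bid, or the reserve if nobody else qualified.
    price = max(eligible[1][0], reserve) if len(eligible) > 1 else reserve
    return winner, price


bids = {"ad_x": 4.0, "ad_y": 2.5, "ad_z": 3.1}
print(second_price_auction(bids))               # ('ad_x', 3.1)
print(second_price_auction(bids, reserve=3.5))  # ('ad_x', 3.5)
```

The key property is that the winner's payment depends on the runner-up's bid rather than its own.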

One additional aspect of this context that is pertinent to our study is viewability (Uhl et al., 2020). Content videos and ads are placed by VDO.AI on websites with other content. This is distinct from video-viewing platforms such as YouTube, where the primary content is the video itself. Therefore, while the video/ad might be playing in our context, there is no guarantee that the user is actually viewing it (Bounie et al., 2017). This can happen for multiple reasons. First, the video might not be completely within the page view, i.e. some part of it might be above or below a page fold, such that the video is only partially visible to the consumer. Second, a consumer might be viewing other content on the webpage while the video is running. This is inherently not measurable short of attaching eye-tracking devices to screens to identify which parts of the screen the consumer is looking at (McGranaghan et al., 2022), which is obviously infeasible at scale. The industry has therefore, through smaller-scale studies involving such eye-tracking devices, tried to determine the proportion of users on a given webpage who are actually viewing a given piece of content, and arrived at estimates of what the industry terms ‘viewability’. This is pertinent to our study since it affects our interpretation of the treatment effects. We discuss this in more detail alongside the empirical estimates from our experiment.

3 Experimental Design

Our experiment aims to understand how emotional matching, or congruence, between an ad and the content video in which it is placed affects consumers’ engagement with the ad. We designed a field experiment in collaboration with the video and ad serving platform VDO.AI, in which we exogenously manipulated the content video and the ad that the consumer could view. This design allowed us both to examine the main question of the role of matching/congruence and to explore the underlying mechanisms by which it affects behavior.

The experiment was conducted across a large number of publishers on the internet, with a focus on consumers in India or those viewing India-related content in other parts of the world. We focused on Indian consumers because the emotions evoked by a video can depend on the cultural context of the viewer; this focus allowed us to reduce the cultural disparities that could cause ambiguity in the emotions users feel for a given video. For the same reason, we used Indian research assistants to score the candidate videos and ads on their emotional content. Thus, both the raters who identified the videos and the consumers viewing them were Indian or India-related.

Users who arrived at a website included in the experiment were randomly assigned to one of four conditions in a 2 × 2 design: two conditions for the content video (happy or sad), crossed with two conditions for the ad (again happy or sad). Thus, they were shown either a happy content video from a pre-selected set of happy videos or a sad content video from a pre-selected set of sad videos. After viewing the video, they were shown an ad from one of two ad conditions, happy or sad, once again drawn from a pre-selected set of happy or sad ads.

Figure 2 shows a schematic of the experimental design.

Figure 2. Sequence of watching the Video Advertisement

Notes: The Figure above shows a schematic of the experimental design.

3.1 Video & Ad Selection

First, the curation team at our partner VDO.AI selected a number of videos and ads that they classified as happy or sad. This was a manual process in which the team, starting from a larger set of videos, shortlisted a set of videos ranging in emotion from happy to sad. The selection process focused on finding videos and ads that were clear in their emotional content and typically had only one dominant emotion running through the entire course of the video.

Then, we recruited 7 research assistants to view all these videos and rate their emotional content on a 7-point Likert scale ranging from happy (3) to sad (-3). These research assistants were also recruited in India, so as to be culturally aligned with the consumers who would be viewing the ads in the field experiment. We then retained the videos whose average rating across the 7 research assistants was 2 or above, or below -2, thus selecting the videos that were more extreme in their emotional content on either side of the scale (happy or sad). This reduced our set to 24 content videos and 33 ad videos. Table 1 lists the number of unique ads and content videos in the experiment by experimental condition.
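The screening rule above can be sketched as a filter on each clip's mean rating. This is a minimal illustration, assuming the ratings are stored per clip; the clip IDs and scores are hypothetical, and the cutoffs follow the text literally (a mean of 2 or above counts as happy, a mean strictly below -2 counts as sad).

```python
from statistics import mean

# Hypothetical ratings: clip ID -> scores from 7 raters on the -3 (sad) .. 3 (happy) scale.
RATINGS = {
    "clip_a": [3, 3, 2, 3, 2, 3, 3],
    "clip_b": [-3, -2, -3, -3, -2, -3, -3],
    "clip_c": [1, 2, 0, 1, 2, 1, 1],
    "clip_d": [2, 2, 2, 2, 2, 2, 2],
    "clip_e": [-2, -2, -2, -2, -2, -2, -2],
}


def select_extreme(ratings, happy_cutoff=2.0, sad_cutoff=-2.0):
    """Keep clips whose mean rating is >= happy_cutoff (happy) or < sad_cutoff (sad)."""
    happy, sad = [], []
    for clip, scores in ratings.items():
        avg = mean(scores)
        if avg >= happy_cutoff:
            happy.append(clip)
        elif avg < sad_cutoff:
            sad.append(clip)
    return happy, sad


happy, sad = select_extreme(RATINGS)
print("happy:", happy)
print("sad:", sad)
```

With these made-up scores, `clip_c` is dropped as emotionally ambiguous. `clip_e` is also dropped because its mean sits exactly at -2, which is not strictly below the cutoff as worded.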

Table 1. Content Videos and Ads

Happy Sad

Content Video 11 13

Ad 20 13

Notes: The Table above lists the number of unique ads and content videos in the experiment.

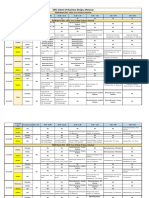

Table 2 reports three coefficients of inter-rater agreement for more than two raters, specifically adapted for cardinal data (the ad and video emotion measures are cardinal in nature). As the table shows, Krippendorff’s alpha across all videos is 0.839 for the first quartile (Q1) and 0.694 for the second quartile (Q2); across all ads, it is 0.792 for Q1 and 0.619 for Q2. These are acceptable scores (Krippendorff, 2018). Similarly, Kendall’s W values are at a reasonable level.
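To make the two main agreement coefficients concrete, here is a minimal sketch of Krippendorff’s alpha with the interval (squared-difference) metric and of Kendall’s W with the usual tie correction. It assumes complete data, meaning every rater scores every clip. The two functions take the data in different layouts: alpha takes one list per clip, and W takes one list per rater. This is not the authors’ code, and the ratings in the usage lines are hypothetical.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_interval(units):
    """Krippendorff's alpha, interval metric. `units` holds one list of ratings per clip."""
    units = [u for u in units if len(u) >= 2]  # only pairable clips contribute
    pooled = [v for u in units for v in u]
    n = len(pooled)
    # Observed disagreement: ordered within-clip pairs, each clip weighted by 1/(m_u - 1).
    within = sum(
        sum((a - b) ** 2 for a, b in permutations(u, 2)) / (len(u) - 1) for u in units
    )
    # Expected disagreement: ordered pairs drawn from all pooled ratings.
    total = sum((a - b) ** 2 for a, b in permutations(pooled, 2))
    return 1.0 - (n - 1) * within / total


def average_ranks(scores):
    """Rank scores (1 = lowest), giving tied scores the mean of their ranks."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # positions i..j share ranks i+1..j+1
        i = j + 1
    return ranks


def kendalls_w(raters):
    """Kendall's W with tie correction. `raters` holds one list of scores per rater."""
    m, n = len(raters), len(raters[0])
    rank_rows = [average_ranks(r) for r in raters]
    totals = [sum(row[i] for row in rank_rows) for i in range(n)]
    mean_total = sum(totals) / n
    s = sum((t - mean_total) ** 2 for t in totals)
    # Tie correction: sum of (t^3 - t) over every tie group of every rater.
    ties = sum(c ** 3 - c for r in raters for c in Counter(r).values())
    return 12 * s / (m ** 2 * (n ** 3 - n) - m * ties)


# Hypothetical ratings: 3 clips x 4 raters on the -3..3 scale.
by_clip = [[3, 2, 3, 3], [-3, -2, -3, -3], [0, 1, 0, -1]]
by_rater = [list(col) for col in zip(*by_clip)]  # transpose to raters x clips
print(round(krippendorff_alpha_interval(by_clip), 3), round(kendalls_w(by_rater), 3))
```

The tie correction matters here because Likert-type ratings produce many tied scores within each rater.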

Table 2. Inter-Rater Agreement

Q1 Q2

Kendall W Kripp α Percentage Kendall W Kripp α Percentage

Videos

Happy Videos 0.176 0.004 27.3 0.451 0.203 9.09

Sad Videos 0.161 0.003 69.2 0.264 0.0522 15.4

All Videos 0.896 0.839 50 0.79 0.694 12.5

Ads

Happy Ads 0.224 0.013 35 0.187 0.010 10.0

Sad Ads 0.177 0.054 38.5 0.175 0.032 5.34

All Ads 0.860 0.792 36.4 0.728 0.619 6.17

All Content 0.879 0.821 42.1 0.762 0.677 8.77

Notes: The Table above reports Krippendorff’s Alpha & Kendall’s W.

It is important to note that all the ads and videos in the experiment had already been earmarked by VDO.AI’s clients for actual deployment before the start of our experiment; therefore, the ads and content videos in the experiment were relevant to both the users and the advertisers.

4 Empirical Analysis

4.1 Data

We first describe the data, then conduct randomization checks and discuss our overall empirical strategy. The data were collected at the impression level. Each impression recorded the unique user ID along with the IP address from which the user was accessing the website. A total of about 2.5 million impressions (2,502,430; see Table 3) were included in the experiment. Each impression was randomly assigned to be shown one of the 24 content videos and one of the 33 ads. Because the numbers of content videos and ads differed across the emotion conditions, and it was easiest for our partner platform to implement a design in which one of these 24 content videos and one of these 33 ads were shown at random, our final sample was not equally distributed across the four conditions. Table 3 shows the distribution of ad impressions across the experimental conditions.
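The following sketch shows why drawing uniformly from pools of unequal size yields unequal cell sizes. It assumes, hypothetically, that the video and the ad are drawn independently and uniformly from the pools in Table 1. It is not a reconstruction of the platform’s serving logic, so its implied shares need not reproduce the realized counts in Table 3.

```python
import random
from fractions import Fraction

# Pool sizes from Table 1.
N_HAPPY_VIDEOS, N_SAD_VIDEOS = 11, 13
N_HAPPY_ADS, N_SAD_ADS = 20, 13

# Each entry stands for one clip, labeled by its emotion ("H" or "S").
VIDEOS = ["H"] * N_HAPPY_VIDEOS + ["S"] * N_SAD_VIDEOS
ADS = ["H"] * N_HAPPY_ADS + ["S"] * N_SAD_ADS


def assign(rng):
    """Hypothetical scheme: draw one content video and one ad independently and
    uniformly; return the cell label, e.g. 'HS' = happy video, then sad ad."""
    return rng.choice(VIDEOS) + rng.choice(ADS)


def expected_shares():
    """Exact cell probabilities implied by the hypothetical scheme above."""
    shares = {}
    for v, n_v in (("H", N_HAPPY_VIDEOS), ("S", N_SAD_VIDEOS)):
        for a, n_a in (("H", N_HAPPY_ADS), ("S", N_SAD_ADS)):
            shares[v + a] = Fraction(n_v, len(VIDEOS)) * Fraction(n_a, len(ADS))
    return shares


rng = random.Random(0)
sample = [assign(rng) for _ in range(10_000)]
for cell, p in expected_shares().items():
    simulated = sample.count(cell) / len(sample)
    print(f"{cell}: expected {float(p):.3f}, simulated {simulated:.3f}")
```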

Table 3. Distribution of Impressions Across the Different Experimental Conditions

Condition Description Number Of Impressions

1 HH Happy Video followed by a Happy Ad 735,518

2 HS Happy Video followed by a Sad Ad 447,897

4 SH Sad Video followed by a Happy Ad 819,593

5 SS Sad Video followed by a Sad Ad 499,422

Total 2,502,430

Notes: The table above shows the count of impressions across the four experimental sub-conditions, i.e. Happy video & Happy ad, Happy video & Sad ad, Sad video & Happy ad, and Sad video & Sad ad.

We report a set of randomization checks in Section B of the Appendix, which show that the randomization was implemented properly. Specifically, we verify that users across experimental conditions have no systematic differences in the probability of being shown the videos they are eligible to be shown, and that their probability of being shown a happy or sad video does not systematically vary across days of the week, hours of the day, device types, etc.
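One common way to run such a balance check is a Pearson chi-square test of independence between the assigned condition and an observable such as device type. The sketch below assumes that kind of check; it is not necessarily the procedure used in Appendix B, and the counts are hypothetical. It computes the statistic and degrees of freedom using only the standard library and compares them with the 5% critical value for 3 degrees of freedom (7.815).

```python
def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    grand = sum(row_tot)
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            expected = row_tot[i] * col_tot[j] / grand  # count expected under independence
            stat += (obs - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Hypothetical counts: rows = HH, HS, SH, SS; columns = desktop, mobile.
counts = [
    [300, 700],
    [180, 420],
    [330, 770],
    [200, 466],
]
stat, df = pearson_chi_square(counts)
# 7.815 is the 5% critical value of the chi-square distribution with 3 df.
print(f"chi2 = {stat:.3f}, df = {df}, reject balance at 5%: {stat > 7.815}")
```

With samples of millions of impressions, even tiny imbalances become statistically significant, so in practice the sizes of the differences deserve as much attention as the test itself.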

4.2 Outcome Measures

For each impression, we study four main outcomes, based on whether the user viewed: (1) the first quartile of the ad’s duration, (2) the midpoint of the ad’s duration, (3) the third quartile of the ad’s duration, or (4) the full ad. All four outcome measures are behavioral indicators of the consumer’s degree of engagement with the ad, and they are important to the video platform.⁴ Table 4 lists the raw outcome measures across happy and sad ads, Table 5 across happy and sad videos, and Table 6 across the four experimental sub-conditions, i.e. Happy video & Happy ad, Happy video & Sad ad, Sad video & Happy ad, and Sad video & Sad ad.
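The four outcomes are nested binary indicators. As an illustration, the sketch below derives them from a seconds-watched value. It assumes hypothetical fields `ad_duration_s` and `watched_s`; the platform may instead log quartile events directly.

```python
from dataclasses import dataclass


@dataclass
class Impression:
    ad_duration_s: float  # hypothetical field: length of the ad in seconds
    watched_s: float      # hypothetical field: seconds of the ad actually played


def watch_outcomes(imp):
    """Return the four 0/1 outcomes: first quartile, midpoint, third quartile, complete."""
    thresholds = (0.25, 0.50, 0.75, 1.00)
    return tuple(int(imp.watched_s >= q * imp.ad_duration_s) for q in thresholds)


print(watch_outcomes(Impression(ad_duration_s=20, watched_s=10)))  # (1, 1, 0, 0)
```

Because each later threshold is stricter, an impression that reaches a given milestone has necessarily reached all earlier ones. This is why the means in Tables 4 to 6 decline from the first quartile to completion.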

Table 4. Outcomes across Happy and Sad Ads

Ad-Emotion Count First Quartile Mid Point Third Quartile Complete

Mean Std Mean Std Mean Std Mean Std

Happy 1,555,111 0.82 0.45 0.73 0.50 0.66 0.52 0.61 0.53

Sad 947,319 0.81 0.43 0.73 0.48 0.66 0.50 0.61 0.52

Notes: The table above lists the means and standard deviations of the outcome measures across happy and sad ads.

⁴ This is related to the question of what constitutes relevant “actions.” In general, major advertising platforms like Facebook and Google list the amount of an ad viewed as an important performance metric.

Table 5. Outcomes across Happy and Sad Videos

Video-Emotion Count First Quartile Mid Point Third Quartile Complete

Mean Std Mean Std Mean Std Mean Std

Happy 1,183,415 0.79 0.40 0.70 0.46 0.63 0.48 0.58 0.49

Sad 1,319,015 0.80 0.39 0.72 0.45 0.65 0.48 0.60 0.49

Notes: The table above lists the means and standard deviations of the outcome measures across happy and sad videos.

Table 6. Outcomes across Video & Ad Impression Distribution

Cohort Total Impressions % First Quartile % Midpoint % Third Quartile % Complete

HH 735518 79.59 70.01 63.29 58.00

HS 447897 79.82 70.63 63.84 58.55

SH 819593 80.65 71.66 65.20 60.01

SS 499422 80.65 71.98 65.46 60.37

Notes: The table above shows the outcome variables across the four experimental sub-conditions, i.e. Happy video & Happy ad (HH), Happy video & Sad ad (HS), Sad video & Happy ad (SH), and Sad video & Sad ad (SS).

4.3 Main Effects

We first run a series of regressions to explore the relationship between the matching/congruence of the content video and the ad, and ad-watching behavior. Let $\text{WatchBehavior}_i$ denote a binary (0/1) indicator of the ad-watch outcome for impression $i$; it stands in turn for each of the four outcomes we examine: watching the first quartile, the midpoint, the third quartile, and the complete ad. Thus, if the user corresponding to impression $i$ watched the ad until the first quartile, the corresponding $\text{WatchBehavior}_i$ is 1; otherwise it is 0. Let $\text{IncongruenceCondition}_i$ be a dummy variable indicating whether the experimental condition corresponds to incongruence between the content video and the ad (where a happy ad is played after a sad video or vice versa). To examine the effect of incongruence on these outcomes, we run the following regression, once for each of the four outcome variables:

$$\text{WatchBehavior}_i = \alpha + \beta \, \text{IncongruenceCondition}_i + \epsilon_i$$

Here, $\beta$ captures the effect of incongruence between the content video and the ad on users’ watch behavior, relative to the conditions in which these are matched or congruent. We present our results in Table 7.

Table 7. Effect of Incongruence vs. Congruence on Ad Watch Behavior

First Quartile Midpoint Third Quartile Complete

Incongruence 0.00391∗∗∗ 0.00483∗∗∗ 0.00609∗∗∗ 0.00652∗∗∗

(0.000552) (0.000622) (0.000653) (0.000667)

Constant 0.818∗∗∗ 0.726∗∗∗ 0.659∗∗∗ 0.606∗∗∗

(0.00257) (0.00259) (0.00259) (0.00258)

Observations 2502430 2502430 2502430 2502430

Standard errors in parentheses

∗ p < 0.05, ∗∗ p < 0.01, ∗∗∗ p < 0.001

Notes: The dependent variables are indicators for viewing the ad through the first quartile, the midpoint (second quartile), the third quartile, and to completion. All columns report OLS estimates of a linear probability model.
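Because the regression has a single binary regressor, its OLS estimates reduce to group means. The constant $\alpha$ is the congruent-condition mean, and $\beta$ is the incongruent-minus-congruent difference. The sketch below illustrates this on toy data and also computes the heteroskedasticity-robust (HC0) standard error of $\beta$. HC0 is an assumption made here for illustration; the text does not state which standard-error estimator underlies Table 7.

```python
from math import sqrt


def lpm_binary_regressor(y, d):
    """OLS of a 0/1 outcome y on an intercept and a 0/1 dummy d.

    With one binary regressor, alpha = mean(y | d = 0) and
    beta = mean(y | d = 1) - mean(y | d = 0). The HC0 robust standard error
    of beta is sqrt(SSR_1 / n1^2 + SSR_0 / n0^2), where SSR_g is the sum of
    squared residuals in group g.
    """
    y1 = [yi for yi, di in zip(y, d) if di == 1]
    y0 = [yi for yi, di in zip(y, d) if di == 0]
    n1, n0 = len(y1), len(y0)
    m1, m0 = sum(y1) / n1, sum(y0) / n0
    alpha, beta = m0, m1 - m0
    ssr1 = sum((v - m1) ** 2 for v in y1)
    ssr0 = sum((v - m0) ** 2 for v in y0)
    se_beta = sqrt(ssr1 / n1 ** 2 + ssr0 / n0 ** 2)
    return alpha, beta, se_beta


# Toy data: 10 congruent impressions (d = 0) and 10 incongruent ones (d = 1).
d = [0] * 10 + [1] * 10
y = [1] * 6 + [0] * 4 + [1] * 8 + [0] * 2
alpha, beta, se = lpm_binary_regressor(y, d)
print(f"alpha = {alpha:.2f}, beta = {beta:.2f}, robust SE = {se:.4f}")
```

This equivalence also means the Table 7 point estimates can be read as simple differences in viewing rates between the pooled incongruent (HS, SH) and congruent (HH, SS) conditions.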

The main result of our experiment is that incongruence between content videos and ads leads to greater viewing of the ads across all four outcome variables. The baseline viewership of the first quartile of the ad is about 81.8%, and it rises by 0.39 percentage points in the incongruent conditions. Thus, when the ad and content video are mismatched, this viewership rises to about 82.2%. Similarly, viewership of the ad until its midpoint rises from 72.6% to about 73.1%. For the third quartile, these numbers are approximately 65.9% and 66.5%, and for viewership of the complete ad, approximately 60.6% and 61.3%.
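The percentages above follow directly from the Table 7 point estimates: the constant is the congruent baseline, and the incongruence level is the baseline plus the coefficient. The short sketch below reproduces that arithmetic and also reports each effect relative to its baseline. The numbers are taken from Table 7; the dictionary layout is only an illustrative choice.

```python
# Point estimates from Table 7: (constant = congruent baseline, incongruence coefficient).
TABLE7 = {
    "First quartile": (0.818, 0.00391),
    "Midpoint": (0.726, 0.00483),
    "Third quartile": (0.659, 0.00609),
    "Complete": (0.606, 0.00652),
}


def implied(baseline, effect):
    """Return the implied incongruent-condition level and the relative change vs. baseline."""
    return baseline + effect, effect / baseline


for outcome, (base, eff) in TABLE7.items():
    level, rel = implied(base, eff)
    print(f"{outcome:15s} {base:.1%} -> {level:.1%} ({rel:+.2%} relative)")
```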

Of note here is that these baseline levels of ad viewership are relatively high compared to the numbers typically seen on a platform like YouTube. There are two reasons for this. First, the platform shows these ads on web pages filled with other content; consumers navigate to these pages for this other content and not for the videos themselves. Thus, they could be ‘viewing’ the ad while actually consuming some other content simultaneously. Also, the ads play in the muted state by default, allowing for such multitasking on the part of the consumer. This is the viewability issue discussed in Section 2: only a proportion of those recorded as viewing the ad actually view it. Second, since our experiment involves only post-roll ads, where the ad is shown conditional on the content video being viewed to completion, the consumers in our sample have, by design, already spent considerable time on the website. Since exiting the video is the same as exiting the webpage, a larger proportion of them ‘view’ the ad to completion than if consumers were not selected in this way. While we have no way of directly estimating the proportion of these users who actively view the ad, the estimates of our project partner VDO.AI and of the industry itself are that approximately 5 to 20% of viewers of an ad actively view it.

The magnitude of the effect of incongruence may seem small. With an effect of 0.00652 against a baseline of 0.606, incongruence raises the probability of viewing the ad to completion by only about 1.08% in relative terms. However, VDO.AI considers an effect of this size relevant because of the impact such an increase in ad viewership would have on revenues when scaled across the billions of impressions it serves every month.
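The scale argument reduces to simple arithmetic: a lift in the completion probability multiplied by the number of impressions gives the number of additional completed views. The sketch below uses a hypothetical volume of 1 billion monthly impressions, which is not a figure reported in the paper. It also assumes the Table 7 effect applies uniformly across that volume and ignores viewability, so it shows recorded completions rather than revenue.

```python
def extra_completed_views(impressions, effect=0.00652):
    """Additional completed ad views implied by a lift of `effect` (probability
    points; default is the Table 7 'Complete' coefficient) over `impressions`."""
    return impressions * effect


# Hypothetical volume: 1 billion monthly impressions (not reported in the paper).
print(f"{extra_completed_views(1_000_000_000):,.0f}")
```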

Since users access the content video and the video advertisement from different countries and across different time zones, we control for various observables, such as user agent, country, country × time, and the city from which the user logs in. To account for varying conditions over time, we also include both day and hour-of-day fixed effects. Table 8 reports the regression results with these additional controls. Specifically, we estimate the regression

$$\text{WatchBehavior}_i = \alpha + \beta \, \text{IncongruenceCondition}_i + \gamma X_i + \epsilon_i$$

where $X_i = f(\text{user-agent}, \text{country}, \text{country} \times \text{time}, \text{city}, \text{day}, \text{hour-of-day})$.

Our main finding is that even with a very detailed set of controls, including fixed effects for every combination of country and time, browser/device type, and city of login, the effects of incongruence remain positive and significant.
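Fixed effects absorb group-level shifts in the outcome. Each fixed effect corresponds to one category of a control, such as a particular hour of the day. By the Frisch-Waugh-Lovell theorem, the coefficient on the treatment dummy with one set of fixed effects equals the slope obtained after demeaning both the outcome and the dummy within groups. The sketch below illustrates this one-way case on hypothetical data. The specification in Table 8 has several crossed sets of fixed effects, which would call for dummy variables or a dedicated fixed-effects estimator; this is not the authors’ estimation code.

```python
from collections import defaultdict


def demean_by(values, groups):
    """Subtract each group's mean from its members (the 'within' transformation)."""
    sums, counts = defaultdict(float), defaultdict(int)
    for v, g in zip(values, groups):
        sums[g] += v
        counts[g] += 1
    return [v - sums[g] / counts[g] for v, g in zip(values, groups)]


def fe_slope(y, d, groups):
    """OLS slope of y on d with one set of group fixed effects (Frisch-Waugh-Lovell):
    demean both variables within groups, then regress without an intercept."""
    y_t, d_t = demean_by(y, groups), demean_by(d, groups)
    return sum(a * b for a, b in zip(d_t, y_t)) / sum(b * b for b in d_t)


# Hypothetical data: hour-of-day groups shift the outcome; the true effect of d is 0.5.
groups = ["h1", "h1", "h1", "h2", "h2", "h2"]
d = [0, 1, 1, 0, 0, 1]
shift = {"h1": 0.2, "h2": 0.7}
y = [shift[g] + 0.5 * di for g, di in zip(groups, d)]
print(round(fe_slope(y, d, groups), 6))
```

In this toy example, the group shifts differ and the dummy is unevenly spread across groups. Demeaning removes the shifts, so the slope recovers the true effect of 0.5.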

Table 8. Regression results on the effect of ad-video emotion incongruence on ad watching (with

additional controls)

Coefficient for IncongruenceCondition:

(1) (2) (3) (4) (5)

First-Quartile 0.00391∗∗∗ 0.00387∗∗∗ 0.00253∗∗∗ 0.00385∗∗∗ 0.00241∗∗∗

(0.000552) (0.000560) (0.000557) (0.000551) (0.000552)

Mid Point 0.00483∗∗∗ 0.00480∗∗∗ 0.00299∗∗∗ 0.00476∗∗∗ 0.00288∗∗∗

(0.000622) (0.000622) (0.000610) (0.000622) (0.000606)

Third Quartile 0.00609∗∗∗ 0.00603∗∗∗ 0.00396∗∗∗ 0.00601∗∗∗ 0.00387∗∗∗

(0.000653) (0.000650) (0.000633) (0.000650) (0.000629)

Complete 0.00652∗∗∗ 0.00647∗∗∗ 0.00435∗∗∗ 0.00645∗∗∗ 0.00428∗∗∗

(0.000667) (0.000664) (0.000640) (0.000664) (0.000638)

Time(Hourly) ✓ ✓ ✓

Day ✓ ✓ ✓

User-Agent(Browser, Device) ✓ ✓

Country ✓ ✓

Country x Time ✓ ✓

City ✓

∗ p<0.1; ∗∗ p<0.05; ∗∗∗ p<0.01

Notes: The dependent variables are indicators for watching the ad up to the first quartile, the midpoint (second quartile), the third quartile, and completion. The estimates are from a linear probability model estimated by OLS.

We also report the relative effect sizes in Figure 3. We convert the absolute effect into a

percentage change relative to baseline, computing the robust standard errors of this percentage

change using the delta method. The figure reports the percentage change in viewership across the

four different outcome measures, and the confidence intervals of this percentage change.
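The conversion can be sketched as follows, assuming the relative effect is the coefficient divided by the baseline (congruent) mean and that the delta method is applied to the ratio $g(\beta, \mu) = \beta/\mu$. The function name `relative_effect` is hypothetical, and the baseline's standard error and its covariance with the coefficient are placeholders, since neither is reported here.

```python
import math


def relative_effect(beta, mu, var_beta, var_mu, cov_beta_mu=0.0):
    """Percentage change 100 * beta / mu and its delta-method standard error.

    The gradient of g(beta, mu) = beta / mu is (1 / mu, -beta / mu**2), so
    Var(g) ~= var_beta / mu**2 + beta**2 * var_mu / mu**4
              - 2 * beta * cov_beta_mu / mu**3.
    """
    ratio = beta / mu
    var_ratio = (var_beta / mu**2
                 + beta**2 * var_mu / mu**4
                 - 2 * beta * cov_beta_mu / mu**3)
    return 100 * ratio, 100 * math.sqrt(var_ratio)


# beta and its SE: Table 8, column (1), "Complete"; baseline 0.606 from the text.
# The baseline's SE (0.001) and the zero covariance are hypothetical placeholders.
pct, pct_se = relative_effect(0.00652, 0.606, 0.000667**2, 0.001**2)
lo, hi = pct - 1.96 * pct_se, pct + 1.96 * pct_se
print(f"{pct:.2f}% (95% CI {lo:.2f}% to {hi:.2f}%)")
```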


Figure 3. Percentage change in ad viewership under incongruent advertising relative to the baseline

Notes: The Figure above reports the relative effect sizes. The absolute effect is converted into a percentage change

relative to baseline, computing the robust standard errors of this percentage change using the delta method. The

figure reports the percentage change in viewership across the four different outcome measures, and the confidence

intervals of this percentage change.

Further, in Table 9, rather than classifying the ads and content videos as happy or sad, we use the emotion scores directly as a continuous measure. The covariate in the regression is the product of the emotion scores for the content video and the ad. When the content video and ad are matched, these scores are either both positive (both happy) or both negative (both sad), resulting in a positive product. When they are mismatched, or incongruent, this product is negative. The coefficient on this variable thus captures the effect of congruence. If it is positive, consumers’ viewership of the ad at the four time points (first quartile, midpoint, third quartile and complete video) is greater when the content video and ad are congruent. If it is negative, incongruence leads to greater viewership. The estimate shows the same pattern as before:


it is negative, implying that incongruence leads to greater viewership.
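A small illustration of the continuous measure: with signed emotion scores, the sign of the product labels a pair as congruent or incongruent, and a negative slope of viewership on the product indicates that incongruent pairs are watched more. The toy scores and outcomes and the helper names (`emotion_product`, `label`, `ols_slope`) are hypothetical, and the slope here comes from a simple regression without the paper's controls.

```python
def emotion_product(video_score, ad_score):
    """Continuous congruence measure: product of the two signed emotion scores.

    Happy content has a positive score and sad content a negative one, so the
    product is positive for matched pairs and negative for mismatched pairs.
    """
    return video_score * ad_score


def label(video_score, ad_score):
    p = emotion_product(video_score, ad_score)
    if p > 0:
        return "congruent"
    if p < 0:
        return "incongruent"
    return "neutral"  # a zero score is neither happy nor sad


def ols_slope(x, y):
    """Slope from a simple OLS regression of y on x with an intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


# Toy data (hypothetical): (video score, ad score) pairs and 0/1 completion.
pairs = [(2.0, 1.5), (-1.0, -2.0), (2.0, -1.5), (-1.0, 2.0)]
completed = [0, 0, 1, 1]  # only the incongruent pairs are watched to completion
x = [emotion_product(v, a) for v, a in pairs]
labels = [label(v, a) for v, a in pairs]
slope = ols_slope(x, completed)
print(labels, round(slope, 4))  # a negative slope favours incongruence
```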

Table 9. Effect of Incongruence on Ad Viewership - using Emotion Scores Directly

First Quartile Midpoint Third Quartile Complete

Product of Emotion Scores -0.000270∗∗∗ -0.000328∗∗∗ -0.000420∗∗∗ -0.000452∗∗∗

(0.0000416) (0.0000466) (0.0000489) (0.0000499)

Constant 0.820∗∗∗ 0.729∗∗∗ 0.662∗∗∗ 0.610∗∗∗

(0.00255) (0.00255) (0.00255) (0.00255)

Observations 2502430 2502430 2502430 2502430

Standard errors in parentheses. ∗ p < 0.05, ∗∗ p < 0.01, ∗∗∗ p < 0.001

Notes: The dependent variables are indicators for watching the ad up to the first quartile, the midpoint (second quartile), the third quartile, and completion. The estimates are from a linear probability model estimated by OLS.

5 Examination of Power of Experiment

We conducted Monte Carlo simulations to assess the statistical power of our sample to detect the ad-video congruence effect. We define the effect size as the percentage difference in the occurrence of an event (e.g., reaching the first quartile) between the incongruence and congruence cohorts. We consider a range of effect sizes (0.1%, 0.25%, 0.5%, 1%) and examine whether we can detect the resulting difference in ad consumption behavior due to ad-video congruence. Put simply, we test whether, for a given effect size, the experiment and the sample have the ability to reject the null hypothesis of equality between the congruent and incongruent populations. To assess this, we take three sample sizes (100,000; 500,000; 1,000,000) and, for each, simulate power estimates by bootstrapping. The procedure is as follows. First, we bootstrap samples from the experiment for a given effect size, sample size and condition. Then, we estimate the main regression specification. Next, we collate the p-values from the statistical tests. Finally, the power is the proportion of these p-values that are ≤ 0.05.

Figures 4 and 5 plot the statistical power against a range of effect sizes. For a given effect size x, we perform block sampling at the user level, with replacement, from the pooled data of both experimental conditions (congruence and incongruence). Specifically, we simulate a change in the decision of x% of the population. The effect is the difference between the percentage occurrence of an event under incongruence and under congruence. In this simulation, we produce this difference by setting the event dummy to 1 in x% of the incongruent samples. We then test the null hypothesis that the percentage occurrence of the event is the same under congruence and incongruence using a regression framework


and compare the estimated p-value with 5% as the benchmark. The procedure is repeated for many

draws and different effect sizes.
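The power simulation can be sketched under simplifying assumptions: each row is treated as one user (so block sampling reduces to row sampling), both arms are drawn from the pooled data so that the null holds before an effect is injected, and a two-proportion z-test replaces the regression (with a single binary regressor the two give very similar p-values). The function names, the 60% event rate and the number of simulations are hypothetical choices for illustration.

```python
import math
import random


def two_prop_pvalue(y1, y0):
    """Two-sided p-value of a pooled two-proportion z-test.

    With one binary regressor, this closely tracks the OLS t-test on the
    incongruence dummy; it stands in for the paper's regression here.
    """
    n1, n0 = len(y1), len(y0)
    p1, p0 = sum(y1) / n1, sum(y0) / n0
    pooled = (sum(y1) + sum(y0)) / (n1 + n0)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n0))
    if se == 0:
        return 1.0
    z = (p1 - p0) / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def simulated_power(pooled_y, n_per_arm, x, n_sims=200, alpha=0.05, seed=0):
    """Share of simulated experiments with p <= alpha.

    pooled_y: 0/1 outcomes pooled across both conditions, so the null holds.
    x: share of the incongruent draws whose outcome is set to 1 (the injected
    effect). Each row is treated as one user, so user-level block sampling
    reduces to row sampling.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        congruent = rng.choices(pooled_y, k=n_per_arm)
        incongruent = rng.choices(pooled_y, k=n_per_arm)
        for i in rng.sample(range(n_per_arm), int(x * n_per_arm)):
            incongruent[i] = 1
        if two_prop_pvalue(incongruent, congruent) <= alpha:
            hits += 1
    return hits / n_sims


# Hypothetical pooled data with a 60% event rate.
data_rng = random.Random(1)
pooled = [1 if data_rng.random() < 0.6 else 0 for _ in range(10_000)]
for x in (0.0, 0.05, 0.3):
    print(x, simulated_power(pooled, n_per_arm=1_000, x=x))
```

With x = 0 the estimated power should sit near the 5% size of the test, which is a useful sanity check on the simulation.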

Figure 4. Bootstrapped Power Estimates - A

Notes: Examination of Power of Experiment - A

Figure 5. Bootstrapped Power Estimates - B

Notes: Examination of Power of Experiment - B


6 Mechanisms for the Effects of Incongruence

We now explore the mechanism behind the main results and present corresponding empirical

evidence to corroborate our theoretical underpinning.

Theoretical Underpinnings of the Mechanism

So far, we have documented that incongruence in emotions between the ads and the content video

increases the consumer’s probability of watching the ad up to various points of the video.

The first mechanism we examine for our effects is the attention mechanism (Han and Marois,

2014; Kahneman, 1973), which refers to the fact that consumer attention is higher for more novel

content in a given space. This has been specifically applied to the space of emotions as well

(Belanche et al., 2017; Cornelis et al., 2012) and is consistent with the research in advertising and

eye-tracking where attention is directly measured (Pieters and Warlop, 1999). The implication of

this broad idea in our specific context is that attention to an ad that follows a content video should

be increasing in the degree to which the two differ in terms of their emotional content. This in

turn should increase viewership of the ad as well. In our experiment, we have content videos and

ads with a range of emotion scores, and they were all randomly assigned to consumers. This allows

us to examine empirically how consumers behave differently when the ad and content videos were

emotionally very far apart, versus when they were closer together.

Treatment Effects Across Different Incongruent Ad-Video Groups

Specifically, the above discussion suggests that the exact levels of emotion scores of the incongru-

ence condition matter (Noseworthy et al., 2014). We expect the ad in the incongruent ad-video pair

to be less novel and attention grabbing if the ad’s emotion score is close to the emotion score of the

video. Thus, as the distance between the emotion scores of the content video and ad increases (and

thereby novelty of the ad increases), the increased attention this generates should lead to an in-

crease in the effect of incongruence. Furthermore, the optimal stimulation theory (Joachimsthaler

and Lastovicka, 1984) would suggest that there is a point beyond which the contrast between the

content video and ad could lead to ad avoidance as the consumer’s experience is less pleasurable

(Westbrook and Oliver, 1991).

Building on these theoretical underpinnings, we present our propositions:

• Proposition 1a: When we compare the incongruent group to the congruent group, the treatment effect is lowest when the ad in the incongruent ad-video pair has an emotion score closest to the emotion score of the video content. That is, as the emotion score difference between ads and videos in the incongruent group increases, the treatment effect increases.


• Proposition 1b: The treatment effect reaches a peak, beyond which it declines: as the difference between the emotions of the ad and the content video in the incongruent ad-video pair increases further, the treatment effect is expected to decrease.

Now, we look for empirical support for the above two propositions. As a first step, we partition the incongruent group in our data into subgroups on the basis of the emotion scores of the ads and videos in each incongruent ad-video pair. We split the data into bins with a bin spacing of 1.25, where binning is done on the product of the scores (i.e., emotion score of the ad × emotion score of the placement video).

As a next step, we separately estimate the treatment effect for each of these incongruent ad-video subgroups. We present the outcomes in Figure 6.

We wish to clarify that the covariate in the regression is not the product; it is still the incongruence dummy (hence the positive effect). We split the data according to the product of the ad and video emotion scores, classify each observation as congruent or incongruent based on the value of this product, and then regress the outcome measures on the incongruence dummy. This shows how the positive effect (coefficient) of incongruence changes with the level of incongruity (the product of the ad and video emotion scores). The products listed on the x-axis of the graph as 4, 5, 6, 7, 8 and 9 are in fact negative.
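The binning step can be sketched as follows, under our reading of the procedure: incongruent observations are grouped by the absolute value of the (negative) product of emotion scores in bins of width 1.25, and each bin is compared with the whole congruent group. A difference in means is used because, within each such subsample, it equals the OLS coefficient on an incongruence dummy without controls. The function name `effects_by_bin`, the data layout and the toy rows are hypothetical.

```python
import math
from collections import defaultdict


def effects_by_bin(rows, width=1.25):
    """Incongruence effect for each bin of |product of emotion scores|.

    rows: iterable of (video_score, ad_score, y) with signed scores
    (happy > 0, sad < 0) and y a 0/1 viewing outcome. Each incongruent bin
    is compared with the whole congruent group by a difference in means.
    Bins are keyed by their lower edge on |product|, because incongruent
    products are negative.
    """
    congruent = []
    bins = defaultdict(list)
    for v, a, y in rows:
        prod = v * a
        if prod > 0:
            congruent.append(y)
        elif prod < 0:
            bins[math.floor(-prod / width) * width].append(y)
    base = sum(congruent) / len(congruent)
    return {edge: sum(ys) / len(ys) - base for edge, ys in sorted(bins.items())}


rows = [(1.0, 1.0, 0), (2.0, 1.0, 1), (1.0, -1.0, 1), (2.0, -3.0, 0)]
print(effects_by_bin(rows))
```

Plotting these per-bin effects against the bin edge gives the kind of curve used to look for an initial increase followed by a decrease.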

As expected, we see an initial increasing trend in the treatment effect as the difference between the emotion scores of the content videos and the ad increases. This is consistent with the advertisement drawing less attention from the user when the difference between the ad and video emotions is low. Interestingly, as the difference between the emotions of the ad and the video increases further, we observe a decreasing pattern: the treatment effect decreases as the spacing between the emotions of the ads and videos in the incongruent ad-video pair increases further.


Figure 6. Treatment Effects Across Different Levels of Emotional Contrast between Content

Video/Ad

Notes: The Figure plots the coefficients of ad-video incongruence against the corresponding product of the emotion score of the ad × the emotion score of the placement video. The top-left panel plots this for viewership up to the first quartile, the top-right panel for the midpoint, the bottom-left for the third quartile, and the bottom-right for completion of the ad.

Figures 7 and 8 plot the same graphs with bin sizes of 1.1 and 1.2, respectively. We see a consistent inverted-U-shaped pattern (an initial increase followed by a decrease) across all the bin sizes.


Figure 7. Heterogeneous Treatment Effects - A (Bin Size =1.1)

Notes: The Figure plots the coefficients of ad-video incongruence against the corresponding product of the emotion score of the ad × the emotion score of the placement video. The top-left panel plots this for viewership up to the first quartile, the top-right panel for the midpoint, the bottom-left for the third quartile, and the bottom-right for completion of the ad.


Figure 8. Heterogeneous Treatment Effects - A (Bin Size = 1.2)

Notes: The Figure plots the coefficients of ad-video incongruence against the corresponding product of the emotion score of the ad × the emotion score of the placement video. The top-left panel plots this for viewership up to the first quartile, the top-right panel for the midpoint, the bottom-left for the third quartile, and the bottom-right for completion of the ad.

Heterogeneity Across Geographies

To further explore the proposition that users’ behavior under the incongruence treatment is driven by stimulus novelty and attention, we focus on geography-level features that capture users’ exposure to the emotions of videos and ads: (1) video watching volume, the inherent tendency of users in that geography to consume extreme-emotion (sad) videos, and (2) ad watching volume, the inherent tendency of users in that geography to consume extreme-emotion (sad) ads. These variables capture users’ level of saturation with extreme videos or ads in that geography.

The theoretical mechanism proposed earlier would suggest that it is harder to shift users’

attention with ads having congruent emotional content if they are saturated with more similar

emotion videos or ads. Conversely, when consumers are more saturated with viewing of emotional

content of one kind, incongruent ads should have greater engagement due to their ability to get

the attention of the viewer. Hence, we expect to see higher treatment effects (more viewership in


the case of incongruent ad-video pairs) for users with relatively higher consumption of extreme

emotion (sad) videos or ads.

We expect the intervention (incongruent condition) to be more effective in shifting users’ atten-

tion when they have an inherent tendency to see more extreme content. The research on emotion

regulation also suggests that individuals have an inherent tendency to regulate their emotions

by moving from an extreme emotion state (i.e. sadness) to a relatively less extreme state (e.g.

happiness) (Tamir, 2009). Further, users with overall low completion rates of video content are expected to be more saturated and are therefore more likely to engage with incongruent-emotion ad-content pairs.

Overall, we expect users who consume relatively less content (i.e., watch shorter portions of the videos) to find incongruent ad-video pairs more novel than users who consume relatively more video content. Together, we formulate the following propositions:

• Proposition 2a: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively higher for users with high consumption of extreme-emotion (sad) ads.

• Proposition 2b: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively higher for users with high consumption of extreme-emotion (sad) video content.

• Proposition 2c: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively lower for users with high consumption of less extreme emotion (happy) ads.

• Proposition 2d: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively lower for users with high consumption of less extreme emotion (happy) video content.

• Proposition 2e: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively higher for users with overall low completion rates of video content.

• Proposition 2f: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively lower for users with overall high completion rates of video content.

We estimate the treatment effects separately for the subgroups defined in the above propositions and present the results in Figure 9. Video completion rates are calculated for each city, and a median split of these completion rates stratifies the data into HVC (High Video Completion) and LVC (Low


Video Completion) cities. Similarly, for each emotion (happy and sad), video and ad completion rates are calculated city-wise. Cities with a higher completion rate for happy ads (placement videos) than for sad ads (placement videos) are categorised as HAP (HVP), and cities with a higher completion rate for sad ads (placement videos) than for happy ones are categorised as SAP (SVP). As expected, the effects are significant and positive only for the extreme-emotion (sad) ad and video conditions. These findings are consistent with Propositions 2a to 2f, which rely on attention as the theoretical underpinning.
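A sketch of the city-level classification, assuming each city's completion rates have already been computed. The dictionary keys and function name are hypothetical, and the tie-breaking rules (cities at the median go to LVC; equal happy and sad rates go to SAP or SVP) are our own assumptions, since the text does not specify them.

```python
from statistics import median


def classify_cities(city_stats):
    """Return (HVC/LVC, HAP/SAP, HVP/SVP) labels for each city.

    city_stats maps a city to its completion rates under hypothetical keys:
    'video' (overall video completion), 'happy_ad', 'sad_ad',
    'happy_video' and 'sad_video'.
    """
    cutoff = median(s["video"] for s in city_stats.values())
    labels = {}
    for city, s in city_stats.items():
        labels[city] = (
            "HVC" if s["video"] > cutoff else "LVC",                # ties -> LVC
            "HAP" if s["happy_ad"] > s["sad_ad"] else "SAP",        # ties -> SAP
            "HVP" if s["happy_video"] > s["sad_video"] else "SVP",  # ties -> SVP
        )
    return labels


stats = {
    "A": {"video": 0.9, "happy_ad": 0.7, "sad_ad": 0.6,
          "happy_video": 0.5, "sad_video": 0.8},
    "B": {"video": 0.5, "happy_ad": 0.4, "sad_ad": 0.6,
          "happy_video": 0.9, "sad_video": 0.8},
}
print(classify_cities(stats))
```

The treatment effect would then be estimated separately within each label group.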

Figure 9. Heterogeneous Treatment Effects - B

Notes: Heterogeneity in the incongruence effects across video and advertisement preferences aggregated across

geographies (cities). Confidence intervals are built using robust standard errors. HVC refers to the High Video

Completion. LVC refers to the Low Video Completion. HAP refers to Happy ad preference. SAP refers to sad ad

preference. HVP refers to happy video preference. SVP refers to sad video preference.

We further split our data by users’ heterogeneous preferences for happy versus sad placement videos (Figure 10) and predict:

• Proposition 3a: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively higher for users who consume more sad videos, especially when a happy ad is placed after a sad placement video.

• Proposition 3b: The incongruence effect (i.e., the preference for an incongruent-emotion ad-content pair) is relatively higher for users with a higher preference for happy videos when a happy ad plays after a sad placement video.

Proposition 3a stems from our theoretical mechanism, which suggests that users in a sad emotional state are more likely to regulate their emotions and therefore try to move away from the sad state, and hence prefer happy ad content. Extrapolating this to our setting, users who prefer sad content have a greater need to regulate their emotions (by virtue of consuming more sad content), so happy ad content is more likely to capture their attention. This need for emotion regulation leads users who generally prefer sad content to deviate from that preference and favour happy ad content after consuming sad video content.

On the other hand, our theoretical mechanism supports Proposition 3b because a preference for less extreme emotions such as happiness does not generate much need for emotion regulation. Since the need to regulate their emotions is low for such users, they stay consistent with their preferences and prefer happy ads. We see that the coefficient for HVP-SH is greater than the coefficient for HVP-HS, as the sad placement video does create a small need for the user to regulate their emotions, although we do not expect the two coefficients to be statistically significantly different from each other. Therefore, with a lower need for emotion regulation, users with a preference for happy content show a consistent preference for happy ad content.


Figure 10. Heterogeneous Treatment Effects - B

Notes: Heterogeneity in the incongruence effects across video and advertisement preferences aggregated across

geographies (cities). Confidence intervals are built using robust standard errors.

Heterogeneity Across Contextual Variables

We now explore differences in the impact of emotion incongruence across contextual factors such as the day of the week and the device used. Based on attention as the theoretical mechanism, we expect the preference for incongruent ad-video pairs to be stronger when prior attention is already high, because the increment in attention from the incongruent ad-video pair then adds to an already high level of attention. We formulate the following proposition:

• Proposition 4: The treatment effect (greater engagement with the ad when the ad-video pair has incongruent emotional content) is higher when contextual factors provide users with extra attention and novelty beyond that provided by the treatment itself (serving an ad with a different emotion than the content video). For example, a viewer


watching the ad-video combination on a desktop in the first half of the week (from Wednesday to Sunday) is likely to show a greater effect of the incongruent ad-video pair.

We test this prediction using a causal forest (Wager and Athey, 2018) to assess how different sources of heterogeneity affect the main test statistic: the difference in the fraction of viewers who reach 25% of the ad between the congruence and incongruence cohorts. We look for heterogeneous treatment effects across the device used to watch the content and the day of the week. Users consuming content on a desktop during the first half of the week are expected to have less time on hand and hence are likely to consume the content more actively and pay additional attention to the video ad. The causal forest partitions the sample into clusters based on these covariates, maximizing the difference between the CATE (Conditional Average Treatment Effect) values of these clusters. The results are reported in Figures 11 and 12.

The maximal difference between CATE values obtained using this model was

∆(CATE) = 0.0028

Notes: We generate a dummy variable for each source of heterogeneity. The following dummy variables were created:

• desktop: equals 1 if the viewing device is a desktop and 0 if it is a mobile phone

• Monday, Wednesday, Thursday, Friday, Saturday, Sunday: set according to the day of the week on which the video-ad combination was viewed. If the day is Tuesday, all are set to 0

Figure 11. Heterogeneous Treatment Effects - C


Notes: The causal forest was trained with 1,000 honest estimators, with the lower bound for clus-

ter size set to 10,000 samples.

To assess the relative impact of the different sources of heterogeneity, we use a SHAP value plot.

Figure 12. SHAP values for Heterogeneous Treatment Effects

Notes: For every dummy variable, red data points denote the variable set to 1 and blue points denote the variable set to 0. Dummies with red data points on the positive side tend to increase viewership in the congruence cohort, reducing the CATE. Dummy variables with red points on the negative side reduce viewership in the congruence cohort, increasing the CATE. Thus, a viewer watching on a desktop in the first half of the week shows the largest CATE.

$Y_i$ is the binary indicator that user $i$ watched at least 25% of the advertisement; $x_i$ is a vector of covariates (a categorical variable with values for desktop and the days of the week); and $w_i$ is the binary indicator of whether the ad-video emotions were congruent (0) or incongruent (1). In the potential outcomes framework, $(Y_i(1), Y_i(0))$ represent, respectively, the behavioral outcome of user $i$ under the treatment and control conditions. We estimate the conditional average treatment effect function $\tau(x) = E[Y_i(1) - Y_i(0) \mid x_i = x]$. The CATE function was estimated using CausalModel from the Python package dowhy. We then obtain the SHAP values for the covariates and plot them. The scatter plot for each covariate shows the relative magnitude and direction of the shift it induces in the estimated treatment effect. The CausalModel also gives us the maximum difference between the CATE values obtained by partitioning the covariates.
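Because ad-video pairs were randomly assigned, a simple stand-in for $\tau(x)$ on a small set of discrete covariates is the within-cell difference in means between incongruent and congruent viewers. The sketch below is this stratified estimator, not the causal forest or the dowhy workflow described above; the function name `cell_cates` and the toy rows are hypothetical.

```python
from collections import defaultdict


def cell_cates(rows):
    """Difference-in-means CATE for each covariate cell.

    rows: iterable of (x, w, y), where x is a hashable covariate cell such as
    ("desktop", "Monday"), w = 1 for an incongruent pair and 0 otherwise, and
    y = 1 if the user watched at least 25% of the ad. Under random assignment,
    tau(x) = E[Y | w=1, x] - E[Y | w=0, x].
    """
    acc = defaultdict(lambda: [[0, 0], [0, 0]])  # acc[x][w] = [sum of y, count]
    for x, w, y in rows:
        acc[x][w][0] += y
        acc[x][w][1] += 1
    return {
        x: a[1][0] / a[1][1] - a[0][0] / a[0][1]
        for x, a in acc.items()
        if a[0][1] > 0 and a[1][1] > 0  # skip cells missing either arm
    }


rows = [
    (("desktop", "Monday"), 1, 1), (("desktop", "Monday"), 0, 0),
    (("desktop", "Monday"), 1, 1), (("desktop", "Monday"), 0, 1),
    (("mobile", "Saturday"), 1, 0), (("mobile", "Saturday"), 0, 0),
]
tau = cell_cates(rows)
print(tau, max(tau.values()) - min(tau.values()))
```

A forest-based estimator pools information across cells and chooses the partition from the data, which matters when cells are small or covariates are numerous.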


Heterogeneity of Impact

In this section, we characterize the user segment that is more likely to be affected by incongruent ad-video pairs. We first estimate the conditional average treatment effect (CATE) of incongruent versus congruent ad-video pairs. We define a user as relatively “strongly” affected by incongruity if their estimated CATE is greater in magnitude than the median estimated CATE in the data, and as relatively “weakly” affected otherwise. Next, we calculate the ATE for each subgroup. Lastly, we compare the averages of the covariates across the treatment and control groups (e.g., Athey and Wager, 2019; Athey et al., 2021; Chernozhukov et al., 2018). The CATE is estimated non-parametrically by fitting a causal forest (Athey and Wager, 2019). In Table 10, we report the CATE values for each of the covariates, which are consistent with the theoretical hypothesis.
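The strong/weak split can be sketched as follows, assuming individual CATE estimates are already available (for example, from a causal forest). Here “greater in magnitude than the median” is read as comparing each |CATE| with the median of |CATE|; the function name, dictionary keys and toy data are hypothetical.

```python
from statistics import median


def strong_weak_ate(users):
    """Median split on |estimated CATE|, then the ATE within each subgroup.

    users: list of dicts with 'cate_hat' (individual CATE estimate),
    'w' (1 = incongruent, 0 = congruent) and 'y' (0/1 outcome).
    """
    cut = median(abs(u["cate_hat"]) for u in users)
    groups = {"strong": [], "weak": []}
    for u in users:
        groups["strong" if abs(u["cate_hat"]) > cut else "weak"].append(u)

    def ate(rows):
        treated = [r["y"] for r in rows if r["w"] == 1]
        control = [r["y"] for r in rows if r["w"] == 0]
        return sum(treated) / len(treated) - sum(control) / len(control)

    return {name: ate(rows) for name, rows in groups.items()}


users = [
    {"cate_hat": 0.1, "w": 1, "y": 1}, {"cate_hat": 0.2, "w": 0, "y": 1},
    {"cate_hat": 0.3, "w": 1, "y": 1}, {"cate_hat": 0.4, "w": 0, "y": 0},
]
result = strong_weak_ate(users)
print(result)
```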

Table 10. Heterogeneous Treatment Effects

Weak group CATE Strong group CATE Difference

later half of week 0.00302** first half of week 0.00447*** 0.00145

(2.73) (3.92)

desktop 0.00255* mobile 0.00305** 0.00050

(2.41) (2.58)

Notes: The strong subgroup contains users whose estimated CATE is greater in magnitude than the median estimated CATE in the data. Robust standard errors in parentheses.

7 Robustness

7.1 Statistical Considerations

Bootstrapped Test Statistics

The main test statistic here is the difference in the fraction of viewers who reached 25% of the ad between the congruence and incongruence cohorts. Although our sample is large enough for standard results based on the Central Limit Theorem to apply, we seek to verify the statistical significance of the results with greater confidence. We therefore compute a simulated p-value that does not rely on the normal approximation, by simulating the sampling distribution of the test statistic under the null hypothesis that emotion incongruence has no effect. The procedure is as follows. First, we perform block sampling at


the user level (with replacement). Specifically, we sample two datasets from the congruence group, each with 20% of the group's sample size. We compute the difference in the test statistic between these two datasets and repeat this procedure 10,000 times to obtain 10,000 such differences. Figure 13 plots the empirical CDF of these differences, representing the distribution of the test statistic under the null. We denote this empirical CDF as $\hat{F}_d(d)$. The observed test statistic is 0.00391, i.e., 0.391 percentage points (represented by the vertical red lines in the plot). The probability of encountering a value more extreme than this under the null, $\hat{F}_d(-0.00391) + 1 - \hat{F}_d(0.00391)$, is 0.0002. This agrees with the p-values computed using the normal approximation. Overall, we conclude that there is a robust, statistically significant difference in ad completion rates between the treatment and control groups.
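The simulated null distribution can be sketched as below, assuming each row is one user (so block sampling reduces to row sampling) and that the test statistic is a difference in means of a 0/1 outcome. The function name and the simulated cohort are hypothetical; the 20% subsample share and the formula $\hat{F}_d(-t) + 1 - \hat{F}_d(t)$ follow the text.

```python
import bisect
import random


def simulated_pvalue(congruent_y, observed_diff, share=0.2, reps=10_000, seed=0):
    """Two-sided p-value from a null distribution built within one cohort.

    Each replication draws two subsamples (each `share` of the congruent
    cohort, with replacement) and records their difference in means; since
    both come from the same cohort, the differences reflect sampling noise
    only. Returns F(-t) + 1 - F(t), with F the empirical CDF and t = |observed|.
    """
    rng = random.Random(seed)
    k = max(1, int(share * len(congruent_y)))
    diffs = []
    for _ in range(reps):
        a = rng.choices(congruent_y, k=k)
        b = rng.choices(congruent_y, k=k)
        diffs.append(sum(a) / k - sum(b) / k)
    diffs.sort()
    t = abs(observed_diff)
    f_neg = bisect.bisect_right(diffs, -t) / reps  # F(-t) = P(D <= -t)
    f_pos = bisect.bisect_right(diffs, t) / reps   # F(t)  = P(D <= t)
    return f_neg + 1 - f_pos


# Hypothetical congruent-cohort outcomes (reached 25% of the ad or not).
data_rng = random.Random(2)
congruent = [1 if data_rng.random() < 0.8 else 0 for _ in range(5_000)]
print(simulated_pvalue(congruent, observed_diff=0.05, reps=1_000))
```

Fewer replications than the paper's 10,000 are used in the example only to keep it fast.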

Figure 13. Computing simulated p-values

Notes: The Figure plots the empirical CDF of these differences, representing the distribution of

the test statistic under the null.

Type-S, Type-M and Type-III Errors

P-values alone may be unreliable for testing our null hypothesis. This is where the Type-S and Type-M errors of Gelman and Carlin (2014) come into the picture. The Type-S error is the probability that an estimate has the incorrect sign, given that it is statistically significantly different from zero. The exaggeration ratio (expected Type-M error) is the expectation of the absolute value of the estimate divided by the effect size, given that the estimate is statistically significantly different from zero.

In our analysis, we use Aklin’s Stata implementation of the Gelman and Carlin (2014) error analysis method. To obtain the error rates, we need to estimate the effect size in the sample and calculate the standard error of the estimated effect size. We use Cohen’s d to estimate the effect size.

Cohen’s D and Standard Error

Cohen’s d measures the effect size as the standardized difference between the means of two samples. It is defined as:

$$d = \frac{\bar{x}_1 - \bar{x}_2}{s^*}, \qquad s^* = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}$$

$$SE \approx \sqrt{\frac{n_1 + n_2}{n_1 n_2} + \frac{d^2}{2(n_1 + n_2)}}$$

where $d$ is the estimated effect size, $\bar{x}_1$ and $\bar{x}_2$ are the means of the two samples, $n_1$ and $n_2$ are their sizes, $s_1$ and $s_2$ are their standard deviations, $s^*$ is the pooled standard deviation, and $SE$ is the standard error of the effect size estimate.

Process for Calculating Type-S and Type-M error rates

In our analysis, we first split the data by cohort. We drop cohorts with few observations, keeping only cohorts with a large and roughly equal number of observations, and we equalize the number of observations across cohorts via bootstrapping. We then take every possible pair of cohorts and record their Cohen’s d and standard error. Finally, we average the Cohen’s d values and the standard errors. Using the resulting effect size estimate and standard error, we calculate the Type-S and Type-M errors.
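The effect-size step can be sketched with Python's standard library: `cohens_d` implements the formulas for $d$, $s^*$ and $SE$ given earlier, and `average_pairwise` averages them over every pair of cohorts. The cohorts are hypothetical toy data, and the sign of each $d$ follows the order of the pair; the text does not say whether signed or absolute values are averaged.

```python
import math
from itertools import combinations
from statistics import mean, variance


def cohens_d(x1, x2):
    """Cohen's d with pooled standard deviation s* and its approximate SE."""
    n1, n2 = len(x1), len(x2)
    s_star = math.sqrt(((n1 - 1) * variance(x1) + (n2 - 1) * variance(x2))
                       / (n1 + n2 - 2))
    d = (mean(x1) - mean(x2)) / s_star
    se = math.sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    return d, se


def average_pairwise(cohorts):
    """Average Cohen's d and SE over every pair of cohorts, in the given order."""
    results = [cohens_d(a, b) for a, b in combinations(cohorts, 2)]
    return mean(d for d, _ in results), mean(se for _, se in results)


# Toy cohorts (hypothetical values).
cohorts = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
print(cohens_d(cohorts[0], cohorts[1]))
print(average_pairwise(cohorts))
```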

Results and their Interpretation

• Effect Size Estimate = Average value of Cohen’s D = 0.009

• Standard Error = 0.001


• α = 0.05 (if p< α then we reject the null hypothesis.)

• Number of Simulations that we perform while calculating the error rates = 10,000.

To measure the probability that the incongruence effect is overstated, we perform 10,000 replications. In each replication in which the incongruence effect is estimated to be statistically significantly different from zero, the absolute value of the estimated incongruence effect is divided by A = 0.398 (the effect size) to obtain a ratio. The distribution of these ratios across the 10,000 replications is plotted in Figure 14. The mean ratio is 1.000014, a low value according to Gelman and Carlin (2014).
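The Type-S and Type-M calculation can be sketched in the style of the `retrodesign` function published by Gelman and Carlin (2014), rewritten here with Python's standard library rather than the Stata implementation used in the text. Plugging in the effect-size estimate (0.009) and standard error (0.001) reported above is our illustrative choice; the function name and defaults are our own.

```python
import random
from statistics import NormalDist


def retrodesign(A, s, alpha=0.05, n_sims=10_000, seed=0):
    """Power, Type-S error rate and exaggeration ratio for a true effect A > 0.

    A: hypothesised true effect size; s: standard error of its estimate.
    """
    nd = NormalDist()
    z = nd.inv_cdf(1 - alpha / 2)
    p_hi = 1 - nd.cdf(z - A / s)   # significant with the correct sign
    p_lo = nd.cdf(-z - A / s)      # significant with the wrong sign
    power = p_hi + p_lo
    type_s = p_lo / power
    rng = random.Random(seed)
    draws = [A + s * rng.gauss(0, 1) for _ in range(n_sims)]
    significant = [abs(e) for e in draws if abs(e) > s * z]
    exaggeration = sum(significant) / len(significant) / A  # expected Type-M error
    return power, type_s, exaggeration


power, type_s, exaggeration = retrodesign(0.009, 0.001)
print(f"power={power:.4f}, type S={type_s:.2e}, exaggeration={exaggeration:.4f}")
```

When the effect is many standard errors from zero, power is close to 1 and the exaggeration ratio is close to 1; an effect of one standard error gives a ratio well above 1.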

Figure 14. Assessment of the Overstatement of Treatment Effect

Notes: The distribution of exaggeration ratios across 10,000 replications.

7.2 Are the Effects Driven by Advertisements themselves and not by the incongruity

between the emotions of the ads and content video?

Table 11 examines an alternative explanation for the difference in outcomes between the incongruent and congruent groups: that it stems from stronger emotions in the ad itself rather than from whether the ad’s emotion is aligned with the emotion of the placement video.


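For instance, the persuasiveness of emotions may differ with their valence (Lau-Gesk and Meyers-Levy, 2009), with sad emotions being more persuasive (Kramer et al., 2014). The evidence suggests that this is not the main phenomenon driving the results: the coefficient for sad ads is statistically significant only for the first quartile, where it is negative, and is small and insignificant for the other outcome measures.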

Table 11. Understanding the Persuasiveness of Ad Emotions

Dependent variable:

First Q Mid Third Q Complete

(1) (2) (3) (4)

Sad -0.00374∗∗ -0.00051 -0.00058 -0.00027

(0.00178) (0.00178) (0.00178) (0.00177)

Notes: The table reports regressions of each outcome measure on ad emotion. Ads are categorised as happy or sad based on their emotion scores. Happy ads are the reference category, so the coefficients for sad ads are reported relative to happy ads. ∗ p<0.1; ∗∗ p<0.05; ∗∗∗ p<0.01
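With happy ads as the reference category and a single sad-ad indicator, each column of Table 11 amounts to a difference in mean outcomes between sad and happy ads. The minimal Python sketch below illustrates that equivalence. It assumes each outcome is a 0/1 indicator per ad impression and uses conventional (homoskedastic) OLS standard errors, which may differ from the standard errors used in the paper; the function name and the data are hypothetical.

```python
from statistics import mean


def sad_vs_happy_regression(y, is_sad):
    """OLS of an outcome on an intercept and a sad-ad indicator.

    With one 0/1 regressor, OLS reduces to a comparison of group means:
    the intercept is the mean outcome for happy ads (the reference
    category) and the slope is mean(sad) - mean(happy).
    Returns (intercept, slope, se_slope) with the homoskedastic OLS SE.
    """
    happy_y = [yi for yi, flag in zip(y, is_sad) if not flag]
    sad_y = [yi for yi, flag in zip(y, is_sad) if flag]
    intercept = mean(happy_y)
    sad_mean = mean(sad_y)
    slope = sad_mean - intercept
    # Residuals are deviations from each group's own mean; n - 2 degrees of freedom
    rss = sum((yi - intercept) ** 2 for yi in happy_y) + sum((yi - sad_mean) ** 2 for yi in sad_y)
    sigma2 = rss / (len(y) - 2)
    # Var(slope) = sigma^2 / sum((x - x_bar)^2) = sigma^2 * (1/n_happy + 1/n_sad)
    se_slope = (sigma2 * (1 / len(happy_y) + 1 / len(sad_y))) ** 0.5
    return intercept, slope, se_slope


if __name__ == "__main__":
    # Hypothetical impressions: 1 = reached the viewing milestone, 0 = did not
    outcome = [1, 1, 0, 1, 1, 0, 0, 1]
    sad_flag = [0, 0, 0, 0, 1, 1, 1, 1]
    print(sad_vs_happy_regression(outcome, sad_flag))
```

In this toy example the slope is simply 0.50 - 0.75 = -0.25, the gap between the sad-ad and happy-ad means.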

7.3 Are Incongruence Effects Simply an Artefact of Users Trying to Regulate Their Emotions?

Prior research suggests that consuming emotions of extreme valence leads users to seek to alleviate, repair, or manage that emotion in the short term, a process known as emotion regulation (Kemp and Kopp, 2011). If emotion regulation were driving our results, users who are exposed to extreme emotions through placement videos for longer periods of time should be more likely to engage with an ad whose emotion has the opposite valence, so the advantage of incongruent pairs should grow with the length of the placement video. This is not what we see in the data. In fact, incongruent ad-video pairs outperform congruent pairs by more for shorter placement videos than for longer ones. Table 12 reports the results. Further, Table 25 reports the results for the short length videos, Table 26 for the medium length videos and Table 27 for the long length videos.


Table 12. Heterogeneity across the Length of Placement Videos

Dependent variable:

First Q Mid Third Q Complete

(1) (2) (3) (4)

group = Incongruent 0.00423∗∗∗ 0.00740∗∗∗ 0.00796∗∗∗ 0.00887∗∗∗

(0.00118) (0.00127) (0.00131) (0.00132)

length = Q2 0.02439∗∗∗ 0.03793∗∗∗ 0.04409∗∗∗ 0.04845∗∗∗

(0.00121) (0.00129) (0.00133) (0.00135)

length = Q3 0.02461∗∗∗ 0.03965∗∗∗ 0.04666∗∗∗ 0.05105∗∗∗

(0.00098) (0.00108) (0.00112) (0.00115)

group = Incongruent, length = Q2 -0.00398∗∗ -0.00895∗∗∗ -0.00952∗∗∗ -0.01092∗∗∗

(0.00201) (0.00210) (0.00215) (0.00218)

group = Incongruent, length = Q3 -0.00256∗ -0.00703∗∗∗ -0.00595∗∗∗ -0.00689∗∗∗

(0.00154) (0.00166) (0.00172) (0.00176)

Constant 0.80270∗∗∗ 0.70165∗∗∗ 0.63003∗∗∗ 0.57480∗∗∗

(0.00267) (0.00268) (0.00267) (0.00265)


Notes: The table reports regressions in which an indicator for treatment (Incongruent) versus control (Congruent) is interacted with a categorical variable for the length of the placement video. The placement videos are divided into three length groups: Q1 denotes placement videos shorter than 60s, Q2 placement videos of exactly 60s and Q3 placement videos longer than 60s. The numbers of videos in Q1, Q2 and Q3 are 8, 9 and 10, respectively. The congruent group with length ∈ Q1 is the reference group, and all coefficients are reported relative to it. ∗ p<0.1; ∗∗ p<0.05; ∗∗∗ p<0.01
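Because the incongruence indicator is interacted with the length groups, the incongruence effect for 60-second (Q2) and longer (Q3) placement videos is the main Incongruent coefficient plus the corresponding interaction term. The Python sketch below does this arithmetic on the point estimates transcribed from Table 12. It does not compute standard errors for the sums, since the covariances between coefficients are not reported, and the variable and function names are illustrative.

```python
# Point estimates transcribed from Table 12 (coefficients only).
OUTCOMES = ["First Q", "Mid", "Third Q", "Complete"]
INCONGRUENT = [0.00423, 0.00740, 0.00796, 0.00887]  # incongruence effect in Q1
INTERACTION = {
    "Q2": [-0.00398, -0.00895, -0.00952, -0.01092],
    "Q3": [-0.00256, -0.00703, -0.00595, -0.00689],
}


def incongruence_effect_by_length():
    """Return the implied incongruent-minus-congruent gap for each length group.

    In y = b0 + b1*Incongruent + b2*Q2 + b3*Q3
             + b4*Incongruent*Q2 + b5*Incongruent*Q3,
    the incongruence effect is b1 in Q1, b1 + b4 in Q2 and b1 + b5 in Q3.
    """
    effects = {"Q1": list(INCONGRUENT)}
    for group, interaction in INTERACTION.items():
        # Round to the table's five decimal places to remove float noise
        effects[group] = [round(b1 + b_int, 5) for b1, b_int in zip(INCONGRUENT, interaction)]
    return effects


if __name__ == "__main__":
    for group, values in incongruence_effect_by_length().items():
        print(group, dict(zip(OUTCOMES, values)))
```

On the completion outcome, for example, the implied incongruence effect is 0.00887 for videos under 60s, 0.00887 - 0.01092 = -0.00205 for 60-second videos, and 0.00887 - 0.00689 = 0.00198 for videos over 60s: the advantage of incongruence is concentrated among the shorter placement videos, contrary to what emotion regulation would predict.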


8 Conclusions

In this study, we present the first field-based direct evidence of the effect of ad-video emotional incongruence on user engagement with the ad. Our empirical analysis is based on a field experiment that we designed and implemented on a video and advertising delivery platform. The empirical results provide evidence of substantively greater engagement in the incongruent condition. We find evidence consistent with greater attention to the ad when the ad is emotionally incongruent, or mismatched, with the content video. We do not find evidence that emotion regulation is the mechanism driving the results.

The limitations of this study include our inability to study behavioral outcomes downstream of ad engagement, such as visits to the advertiser’s website or purchases of the advertised product, due to the nature of our partner platform, and our inability to track consumers after they have finished viewing the ad. A second limitation is the nature of the platform, where the video and ad might be playing without the consumer necessarily engaging with them actively. This leads to an overestimation of engagement levels, though not of the treatment effect itself, because passive viewing is not expected to differ systematically between the randomly assigned congruent and incongruent conditions.


References

Andrade, E. B. (2005), ‘Behavioral consequences of affect: Combining evaluative and regulatory mechanisms’, Journal of Consumer Research 32(3), 355–362.

Athey, S., Bergstrom, K. A., Hadad, V., Jamison, J. C., Ozler, B., Parisotto, L., Sama, J. D. et al. (2021), Shared decision-making: Can improved counseling increase willingness to pay for modern contraceptives?, Technical report, The World Bank.

Athey, S. and Wager, S. (2019), ‘Estimating treatment effects with causal forests: An application’, Observational Studies 5(2), 37–51.

Belanche, D., Flavián, C. and Pérez-Rueda, A. (2017), ‘Understanding interactive online advertising: Congruence and product involvement in highly and lowly arousing, skippable video ads’, Journal of Interactive Marketing 37, 75–88.

Biswas, R., Riffe, D. and Zillmann, D. (1994), ‘Mood influence on the appeal of bad news’, Journalism Quarterly 71(3), 689–696.

Bleier, A. and Eisenbeiss, M. (2015), ‘Personalized online advertising effectiveness: The interplay of what, when, and where’, Marketing Science 34(5), 669–688.

Bounie, D., Valérie, M. and Quinn, M. (2017), ‘Do you see what I see? Ad viewability and the economics of online advertising’, Ad Viewability and the Economics of Online Advertising (March 1, 2017).

Chernozhukov, V., Demirer, M., Duflo, E. and Fernandez-Val, I. (2018), Generic machine learning inference on heterogeneous treatment effects in randomized experiments, with an application to immunization in India, Technical report, National Bureau of Economic Research.

Cornelis, E., Adams, L. and Cauberghe, V. (2012), ‘The effectiveness of regulatory (in)congruent ads: The moderating role of an ad’s rational versus emotional tone’, International Journal of Advertising 31(2), 397–420.

Coulter, K. S. (1998), ‘The effects of affective responses to media context on advertising evaluations’, Journal of Advertising 27(4), 41–51.

Dahlén, M., Rosengren, S., Törn, F. and Öhman, N. (2008), ‘Could placing ads wrong be right? Advertising effects of thematic incongruence’, Journal of Advertising 37(3), 57–67.


Fong, H. (2021), ‘A theory-based interpretable, deep learning architecture for music emotion’, Working Paper, Yale School of Management.

Furnham, A., Bergland, J. and Gunter, B. (2002), ‘Memory for television advertisements as a function of advertisement–programme congruity’, Applied Cognitive Psychology: The Official Journal of the Society for Applied Research in Memory and Cognition 16(5), 525–545.

Gelman, A. and Carlin, J. (2014), ‘Beyond power calculations: Assessing Type S (sign) and Type M (magnitude) errors’, Perspectives on Psychological Science 9(6), 641–651.

Han, S. W. and Marois, R. (2014), ‘Functional fractionation of the stimulus-driven attention network’, Journal of Neuroscience 34(20), 6958–6969.

Janssens, W., De Pelsmacker, P. and Geuens, M. (2012), ‘Online advertising and congruency effects: It depends on how you look at it’, International Journal of Advertising 31(3), 579–604.

Joachimsthaler, E. A. and Lastovicka, J. L. (1984), ‘Optimal stimulation level–exploratory behavior models’, Journal of Consumer Research 11(3), 830–835.

Kahneman, D. (1973), Attention and effort, Prentice-Hall, Englewood Cliffs, NJ.

Kamins, M. A., Marks, L. J. and Skinner, D. (1991), ‘Television commercial evaluation in the context of program induced mood: Congruency versus consistency effects’, Journal of Advertising 20(2), 1–14.

Karmarkar, U. R. and Tormala, Z. L. (2010), ‘Believe me, I have no idea what I’m talking about: The effects of source certainty on consumer involvement and persuasion’, Journal of Consumer Research 36(6), 1033–1049.

Kemp, E. and Kopp, S. W. (2011), ‘Emotion regulation consumption: When feeling better is the aim’, Journal of Consumer Behaviour 10(1), 1–7.

Kirmani, A. and Yi, Y. (1991), ‘The effects of advertising context on consumer responses’, ACR North American Advances.

Kononova, A. and Yuan, S. (2015), ‘Double-dipping effect? How combining YouTube environmental PSAs with thematically congruent advertisements in different formats affects memory and attitudes’, Journal of Interactive Advertising 15(1), 2–15.

Kramer, A. D., Guillory, J. E. and Hancock, J. T. (2014), ‘Experimental evidence of massive-scale emotional contagion through social networks’, Proceedings of the National Academy of Sciences 111(24), 8788–8790.


Krippendorff, K. (2018), Content analysis: An introduction to its methodology, Sage Publications.

Kwon, E. S., King, K. W., Nyilasy, G. and Reid, L. N. (2019), ‘Impact of media context on advertising memory: A meta-analysis of advertising effectiveness’, Journal of Advertising Research 59(1), 99–128.

Lambrecht, A. and Tucker, C. (2013), ‘When does retargeting work? Information specificity in online advertising’, Journal of Marketing Research 50(5), 561–576.

Lau-Gesk, L. and Meyers-Levy, J. (2009), ‘Emotional persuasion: When the valence versus the resource demands of emotions influence consumers’ attitudes’, Journal of Consumer Research 36(4), 585–599.

Lee, C. J., Andrade, E. B. and Palmer, S. E. (2013), ‘Interpersonal relationships and preferences for mood-congruency in aesthetic experiences’, Journal of Consumer Research 40(2), 382–391.

McGranaghan, M., Liaukonyte, J. and Wilbur, K. C. (2022), ‘How viewer tuning, presence, and attention respond to ad content and predict brand search lift’, Marketing Science.

Noseworthy, T. J., Di Muro, F. and Murray, K. B. (2014), ‘The role of arousal in congruity-based product evaluation’, Journal of Consumer Research 41(4), 1108–1126.

Pieters, R. and Warlop, L. (1999), ‘Visual attention during brand choice: The impact of time pressure and task motivation’, International Journal of Research in Marketing 16(1), 1–16.

Puccinelli, N. M., Wilcox, K. and Grewal, D. (2015), ‘Consumers’ response to commercials: When the energy level in the commercial conflicts with the media context’, Journal of Marketing 79(2), 1–18.

Rocklage, M. D. and Fazio, R. H. (2020), ‘The enhancing versus backfiring effects of positive emotion in consumer reviews’, Journal of Marketing Research 57(2), 332–352.

Sahni, N. S., Wheeler, S. C. and Chintagunta, P. (2018), ‘Personalization in email marketing: The role of noninformative advertising content’, Marketing Science 37(2), 236–258.

Tamir, M. (2009), ‘What do people want to feel and why? Pleasure and utility in emotion regulation’, Current Directions in Psychological Science 18(2), 101–105.

Tamir, M. (2016), ‘Why do people regulate their emotions? A taxonomy of motives in emotion regulation’, Personality and Social Psychology Review 20(3), 199–222.
