Entropy

Uploaded by Nonika

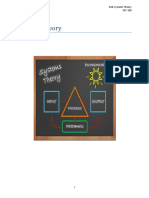

Entropy is a fundamental concept in physics and information theory. Loosely speaking, it is a measure of the disorder or randomness of a system. It plays a crucial role in understanding phenomena ranging from the behavior of gases to the flow of heat and the nature of information.

In thermodynamics, entropy is closely tied to the second law, which states that the entropy of an isolated system never decreases: it stays constant in idealized reversible processes and increases in real, spontaneous ones. In simple terms, spontaneous processes in nature tend to increase disorder. For example, when an ice cube melts, its water molecules are no longer locked in a crystal lattice and can take up far more arrangements, so the entropy of the water increases.
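Because melting at the melting point happens at a constant temperature, the entropy gained by the ice can be estimated with the classical relation ΔS = Q / T. The sketch below is a minimal illustration under stated assumptions: a latent heat of fusion of about 334 J/g and a melting point of 273.15 K (approximate textbook values, not taken from this document). The function name `melting_entropy_change` and the 10 g cube are hypothetical.

```python
# Entropy gained when ice melts at its melting point: dS = Q_rev / T.
# Assumed constants (approximate textbook values, not from the text above):
LATENT_HEAT_FUSION_J_PER_G = 334.0  # heat needed to melt 1 g of ice
MELTING_POINT_K = 273.15            # 0 degrees Celsius at 1 atm


def melting_entropy_change(mass_g: float,
                           latent_heat: float = LATENT_HEAT_FUSION_J_PER_G,
                           temperature_k: float = MELTING_POINT_K) -> float:
    """Return the entropy increase (J/K) when mass_g grams of ice melt.

    Melting happens at a constant temperature, so the absorbed heat
    Q = m * L_f is divided by a single T instead of being integrated.
    """
    if mass_g < 0 or latent_heat < 0 or temperature_k <= 0:
        raise ValueError("mass and latent heat must be non-negative, temperature positive")
    heat_absorbed = mass_g * latent_heat  # J
    return heat_absorbed / temperature_k  # J/K


if __name__ == "__main__":
    # Hypothetical 10 g ice cube
    print(f"Entropy gained: {melting_entropy_change(10.0):.2f} J/K")  # prints: Entropy gained: 12.23 J/K
```

This covers only the melting step itself; warming the ice up to 0 °C, or the meltwater above it, would add further terms.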

Entropy is also connected to the dispersal of energy. In an isolated system, energy tends to spread out, and this spreading increases entropy. A familiar illustration is a hot cup of coffee placed in a colder room. Heat flows from the coffee to its surroundings; the coffee's own entropy falls as it cools, but the room gains more entropy than the coffee loses, so the total entropy of coffee plus room increases as the energy becomes more evenly distributed.
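The coffee example can be made quantitative with a simplified model. The sketch below assumes the coffee behaves like water (specific heat about 4.18 J/(g·K), constant over the range) and that the room is large enough for its temperature to stay fixed. The coffee's entropy change comes from integrating dQ/T as it cools, while the room absorbs the same heat at a single temperature. The function name and the example values (250 g, 80 °C, 20 °C) are hypothetical.

```python
import math


def coffee_cooling_entropy(mass_g: float, specific_heat: float,
                           t_initial_k: float, t_room_k: float):
    """Entropy changes (J/K) when hot coffee cools to room temperature.

    Returns (dS_coffee, dS_room, dS_total). Simplifying assumptions:
    the room is a reservoir whose temperature stays at t_room_k, and
    the coffee's specific heat is constant over the temperature range.
    """
    if mass_g < 0 or specific_heat <= 0 or t_room_k <= 0 or t_initial_k < t_room_k:
        raise ValueError("need mass >= 0, c > 0, T_room > 0 and coffee no colder than the room")
    heat_capacity = mass_g * specific_heat  # J/K
    # Integrating dQ/T = C dT / T from t_initial_k down to t_room_k gives C ln(Tf / Ti) <= 0.
    ds_coffee = heat_capacity * math.log(t_room_k / t_initial_k)
    # All heat released by the coffee enters the room at the fixed temperature t_room_k.
    heat_released = heat_capacity * (t_initial_k - t_room_k)  # J
    ds_room = heat_released / t_room_k
    return ds_coffee, ds_room, ds_coffee + ds_room


if __name__ == "__main__":
    # Hypothetical example: 250 g of coffee at 80 °C cooling in a 20 °C room.
    ds_coffee, ds_room, ds_total = coffee_cooling_entropy(250.0, 4.18, 353.15, 293.15)
    print(f"coffee: {ds_coffee:+.1f} J/K, room: {ds_room:+.1f} J/K, total: {ds_total:+.1f} J/K")
```

The coffee's term is negative and the room's is positive, but their sum is positive whenever the coffee starts warmer than the room. If the coffee starts at room temperature, no heat flows and every term is zero.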

In information theory, entropy measures the average amount of information, or uncertainty, in a random variable or data source. It quantifies how surprising or unpredictable the data is on average. For example, a fair coin, with equal chances of heads and tails, has higher entropy than a biased coin that lands on one side most of the time; a coin that always lands on the same side has zero entropy, because its outcome is never a surprise.
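Shannon's formula makes the coin comparison concrete: for outcome probabilities p_i, the entropy in bits is H = Σ p_i log2(1/p_i). The sketch below is a minimal implementation of that formula; the function name and the 90/10 bias chosen for the example coin are illustrative assumptions, not values from the text.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in bits: H = sum(p * log2(1 / p)).

    Outcomes with p == 0 are skipped, since p * log2(1/p) -> 0 as p -> 0.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


if __name__ == "__main__":
    coins = {
        "fair coin": [0.5, 0.5],
        "biased coin (90/10)": [0.9, 0.1],
        "always heads": [1.0, 0.0],
    }
    for name, dist in coins.items():
        print(f"{name}: {shannon_entropy(dist):.3f} bits")
    # fair coin: 1.000 bits
    # biased coin (90/10): 0.469 bits
    # always heads: 0.000 bits
```

Using log base 2 gives the result in bits; the natural logarithm would give nats instead.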

Entropy is a concept that extends beyond the realms of physics and information theory. It has also found applications in fields such as biology, economics, and even philosophy. In these contexts, entropy is often used to describe the tendency of systems to evolve toward states of greater disorder, randomness, or equilibrium.

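It is important to note that, although entropy is often associated with disorder or randomness, it does not necessarily mean chaos or a lack of structure. Ordered structures can arise locally, as when snowflakes form from water vapor or living cells build complex molecules, provided the total entropy of the system and its surroundings still increases. In this sense, order can arise from disorder, and complexity can emerge from randomness.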

The concept of entropy provides a powerful framework for understanding the behavior and evolution of diverse systems in our universe. Whether it is the behavior of physical systems, the transmission of information, or the organization of complex systems, entropy plays a fundamental role in shaping our understanding of the natural world.
