Journal of Information, Information Technology, and Organizations, Volume 3, 2008

Accepting Editor: Bob Travica

Applying Importance-Performance Analysis to Information Systems: An Exploratory Case Study

Sulaiman Ainin and Nur Haryati Hisham
Faculty of Business and Accountancy, University of Malaya, Kuala Lumpur, Malaysia
ainins@um.edu.my; nurharyati@mesiniaga.com.my

Abstract

This study used an end-user satisfaction survey to investigate the perceived importance of information systems attributes and whether the performance of information systems met the users' expectations in a company in Malaysia. It was discovered that the end-users were moderately satisfied with the company's IS performance and that there were gaps between importance and performance on all the systems-related attributes studied. The largest gaps pertained to the attributes Understanding of Systems, Documentation, and High Availability of Systems. The contribution of the study is in advancing Importance-Performance Analysis applicable to IS research.

Keywords: information systems performance, information systems importance, end-user satisfaction

Introduction

Information technology (IT) and information systems (IS) continue to be widely debated in today's corporate environments. As IT spending grows and IT becomes as commoditized and essential as electricity and running water, many organizations continue to wonder if their IT spending is justified (Farbey, Land, & Targett, 1992) and whether their IS functions are effective (Delone & McLean, 1992). IT and IS have evolved drastically from the heyday of mainframe computing to an environment that has reached out to the end-users. In the past, end-users interacted with systems via the system analyst or programmer, who translated the user requirements into system input in order to generate the output required for the end-users' analysis and decision-making process. Now, however, end-users are more directly involved with the systems, as they typically navigate them through an interactive user interface, thus assuming more responsibility for their own applications. Therefore, the ability to capture and measure end-user satisfaction serves as a tangible surrogate measure in determining the performance of the IS function and services, and of IS themselves (Ives, Olson, & Baroudi, 1983). Besides evaluating IS performance, it is also important to evaluate whether IS in an organization meet users' expectations. This paper aims to demonstrate the use of Importance-Performance Analysis (IPA) in evaluating IS.

Material published as part of this publication, either on-line or in print, is copyrighted by the Informing Science Institute. Permission to make digital or paper copy of part or all of these works for personal or classroom use is granted without fee provided that the copies are not made or distributed for profit or commercial advantage AND that copies 1) bear this notice in full and 2) give the full citation on the first page. It is permissible to abstract these works so long as credit is given. To copy in all other cases or to republish or to post on a server or to redistribute to lists requires specific permission and payment of a fee. Contact Publisher@InformingScience.org to request redistribution permission.

Research Framework

Importance-Performance Analysis

The Importance-Performance Analysis (IPA) framework was introduced by Martilla and James (1977) in marketing research in order to assist in understanding customer satisfaction as a function of both expectations concerning the significant attributes and judgments about their performance. Analyzed individually, importance and performance data may not be as meaningful as when both data sets are studied simultaneously (Graf, Hemmasi, & Nielsen, 1992). Hence, importance and performance data are plotted on a two-dimensional grid with importance on the y-axis and performance on the x-axis. The data are then mapped into four quadrants (Bacon, 2003; Martilla & James, 1977) as depicted in Figure 1.

In quadrant 1, importance is high but performance is low. This quadrant is labeled Concentrate Here, indicating that the existing systems require urgent corrective action and thus should be given top priority. Items in quadrant 2 indicate high importance and high performance, which indicates that existing systems have strengths and should continue to be maintained. This category is labeled Keep up good work. In contrast, the category of low importance and low performance items makes up the third quadrant, labeled Low Priority. While systems with such a rating of the attributes do not pose a threat, they may be candidates for discontinuation. Finally, quadrant 4 represents low importance and high performance, which suggests insignificant strengths and a possibility that the resources invested may better be diverted elsewhere.

The four-quadrant matrix helps organizations to identify the areas for improvement and actions for minimizing the gap between importance and performance. Extensions of the importance-performance mapping include adding an upward-sloping 45-degree line to highlight regions of differing priorities. This is also known as the iso-rating or iso-priority line, where importance equals performance. With importance on the y-axis, any attribute above the line (importance exceeding performance) must be given priority, whereas an attribute below the line indicates otherwise (Bacon, 2003).

Figure 1. Importance-Performance Map (Source: adapted from Bacon, 2003; Martilla & James, 1977). [Figure not reproduced: importance on the y-axis and performance on the x-axis, each running from 1 (low) to 5 (high), with four quadrants and the diagonal iso-rating line.]
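The quadrant assignment and the iso-rating comparison described above can be sketched in a few lines of Python. This is only an illustrative sketch, not part of the study: the function names are hypothetical, the default cut-off of 2.5 follows the value the Findings section uses to separate low from high scores, and the label for quadrant 4 ("Possible Overkill") is the name commonly used in the IPA literature rather than one given in the text above.

```python
def classify_quadrant(importance, performance, midpoint=2.5):
    """Return the IPA quadrant label for one attribute.

    `midpoint` is the assumed cut-off between 'low' and 'high' on the
    1-5 scale; other studies place the crosshairs elsewhere.
    """
    high_importance = importance > midpoint
    high_performance = performance > midpoint
    if high_importance and not high_performance:
        return "Concentrate Here"        # quadrant 1
    if high_importance and high_performance:
        return "Keep up good work"       # quadrant 2
    if not high_importance and not high_performance:
        return "Low Priority"            # quadrant 3
    # Quadrant 4: label taken from common IPA usage, not from the text above.
    return "Possible Overkill"


def iso_rating_side(importance, performance):
    """Report where a point lies relative to the 45-degree iso-rating line.

    Importance is on the y-axis, so a point is 'above' the line when
    importance exceeds performance.
    """
    if importance > performance:
        return "above"
    if importance < performance:
        return "below"
    return "on"


# Example with a made-up attribute rated 4.4 for importance and 3.3 for performance.
print(classify_quadrant(4.4, 3.3), iso_rating_side(4.4, 3.3))
```

Keeping the cut-off as a parameter makes it easy to see how sensitive the quadrant assignment is to where the crosshairs are drawn, whereas the iso-rating comparison does not depend on any cut-off.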

IPA has been used in different research and practical domains (Eskildsen & Kristensen, 2006). Slack (1994) used it to study operations strategy while Sampson and Showalter (1999) evaluated customers. Ford, Joseph, and Joseph (1999) used IPA in the area of marketing strategy. IPA is also used in various industries, such as health (Dolinsky & Caputo, 1991; Skok, Kophamel, & Richardson, 2001), banking (Joseph, Allbrigth, Stone, Sekhon, & Tinson, 2005; Yeo, 2003), hotel (Weber, 2000), and tourism (Duke & Mont, 1996).

IPA has also been applied in IS research. Magal and Levenburg (2005) employed IPA to study the motivations behind e-business strategies among small businesses, while Shaw, Delone, and Niederman (2002) used it to analyze end-user support. Skok and colleagues (2001) mapped out the IPA using the Delone and McLean IS success model. Delone and McLean (1992) used the construct of end-user satisfaction as a proxy measure of systems performance. Firstly, End-User Satisfaction has a high face validity since it is hard to deny that an information system is successful when it is favored by the users. Secondly, the development of the Bailey and Pearson instrument (1983) and its derivatives provided a reliable tool for measuring user satisfaction, which also facilitates comparison among studies. Thirdly, the measurement of end-user satisfaction is relatively more popular since other measures have performed poorly.

Methodology

Based on the reasons mentioned above, this study adapted the measurement tool developed by Bailey and Pearson (1983) to evaluate end-user satisfaction. The measures used are: System Quality, Information Quality, Service Quality, and System Use. System Quality typically focuses on the processing system itself, measuring its performance in terms of productivity, throughput, and resource utilization. On the other hand, Information Quality focuses on measures involving the output from an IS, typically the reports produced. If the users perceive the information generated to be inaccurate, outdated, or irrelevant, their dissatisfaction will eventually force them to seek alternatives and avoid using the information system altogether. System Use reflects the level of recipient consumption of the information system output. It is hard to deny the success of a system that is used heavily, which explains its popularity as the IS measure of choice. Although certain researchers strived to differentiate between voluntary and mandatory use, Delone and McLean (1992) noted that no system use is totally mandatory. If the system is proven to perform poorly in all aspects, management can always opt to discontinue the system and seek other alternatives. The inclusion of the Service Quality dimension recognizes the service element in the information systems function. Pitt, Watson, and Kavan (1995) proposed that Service Quality is an antecedent of System Use and User Satisfaction. Because IS now has an important service component, IS researchers may be interested in identifying the exact service components that can help boost User Satisfaction.

New and modified measurement items were added to suit the current issues that are pertinent to IS development and performance evaluation, specific to the Malaysian IT firm. The newly added factors include High Availability of Systems, which directly affects the employees' ability to be productive. Frequent downtimes mean idle time since employees are not able to access the required data or email for their communication needs. Senior management has raised concerns on this issue since it affects business continuity and can potentially compromise the company's competitive position. Thus, the IS department under study is exploring measures to provide continuous access to the company's IS, including clustering technology that provides real-time replication onto a backup system.

The second new variable is Implementation of Latest Technology, which has a direct bearing on the company's productivity. Being a leading IT solutions provider, the company needs to keep itself abreast of the recent technologies and solutions available in the market. This is aided by strategic partnerships with various partners who provide thought leadership, access to their latest developments, and skill transfer in order to equip the employees with the relevant expertise. Additionally, in order to formulate better solutions for its customers, the company needs to have first-hand experience in using the proposed technologies. To realize this strategy, the company has embarked on a restructuring exercise that sees the formalization of an R&D think-tank and a deployment unit to facilitate rapid roll-out of the latest technologies for internal use.

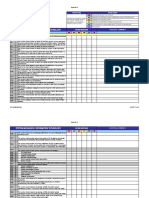

The third new factor included in this study is Ubiquitous Access to IT Applications to enable productivity anytime and anywhere. The primary aim is to provide constant connectivity for the employees who are often out of the office, enabling them to stay in touch with email and critical applications hosted within the organization's network.

A convenience sampling method was used for data gathering. The targeted respondents were the organization's end-users of various IS (email, Internet browsing, and a host of office automation systems developed in-house). In order to expedite the data collection process, the survey was converted into an online format and deposited in the organization's Lotus Notes database. An email broadcast was sent out to explain the research objectives, and a brief instruction on how to complete the survey was also included. A total of 680 users accessed the survey and 163 questionnaires were completed, which is equivalent to a response rate of 24%. All completed questionnaires were automatically deposited into a Lotus Notes database. The security settings on this database were modified to allow anonymous responses to ensure complete anonymity.

The questionnaire contained 20 attributes that were selected out of the 39 items proposed by Bailey and Pearson (1983). The rationale for this was to reduce the complexity of the survey questionnaire. Also, the survey questionnaire did not include any negative questions for verification purposes, again for the sake of simplification and to reduce the total time taken to provide a complete response. It is foreseeable that including other factors may provide a different insight or improve the internal reliability of the variables studied. However, performing a rigorous test to qualify the best set of variables would be time consuming and could reduce the time available for data collection.

The questionnaire comprised two sections, each containing the selected 20 attributes (see Table 1). The first section asked respondents to evaluate the degree of importance placed upon each attribute. The second section required an evaluation of the actual performance of the same attributes. The respondents were prompted to use a five-point Likert scale (1 = low, 5 = high).

Findings

It was found that the respondents were moderately satisfied with the IS performance, as indicated by the mean scores (see Table 1). The mean scores in Table 1 indicated that the respondents were the least satisfied with the Degree of Training (mean score of 2.95) that was provided to them.

In contrast, the respondents expressed the greatest satisfaction with the following attributes: Relationship with the Electronic Data Processing (EDP) Staff (mean score of 3.82), Response/Turnaround Time (mean score of 3.76), and Technical Competence of the EDP Staff (mean score of 3.65). A detailed discussion on user satisfaction is presented in Hisham's (2006) paper.

Table 1 indicates the respondents' perception that all attributes were below their expectations or level of importance (note the negative values for differences in mean scores). The degree of difference, however, varies. From the gap between means, it is easy to see that the IS department needs to work harder to achieve better results on Understanding of Systems, Documentation, High Availability of Systems, Ubiquitous Access, and Training. These five items have the highest gap scores, indicating the biggest discrepancy between importance and performance. On the other hand, the items with the lowest gap scores suggest that the current performance levels are manageable, even if they are still below end-users' expectations. These include Relationship with the EDP Staff, Relevancy, Time Required for New Development, Feeling of Control, and Feeling of Participation.

Table 1. IS Attributes, Means, and Gap Scores

| IS Attributes | Performance Mean (X) | Importance Mean (Y) | Means Difference (X-Y) |
|---|---|---|---|
| Understanding of Systems | 3.27 | 4.36 | -1.09 |
| Documentation | 3.06 | 4.14 | -1.08 |
| High Availability of Systems | 3.10 | 4.16 | -1.06 |
| Ubiquitous Access to Applications | 3.05 | 4.07 | -1.02 |
| Degree of Training | 2.95 | 3.95 | -1.00 |
| Security of Data | 3.53 | 4.49 | -0.96 |
| Integration of Systems | 3.09 | 3.99 | -0.90 |
| Top Management Involvement | 3.35 | 4.19 | -0.84 |
| Flexibility of Systems | 3.28 | 4.07 | -0.79 |
| Implementation of Latest Technology | 3.03 | 3.81 | -0.78 |
| Confidence in the Systems | 3.44 | 4.22 | -0.78 |
| Attitude of the EDP Staff | 3.55 | 4.31 | -0.76 |
| Job Effects | 3.51 | 4.24 | -0.73 |
| Response/Turnaround Time | 3.76 | 4.46 | -0.70 |
| Technical Competence of the EDP Staff | 3.65 | 4.23 | -0.58 |
| Feeling of Participation | 3.25 | 3.79 | -0.54 |
| Feeling of Control | 3.27 | 3.74 | -0.47 |
| Time Required for New Development | 3.36 | 3.80 | -0.44 |
| Relevancy | 3.54 | 3.97 | -0.43 |
| Relationship with the EDP Staff | 3.82 | 4.05 | -0.23 |

Mean scores for both importance and performance data were plotted as coordinates on the Importance-Performance Map as depicted in Figure 1. All the means were above 2.5, thus falling in the second quadrant of the IPA map (refer to Figure 1). To show the resulting positions on the map more clearly, only the plotted scores are shown (Figure 2). These indicate that both performance and importance of the systems are satisfactory. Therefore, the systems are qualified for further maintenance (cf. Bacon, 2003; Martilla & James, 1977).

As discussed above, performance and importance scores provide more meaning when they are studied together. It is not enough to know which attribute was rated as the most important, or which one fared the best or worst. By mapping these scores against the iso-rating line, one gets an indication of whether the focus and resources are being deployed adequately, insufficiently, or too lavishly. Figure 2 shows that all the scores were above the iso-rating line, thus indicating that importance exceeds performance. This implies that there are opportunities for improvement in this company.

Figure 2. Map of IS Attributes. [Figure not reproduced. Legend for IS attributes: 1. Understanding of Systems; 2. Documentation; 3. High Availability of Systems; 4. Ubiquitous Access to Applications; 5. Degree of Training; 6. Security of Data; 7. Integration of Systems; 8. Top Management Involvement; 9. Flexibility of Systems; 10. Implementation of Latest Technology; 11. Confidence in the Systems; 12. Attitude of the EDP Staff; 13. Job Effects; 14. Response/Turnaround Time; 15. Technical Competence of the EDP Staff; 16. Feeling of Participation; 17. Feeling of Control; 18. Time Required for New Development; 19. Relevancy; 20. Relationship with the EDP Staff.]

Discussion and Conclusion

Organizations today are compelled to analyze the actual value (performance) of their IS. On the practical level, the implication of this is that IS departments should measure the satisfaction level amongst their end-users as part of evaluating the performance of IS. The same goes for managers in other areas who are responsible for the return on IS investments. End-users' input can reveal insights as to which areas deserve special attention and more resources. Using tested tools such as Bailey and Pearson's (1983) instrument helps ensure a highly consistent, reliable, and valid outcome that, when deployed over time, can help measure the performance of the IS department to ensure continual alignment between its operational goals and the underlying business objectives.

As determined in this study, the key attributes of IS performance, which pertain to Service Quality (Relationship with the EDP Staff) and System Quality (Response/Turnaround Time), are critical in delivering end-user satisfaction (i.e., system performance). On the other hand, data security is deemed to be the most important IS attribute, which echoes today's concern about rampant security threats. These include virus and worm attacks that could lead to data loss, identity theft, hacking, and unauthorized access to data. As such, an IS department needs to be more proactive in handling these threats and continually demonstrate to the end-users its ability to secure the system and its information repositories. Confidence in the System is also partly related to data security. But even more, this attribute has to do with the quality of information. The data presented to the user must be reliable, accurate, and timely; otherwise, the data will be meaningless and will hinder the end-users from making sound decisions.

Principles of IPA can be extended to other functional areas, particularly to functional units that provide internal services, such as Human Resources. For example, a human resources department provides services to internal staff, such as the leave management system (a system for managing employees' leave applications). The staff can be asked to rate the system's performance as well as their expectations of the system. The findings can then be used to gauge whether their expectations are met, whether they are satisfied with the system, and, most importantly, as a guide for enhancements and improvements.

The Importance-Performance Map (Figure 2) revealed that all twenty IS attributes were performing below the end-users' expectations. The three variables with the highest gap scores were Understanding of Systems, Documentation, and High Availability of Systems.
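The gap ranking referred to here can be reproduced directly from the means reported in Table 1. The following Python snippet is a minimal sketch rather than the authors' procedure: the names `TABLE_1` and `gap_scores` are hypothetical, and the only data used are the performance and importance means from Table 1.

```python
# (performance mean, importance mean) per attribute, copied from Table 1.
TABLE_1 = {
    "Understanding of Systems": (3.27, 4.36),
    "Documentation": (3.06, 4.14),
    "High Availability of Systems": (3.10, 4.16),
    "Ubiquitous Access to Applications": (3.05, 4.07),
    "Degree of Training": (2.95, 3.95),
    "Security of Data": (3.53, 4.49),
    "Integration of Systems": (3.09, 3.99),
    "Top Management Involvement": (3.35, 4.19),
    "Flexibility of Systems": (3.28, 4.07),
    "Implementation of Latest Technology": (3.03, 3.81),
    "Confidence in the Systems": (3.44, 4.22),
    "Attitude of the EDP Staff": (3.55, 4.31),
    "Job Effects": (3.51, 4.24),
    "Response/Turnaround Time": (3.76, 4.46),
    "Technical Competence of the EDP Staff": (3.65, 4.23),
    "Feeling of Participation": (3.25, 3.79),
    "Feeling of Control": (3.27, 3.74),
    "Time Required for New Development": (3.36, 3.80),
    "Relevancy": (3.54, 3.97),
    "Relationship with the EDP Staff": (3.82, 4.05),
}


def gap_scores(table):
    """Return (attribute, gap) pairs, largest shortfall first.

    gap = performance mean - importance mean, rounded to two decimals;
    a negative gap means performance falls short of importance.
    """
    gaps = [(name, round(perf - imp, 2)) for name, (perf, imp) in table.items()]
    return sorted(gaps, key=lambda pair: pair[1])


ranked = gap_scores(TABLE_1)
top_three = [name for name, _ in ranked[:3]]
all_below_expectations = all(gap < 0 for _, gap in ranked)

print(top_three)
print(all_below_expectations)
```

Sorting on the signed gap keeps the most negative differences first, so the head of the list gives the attributes where performance trails importance the most and the tail gives those closest to the iso-rating line.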
Mapping the mean scores for both data sets onto a scatter plot and analyzing the distance of the plotted scores from the iso-rating line gave much insight to help guide the prioritization of resources and management intervention. For example, to improve end-users' understanding of systems, the IS department could strive to improve its systems documentation and training. This may include the use of computer-based training, applying multimedia and video to conduct online demonstrations, and organizing IT open days when end-users can approach the IS personnel and inquire about the systems deployed.

Based on the gap analysis, the IS department has already fostered good relationships with the end-users, encouraging a high user involvement in the development of new applications. This makes end-users feel that they are more in control, and they are assured that the solutions developed are highly relevant to their tasks. The IS department would be wise to maintain its healthy relationship with the end-users while pursuing the enhancement of the IS attributes identified with the highest gap scores.

Managers from the end-users' departments should work together with the IS department to reduce the Importance-Performance gaps. They should play a more active role in the development and implementation of new systems. For example, during the design stage, managers must write and request their specifications based on the functional and procedural requirements, while during the testing stage, managers must test the system thoroughly and extensively.

The limitation of this study is the use of a convenience rather than a random sample. Additionally, end-user responses on the perceived importance might have suffered from their desire to rank everything as very important in order to suggest a highly concerned outlook on the overall state of the attributes presented. Nevertheless, the study accomplished its purpose of continuing IPA research within the field of IS, which was only recently imported from the field of marketing.

This application of IPA contributes in several ways. For example, IPA has been used in IS research by Magal and Levenburg (2005) for evaluating e-strategies among small businesses in the United States. The respondents were business owners. In contrast, the present study differs as the respondents are users of systems within a company. Moreover, Skok and colleagues (2001) used IPA to study IS performance of a health club in the United Kingdom by deploying Delone and McLean's (1992) IS success typology.
The model incorporates users' satisfaction as a variable to be studied. The present study differs in focusing on end-user satisfaction alone, as it considers it a surrogate measure of IS success. Finally, the present study has adapted Bailey and Pearson's (1983) instrument by adding three new dimensions: High Availability of Systems, Implementation of Latest Technology, and Ubiquitous Access. These factors were included in response to the needs of the studied company. High Availability of Systems directly affects the employees' ability to be productive. Frequent downtimes mean idle time since employees are not able to access the data needed. The senior management has raised this issue since it affects business continuity and can potentially compromise the company's competitive position. Thus, the IS department is exploring measures to provide continuous access to the company's IS, including clustering technology that provides real-time backups. Furthermore, the dimension Implementation of Latest Technology is added to the instrument because the company has to keep abreast of recent technologies and solutions available in the market. In order to formulate better solutions for its customers, the company must have first-hand experience in using the proposed technologies. The third new dimension is Ubiquitous Access, which is supposed to enable the company to achieve productivity objectives anytime and anywhere. This means providing constant connectivity for employees who are often out of the office and enabling them to have continuous access to critical applications hosted within the company's network.

Lastly, it should be noted that most of the previous work on IPA was conducted in more developed countries, such as the United States and the United Kingdom. No research of this sort has ever been done in Malaysia, which belongs in the category of developing countries.

References

Bacon, D. R. (2003). A comparison of approaches to importance-performance analysis. International Journal of Market Research, 45(1), 55-71.

Bailey, J. E., & Pearson, S. W. (1983). Development of a tool for measuring and analyzing computer user satisfaction. Management Science, 29(5), 530-545. Available at http://business.clemson.edu/ISE/html/development_of_a_tool_for_meas.html

Delone, W. H., & McLean, E. R. (1992). Information systems success: The quest for the dependent variable. Information Systems Research, 3(1), 60-95. Available at http://business.clemson.edu/ISE/html/information_systems_success__t.html

Dolinsky, A. L., & Caputo, R. K. (1991). Adding a competitive dimension to importance-performance analysis: An application to traditional health care systems. Health Marketing Quarterly, 8(3/4), 61-79.

Duke, C. R., & Mont, A. S. (1996). Rediscovery performance importance analysis of products. Journal of Product and Brand Management, 5(2), 143-154.

Eskildsen, J. K., & Kristensen, K. (2006). Enhancing IPA. International Journal of Productivity and Performance Management, 55(1), 40-60.

Farbey, B., Land, F., & Targett, D. (1992). Evaluating investments in IT. Journal of Information Technology, 7, 109-122.

Ford, J. B., Joseph, M., & Joseph, B. (1999). IPA as a strategic tool for service marketers: The case of service quality perceptions of business students in New Zealand and the USA. Journal of Services Marketing, 13(2), 171-186.

Graf, L. A., Hemmasi, M., & Nielsen, W. (1992). Importance satisfaction analysis: A diagnostic tool for organizational change. Leadership and Organization Development Journal, 13(6), 8-12.

Hisham, N. (2006). Measuring end user computing satisfaction. Unpublished MBA dissertation. University of Malaya.

Ives, B., Olson, M., & Baroudi, J. J. (1983). The measurement of user information satisfaction. Communications of the ACM, 26(10), 785-793. Available at http://business.clemson.edu/ISE/html/the_measurement_of_user_inform.html

Joseph, M., Allbrigth, D., Stone, G., Sekhon, Y., & Tinson, J. (2005). IPA of UK and US Bank: Customer perceptions of service delivery technologies. International Journal of Financial Services Management, 1(1), 66-88.

Magal, S., & Levenburg, N. M. (2005). Using IPA to evaluate e-business strategies among small businesses. Proceedings of the 38th Hawaii International Conference on System Sciences; Jan 3-6, 2005; Hilton Waikoloa Village, Island of Hawaii, USA.

Martilla, J. A., & James, J. C. (1977). Importance-performance analysis. Journal of Marketing, 2(1), 77-79.

Pitt, L. F., Watson, R. T., & Kavan, C. B. (1995). Service quality: A measure of information systems effectiveness. MIS Quarterly, 19(2), 173-185.

Sampson, S. E., & Showalter, M. J. (1999). The performance importance response function: Observation and implications. The Services Industries Journal, 19(3), 1-25.

Shaw, N. C., Delone, W. H., & Niederman, F. (2002). Sources of dissatisfaction in end-user support: An empirical study. Data Base for Advances in Information Systems, 33(2), 41-56.

Skok, W., Kophamel, A., & Richardson, I. (2001). Diagnosing information systems success: Importance-performance maps in the health club industry. Information & Management, 38, 409-419.

Slack, N. (1994). The importance-performance matrix as a determinant of improvement priority. International Journal of Operations & Production Management, 14(5), 59-76.

Weber, K. (2000). Meeting planners' perceptions of hotel-chain practices and benefits: An importance-performance analysis. Cornell Hotel and Restaurant Administration Quarterly, 41(4), 32-38.

Yeo, A. Y. C. (2003). Examining a Singapore bank's competitive superiority using importance-performance analysis. Journal of American Academy of Business, 3(1/2), 155-161.

Biographies

Dr. Ainin is associated with the Department of Marketing and Information Systems, Faculty of Business and Accountancy, University of Malaya, Kuala Lumpur, Malaysia. She is currently the Dean of the Faculty. Her main areas of research include technology adoption, digital divide, information systems performance, and management education.

Nurhayati Hisham obtained her Master's Degree in Business Administration from University of Malaya. She currently works for a Malaysian information technology company.

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5814)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- 955-0047 Rev-AW RED PS, DSMC Media Operation GuideDocument106 pages955-0047 Rev-AW RED PS, DSMC Media Operation GuideAarudhNatarajNo ratings yet

- LG VRF Control PDFDocument14 pagesLG VRF Control PDFFredie UnabiaNo ratings yet

- Machine Vision and Metrology Workshop-10.Document2 pagesMachine Vision and Metrology Workshop-10.abhayNo ratings yet

- WEEK 2 DSGN8290 The User and InteractionDocument10 pagesWEEK 2 DSGN8290 The User and InteractionMihir SataNo ratings yet

- Laminar Flow CabinetDocument2 pagesLaminar Flow CabinetMario RodriguezNo ratings yet

- Fichtner PDFDocument76 pagesFichtner PDFbacuoc.nguyen356No ratings yet

- JVC Xr-d400sl Service enDocument28 pagesJVC Xr-d400sl Service enenergiculNo ratings yet

- Component List, Switches: Service InformationDocument4 pagesComponent List, Switches: Service InformationJozefNo ratings yet

- MC R20 - Unit-2Document43 pagesMC R20 - Unit-2Yadavilli VinayNo ratings yet

- NXP I2cguideDocument6 pagesNXP I2cguideCarlos Roman Zarza100% (1)

- Software Checklist - RequirementsWorksheetsDocument96 pagesSoftware Checklist - RequirementsWorksheetsOh Oh OhNo ratings yet

- EN ME600 Infusion PumpDocument2 pagesEN ME600 Infusion PumpMohammed MudesirNo ratings yet

- Photowatt Pw500: - 12V Photovoltaic Module - JboxDocument3 pagesPhotowatt Pw500: - 12V Photovoltaic Module - JboxSergeNo ratings yet

- Maiwand Aslami, PMP: Work Experience SkillsDocument1 pageMaiwand Aslami, PMP: Work Experience SkillsFirdaus PanthakyNo ratings yet

- Hydraulic Elevators Basic ComponentsDocument16 pagesHydraulic Elevators Basic ComponentsIkhwan Nasir100% (2)

- Motor ProtectionDocument17 pagesMotor ProtectionHEMANT RAMJINo ratings yet

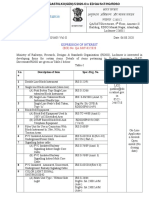

- Qastlko@rdso - Railnet.gov - In: Expression of InterestDocument5 pagesQastlko@rdso - Railnet.gov - In: Expression of InterestSaurabh RanaNo ratings yet

- Augusta WestlandDocument16 pagesAugusta Westlandriteshaggarwal87No ratings yet

- Ministry of Education, Sports & Youth Affairs: Draft DocumentDocument46 pagesMinistry of Education, Sports & Youth Affairs: Draft DocumentEdward MulondoNo ratings yet

- Single Valve Steam Turbine GovernorDocument10 pagesSingle Valve Steam Turbine GovernorPeter TanNo ratings yet

- Software Requirement Engineering: Classification of Requirements & Documentation StandardsDocument67 pagesSoftware Requirement Engineering: Classification of Requirements & Documentation StandardsRabbia KhalidNo ratings yet

- AndreasBossard Web2.0 at UBSDocument10 pagesAndreasBossard Web2.0 at UBSandreasbossard8398No ratings yet

- Analog Electronics IIDocument2 pagesAnalog Electronics IIBhawana RawatNo ratings yet

- Etika Dan Regulasi, UU ITE, HKIDocument23 pagesEtika Dan Regulasi, UU ITE, HKIFahan AdityaNo ratings yet

- Computer Network Lesson PlanDocument3 pagesComputer Network Lesson PlansenthilvlNo ratings yet

- WWW - Manaresults.Co - In: Switch Gear and ProtectionDocument2 pagesWWW - Manaresults.Co - In: Switch Gear and ProtectiontdetretrNo ratings yet

- Ajitem Vijay OakDocument2 pagesAjitem Vijay OakPrijesh RamaniNo ratings yet

- A Cloudy Crystal Ball - Visions of The FutureDocument5 pagesA Cloudy Crystal Ball - Visions of The FuturemercutNo ratings yet

- Arrl 1974 Radio Amateur HandbookDocument706 pagesArrl 1974 Radio Amateur HandbookJorge Jockyman JuniorNo ratings yet