Solving XOR Problem Using DNN AIDS

Uploaded by P Muthulakshmi

Solving XOR problem using DNN

Algorithm:

1. Prepare training data: Create input-output pairs for the XOR operation.

2. Design DNN model: Create a DNN with input, hidden, and output layers.

3. Forward Propagation: Pass input data through the network to get predictions.

4. Activation Function: Apply a function to introduce non-linearity.

5. Loss Function: Measure the difference between predicted and actual outputs.

6. Backpropagation: Calculate gradients to adjust model parameters.

7. Gradient Descent: Update parameters to minimize prediction error.

8. Train: Iterate through data to improve the model.

9. Validate: Use a separate dataset to check model performance.

10. Test: Assess accuracy on new data.

11. Adjust: Fine-tune model architecture or hyperparameters.

12. Predict: Use the trained DNN to predict the XOR output for a given input pair.
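Steps 3 to 7 can be written out for a network with one hidden layer and sigmoid activations throughout. The notation is an assumption chosen for illustration: $X$ holds one training example per column, $Y$ holds the targets, $W_1$ and $W_2$ are the hidden-layer and output-layer weight matrices, $\sigma$ is the sigmoid function, $\odot$ is element-wise multiplication, $m$ is the number of examples, and $\eta$ is the learning rate. Binary cross-entropy is assumed as the loss, and bias terms are omitted.

```latex
\begin{aligned}
Z_1 &= W_1 X, & A_1 &= \sigma(Z_1), \\
Z_2 &= W_2 A_1, & A_2 &= \sigma(Z_2), \\
L &= -\frac{1}{m} \sum_{i=1}^{m} \left[ y_i \log a_{2,i} + (1 - y_i) \log(1 - a_{2,i}) \right], \\
\frac{\partial L}{\partial W_2} &= \frac{1}{m} (A_2 - Y) A_1^{\top}, \\
\frac{\partial L}{\partial W_1} &= \frac{1}{m} \left[ \left( W_2^{\top} (A_2 - Y) \right) \odot A_1 \odot (1 - A_1) \right] X^{\top}, \\
W_k &\leftarrow W_k - \eta \frac{\partial L}{\partial W_k}, \quad k \in \{1, 2\}.
\end{aligned}
```

The simple form $A_2 - Y$ in the output-layer gradient arises because the derivative of the sigmoid cancels against the derivative of the cross-entropy loss.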

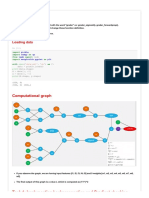
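The backpropagation step (step 6) can be sanity-checked by comparing its analytic gradients with finite-difference estimates. The following is a minimal sketch under stated assumptions: a bias-free network with two inputs, two hidden sigmoid units, and one sigmoid output, trained with binary cross-entropy. The helper names `loss_fn`, `analytic_grads`, and `numeric_grad` are hypothetical and chosen only for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_fn(w1, w2, x, y):
    """Binary cross-entropy of a bias-free two-layer sigmoid network."""
    a1 = sigmoid(w1 @ x)
    a2 = sigmoid(w2 @ a1)
    m = x.shape[1]
    return -np.sum(y * np.log(a2) + (1 - y) * np.log(1 - a2)) / m

def analytic_grads(w1, w2, x, y):
    """Gradients of loss_fn with respect to w1 and w2 via backpropagation."""
    m = x.shape[1]
    a1 = sigmoid(w1 @ x)
    a2 = sigmoid(w2 @ a1)
    dz2 = a2 - y
    dw2 = dz2 @ a1.T / m
    dz1 = (w2.T @ dz2) * a1 * (1 - a1)
    dw1 = dz1 @ x.T / m
    return dw1, dw2

def numeric_grad(f, w, eps=1e-5):
    """Central-difference estimate of df/dw, one entry at a time."""
    grad = np.zeros_like(w)
    for idx in np.ndindex(*w.shape):
        w_plus, w_minus = w.copy(), w.copy()
        w_plus[idx] += eps
        w_minus[idx] -= eps
        grad[idx] = (f(w_plus) - f(w_minus)) / (2 * eps)
    return grad

# XOR data: one training example per column
x = np.array([[0, 0, 1, 1], [0, 1, 0, 1]], dtype=float)
y = np.array([[0, 1, 1, 0]], dtype=float)

rng = np.random.default_rng(0)  # arbitrary seed, assumed for this sketch
w1 = rng.random((2, 2))
w2 = rng.random((1, 2))

dw1, dw2 = analytic_grads(w1, w2, x, y)
num_dw1 = numeric_grad(lambda w: loss_fn(w, w2, x, y), w1)
num_dw2 = numeric_grad(lambda w: loss_fn(w1, w, x, y), w2)

max_err = max(np.abs(dw1 - num_dw1).max(), np.abs(dw2 - num_dw2).max())
print("largest analytic vs numeric gradient difference:", max_err)
```

A difference many orders of magnitude smaller than the gradients themselves suggests the backward pass matches the loss. A large difference usually points to a sign or transpose error.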

Program:

import numpy as np
import matplotlib.pyplot as plt

# XOR inputs: each column is one training example
x = np.array([[0, 0, 1, 1], [0, 1, 0, 1]])
# XOR outputs, one per column of x
y = np.array([[0, 1, 1, 0]])

n_x = 2  # Number of inputs
n_y = 1  # Number of neurons in output layer
n_h = 2  # Number of neurons in hidden layer
m = x.shape[1]  # Total training examples
lr = 0.1  # Learning rate

# Define random seed for consistent results
np.random.seed(2)

# Define weight matrices for neural network
w1 = np.random.rand(n_h, n_x)  # Weight matrix for hidden layer
w2 = np.random.rand(n_y, n_h)  # Weight matrix for output layer
# No bias units are used

# We will use this list to accumulate losses
losses = []

# Sigmoid activation, used for both the hidden layer and the output layer
def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# Forward propagation
def forward_prop(w1, w2, x):
    z1 = np.dot(w1, x)
    a1 = sigmoid(z1)
    z2 = np.dot(w2, a1)
    a2 = sigmoid(z2)
    return z1, a1, z2, a2

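# Backward propagation (x is passed in explicitly instead of being read as a global)
def back_prop(m, w1, w2, z1, a1, z2, a2, x, y):
    dz2 = a2 - y  # Output error; the 1/m factor is applied in dw2 and dw1
    dw2 = np.dot(dz2, a1.T) / m
    dz1 = np.dot(w2.T, dz2) * a1 * (1 - a1)
    dw1 = np.dot(dz1, x.T) / m
    dw1 = np.reshape(dw1, w1.shape)
    dw2 = np.reshape(dw2, w2.shape)
    return dz2, dw2, dz1, dw1

iterations = 10000
for i in range(iterations):
    z1, a1, z2, a2 = forward_prop(w1, w2, x)
    # Binary cross-entropy loss averaged over the m examples
    loss = -(1 / m) * np.sum(y * np.log(a2) + (1 - y) * np.log(1 - a2))
    losses.append(loss)
    dz2, dw2, dz1, dw1 = back_prop(m, w1, w2, z1, a1, z2, a2, x, y)
    # Gradient descent update
    w2 = w2 - lr * dw2
    w1 = w1 - lr * dw1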

# We plot losses to see how our network is doing
plt.plot(losses)
plt.xlabel("EPOCHS")
plt.ylabel("Loss value")

def predict(w1, w2, inputs):
    # Run the forward pass on the argument, not on the global variable test
    z1, a1, z2, a2 = forward_prop(w1, w2, inputs)
    a2 = np.squeeze(a2)
    # Threshold the output activation at 0.5
    label = 1 if a2 >= 0.5 else 0
    print("For input", [int(i[0]) for i in inputs], "output is", label)

test = np.array([[1], [0]])
predict(w1, w2, test)
test = np.array([[0], [0]])
predict(w1, w2, test)
test = np.array([[0], [1]])
predict(w1, w2, test)
test = np.array([[1], [1]])
predict(w1, w2, test)

Output:

For input [1, 0] output is 1

For input [0, 0] output is 0

For input [0, 1] output is 1

For input [1, 1] output is 0
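The four separate `predict` calls can also be replaced by one vectorized evaluation over all input columns. The sketch below is an illustration only: `predict_batch` is a hypothetical helper, and the weights are hand-picked values that happen to reproduce XOR without bias units. They are not the weights learned by the program above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_batch(w1, w2, x):
    """Return a 0/1 label for every column of x using a 0.5 threshold."""
    a1 = sigmoid(w1 @ x)
    a2 = sigmoid(w2 @ a1)
    return (a2 >= 0.5).astype(int).ravel()

# Hand-picked, bias-free weights (an assumption for illustration only)
w1 = np.array([[3.0, 3.0], [1.0, 1.0]])
w2 = np.array([[1.0, -1.2]])

# Same input order as the test calls above: [1,0], [0,0], [0,1], [1,1]
x = np.array([[1, 0, 0, 1], [0, 0, 1, 1]])

labels = predict_batch(w1, w2, x)
for column, label in zip(x.T, labels):
    print("For input", column.tolist(), "output is", label)
```

These weights leave only a small margin around the 0.5 threshold, so they show that a bias-free solution exists rather than what training will find.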
