
Finite-State Spell-Checking with Weighted Language and Error Models—

Building and Evaluating Spell-Checkers with Wikipedia as Corpus

Article · July 2012

2 authors:

Tommi A. Pirinen Krister Lindén

UiT The Arctic University of Norway University of Helsinki

All content following this page was uploaded by Krister Lindén on 02 June 2014.

Tommi A Pirinen, Krister Lindén

University of Helsinki—Department of Modern Languages

Unioninkatu 40 A—FI-00014 Helsingin yliopisto

{tommi.pirinen,krister.linden}@helsinki.fi

Abstract

In this paper we present simple methods for construction and evaluation of finite-state spell-checking tools using an existing finite-state lexical automaton, freely available finite-state tools and Internet corpora acquired from projects such as Wikipedia. As an example, we use a freely available open-source implementation of Finnish morphology, made with traditional finite-state morphology tools, and demonstrate rapid building of Northern Sámi and English spell checkers from tools and resources available from the Internet.

1. Introduction [1]

[1] This is the author’s draft; it may differ from the published version.

Spell-checking is perhaps one of the oldest and most researched applications in the field of language technology, starting from the mid 20th century (Damerau, 1964). The task of spell-checking can be divided into two categories: isolated non-word errors and context-based real-word errors (Kukich, 1992). This paper concentrates on checking and correcting the first form, but the methods introduced are extendible to context-aware spell-checking.

To check whether a word is spelled correctly, a language model is needed. For this article, we consider a language model to be a one-tape finite-state automaton recognising valid word forms of a language. In many languages, this can be as simple as a word list compiled into a suffix tree automaton. However, for languages with productive morphological processes in compounding and derivation that are capable of creating infinite dictionaries, such as Finnish, a cyclic automaton is required. In order to suggest corrections, the correction algorithm must allow search from an infinite space. A nearest match search from a finite-state automaton is typically required (Oflazer, 1996). The reason we stress this limitation caused by morphologically complex languages is that often even recent methods for optimizing speed or accuracy suggest that we can rely on finite dictionaries or acyclic finite automata as language models.

To generate correctly spelled words from a misspelled word form, an error model is needed. The most traditional and common error model is the Levenshtein edit distance, attributed to Levenshtein (1966). In the edit distance algorithm, the misspelling is assumed to be a finite number of operations applied to characters of a string: deletion, insertion, change, or transposition [2]. The field of approximate string matching has been extensively studied since the mid 20th century, yielding efficient algorithms for simple string-to-string correction. For a good survey, see Kukich (1992). Research on approximate string matching has also provided different fuzzy search algorithms for finding the nearest match in a finite-state representation of dictionaries.

[2] Transposition is often attributed to an extended Levenshtein-Damerau edit distance given in (Damerau, 1964).

For the purpose of the article, we consider the error model to be any two-tape finite-state automaton mapping any string of the error model alphabet to at least one string of the language model alphabet. As an actual implementation of Finnish spell-checking, we use a finite-state implementation of a traditional edit distance algorithm. In the literature, the edit distance model has usually been found to cover over 80 % of the misspellings at distance one (Damerau, 1964). Furthermore, as Finnish has a more or less phonemically motivated orthography, homophonic misspellings are virtually non-existent. In other words, our base assumption is that the greatest source of errors for Finnish spell-checking is the slip-of-the-finger style of typo, for which the edit distance is a good error model.

The statistical foundation for the language model and the error model in this article is similar to the one described by Norvig (2010), which also gives a good overview of the statistical basis for the spelling error correction problem along with a simple and usable Python implementation.

For practical applications, the spell-checker typically needs to provide a small selection of the best matches for the user to choose from in a relatively short time span, which means that when defining corrections, it is also necessary to specify their likelihood in order to rank the correction suggestions. In this article, we show how to use a standard weighted finite-state framework to include probability estimates for both the language model and the error model. For the language model, we use simple unigram training with a Wikipedia corpus, so that the more common word forms are suggested before the less common word forms. In the error model, we design the weights in the edit distance automaton so that suggestions with a greater Levenshtein-Damerau edit distance are suggested after those with fewer errors.

To evaluate the spell-checker even in the simple case of correcting non-word errors in isolation, a corpus of spelling mistakes with expected corrections is needed. Constructing such a corpus typically requires some amount of manual labour. In this paper, we evaluate the test results both against a manually collected misspelling corpus and against automatically misspelled texts. For a description of the error generation techniques, see Bigert (2005).

2. Goal of the paper

In this article, we demonstrate how to build and evaluate a spell-checking and correction functionality from an existing lexical automaton. We present a simple way to use an arbitrary string-to-string relation transducer as a misspelling model for the correction suggestion algorithm, and test it by implementing a finite-state form of the Levenshtein-Damerau edit distance relation. We also present a unigram training method to automatically rank spelling corrections, and evaluate the improvement our method brings over a correction algorithm using only the edit distance. The paper describes a work-in-progress version of a finite-state spell-checking method with instructions for building the speller for various languages and from various resources. The language model in the article is an existing free open-source implementation of Finnish morphology [3] (Pirinen, 2008) compiled with HFST (Lindén et al., 2009), a free, featurewise fully compliant implementation of the traditional Xerox-style LexC and TwolC tools [4].

[3] http://home.gna.org/omorfi
[4] http://hfst.sf.net

One aim of this paper is to demonstrate the use of Wikipedia as a freely available open-source corpus [5]. The Wikipedia data is used in this experiment for training the lexical automaton with word form frequencies, as well as collecting a corpus of spelling errors with actual corrections.

[5] Database dumps available at http://download.wikimedia.org

The field of spell-checking is already a widely researched topic, cf. the surveys by Kukich (1992) and Schulz and Mihov (2002). This article demonstrates a generic way to use freely available resources for building finite-state spell-checkers. The purpose of using a basic finite-state algebra to create spell-checkers in this article is two-fold. Firstly, the number of commonly known implementations of morphological language models under different finite-state frameworks suggests that a finite-state morphology is feasible as a language model for morphologically complex languages. Secondly, by demonstrating the building of an application for spell-checking with a freely available open-source weighted finite-state library, we hope to outline a generally useful approach to building open-source spell-checkers.

To demonstrate the feasibility of building a spell-checker from freely available resources, we use basic composition and n-best-path search with weighted finite-state automata, which allows us to use multiple arbitrary language and error models as permitted by the finite-state algebra. To the best of our knowledge, no previous research has used or documented this approach.

To further evaluate the plausibility of rapid conversion from morphological or lexical automata to spell-checkers, we also obtained a free open implementation of the Northern Sámi morphological analyzer [6] as well as a word list of English from (Norvig, 2010), and briefly tested them with the same methods and a similar error model as for Finnish. While the main focus of the article is on the creation and evaluation of a Finnish finite-state spell-checker, we also show examples of building and evaluating spell-checkers for other languages.

[6] http://divvun.no

3. Methods

The framework for implementing the spell-checking functionality in this article is the finite-state library HFST (Lindén et al., 2009). This requires that the underlying morphological description for spell-checking is compiled into a finite-state automaton. For our Finnish and Northern Sámi examples, we use a traditional linguistic description based on the Xerox LexC/TwolC formalism (Beesley and Karttunen, 2003) to create a lexical transducer that works as a morphological analyzer. As the morphological analyses are not used for the probability weight estimation in this article, the analysis level is simply discarded to get a one-tape automaton serving as a language model. However, the word list of English is directly compiled into a one-tape suffix tree automaton.

As mentioned, the original language model can be as simple as a list of words compiled into a suffix tree automaton or as elaborate as a full-fledged morphological description in a finite-state programming language, such as Xerox LexC and TwolC [7]. The words that are found in the transducer are considered correct. The rest are considered misspelled.

[7] It is also possible to convert aspell and hunspell style descriptions into transducers. Preliminary scripts exist in http://hfst.sf.net/.

It has previously been demonstrated how to add weights to a cyclic finite-state morphology using information on base-form frequencies. The technique is further described by Lindén and Pirinen (2009). In the current article, the word form counts are based on data from Wikipedia. The training is in principle a matter of collecting the corpus strings and their frequencies and composing them with the finite-state lexical data. Deviating from the article by Lindén and Pirinen (2009), we only count full word forms. No provisions for compounding of word forms based on the training data are made, i.e. the training data is composed with the lexical model. This gives us an acyclic lexicon with the frequency data for correctly spelled words.

The actual implementation goes as follows. Clean up the Wikipedia dump to extract the article content from XML and Wikipedia mark-up by removing the mark-up and the contents of mark-up that does not constitute running text, leaving only the article content untouched. The tokenization is done by splitting text at white space characters and separating word-final punctuation. Next we use the spell-checking automaton to acquire the correctly spelled word forms from the corpora, and count their frequencies. The formula for converting the frequency $f_t$ of a token $t$ in the corpus to a weight in the finite-state lexical transducer is $W_t = -\log \frac{f_t}{CS}$, where $CS$ is the corpus size in tokens. The resulting strings with weights can then be compiled into paths of a weighted automaton, i.e. into an acyclic tree automaton with log probability weights in the final states of the word forms. The original language model is then
weighted by setting all word forms not found in the corpus on. In this case the frequency data obtained from Wikipedia

to a weight greater than the word with frequency of one, e.g. will give us the popularity order of ‘ja’ > ‘jo’. A fraction

1

Wmax = − log CS+1 . The simplest way to achieve this is of the weighted edit distance two transducer is given in Fig-

?

to compose the Σ automaton with final weight Wmax with ure 1. The transducer in the figure displays a full edit dis-

the unweighted cyclic language model. Finally, we take the tance two transducer for a language with two symbols in

union of the cyclic model and the acyclic model. The word the alphabet; an edit distance transducer for a full alpha-

forms seen in the corpus will now have two weights, but the bet is simply a union of such transducers for each pair of

lexicon can be pruned to retain only the most likely reading symbols in the language9 .

for each string.

For example in the Finnish Wikipedia there were

17,479,297 running tokens8 , and the most popular of these

is ‘ja’ and with 577,081 tokens, so in this language model

577081

the Wja = − log 17479297 ≈ 4.44. The training material

is summarized in the Table 1. The token count is the to-

tal number of tokens after preprocessing and tokenization.

The unique strings is the number of unique tokens that be-

longed to the language model, i.e. the size of actual train-

ing data, after uniqification and discarding potential mis-

spellings and other strings not recognized by the language

model. For this reason the English training model is rather

small, despite the relative size of the corpus, since the finite

language model only covered a very small portion of the

unique tokens.

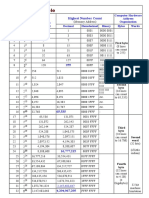

Language Finnish Northern Sámi English

Token count 17,479,297 258,366 2,110,728,338

Unique language strings 968,996 44,976 34,920

Download size 956 MiB 8.7 MiB 5.6 GiB

Version used 2009-11-17 2010-02-22 2010-01-30

Table 1: Token counts for wikipedia based training material

For finding corrections using the finite-state methodol-

ogy, multiple approaches with specialized algorithms have Figure 1: Edit distance transducer of alphabet a, b length

been suggested, e.g. (Oflazer, 1996; Schulz and Mihov, two and weight π.

2002; Huldén, 2009). In this article, we use a regular

weighted finite-state transducer to represent a mapping of

misspellings to correct forms. This allows us to use any To get a ranked set of spelling correction suggestions, we

weighted finite-state library that implements composition. simply compile the misspelled word into a path automa-

One of the simplest forms of mapping misspellings to cor- ton Tword . The path automaton is composed with the cor-

rect strings is the edit distance algorithm usually attributed rection relation TE —in this case the weighted edit dis-

to Levenshtein (1966) and furthermore in the case of spell- tance two transducer—to get an automaton that contains

checking to Damerau (1964). A finite-state automaton rep- all the possible spelling corrections Tsug = Tword ◦ TE .

resentation is given by e.g. Schulz and Mihov (2002). A We then compose the resulting automaton with the original

transducer that corrects strings can be any arbitrary string- weighted lexical data TL to find the string corrections that

to-string mapping automaton, and can be weighted. In this are real words of the language model Tf = Tsug ◦ TL . The

article, we build an edit distance mapping transducer allow- resulting transducer now contains a union of words with the

ing two edits. combined weight of the frequency of the word form and the

Since the error model can also be weighted, we use the weight of the edit distance. From this transducer, a ranked

weight Wmax as the edit weight, which is greater than any list of spelling suggestions is extracted by a standard n-best-

of the weights given by the language model. As a conse- path algorithm listing unique suggestions.

quence, our weighted edit distance will function like the

traditional edit distance algorithm when generating the cor- 4. Test Data Sets

rections for a language model, i.e. any correct string with For the Finnish test material, we use two types of samples

edit distance one is considered to be a better correction than extracted from Wikipedia. First, we use a hand-picked se-

a misspelling with edit distance two. For example assuming lection of 761 misspelled strings found by browsing the

misspelling ‘jq’ for ‘ja’, the error model would find ‘ja’ at

an edit distance of Wmax , but also e.g. ‘jo’ already and so 9

For the source code of the Finnish edit distance trans-

ducer in the HFST framework, see http://svn.gna.

8

We used relatively naive preprocessing and tokenization, org/viewcvs/omorfi/trunk/src/suggestion/

splitting at spaces and filtering html and Wikipedia markup edit-distance-2.text

strings that the speller rejected. These strings were manually corrected using a native reader’s best judgement from reading the misspelled word in context to achieve a gold standard for evaluation.

Another larger set of approximately 10,000 evaluation strings was created by using the strings from the same Wikipedia corpus, and automatically introducing spelling errors similar to the approach described by Bigert et al. (2003), using isolated word Damerau-Levenshtein type errors with a probability of approximately 0.33 % per character. This error model could also be considered an error model applied in reverse compared to the error model used when correcting misspelled strings. As there is nothing limiting the number of errors generated per word except the word length, this error model may introduce words with an edit distance greater than two.

As the Northern Sámi gold standard, we used the test suite included in the svn distribution [10]. It seems to contain a set of common typos.

[10] https://victorio.uit.no/langtech/trunk/gt/sme/src/typos.txt

As the English gold standard for evaluation, we use the Birkbeck spelling error corpus referred to in (Norvig, 2010). The corpus has restricted free licensing, but the restrictions prohibit its use as training material in a free open source project.

5. Evaluation

To evaluate the correction algorithm, we use the two data sets introduced in the previous section. However, we use a slightly different error model to automatically correct misspellings than we use for generating them, i.e. some errors exceeding the edit distance of two are unfixable by the error model we use for correction.

The evaluation of the correction suggestion quality is given in Tables 2 and 3. Table 2 contains precision values for the spelling errors from real texts, and Table 3 for the automatically introduced spelling errors. The precision is measured by ranked suggestions. In the tables, we give the results separately for ranks 1 to 4, and for the remaining lower ranks. The lower ranks ranged from 5 to 440, where the number of total suggestions ranged from 1 to 600. In the last column, we have the cases where a correctly written word could not be found with the proposed suggestion algorithm. The tables contain both the results for the weighted edit distance relation, and for a combination of the weighted edit distance relation and the word form frequency data from Wikipedia [11].

[11] For full tables and test logs, see http://home.gna.org/omorfi/testlogs.

Material         Rank 1   Rank 2   Rank 3   Rank 4   Lower    No rank   Total
Weighted edit distance 2
Finnish          371      118      65       33       103      84        761
                 49 %     16 %     9 %      4 %      14 %     11 %      100 %
Northern Sámi    2221     697      430      286      2743     2732      9115
                 24 %     8 %      5 %      3 %      30 %     30 %      100 %
English          8739     2695     1504     940      3491     17738     35106
                 25 %     8 %      4 %      3 %      10 %     51 %      100 %
Wikipedia word form frequencies and edit distance 2
Finnish          451      105      50       22       62       84        761
                 59 %     14 %     7 %      3 %      8 %      11 %      100 %
Northern Sámi    2421     745      427      266      2518     2732      9115
                 27 %     8 %      5 %      3 %      28 %     30 %      100 %
English          9174     2946     1489     858      2902     17738     35106
                 26 %     8 %      4 %      2 %      8 %      51 %      100 %

Table 2: Precision of suggestion algorithms with real spelling errors

Material         Rank 1   Rank 2   Rank 3   Rank 4   Lower    No rank   Total
Weighted edit distance 2
Finnish          4321     1125     565      351      1781     1635      10076
                 43 %     11 %     6 %      3 %      18 %     16 %      100 %
Northern Sámi    1269     257      136      80       528      7730      10000
                 13 %     3 %      1 %      1 %      5 %      77 %      100 %
English          4425     938      337      290      1353     2657      10000
                 44 %     10 %     3 %      3 %      14 %     27 %      100 %
Wikipedia word form frequencies and edit distance 2
Finnish          4885     1128     488      305      1407     1635      10076
                 49 %     11 %     5 %      3 %      14 %     16 %      100 %
Northern Sámi    1726     253      76       29       186      7730      10000
                 17 %     3 %      1 %      1 %      2 %      77 %      100 %
English          5584     795      307      196      461      2657      10000
                 56 %     8 %      3 %      2 %      5 %      27 %      100 %

Table 3: Precision of suggestion algorithms with generated spelling errors

As a first impression we note that mere Wikipedia training does improve the results in all cases; the number of suggestions in first position rises in all test sets and languages. This suggests that more mistakes are made in common words than in rare ones, since the low ranking word counts did not increase as a result of Wikipedia training.

In the Finnish tests, haplological cases like ‘kokonaismalmivaroista’ (‘from total ore resources’) spelled as ‘konaismalmivaroista’ came in at the bottom of the list for both methods, because the correct word form is probably non-existent in the training corpus, and the multi-part productive compound with an ambiguous segmentation produces lots of nearer matches at edit distance one. A more elaborate error model considering haplology as a misspelling with a weight equal to or less than a single traditional edit distance would of course improve the suggestion quality in this case.

The number of words getting no rank is common to both methods. These are the spelling errors for which the correct form was neither among the ones covered by the error model for edit distance two nor in the language model. A substantial number are cases which were not considered in the error model, e.g. a missing space causing run-on words (‘ensisijassa’ instead of ‘ensi sijassa’, ‘in the first place’). A good number also come from spoken or informal language forms for very common words, which tend to deviate by more than edit distance two (‘esmeks’ instead of ‘esimerkiksi’, ‘for example’), with a few more due to missing forms in the language model. E.g. ‘bakterisidin’ is one edit from ‘bakterisidina’ (‘as bactericide’), but the correction is not made because the word does not exist in the language model. These error types are correctable by adding words to the lexicon, i.e. the language model, e.g. using special-purpose dictionaries, such as spoken language or medical dictionaries. Finally, there is a handful of errors that seem to be legitimate spelling mistakes of more than two edits (‘assosioitten’ instead of ‘assosiaatioiden’). For these cases, a different error model than the basic edit distance might be necessary.
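The ranking behaviour and the out-of-reach cases discussed above can be illustrated with a small Python sketch. It is not the HFST-based implementation used in the paper: instead of composing weighted transducers and running an n-best-path search, it brute-forces a toy lexicon with a restricted Damerau-Levenshtein (optimal string alignment) distance and scores each candidate as the number of edits times $W_{max}$ plus the unigram weight $W_t$. The natural logarithm is an assumption (the text does not state a base), so absolute weight values need not match those quoted in the paper; only the ordering matters for ranking. Apart from the corpus size and the count for ‘ja’, all frequencies in the toy lexicon are hypothetical.

```python
import math

CORPUS_SIZE = 17_479_297  # Finnish Wikipedia token count reported in the paper

# Toy lexicon. Only the count for 'ja' is taken from the paper;
# every other count is a hypothetical value chosen for illustration.
COUNTS = {
    "ja": 577_081,
    "jo": 30_000,
    "esimerkiksi": 5_000,
    "kokonaismalmivaroista": 1,
    "assosiaatioiden": 2,
}


def unigram_weights(counts, corpus_size):
    """Return (weights, w_max): W_t = -log(f_t / CS), W_max = -log(1 / (CS + 1))."""
    weights = {w: -math.log(f / corpus_size) for w, f in counts.items()}
    w_max = -math.log(1 / (corpus_size + 1))
    return weights, w_max


def osa_distance(a, b):
    """Restricted Damerau-Levenshtein distance (adjacent transpositions allowed)."""
    prev2, prev = None, list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i] + [0] * len(b)
        for j, cb in enumerate(b, 1):
            cur[j] = min(
                prev[j] + 1,               # deletion
                cur[j - 1] + 1,            # insertion
                prev[j - 1] + (ca != cb),  # change or match
            )
            if i > 1 and j > 1 and ca == b[j - 2] and a[i - 2] == cb:
                cur[j] = min(cur[j], prev2[j - 2] + 1)  # transposition
        prev2, prev = prev, cur
    return prev[len(b)]


def suggest(word, weights, w_max, max_edits=2, n_best=5):
    """Rank lexicon words within max_edits by edits * W_max + W_t (lower is better)."""
    scored = []
    for candidate, weight in weights.items():
        edits = osa_distance(word, candidate)
        if edits <= max_edits:
            scored.append((edits * w_max + weight, candidate))
    return [candidate for _, candidate in sorted(scored)[:n_best]]


weights, w_max = unigram_weights(COUNTS, CORPUS_SIZE)

print(suggest("jq", weights, w_max))                   # ['ja', 'jo']
print(suggest("konaismalmivaroista", weights, w_max))  # ['kokonaismalmivaroista']
print(suggest("esmeks", weights, w_max))               # []
print(suggest("assosioitten", weights, w_max))         # []
```

Because $W_{max}$ exceeds every language model weight, a candidate one edit away always outranks a candidate two edits away, and frequency only breaks ties within the same edit count, which is why ‘ja’ precedes ‘jo’. The last two calls return nothing because the intended words lie more than two edits away, mirroring the "No rank" column; ‘bakterisidin’ would fail for the other reason named above, since ‘bakterisidina’ is absent from the lexicon.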

For the Northern Sámi spelling error corpus we note that a large number of errors are not covered by the error model. This means that the error model is not sufficient for Northern Sámi spell-checking, as we can see a number of errors with edit distance greater than 2, e.g. ‘sáddejun’ instead of ‘sáddejuvvon’.

Comparing our English test results with previous research using implementations of the same language and error model, we reiterate that a great number of words are out of reach of an error model of mere edit distance 2. Some of the test words are even word usage errors, such as ‘gone’ instead of ‘went’, but unfortunately they were intermixed with the other spelling error material and we did not have time to remove them from the test corpus. The rest of the spelling errors beyond edit distance 2 are mostly caused by English orthography being relatively distant from pronunciation, such as ‘negoshayshauns’ instead of ‘negotiations’, which usually are corrected with very different error models such as soundex and other phonetic keys, as demonstrated by e.g. Mitton (2009). The results of the evaluation of the correction suggestions show a similar tendency as the one found in the original article by Norvig (2010).

The impact on performance when using non-optimized methods to check spelling and get suggestion lists was not thoroughly measured, but to give an impression of the general applicability of the methods, we note that for the Finnish material of generated misspellings, the speed of spell-checking was 3.18 seconds for 10,000 words or approx. 3,000 words per second, and generating suggestion lists, i.e. all possible corrections for 10,000 misspelled words, took 10,493 seconds, i.e. on average it took approx. 1 second to generate all the suggestions for each misspelled word, when measured with the GNU time utility and the hfst-omor-evaluate program from the HFST toolkit, which batch processes spell-checking tasks on tokenized input and evaluates precision and recall against a correction corpus. The space requirements for the Finnish spell-checking automata are 9.2 MiB for the Finnish morphology and 378 KiB for the Finnish edit distance two automaton with an alphabet size of 72. As a comparison, the English language model obtained from the word list is only 3.2 MiB in size, and correspondingly the error model is 273 KiB with an alphabet size of 54.

6. Discussion

The obvious and popular development is to extend the language model to support n-gram dictionaries with n > 1, which has been shown to be a successful technique for English e.g. by Mays et al. (1991). The extension using the same framework is not altogether trivial for a language like Finnish, where the number of forms and unique strings is considerable, giving most n-grams a very low frequency.

Even if the speed and resource use for spell-checking and correction was found to be reasonable, it may still be interesting to optimize for speed as has been shown in the literature (Oflazer, 1996; Schulz and Mihov, 2002; Huldén, 2009). At least the last of these is readily available as an open-source finite-state implementation in foma [12], and is expandable for at least a non-homogeneous non-unit-weight edit distance with context restrictions. However, it does not yet cater to general weighted language models.

[12] http://foma.sf.net

Other manual extensions to the spelling error model should also be tested. Our method ensures that arbitrary weighted relations can be used. Especially the use of a non-homogeneous non-unit-length edit distance can easily be achieved. Since it has been successfully used in e.g. hunspell [13], it should be further evaluated. Another obvious and common improvement to the edit distance model is to scale the weights of the edit distance by the physical distance of the keys on a QWERTY keyboard.

[13] http://hunspell.sf.net

The acquisition of an error model or probabilities of errors in the current model is also possible (Brill and Moore, 2000), but this requires the availability of an error corpus containing a large (representative) set of spelling errors and their corrections, which usually is neither available nor easy to create. One possible solution for this may of course be to implement an adaptive error model that modifies the probabilities of the errors for each correction made by the user.

The methods were only evaluated on languages with substantial resources, but the use of freely available language resources and toolkits makes the proposed methods for creating spell-checkers interesting for less resourced languages as well, since most written languages already have text corpora, word lists and inflectional descriptions.

The next step is to improve the free open-source Voikko [14] spell-checking library with the HFST transducer-based spell-checking library. Voikko has been successfully used in open-source software such as OpenOffice.org, Mozilla Firefox, and the Gnome desktop (in the enchant spell-checking library).

[14] http://voikko.sf.net/

7. Conclusions

In this article we have demonstrated an approach for creating spell-checkers from various language models, ranging from simple word lists to complex full-fledged implementations of morphology, built into finite-state automata. We also demonstrated a simple approach to training the models using word frequency data extracted from Wikipedia. Further, we have presented a construction of a simple edit distance error model in the form of a weighted finite-state transducer, and shown the usability of this basic finite-state approach by evaluating the resulting spell-checkers against both manually collected smaller and automatically created larger error corpora. Given the number of finite-state implementations of morphological language models, it seems reasonable to expect that general finite-state methods and language models can support spell-checking for a large array of languages. The methods may be especially useful for less resourced languages, since most written languages already have text corpora, word lists and inflectional descriptions.

The fact that arbitrary weighted two-tape automata may be used for implementing error models suggests that it is relatively easy to implement different error models with available open-source finite-state toolkits. We also showed that
combining the basic edit distance error model with a sim- Kemal Oflazer. 1996. Error-tolerant finite-state recogni-

ple unigram frequency model already improves the quality tion with applications to morphological analysis and

of the error corrections. We also note that even using a spelling correction. Comput. Linguist., 22(1):73–89.

basic finite-state transducer algebra from a freely available Tommi Pirinen. 2008. Suomen kielen äärellistilainen

finite-state toolkit and no specialized algorithms, the speed automaattinen morfologinen analyysi avoimen

and memory requirements of the spell-checking seems suf- lähdekoodin menetelmin. Master’s thesis, Helsin-

ficient for typical interactive usage. gin yliopisto.

Klaus Schulz and Stoyan Mihov. 2002. Fast string correc-

8. Acknowledgements tion with levenshtein-automata. International Journal of

We thank our colleagues in the HFST research team and Document Analysis and Recognition, 5:67–85.

the anonymous reviewers for valuable suggestions regard-

ing the article.

9. References

Kenneth R Beesley and Lauri Karttunen. 2003. Finite State

Morphology. CSLI publications.

Johnny Bigert, Linus Ericson, and Antoine Solis. 2003.

Autoeval and missplel: Two generic tools for automatic

evaluation. In Nodalida 2003, Reykjavik, Iceland.

Johnny Bigert. 2005. Automatic and unsupervised meth-

ods in natural language processing. Ph.D. thesis, Royal

institute of technolog (KTH).

Eric Brill and Robert C. Moore. 2000. An improved error

model for noisy channel spelling correction. In ACL ’00:

Proceedings of the 38th Annual Meeting on Association

for Computational Linguistics, pages 286–293, Morris-

town, NJ, USA. Association for Computational Linguis-

tics.

F J Damerau. 1964. A technique for computer detection

and correction of spelling errors. Commun. ACM, (7).

Måns Huldén. 2009. Fast approximate string matching

with finite automata. Procesamiento del Lenguaje Nat-

ural, 43:57–64.

Karen Kukich. 1992. Techniques for automatically cor-

recting words in text. ACM Comput. Surv., 24(4):377–

439.

V. I. Levenshtein. 1966. Binary codes capable of correct-

ing deletions, insertions, and reversals. Soviet Physics—

Doklady 10, 707710. Translated from Doklady Akademii

Nauk SSSR, pages 845–848.

Krister Lindén and Tommi Pirinen. 2009. Weighting finite-

state morphological analyzers using hfst tools. In Bruce

Watson, Derrick Courie, Loek Cleophas, and Pierre

Rautenbach, editors, FSMNLP 2009, 13 July.

Krister Lindén, Miikka Silfverberg, and Tommi Pirinen.

2009. Hfst tools for morphology—an efficient open-

source package for construction of morphological ana-

lyzers. In Cerstin Mahlow and Michael Piotrowski, edi-

tors, sfcm 2009, volume 41 of Lecture Notes in Computer

Science, pages 28—47. Springer.

Eric Mays, Fred J. Damerau, and Robert L. Mercer. 1991.

Context based spelling correction. Inf. Process. Man-

age., 27(5):517–522.

Roger Mitton. 2009. Ordering the suggestions of a

spellchecker without using context*. Nat. Lang. Eng.,

15(2):173–192.

Peter Norvig. 2010. How to write a spelling correc-

tor. Web Page, Visited February 28th 2010, Available

http://norvig.com/spell-correct.html.
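
As a supplementary illustration of the concluding remark that an edit distance error model combined with a unigram frequency model improves suggestion quality, the following is a minimal sketch in plain Python rather than with finite-state transducers, in the spirit of Norvig (2010). The toy lexicon, its counts, and the function names are assumptions made up for this example; they are not taken from the article or from any toolkit. Each candidate's weight is the sum of a uniform edit cost and the negative log probability of the word, so among candidates at the same edit distance the more frequent word is ranked first.

```python
import math

# Hypothetical toy unigram counts; a real model would be estimated
# from a corpus such as Wikipedia.
COUNTS = {"the": 500, "then": 40, "they": 60, "than": 30, "tea": 5}
TOTAL = sum(COUNTS.values())
ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def edits1(word):
    """Return all strings one edit away from `word`
    (deletion, adjacent transposition, substitution, insertion)."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = {a + b[1:] for a, b in splits if b}
    swaps = {a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1}
    substitutions = {a + c + b[1:] for a, b in splits if b for c in ALPHABET}
    inserts = {a + c + b for a, b in splits for c in ALPHABET}
    return (deletes | swaps | substitutions | inserts) - {word}


def unigram_weight(word):
    """Negative log probability of a lexicon word (lower is better)."""
    return -math.log(COUNTS[word] / TOTAL)


def suggest(word, edit_cost=1.0):
    """Return in-lexicon candidates ordered by increasing total weight."""
    if word in COUNTS:
        return [word]  # already a correct word, nothing to correct
    candidates = [w for w in edits1(word) if w in COUNTS]
    # Total weight = error model cost + language model cost.
    return sorted(candidates, key=lambda w: (edit_cost + unigram_weight(w), w))
```

With these invented counts, `suggest("thn")` finds the three lexicon words within one edit and orders them by frequency. An unweighted edit distance model alone could not distinguish between them, since all three are exactly one edit away.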
