
Conference Proceedings 2023 Canadian Engineering Education Association-Association canadienne de l'éducation en génie

DEVELOPMENT OF OUTCOME-BASED ASSESSMENT METRIC FOR ENGINEERING PROGRAM ACCREDITATION

Hooman Nabovati1, Paula Ogg2, Mouhamed Abdulla1, and Jon Berge1

1. Faculty of Applied Science and Technology, 2. Sheridan Centre for Academic Excellence

Sheridan College Institute of Technology and Advanced Learning, Ontario, Canada

hooman.nabovati@sheridancollege.ca

Abstract – The Canadian Engineering Accreditation Board (CEAB) requires engineering programs to assess twelve high-level educational graduate attributes. To provide evidence of teaching and learning of these engineering attributes, we developed a framework founded on pedagogical practices from backwards design, constructive alignment, outcome-based education, and student-centred learning. This quantifiable framework offers a rigorous and tunable approach to systematically aggregate performance results over a specified duration. Alongside evaluation tools to measure graduate attributes, we created a comprehensive common skills rubric to evaluate performance-based learning activities. Overall, the proposed framework offers an accurate and sustainable solution for the assessment of CEAB graduate attributes in Bachelor of Engineering programs. With high precision, results from this assessment process can shed light on a set of factors that impact students' performance in achieving the outcomes of a particular engineering program. The results can also build a foundation that triggers continual improvement of teaching and learning in engineering programs.

Keywords: Engineering Accreditation, Outcome-Based Metric, Graduate Attributes, Common Skills Rubric, Engineering Education.

1. INTRODUCTION

The Canadian Engineering Accreditation Board (CEAB) requires programs to evaluate twelve graduate attributes (the list is shown in Fig. 2). The accreditation board requires programs to assess graduate attributes at different levels across the curriculum, namely as introduced, developed, or applied/advanced [1]. To further expand on the process, the Engineering Graduate Attribute Development (EGAD) project suggested a six-step approach for mapping indicators to the curriculum:
1. defining program objectives and indicators;
2. mapping indicators across the curriculum;
3. collecting data;
4. analyzing and interpreting the data;
5. improving the curriculum and related processes;
6. managing and implementing change [2].
In addition, as part of the consent renewal process for engineering degrees, colleges must regularly show evidence that programs are meeting the academic standards and that students are achieving the program-level and course-level outcomes.

Currently, analysis for the consent renewal process is a manual endeavour that is mostly qualitative in nature. It entails collecting sample work, assignments, and exam papers from students, reviewing documents, and sharing anecdotes. It may also include focus group discussions, questionnaires, and surveys administered by the respective provincial Ministry for post-secondary education. For data-informed decision making, a quantitative process is more appealing. A systematic and more sustainable approach is therefore needed, one that measures and explicitly reveals data points for the graduate attributes.

In this paper, we develop a framework that aggregates results from diverse data sources to derive a substantiated conclusion on the assessment of CEAB graduate attributes. Building on the pedagogical practices of backwards design, constructive alignment, outcome-based education, and student-centred learning, we develop a comprehensive common skills rubric. The rubric uses defined indicators to evaluate performance-based activities such as projects, presentations, work-integrated learning, and laboratory assignments.

Educators and subject matter experts in the field determined where relevant indicators of the graduate attributes appear in each course within the engineering program. Using formal evaluation tools and the common skills rubric, the indicators measure the related graduate attributes across the span of the program. The significance of each evaluation is quantified based on the course evaluation plan and the design of the assessment tools. We also discuss the mechanics of implementing the proposed framework and the algorithm used to assess graduate attributes.

Finally, we remark on how this pedagogical quantification approach can serve as an effective tool for identifying teaching and learning gaps.

CEEA-ACÉG23; Paper 132

Okanagan College & UBC-Okanagan; June 18 – 21, 2023 – 1 of 7 – Peer reviewed

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.


2. PEDAGOGICAL APPROACH process is a critical to ensure the academic quality in the

Bachelor of Engineering (Electrical Engineering) program.

For our engineering program, we carefully defined Achieving these attributes ensure that graduates possess

indicators for each of the graduate attributes. This is done the professional skill set and technical competency

using pedagogical practices from backwards design [3], required to build a successful careers as future professional

constructive alignment [4], outcome-based learning [5][6], engineers and in return positively contribute to society.

student-centred learning. Best practices from the conceive, The purpose and rationale of the assessment of graduate

design, implement, operate (CDIO) standards are also attributes for the Bachelor of Engineering (Electrical

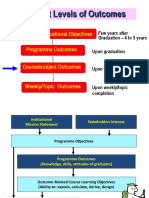

incorporated [7], [8]. Engineering) program is visualized in the diagram of Fig.

In this treatment, we also looked at different taxonomies 1. We should highlight that graduate attributes cannot be

of verbs to differentiate rubric descriptions with an measured directly through students’ learning activities and

engineering context. Beyond Bloom’s taxonomy, the regular evaluations. As a workaround, a set of measurable

structure of observed learning outcomes (SOLO) learning outcomes, called indicators, can be defined at the

taxonomy (see e.g., [9]) was particularly useful. It is used program level. These indicators cover various aspects and

to scaffold and show graduate attributes as a function of (i) qualities of the graduate attributes. The indicators are

different levels of learning (i.e., introduced, developed, or measured granularly for all pertinent learning outcomes

applied); (ii) semester and year of study; and (iii) for used to assess graduate attributes. The indicators are

diverse target audiences (i.e., self, team, project work, or assessed in various courses using a variety of tools,

for society at large). including, examinations, projects, case studies, laboratory

As part of our process, we did a comparative analysis of practice, peer-evaluations, and surveys.

what other engineering programs did to satisfy the CEAB

requirements. In particular, we examined the McGill [10] Inputs

indicators and assessment rubric and McMaster [11] • Engineering

Curriculum

indicators. Using our backwards design and student- • Evaluation Tools

centred approach, we choose to write our rubric using • Learning Outcomes

SOLO rather than Bloom and reading from left to right

1. Program-Specific 4. Continual

with an A+ in the first top quadrant on how to achieve 4. Internal and

Indications Improvement

External Quality

success towards a B developing and a C emerging skill. We Assurance

(Introductory,

Development, Applied)

also choose to use positive active voice language that a 3. Data Analysis of

student could pose a question about on how to move 3. Program

Assessment Results

towards success. Additionally, we examined the Ryerson Learning

2. Assessment of CEAB

Graduate Attributes

indicators [12]. Again, we choose to use our backwards Outcomes

3. Data Storage of

design and student-centred approach to write our indicators Assessment Results

from the accomplishing standard in 4th year down towards

the introductory standard in 1st year. Similar to our rubric,

Ryerson wrote all indicators in positive active voice Output

Requirements for

language. We feel that the essence of our indicators is in CEAB Accreditation

alignment with the other engineering programs.

Of course, it is not expected that students in first Fig. 1. Importance of measuring graduate attributes in the

semester have mastery of graduate attributes such as Bachelor of Engineering (Electrical Engineering) program.

design, problem analysis, or investigation. Likewise,

waiting until the end of a program to introduce ethics and

We defined program learning outcomes aligned with the

equity is professionally unsuitable. Ultimately, scaffolding

graduate attributes. Therefore, the assessment of the

with explicit criteria is essential for students’ success and

graduate attributes provides an accurate and sustainable

future.

evaluation of the program outcomes. The results of this

assessment can be used to improve academic quality within

3. RATIONALE FOR ASSESSING GRADUATE the program through internal and external quality

ATTRIBUTES assurance procedures.

The graduate attributes are defined by CEAB and the Furthermore, we analyze the results obtained from the

curriculum of the Bachelor of Engineering (Electrical assessment of graduate attributes, and when there is an

Engineering) program at Sheridan College has been insufficiency in students' performance on any of the

developed to support graduates in achieving these graduate attributes, we initiate the process of continual

outcomes. Assessment of the graduate attributes is improvement. This approach may lead to curriculum

essential to evaluate the effectiveness of the program in changes that are escalated to institutional processes and

achieving its high-level academic goals. This ongoing standards for implementing the change. The results also

support the revisions that may be required on selections of

assessment tools and in their application to evaluate students' learning activities.

Ultimately, the assessment results of indicators are aggregated to measure the graduate attributes. The assessment results of graduate attributes are then collected throughout the program to create a repository of the outcomes. This repository serves as evidence of the effectiveness and cohesion of the program curriculum. This procedure is an essential step for obtaining accreditation from CEAB.

4. FRAMEWORK FOR ASSESSING GRADUATE ATTRIBUTES

The evaluation of graduate attributes is performed using a variety of assessment tools and in the context of learning activities. Subject matter experts analyze the content of each course to identify the most relevant graduate attributes. For this process, a repository is created that contains all curriculum mappings. The repository also ensures that all graduate attributes are evaluated adequately throughout the program.

Fig. 2 describes the process of assessing graduate attributes. As noted earlier, graduate attributes are measured through the indicators at different levels of learning: introductory, developed, or applied/advanced. To determine the level of learning, we developed a rubric that examines three factors: the learning outcomes of courses, the level of scaffolding, and the year and semester in which the course is offered. The rubric provides consistency in allocating levels of learning to each course.

[Fig. 2 flowchart. 1. CEAB graduate attributes: knowledge base for engineering; problem analysis; investigation; engineering design; use of engineering tools; individual and teamwork; communication skills; professionalism; impact of engineering on society and the environment; ethics and equity; economics and project management; life-long learning. 2. Curriculum mapping of learning outcomes (LO) to graduate attributes, indicators mapping, and common skills rubric. 3. Identify courses for assessment (mathematics, natural sciences, engineering science, engineering design, complementary studies). 4. Assessment of indicators at different levels of learning (i.e., introduced, developed, and applied or advanced) using course evaluation tools and the common skills rubric (see Fig. 3). 5. Course-level assessment results of indicators for selected learning activities. 6. Aggregating the assessment results to evaluate indicators and graduate attributes.]
Fig. 2. Systematic algorithm of data aggregation to assess graduate attributes.

In our metric, each graduate attribute is represented by the variable GA. This variable is generally assessed over three to five courses. In the Bachelor of Engineering (Electrical Engineering) program, each graduate attribute is evaluated through three to five indicators. These indicators are represented by the variable $GA_i$, where $1 \le i \le N$ and $N$ is the number of indicators defined for a specific GA. The indicators are assessed using various evaluation tools, including exams, tests, and performance-based evaluations such as projects, laboratory assignments, and case studies.

The common skills rubric ensures a high degree of uniformity in performance-based evaluations. For our assessment process, the results are distributed across four different levels of learning, denoted by $L = 1, 2, 3,$ or $4$. Table 1 shows the grade range that defines each learning level.

Table 1: Levels of learning.

Level   Grade Range
L1      49% and below
L2      50% to 64%
L3      65% to 79%
L4      80% and above

The assessment tools used to evaluate the indicators are not equally significant, so their weights in the assessment process must be determined carefully. The evaluation plan of each course clearly defines how much each evaluation tool contributes to students' final grades. When evaluations assess multiple indicators, their weights are adjusted proportionally. For instance, suppose a final exam contributes 40% to the final grade of a course, and only 25% of the exam questions evaluate a specific indicator. In that case, the
weight of evaluation for this indicator through the final exam should be adjusted to (0.4 × 0.25 × 100) = 10%. In other words, the distribution of the assessment results for that specific indicator contributes to the overall assessment of the graduate attribute with a weight of 10%. Note also that in theoretical exams, the indicators are assessed at the question level. Students' grade distribution in the exam is therefore not necessarily equivalent to the assessment results of the associated indicators.

We begin aggregating the results once two conditions are met. First, we have obtained the assessment results for the various indicators of the graduate attribute GA. Second, we have determined the weight, or significance, of the assessment tool behind each result. Each indicator $GA_i$ can then be assessed by taking the weighted average of all $M$ assessment results. Note that $M$ may be large, since an indicator could be assessed several times across multiple courses and through various tools. The overall assessment of the indicator at level of learning $L$ can be calculated as follows:

$$GA_i(L) = \frac{\sum_{j=1}^{M} w_{i,j}\, n_j(L)}{\sum_{j=1}^{M} w_{i,j}} \qquad (1)$$

where $L$ is 1, 2, 3, or 4, and $1 \le i \le N$. In this equation, $n_j(L)$ represents the number of grades in level $L$ assessed through the $j$-th assessment, and $w_{i,j}$ is the weight of the $i$-th indicator for this assessment.

Indicators are defined at different pedagogical levels. Even so, all indicators make an equal contribution to the assessment of the associated graduate attribute, because together they cover the various aspects and qualities defined by that graduate attribute. Therefore, the overall assessment of a graduate attribute can be calculated as the equally weighted average of all its indicators:

$$GA(L) = \frac{1}{N} \sum_{i=1}^{N} GA_i(L) \qquad (2)$$

where $L$ is 1, 2, 3, or 4, and $N$ is the number of indicators defined for the graduate attribute GA. This method can easily be adjusted to evaluate different levels of content (introductory, developed, or applied/advanced). To do so, the weighted average in (1) is calculated only on the assessment results that are specific to a particular level of content.

5. ASSESSING THE INDICATORS THROUGH LEARNING ACTIVITIES

We developed two different mechanisms to assess the indicators. Instructors choose one of these mechanisms based on the assessment tools that are outlined and recommended for each course.

[Fig. 3 flowchart. Input: course evaluation tools. Decision: type of evaluation. Theoretical tests and exams: assign the grade of each question to the associated learning outcome or indicator. Performance-based projects and labs: customize the common-skills rubric, then assess the indicators using the customized rubric. Output: assessment results for all indicators assigned to a course.]
Fig. 3. Assessment of indicators through exams and performance-based evaluation.

For exams and theoretical tests, each question is mapped to a course learning outcome. In the Bachelor of Engineering (Electrical Engineering) program, the learning outcomes in each course have been mapped to indicators. The mark that students achieve on each question therefore represents their performance in the associated indicator.

For performance-based evaluations, on the other hand, the common skills rubric is used to assess the indicators directly. These evaluations include projects, case studies, and laboratory assignments, as well as non-traditional assessment tools such as peer evaluations or surveys. The common skills rubric is also recommended for assessing complementary skills such as communication, teamwork, and leadership.

When we unpack the significant skills that emerge from the graduate attribute indicators, we can develop a common skills rubric targeting these discrete skills. This tool makes the evaluation of skills consistent as they are scaffolded across the curriculum, from course to course and from year to year. The comprehensive rubric also serves as a reference for best academic practices, from which we can draw the criteria for various learning activities. Its student-centred language gives students constructive and actionable feedback that leads them toward academic success.

The rubric is designed to evaluate the indicators throughout the program and can be modified according to the requirements of each learning activity. Instructors can modify the rubric by removing the indicators that are not relevant and assigning numerical grades to each section of the evaluation. The rubric
can then evaluate the different criteria of a learning activity and assess the indicators quantitatively. The flowchart of Fig. 3 captures this process and describes a straightforward method for assessing indicators through various course evaluations.

6. IMPLEMENTING THE GRADUATE ATTRIBUTE ASSESSMENT PROCESS

To elaborate on the process, we discuss the assessment of the design graduate attribute in the sixth semester of the Bachelor of Engineering (Electrical Engineering) program at Sheridan College. This graduate attribute is mainly assessed in four courses:
• Embedded Application Development (ELEE-30893D)
• Electric Machines (ELEE-31139D)
• Control Systems (ELEE-31186D)
• Design of Digital Systems (ELEE-31436D)
In the evaluation plan of each course, we explicitly connect the assessment tools (e.g., project, midterm test, and final exam) to possible learning outcomes. This first mapping allowed us to build a comprehensive evaluation matrix for each course.

As a second mapping, we associated the learning outcomes of each course with appropriate indicators of related graduate attributes. By superimposing these two mappings, we were then able to clearly connect assessment tools to graduate attributes. The course documentation specifies all mappings between learning outcomes, evaluation tools, indicators, and graduate attributes.

At the program level, the design graduate attribute should be assessed through five indicators, defined as follows:

• DE.1: Define design requirements, specifications, and constraints.
• DE.2: Consider external factors, including environmental, social, and economic impacts as well as health and safety, in an engineering design.
• DE.3: Generate divergent solutions to a design problem.
• DE.4: Develop a refined design to implement an engineering project.
• DE.5: Evaluate the performance of an engineering design.

Fig. 4 depicts how these indicators map to the evaluation tools of the four courses. Note that the percentages on the chart specify the maximum amount of correlation between the indicators and the assessment tools. Instructors who assess graduate attributes may adjust the weights so that each is proportional to the significance of the indicator in students' overall learning.

[Fig. 4 chart: percentage correlation (y-axis, Percentage (%)) between design indicators DE1 to DE5 and the evaluations of each course (x-axis, Evaluations / B.Eng. Course). Courses: Embedded App. Dev. (ELEE-30893D), Electric Machines (ELEE-31139D), Control Systems (ELEE-31186D), Design of Digital Sys. (ELEE-31436D).]
Fig. 4. Evaluation components to assess the design graduate attribute in the sixth semester of the Bachelor of Engineering (Electrical Engineering) program.

For projects, the design indicators are best assessed through the common skills rubric. The rubric describes the different levels of learning, L1 to L4, for the design indicators DE.1 to DE.5. The descriptors are written in student-centred language and give students constructive feedback. To illustrate how our assessment methodology is implemented, Appendix A shows an excerpt of the common skills rubric that covers only the design graduate attribute.

Once the indicators have been assessed in these four courses, the design graduate attribute can be assessed through the algorithm discussed previously. In this case, Eq. (1) is applied with M = 22, because the indicators are assessed using 22 learning activities across these four courses.

7. CONCLUSION

We have developed a regimented procedure to assess the graduate attributes at both the course and program levels. The graduate attributes are assessed either by mapping learning outcomes to indicators or by using a comprehensive common skills rubric. We developed an algorithm that determines the weight of each assessment and evaluates the indicators and graduate attributes. The resulting procedure provides an accurate, sustainable, and adaptable solution for assessing graduate attributes for program accreditation, continual improvement, and educational quality control. The proposed algorithm reveals explicit, quantitative data for informed decision making. It is also instrumental in identifying teaching and learning gaps that can be explored and revisited through focus groups, ultimately improving students' learning experience.
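The algorithm summarized in the conclusion can be made concrete with a short script. The Python sketch below is a minimal illustration under stated assumptions, not the authors' implementation. The function names, the `(weight, grades)` input layout, and the example grades are hypothetical. Grades strictly between 49% and 50%, which Table 1 does not cover explicitly, are assumed to fall in L1. The script bins grades into the four levels of Table 1 to obtain $n_j(L)$, then forms indicator weights with the final-exam rule from Section 4 (0.4 × 0.25). Finally, it applies Eqs. (1) and (2).

```python
# Minimal sketch of the aggregation in Eqs. (1) and (2).
# Assumptions (not from the paper): the function names, the (weight, grades)
# input layout, and treating every grade below 50% as level L1.

LEVELS = (1, 2, 3, 4)


def learning_level(grade: float) -> int:
    """Map a percentage grade to level L according to Table 1."""
    if grade >= 80:
        return 4
    if grade >= 65:
        return 3
    if grade >= 50:
        return 2
    return 1  # "49% and below"; 49 < grade < 50 is assumed to be L1 as well


def indicator_weight(tool_share: float, indicator_share: float) -> float:
    """Weight of one evaluation tool for one indicator, e.g. 0.40 * 0.25 = 0.10."""
    return tool_share * indicator_share


def level_counts(grades: list[float]) -> dict[int, int]:
    """n_j(L): number of grades in each level L for one assessment j."""
    counts = {level: 0 for level in LEVELS}
    for grade in grades:
        counts[learning_level(grade)] += 1
    return counts


def indicator_result(assessments: list[tuple[float, list[float]]]) -> dict[int, float]:
    """Eq. (1): GA_i(L) = sum_j w_ij * n_j(L) / sum_j w_ij over the M assessments."""
    total_weight = sum(weight for weight, _ in assessments)
    if total_weight <= 0:
        raise ValueError("an indicator needs at least one assessment with positive weight")
    binned = [(weight, level_counts(grades)) for weight, grades in assessments]
    return {
        level: sum(weight * counts[level] for weight, counts in binned) / total_weight
        for level in LEVELS
    }


def attribute_result(indicator_results: list[dict[int, float]]) -> dict[int, float]:
    """Eq. (2): GA(L) = (1/N) * sum_i GA_i(L), with all N indicators weighted equally."""
    n = len(indicator_results)
    return {level: sum(result[level] for result in indicator_results) / n for level in LEVELS}


if __name__ == "__main__":
    # Hypothetical grades (%) for two indicators of one graduate attribute.
    exam_w = indicator_weight(0.40, 0.25)    # final exam: 40% of course, 25% of questions
    project_w = indicator_weight(0.30, 1.0)  # hypothetical project: 30% of course, all on this indicator
    de1 = indicator_result([(exam_w, [85, 70, 55, 40]), (project_w, [90, 82, 67, 52])])
    de2 = indicator_result([(project_w, [78, 81, 45, 66])])
    print("GA_1(L):", de1)
    print("GA(L):", attribute_result([de1, de2]))
```

Eq. (1) divides by the sum of the weights, so weights expressed as fractions (0.10) or as percentages (10) produce the same $GA_i(L)$. As defined in (1), each $GA_i(L)$ is a weighted count of grades in level $L$.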


Acknowledgements

This pedagogical research is supported by the School of Mechanical and Electrical Engineering Technology, Faculty of Applied Science and Technology, and the Centre for Academic Excellence at Sheridan College.

References

[1] Engineers Canada, "Accreditation Criteria Procedures," Canadian Engineering Accreditation Board, 2022. [Online]. Available: https://engineerscanada.ca/sites/default/files/2022-11/Accreditation_Criteria_Procedures_2022.pdf

[2] EGAD Project, "Step 2: Mapping Indicators to the Curriculum," Engineering Graduate Attribute Development (EGAD) Project. Accessed: Mar. 23, 2023. [Online]. Available: https://egad.engineering.queensu.ca

[3] G. P. Wiggins and J. McTighe, Understanding by Design. Alexandria, VA: Association for Supervision and Curriculum Development (ASCD), 2005.

[4] J. B. Biggs and C. S. Tang, Teaching for Quality Learning at University: What the Student Does. New York, NY: Open University Press, McGraw-Hill, 2011.

[5] W. G. Spady, Outcome-Based Education: Critical Issues and Answers. Arlington, VA: American Association of School Administrators, 1994.

[6] R. F. Mager, Preparing Instructional Objectives. Belmont, CA: Fearon, 1984.

[7] M. Abdulla, Z. Motamedi, and A. Majeed, "Redesigning Telecommunication Engineering Courses with CDIO geared for Polytechnic Education," in Proc. of the 10th Conference on Canadian Eng. Education Association (CEEA'19), Ottawa, ON, Canada, Jun. 9-12, 2019, pp. 1-5. DOI: 10.24908/PCEEA.VI0.13855

[8] E. F. Crawley, J. Malmqvist, S. Ostlund, D. R. Brodeur, and K. Edström, Rethinking Engineering Education: The CDIO Approach. New York, NY: Springer, 2014.

[9] J. B. Biggs and K. F. Collis, Evaluating the Quality of Learning: The SOLO Taxonomy (Structure of the Observed Learning Outcome). New York, NY: Academic Press, 1982.

[10] McGill Faculty of Engineering, "Graduate Attributes Key Documents," McGill University, Sep. 28, 2017. [Online]. Available: https://www.mcgill.ca/engineering/files/engineering/rubrics_0.pdf

[11] McMaster Faculty of Engineering, "Graduate Attribute Indicators," McMaster University, 2017. [Online]. Available: http://mechfaculty.mcmaster.ca/~lightsm/gradatt/

[12] L. Fang, "CEAB Graduate Attribute Assessment at Ryerson University," Ryerson University, Dec. 2014. [Online]. Available: https://egad.engineering.queensu.ca/wp-content/uploads/2018/07/Ryerson-CEAB-Graduate-Attributes.pdf


Appendix A: COMMON SKILLS RUBRIC FOR GRADUATE ATTRIBUTE OF DESIGN

Graduate attribute (GA): Design. Evaluation levels: Level 4 (80-100%), Level 3 (65-79%), Level 2 (50-64%), Level 1 (49% and below).

DE.1 Define design requirements, specifications, and constraints.
• Level 4: Processes the design assignment as an open-ended problem with possible solutions. Connects the solution and constraints.
• Level 3: Analyzes the problem and provides an accurate list of design requirements.
• Level 2: Explains the design requirements. Needs to address the constraints.
• Level 1: Needs to identify the design requirements and constraints.

DE.2 Consider external factors, including environmental, social, and economic impacts as well as health and safety, in an engineering design.
• Level 4: Considers all external factors, including environmental, social, and economic impacts, health, and safety, in the engineering design. Prioritizes the contributing factors.
• Level 3: Considers most external factors, including environmental, social, and economic impacts, health, and safety, in the engineering design. Lists the contributing factors.
• Level 2: Considers some external factors, including environmental, social, and economic impacts, health, and safety, in the engineering design.
• Level 1: Needs to consider the effect of external factors in the engineering design. Needs to evaluate the importance of each factor and clearly prioritize the factors.

DE.3 Generate divergent solutions to a design problem.
• Level 4: Generates multiple design alternatives that address the design problem.
• Level 3: Presents some design solutions that address some of the key design requirements.
• Level 2: Needs to present a complete design solution.
• Level 1: Needs to study the design problem and identify multiple solutions considering the constraints.

DE.4 Develop a refined design to implement an engineering project.
• Level 4: Evaluates several design alternatives. Chooses the solution that meets the design requirements and constraints. Modifies the chosen solution following a systematic approach.
• Level 3: Develops one solution that meets the design requirements and constraints.
• Level 2: Develops one solution that meets some of the design requirements.
• Level 1: Needs to consider the design requirements and constraints.

DE.5 Evaluate the performance of an engineering design.
• Level 4: Evaluates the performance of the design accurately. Addresses the practicality of the solutions.
• Level 3: Validates the design by comparing the performance of the solution with the design requirements. Connects the discrepancies to the design constraints.
• Level 2: Assesses the performance of the solution with general statements. Needs to base the statements on measurements or analysis results.
• Level 1: Needs to evaluate the design solution.
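For performance-based activities, Section 5 describes customizing this rubric by removing the indicators an activity does not assess. The rubric then assesses the remaining indicators directly (Fig. 3). The minimal Python sketch below illustrates that step under stated assumptions. Each student is assumed to receive one rubric level (1 to 4) per kept indicator. The function names, the data layout, and the ratings are hypothetical and not part of the published rubric. Counting how many students fall in each level gives, for that activity, the $n_j(L)$ values that feed Eq. (1).

```python
from collections import Counter

# Hypothetical sketch: the indicator codes come from Appendix A; the data layout
# (one level per student per indicator) and the function names are assumptions.
DESIGN_INDICATORS = ("DE.1", "DE.2", "DE.3", "DE.4", "DE.5")
LEVELS = (1, 2, 3, 4)


def customize_rubric(relevant: set[str]) -> tuple[str, ...]:
    """Remove the indicators an activity does not assess, keeping rubric order."""
    unknown = relevant - set(DESIGN_INDICATORS)
    if unknown:
        raise ValueError(f"not a design indicator: {sorted(unknown)}")
    return tuple(ind for ind in DESIGN_INDICATORS if ind in relevant)


def tally_levels(rubric: tuple[str, ...], ratings: list[dict[str, int]]) -> dict[str, dict[int, int]]:
    """Count, for each kept indicator, how many students were rated at each level.

    For one learning activity j, these counts are the n_j(L) values of Eq. (1).
    """
    tallies = {}
    for ind in rubric:
        counts = Counter(student[ind] for student in ratings)
        if set(counts) - set(LEVELS):
            raise ValueError(f"{ind}: every rating must be a level from 1 to 4")
        tallies[ind] = {level: counts[level] for level in LEVELS}
    return tallies


# Hypothetical project that assesses only DE.1, DE.3, and DE.4, rated for three students.
project_rubric = customize_rubric({"DE.1", "DE.3", "DE.4"})
ratings = [
    {"DE.1": 4, "DE.3": 3, "DE.4": 3},
    {"DE.1": 2, "DE.3": 3, "DE.4": 1},
    {"DE.1": 4, "DE.3": 4, "DE.4": 3},
]
print(tally_levels(project_rubric, ratings))
```

Each tally can be paired with the activity's weight $w_{i,j}$ as one term of Eq. (1). How a rubric level becomes a numerical grade for the course mark is left to the instructor, as Section 5 describes.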


- Engineering final year project assessment practicesDocument8 pagesEngineering final year project assessment practicesVNM CARLONo ratings yet

- Accreditation CVF 2021Document20 pagesAccreditation CVF 2021Umair KhadimNo ratings yet

- ABET Accreditation EssentialsDocument51 pagesABET Accreditation EssentialshameeeeNo ratings yet

- 10 5923 J Edu 20180803 02Document6 pages10 5923 J Edu 20180803 02Mian AsimNo ratings yet

- Ug Curriculum Booklet 2017Document170 pagesUg Curriculum Booklet 2017Kashish GoelNo ratings yet

- Designing and Teaching Courses To Satisfy The ABETDocument20 pagesDesigning and Teaching Courses To Satisfy The ABETIwanConySNo ratings yet

- NATE Module 1 - Week1 PDFDocument21 pagesNATE Module 1 - Week1 PDFhasmit prajapatiNo ratings yet

- NATE Module 1 - Week1Document21 pagesNATE Module 1 - Week1hasmit prajapatiNo ratings yet

- Implementing An Outcomes-Based Quality Assurance Process For Program Level Assessment at DLSU Gokongwei College of EngineeringDocument6 pagesImplementing An Outcomes-Based Quality Assurance Process For Program Level Assessment at DLSU Gokongwei College of Engineeringandyoreta6332No ratings yet

- Criterion 2 MechDocument48 pagesCriterion 2 MechlokeshwarrvrjcNo ratings yet

- Abet Assessment OverviewDocument12 pagesAbet Assessment OverviewDr. Muhammad Imran Majid / Associate ProfessorNo ratings yet

- Ece Assessment ProcessDocument29 pagesEce Assessment ProcesspragatinareshNo ratings yet

- Abet Eac Criteria 2011 2012Document26 pagesAbet Eac Criteria 2011 2012Acreditacion Fic UniNo ratings yet

- XCDocument10 pagesXCKarthiKeyanNo ratings yet

- Accredited CSE Department with Top ProgramsDocument62 pagesAccredited CSE Department with Top ProgramsGopal KrishnaNo ratings yet

- Prof C R Muthukrishnannba Obe Awareness 16jan23Document122 pagesProf C R Muthukrishnannba Obe Awareness 16jan23SaidronaNo ratings yet

- Establishing Fair Objectives and Grading Criteria ForDocument18 pagesEstablishing Fair Objectives and Grading Criteria ForPraful KakdeNo ratings yet

- Evaluasi 1Document10 pagesEvaluasi 1Candra IrawanNo ratings yet

- ToT On Curriculum Review For Program Accreditation Pptslides4traineesDocument127 pagesToT On Curriculum Review For Program Accreditation Pptslides4traineeskuba DefaruNo ratings yet

- Introduction of ABET To CE 203Document45 pagesIntroduction of ABET To CE 203Saciid LaafaNo ratings yet

- Criterion 1&2Document95 pagesCriterion 1&2Haider ALHussainyNo ratings yet

- NBA's Outcome Based EducationDocument15 pagesNBA's Outcome Based EducationarravindNo ratings yet

- Accreditation Workshop: Mechanical Engineering Preparation For The Accreditation ProcessDocument25 pagesAccreditation Workshop: Mechanical Engineering Preparation For The Accreditation ProcessKrishna MurthyNo ratings yet

- Key Components of OBE and AccreditationDocument61 pagesKey Components of OBE and Accreditationpvs-1983No ratings yet

- PPT's C R Muthukrishnan NBA-AWARENESS WEBINAR-Outcomes-Assessments-Continuous ImprovementDocument87 pagesPPT's C R Muthukrishnan NBA-AWARENESS WEBINAR-Outcomes-Assessments-Continuous ImprovementAritra ChowdhuryNo ratings yet

- Outcome Based Education Curriculum in Polytechnic Diploma ProgrammesDocument42 pagesOutcome Based Education Curriculum in Polytechnic Diploma ProgrammesSanthosh KumarNo ratings yet

- BME CourseSchem2019Document134 pagesBME CourseSchem2019kavindra singhNo ratings yet

- Final Year ProjectsDocument17 pagesFinal Year ProjectsAlia KhanNo ratings yet

- MechanicalEngineering BachDocument9 pagesMechanicalEngineering BachLLNo ratings yet

- Assessing Students Prior Knowledge and Learning in An Engineering Management Course For Civil EngineersDocument13 pagesAssessing Students Prior Knowledge and Learning in An Engineering Management Course For Civil EngineersDebora DujongNo ratings yet

- Analysis of Graduates Performance Based On Programme Educational Objective Assessment For An Electrical Engineering DegreeDocument7 pagesAnalysis of Graduates Performance Based On Programme Educational Objective Assessment For An Electrical Engineering DegreeHtet lin AgNo ratings yet

- 2017 2018 - Man 2103Document4 pages2017 2018 - Man 2103parminderNo ratings yet

- Assessment Guidelines March09Document35 pagesAssessment Guidelines March09Ivana Ćirković MiladinovićNo ratings yet

- Department of Computer Science and Engineering ABET Status - ReviewDocument28 pagesDepartment of Computer Science and Engineering ABET Status - Reviewkhaleel_anwar2000No ratings yet

- DOK Question StemsDocument1 pageDOK Question StemsNightwing100% (1)

- Dok WheelDocument1 pageDok Wheelapi-249854100100% (1)

- CYC Case Study For Common Skill RubricsDocument24 pagesCYC Case Study For Common Skill Rubricsapi-256647308No ratings yet

- Lab Report Rubric Electrical EngineeringDocument3 pagesLab Report Rubric Electrical Engineeringapi-256647308No ratings yet

- Introduction To Biomedical Engineering DesignDocument17 pagesIntroduction To Biomedical Engineering DesignEmad TalebNo ratings yet

- Design For Electrical and Computer EngineersDocument323 pagesDesign For Electrical and Computer EngineersKyle Chang67% (3)

- Chapter 8 Engineering Ethics (Risk, Safety, and Accidents)Document26 pagesChapter 8 Engineering Ethics (Risk, Safety, and Accidents)Shashitharan PonnambalanNo ratings yet

- NBM No. 125 - A - Submission of Revised Budget (BP) Forms 202 and 203Document16 pagesNBM No. 125 - A - Submission of Revised Budget (BP) Forms 202 and 203Philip JameroNo ratings yet

- EGR 3713 Syllabus Part A+BDocument4 pagesEGR 3713 Syllabus Part A+BSarah-RuthNo ratings yet

- CH 7Document43 pagesCH 7Hossam AliNo ratings yet

- ResumeDocument1 pageResumeapi-289204640No ratings yet

- HospitalDocument12 pagesHospitalMohammad Ilham AkbarNo ratings yet

- Unit 1 Introduction To Engineering EconomyDocument7 pagesUnit 1 Introduction To Engineering EconomyJoshua John JulioNo ratings yet

- Draft ISO 21457Document21 pagesDraft ISO 21457Hendra YudistiraNo ratings yet

- Chapter 1Document72 pagesChapter 1Thanhthung DinhNo ratings yet

- 1-Plant Design - BasicsDocument7 pages1-Plant Design - BasicsAbdullahNo ratings yet

- Engineering Exploration: First Year Engineering (All) 2018-19 Maharashtra Institute of Technology AurangabadDocument66 pagesEngineering Exploration: First Year Engineering (All) 2018-19 Maharashtra Institute of Technology Aurangabadatharva gaikwadNo ratings yet

- Bentley - SAP - Oil-Gas PDFDocument9 pagesBentley - SAP - Oil-Gas PDFRahul Raj KuriariNo ratings yet

- Engineering Execution PlanDocument23 pagesEngineering Execution Planari zeinNo ratings yet

- CEN Engineering Tech Provider and Supply Equipment ProcessDocument13 pagesCEN Engineering Tech Provider and Supply Equipment ProcessPrasad RamanNo ratings yet

- 4 Smart Plant ConstructionDocument7 pages4 Smart Plant ConstructionAldo RaquitaNo ratings yet

- Senior Electrical EngineerDocument2 pagesSenior Electrical EngineerReza Novianti PasandeNo ratings yet

- Presentation of Electronics-and-Computer-Engineering 2023Document41 pagesPresentation of Electronics-and-Computer-Engineering 2023Aron DionisiusNo ratings yet

- ch8 Compound Machine Design ProjectDocument10 pagesch8 Compound Machine Design Projectapi-272055202No ratings yet

- Conceptions of The Engineering Design Process: An Expert Study of Advanced Practicing ProfessionalsDocument30 pagesConceptions of The Engineering Design Process: An Expert Study of Advanced Practicing ProfessionalsEinNo ratings yet

- EML 4501 SyllabusDocument4 pagesEML 4501 SyllabusShyam Ramanath ThillainathanNo ratings yet

- Nasir CV - Senior Project EngineerDocument4 pagesNasir CV - Senior Project EngineerSyed Ñąveed Hąįdeŕ33% (3)

- ENGINEERING DESIGN GUILDLINES Plant Cost Estimating Rev1.2webDocument23 pagesENGINEERING DESIGN GUILDLINES Plant Cost Estimating Rev1.2webfoxmancementNo ratings yet

- Eme ReportDocument9 pagesEme ReportMegha NeelgarNo ratings yet

- Spe 102210 MSDocument9 pagesSpe 102210 MSmohamedabbas_us3813No ratings yet

- Engineering Design Guideline-Relief Valves - Rev 02Document30 pagesEngineering Design Guideline-Relief Valves - Rev 02Pilar Ruiz RamirezNo ratings yet

- REOI Announcement (PRMC Dan DED Mamminasa Dan Kedungsepur)Document2 pagesREOI Announcement (PRMC Dan DED Mamminasa Dan Kedungsepur)김현수No ratings yet

- Reverse Engineering and Redesign Methodology for Product EvolutionDocument26 pagesReverse Engineering and Redesign Methodology for Product EvolutionJefMurNo ratings yet

- Company Profile - DTS Engineering V3.18.09.1 The BreezeDocument15 pagesCompany Profile - DTS Engineering V3.18.09.1 The BreezeJuragan IwalNo ratings yet