ML ND Stat

Uploaded by Gowsikkan


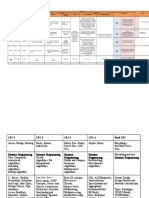

Descriptive Statistics:

- Measures of central tendency (mean, median, mode)
- Measures of variability (variance, standard deviation, range)
- Percentiles and quartiles
- Skewness and kurtosis

Probability Theory:

- Basic probability concepts
- Conditional probability
- Bayes' theorem
- Random variables and probability distributions (discrete and continuous)
- Joint and marginal probability distributions
- Expected value and variance

Statistical Inference:

- Sampling techniques (random sampling, stratified sampling, etc.)
- Hypothesis testing
- Type I and Type II errors
- Confidence intervals
- t-tests and z-tests
- Chi-square tests
- Analysis of variance (ANOVA)

Regression Analysis:

- Simple linear regression
- Multiple linear regression
- Assumptions of linear regression
- Model evaluation and diagnostics (R-squared, adjusted R-squared, residual analysis)
- Logistic regression (binary and multinomial)

Experimental Design:

- Control and treatment groups
- Randomized controlled trials (RCTs)
- Factorial designs
- Blocking and randomization
- Analysis of variance (ANOVA)

Time Series Analysis:

- Trend analysis
- Seasonality and cyclicity
- Autocorrelation and partial autocorrelation
- Stationarity and differencing
- ARIMA models

Multivariate Analysis:

- Principal Component Analysis (PCA)
- Factor Analysis
- Cluster Analysis (k-means, hierarchical clustering)
- Discriminant Analysis

Bayesian Statistics:

- Bayesian inference
- Prior and posterior distributions
- Markov Chain Monte Carlo (MCMC) methods

Non-parametric Methods:

- Mann-Whitney U test
- Wilcoxon signed-rank test
- Kruskal-Wallis test
- Non-parametric correlation (Spearman's rank correlation)

Resampling Methods:

- Bootstrapping
- Cross-validation

Data Visualization:

- Histograms
- Box plots
- Scatter plots
- Bar charts
- Heatmaps
- Time series plots

Statistical Software:

- Familiarity with statistical programming languages (R or Python)
- Experience with statistical libraries and packages (e.g., pandas, NumPy, scikit-learn)

Supervised Learning:

- Linear Regression
- Logistic Regression
- Decision Trees
- Random Forests
- Support Vector Machines (SVM)
- Naive Bayes
- k-Nearest Neighbors (k-NN)
- Gradient Boosting (e.g., XGBoost, LightGBM)

Unsupervised Learning:

- Clustering (k-means, hierarchical clustering, DBSCAN)
- Dimensionality Reduction (Principal Component Analysis - PCA, t-SNE)
- Anomaly Detection
- Association Rules

Evaluation Metrics:

- Accuracy, Precision, Recall, F1-score
- ROC curve and AUC
- Confusion Matrix
- Mean Squared Error (MSE), Root Mean Squared Error (RMSE)
- R-squared, Adjusted R-squared

Cross-Validation and Model Selection:

- k-fold Cross-Validation
- Hyperparameter Tuning
- Grid Search
- Model Selection Techniques (e.g., nested cross-validation)

Regularization:

- L1 and L2 Regularization (Lasso and Ridge Regression)
- Elastic Net
- Early Stopping

Feature Engineering:

- Handling Missing Data
- Feature Scaling
- One-Hot Encoding
- Feature Selection Techniques
- Feature Extraction (e.g., PCA)

Ensemble Learning:

- Bagging
- Boosting
- Stacking
- Voting Classifiers

Handling Imbalanced Data:

- Undersampling
- Oversampling
- SMOTE (Synthetic Minority Over-sampling Technique)
- Evaluation metrics for imbalanced data (e.g., precision-recall curve)

Reinforcement Learning (basics):

- Markov Decision Processes (MDPs)
- Q-Learning
- Deep Q-Network (DQN)

Reinforcement Learning (advanced):

- Policy Gradients
- Actor-Critic methods
- Proximal Policy Optimization (PPO)
- Deep Deterministic Policy Gradient (DDPG)

Model Interpretability:

- Feature Importance
- Partial Dependence Plots
- LIME (Local Interpretable Model-Agnostic Explanations)
- SHAP (SHapley Additive exPlanations)

You might also like

- Dmaic ToolkitDocument8 pagesDmaic ToolkitHerlander FaloNo ratings yet

- Intro To Anomal Detection With Opencv, Computer Vision, and Scikit-LearnDocument38 pagesIntro To Anomal Detection With Opencv, Computer Vision, and Scikit-Learn超揚林No ratings yet

- Cheatsheet Recurrent Neural NetworksDocument5 pagesCheatsheet Recurrent Neural NetworkscidsantNo ratings yet

- ML-DL Training CurriculumDocument2 pagesML-DL Training CurriculumShubhendra vatsaNo ratings yet

- Updated CPC ScheduleDocument1 pageUpdated CPC ScheduleAzim CoolNo ratings yet

- Fundamental Topics in Statistics 1708250861Document4 pagesFundamental Topics in Statistics 1708250861benkhelfa.oNo ratings yet

- L5 Par EsteightDocument10 pagesL5 Par EsteightJens RydNo ratings yet

- Psychology Statistic NoteDocument13 pagesPsychology Statistic Notechinmengen0928No ratings yet

- Machine LearningDocument1 pageMachine LearningaymanmabdelsalamNo ratings yet

- Course Description PDFDocument5 pagesCourse Description PDFrogermaximoffNo ratings yet

- HMX7001 Analysis of Data Using SPSS - Advanced LevelDocument97 pagesHMX7001 Analysis of Data Using SPSS - Advanced LevelLim Kok PingNo ratings yet

- BSCM-04 Estimation of Distribution AlgorithmsDocument9 pagesBSCM-04 Estimation of Distribution Algorithmsscribd.stoplight591No ratings yet

- BAAIDocument4 pagesBAAIShreenidhi M RNo ratings yet

- Data Science I: Lesson #01 - Outline PresentationDocument20 pagesData Science I: Lesson #01 - Outline PresentationalesyNo ratings yet

- Chem 2021 CPC ScheduleDocument2 pagesChem 2021 CPC ScheduleMaitraeyan kumarNo ratings yet

- Data Scientist 1Document96 pagesData Scientist 1Farah DibaNo ratings yet

- Visual Guide To Machine LearningDocument349 pagesVisual Guide To Machine LearningMichelle SaverNo ratings yet

- SylDocument3 pagesSyllokesh kNo ratings yet

- Dmaic Tool KitDocument14 pagesDmaic Tool KitErik Leonel LucianoNo ratings yet

- Syllabus Data Analytics MCA-206Document3 pagesSyllabus Data Analytics MCA-206VISHAL KUMAR SHAWNo ratings yet

- Codigos para STATADocument9 pagesCodigos para STATAPilar CarterNo ratings yet

- ML 2Document3 pagesML 2suresh reddyNo ratings yet

- PGP-AIML Curriculum - Great LakesDocument43 pagesPGP-AIML Curriculum - Great LakesArnabNo ratings yet

- Crash Course in Analytics For Non Analytics ManagersDocument74 pagesCrash Course in Analytics For Non Analytics ManagersUzair FaruqiNo ratings yet

- Research Designs Non Probability Sampling Probability Sampling Sampling Plan Analysis in Research ProcessingDocument2 pagesResearch Designs Non Probability Sampling Probability Sampling Sampling Plan Analysis in Research ProcessingSujith DeepakNo ratings yet

- SlidesDocument51 pagesSlidesGrace GaoNo ratings yet

- Notes Mathematics in The Modern WorldDocument28 pagesNotes Mathematics in The Modern WorldICE ADRIENNE OCAMPO100% (1)

- Data Science & Machine Learning 2024Document2 pagesData Science & Machine Learning 2024Maham noorNo ratings yet

- Base PaperDocument12 pagesBase PaperRoshan JainNo ratings yet

- Dmaic ToolkitDocument8 pagesDmaic ToolkitKrisdaryadiHadisubrotoNo ratings yet

- StatsDocument2 pagesStatstowhidalam232No ratings yet

- WIP - ML-22-DEC WeekendDocument40 pagesWIP - ML-22-DEC Weekendkumarswamy gorrepatiNo ratings yet

- Stata Glossary and Index: Release 16Document268 pagesStata Glossary and Index: Release 16Antonio CastroNo ratings yet

- Crisp DM Framework: Data Mining Tasks: Description Estimation Prediction Classification Clustering AssociationDocument6 pagesCrisp DM Framework: Data Mining Tasks: Description Estimation Prediction Classification Clustering AssociationUTKARSH PABALENo ratings yet

- ProMSA FeatureSheetDocument3 pagesProMSA FeatureSheetGANESH GNo ratings yet

- Ae5b PDFDocument61 pagesAe5b PDFKarimNo ratings yet

- Pengantar Spatial Machine LearningDocument83 pagesPengantar Spatial Machine LearningDimasNo ratings yet

- Exploratory Data AnalysisDocument209 pagesExploratory Data AnalysisChaitanya Krishna DeepakNo ratings yet

- Effective Problem SolvingDocument1 pageEffective Problem Solvingmuthuswamy77No ratings yet

- Performance Analysis of SVM With Quadratic Kernel and Logistic Regression in Classification of Wild AnimalsDocument19 pagesPerformance Analysis of SVM With Quadratic Kernel and Logistic Regression in Classification of Wild AnimalsSuhas GowdaNo ratings yet

- Combined Subject Table of ContentsDocument49 pagesCombined Subject Table of ContentsDanielaLlanaNo ratings yet

- IT6006-Data Analytics Department of CSE 2018-2019Document193 pagesIT6006-Data Analytics Department of CSE 2018-2019slogeshwariNo ratings yet

- PR - L1-Introduction To Pattern Recognition PDFDocument20 pagesPR - L1-Introduction To Pattern Recognition PDFcooldoubtlessNo ratings yet

- Regresion Con StataDocument20 pagesRegresion Con StataMirko VelascoNo ratings yet

- Dmitry GrapovDocument41 pagesDmitry GrapovG Nathan JdNo ratings yet

- Data Science Knowledge Mapping Form54153Document3 pagesData Science Knowledge Mapping Form54153MossyNo ratings yet

- Research Method and MaterialsDocument1 pageResearch Method and MaterialsTeshome AR RobNo ratings yet

- Short Details of Business Analyst CourseDocument4 pagesShort Details of Business Analyst CourseAakash YadavNo ratings yet

- SauDocument2 pagesSauSharmaDeepNo ratings yet

- Bayesian Note Part 1Document14 pagesBayesian Note Part 1meisam hejaziniaNo ratings yet

- Becoming A Data ScientistDocument4 pagesBecoming A Data ScientistJohnNo ratings yet

- Session 1 INtroduction To Data AnalyticsDocument16 pagesSession 1 INtroduction To Data AnalyticsVarun KashyapNo ratings yet

- Data Analysis:: Quantitative and QualitativeDocument73 pagesData Analysis:: Quantitative and QualitativeChu WanNo ratings yet

- Ineuron 12mnthsDocument26 pagesIneuron 12mnthsSamgam StudioNo ratings yet

- CS395T - Computational Statistics With Application To BioinformaticsDocument5 pagesCS395T - Computational Statistics With Application To Bioinformaticspanna1No ratings yet

- Example of Supervised Learning AlgorithmsDocument5 pagesExample of Supervised Learning AlgorithmsSuvendu Sekhar SahooNo ratings yet

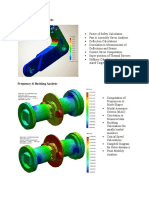

- Linear Static Stress AnalysisDocument10 pagesLinear Static Stress Analysiskrishbalu17No ratings yet

- Data Science - 240 HrsDocument2 pagesData Science - 240 HrsAsh talksNo ratings yet

- Data MiningDocument21 pagesData Miningmohamedelgohary679No ratings yet

- Math For ML MasteryDocument7 pagesMath For ML MasteryVarun GehlotNo ratings yet

- RS. 1,20,000 Course Outline PG in Data Science: A. Business Analytics Subject ContentDocument13 pagesRS. 1,20,000 Course Outline PG in Data Science: A. Business Analytics Subject ContentShadab khanNo ratings yet

- Techniques in Wildlife Investigations: Design and Analysis of Capture DataFrom EverandTechniques in Wildlife Investigations: Design and Analysis of Capture DataRating: 5 out of 5 stars5/5 (1)

- Global Certificate in Data ScienceDocument11 pagesGlobal Certificate in Data ScienceAkshay SehgalNo ratings yet

- 2 UOLO - Automatic Object Detection and SegmentationDocument8 pages2 UOLO - Automatic Object Detection and SegmentationSUMOD SUNDARNo ratings yet

- Employee Culling Based On Online Work Assessment Through Machine Learning AlgorithmDocument6 pagesEmployee Culling Based On Online Work Assessment Through Machine Learning AlgorithmKredwanNo ratings yet

- NN Lab Course PlanDocument9 pagesNN Lab Course PlanHarsh MishraNo ratings yet

- Final Report Spam Mail Detection 34Document50 pagesFinal Report Spam Mail Detection 34ASWIN MANOJNo ratings yet

- Data Science in PracticeDocument199 pagesData Science in PracticeKharlzg100% (8)

- Vol 1.2 Online Issn - 0975-4172 Print Issn - 0975-4350 September 2009Document231 pagesVol 1.2 Online Issn - 0975-4172 Print Issn - 0975-4350 September 2009Frank VijayNo ratings yet

- On-The - y Active Learning of Interpretable Bayesian Force Fields For Atomistic Rare EventsDocument11 pagesOn-The - y Active Learning of Interpretable Bayesian Force Fields For Atomistic Rare EventsDarnishNo ratings yet

- Business Analytics Sample SOPDocument2 pagesBusiness Analytics Sample SOPM.Sowndhara KumarNo ratings yet

- FSD Ce2108Document274 pagesFSD Ce2108tataxpNo ratings yet

- AI Lec 5Document37 pagesAI Lec 5Shafaq KhanNo ratings yet

- Predictive Data Mining and Discovering Hidden Values of Data WarehouseDocument5 pagesPredictive Data Mining and Discovering Hidden Values of Data WarehouseLangit Merah Di SelatanNo ratings yet

- Artificial Intelligence: Binary Classifiers For Multi-Class Classification ProblemsDocument12 pagesArtificial Intelligence: Binary Classifiers For Multi-Class Classification ProblemsThành Cao ĐứcNo ratings yet

- ML Unit 2Document39 pagesML Unit 2Sristi Jaya MangalaNo ratings yet

- Machine Learning: Dr. Muhammad AsadullahDocument69 pagesMachine Learning: Dr. Muhammad AsadullahSyed Kamran Ahmad1No ratings yet

- ML Lab ManualDocument37 pagesML Lab Manualapekshapandekar01100% (1)

- 3D Reconstruction of Human Body Via Machine Learning Qi HeDocument59 pages3D Reconstruction of Human Body Via Machine Learning Qi Hehithyshi dcNo ratings yet

- Executive PG Programme in Data ScienceDocument33 pagesExecutive PG Programme in Data ScienceSarat SahooNo ratings yet

- Whisper OpenaiDocument28 pagesWhisper OpenaiChris HeNo ratings yet

- Plotting Decision Regions - 1 - MlxtendDocument5 pagesPlotting Decision Regions - 1 - Mlxtendakhi016733No ratings yet

- Startup Ecosystem India Incubators Accelerators 23 01 2019Document95 pagesStartup Ecosystem India Incubators Accelerators 23 01 2019joshniNo ratings yet

- The Impact of Artificial Intelligence On Software DevelopmentDocument3 pagesThe Impact of Artificial Intelligence On Software Developmentdorothy mutahiNo ratings yet

- Internpedia 2020: DisclaimerDocument56 pagesInternpedia 2020: DisclaimerMuhammad MahatabNo ratings yet

- Docs Slides Lecture1Document31 pagesDocs Slides Lecture1PravinkumarGhodakeNo ratings yet

- K Mean ClusteringDocument3 pagesK Mean ClusteringrafNo ratings yet

- Batch 24 Major Project Review 1Document22 pagesBatch 24 Major Project Review 1manikantaNo ratings yet

- Survey of Boosting From An Optimization Perspective: ICML 2009 TutorialDocument3 pagesSurvey of Boosting From An Optimization Perspective: ICML 2009 TutorialAugustoTexNo ratings yet