Proof

Uploaded by

anand vihari


(botnets') activities.

In this study, we divide the system into three separate modules, each of which is implemented by a set of procedures. These components perform a chain of actions on the input to generate the end product (anomaly-based detection).

A. IMPLEMENTATION OF THE DATA PREPARATION (DP) UNIT

The N-BaIoT 2021 dataset [21], [22], [23] contains raw IoT traffic data that must be preprocessed before it can be fed into the components of the LP module. This is the responsibility of the data preparation (DP) module. The following sequence of steps constitutes this module's implementation.

1. Data Hosting Process (DHP)

The data hosting process (DHP) is the procedure of storing data on a dependable and always-available server. In this study, we host the data, train the model, and assess its performance all within the MATLAB environment. This phase is in charge of receiving the data records in comma-separated value (CSV) format and loading them into MATLAB tables for subsequent preprocessing steps. With this hosting, each record of IoT traffic is delivered in a raw table format, with the data characteristics (features) shown in columns.

2. Data Cleansing Process (DCP)

Data cleansing entails examining the data to understand its actual content and correcting any inconsistencies. The DCP focuses on removing defects and inconsistencies to boost data quality [24], [25], [26]. In this research, we used the DCP to search for null-value cells and replace them with numerical zero, search for corrupted-value cells and replace them with numerical zero, fix the … (typically, attributes are simple and filled wi…), and fix the attribute names. The output classes are encoded as 0 or 1 for the binary classifier and as integers from 0 to 9 for the multiclass classifier. Table 1 below details the label encoding and the correction of mistaken or incorrect data entries.
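The hosting and cleansing steps of this section can be sketched in a few lines. The study itself works with MATLAB tables; the sketch below uses only Python's standard library instead, and the column names, sample values, label strings, and the helper names `to_number` and `cleanse` are all hypothetical. It also assumes that the list numbers in Table 1 double as the integer class codes (for example, 1 for Mirai and 2 for Bashlite in the ternary case).

```python
import csv
import io
import math

# Hypothetical raw CSV: two feature columns plus a text label column.
# The header deliberately contains stray spaces to be cleaned up.
RAW_CSV = """MI_dir_L5_weight , H_L5_mean,label
1.0,60.0,benign
,74.5,mirai
2.5,abc,gafgyt
nan,590.0,mirai
"""

# Assumed label encodings, following the layout of Table 1.
BINARY_CODES = {"benign": 0, "mirai": 1, "gafgyt": 1}
TERNARY_CODES = {"benign": 0, "mirai": 1, "gafgyt": 2}


def to_number(cell):
    """Return the cell as a float; null or corrupted cells become 0.0."""
    try:
        value = float(cell)
    except (TypeError, ValueError):
        return 0.0
    return value if math.isfinite(value) else 0.0


def cleanse(csv_text, label_codes, label_column="label"):
    """Load CSV text and return (feature_names, feature_rows, labels)."""
    reader = csv.reader(io.StringIO(csv_text))
    header = [name.strip() for name in next(reader)]  # fix attribute names
    label_idx = header.index(label_column)
    feature_names = [n for i, n in enumerate(header) if i != label_idx]
    rows, labels = [], []
    for record in reader:
        if not record:
            continue  # skip blank lines
        rows.append(
            [to_number(c) for i, c in enumerate(record) if i != label_idx]
        )
        labels.append(label_codes[record[label_idx].strip().lower()])
    return feature_names, rows, labels


names, X, y = cleanse(RAW_CSV, TERNARY_CODES)
y_binary = cleanse(RAW_CSV, BINARY_CODES)[2]
```

Replacing unusable cells with zero keeps every record in the table, at the cost of making a missing value indistinguishable from a genuine zero; whether that is acceptable depends on the feature.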

Table 1. Label encoding for the target classes

Classifier           Botnet(s)                              Normal
Binary classifier    1 (anomaly)                            0 (normal)
Ternary classifier   1. Mirai botnet                        0 (normal)
                     2. Bashlite botnet (Gafgyt)
Multiclassifier      1. MIRAI_DANMINI_DOORBELL              0 (normal)
                     2. MIRAI_ECOBEE_THERMOSTAT
                     3. MIRAI_PHILIPS_DARLING_MONITOR
                     4. MIRAI_DELIVERY_
                     9. GAFGYT_PROVISION_SAFETY_CAMERA

B. CLASSIFICATION

1. Generalised Additive Models

Because they are easy to implement, linear regression models are widely used in statistical modelling and prediction. For a single instance, the relationship may be expressed linearly as:

y = β_0 + β_1 x_1 + ⋯ + β_i x_i + ϵ (1)

where the error ϵ is a zero-expectation Gaussian random variable, x_i is a collection of features that help explain y, and β_i is a set of unknown parameters or coefficients. The linear regression model is used because it is simple and intuitive. Since predictions are modelled as a weighted sum of the features, measuring the effect of changes to the features is straightforward.

The model's limitations stem from the fact that the relationship between the features and the outcome may not be linear, that features may interact with one another, and that its assumptions may not hold true in many practical contexts.

In contrast to standard linear models, Generalised Additive Models (GAMs) attribute the outcome to a sum of unknown functions of the individual covariates. Predicting y at time t using the covariate vector x may be done with the following formula:

g(y) = a + f_1(x_1) + f_2(x_2) + ⋯ + f_i(x_i) + ϵ (2)

Here x_1, …, x_i are the explanatory variables, f_1, …, f_i are unknown functions, and g(·) is a link function that relates the additive predictor to the expected value of y, chosen according to the assumed distribution of y (e.g. binomial, normal, Poisson). Estimating f_i(·) may be done in several ways, most of which involve some form of statistical computing. Starting with a scatterplot, each approach uses a scatterplot smoother to generate a fitted function that balances smoothness against fit to the data. If the function f_i(x_i) can be estimated, it may reveal whether or not the effect of x_i is linear.

For the analysis of geoscience time series data, GAMs are appealing because of their interpretability, signal additivity, and regularisation. As mentioned earlier, GAMs are interpretable models in which the impact of each explanatory variable can be easily seen and understood. Time-series signals can be explained naturally by GAMs because such signals often contain several additive effects. GAMs are more flexible than basic regression models that focus purely on error reduction, because they offer a tuning parameter that controls the trade-off between smoothness and fit. The number of splines and the spline order are further parameters, often selected based on heuristics, domain knowledge, and the performance of the model.

2. Random Forest

Moving from statistical learning models such as GAMs to models from the machine learning toolbox, Random Forests (RF) are popular because of their strong performance on complex prediction problems with many explanatory variables and nonlinear dynamics. RF is a method for classification and regression analysis that combines the outputs of many decision trees. Decision trees are conceptually straightforward yet effective prediction tools: they break a dataset down into ever-smaller subsets while incrementally building the tree. The resulting model is easy to follow because it reads as a natural chain of decisions.

Each RF tree is a standard CART that uses node "impurity" as the splitting criterion, with the splitting predictor chosen from a random subset of the predictors (the subset is different at each split). Each branch of the tree predicts the average response inside the corresponding feature subdomain. The impurity of a node represents the degree to which its observations deviate from the node's fitted value. In regression trees, one typical measure is the sum of squared residuals at that node. Trees are constructed using bootstrap samples drawn from the whole dataset with replacement, and their predictions are combined by averaging (regression) or by majority vote (classification).

As with all machine learning models, the trade-off between a model that follows the training data perfectly but cannot generalise and one that fails to capture the properties of the training data must be taken into account. The popularity of RF can be attributed to its excellent performance with little hyperparameter tuning. To optimise performance, hyperparameters such as the number of features to evaluate when looking for the best split and the splitting criterion can be adjusted.

3. XGBoost

XGBoost shares many traits and advantages with RF (including interpretability, predictive performance, and simplicity), but the main distinction that allows for performance gains is that the decision trees are built sequentially rather than independently. XGBoost was developed at the University of Washington and published in 2016; since then, it has won many Kaggle competitions and has found widespread use in industry. XGBoost provides algorithmic improvements for approximate tree learning, as well as optimisations for distributed computing, which make it capable of processing large numbers of samples.

Tree ensemble models use a framework very similar to RF to generate predictions of the form:

ŷ_i = Σ_{k=1}^{K} f_k(x_i), f_k ∈ F (3)

where K is the total number of trees, F is the collection of CART trees, q is the structure of a tree (the mapping from an input x to a leaf), and w_{q(x)} is the weight assigned to the leaf that the input x falls into. F is determined by minimising an objective function:

L = Σ_i l(ŷ_i, y_i) + Σ_{k=1}^{K} Ω(f_k) (4)

where Ω(f) = (1/2) λ‖w‖^2, and l is a differentiable convex loss function (such as the mean squared error) that measures the difference between the predicted value ŷ_i and the observed value y_i for each realisation i. To prevent over-fitting, the regularisation term Ω (with regularisation coefficient λ) shrinks the final weights. In addition, setting a maximum tree depth controls the complexity of the model.

4. Multi-Layer Perceptron

A Multi-Layer Perceptron (MLP) model was the first DL method explored. A neural network addresses the problem of finding the optimal weights and biases for the nonlinear function g that maps inputs x to outputs y:

g(x; θ) = y (5)

where θ denotes the neural network weights and biases that relate the explanatory factors x to the measured SST. The activation of node n in layer l is

a_n^{(l)} = f( Σ_m w_{n,m}^{(l)} a_m^{(l-1)} + b_n^{(l)} ) (6)

where f is the activation function, w_{n,m}^{(l)} is the weight connecting node m in layer l-1 to node n in layer l, and b_n^{(l)} is the bias of node n in layer l. The rectified linear unit (ReLU) was chosen as the activation function for this particular use case:

f(z) = max(0, z) (7)

The squared difference between the observation and the machine-learning forecast forms the basis of a loss function, with a regularisation contribution managed by λ:

L(θ) = Σ_i (y_i - ŷ_i)^2 + λ‖θ‖_2^2 (8)

where ‖·‖_2 stands for the L2 norm. Overfitting, when the model does a good job of fitting the training data but fails to generalise to new data, may be mitigated by using weight decay, which is enforced by the regularisation term to penalise complicated models.

The supervised machine learning algorithm finds the θ that produces the prediction ŷ by minimising the loss function. As seen in Figure 1, the network uses several hidden layers to map an input vector (layer) to an output vector (layer). The training data are used to determine the parameters that map x to y through nonlinear functions. To balance the model's capacity against the difficulty of the task at hand, hyperparameter tuning is essential. The ability of a neural network to represent complex functions grows as the number of layers and hidden units per layer is increased. Increasing the network's depth can improve performance on the training data, yet the risk of overfitting reduces the network's generalisation ability. The number of layers, the number of hidden units per layer, and the regularisation coefficient λ are the usual hyperparameters to adjust in neural networks.

C. IMPLEMENTATION OF THE EVALUATION PROCESS (EP) UNIT

Measuring and controlling the quality indicators that verify the system is in line with its criteria and goals is an integral part of the evaluation process (EP). We employed the aforementioned four model variants: MLP,

You might also like

- Backpropagation: Fundamentals and Applications for Preparing Data for Training in Deep LearningFrom EverandBackpropagation: Fundamentals and Applications for Preparing Data for Training in Deep LearningNo ratings yet

- Estimation of Liquid-Liquid Equilibrium For A Quaternary System Using The GMDH AlgorithmDocument6 pagesEstimation of Liquid-Liquid Equilibrium For A Quaternary System Using The GMDH AlgorithmLaiadhi DjemouiNo ratings yet

- Radial Basis Networks: Fundamentals and Applications for The Activation Functions of Artificial Neural NetworksFrom EverandRadial Basis Networks: Fundamentals and Applications for The Activation Functions of Artificial Neural NetworksNo ratings yet

- KMurphy PDFDocument20 pagesKMurphy PDFHamid FarhanNo ratings yet

- Joint Modelling Package for Multiple Imputation of Multilevel DataDocument24 pagesJoint Modelling Package for Multiple Imputation of Multilevel DataTyan OrizaNo ratings yet

- Genetic algorithm solves nonlinear parameter estimation problemsDocument9 pagesGenetic algorithm solves nonlinear parameter estimation problemsJack IbrahimNo ratings yet

- Implicit Regularization For Deep Neural Networks Driven by An Ornstein-Uhlenbeck Like ProcessDocument31 pagesImplicit Regularization For Deep Neural Networks Driven by An Ornstein-Uhlenbeck Like ProcessNathalia SantosNo ratings yet

- Semi-supervised learning with normalizing flowsDocument16 pagesSemi-supervised learning with normalizing flowsPalle JayanthNo ratings yet

- pp9 - v4 - MejoradoDocument6 pagespp9 - v4 - Mejoradoapi-3734323No ratings yet

- A Relational Approach To The Compilation of Sparse Matrix ProgramsDocument16 pagesA Relational Approach To The Compilation of Sparse Matrix Programsmmmmm1900No ratings yet

- Relational Approach to Compiling Sparse Matrix CodeDocument16 pagesRelational Approach to Compiling Sparse Matrix Codemmmmm1900No ratings yet

- CLM ArticleDocument40 pagesCLM Articlerico.caballero7No ratings yet

- Large Margin Deep Networks For ClassificationDocument16 pagesLarge Margin Deep Networks For ClassificationShah Nawaz KhanNo ratings yet

- Automatic Database Normalization and Primary Key GenerationDocument6 pagesAutomatic Database Normalization and Primary Key GenerationRohit DoriyaNo ratings yet

- Gradient Estimation Using Stochastic Computation GraphsDocument13 pagesGradient Estimation Using Stochastic Computation Graphsstephane VernedeNo ratings yet

- General Graph Optimization Framework for Robotics and Computer Vision ProblemsDocument7 pagesGeneral Graph Optimization Framework for Robotics and Computer Vision ProblemsSidharth SharmaNo ratings yet

- Week - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Document7 pagesWeek - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Mrunal BhilareNo ratings yet

- GPS Data Processing Methodology from Theory to ApplicationsDocument37 pagesGPS Data Processing Methodology from Theory to ApplicationsnicefamiliarNo ratings yet

- CS 3035 (ML) - CS - End - May - 2023Document11 pagesCS 3035 (ML) - CS - End - May - 2023Rachit SrivastavNo ratings yet

- Glow: Generative Flow With Invertible 1×1 Convolutions: Equal ContributionDocument15 pagesGlow: Generative Flow With Invertible 1×1 Convolutions: Equal ContributionNguyễn ViệtNo ratings yet

- Lecture 2: Introduction To PytorchDocument7 pagesLecture 2: Introduction To PytorchNilesh ChaudharyNo ratings yet

- Analysis For Graph Based SLAM Algorithms Under G2o FrameworkDocument11 pagesAnalysis For Graph Based SLAM Algorithms Under G2o FrameworkTruong Trong TinNo ratings yet

- Bayesian Analysis of Spatially Autocorrelated Data Spatial Data Analysis in Ecology and Agriculture Using RDocument18 pagesBayesian Analysis of Spatially Autocorrelated Data Spatial Data Analysis in Ecology and Agriculture Using Raletheia_aiehtelaNo ratings yet

- Automatic Reparameterisation of Probabilistic ProgramsDocument10 pagesAutomatic Reparameterisation of Probabilistic ProgramsdperepolkinNo ratings yet

- The Good BookDocument10 pagesThe Good BookGeet SharmaNo ratings yet

- Generalizing Mlps With Dropouts, Batch Normalization, and Skip ConnectionsDocument10 pagesGeneralizing Mlps With Dropouts, Batch Normalization, and Skip ConnectionsAnil Kumar BNo ratings yet

- Composite Key Generation On A Shared NotDocument16 pagesComposite Key Generation On A Shared NotHala MADRIDNo ratings yet

- Wavelets On Graphs Via Spectral Graph TheoryDocument37 pagesWavelets On Graphs Via Spectral Graph Theory孔翔鸿No ratings yet

- Gradient Descent AlgorithmDocument5 pagesGradient Descent AlgorithmravinyseNo ratings yet

- Linear Regression Simple Technique For IDocument3 pagesLinear Regression Simple Technique For IbAxTErNo ratings yet

- VGAM Package for Categorical Data AnalysisDocument30 pagesVGAM Package for Categorical Data AnalysisGilberto Martinez BuitragoNo ratings yet

- tutorial-MART and R InterfaceDocument24 pagestutorial-MART and R InterfaceaarivalaganNo ratings yet

- Final Thesis Pk12Document97 pagesFinal Thesis Pk12Suman PradhanNo ratings yet

- Generalization of Rischs Algorithm To Special Functions PDFDocument19 pagesGeneralization of Rischs Algorithm To Special Functions PDFJuan Pastor RamosNo ratings yet

- Over-parameterized systems satisfy PL* condition ensuring SGD convergenceDocument31 pagesOver-parameterized systems satisfy PL* condition ensuring SGD convergenceLuis BoteNo ratings yet

- Laude 2018 Discrete ContinuousDocument13 pagesLaude 2018 Discrete ContinuousswagmanswagNo ratings yet

- Homework Exercise 4: Statistical Learning, Fall 2020-21Document3 pagesHomework Exercise 4: Statistical Learning, Fall 2020-21Elinor RahamimNo ratings yet

- A Probabilistic Theory of Deep Learning: Unit 2Document17 pagesA Probabilistic Theory of Deep Learning: Unit 2HarshitNo ratings yet

- Fast Label Embeddings via Randomized Linear AlgebraDocument15 pagesFast Label Embeddings via Randomized Linear Algebraomonait17No ratings yet

- Optimization MathematicsDocument9 pagesOptimization MathematicsKrishna AcharyaNo ratings yet

- Escape Analysis For JavaDocument19 pagesEscape Analysis For JavapostscriptNo ratings yet

- Sympy: Definite Integration Via Integration in The Complex Plane ProposalDocument3 pagesSympy: Definite Integration Via Integration in The Complex Plane Proposalrizgarmella100% (1)

- Random Sets Approach and Its ApplicationsDocument12 pagesRandom Sets Approach and Its ApplicationsThuy MyNo ratings yet

- Gji 149 3 625Document8 pagesGji 149 3 625Diiana WhiteleyNo ratings yet

- Deep Learning AnswersDocument36 pagesDeep Learning AnswersmrunalNo ratings yet

- Adaptive DEDocument6 pagesAdaptive DEHafizNo ratings yet

- Marginalized Denoising Auto-Encoders For Nonlinear RepresentationsDocument9 pagesMarginalized Denoising Auto-Encoders For Nonlinear RepresentationsAli Hassan MirzaNo ratings yet

- Sigmoid Function: Soft Computing AssignmentDocument12 pagesSigmoid Function: Soft Computing AssignmentOMSAINATH MPONLINE100% (1)

- S - T N: B O H - S B - R F: ELF Uning Etworks Ilevel Ptimization of Yperparameters Us ING Tructured EST Esponse UnctionsDocument25 pagesS - T N: B O H - S B - R F: ELF Uning Etworks Ilevel Ptimization of Yperparameters Us ING Tructured EST Esponse UnctionsTran Ngoc ThangNo ratings yet

- Data Analysis Process of Working Hydraulics of Small Mobile MachineDocument10 pagesData Analysis Process of Working Hydraulics of Small Mobile Machineyusufhussein431No ratings yet

- DL Unit1Document10 pagesDL Unit1Ankit MahapatraNo ratings yet

- Deep-Learning Notes 01Document8 pagesDeep-Learning Notes 01Ankit MahapatraNo ratings yet

- Important QuestionsDocument18 pagesImportant Questionsshouryarastogi9760No ratings yet

- Fluid99 PDFDocument11 pagesFluid99 PDFWilfried BarrosNo ratings yet

- A: A M S O: DAM Ethod For Tochastic PtimizationDocument13 pagesA: A M S O: DAM Ethod For Tochastic PtimizationAhmad KarlamNo ratings yet

- Nelder 1972Document16 pagesNelder 19720hitk0No ratings yet

- PerceptiLabs-ML HandbookDocument31 pagesPerceptiLabs-ML Handbookfx0neNo ratings yet

- Design of Transparent Mamdani Fuzzy Inference SystemsDocument9 pagesDesign of Transparent Mamdani Fuzzy Inference SystemsTamás OlléNo ratings yet

- Graphical Algorithms and Mining - Network Structural Vocabulary 2017Document26 pagesGraphical Algorithms and Mining - Network Structural Vocabulary 2017lakshmi.vnNo ratings yet

- Brazilian neural network conference proceedings on immunological RBF network initializationDocument6 pagesBrazilian neural network conference proceedings on immunological RBF network initializationTéoTavaresNo ratings yet

- Summarizing Data & Statistics: Reminder For Final ExamDocument10 pagesSummarizing Data & Statistics: Reminder For Final ExamJoseph QuesnelNo ratings yet

- ASSIGNMENT Basic StatisticsDocument2 pagesASSIGNMENT Basic StatisticsVijay BainsNo ratings yet

- A Handbook of Communication ResearchDocument70 pagesA Handbook of Communication ResearchJohana VangchhiaNo ratings yet

- P L Lohitha 11-11-22 Data Mining Business ReportDocument47 pagesP L Lohitha 11-11-22 Data Mining Business ReportLohitha PakalapatiNo ratings yet

- Applied Regression Analysis Final ProjectDocument8 pagesApplied Regression Analysis Final Projectbqa5055No ratings yet

- Assignment 2 QuestionDocument4 pagesAssignment 2 QuestionYanaAlihadNo ratings yet

- Moving Average Method Forecasting TechniquesDocument17 pagesMoving Average Method Forecasting TechniquesMahnoor KhalidNo ratings yet

- Regression Analysis ExplainedDocument111 pagesRegression Analysis ExplainedNicole Agustin100% (1)

- Practical Research 2Document44 pagesPractical Research 2Jeclyn FilipinasNo ratings yet

- MCA Exam Questions on Data Mining & WarehousingDocument3 pagesMCA Exam Questions on Data Mining & WarehousingMsec McaNo ratings yet

- Erbacher Vita 2010Document5 pagesErbacher Vita 2010HuntMNo ratings yet

- Intermediate Statistics Sample Test 1Document17 pagesIntermediate Statistics Sample Test 1muralidharan0% (3)

- Chapter Three Research MethodologyDocument5 pagesChapter Three Research MethodologyPatriqKaruriKimboNo ratings yet

- ETHIOPIADocument13 pagesETHIOPIANadanNadhifahNo ratings yet

- Research PresentationDocument22 pagesResearch PresentationeferemNo ratings yet

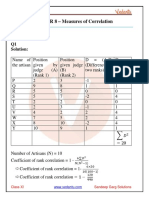

- Sandeep Garg Economics Class 11 Solutions For Chapter 8 - Measures of CorrelationDocument5 pagesSandeep Garg Economics Class 11 Solutions For Chapter 8 - Measures of CorrelationRiddhiman JainNo ratings yet

- Knowledge Discovery in Data Science: KDD Meets Big DataDocument6 pagesKnowledge Discovery in Data Science: KDD Meets Big DataPatricio UlloaNo ratings yet

- Analysis Vs ReportingDocument21 pagesAnalysis Vs Reportingselva rajNo ratings yet

- MasterThesis UniliverDocument76 pagesMasterThesis UniliverVita SonyaNo ratings yet

- Introduction To Correlationand Regression Analysis BY Farzad Javidanrad PDFDocument52 pagesIntroduction To Correlationand Regression Analysis BY Farzad Javidanrad PDFgulafshanNo ratings yet

- Chapter 4 - Forecasting: Mr. David P. Blain. C.Q.E. Management Department UnlvDocument34 pagesChapter 4 - Forecasting: Mr. David P. Blain. C.Q.E. Management Department UnlvPrerna Aggarwal0% (1)

- Time Series Method-Free Hand, Semi Average, Moving Average-Unit-4Document94 pagesTime Series Method-Free Hand, Semi Average, Moving Average-Unit-4Sachin KirolaNo ratings yet

- Hiring Process Analytics Project 4 On StatisticsDocument6 pagesHiring Process Analytics Project 4 On StatisticsNiraj Ingole100% (1)

- Guidebook For Six Sigma Implementation With Real Time ApplicationsDocument5 pagesGuidebook For Six Sigma Implementation With Real Time ApplicationsAyan GhoshNo ratings yet

- Statistics-for-Engineering-and-the-Sciences-Sixth-Edition - ESI 5219 PDFDocument1,170 pagesStatistics-for-Engineering-and-the-Sciences-Sixth-Edition - ESI 5219 PDFAnh H. Dinh100% (2)

- Processing of Data, MBA-II SemDocument3 pagesProcessing of Data, MBA-II SemDarshan NallodeNo ratings yet

- Chapter 14 Fixed Effects Regressions Least Square Dummy Variable Approach (EC220)Document26 pagesChapter 14 Fixed Effects Regressions Least Square Dummy Variable Approach (EC220)SafinaMandokhailNo ratings yet

- Educational Data Mining For The Analysis of Student DesertionDocument11 pagesEducational Data Mining For The Analysis of Student DesertionpadatreNo ratings yet

- MATLAB Econometric Model ForecastingDocument10 pagesMATLAB Econometric Model ForecastingOmiNo ratings yet

- LPG Marketing Analysis Using Descriptive StatisticsDocument6 pagesLPG Marketing Analysis Using Descriptive StatisticsSushama SinghNo ratings yet