Professional Documents

Culture Documents

CD ch1

Uploaded by

ibrahin mahamedOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

CD ch1

Uploaded by

ibrahin mahamedCopyright:

Available Formats

Compiler Design Dire Dawa University [DDIT]

Chapter One

1. Introduction to compiler and phases of compilers

Programming languages are notations for describing computations to people and to machines. The world as we know it depends

on programming languages, because all the software running on all the computers was written in some programming language.

But, before a program can be run, it first must be translated into a form in which it can be executed by a computer. The software

systems that do this translation are called compilers.

Compiler: is a program that reads a program written in one language (the source language) and translates it into an equivalent

program in another language (the target language).

Why we design compiler?

Computers are a balanced mix of software and hardware. Hardware is just a piece of mechanical device and its functions are

being controlled by compatible software. Hardware understands instructions in the form of electronic charge, which is the

counterpart of binary language in software programming. Binary language has only two alphabets, 0 and 1. To instruct, the

hardware codes must be written in binary format, which is simply a series of 1s and 0s. It would be a difficult and cumbersome

Prepared by: Andualem T. BSc 2013 E.C Page 1

Compiler Design Dire Dawa University [DDIT]

task for computer programmers to write such codes, which is why we have compilers to write such codes.

1.1. Overview of Language Processor

We have learnt that any computer system is made of hardware and software. The hardware understands a language, which

humans cannot understand. So we write programs in high-level language, which is easier for us to understand and remember.

These programs are then fed into a series of tools and OS components to get the desired code that can be used by the machine.

This is known as Language Processing System.

Language processing is an important component of programming. How high level languages are implemented to generate

machine code.

What is Language translators ?

A translator is a program that takes as input a program written in one language and produces as output a program in another

language. Beside program translation, the translator performs another very important role, the error-detection. Any violation of a

HLL specification would be detected and reported to the programmers.

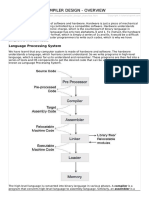

Language Processing Systems contains the following software packages sub-sequentlly: Preprocessor CompilerAssembler

Linker Loader

Prepared by: Andualem T. BSc 2013 E.C Page 2

Compiler Design Dire Dawa University [DDIT]

Language processing system

1.1.1. Preprocessor

A preprocessor, generally considered as a part of compiler, is a tool that produces input for compilers. It deals with macro-

processing, augmentation; file inclusion, language extension, etc.

1. Macro processing: A preprocessor may allow a user to define macros that are shorthand’s for longer constructs.

For example, in C, #define foo(x,y) (3*x+y*(2+x)) defines a macro foo, that when used in later in the program, is expanded by

the preprocessor. For example, a = foo (a,b) becomes a = (3*a+b*(2+a)). Each time the preprocessor sees the word foo(a,b), it

Prepared by: Andualem T. BSc 2013 E.C Page 3

Compiler Design Dire Dawa University [DDIT]

inserts 3*a+b*(2+a)) into the place.

2. File inclusion: A preprocessor may include header files into the program text.

For example, in C, #include “header.h” is replaced by the contents of the file header.h.

3. Rational preprocessor: these preprocessors augment older languages with more modern flow-of-control and data

structuring facilities.

4. Language Extensions: These preprocessor attempts to add capabilities to the language by certain amounts to build-in macro.

1.1.2. Compiler

Compiler is a program which translates a program written in one language (the source language) to an equivalent program in

other language (the target language). Usually the source language is a high level language like Java, C, C++, FORTRAN etc.

whereas the target language is machine code or "code" that a computer's processor understands. The source language is

optimized for humans. It is more user-friendly, to some extent platform-independent. They are easier to read, write, and

maintain and hence it is easy to avoid errors.

Executing a program written in HLL programming language is basically of two parts. The source program must first be

compiled or translated into an object program. Then the resulting object program is loaded into a memory executed.

Source pgm obj pgm

Compiler

Obj pgm

input Obj pgm opj pgm output

Ultimately, programs written in a high-level language must be translated into machine language by a compiler. The target

machine language is efficient for hardware but lacks readability for human. An important role of the compiler is to recognize

legal (and illegal) programs, to report any errors in the source program that it detects during the translation process and manage

Prepared by: Andualem T. BSc 2013 E.C Page 4

Compiler Design Dire Dawa University [DDIT]

storage of all variables and code.

1.1.3. interpreters

An interpreter is another common kind of language processor. Instead of producing a target program as a translation, an

interpreter appears to directly execute the operations specified in the source program on inputs supplied by the user.

Interpreter: is a program that reads a source program and executes it Works by analyzing and executing the source program

commands one at a time. It does not translate the whole source program into object code at once.

It can convert line by line from the total source code.

Error will be displayed at the time of conversion.

Interpreter and compiler differences

Interpreter:

Interpreter takes one statement then translates it and executes it and then takes another statement.

Interpreter will stop the translation after it gets the first error.

Interpreter takes less time to analyze the source code.

Over all execution speed is less.

Compiler:

While compiler translates the entire program in one go and then executes it.

Compiler generates the error report after the translation of the entire program.

Compiler takes a large amount of time in analyzing and processing the high level language code.

Overall execution time is faster.

Prepared by: Andualem T. BSc 2013 E.C Page 5

Compiler Design Dire Dawa University [DDIT]

Interpretation is important when:

Programmer is working in interactive mode and needs to view and update variables. (Modification or

addition to user programs is required as execution proceeds)

Running speed is not important

1.1.4. Assembler

Assembler changes assembly language into re-locatable machine code. Large programs are compiled into small modules. The

re-locatable machine codes have to be linked together with other re-locatable object files and library files. This code actually

runs on the machine translation (object program).

1.1.5. Linker

Linker is combines or links object code (machine code that has not yet been linked) produced from compiling and assembling

many source programs, as well as standard library functions and resources supplied by the operating system in order to make an

executable file. All these files might have been compiled by separate assemblers. The major task of a linker is to search and

locate referenced module/routines in a program and to determine the memory location where these codes will be loaded, making

the program instruction to have absolute references.

1.1.6. Loader

Compilers, assemblers and linkers usually produce code whose memory references are made relative to an undetermined starting

location that can be anywhere in memory (re-locatable machine code).

Loaders are a part of operating system responsible for loading executable files into memory and execute them. It calculates the

size of a program (instructions and data) and creates memory space for it. It initializes various registers to initiate execution. A

loader is a program that places programs into memory and prepares them for execution.

.

Prepared by: Andualem T. BSc 2013 E.C Page 6

Compiler Design Dire Dawa University [DDIT]

1.2. Structure of the compiler design

A compiler can broadly be divided into two phases based on the way they compile.

a. Analysis (front end/Machine Independent/Language Dependent)

b. Synthesis(Back end /Machine Dependent/Language independent)

a. Analysis phase (front-end)

Analysis phase of the compiler reads the source program, divides it into constituent pieces or core parts, and then checks for

lexical, grammar, and syntax errors.

During analysis, the operations implied by the source program are determined and recorded in a hierarchical structure called a

tree. Often a special kind of tree called a syntax tree is used, in which each node represents an operation and the children of a

node represent the arguments of the operation.

It includes : Lexical analysis, Syntax analysis , and Semantic analysis

The analysis phase generates an intermediate representation of the source program and symbol table, which should be fed to the

Synthesis phase as input.

If the analysis part detects that the source program is either syntactically ill formed or semantically unsound, then it must provide

informative messages, so the user can take corrective action.

Prepared by: Andualem T. BSc 2013 E.C Page 7

Compiler Design Dire Dawa University [DDIT]

The analysis part also collects information about the source program and stores it in a data structure called a symbol table,

which is passed along with the intermediate representation (IR) to the synthesis part

Front end

Recognize legal code

Report errors

Produce IR

Preliminary storage map

Shape code for the back end

b. Synthesis Phase(back end)

The synthesis part constructs the desired target program from the intermediate representation and the information in the symbol

table.

It includes Code optimizer , code generator

Back end

Translate IR into target machine code

Choose instructions for each IR operation

Prepared by: Andualem T. BSc 2013 E.C Page 8

Compiler Design Dire Dawa University [DDIT]

Decide what to keep in registers at each point

1.2.1. Phases Of Compiler

The compilation process is a sequence of various phases. Each phase takes input from its previous stage, has its own

representation of source program, and feeds its output to the next phase of the compiler.

The process of analysis/synthesis model of compilation is split up into six phases, each of which interacts with a symbol table

manager and an error handler. These phases are:

Lexical Analysis

Syntax Analysis

Semantic Analysis

Intermediate Code Generation

Code Optimization

Code Generation

Prepared by: Andualem T. BSc 2013 E.C Page 9

Compiler Design Dire Dawa University [DDIT]

Each phase:

Transforms the source program from one representation into another representation and

They communicate with error handlers and the symbol table

1. Lexical Analysis

The first phase of a compiler is called lexical analysis or scanning. The tool used is called lexical analyzer (LA) or scanner. The

lexical analyzer reads the stream of characters making up the source program is read from left to right and is grouped into

tokens.

A token is a sequence of characters having a collective meaning. It describes a pattern of characters having same meaning in the

Prepared by: Andualem T. BSc 2013 E.C Page 10

Compiler Design Dire Dawa University [DDIT]

source program. (Such as: identifiers, operators, keywords, numbers, delimiters and so on).

General form: (token-name, attribute-value) that it passes on to the subsequent phase, syntax analysis.

Token-name: an abstract symbol that is used during syntax analysis.

Attribute-value: entry in the symbol table for this token and it is optional.

For example, suppose a source program contains the assignment statement

Position = initial + rate * 60

<Id, 1> <=> <id, 2><+> <id, 3> <*> <60>

Tokens are: position is identifier, = is assignment operator, initial is identifier, + is an add operator, rate is identifier, * is

multiplication operator, 60 is a number

Position: is a lexeme that mapped into a token (id, 1); Id - identifier and 1 points to the symbol- table

=: is a lexeme that mapped into the token <=>

Initial: is a lexeme that mapped into the token <id, 2>

+: is a lexeme that mapped into the token <+>

Rate: is a lexeme that mapped into the token <id, 3>

* : is a lexeme that mapped into the token <*>

60: is a lexeme that mapped into the token <60>

NB: Blanks separating the lexemes would be discarded by the lexical analyzer.

QUIZE One: how many tokens are present in the following expression and list all tokens?

Prepared by: Andualem T. BSc 2013 E.C Page 11

Compiler Design Dire Dawa University [DDIT]

a) Newvalue = oldvalue + 12 b) a[index] = 4 + 2;

Generally Lexical Analysis

1. Skips or ignores comments and white spaces outside of lexemes

2. Identifies candidate tokens from the given lexemes ( from source program)

3. Put information about tokens in symbol table

4. Detect lexical errors and report errors to the user

Note:

Regular expressions are used to describe tokens (lexical constructs)

A Deterministic Finite State Automaton can be used in the implementation of a lexical analyzer

2. Syntax analysis or Parsing

The second phase of the compiler is syntax analysis or parsing. It takes the token produced by lexical analysis as input and

generates a parse tree (or syntax tree). A typical representation is a syntax tree in which each interior node represents an

operation and the children of the node represent the arguments of the operation.

The tool used in syntax analysis is called Syntax Analyzer (SA) or parser

Consider the example:

Position = initial + rate * 60

The Syntax tree is in the form of:

Prepared by: Andualem T. BSc 2013 E.C Page 12

Compiler Design Dire Dawa University [DDIT]

In a parse tree, all terminals are at leaves.

All inner nodes are non-terminals in a context free grammar.

The syntax of a language is specified by a context free grammar (CFG)

A SA checks whether a given program satisfies the rules implied by a CFG or not

– if it satisfies the SA creates a parse tree for the given program

Depending on how the parse tree is created, there are different parsing techniques. These parsing techniques are categorized in to

two groups

Top-down parsing:

Construction of the parse tree starts at root, and proceeds towards the leaves.

Efficient top-down parsers can be easily constructed by hand

Recursive descent parsing, Recursive predictive parsing, non-recursive predictive parsing (LL parsing) are the techniques

used

Bottom- up parsing

Construction of the parse tree starts at the leaves, and proceeds towards the root

Normally efficient bottom-up parsers are created with the help of some software tools

Prepared by: Andualem T. BSc 2013 E.C Page 13

Compiler Design Dire Dawa University [DDIT]

Bottom-up parsing is also known as shift reduce parsing

LR (0),SLR(1),LR(1), LALR(1) are the techniques used in bottom-up parsing

3. Semantic Analysis

A semantic analyzer checks the source program for semantic errors and collects the type of information for the code generation.

The semantic analyzer uses the syntax tree and the information in the symbol table to check the source program for semantic

consistency with the language definition. It also gathers type information and saves it in the symbol table, for subsequent use

during intermediate-code generation.

An important part of semantic analysis is:

Type checking : the compiler checks that each operator has matching operands

Type conversion: Declarations of variables and constants before use

Calling functions that exist (predefined in a library or defined by the user) and Passing parameters properly.

The semantic analyzer does the following:

Checks the static semantics of the language- checked by the compiler

Annotates the syntax tree with type information

Example from the above expression:

Position=initial + rate*60

Suppose that:

Position, initial, and rate have been declared to be floating-point numbers, and that the lexeme 60 by itself forms an

integer.

The operator * is applied to a floating-point number rate and an integer 60

The type checker in the semantic analyzer may convert the lexeme 60 into a floating-point number

Prepared by: Andualem T. BSc 2013 E.C Page 14

Compiler Design Dire Dawa University [DDIT]

The output has an extra node for the operator inttofloat, which explicitly converts its integer argument into a

floating-point number.

The output of the semantic analysis is the same with syntax tree plus meaning associated with each expression appreciable.

4. Intermediate Code Generation: three-address code

After syntax and semantic analysis of the source program, many compilers generate an explicit low-level or machine-like

intermediate representation (a program for an abstract machine). This intermediate representation should have two important

properties:

It should be easy to produce and

It should be easy to translate into the target machine.

The considered intermediate form called three-address code, which consists of a sequence of assembly-like instructions with

three operands per instruction. Each operand can act like a register.

Prepared by: Andualem T. BSc 2013 E.C Page 15

Compiler Design Dire Dawa University [DDIT]

5. Code Optimization: to generate better target code

The machine-independent code-optimization phase attempts to improve the intermediate code so that better target code will

result. Usually better means:

– Faster, shorter code(less memory), or target code that consumes less power.

The optimizer can deduce that the conversion of 60 from integer to floating point can be done once and for all at compile time,

so the int to float operation can be eliminated by replacing the integer 60 by the floating-point number 60.0. Moreover, t3 is

used only once

There are simple optimizations that significantly improve the running time of the target program without slowing down

compilation too much.

Ex. Unnecessary lines of code in loops (i.e. code that could be executed outside of the loop) are moved out of the loop.

for(i=1; i<10; i++){

x = y+1;

z = x+i; }

Prepared by: Andualem T. BSc 2013 E.C Page 16

Compiler Design Dire Dawa University [DDIT]

x = y+1;

for(i=1; i<10, i++)

z = x+i;

6. Code Generation: takes as input an intermediate representation of the source program and maps it into the target

language. If the target language is machine code; registers or memory locations are selected for each of the variables used

by the program. Then, the intermediate instructions are translated into sequences of machine instructions that perform

the same task. A crucial aspect of code generation is the judicious assignment of registers to hold variables.

Symbol-Table Management

The symbol table is a data structure containing a record for each variable name, with fields for the attributes of the name.

Identifier, numbers, variables, functions, parameters, types, fields, etc

Tokens are entered by the scanner and parser

Semantic analyzer adds type information and other attributes

Code generation and optimization phases use the information in the symbol table

The data structure should be designed to allow the compiler to find the record for each name quickly and to store or retrieve data

Prepared by: Andualem T. BSc 2013 E.C Page 17

Compiler Design Dire Dawa University [DDIT]

from that record quickly

These attributes may provide information about the storage allocated for a name, its type, its scope (where in the program its

value may be used), and in the case of procedure names, such things as the number and types of its arguments, the method of

passing each argument (for example, by value or by reference), and the type returned.

The symbol table makes it easier for the compiler to quickly search the identifier record and retrieve it.

Performance Issues

Insertion, deletion, and search operations need to be efficient because they are frequent

More than one symbol table may be used

Literal Table

Stores constant values and string literals in a program.

One literal table applies globally to the entire program.

Used by the code generator to:

o Assign addresses for literals.

Avoids the replication of constants and strings.

Error Handing

One of the most important functions of a compiler is the detection and reporting of errors in the source program. The

error message should allow the programmer to determine exactly where the errors have occurred. Errors may occur in

all or the phases of a compiler. Whenever a phase of the compiler discovers an error, it must report the error to the error

handler, which issues an appropriate diagnostic msg.

Prepared by: Andualem T. BSc 2013 E.C Page 18

Compiler Design Dire Dawa University [DDIT]

Example: Translation of an assignment statement

Prepared by: Andualem T. BSc 2013 E.C Page 19

Compiler Design Dire Dawa University [DDIT]

Prepared by: Andualem T. BSc 2013 E.C Page 20

Compiler Design Dire Dawa University [DDIT]

The Grouping of Phases into Passes

Phases of compiler deals with the logical organization of a compiler. In an implementation, activities from several phases may be

grouped together into a pass that reads an input file and writes an output file.

For example, the front-end phases of lexical analysis, syntax analysis and semantic analysis, might be grouped together into one

pass. Code optimization might be an optional pass. Then there could be a back-end pass consisting of code generation for a

particular target machine.

A “pass” is a complete traversal of the source program, or a complete traversal of some internal representation of the source

program (such as an AST). A pass can correspond to a “phase” but it does not have to!. Sometimes a single pass corresponds to

several phases that are interleaved in time.

Types of Compiler

1. Depend on their platform

a. Native code compiler: The compiler used to compile a source code for same type of platform only. The output

generated by this type of compiler can only be run on the same type of computer system and Os that the compiler itself

runs on.

b. Cross-compiler: A compiler that runs on platform (A) and is capable of generating executable code for platform (B) is

called.

c. Source-to-source Compiler: Source to source compiler is a term used when the source code of one programming

language is translated into the source of another language. It can take up a code written in Pascal and can transform it

into C-conversion of one high level language into another high level language having same type of abstraction.

2. Depend on compiler passes

Prepared by: Andualem T. BSc 2013 E.C Page 21

Compiler Design Dire Dawa University [DDIT]

a. Single Pass Compiler: It is a type of compiler that compiles the whole process in only one-pass source code directly

transforms into machine code. It makes a single pass over the source text, parsing, analyzing, and generating code all at

once.

b. Two pass Compiler: it is divided into two sections front end and back end.

c. Multi Pass Compiler: A multi pass compiler makes several passes over the program. The output of a preceding phase

is stored in a data structure and used by subsequent phases.

Dependency diagram of a typical Multi Pass Compiler:

Summary of grouping of Compiler Phases and pass

Front end

Consist of those phases that depend on the source language but largely independent of the target machine.

Prepared by: Andualem T. BSc 2013 E.C Page 22

Compiler Design Dire Dawa University [DDIT]

Back end

Consist of those phases that are usually target machine dependent such as optimization and code generation.

Several phases can be implemented as a single pass consist of reading an input file and writing an output file.

A typical multi-pass compiler looks like:

First pass: preprocessing, macro expansion

Second pass: syntax-directed translation, IR code generation

Third pass: optimization

Last pass: target machine code generation

Compiler-Construction Tools

The compiler writer, like any software developer, can profitably use modern software development environments containing

tools such as language editors, debuggers, version managers, profilers, test harnesses, and so on.

Scanner generator (lexical analyzers): that produces tokens from a regular-expression description of the source language.

Parser generators (syntax analyzers): that automatically produce syntax tree from a grammatical description of a programming

language

Syntax-directed translation engine: that produces collections of routines for walking a parse tree and generating intermediate

code

Data-flow analysis engine: that facilitate the gathering of information about how values are transmitted from one part of a

program to each other part. Data-flow analysis is a key part of code optimization.

Automatic Code-generator generators: that produces a code generator from a collection of rules for translating each operation

of the intermediate language into the machine language for a target machine

Compiler-construction toolkits: provide an integrated set of routines for constructing various phases of a compiler.

Prepared by: Andualem T. BSc 2013 E.C Page 23

You might also like

- Starships of The Galaxy (Bookmarked)Document162 pagesStarships of The Galaxy (Bookmarked)Anna GarbiniNo ratings yet

- COMPUTER PROGRAMMING FOR KIDS: An Easy Step-by-Step Guide For Young Programmers To Learn Coding Skills (2022 Crash Course for Newbies)From EverandCOMPUTER PROGRAMMING FOR KIDS: An Easy Step-by-Step Guide For Young Programmers To Learn Coding Skills (2022 Crash Course for Newbies)No ratings yet

- Computer Program - Part 1Document5 pagesComputer Program - Part 1kulNo ratings yet

- Principles of Compiler Design PDFDocument177 pagesPrinciples of Compiler Design PDFLavlesh Jaiswal0% (1)

- Unit 1Document120 pagesUnit 1Sweta KamatNo ratings yet

- Deep Slimhole Project ExperienceDocument7 pagesDeep Slimhole Project ExperienceElgi Zacky ZachryNo ratings yet

- Chapter 4 Software Concepts PDFDocument12 pagesChapter 4 Software Concepts PDFRahul Choudhary100% (1)

- Comp Fundamentals Unit 1Document34 pagesComp Fundamentals Unit 1Govardhan ReghuramNo ratings yet

- Confidence in Action: Clearvue 850Document16 pagesConfidence in Action: Clearvue 850MaritzaIraholaZallesNo ratings yet

- Unit-1, - Programming LanguageDocument35 pagesUnit-1, - Programming LanguageLuffy RaiNo ratings yet

- Computer ProgrammingDocument75 pagesComputer ProgrammingSudarsan ChaudharyNo ratings yet

- Information Communication Technology (Ict) : UNIT IV: ProgrammingDocument255 pagesInformation Communication Technology (Ict) : UNIT IV: ProgrammingBikal ShresthaNo ratings yet

- Rhino ScriptDocument73 pagesRhino Scriptwadorno100% (1)

- LP - Multiplication of IntegersDocument4 pagesLP - Multiplication of IntegersRowena FanuncioNo ratings yet

- What Is Compiler: Name: Shubham More Roll No: 14 Class: Msccs-I Subject: CompilerDocument6 pagesWhat Is Compiler: Name: Shubham More Roll No: 14 Class: Msccs-I Subject: CompilerShubham MoreNo ratings yet

- HabaDocument41 pagesHabatemsNo ratings yet

- C Note - Sudip - SirDocument75 pagesC Note - Sudip - SirSunil TamangNo ratings yet

- Module 1Document9 pagesModule 1ARSHIYA KNo ratings yet

- Lec - 2 C ProgrammingDocument22 pagesLec - 2 C ProgrammingjolieprincesseishimweNo ratings yet

- Compiler Design - OverviewDocument2 pagesCompiler Design - Overviewsumit jadeNo ratings yet

- CSC 437 Chapter 1Document82 pagesCSC 437 Chapter 1Ema NishyNo ratings yet

- Compiler Design Unit-1 - 1 Cont..Document4 pagesCompiler Design Unit-1 - 1 Cont..sreethu7856No ratings yet

- 001chapter One - Introduction To Compiler DesignDocument45 pages001chapter One - Introduction To Compiler DesigndawodNo ratings yet

- Clanguage2013 2014Document78 pagesClanguage2013 2014mikinaniNo ratings yet

- System Software and Programming Techniques 1Document5 pagesSystem Software and Programming Techniques 1reginaamondi133No ratings yet

- Unit 2 - Introduction To C Language Processing SystemDocument5 pagesUnit 2 - Introduction To C Language Processing SystembarnabasNo ratings yet

- Compiler Design Notes Unit-1 & Unit-2Document59 pagesCompiler Design Notes Unit-1 & Unit-2Kartik sharmaNo ratings yet

- CA Lecture 9Document28 pagesCA Lecture 9White HatNo ratings yet

- Process of Execution of A Program:: Compiler DesignDocument26 pagesProcess of Execution of A Program:: Compiler DesignNaresh SoftwareNo ratings yet

- Programming Languages and Program Development ToolsDocument15 pagesProgramming Languages and Program Development ToolsOh ReviNo ratings yet

- Advanced Computer Systems: Compiler Design & ImplementationDocument20 pagesAdvanced Computer Systems: Compiler Design & ImplementationxliizbethxNo ratings yet

- Systems Software: Topics in This ChapterDocument16 pagesSystems Software: Topics in This Chapterblessy thomasNo ratings yet

- SE Compiler Chapter 1Document19 pagesSE Compiler Chapter 1mikiberhanu41No ratings yet

- Lecture 2Document5 pagesLecture 2Rohit SenNo ratings yet

- Wa0005.Document8 pagesWa0005.nehasatkur99No ratings yet

- Structured ProgrammingDocument42 pagesStructured ProgrammingAkendombi EmmanuelNo ratings yet

- Unit 1 Spos NotesDocument23 pagesUnit 1 Spos Notes47-Rahul RAjpurohitNo ratings yet

- Introduction of Compiler DesignDocument63 pagesIntroduction of Compiler DesignSayli GawdeNo ratings yet

- 2 Compiler (GCC Compiler)Document24 pages2 Compiler (GCC Compiler)Vaishvi GuptaNo ratings yet

- 3 Computer Languages and TranslatorsDocument14 pages3 Computer Languages and TranslatorsBilal IqbalNo ratings yet

- Unit 1 Spos Notes - DocxDocument24 pagesUnit 1 Spos Notes - DocxSrushti GhiseNo ratings yet

- Programming NotesDocument58 pagesProgramming NotesClementNo ratings yet

- FoP TheoryDocument38 pagesFoP Theorymoses manedNo ratings yet

- Computer Assignment 3Document6 pagesComputer Assignment 3Saba NafeesNo ratings yet

- Unit - 1Document43 pagesUnit - 1slcascs2022No ratings yet

- 3Document29 pages3Eash G.No ratings yet

- Module - 1: Overview of Compilation & Lexical AnalysisDocument29 pagesModule - 1: Overview of Compilation & Lexical Analysis121910315060 gitamNo ratings yet

- Unit 1 - Compiler Design - WWW - Rgpvnotes.inDocument16 pagesUnit 1 - Compiler Design - WWW - Rgpvnotes.inSanjay PrajapatiNo ratings yet

- TCP Lec01Document32 pagesTCP Lec01AbcNo ratings yet

- Language Processing SystemDocument17 pagesLanguage Processing SystemRhymeNo ratings yet

- Chapter-6 User Computer InterfaceDocument39 pagesChapter-6 User Computer InterfaceMallu MuziqNo ratings yet

- Automata and Compiler Design - Lecture Notes On UNIT 1Document25 pagesAutomata and Compiler Design - Lecture Notes On UNIT 1Rue ChagaresangoNo ratings yet

- CSC411 Compiler Construction - MO Onyesolu and OU Ekwealor - First Semester 2020/2021 SessionDocument27 pagesCSC411 Compiler Construction - MO Onyesolu and OU Ekwealor - First Semester 2020/2021 SessionKelly IsaacNo ratings yet

- Compiler Design NotesDocument156 pagesCompiler Design NotesPlayboy EditographyNo ratings yet

- CD Unit-IDocument21 pagesCD Unit-IShashank TNo ratings yet

- CD - Unit 1Document67 pagesCD - Unit 1Dr. K. Sivakumar - Assoc. Professor - AIDS NIETNo ratings yet

- Lecture No.1: Compiler ConstructionDocument22 pagesLecture No.1: Compiler ConstructionMisbah SajjadNo ratings yet

- Compiler Construction Lecture 1Document13 pagesCompiler Construction Lecture 1MinahilNo ratings yet

- Week 3 Language TranslatorsDocument6 pagesWeek 3 Language Translatorspowelljean16No ratings yet

- What Is A CompilerDocument5 pagesWhat Is A CompilerIhsan UllahNo ratings yet

- Introduction-Elementary Programming Principles Elementary Programming PrinciplesDocument38 pagesIntroduction-Elementary Programming Principles Elementary Programming PrinciplesOmagbemi WilliamNo ratings yet

- Computer Programming in CDocument208 pagesComputer Programming in CLucky SinghNo ratings yet

- Computer Hardware and SoftwareDocument7 pagesComputer Hardware and Softwareirfanahmed.dbaNo ratings yet

- 3Document29 pages3Sagar ThapliyalNo ratings yet

- Curb-Energy-0 0 2Document28 pagesCurb-Energy-0 0 2JasonNo ratings yet

- Review Paper On Future Distribution Feeder Protection Using Directional Overcurrent ElementsDocument8 pagesReview Paper On Future Distribution Feeder Protection Using Directional Overcurrent ElementsIJRASETPublicationsNo ratings yet

- First PagesDocument11 pagesFirst PagesEswar KarriNo ratings yet

- Forms PDFDocument18 pagesForms PDFRavi ShankarNo ratings yet

- U2000 - General PunctuationDocument6 pagesU2000 - General PunctuationSándor NagyNo ratings yet

- ARTS Lesson GuideDocument3 pagesARTS Lesson GuideWanettNicoNo ratings yet

- Straight Line Sheet (NVSIR)Document20 pagesStraight Line Sheet (NVSIR)vigesa6945No ratings yet

- Moore. It Is Called The TS Chart. T Is For Temperature While S Is For Entropy. This Chart IsDocument8 pagesMoore. It Is Called The TS Chart. T Is For Temperature While S Is For Entropy. This Chart IsSheena Jai Hyacinth SamsonNo ratings yet

- System Requirement Specification (SRS) : Project: Recruitment Solution Agency (RSA)Document36 pagesSystem Requirement Specification (SRS) : Project: Recruitment Solution Agency (RSA)Nur HazlindaNo ratings yet

- VibroSight Release NotesDocument50 pagesVibroSight Release NotesfazzlieNo ratings yet

- Norma Aisc 341 (Capítulo E)Document22 pagesNorma Aisc 341 (Capítulo E)ElmerNo ratings yet

- T Lymphocytes and Macrophages in The Intestinal Tissues of Dogs Infected With Leishmania InfantumDocument12 pagesT Lymphocytes and Macrophages in The Intestinal Tissues of Dogs Infected With Leishmania InfantumWillNo ratings yet

- Viper12A-E: Low Power Offline Switched-Mode Power Supply Primary SwitcherDocument21 pagesViper12A-E: Low Power Offline Switched-Mode Power Supply Primary SwitcherChristianBellNo ratings yet

- Cambridge Lower Secondary ICT Level 7 Unit 2Document4 pagesCambridge Lower Secondary ICT Level 7 Unit 2عالم الضحك100No ratings yet

- Ansys Statics2 v61Document7 pagesAnsys Statics2 v61api-3833671No ratings yet

- Lab 1 Introduction To Linux Environment and C CompilerDocument6 pagesLab 1 Introduction To Linux Environment and C CompilerRabiatul AdawiahNo ratings yet

- Gas-Insulated Switchgear ELK-14: The Modular System For GIS, 245 KVDocument20 pagesGas-Insulated Switchgear ELK-14: The Modular System For GIS, 245 KVmaruf048No ratings yet

- 7 QC Tools - StratificationDocument3 pages7 QC Tools - Stratificationraja100% (1)

- 7.14 ML Marine - 1103Document29 pages7.14 ML Marine - 1103Givan IsmailNo ratings yet

- SMV 3000 User ManualDocument217 pagesSMV 3000 User ManualGabriel DediuNo ratings yet

- Design of Open Newel Stair Case With Quarter Space Landing: LoadsDocument4 pagesDesign of Open Newel Stair Case With Quarter Space Landing: LoadsD SRINIVASNo ratings yet

- Difference Between Fieldbus, Profibus and HART ProtocolsDocument6 pagesDifference Between Fieldbus, Profibus and HART ProtocolslampfievNo ratings yet

- BTCS (703) Network Pogramming Unit IDocument41 pagesBTCS (703) Network Pogramming Unit IVivek SoniNo ratings yet

- Behavioral Model of Pipeline Adc by Using SimulinkrDocument5 pagesBehavioral Model of Pipeline Adc by Using SimulinkrSounakDuttaNo ratings yet

- Intro To CFD Course DetailsDocument1 pageIntro To CFD Course Detailsmaheshj90No ratings yet