Gram-Schmidt Process

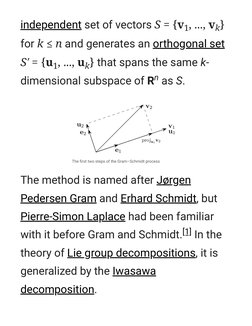

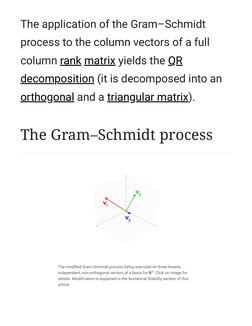

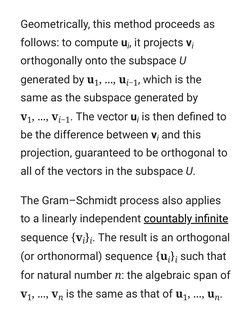

The Gram-Schmidt process takes a set of linearly independent vectors and produces an orthogonal set that spans the same subspace. For each vector in turn, it computes the projection onto the subspace spanned by the preceding vectors and subtracts that projection; the remainder is the next orthogonal vector. Although simple, the classical Gram-Schmidt process can lose orthogonality in floating-point arithmetic because rounding errors accumulate. The modified Gram-Schmidt process is more stable: it subtracts the projections one at a time, computing each from the partially orthogonalized vector rather than from the original one.
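The difference between the two variants is easiest to see side by side. The following is a minimal sketch in plain Python, not the MATLAB implementation the document lists in its outline; the function names `classical_gram_schmidt` and `modified_gram_schmidt` and the helper `dot` are illustrative choices, and the input is assumed to be a list of linearly independent real vectors (a dependent input would cause a division by zero).

```python
def dot(u, v):
    """Euclidean inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def classical_gram_schmidt(vectors):
    """Orthogonalize by projecting the ORIGINAL vector onto each earlier one."""
    basis = []
    for v in vectors:
        u = list(v)
        for q in basis:
            # Coefficient is computed from the original v every time.
            coeff = dot(v, q) / dot(q, q)
            u = [ui - coeff * qi for ui, qi in zip(u, q)]
        basis.append(u)
    return basis


def modified_gram_schmidt(vectors):
    """Orthogonalize by projecting the RUNNING remainder onto each earlier one."""
    basis = []
    for v in vectors:
        u = list(v)
        for q in basis:
            # Coefficient is computed from the partially orthogonalized u,
            # so error left by earlier subtractions is also removed.
            coeff = dot(u, q) / dot(q, q)
            u = [ui - coeff * qi for ui, qi in zip(u, q)]
        basis.append(u)
    return basis


# Illustrative input (an assumption, not taken from the document).
example = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
orthogonal = modified_gram_schmidt(example)
```

In exact arithmetic both functions return the same vectors. They differ only in which vector the projection coefficient is taken from, and that is where the rounding behavior diverges. Neither function normalizes its output; dividing each result by its length would give an orthonormal set.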

Uploaded by Balayya B

Copyright © All Rights Reserved


- Introduction to Gram-Schmidt Process: Introduces the Gram-Schmidt process, its significance in linear algebra, and initial definitions.

- Procedure and Derivation: Explains the step-by-step procedure of the Gram-Schmidt process including vector projection and orthogonalization formulas.

- Orthogonalization Examples: Provides examples demonstrating the orthogonalization method through mathematical derivation.

- Properties and Stability: Discusses the properties of the Gram-Schmidt process and considerations regarding numerical stability.

- Modified Gram-Schmidt Algorithm: Describes the modified version of the process for improved numerical precision and reduced error.

- Implementation: MATLAB Algorithm: Provides MATLAB code for implementing the Gram-Schmidt orthonormalization algorithm.

- Advanced Topics and Variants: Covers advanced concepts such as geometric algebra, alternatives, and the determinant formula.

- Reference and Further Reading: Lists references and sources for further reading on the Gram-Schmidt process and related mathematical topics.

- External Links and Resources: Includes links to external resources and demonstrations related to orthogonalization techniques.