Controlled copy

Guidelines for Automated Testing

Release ID: QGTS-AUTOMAT.doc / 8.01 / 05.03.2004 C3: Protected

Controlled copy Automated Testing Guidelines

Table of Contents

1.0 Introduction
1.1 About Automated Testing
2.0 Definitions and Acronyms
3.0 Tasks
3.1 Choosing what to automate
3.2 Evaluation of Testing Tools
3.3 Script Planning & Design
3.4 Test Environment Setup
3.5 Development of Scripts
3.6 Deployment/Testing of Suites
3.7 Maintenance of Test Suites
3.8 Test Automation Methodology
4.0 Additional Guidelines
4.1 Important factors to consider before Automation
4.2 Recommended Test Tools
5.0 Common Pitfalls
6.0 Templates
7.0 References

1.0 Introduction

This document gives detailed guidelines for carrying out automated testing effectively.

1.1 About Automated Testing

Automated testing is recognized as a cost-efficient way to increase application reliability,

while reducing the time and cost of software quality programs. In the past, most

software tests were performed using manual methods. Owing to the size and complexity

of today’s advanced software applications, manual testing is no longer a viable option

for most testing situations. Some of the common reasons for automating are:

1. Reducing Testing Time

Testing is repetitive, so automating test processes lets machines complete the tedious, repetitive work while people perform other tasks. An automated test executes the next operation in the test hierarchy at machine speed, allowing tests to be completed many times faster than the fastest individual could manage. Furthermore, some types of testing, such as load/stress testing, are virtually impossible to perform manually.

2. Reducing Testing Costs

The cost of manual testing is prohibitive when compared to automated methods. For load/stress testing, automation is the only practical solution: n users can be simulated easily from a single computer, whereas a manual test would need n computers, each with its own tester. Imagine carrying out a load test for 1000 users manually!

3. Replicating Testing Across Different Platforms

Automation allows the testing organization to perform consistent and repeatable

tests. When applications need to be deployed across different hardware or software

platforms, standard or benchmark tests can be created and repeated on target

platforms to ensure that new platforms operate consistently.

4. Repeatability and Control

By using automated techniques, the tester has a very high degree of control over

which types of tests are being performed, and how the tests will be executed. Using

automated tests enforces consistent procedures that allow developers to evaluate

the effect of various application modifications as well as the effect of various user

actions.

For example, automated tests can be built that extract variable data from external

files or applications and then run a test using the data as an input value. Most

importantly, automated tests can be executed as many times as necessary without

requiring a user to recreate a test script each time the test is run.

5. Greater Application Coverage

The productivity gains delivered by automated testing allow and encourage

organizations to test more often and more completely. Greater application test

coverage also reduces the risk of exposing users to malfunctioning or non-compliant software. In some industries such as healthcare and pharmaceuticals,

organizations are required to comply with strict quality regulations as well as being

required to document their quality assurance efforts for all parts of their systems.

6. Results Reporting

Full-featured automated testing systems also produce convenient test reporting and

analysis. These reports provide a standardized measure of test status and results,

thus allowing more accurate interpretation of testing outcomes. Manual methods

require the user to self-document test procedures and test results.
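The data-driven approach mentioned under "Repeatability and Control" (reading variable data from an external file and using it as test input) could be sketched roughly as follows. This is only an illustration: the function under test (`apply_discount`), the column names, and the inline CSV text standing in for an external file are all assumptions, not part of any particular tool.

```python
import csv
import io


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: apply a percentage discount."""
    return round(price * (1 - percent / 100), 2)


# Stand-in for an external data file; in practice this would be read from disk.
TEST_DATA = """price,percent,expected
100.00,10,90.00
59.99,0,59.99
20.00,50,10.00
"""


def run_data_driven_tests(source) -> list:
    """Run one check per data row and return (row_number, passed) pairs."""
    results = []
    for row_number, row in enumerate(csv.DictReader(source), start=1):
        actual = apply_discount(float(row["price"]), float(row["percent"]))
        results.append((row_number, actual == float(row["expected"])))
    return results


if __name__ == "__main__":
    for row_number, passed in run_data_driven_tests(io.StringIO(TEST_DATA)):
        print(f"row {row_number}: {'PASS' if passed else 'FAIL'}")
```

The same script can be rerun as often as needed; adding a test case means adding a data row, not rewriting the script.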

2.0 Definitions and Acronyms

Acronym Definition

None

3.0 Tasks

3.1 Choosing what to automate

Most, but not all, types of tests can be automated. Certain types of tests like

user comprehension tests, tests that run only once, and tests that require constant

human intervention are usually not worth the investment to automate. The following are

examples of criteria that can be used to identify tests that are prime candidates for

automation.

High Path Frequency - Automated testing can be used to verify the performance of

application paths that are used with a high degree of frequency when the software is

running in full production. Examples include: creating customer records, invoicing and

other high volume activities where software failures would occur frequently.

Critical Business Processes - In many situations, software applications can literally

define or control the core of a company’s business. If the application fails, the company

can face extreme disruptions in critical operations. Mission-critical processes are prime

candidates for automated testing. Examples include: financial month-end closings,

production planning, sales order entry and other core activities. Any application with a

high-degree of risk associated with a failure is a good candidate for test automation.

Repetitive Testing - If a testing procedure can be reused many times, it is also a prime

candidate for automation. For example, common outline files can be created to establish

a testing session, close a testing session and apply testing values. These automated

modules can be used again and again without having to rebuild the test scripts. This

modular approach saves time and money when compared to creating a new end-to-end

script for each and every test.

Applications with a Long Life Span - The longer an application is planned to remain in production, the greater the benefits from automation.
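The modular approach described under "Repetitive Testing" might look something like the sketch below, where small reusable modules (establish a session, apply test values, close the session) are combined into different scripts. The dictionary-based session and all function names here are hypothetical stand-ins for whatever the chosen test tool provides.

```python
from typing import Callable, Dict, List

# A hypothetical in-memory "session" stands in for the application under test.
Session = Dict[str, object]
Step = Callable[[Session], None]


def open_session(session: Session) -> None:
    """Reusable module: establish a testing session."""
    session["open"] = True
    session["values"] = {}


def apply_values(values: Dict[str, str]) -> Step:
    """Reusable module factory: apply a set of test values to an open session."""
    def step(session: Session) -> None:
        if not session.get("open"):
            raise RuntimeError("session is not open")
        session["values"].update(values)
    return step


def close_session(session: Session) -> None:
    """Reusable module: close the testing session."""
    session["open"] = False


def run_script(steps: List[Step]) -> Session:
    """A script is just an ordered list of reusable modules."""
    session: Session = {}
    for step in steps:
        step(session)
    return session


if __name__ == "__main__":
    # Two different scripts built from the same modules.
    print(run_script([open_session, apply_values({"customer": "ACME"}), close_session]))
    print(run_script([open_session, apply_values({"invoice": "1001"}), close_session]))
```

Only the middle step differs between the two scripts; the session handling is written and debugged once.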

3.2 Evaluation of Testing Tools

Choosing an automated software testing tool is an important step, and one that often has enterprise-wide implications. The following key issues should be addressed when selecting an application testing solution.

Test Planning and Management -

A robust testing tool should have the capability to manage the testing process, provide

organization for testing components, and create meaningful end-user and management

reports. It should also allow users to include non-automated testing procedures within

automated test plans and test results. A robust tool will allow users to integrate existing

test results into an automated test plan. Finally, an automated testing tool should be able to link

business requirements to test results, allowing users to evaluate application readiness

based upon the application's ability to support the business requirements.

Testing Product Integration -

Testing tools should provide tightly integrated modules that support test component

reusability. Test components built for performing functional tests should also support

other types of testing including regression and load/stress testing. All products within the

testing product environment should be based upon a common, easy-to-understand

language. User training and experience gained in performing one testing task should be

transferable to other testing tasks. Also, the architecture of the testing tool environment

should be open to support interaction with other technologies such as defect or bug

tracking packages.

Internet/Intranet Testing -

A good tool will have the ability to support testing within the scope of a web browser.

The tests created for testing Internet or intranet-based applications should be portable

across browsers, and should automatically adjust for different load times and

performance levels.
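One common way for a script to "adjust for different load times" is to poll for a condition with a timeout instead of sleeping for a fixed period. The helper below is a minimal sketch of that idea; `wait_until` and its parameters are illustrative names, and real test tools usually ship their own equivalent.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 10.0,
               interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met in time, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    started = time.monotonic()
    # Hypothetical "page loaded" check that becomes true after about 0.3 seconds.
    loaded = wait_until(lambda: time.monotonic() - started > 0.3, timeout=2.0)
    print("page loaded" if loaded else "timed out")
```

A fast page lets the script continue almost immediately, while a slow one gets the full timeout before the step is reported as failed.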

Ease of Use -

Testing tools should be engineered to be usable by non-programmers and application

end-users. With much of the testing responsibility shifting from the development staff to

the departmental level, a testing tool that requires programming skills is unusable by

most organizations. Even if programmers are responsible for testing, the testing tool

itself should have a short learning curve.

GUI and Client/Server Testing -

A robust testing tool should support testing with a variety of user interfaces and create

simple-to-manage, easy-to-modify tests. Test component reusability should be a

cornerstone of the product architecture.

Load and Performance Testing -

The selected testing solution should allow users to perform meaningful load and

performance tests to accurately measure system performance. It should also provide

test results in an easy-to-understand reporting format.
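To illustrate how a single machine can simulate many concurrent users, the sketch below runs a placeholder transaction from a pool of threads and summarizes the response times. The `simulated_request` function is a hypothetical stand-in (it only sleeps); a dedicated load testing tool does far more than this.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def simulated_request(user_id: int) -> float:
    """Hypothetical stand-in for one user's transaction; returns elapsed seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # placeholder for a real call to the system under test
    return time.perf_counter() - start


def run_load_test(virtual_users: int, request=simulated_request) -> dict:
    """Run one request per virtual user concurrently and summarize the timings."""
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        timings = list(pool.map(request, range(virtual_users)))
    return {
        "users": virtual_users,
        "mean_s": mean(timings),
        "max_s": max(timings),
    }


if __name__ == "__main__":
    summary = run_load_test(50)
    print(f"{summary['users']} users: "
          f"mean {summary['mean_s']:.4f}s, max {summary['max_s']:.4f}s")
```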

Methodologies and Services -

For those situations that require outside expertise, the testing tool vendor should be

able to provide extensive consulting, implementation, training, and assessment

services. The test tools should also support a structured testing methodology.

Note: Refer to the “Test Tools Evaluation and Selection Report” template for evaluating various tools on common parameters.
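One simple way to compare tools on common parameters is a weighted score across the criteria above. The sketch below is an assumption about how such a comparison could be computed; it does not reproduce the referenced template, and the weights, the 1 to 5 rating scale, and the tool names are invented for illustration.

```python
from typing import Dict

# Hypothetical weights for the evaluation criteria discussed in this section.
WEIGHTS: Dict[str, int] = {
    "test_planning_and_management": 3,
    "product_integration": 2,
    "internet_intranet_testing": 2,
    "ease_of_use": 3,
    "gui_client_server_testing": 2,
    "load_and_performance_testing": 2,
    "methodologies_and_services": 1,
}


def weighted_score(ratings: Dict[str, int], weights: Dict[str, int] = WEIGHTS) -> float:
    """Return the weighted average of ratings (assumed scale: 1 = poor, 5 = excellent)."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total = sum(weights[name] * ratings[name] for name in weights)
    return total / sum(weights.values())


if __name__ == "__main__":
    # Invented ratings for two hypothetical tools.
    tool_a = {name: 4 for name in WEIGHTS}
    tool_b = {**tool_a, "ease_of_use": 2, "load_and_performance_testing": 5}
    for label, ratings in (("Tool A", tool_a), ("Tool B", tool_b)):
        print(f"{label}: {weighted_score(ratings):.2f}")
```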

3.3 Script Planning & Design

Careful planning is the key to any successful process. To guarantee the best possible

result from an automated testing program, those evaluating test automation should

consider these fundamental planning steps. The time invested in detailed planning

significantly improves the benefits resulting from test automation.

Evaluating Business Requirements

Begin the automated testing process by defining exactly what tasks your application

software should accomplish in terms of the actual business activities of the end-user.

The definition of these tasks, or business requirements, defines the high-level,

functional requirements of the software system in question. These business

requirements should be defined in such a way as to make it abundantly clear that the

software system correctly (or incorrectly) performs the necessary business functions.

For example, a business requirement for a payroll application might be to calculate a

salary, or to print a salary check.
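A business requirement stated this way can be turned directly into a pass/fail check. The following sketch assumes a very simple payroll rule (gross salary equals hours worked times hourly rate, rounded to the cent); the rule, the function names, and the figures are illustrative only.

```python
from decimal import Decimal, ROUND_HALF_UP


def calculate_salary(hours_worked: Decimal, hourly_rate: Decimal) -> Decimal:
    """Hypothetical payroll rule: hours times rate, rounded to the nearest cent."""
    gross = hours_worked * hourly_rate
    return gross.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def check_salary_requirement() -> bool:
    """Assumed requirement: 160 hours at 12.50 per hour pays exactly 2000.00."""
    return calculate_salary(Decimal("160"), Decimal("12.50")) == Decimal("2000.00")


if __name__ == "__main__":
    print("requirement met" if check_salary_requirement() else "requirement NOT met")
```

Because the expected value is written down in advance, the result makes it clear whether the system performs the business function correctly or incorrectly.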

Script Planning & Design

This is the most crucial phase in the Automation cycle. Script planning essentially

establishes the test architecture. All the modules are identified at this stage. The various

modules are used for navigation, manipulating controls, data entry, data validation, error

identification, and writing out logs. Reusable modules consist of commands, logic, and

data. Generic modules used throughout the testing system, such as initialization and

setup functionality, are generally grouped together in files named to reflect their primary

function, such as "Init" or "setup". Others that are more application specific, designed to

service controls on a customer window, for example, are also grouped together and

named similarly. All the identified functions/modules/libraries/scripts are named as per

the Standard Naming Conventions followed. The test data planning is also done in this

phase.

3.4 Test Environment Setup

Test environment setup consists of establishing connectivity to the application under test (AUT), the test automation tools and the test repository; setting up the configuration management tool; identifying the machines on which tests will be created and run; installing the browsers; and configuring the defect management tool.

Development of Libraries

All common functions are developed and tested to promote repeatability and

maintainability.

3.5 Development of Scripts

The actual scripts are developed and tested.

Development of Test Suites

The scripts and libraries are integrated to form test suites that can run independently

with minimum user intervention.
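As a rough illustration of integrating scripts and a shared library into a suite that runs unattended, the sketch below uses Python's standard `unittest` module. The script classes and the `normalize_name` library function are hypothetical examples, not part of any referenced tool.

```python
import io
import unittest


def normalize_name(name: str) -> str:
    """Hypothetical shared library function reused by several scripts."""
    return " ".join(name.split()).title()


class CustomerScript(unittest.TestCase):
    def test_name_is_normalized(self):
        self.assertEqual(normalize_name("  jane   doe "), "Jane Doe")


class InvoiceScript(unittest.TestCase):
    def test_invoice_total(self):
        self.assertEqual(sum([10, 20, 12]), 42)


def build_suite() -> unittest.TestSuite:
    """Integrate the individual scripts into one suite."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for script in (CustomerScript, InvoiceScript):
        suite.addTests(loader.loadTestsFromTestCase(script))
    return suite


def run_suite() -> unittest.TestResult:
    """Run the whole suite without user interaction and return the result."""
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(build_suite())


if __name__ == "__main__":
    result = run_suite()
    print(f"ran {result.testsRun} tests, "
          f"{len(result.failures)} failures, {len(result.errors)} errors")
```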

3.6 Deployment/Testing of Suites

The various test suites are run on the different machine configurations. The defects are

reported and tracked with a defect-tracking tool. The suites are run again if regression

testing is needed.

3.7 Maintenance of Test Suites

If there are changes to the application in subsequent releases, the changes in the

functionality are identified and a similar analysis is done again to arrive at the required

changes to the test suites. The baselined test scripts are modified, and the scripts are versioned appropriately after the changes are made.

Test Case

3.8 Test Automation Methodology

[Figure: test automation methodology flow. Labels recovered from the diagram: Input (Functional Specification/Test Plan/Test Cases); Automation Objectives; Tool Identification (Tool-Identification Process/Functional Test Requirement Questionnaire); Script Planning & Design (Naming Conventions); Test Environment Setup (H/W, S/W: tools, browsers, connectivity); Development of Libraries; Script Development; Test Suite Development; Test Suite Deployment/Testing; Version Control.]

| Tasks | Description | Deliverables |
|---|---|---|
| Identification of Automation Objectives/Test Requirements Study | Understanding the objectives of automation for design of an effective test architecture; understanding of the test requirements/application requirements for automation | Test Automation Strategy Document |
| Identification of Tool | Normally done in the proposal stage itself; application of the Tool Selection Process of eTest Center to arrive at the best fit for the given automation objectives | None |
| Script Planning & Design | Design of test architecture; identification of reusable functions; identification of required libraries; identification of test initialization parameters; identification of all the scripts; preparation of test design document; application of naming conventions; test data planning | Design Document |
| Test Environment Setup | Hardware; software (application, browsers); test repositories; version control repositories; tool setup | None |
| Development of Libraries | Development of libraries; debug/testing of libraries | Phased delivery if applicable |
| Development of Scripts | Development of scripts; debug/testing of scripts | Phased delivery if applicable |
| Development of Test Suites | Integration of scripts into test suites; debug/testing of suites | Test Suites & Libraries |
| Deployment/Testing of Scripts | Deployment of test suites | Test Run Results, Defect Reports, Test Report |

4.0 Additional Guidelines

4.1 Important factors to consider before Automation

1. Scope - It is not practical to automate everything, and there is generally not enough time to do so. Pick the functions/areas of the application to be automated very carefully.

2. Preparation Timeframe - The preparation time for automated test scripts has to be taken into account. In general, preparing automated scripts can take two to three times longer than preparing manual tests. In practice, the tool is likely to increase the testing scope at first, so it is very important to manage expectations. An automated testing tool does not replace manual testing, nor does it replace the test engineer. Initially, the test effort will increase, but when automation is done correctly it will decrease on subsequent releases.

3. Return on Investment - Because the preparation time for test automation is so long, it is often stated that the benefit of test automation only begins to appear after approximately the third time the tests have been run.

4. When is the benefit to be gained? - Choose your objectives wisely, and think seriously about when and where the benefit is to be gained. If your application is changing significantly and regularly, forget about test automation: you will spend so much time updating your scripts that you will not reap many benefits. (However, if only disparate sections of the application are changing, if the changes are minor, or if there is a specific section that is not changing, you may still be able to use automated tests successfully.) Bear in mind that you may only ever be able to do a complete automated test run when your application is almost ready for release, i.e. nearly fully tested. If your application is very buggy, you will probably not be able to run a complete suite of automated tests because of the failing functions encountered.

5. The Degree of Change - The best use of test automation is for regression testing, whereby you use automated tests to ensure that pre-existing functions (e.g. functions from version 1.0, not new functions in this release) are unaffected by any changes introduced in version 1.1. Proper test automation planning requires that test scripts are designed so that they are not totally invalidated by a simple GUI change (such as renaming or moving a particular control); even so, you need to take into account the time and effort required to update the scripts. For example, if your application is changing significantly, the scripts from version 1.0 may need to be completely rewritten for version 1.1, and the effort involved may at worst be prohibitive or, at the very least, not have been planned for. However, if only disparate sections of the application are changing, or the changes are minor, you should be able to use automated tests successfully to regress these areas.

6. Test Integrity - How do you know (measure) whether a test passed or failed? Just because the tool returns a ‘pass’ does not necessarily mean that the test itself passed. For example, just because no error message appears does not mean that the next step in the script completed successfully. This needs to be taken into account when specifying test script pass/fail criteria.

7. Test Independence - Test independence must be built in so that a failure in the first test case does not cause a domino effect and either prevent the rest of the test scripts in that test suite from running or cause them to fail. However, in practice this is very difficult to achieve.

8. Debugging or "testing" of the actual test scripts themselves - Time must be allowed for this, and for proving the integrity of the tests themselves.

9. Record & Playback - DO NOT RELY on record & playback as the SOLE means to generate a script. The idea is great: you execute the test manually while the test tool sits in the background and remembers what you do, and it then generates a script that you can run to re-execute the test. It is a great idea that rarely works (and proves very little).

10. Maintenance of Scripts - Finally, there is a high maintenance overhead for automated test scripts. They have to be kept up to date continuously; otherwise you will end up abandoning hundreds of hours of work because there have been too many changes to the application to make modifying the test scripts worthwhile. For the same reason, it is important that the documentation of the test scripts is also kept up to date.
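The points on test integrity and test independence can be illustrated with a small sketch. It assumes a hypothetical `FakeOrderSystem` that can fail silently: the check verifies the expected postcondition instead of relying on the absence of an error, and each test gets a fresh system so that one failure does not cascade into the next.

```python
class FakeOrderSystem:
    """Hypothetical application stand-in that can fail without raising an error."""

    def __init__(self, silently_drop: bool = False):
        self.orders = []
        self._silently_drop = silently_drop

    def submit(self, order_id: str) -> None:
        # No exception is raised even when the order is dropped.
        if not self._silently_drop:
            self.orders.append(order_id)


def check_submit(system: FakeOrderSystem) -> bool:
    """Integrity: pass only if the order really exists afterwards."""
    system.submit("A-1")
    return "A-1" in system.orders


def run_independent(tests) -> dict:
    """Independence: build fresh state per test and keep going after a failure."""
    results = {}
    for name, make_system, test in tests:
        try:
            results[name] = test(make_system())
        except Exception:
            results[name] = False
    return results


if __name__ == "__main__":
    outcome = run_independent([
        ("healthy system", FakeOrderSystem, check_submit),
        ("silently failing system", lambda: FakeOrderSystem(silently_drop=True), check_submit),
    ])
    for name, passed in outcome.items():
        print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

A script that only watched for error messages would report a pass in both cases; checking the postcondition exposes the silent failure.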

4.2 Recommended Test Tools

| Functionality | Description | Representative Tools |
|---|---|---|
| Functional Testing | Record and Playback tools with scripting support aid in automating the functional testing of online applications | Win Runner, Rational Robot, Silk Test and QA Run. Tools like CA-Verify can be used in the mainframe (m/f) environment |
| Test Management | Management of the test effort | Test Director |
| Test Coverage Analyzer | Reports from the tool provide data on coverage per unit, such as Function, Program, and Application | Rational Pure Coverage |
| File Comparators | Verify regression test results (by comparison of results from original and changed applications) | Comparex (from Sterling Software) |
| Load Testing | Performance and scalability testing | Load Runner, Performance Studio, Silk Performer and QA Load |
| Run-time error checking | Detect hard-to-find run-time errors, memory leaks, etc. | Rational Purify |
| Debugging tools | Simplify isolation and fixing of errors | Xpediter, ViaSoft (Mainframe applications), VisualAge debuggers and many other debuggers that come with development kits |
| Test Bed Generator | Tools aid in preparing test data by analyzing program flows and conditional statements | CA-Datamacs |

5.0 Common Pitfalls

• The various tools used throughout the development lifecycle not integrating easily.

• Spending more time on automating test scripts than on actual testing. It is important to decide first which tests should be automated and which cannot be automated.

• Everyone on the testing team trying to automate scripts. It is preferable to assign test script generation to people who have some development background so that manual testers can concentrate on other testing aspects.

• Elaborate test scripts being developed, duplicating the development effort.

• Test tool training being given late in the process, leaving test engineers without adequate tool knowledge.

• Testers resisting the tool. It is important to have a Tool Champion who can advocate the features of the tool in the early stages to avoid resistance.

• Expectations for Return on Investment from test automation being too high. When a testing tool is introduced, the testing scope will initially become larger, but if automation is done correctly it will decrease in subsequent releases.

• The tool having problems recognizing third-party controls (widgets).

• A lack of test development guidelines.

• Reports produced by the tool being useless because the data required to produce them was never accumulated in the tool.

• Tools being selected and purchased before a system engineering environment is defined.

• Various tool versions being in use, resulting in scripts created in one version not running in another. One way to prevent this is to ensure that tool upgrades are centralized and managed by a configuration management team.

• A new tool upgrade not being compatible with the existing system engineering environment. It is preferable to beta test the new version before rolling it out in the project.

• The tool’s database not allowing for scalability. It is better to pick a tool that allows for scalability using a robust database. Additionally, it is important to back up the test database.

• Incorrect use of a test tool’s management functionality, resulting in wasted time.

6.0 Templates

• Test Plan

• Test Cases & Log

• Test Tool Evaluation and Selection Report

7.0 References

• Test Strategy Guidelines

Release ID: QGTS-AUTOMAT.doc / 8.01 / 05.03.2004 C3: Protected Page 17 of 17

You might also like

- NCSC CAF v3.0Document38 pagesNCSC CAF v3.0CesarNo ratings yet

- What Is RAID?Document2 pagesWhat Is RAID?Maleeha SabirNo ratings yet

- A Management Approach to Software Validation RequirementsDocument8 pagesA Management Approach to Software Validation RequirementsHuu TienNo ratings yet

- Source Code Management Using IBM Rational Team ConcertDocument56 pagesSource Code Management Using IBM Rational Team ConcertSoundar Srinivasan0% (1)

- Critical Systems ValidationDocument41 pagesCritical Systems Validationapi-25884963No ratings yet

- 1805 Abacus DiagnosticaDocument10 pages1805 Abacus DiagnosticaAnton MymrikovNo ratings yet

- USDE - Software Verification and Validation Proc - PNNL-17772Document28 pagesUSDE - Software Verification and Validation Proc - PNNL-17772thu2miNo ratings yet

- 03 System TestingDocument17 pages03 System TestingramNo ratings yet

- Defect Tracking & ReportingDocument8 pagesDefect Tracking & ReportingRajasekaran100% (3)

- Audit Computer SystemsDocument11 pagesAudit Computer SystemsROJI LINANo ratings yet

- SQA Test PlanDocument5 pagesSQA Test PlangopikrishnaNo ratings yet

- Reg A USP 1058 Analytical Instrument QualificationDocument8 pagesReg A USP 1058 Analytical Instrument QualificationRomen MoirangthemNo ratings yet

- Software Testing Strategies and Methods ExplainedDocument70 pagesSoftware Testing Strategies and Methods Explained2008 AvadhutNo ratings yet

- Auditing Registered Starting Material ManufacturersDocument23 pagesAuditing Registered Starting Material ManufacturersAl RammohanNo ratings yet

- LABWORKS 6.9 Admin GuideDocument534 pagesLABWORKS 6.9 Admin GuideBilla SathishNo ratings yet

- Code ReviewDocument2 pagesCode Reviewhashmi8888No ratings yet

- Smoke Vs SanityDocument7 pagesSmoke Vs SanityAmodNo ratings yet

- CO - CSA - AgilentDocument19 pagesCO - CSA - AgilentAK Agarwal100% (1)

- Test Strategy for <Product/Project name> v<VersionDocument11 pagesTest Strategy for <Product/Project name> v<VersionshygokNo ratings yet

- Selecting, Implementing and Using FDA Compliance Software SolutionsDocument29 pagesSelecting, Implementing and Using FDA Compliance Software SolutionsSireeshaNo ratings yet

- Differences Between The Different Levels of Tests: Unit/component TestingDocument47 pagesDifferences Between The Different Levels of Tests: Unit/component TestingNidhi SharmaNo ratings yet

- CSV SopDocument1 pageCSV SopjeetNo ratings yet

- GAMP 5 Categories, V Model, 21 CFR Part 11, EU Annex 11 - AmpleLogicDocument7 pagesGAMP 5 Categories, V Model, 21 CFR Part 11, EU Annex 11 - AmpleLogicArjun TalwakarNo ratings yet

- Types of Software TestingDocument18 pagesTypes of Software Testinghira fatimaNo ratings yet

- Testing ChecklistDocument3 pagesTesting Checklistvinoth1128No ratings yet

- Malvern Access Configurator (Mac) User Guide: MAN0602-01-EN-00 July 2017Document50 pagesMalvern Access Configurator (Mac) User Guide: MAN0602-01-EN-00 July 2017Pentesh NingaramainaNo ratings yet

- Software Project ManagementDocument6 pagesSoftware Project ManagementLovekush KewatNo ratings yet

- Bilgisayarlı SistemlerDocument14 pagesBilgisayarlı Sistemlerttugce29No ratings yet

- Smart Lab E BookDocument24 pagesSmart Lab E BookNaveed MubarikNo ratings yet

- AWS CIS Foundations BenchmarkDocument89 pagesAWS CIS Foundations BenchmarkJAYA PRAKASH REDDY DorsalaNo ratings yet

- Test Environment Setup and ExecutionDocument5 pagesTest Environment Setup and ExecutionAli RazaNo ratings yet

- Unit No:1 Software Quality Assurance Fundamentals: by Dr. Rekha ChouhanDocument133 pagesUnit No:1 Software Quality Assurance Fundamentals: by Dr. Rekha ChouhanVrushabh ShelkeNo ratings yet

- Client-Server Application Testing Plan: EDISON Software Development CentreDocument7 pagesClient-Server Application Testing Plan: EDISON Software Development CentreEDISON Software Development Centre100% (1)

- Server Installation and Configuration GuideDocument66 pagesServer Installation and Configuration GuideMaria TahreemNo ratings yet

- Software Development Life Cycle by Raymond LewallenDocument6 pagesSoftware Development Life Cycle by Raymond Lewallenh.a.jafri6853100% (7)

- SQA PreparationDocument4 pagesSQA PreparationRasedulIslamNo ratings yet

- Software Assessment FormDocument4 pagesSoftware Assessment FormCathleen Ancheta NavarroNo ratings yet

- Auditing Operating Systems Networks: Security Part 1: andDocument24 pagesAuditing Operating Systems Networks: Security Part 1: andAmy RillorazaNo ratings yet

- What Is Software Quality Assurance (SQA) : A Guide For BeginnersDocument8 pagesWhat Is Software Quality Assurance (SQA) : A Guide For BeginnersRavi KantNo ratings yet

- Lecture 9 - QAQC PDFDocument37 pagesLecture 9 - QAQC PDFTMTNo ratings yet

- Overview of Software TestingDocument12 pagesOverview of Software TestingBhagyashree SahooNo ratings yet

- Writing Skills EvaluationDocument17 pagesWriting Skills EvaluationMarco Morin100% (1)

- SOA Test ApproachDocument4 pagesSOA Test ApproachVijayanthy KothintiNo ratings yet

- SE20192 - Lesson 2 - Introduction To SEDocument33 pagesSE20192 - Lesson 2 - Introduction To SENguyễn Lưu Hoàng MinhNo ratings yet

- Test Design Specification TemplateDocument4 pagesTest Design Specification TemplateRifQi TaqiyuddinNo ratings yet

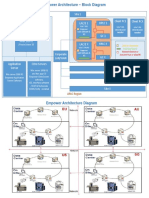

- Empower ArchitectureDocument2 pagesEmpower ArchitecturePinaki ChakrabortyNo ratings yet

- A 177 e Records Practice PDFDocument8 pagesA 177 e Records Practice PDFlastrajNo ratings yet

- Log Monitoring & Security StandardsDocument12 pagesLog Monitoring & Security StandardssharadNo ratings yet

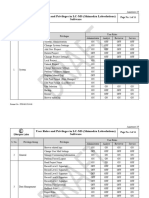

- 19 - User Roles and Privileges in LC-MS (Labsolutions)Document11 pages19 - User Roles and Privileges in LC-MS (Labsolutions)Dayanidhi dayaNo ratings yet

- Code Review Report SampleDocument91 pagesCode Review Report Sampletran quoc to tran quoc toNo ratings yet

- 40 05 Computer PolicyDocument5 pages40 05 Computer PolicyAnonymous 01E2HeMNo ratings yet

- EMA Work Instruction For Trackwise PDFDocument8 pagesEMA Work Instruction For Trackwise PDFantonygamalpharmaNo ratings yet

- 21CFR11 Assessment FAQ Metler Toledo STAREDocument51 pages21CFR11 Assessment FAQ Metler Toledo STAREfurqan.malikNo ratings yet

- Software Testing COGNIZANT NotesDocument175 pagesSoftware Testing COGNIZANT Notesapi-372240589% (44)

- ESCOM1999 Test Point Analysis - A Method For Test Estimation - tcm158-35882Document15 pagesESCOM1999 Test Point Analysis - A Method For Test Estimation - tcm158-35882api-3787635No ratings yet

- EdiDocument11 pagesEdiapi-3738703100% (3)

- How To Successfully Automate The Functional Testing ProcessDocument9 pagesHow To Successfully Automate The Functional Testing Processapi-26353284No ratings yet

- 101 MotivationDocument27 pages101 Motivationkyashwanth100% (85)

- PL SQL User's Guide & Reference 8.1.5Document590 pagesPL SQL User's Guide & Reference 8.1.5api-25930603No ratings yet

- EDIDocument42 pagesEDIapi-37876350% (1)

- Tuning Linux OS On Ibm Sg247338Document494 pagesTuning Linux OS On Ibm Sg247338gabjonesNo ratings yet

- KVH V11 HTS User ManualDocument178 pagesKVH V11 HTS User Manualmarine f.No ratings yet

- Cisco AnyConnect Vendor VPNDocument9 pagesCisco AnyConnect Vendor VPNArvind RazdanNo ratings yet

- TP DCDC 4824 HP - Product - Details - 2018 04 27 - 0902 PDFDocument2 pagesTP DCDC 4824 HP - Product - Details - 2018 04 27 - 0902 PDFLuis Humberto AguilarNo ratings yet

- Principles of Operating Systems and Its ApplicationsDocument140 pagesPrinciples of Operating Systems and Its Applicationsray bryant100% (1)

- Ax2012 Enus Deviii 05Document16 pagesAx2012 Enus Deviii 05JoeNo ratings yet

- Micro Focus Enterprise 2.3.1 Quick Start GuideDocument41 pagesMicro Focus Enterprise 2.3.1 Quick Start GuidepoojagirotraNo ratings yet

- Lecture # 7 Software Testing & Defect Life CycleDocument30 pagesLecture # 7 Software Testing & Defect Life CycleAfnan MuftiNo ratings yet

- CS497 Presentation MillerDocument30 pagesCS497 Presentation MillerAzizi AbdullahNo ratings yet

- Basic Introduction To The UCCE Servers and ComponentsDocument24 pagesBasic Introduction To The UCCE Servers and ComponentsDaksin SpNo ratings yet

- D1 - Building Next-Gen Security Analysis Tools With Qiling Framework - Kai Jern Lau & Simone Berni PDFDocument46 pagesD1 - Building Next-Gen Security Analysis Tools With Qiling Framework - Kai Jern Lau & Simone Berni PDFmomoomozNo ratings yet

- Vacon NX OPTCI Modbus TCP Board User Manual DPD009Document32 pagesVacon NX OPTCI Modbus TCP Board User Manual DPD009TanuTiganuNo ratings yet

- Workera ReportDocument22 pagesWorkera ReportJITENDRA PATELNo ratings yet

- IoT Based Raspberry Pi Home Automation ProjectDocument18 pagesIoT Based Raspberry Pi Home Automation ProjectageesNo ratings yet

- 1W STEREO KA2209 AMPLIFIER MODULEDocument3 pages1W STEREO KA2209 AMPLIFIER MODULEAlberto MoscosoNo ratings yet

- GE-ANT-1C WorkflowDocument29 pagesGE-ANT-1C WorkflowNur Hidayat NurdinNo ratings yet

- Kkkl-3144 Power Engineering " ": Name: Ahmad Kamil Bin Mat Hussin (A122459) Lecturer: Dr. Hussain ShareefDocument6 pagesKkkl-3144 Power Engineering " ": Name: Ahmad Kamil Bin Mat Hussin (A122459) Lecturer: Dr. Hussain ShareefAhmad KamilNo ratings yet

- Section 1 QuizDocument5 pagesSection 1 Quizsinta indriani86% (7)

- The NT Insider: Writing Filters Is Hard WorkDocument32 pagesThe NT Insider: Writing Filters Is Hard WorkOveja NegraNo ratings yet

- Oracle DatabasesDocument187 pagesOracle DatabasesMonica MarciucNo ratings yet

- Machine InfDocument6 pagesMachine Infmelissad23No ratings yet

- Manjaro User Guide PDFDocument130 pagesManjaro User Guide PDFAmanda SmithNo ratings yet

- Microsoft Defender XDR Markus LintualaDocument44 pagesMicrosoft Defender XDR Markus LintualaArif IslamNo ratings yet

- Man 8035M InstDocument412 pagesMan 8035M InstshankarsreekumarNo ratings yet

- Hawkboard Quick Start GuideDocument34 pagesHawkboard Quick Start GuideneduninnamonnaNo ratings yet

- Task Scheduling in Cloud Infrastructure Using Optimization Technique Genetic AlgorithmDocument6 pagesTask Scheduling in Cloud Infrastructure Using Optimization Technique Genetic Algorithmu chaitanyaNo ratings yet

- ResumeDocument2 pagesResumeAdam GoldfineNo ratings yet

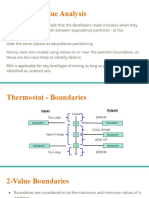

- Boundary Value AnalysisDocument9 pagesBoundary Value AnalysisChristien Froi FelicianoNo ratings yet

- LimbologDocument8 pagesLimbologJulio Alberto Perez matos vipNo ratings yet

- PPH 2108Document2 pagesPPH 2108Moery MrtNo ratings yet