lines of code (LOC) of dataset rows with individual labels from the dataset to analyze.

alyze. Next, this analysis iterates

(Figure 2). The ultimate goal of these steps is to create a through each line of code and turns it into a stream of tokens

specific dataset with the appropriate lines of code and Java using a lexer. We then collect all the tokens for each line of

statement labels, which can be used for further source code code into an array. We then concatenate all token types in

analysis and language modeling. each line of code and combine them with spaces to form

common natural language sentences that are easy to process

in NLP.

B. Lexer Generating

ANTLR is a flexible tool developer can use to analyze D. Language Modeling

source code and make language processing tools for Language modeling is creating a statistical model that

different applications [2]. This generator can analyze source can predict the possible word order in a given language. A

code by generating source code for any programming token stream is a sequence of text data from the previous

language, provided the user provides the grammar for that process. This research needs to make a numerical

language. ANTLR is based on Java but can generate source representation. One common approach is the frequency

code in many other programming languages. This research inverse document frequency (TF-IDF) method [3], which

uses ANTLR version 4 as the latest version of the tool. In assigns a weight to each token based on how frequently it

general, ANTLR will generate lexers, listeners, and parsers, occurs in the code and how unique it is across the dataset.

while this study only uses lexers. A lexer is a software First, the language modeling import the Python libraries

component that reads a stream of program code characters like CountVectorizer and TfidfTransformer, which will let

and converts it into a stream of tokens, representing a us use TF-IDF to change the textual data in the token stream

programming language's fundamental building blocks. into a numeric format. From each line of code or statement,

The first thing to do to use ANTLR to make a lexer is to we use the CountVectorizer to calculate the frequency of

get the ANTLR library from the official site. After each token in each statement, then use the TfidfTransformer

downloading the library, the next step is to provide the Java to calculate the TF-IDF weights for each token. This method

grammar that defines the rules and tokens of the will turn the token stream into a numerical representation

programming language to analyze. Grammars must be that shows each token’s importance in the source code

written in the ANTLR grammar format. The ANTLR tool context. This method results in 53 features from a dataset

generates a lexer for Java source code programs based on a with 594 lines of code. These features can then be used to

Java grammar file as input and generates source code in the classify each statement into its type.

user's specified language. This study develops the lexer

E. Machine Learning Classification

program in Python language to make classification easier.

Machine learning classification is a powerful way to sort

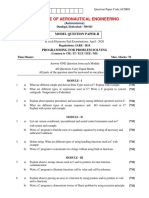

C. Source Code Analysis text data into different groups. This method is beneficial in

Source code analysis is the process of figuring out what source code analysis for finding and putting source code

the source code of a program means by looking at its statements into groups. Many machine learning models are

structure and syntax. The component used in this process is available for text classification, including Naïve Bayes,

the lexer. This process is analogous to tokenization and POS SVM, kNN, Decision Tree, and Rochio Algorithms [4].

tagging in natural language processing (NLP). Tokenization Each model has strengths and weaknesses, and choosing the

is breaking source code text into constituent words or suitable model for a particular task is essential. In order to

determine the best model for source code statement

tokens. POS tagging identifies each token types, such as

classification, it is necessary to compare the performances of

identifier, operator, keyword, or other token types. This

these different models.

information can be used to create a token stream, a list of

token names and types representing a source code file. We use Python libraries to model machine learning

Figure 3 shows a sample of this research’s the tokenization algorithms for the classification process. The classification,

and POS Tagging. process as well as the evaluation process, use K-Fold Cross-

Validation. This process use four folds so it divide the

dataset into 75% of training data and 25% of testing data.

After splitting the data set, the classification constructs a

classification model from the training data using the selected

machine learning model alternately. The program record the

model's accuracy and time consumption for the test dataset in

each fold. Finally, it calculates the average accuracy and time

consumption in each model. This process allows us to

compare the performance of different machine learning

models and choose the best fit for our source code assertion

classification.

Figure 3. Tokenization and POS Tagging in source code analysis

First, this source code analysis process imports the

generated Lexer program. Then it reads the source code file

IV. RESULT AND DISCUSSION

Model           Accuracy (%)   Time consumption (ms)
Decision Tree   95.3           4.53
Naïve Bayes     94.4           3.79
SVM             95.1           5.34
RVM             96.1           64908.16
kNN             87.3           49.13
Rocchio         83.7           61.86

Figure 4. Accuracy in Java statement classification

Figure 5. Time consumption in Java statement classification (y-axis: time in ms, 0 to 70000; bars: Decision Tree, Naïve Bayes, SVM, RVM, kNN, Rocchio)

Show all the tables and graphs produced.

Discuss the results.

So that conclusions can be drawn (leading to the conclusion).

V. CONCLUSION

Best results.

ACKNOWLEDGEMENT

This work was supported by the Indonesian Government through the Scholarship Schema of LPDP RI and Puslapdik Kemendikbudristek.

REFERENCES

[1] S. Brudzinski, "Open LaTeX Studio," 2018. [Online]. Available: https://github.com/sebbrudzinski/Open-LaTeX-Studio/. [Accessed 2023].

[2] T. Parr, The Definitive ANTLR 4 Reference, The Pragmatic Programmers, 2013, pp. 1-326.

[3] Z. Yun-tao, G. Ling and W. Yong-cheng, "An Improved TF-IDF Approach for Text Classification," Journal of Zhejiang University-Science, vol. 6, pp. 49-55, 2005.

[4] B. Agarwal and N. Mittal, "Text Classification Using Machine Learning Methods-A Survey," Proceedings of the Second International Conference on Soft Computing for Problem Solving, vol. 236, pp. 701-709, 2012.

Data Preparation

• Search GitHub for open-source software (OSS) written in Java

• Selected: Open LaTeX Studio

• 511 KB, 120 files

• Took 4 *.java files (37 KB)

• Moved each line into a CSV file: 806 lines of code

• Labeled: Declaration, Expression, Control

• Discarded the rest: Comment, Braces, Parentheses

• 594 dataset rows remain
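The data-preparation steps above can be sketched in Python as follows. This is a minimal, hypothetical helper (`extract_candidate_lines`, `to_csv`, and `SAMPLE` are illustrative names, not the authors' code): it splits a Java file into lines, drops blank lines, comment lines, and lines made only of braces or parentheses, and writes the remaining lines to CSV with an empty `label` column for manual labeling. The notes suggest the authors labeled first and discarded those categories afterwards; filtering them out with regular expressions beforehand is an assumption, and the rules are deliberately crude (for example, a statement spanning several lines is not joined).

```python
import csv
import io
import re

# Comment lines or comment continuations: "// ...", "/* ...", "* ...", "*/"
COMMENT_LINE = re.compile(r"^(//|/\*|\*)")
# Lines containing only braces, parentheses, semicolons, or whitespace: "}", "});", ")"
BRACKETS_ONLY = re.compile(r"^[{}();\s]*$")


def extract_candidate_lines(java_source):
    """Return (line_number, code) pairs, skipping blank, comment and bracket-only lines."""
    rows = []
    for number, raw in enumerate(java_source.splitlines(), start=1):
        code = raw.strip()
        if not code or COMMENT_LINE.match(code) or BRACKETS_ONLY.match(code):
            continue
        rows.append((number, code))
    return rows


def to_csv(rows):
    """Serialize rows as CSV text with an empty 'label' column for manual annotation."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["line", "code", "label"])
    for number, code in rows:
        writer.writerow([number, code, ""])
    return buffer.getvalue()


# Hypothetical sample input standing in for one of the selected *.java files.
SAMPLE = """package demo;

/* A tiny class */
public class Demo {
    // counter
    private int count = 0;

    public void tick() {
        if (count < 10) {
            count++;
        }
    }
}
"""

print(to_csv(extract_candidate_lines(SAMPLE)))
```

Leaving the label column empty reflects that the Declaration, Expression, and Control labels were assigned by hand; the script only prepares the rows.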

Lexer Generating

• Download the ANTLR library & Java grammar

• Set up the ANTLR configuration

• Set the target language to Python

• Generate a Python program to process Java source code

• Obtain the Lexer, Parser, and Listener

• Keep the Lexer
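As a sketch of the generation step, assuming Java and the ANTLR 4 tool jar are installed locally, the tool can be run with its `-Dlanguage=Python3` option so that it emits Python code. The jar name `antlr-4.13.1-complete.jar`, the grammar file `Java.g4`, the output directory, and the helper names below are assumptions for illustration. For a combined grammar named `Java`, ANTLR would typically write `JavaLexer.py`, `JavaParser.py`, and `JavaListener.py`, of which only the lexer is kept; the generated code also needs the matching `antlr4-python3-runtime` package at run time.

```python
import subprocess
from pathlib import Path


def antlr_command(jar, grammar, out_dir="generated"):
    """Build the ANTLR 4 tool command line that targets Python 3."""
    return [
        "java", "-jar", jar,   # run the ANTLR tool jar with a local Java runtime
        "-Dlanguage=Python3",  # generate Python 3 source instead of Java
        "-o", out_dir,         # directory for the generated .py files
        grammar,               # the .g4 grammar describing Java
    ]


def generate_python_recognizer(jar, grammar, out_dir="generated"):
    """Run the tool; requires Java and the jar file to be present on disk."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(antlr_command(jar, grammar, out_dir), check=True)


# Only prints the command; call generate_python_recognizer(...) to actually run it.
print(" ".join(antlr_command("antlr-4.13.1-complete.jar", "Java.g4")))
```

Separating command construction from execution keeps the configuration inspectable without requiring Java to be installed.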

Source Code Analysis

• Import the generated Lexer program

• Read each dataset row

• Analyze it with the Lexer (Tokenization & POS Tagging)

• Result: TokenStream (array)

• Tokenization: obtain the tokens from the source code

• POS Tagging: mark the type of each token

• Convert the TokenStream into a string containing the token types

• Join the token types of each line, separated by spaces

• Obtain natural-language-like sentences, ready for NLP processing
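The analysis steps above can be illustrated with a small, self-contained stand-in. The paper uses the ANTLR-generated lexer; this sketch swaps in a hypothetical regular-expression lexer (`tokenize`, `token_type_sentence`, and the token table are illustrative, not the authors' code) that covers only a few Java token types, with type names chosen to resemble those of a typical ANTLR Java grammar. With the real ANTLR Python runtime, the (type, text) pairs would instead come from the generated lexer, for example from `JavaLexer(InputStream(line)).getAllTokens()` with each type name looked up in `JavaLexer.symbolicNames`, possibly after filtering out hidden-channel tokens such as whitespace.

```python
import re

# Stand-in for the ANTLR-generated JavaLexer: only a few Java token types are covered.
KEYWORDS = {"class", "else", "for", "if", "int", "new", "private", "public",
            "return", "void", "while"}

# Order matters: longer operators ("==", "++") must be tried before "=" and "+".
TOKEN_SPEC = [
    ("WS", r"\s+"),
    ("DECIMAL_LITERAL", r"\d+"),
    ("STRING_LITERAL", r'"(?:\\.|[^"\\])*"'),
    ("WORD", r"[A-Za-z_$][A-Za-z0-9_$]*"),
    ("EQUAL", r"=="),
    ("ASSIGN", r"="),
    ("INC", r"\+\+"),
    ("ADD", r"\+"),
    ("SUB", r"-"),
    ("GT", r">"),
    ("LT", r"<"),
    ("SEMI", r";"),
    ("COMMA", r","),
    ("DOT", r"\."),
    ("LPAREN", r"\("),
    ("RPAREN", r"\)"),
    ("LBRACE", r"\{"),
    ("RBRACE", r"\}"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(line):
    """Tokenization plus 'POS tagging': return (token_type, text) pairs for one line."""
    tokens, pos = [], 0
    while pos < len(line):
        match = MASTER.match(line, pos)
        if match is None:
            raise ValueError(f"unexpected character {line[pos]!r} at column {pos}")
        kind, text = match.lastgroup, match.group()
        pos = match.end()
        if kind == "WS":
            continue  # drop whitespace instead of emitting a token for it
        if kind == "WORD":
            kind = text.upper() if text in KEYWORDS else "IDENTIFIER"
        tokens.append((kind, text))
    return tokens


def token_type_sentence(line):
    """Join the token types of one line with spaces into an NLP-ready 'sentence'."""
    return " ".join(kind for kind, _ in tokenize(line))


for statement in ["int total = count + 1;", "if (count > 10) {", "count++;"]:
    print(token_type_sentence(statement))
```

Keeping only the token types, not the token texts, is what makes two statements with different variable names produce the same sentence, which is the property the later TF-IDF step relies on.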

Language Modeling

• Import Python libraries: CountVectorizer & TfidfTransformer

• CountVectorizer: count the occurrences of each token in each line & across the set of lines

• TfidfTransformer: compute the weights of the relevant tokens in each line

• Result: a dataframe of 53 weighted features over 594 dataset rows

• Ready for classification

Statement Classification

• Import Python libraries for the ML models: DecisionTree, NaiveBayes, SVM, RVM, kNN, Rocchio

• Set up K-Fold Cross-Validation: 4 folds

• 75% training data & 25% testing data

• Select an ML method

• Run 4 iterations:

  • Build a classification model based on the method

  • Compute the accuracy & time

• Compute the average accuracy & time for each ML method
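The classification procedure above can be sketched with scikit-learn, under several assumptions: the twelve labeled sentences are illustrative, not the paper's dataset; `TfidfVectorizer` is used as a shortcut that is equivalent to `CountVectorizer` followed by `TfidfTransformer`; `NearestCentroid` stands in for the Rocchio classifier (scikit-learn's documentation notes it is known as Rocchio when applied to TF-IDF vectors); and RVM is omitted because scikit-learn does not include it. Timing covers fitting plus scoring on each test fold, which is one possible reading of "time consumption".

```python
import time

import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import KFold
from sklearn.naive_bayes import MultinomialNB
from sklearn.neighbors import KNeighborsClassifier, NearestCentroid
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Illustrative token-type sentences with Declaration / Expression / Control labels.
DATA = [
    ("INT IDENTIFIER ASSIGN DECIMAL_LITERAL SEMI", "Declaration"),
    ("PRIVATE INT IDENTIFIER SEMI", "Declaration"),
    ("IDENTIFIER IDENTIFIER ASSIGN NEW IDENTIFIER LPAREN RPAREN SEMI", "Declaration"),
    ("INT IDENTIFIER SEMI", "Declaration"),
    ("IDENTIFIER ASSIGN IDENTIFIER ADD DECIMAL_LITERAL SEMI", "Expression"),
    ("IDENTIFIER INC SEMI", "Expression"),
    ("IDENTIFIER DOT IDENTIFIER LPAREN IDENTIFIER RPAREN SEMI", "Expression"),
    ("IDENTIFIER ASSIGN DECIMAL_LITERAL SEMI", "Expression"),
    ("IF LPAREN IDENTIFIER GT DECIMAL_LITERAL RPAREN LBRACE", "Control"),
    ("WHILE LPAREN IDENTIFIER LT IDENTIFIER RPAREN LBRACE", "Control"),
    ("FOR LPAREN INT IDENTIFIER ASSIGN DECIMAL_LITERAL SEMI", "Control"),
    ("RETURN IDENTIFIER SEMI", "Control"),
]

# Factories so each fold trains a fresh, untrained model.
MODELS = {
    "Decision Tree": lambda: DecisionTreeClassifier(random_state=0),
    "Naive Bayes": lambda: MultinomialNB(),
    "SVM": lambda: SVC(kernel="linear"),
    "kNN": lambda: KNeighborsClassifier(n_neighbors=3),
    "Rocchio (NearestCentroid)": lambda: NearestCentroid(),
}


def evaluate(data, n_splits=4):
    """Return {model name: (mean accuracy, mean time in ms)} over K folds."""
    texts = [text for text, _ in data]
    y = np.array([label for _, label in data])
    # TF-IDF is fitted on the whole dataset before splitting, mirroring the notes' order.
    X = TfidfVectorizer(token_pattern=r"\S+", lowercase=False).fit_transform(texts)
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=0)  # 4 folds: 75% / 25%
    results = {}
    for name, make_model in MODELS.items():
        accuracies, times_ms = [], []
        for train_idx, test_idx in folds.split(X):
            model = make_model()
            start = time.perf_counter()
            model.fit(X[train_idx], y[train_idx])
            accuracies.append(model.score(X[test_idx], y[test_idx]))
            times_ms.append((time.perf_counter() - start) * 1000)
        results[name] = (float(np.mean(accuracies)), float(np.mean(times_ms)))
    return results


for name, (acc, ms) in evaluate(DATA).items():
    print(f"{name:26s} accuracy={acc:.1%}  time={ms:.2f} ms")
```

With so few rows the accuracies are not meaningful; the sketch only shows the loop structure. Fitting TF-IDF before splitting follows the order described above; wrapping the vectorizer and model in a scikit-learn `Pipeline` would instead refit TF-IDF inside each training fold.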
