Stateless Algorithms in Reinforcement Learning

Uploaded by

LOGESH WARAN P

Stateless algorithms in reinforcement learning operate without explicitly storing or referencing past states. This makes them more computationally efficient for environments with large or continuous state spaces. Three common stateless algorithms are: 1) Naive algorithms, which choose actions based solely on rewards without considering states, 2) Epsilon-greedy algorithms, which balance exploration and exploitation by choosing random actions with probability ε, and 3) Upper bounding methods like UCB, which use optimistic estimates to encourage exploration of unknown actions.

Copyright

© All Rights Reserved

Available Formats

DOCX, PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentStateless algorithms in reinforcement learning operate without explicitly storing or referencing past states. This makes them more computationally efficient for environments with large or continuous state spaces. Three common stateless algorithms are: 1) Naive algorithms which choose actions based solely on rewards without considering states, 2) Epsilon-greedy algorithms which balance exploration and exploitation by choosing random actions with probability ε, and 3) Upper bounding methods like UCB which use optimistic estimates to encourage exploration of unknown actions.

Copyright:

© All Rights Reserved

Available Formats

Download as DOCX, PDF, TXT or read online from Scribd

0 ratings0% found this document useful (0 votes)

16 views4 pagesStateless Algorithms in Reinforcement Learning

Uploaded by

LOGESH WARAN PStateless algorithms in reinforcement learning operate without explicitly storing or referencing past states. This makes them more computationally efficient for environments with large or continuous state spaces. Three common stateless algorithms are: 1) Naive algorithms which choose actions based solely on rewards without considering states, 2) Epsilon-greedy algorithms which balance exploration and exploitation by choosing random actions with probability ε, and 3) Upper bounding methods like UCB which use optimistic estimates to encourage exploration of unknown actions.

Copyright:

© All Rights Reserved

Available Formats

Download as DOCX, PDF, TXT or read online from Scribd

You are on page 1of 4

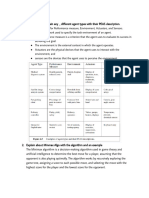

Stateless Algorithms in Reinforcement Learning:

Stateless algorithms in Reinforcement Learning (RL) differ from state-based methods such as Q-learning in that they do not store or update per-state value estimates (e.g., a Q-table) and do not reference past states when choosing an action. Instead, they keep only per-action statistics, such as how often each action was tried and the average reward it returned.

This makes them computationally efficient and suitable where memory is limited, or where the state space is so large or continuous that storing and handling states becomes impractical.
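To make the contrast with a Q-table concrete, here is a minimal sketch of the only bookkeeping a stateless agent needs: one count and one running average per action. The class name `ActionStats` and its methods are hypothetical names chosen for illustration, not part of any library.

```python
class ActionStats:
    """Per-action reward statistics; no state is stored anywhere."""

    def __init__(self, n_actions):
        self.counts = [0] * n_actions    # how many times each action was tried
        self.means = [0.0] * n_actions   # running average reward per action

    def update(self, action, reward):
        # Incremental mean: new_mean = old_mean + (reward - old_mean) / n
        self.counts[action] += 1
        self.means[action] += (reward - self.means[action]) / self.counts[action]

    def best_action(self):
        # Index of the action with the highest average reward so far
        return max(range(len(self.means)), key=lambda a: self.means[a])
```

Memory use grows with the number of actions only, which is why the size of the state space does not matter here.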

1. Naive Algorithm:

Concept: Chooses actions purely from the rewards observed so far, without considering the current state or context.

This is the simplest stateless algorithm. Once it settles on an action it is purely "greedy" or "exploitation-only."

After its initial trials, the agent always chooses the action with the highest estimated reward, based on its current knowledge.

This can be effective in exploiting existing knowledge but does no further exploration, potentially missing out on better strategies in the long run.

The agent optimizes its policy directly. With no state to condition on, the policy is simply a preference over actions.

Implementation: Try actions at random and record the reward each one returns. Then update the policy to favor the actions that have led to the highest average rewards.

Example: An agent in a bandit problem simply pulls the arm that has historically given the highest average reward, regardless of any other context.

Pros: Simple to implement and computationally efficient, with no need for state storage.

Cons: Ignores potentially valuable information in the current state, leading to suboptimal performance in complex environments.

Reward estimates built from random trials are noisy, so convergence can be slow and the agent may settle on a suboptimal action.
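The naive approach can be sketched as a random exploration phase followed by a commit to the best average. This is one reasonable reading of the description above, not a definitive implementation; `naive_bandit`, `pull`, `explore_steps`, and `total_steps` are hypothetical names, and `pull(a)` is assumed to return the reward of action `a`.

```python
import random

def naive_bandit(pull, n_actions, explore_steps, total_steps, rng):
    """Explore at random, then always play the action with the best average."""
    counts = [0] * n_actions
    sums = [0.0] * n_actions
    total = 0.0

    # Exploration phase: pick actions at random and record their rewards.
    for _ in range(explore_steps):
        a = rng.randrange(n_actions)
        r = pull(a)
        counts[a] += 1
        sums[a] += r
        total += r

    # Average reward per action; actions never tried are ranked last.
    means = [sums[a] / counts[a] if counts[a] else float("-inf")
             for a in range(n_actions)]
    best = max(range(n_actions), key=lambda a: means[a])

    # Exploitation phase: commit to the best action, with no more exploration.
    for _ in range(total_steps - explore_steps):
        total += pull(best)
    return best, total


if __name__ == "__main__":
    # Hypothetical 3-armed Bernoulli bandit with assumed success rates.
    rates = [0.2, 0.8, 0.5]
    env_rng = random.Random(1)
    best, total = naive_bandit(lambda a: float(env_rng.random() < rates[a]),
                               3, 60, 1000, random.Random(0))
    print(best, total)
```

If `explore_steps` is small, the averages are noisy and the commit step can lock in a poor arm, which is the weakness noted under Cons.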

2. Epsilon-Greedy Algorithm:

Concept: Balances exploiting known good actions against exploring new ones.

Most of the time the agent takes the greedy action, the one with the highest estimated reward. Occasionally it takes a random action instead, to explore the action space.

The ε value can be adjusted dynamically to control the exploration-exploitation trade-off.

This is a widely used stateless algorithm due to its simplicity and effectiveness in various RL tasks.

Implementation: With probability ε, choose an action uniformly at random (exploration). With probability (1-ε), choose the action with the highest estimated value (exploitation).

Tuning: Epsilon (ε) controls the exploration-exploitation trade-off. A higher ε encourages exploration but may sacrifice immediate rewards, while a lower ε prioritizes exploiting known good actions.

Pros: Balances exploration and exploitation while leveraging learned knowledge, often leading to faster convergence than purely random or purely greedy approaches.

Relatively simple to implement.

Cons: Performance depends heavily on ε, and choosing a good value can be challenging because it depends on the problem complexity and learning objectives.

Because exploration is uniformly random, it might not be suitable for complex environments.
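The selection rule described in this section can be sketched in a few lines. The function names `epsilon_greedy_action` and `run_epsilon_greedy` are hypothetical, and `pull(a)` is again assumed to return the reward of action `a`; a fixed ε is used here, although it could be decayed over time.

```python
import random

def epsilon_greedy_action(means, epsilon, rng):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(means))                   # explore
    return max(range(len(means)), key=lambda a: means[a])  # exploit

def run_epsilon_greedy(pull, n_actions, epsilon, steps, rng):
    counts = [0] * n_actions
    means = [0.0] * n_actions
    for _ in range(steps):
        a = epsilon_greedy_action(means, epsilon, rng)
        r = pull(a)
        counts[a] += 1
        means[a] += (r - means[a]) / counts[a]  # incremental average
    return counts, means


if __name__ == "__main__":
    # Hypothetical 3-armed Bernoulli bandit with assumed success rates.
    rates = [0.2, 0.8, 0.5]
    env_rng = random.Random(1)
    counts, means = run_epsilon_greedy(
        lambda a: float(env_rng.random() < rates[a]),
        3, 0.1, 2000, random.Random(0))
    print(counts, means)
```

Setting `epsilon` to 0 reduces this to the purely greedy rule, and setting it to 1 gives purely random play, so the two extremes bracket the trade-off discussed under Tuning.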

3. Upper Bounding Methods:

Concept: Employ optimistic estimates of the possible reward of less-explored actions, encouraging exploration through controlled optimism.

Instead of relying only on a point estimate of each action's expected reward, these methods maintain an upper bound on it.

They build confidence intervals around the estimated values. An interval narrows as its action is tried more often, so the chosen action becomes increasingly likely to be near optimal.

The best-known example is UCB (Upper Confidence Bound). Thompson Sampling is often grouped with these methods, although it samples from a posterior distribution over action values rather than computing an upper bound.

Upper bounding methods can be computationally more expensive than epsilon-greedy but offer better theoretical guarantees and can be effective in situations with limited data or uncertainty.

Example: The UCB1 (Upper Confidence Bound) algorithm adds a confidence bonus to the estimated reward of each action. The bonus is larger for actions that have been tried less often, which favors less explored options with potentially higher rewards.

Implementation: Estimate an upper bound on the expected value of each action, using a technique such as UCB, and choose the action with the most optimistic (highest) upper bound.

Pros: Provides theoretical bounds on regret (the difference between optimal and achieved rewards), offering good performance in uncertain environments.

Efficient exploration. The basic UCB1 assumes a finite set of actions, but variants exist for large or continuous action sets.

Cons: More complex to implement than the simpler algorithms, and can be computationally expensive for large action spaces because every action's bound is recalculated at each step.
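As an illustration of choosing the highest upper bound, here is a minimal sketch of UCB1, assuming rewards lie in [0, 1] and using the common bonus term sqrt(2 ln t / n_a), where t is the current step and n_a is the number of times action a has been tried. The function names and `pull(a)` are hypothetical.

```python
import math

def ucb1_action(counts, means, t):
    """Return the action with the highest upper confidence bound at step t."""
    # Try every action once first, so each bound is well defined.
    for a, n in enumerate(counts):
        if n == 0:
            return a
    # Estimated reward plus a bonus that shrinks as an action is tried more.
    scores = [means[a] + math.sqrt(2.0 * math.log(t) / counts[a])
              for a in range(len(counts))]
    return max(range(len(scores)), key=lambda a: scores[a])

def run_ucb1(pull, n_actions, steps):
    counts = [0] * n_actions
    means = [0.0] * n_actions
    for t in range(1, steps + 1):
        a = ucb1_action(counts, means, t)
        r = pull(a)
        counts[a] += 1
        means[a] += (r - means[a]) / counts[a]  # incremental average
    return counts, means


if __name__ == "__main__":
    # Hypothetical 2-armed bandit with fixed rewards, for illustration only.
    counts, means = run_ucb1(lambda a: [0.2, 0.8][a], 2, 200)
    print(counts, means)
```

Unlike ε-greedy, there is no random choice here: exploration comes entirely from the bonus term, which is why rarely tried actions keep getting revisited until their bound drops below the best action's.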
