
(3.1) Correlation Analysis

B.Com (Hons.) Ist Year

Unit no: 1

Paper No:IV

Types of Statistical Data

Fellow

Dr.Kawal Gill, Associate Professor

Department/College: Shri Guru Gobind Singh College of Commerce

Author

Dr. Madhu Gupta, Associate Professor

College/Department: Janki devi Memorial College of Commerce,

University of Delhi

Reviewer

Dr. Bindra Prasad, Associate Professor

College/ Department: Department of Commerce, Shaheed Bhagat

Singh College, University of Delhi

Institute of Lifelong Learning, University of Delhi

(3.1) Correlation Analysis

Table of Contents

(3.1) Correlation Analysis

o 1.1 Introduction

o 1.2 Scatter Diagram

o 1.3 Karl Pearson’s Coefficient Of Correlation

o 1.4 Probable Error Of Correlation Coefficient

o 1.5 Spearman’s rank correlation

o Summary

o Exercise

o References

o Glossary


1.1 Introduction

So far, we have analyzed series involving a single variable (or measurement), such as the heights of different people, the marks of students or the wages of workers. Such data are referred to as univariate data, i.e., data involving only one variable. In this chapter and the next, we will study ‘bivariate data’, i.e., we will collect and analyze data relating to two variables (measurements) for each element of the population or sample. For example, we may measure the height and weight of different persons, or the price and demand of different commodities. Bivariate data are studied to analyze the relationship between the two variables. For example, if we study the height and weight of the individuals in a group, we want to see whether these two variables have any association or covariation between them, so that if one variable changes the other also changes, either in the same direction or in the opposite direction. We may notice that taller men are usually heavier and shorter men are usually lighter. Similarly, if we study the price and demand of a commodity, we may find that as price increases demand decreases, and as price decreases demand increases. When we observe this kind of phenomenon, we say that the two or more variables are mutually related or co-related. Correlation analysis studies this relationship between two or more variables.

Figure 1.1: Bivariate Data and Covariation

1.1.1 Meaning

Correlation is one of the most common and most useful statistics. Correlation is an

analysis of covariation between two or more variables. If, with a change in one variable,

the other variable also changes in the same or in the opposite direction, then we say the two

variables are correlated. For example, height and weight, price and demand, cost and

profits are generally correlated.

1.1.2 Types Of Correlation

1. Positive and Negative Correlation

Correlation is positive when the variables move in the same direction, that is, when one

variable increases the other also increases and when one decreases the other also decreases.

Some examples of positive correlation are

Expenditure on advertisement and sales

Personal Income and savings

Temperature and sale of ice cream

Profits of a company and the market price of its shares

Yield of crop and amount of fertilizer used


Correlation is negative when the two variables move in opposite directions, that is, an

increase in the value of one variable is accompanied by a decrease in the value of the other variable, or

a decrease in the value of one variable is accompanied by an increase in the value of the other

variable. Some examples of negative correlation are

Price and demand of a product

Medical facilities and death rate

Cost and profits

Temperature and demand of heater

Exports and custom duty

Figure 1.3: Positive and Negative Correlation

2. Simple, Multiple and Partial Correlation

This classification is based on the number of variables involved in the study and the techniques involved in measuring the correlation.

When we study the relationship between two variables, we call it simple correlation like

studying the relationship between marks in statistics and marks in accountancy of

different students.

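When we study the relationship among three or more variables, it is called multiple correlation. Thus, if we study the relationship between profit, sales, and cost of production of any product, we call it multiple correlation.

In partial correlation, we study the relationship between two variables while keeping the effect of the other variables constant.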

3. Linear and Non-linear Correlation

If the ratio of the amount of change in one

variable to the amount of corresponding change in the other variable is constant,

correlation is said to be linear. In other words, when two variables X and Y form a linear

relationship Y = a + bX, it will be a case of linear correlation. Consider the following

example:


| X | 3 | 5 | 9 | 12 | 13 |
|---|---|---|---|---|---|
| Y | 9 | 13 | 21 | 27 | 29 |

Here, when X changes from 3 to 5 (i.e., by 2 units), Y changes from 9 to 13 (i.e., by 4 units). The ratio of the change in Y to the change in X is 4/2 = 2. When X changes from 5 to 9 (i.e., by 4 units), Y changes from 13 to 21 (i.e., by 8 units). The ratio of the two changes is 8/4 = 2 (same as before). You may take any combination of the changes in the two variables; the ratio of changes in the example is always 2. This is linear correlation. The values of X and Y fall on the straight line Y = 3 + 2X. In the case of linear correlation the pairs of values of X and Y, when plotted on graph paper, give a straight line.
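The constant-ratio check described above can be sketched in a few lines of Python. This is only an illustration using the five (X, Y) pairs of the example; the variable names are chosen here and are not part of the original lesson.

```python
# Data from the example above.
xs = [3, 5, 9, 12, 13]
ys = [9, 13, 21, 27, 29]

# Ratio of the change in Y to the change in X for each consecutive pair.
points = list(zip(xs, ys))
ratios = [(y2 - y1) / (x2 - x1)
          for (x1, y1), (x2, y2) in zip(points, points[1:])]
print(ratios)  # [2.0, 2.0, 2.0, 2.0]

# A constant ratio means every point lies on one straight line, here Y = 3 + 2X.
on_line = all(y == 3 + 2 * x for x, y in points)
print(on_line)  # True
```

If any of the ratios differed from the others, the points would not lie on a single straight line and the correlation would be non-linear.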

However, if the ratio of the amount of change in one variable to the amount of change in

the other variable is not constant, correlation is said to be non-linear or curvilinear.

Figure 1.4: Linear and Non-linear Correlations

1.1.3 Correlation And Causation

Correlation between two variables does not necessarily indicate causation, i.e., a cause and effect relationship between the variables. The coefficient of correlation must be thought of only as a measure of covariation and not as something that proves causation (see web link 1.1). However, causation will always result in correlation. An observed correlation may be due to any one or more of the following reasons:

Figure 1.5: Correlation and Causation

1. There is a cause and effect relationship between the two variables

A variation in one variable may be caused (directly or indirectly) by a variation in the

other, that is, one variable being a cause of the other. The variable that is supposed to

be the cause of variation in the other is taken as independent variable and the other as

dependent variable. As to which is cause and which the effect is to be judged from the

circumstances of the case. For example, dividends on shares affect the share prices

rather than vice versa. Thus dividend series would be called the independent variable and

would be shown on X axis and share prices as dependent variable and would be shown

on Y-axis on the graph.

Figure 1.6: Cause and Effect Relationship

2. Both the variables are mutually affecting each other

The correlation between the two variables may be a result of inter dependent relationship

so that neither can be designated as the cause and the other the effect. Generally in case

of economic variables both the correlated variables influence each other. For example,

quantity demanded and price of a product affect each other. Similarly, there is mutual

dependence between price and production.


Figure 1.7: Mutual Dependence of Variables

3. Both the correlated variables are affected by some other variable

Covariation of the two variables may be due to a common cause or causes affecting each

variable in the same way or in the opposite ways. For example, a high degree of positive

correlation between the yield per acre of rice and jute may be due to the fact that both

are related to the amount of rainfall but otherwise none of the two variables is the cause

of the other.

Figure 1.8: Both Variables Affected by a Third Variable

4. The correlation may arise due to random or chance factor

It might sometimes happen that a fair degree of correlation between two variables is


observed in a sample even though there is no relationship whatsoever between the

variables in the universe from which the sample is drawn. For example, it might be found

that in a given group of persons, there was a positive correlation between the size of

their shoes and the amount of money in their pockets but actually, there exists no such

relationship. Moreover, there are chances that another sample would yield quite different

results. This especially happens in case of small samples. It may also arise, because of

the bias of the investigator in selecting the sample. Such correlations are known as

spurious or nonsense correlations (see web link 1.2 and 1.3).

Figure 1.9: Nonsense Correlation

The above points make it clear that correlation is only a mathematical relationship, which

implies nothing in itself about cause and effect. Thus, while interpreting the correlation

coefficient it is essential to see if there is any likelihood of any relationship existing

between variables under study. If there were no such likelihood, the observed

correlations would be meaningless.

1.1.4 Methods Of Studying Correlation

There are many methods of studying correlation. The most popular ones are:

1. Scatter diagram

2. Pearson’s co-efficient of correlation and

3. Rank method

1.2 Scatter Diagram

1.2.1 Meaning And Method

Under this method, the observed data are plotted on graph paper, taking one variable on the X-axis and the other on the Y-axis. The scatter of the dots so plotted indicates whether the correlation is positive or negative and also gives an idea of the degree of the relationship. That is why it is called a scatter diagram. The greater the scatter of the plotted points on the graph, the weaker is the relationship between the two variables. If the points fall on a straight line having either a positive or a negative slope, the correlation is perfect. If this line runs from the bottom left corner to the top right, the correlation is said to be perfect positive, but if it runs from the top left to the bottom right, it is perfect negative correlation.

Figure 1.10: Perfect +ve and –ve Correlation

Illustration: From the data given below, draw a scatter diagram and comment on the nature of correlation.

| Hours of study (X) | 18 | 16 | 14 | 12 | 10 | 8 | 6 | 4 | 2 |
|---|---|---|---|---|---|---|---|---|---|
| Marks (Y) | 30 | 32 | 28 | 26 | 22 | 24 | 20 | 16 | 18 |

Solution:


Figure 1.13: Scatter Diagram

The points plotted are rising from the lower left hand corner to the upper right hand

corner of the graph, which shows that the two variables have positive correlation. As

the points are not on a straight line but are very close to it, there is a high degree of

positive correlation between them.

1.2.2 Merits And Limitations

Merits

1. It is a very simple method of studying correlation. It is easy to draw, understand and

interpret a scatter diagram.

2. It is not affected by the values of extreme items.

Limitations

1. It does not give the precise degree of relationship between the variables. It only gives

an idea about the degree of correlation.

2. It is not amenable to mathematical treatment.

Figure 1.14: Merits & Limitations


1.3 Karl Pearson’s Coefficient of Correlation

1.3.1 Meaning And Calculation

Karl Pearson’s method of calculating the coefficient of correlation, suggested by the great British statistician Karl Pearson (1857-1936), is the most widely used method in practice. Also known as the product moment coefficient of correlation, it measures the intensity or magnitude of the linear relationship between two variable series based on the covariance of the concerned variables. Pearson’s coefficient of correlation is denoted by the symbol ‘r’. The formula for computing the correlation coefficient (r) for two variables X and Y is

$$r = \frac{\sum xy}{N \sigma_x \sigma_y}$$

where $x = X - \bar{X}$ and $y = Y - \bar{Y}$ are the deviations of the two series from their respective means,

N = number of pairs of observations,

$\sigma_x$ = standard deviation of series X and

$\sigma_y$ = standard deviation of series Y.

Figure 1.15: Karl Pearson - The Great Statistician

This formula can also be written as

$$r = \frac{\sum xy}{\sqrt{\sum x^2 \cdot \sum y^2}}$$

The value of the correlation coefficient (r) will always lie between -1 and +1. When r = +1, it means there is a perfect positive correlation between the two variables. When r = -1, it means the two variables have perfect negative correlation between them. When r = 0, there is no correlation between the variables. When r is positive, the correlation is also positive; when r is negative, the correlation is also negative. Thus, Pearson’s coefficient of correlation tells us both the degree and the direction of the relationship between two variables.

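Illustration: Following is the data relating to sales revenues and corresponding advertising expenditure made by a company. Calculate Karl Pearson’s coefficient of correlation from the given data.

| Sales revenue (in Rs lakhs) X | 23 | 27 | 28 | 28 | 29 | 30 | 31 | 33 | 35 | 36 |
|---|---|---|---|---|---|---|---|---|---|---|
| Advertising expenditure (in 000 Rs) Y | 18 | 20 | 22 | 27 | 21 | 29 | 27 | 29 | 28 | 29 |

Solution: Calculation of Karl Pearson’s coefficient of correlation

| Sales revenue (in Rs lakhs) X | Advertising expenditure (in 000 Rs) Y | x = (X – 30) | y = (Y – 25) | x² | y² | xy |
|---|---|---|---|---|---|---|
| 23 | 18 | -7 | -7 | 49 | 49 | 49 |
| 27 | 20 | -3 | -5 | 9 | 25 | 15 |
| 28 | 22 | -2 | -3 | 4 | 9 | 6 |
| 28 | 27 | -2 | 2 | 4 | 4 | -4 |
| 29 | 21 | -1 | -4 | 1 | 16 | 4 |
| 30 | 29 | 0 | 4 | 0 | 16 | 0 |
| 31 | 27 | 1 | 2 | 1 | 4 | 2 |
| 33 | 29 | 3 | 4 | 9 | 16 | 12 |
| 35 | 28 | 5 | 3 | 25 | 9 | 15 |
| 36 | 29 | 6 | 4 | 36 | 16 | 24 |
| ∑X = 300 | ∑Y = 250 | ∑x = 0 | ∑y = 0 | ∑x² = 138 | ∑y² = 164 | ∑xy = 123 |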
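The totals in the table can be reproduced, and the coefficient evaluated, with a short Python sketch. It assumes the product-moment form r = ∑xy / √(∑x² · ∑y²) with deviations taken from the actual means (30 and 25 here); the function name `pearson_r` is illustrative and not part of the original lesson.

```python
from math import sqrt

sales = [23, 27, 28, 28, 29, 30, 31, 33, 35, 36]        # X, Rs lakhs
advertising = [18, 20, 22, 27, 21, 29, 27, 29, 28, 29]  # Y, Rs thousand

def pearson_r(xs, ys):
    """r = sum(xy) / sqrt(sum(x^2) * sum(y^2)), deviations taken from the means."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    sum_xy = sum(a * b for a, b in zip(dx, dy))   # 123 for this data
    sum_x2 = sum(a * a for a in dx)               # 138
    sum_y2 = sum(b * b for b in dy)               # 164
    return sum_xy / sqrt(sum_x2 * sum_y2)

r = pearson_r(sales, advertising)
print(round(r, 2))  # 0.82
```

On this reading, r = 123 / √(138 × 164) ≈ 0.82, which indicates a high degree of positive correlation between sales revenue and advertising expenditure.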


Indirect methods of finding correlation coefficient

The above discussed formula of finding the coefficient of correlation may be called direct

formula (i.e., the formula that comes directly from the definition of the coefficient of

correlation). The formula can be given several other shapes. Some of the shapes, that

are convenient for calculation purposes, have been adopted as indirect methods.

When no deviations are taken

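The formula for calculating the correlation coefficient without taking deviations from the mean is

$$r = \frac{N\sum XY - \sum X \sum Y}{\sqrt{N\sum X^2 - (\sum X)^2}\,\sqrt{N\sum Y^2 - (\sum Y)^2}}$$

The formula is useful when calculations are done using a calculator or computer.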

Illustration: Calculate coefficient of correlation from the following data:

N = 10, ∑X = 60, ∑Y = 40, ∑X² = 494, ∑Y² = 212 and ∑XY = 288
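A minimal Python sketch of this calculation follows. It assumes the usual raw-sums form r = (N∑XY − ∑X∑Y) / √[(N∑X² − (∑X)²)(N∑Y² − (∑Y)²)]; the function name `r_from_sums` is hypothetical.

```python
from math import sqrt

def r_from_sums(n, sum_x, sum_y, sum_x2, sum_y2, sum_xy):
    """Pearson's r computed directly from raw sums, without taking deviations."""
    numerator = n * sum_xy - sum_x * sum_y
    denominator = sqrt((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2))
    return numerator / denominator

# Values given in the illustration.
r = r_from_sums(n=10, sum_x=60, sum_y=40, sum_x2=494, sum_y2=212, sum_xy=288)
print(round(r, 3))  # 0.575
```

Here the numerator is 2880 − 2400 = 480 and the denominator is √(1340 × 520), giving r ≈ 0.575, a moderate positive correlation.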


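Illustration: Compute Karl Pearson’s coefficient of correlation between price and demand from the data given below:

| Price (in Rs) | 100 | 102 | 104 | 107 | 105 | 112 | 105 | 101 |
|---|---|---|---|---|---|---|---|---|
| Demand (in units) | 150 | 120 | 130 | 110 | 120 | 120 | 190 | 250 |

Solution: Calculation of Karl Pearson’s coefficient of correlation

| Price (in Rs) X | Demand (in units) Y | dx = X - 104 | dy = Y - 148 | dx² | dy² | dxdy |
|---|---|---|---|---|---|---|
| 100 | 150 | -4 | 2 | 16 | 4 | -8 |
| 102 | 120 | -2 | -28 | 4 | 784 | 56 |
| 104 | 130 | 0 | -18 | 0 | 324 | 0 |
| 107 | 110 | 3 | -38 | 9 | 1444 | -114 |
| 105 | 120 | 1 | -28 | 1 | 784 | -28 |
| 112 | 120 | 8 | -28 | 64 | 784 | -224 |
| 105 | 190 | 1 | 42 | 1 | 1764 | 42 |
| 101 | 250 | -3 | 102 | 9 | 10404 | -306 |
| ∑X = 836 | ∑Y = 1190 | ∑dx = 4 | ∑dy = 6 | ∑dx² = 104 | ∑dy² = 16292 | ∑dxdy = -582 |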
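The column totals above can be turned into a value of r with the short-cut (assumed-mean) formula. The formula itself did not survive in this copy of the lesson, so the sketch below assumes the standard form r = (N∑dxdy − ∑dx∑dy) / √[(N∑dx² − (∑dx)²)(N∑dy² − (∑dy)²)]; the function name `r_assumed_mean` is hypothetical.

```python
from math import sqrt

price = [100, 102, 104, 107, 105, 112, 105, 101]    # X
demand = [150, 120, 130, 110, 120, 120, 190, 250]   # Y

def r_assumed_mean(xs, ys, a_x, a_y):
    """Pearson's r using deviations from assumed means a_x and a_y."""
    n = len(xs)
    dx = [x - a_x for x in xs]
    dy = [y - a_y for y in ys]
    s_dx, s_dy = sum(dx), sum(dy)                  # 4 and 6 for this data
    s_dx2 = sum(d * d for d in dx)                 # 104
    s_dy2 = sum(d * d for d in dy)                 # 16292
    s_dxdy = sum(a * b for a, b in zip(dx, dy))    # -582
    numerator = n * s_dxdy - s_dx * s_dy
    denominator = sqrt((n * s_dx2 - s_dx ** 2) * (n * s_dy2 - s_dy ** 2))
    return numerator / denominator

r = r_assumed_mean(price, demand, a_x=104, a_y=148)
print(round(r, 2))  # -0.45
```

Under this assumption r ≈ -0.45, i.e., a moderate negative correlation between price and demand. Because r does not depend on the choice of origin, any other assumed means would give the same value.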


Correlation of grouped data

When the number of observations is large, the data can be classified into a bivariate frequency table (or correlation table, as it is generally called), taking one variable in the column headings and the other in the stubs. The frequencies are entered in the body of the table. Similar formulae for calculating the correlation coefficient may be deduced.

Direct method

Indirect methods


Here f is the corresponding frequency and N = ∑f. The meaning of the other symbols is the

same as before. The last formula is the most useful for calculation purposes.

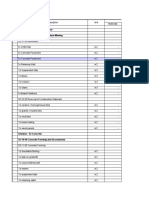

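Illustration: Find Karl Pearson’s coefficient of correlation from the following data of income (in 000 Rs) and savings (in 000 Rs).

| Savings \ Income | 100 | 101 | 102 | 103 | 104 | Total |
|---|---|---|---|---|---|---|
| 5 | - | 1 | - | - | - | 1 |
| 6 | 2 | - | 3 | 1 | - | 6 |
| 7 | - | 5 | 2 | 1 | 2 | 10 |
| 8 | - | - | 2 | 2 | 1 | 5 |
| 9 | - | - | - | 2 | 1 | 3 |
| Total | 2 | 6 | 7 | 6 | 4 | 25 |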

Solution: Calculation of Karl Pearson’s coefficient of correlation


(Note: figures in boxes are the product of the cell frequency and the corresponding dx and dy, giving rise to fdxdy of the cell.)

We have ∑f = 25, ∑fdx = 4, ∑fdx² = 36, ∑fdy = 3, ∑fdy² = 27 and ∑fdxdy = 17
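The sums quoted above can be recomputed from the frequency table and substituted into the grouped-data version of the short-cut formula. The sketch below makes several assumptions that are not confirmed by this copy of the lesson: that income (100 to 104) runs across the columns and savings (5 to 9) down the rows, that the assumed means are 102 and 7, and that the intended formula is r = (N∑fdxdy − ∑fdx∑fdy) / √[(N∑fdx² − (∑fdx)²)(N∑fdy² − (∑fdy)²)].

```python
from math import sqrt

income = [100, 101, 102, 103, 104]   # column values (assumed to be X)
savings = [5, 6, 7, 8, 9]            # row values (assumed to be Y)
freq = [                             # cell frequencies, one list per savings row
    [0, 1, 0, 0, 0],
    [2, 0, 3, 1, 0],
    [0, 5, 2, 1, 2],
    [0, 0, 2, 2, 1],
    [0, 0, 0, 2, 1],
]
a_x, a_y = 102, 7                    # assumed means

n = s_fdx = s_fdy = s_fdx2 = s_fdy2 = s_fdxdy = 0
for y, row in zip(savings, freq):
    for x, f in zip(income, row):
        dx, dy = x - a_x, y - a_y
        n += f
        s_fdx += f * dx
        s_fdy += f * dy
        s_fdx2 += f * dx * dx
        s_fdy2 += f * dy * dy
        s_fdxdy += f * dx * dy

print(n, s_fdx, s_fdx2, s_fdy, s_fdy2, s_fdxdy)  # 25 4 36 3 27 17

r = (n * s_fdxdy - s_fdx * s_fdy) / sqrt(
    (n * s_fdx2 - s_fdx ** 2) * (n * s_fdy2 - s_fdy ** 2))
print(round(r, 2))  # 0.54
```

The recomputed sums agree with the values stated in the text, and under the assumed formula r ≈ 0.54, a moderate positive correlation between income and savings.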

1.3.2 Interpretation Of ‘r’


Figure 1.17: Interpretation of ‘r’

Great care must be exercised in interpreting the value of Pearson’s correlation coefficient

otherwise fallacious conclusions can be drawn. The following general rules will be helpful

while interpreting the value of ‘r’:

1. r = +1 implies that there is a perfect positive correlation between the variables

2. r = -1 implies that there is a perfect negative correlation between the variables

3. r = 0 implies that there is no correlation between the variables

4. There are no set guidelines for the interpretation of the values of r, which lies

between +1 and -1. The maximum we can conclude is that the closer the r to +1

or -1, the closer is the relationship between the variables.

5. The reliability or significance of r depends on many other measures. Some of

these are the probable error, the coefficient of determination and Student’s t-test.

Correlation coefficient is better understood and interpreted with these measures,

as it is observed that the closeness of the relationship between variables is not

proportional to the value of r (explained later in the chapter).

1.3.3 Properties Of Correlation Coefficient (r)

1. Correlation coefficient is a pure number and written without any units of

measurement.

2. The value of coefficient of correlation lies between -1 and +1. Symbolically

-1 ≤ r ≤ +1

3. Correlation coefficient is independent of change of origin and scale of the variables X and Y. If all the values in the X series and/or Y series are multiplied (or divided) by a positive constant and/or increased (or decreased) by a constant, the correlation coefficient (r) will not be affected. (Multiplying only one of the series by a negative constant reverses the sign of r but leaves its magnitude unchanged.)

4. The correlation coefficient is the geometric mean of the two regression coefficients, i.e.,

$$r = \pm\sqrt{b_{xy} \cdot b_{yx}}$$

(regression coefficients are discussed in the next chapter)

Illustration: (i) Compute the correlation coefficient between the corresponding values of cost and revenue given in the following table.

| Cost (in 000 Rs) X | 2 | 4 | 5 | 6 | 8 | 11 |
|---|---|---|---|---|---|---|
| Revenue (in 000 Rs) Y | 18 | 12 | 10 | 8 | 7 | 5 |

(ii) Multiply each value of cost in the table by 4 and add 2. Multiply each value of revenue

in the table by 2 and subtract 5. Find the correlation coefficient between two new sets of

values. Explain why you do or do not obtain the same result as in (i).

Solution: (i) Calculation of Karl Pearson’s coefficient of correlation

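| X | Y | x = X - 6 | y = Y - 10 | x² | y² | xy |
|---|---|---|---|---|---|---|
| 2 | 18 | -4 | 8 | 16 | 64 | -32 |
| 4 | 12 | -2 | 2 | 4 | 4 | -4 |
| 5 | 10 | -1 | 0 | 1 | 0 | 0 |
| 6 | 8 | 0 | -2 | 0 | 4 | 0 |
| 8 | 7 | 2 | -3 | 4 | 9 | -6 |
| 11 | 5 | 5 | -5 | 25 | 25 | -25 |
| ∑X = 36 | ∑Y = 60 | ∑x = 0 | ∑y = 0 | ∑x² = 50 | ∑y² = 106 | ∑xy = -67 |

Putting values, we get

$$r = \frac{\sum xy}{\sqrt{\sum x^2 \cdot \sum y^2}} = \frac{-67}{\sqrt{50 \times 106}} = -0.92$$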

(ii) Let us define new cost data as X′ and new revenue data as Y′ so that

X′ = 4X + 2 and Y′ = 2Y – 5

Calculation of Karl Pearson’s coefficient of correlation between X′ and Y′

| X′ | Y′ | x′ = X′ - 26 | y′ = Y′ - 15 | x′² | y′² | x′y′ |
|---|---|---|---|---|---|---|
| 10 | 31 | -16 | 16 | 256 | 256 | -256 |
| 18 | 19 | -8 | 4 | 64 | 16 | -32 |
| 22 | 15 | -4 | 0 | 16 | 0 | 0 |
| 26 | 11 | 0 | -4 | 0 | 16 | 0 |
| 34 | 9 | 8 | -6 | 64 | 36 | -48 |
| 46 | 5 | 20 | -10 | 400 | 100 | -200 |
| ∑X′ = 156 | ∑Y′ = 90 | ∑x′ = 0 | ∑y′ = 0 | ∑x′² = 800 | ∑y′² = 424 | ∑x′y′ = -536 |

$$r = \frac{\sum x'y'}{\sqrt{\sum x'^2 \cdot \sum y'^2}}$$

Putting values, we get

$$r = \frac{-536}{\sqrt{800 \times 424}} = -0.92$$

The correlation between new series of cost and revenue is same as between the original

series of cost and revenue. This is because the correlation coefficient is independent of

the change in scale and origin.
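The independence of r from origin and scale can also be checked numerically. The sketch below reuses the cost and revenue data of this illustration; the helper `pearson_r` is an illustrative name, and the last step (multiplying one series by -1) is an extra check added here rather than part of the original exercise.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Product-moment r with deviations taken from the means."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sum_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sum_x2 = sum((x - mean_x) ** 2 for x in xs)
    sum_y2 = sum((y - mean_y) ** 2 for y in ys)
    return sum_xy / sqrt(sum_x2 * sum_y2)

cost = [2, 4, 5, 6, 8, 11]
revenue = [18, 12, 10, 8, 7, 5]

new_cost = [4 * x + 2 for x in cost]          # change of scale and origin
new_revenue = [2 * y - 5 for y in revenue]

r_old = pearson_r(cost, revenue)
r_new = pearson_r(new_cost, new_revenue)
print(round(r_old, 2), round(r_new, 2))  # -0.92 -0.92

# Multiplying only one series by a negative constant flips the sign of r.
r_flipped = pearson_r([-x for x in cost], revenue)
print(round(r_flipped, 2))  # 0.92
```

Both positive scale factors (4 and 2) cancel between numerator and denominator, which is why the two coefficients coincide.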

1.3.4 Assumptions of the Pearson’s coefficient

1. There is a linear relationship between the two variables under study.

2. The two variables are affected by a number of factors so that they give rise to a

normal frequency distribution. For example, height, weight, age, demand, sales etc. are

variables which are affected by not one factor but many independent causes and thus

form a normal distribution.

Figure 1.22: Assumptions

3. There is a cause and effect relationship between the two variables. If variables are

independent of each other, there cannot be any relationship between them. For example,

there is no relationship between size of the shoe and income of a person even if r

calculated on the basis of a sample comes out to be a very significant figure. Such

correlations are called nonsense or spurious correlation. Sometimes two variables are

affected by a third variable which may give rise to such spurious correlation. For

example, sale of ice cream and sale of cold drinks are related to weather conditions of

the area. They may show a positive correlation but they are not related to each other.

1.3.5 Merits And Limitations

Merits

1. It is the most popular method of studying correlation. It gives direction as well as

the degree of relationship between the two variables.

2. The correlation coefficient along with regression analysis helps in estimating the

value of the dependent variable from the known value of an independent variable.


Figure 1.23: Merits & Limitations

Limitations

1. Compared to other methods, this method takes more time to compute the value of

the coefficient of correlation.

2. The value of the correlation coefficient is unduly affected by the presence of extreme

values.

3. It is based on a large number of assumptions (like linear relationship, normality

of the distributions, cause and effect relationship) which may not always hold good.

4. It is very much likely to be misinterpreted (see web link 1.4).

1.4 Probable Error of Correlation Coefficient

1.4.1 Meaning And Calculation

One of the measures that help in interpreting the value of the correlation coefficient is its probable error. It helps in testing the reliability of an observed value of r so far as it depends upon the conditions of random sampling. It is an amount which, when added to and subtracted from the correlation coefficient, produces limits within which the population coefficient of correlation has a 50% chance of lying. The probable error, denoted by P.E., is given by the following formula

$$P.E. = 0.6745 \, \frac{1 - r^2}{\sqrt{N}}$$

where r is the correlation coefficient of the random sample and N is the number of pairs of observations in the sample.

1.4.2 Population Coefficient Of Correlation

Probable error tells us the limits within which the values of r of the various samples taken out of the entire group will vary. By adding and subtracting the value of the probable error from the correlation coefficient, we get the limits within which the correlation coefficient of the population will have a 50% chance to lie. Symbolically

$$\rho = r \pm P.E.$$

where $\rho$ is the correlation coefficient of the whole population.

For example, if r = 0.9 and N = 36,

$$P.E. = 0.6745 \times \frac{1 - (0.9)^2}{\sqrt{36}} = 0.0214$$

The 50% limits of the population coefficient of correlation, given by $r \pm P.E.$, will be

(0.9 – 0.0214) and (0.9 + 0.0214)

= 0.8786 and 0.9214
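The same arithmetic can be sketched in Python, assuming the formula P.E. = 0.6745 (1 − r²) / √N; the function name `probable_error` is illustrative.

```python
from math import sqrt

def probable_error(r, n):
    """Probable error of r for a sample of n pairs: 0.6745 * (1 - r^2) / sqrt(n)."""
    return 0.6745 * (1 - r ** 2) / sqrt(n)

r, n = 0.9, 36
pe = probable_error(r, n)
lower, upper = r - pe, r + pe   # 50% limits for the population coefficient
print(round(pe, 4), round(lower, 4), round(upper, 4))  # 0.0214 0.8786 0.9214
```

Since N appears under a square root, quadrupling the sample size only halves the probable error for the same r.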

1.4.3 Interpretation Of ‘r’ Based On Probable Error

Interpretation of r with the help of probable error is done as follows:

1. If r is less than probable error, there is no correlation, and

2. If r is greater than six times the probable error, there is decided evidence of

correlation and the value of r is significant.

3. In other situations nothing can be concluded with certainty.
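The three rules can be written as a small decision function. This is only a sketch: it assumes P.E. = 0.6745 (1 − r²) / √N, and it compares the absolute value of r with the probable error so that negative coefficients are handled too, which is an interpretation added here and not stated in the rules above. The function name and the returned labels are hypothetical.

```python
from math import sqrt

def interpret_r(r, n):
    """Classify r using the probable-error rules listed above."""
    pe = 0.6745 * (1 - r ** 2) / sqrt(n)
    if abs(r) < pe:
        return "no correlation"
    if abs(r) > 6 * pe:
        return "significant"
    return "inconclusive"

print(interpret_r(0.9, 36))   # significant
print(interpret_r(0.1, 16))   # no correlation
print(interpret_r(0.4, 25))   # inconclusive
```

For r = 0.4 and N = 25, the probable error is about 0.113, so r exceeds P.E. but falls short of six times P.E. (about 0.68), and nothing can be concluded with certainty.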

1.4.4 Conditions For The Use Of Probable Error

The following conditions must be fulfilled for the use of probable error:

1. The data must have been drawn from a normal population,

2. The conditions of random sampling should prevail in selecting the observations for

the sample,

3. The number of observations in the sample should be large.

If the above conditions are not satisfied, the use of probable error may lead to fallacious

conclusions.

1.4.5 Standard Error

The value $\frac{1 - r^2}{\sqrt{N}}$ in the formula of probable error is the standard error of the correlation coefficient. The standard error gives a measure of how well a sample represents the population. When the sample is representative, the standard error will be small. The division by the square root of the sample size is a reflection of the speed with which an increasing sample size gives an improved representation of the population. The reason for taking the factor 0.6745 in the probable error is that in a normal distribution 50% of the observations lie in the range $\mu \pm 0.6745\sigma$, where $\mu$ is the mean and $\sigma$ is the standard deviation.
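The factor 0.6745 can be checked with Python's standard library: it is the 75th percentile of the standard normal distribution, so the interval from -0.6745 to +0.6745 standard deviations around the mean covers the middle 50% of the observations. The sketch below uses `statistics.NormalDist`, available in Python 3.8 and later.

```python
from statistics import NormalDist

std_normal = NormalDist()            # mean 0, standard deviation 1

# The value z such that 75% of the distribution lies below it.
z = std_normal.inv_cdf(0.75)
print(round(z, 4))  # 0.6745

# Share of observations between -z and +z.
central_share = std_normal.cdf(z) - std_normal.cdf(-z)
print(round(central_share, 2))  # 0.5
```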

1.5 Spearman’s rank correlation

1.5.1 Meaning and Formula

Sometimes, the phenomenon under study cannot be measured quantitatively but can be

serially arranged or ranked. For example, we can rank a group of 10 girls on the basis of

beauty, intelligence or honesty, but their quantitative measurement on the basis of such

attributes is not possible. We can find the coefficient of correlation of ranks by using Karl Pearson’s method, but calculations can substantially be reduced by using Spearman’s formula as under:

$$r_s = 1 - \frac{6 \sum D^2}{N(N^2 - 1)}$$

where rs stands for Spearman’s coefficient of correlation for ranks, D for the difference of ranks between paired items in the two series and N for the number of items.

Figure 1.24: Charles Edward Spearman (September 10, 1863 - September 17, 1945)

The formula has been derived from the Karl Pearson’s formula and is applicable only to

ranks. Ranks can also be assigned to the phenomena measured quantitatively.

Here each observation in both the series is given a rank according to its size. We can

rank the values either way, from the smallest to largest value or from the largest to

smallest value, but the same way it is to be done for both the series. If the smallest item

is given rank ‘1’ in series one, the smallest value in the series two should be given rank

‘1’. Under ranking method, original values are not taken into account therefore, it gives

only approximate results. The value of rs is a pure number and here also it varies from -1

to +1. It is interpreted the same way as Pearson’s coefficient of correlation i.e.

If rs = 0, there is no correlation,

If rs = +1, there is perfect positive correlation, and

If rs = -1, there is perfect negative correlation between the two variables.

1.5.2 Uses of Rank Correlation

Rank correlation is used

1. Where items cannot be measured in quantitative terms, but they can be arrayed

or ranked, according to some variable attribute, such as beauty, intelligence and

honesty.

2. Where the variables do not show a linear relationship.

3. Where a variable departs markedly from normality. Karl Pearson’s correlation

coefficient assumes that the parent population, from which sample is drawn, is

normal. Spearman’s Rank correlation coefficient is distribution free (or non-

parametric), i.e., it does not make any assumption about the parameters of the

population.

4. Where data are irregular or extreme items are erratic or inaccurate and may

influence the value of r considerably.

5. Where the sample is very small and we want to have a rough estimate of the degree

of correlation without going through the lengthy calculations.


1.5.3 Calculation Of Rank Correlation Coefficient

In rank correlation, we may have two types of problems:

(a) When no two items are tied for the same rank

1. Where ranks are given

2. Where ranks are not given

(b) When two or more items are tied for the same rank.

(a) When no two items are tied for the same rank

(1) When ranks are given

In this situation, we first compute the difference of ranks and then apply the formula to

get the value of correlation coefficient (rs).

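Illustration: In a beauty contest, two judges gave the following ranks to 10 girls.

| Girls | A | B | C | D | E | F | G | H | I | J |
|---|---|---|---|---|---|---|---|---|---|---|
| Ranks by 1st judge | 1 | 5 | 4 | 8 | 9 | 6 | 10 | 7 | 3 | 2 |
| Ranks by 2nd judge | 4 | 8 | 7 | 6 | 5 | 9 | 10 | 3 | 2 | 1 |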

What is the coefficient of correlation of ranks provided by the two judges?

Figure 1.25: A Beauty Contest

Solution: Calculation of coefficient of rank correlation

| Girls | Ranks by 1st judge (Rx) | Ranks by 2nd judge (Ry) | D² = (Rx – Ry)² |
|---|---|---|---|
| A | 1 | 4 | 9 |
| B | 5 | 8 | 9 |
| C | 4 | 7 | 9 |
| D | 8 | 6 | 4 |
| E | 9 | 5 | 16 |
| F | 6 | 9 | 9 |
| G | 10 | 10 | 0 |
| H | 7 | 3 | 16 |
| I | 3 | 2 | 1 |
| J | 2 | 1 | 1 |
| Total | | | 74 |
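With ∑D² = 74 and N = 10, the coefficient follows from Spearman's formula rs = 1 − 6∑D² / (N(N² − 1)). A minimal Python sketch, with an illustrative function name, is given below.

```python
def spearman_from_ranks(rank_x, rank_y):
    """Spearman's rs = 1 - 6 * sum(D^2) / (N * (N^2 - 1)) for untied ranks."""
    n = len(rank_x)
    sum_d2 = sum((a - b) ** 2 for a, b in zip(rank_x, rank_y))
    return 1 - 6 * sum_d2 / (n * (n ** 2 - 1))

judge_1 = [1, 5, 4, 8, 9, 6, 10, 7, 3, 2]
judge_2 = [4, 8, 7, 6, 5, 9, 10, 3, 2, 1]

rs = spearman_from_ranks(judge_1, judge_2)
print(round(rs, 2))  # 0.55
```

That is, rs = 1 − 444/990 ≈ 0.55, which suggests a moderate degree of agreement between the two judges.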

(2) When ranks are not given

When we are given the actual data and not the ranks, we assign ranks to different values

of the variables by taking either the highest value as 1 or the lowest value as 1.

However, whether we start with the lowest value or the highest value, we must follow

the same method in both the series.

Illustration: The following data relate to the marks obtained by 10 students of a class in

statistics and costing.

| Statistics | 39 | 65 | 62 | 90 | 82 | 75 | 25 | 98 | 36 | 78 |
|---|---|---|---|---|---|---|---|---|---|---|
| Costing | 47 | 53 | 58 | 86 | 62 | 68 | 60 | 91 | 51 | 84 |

Calculate spearman’s rank correlation coefficient.

Solution: Let X denote the marks in statistics and Y denote the marks in costing; and

assign ranks to the different values of the X and Y series by taking the highest value as 1.

Calculation of coefficient of rank correlation

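| X | Y | Rx | Ry | D² = (Rx – Ry)² |
|---|---|---|---|---|
| 39 | 47 | 8 | 10 | 4 |
| 65 | 53 | 6 | 8 | 4 |
| 62 | 58 | 7 | 7 | 0 |
| 90 | 86 | 2 | 2 | 0 |
| 82 | 62 | 3 | 5 | 4 |
| 75 | 68 | 5 | 4 | 1 |
| 25 | 60 | 10 | 6 | 16 |
| 98 | 91 | 1 | 1 | 0 |
| 36 | 51 | 9 | 9 | 0 |
| 78 | 84 | 4 | 3 | 1 |
| | | | | ∑D² = 30 |

$$r_s = 1 - \frac{6 \sum D^2}{N(N^2 - 1)} = 1 - \frac{6 \times 30}{10(10^2 - 1)} = 1 - 0.182 = 0.82$$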

Rank coefficient of correlation is 0.82; this means there is high positive correlation between the

given variables.
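When only the actual values are available, the ranking step can be automated before the formula is applied. The sketch below assumes there are no tied values (as in this illustration) and ranks the highest value as 1 in both series; the function names are illustrative.

```python
def rank_descending(values):
    """Rank 1 for the highest value; assumes all values are distinct."""
    ordered = sorted(values, reverse=True)
    return [ordered.index(v) + 1 for v in values]

def spearman_from_ranks(rank_x, rank_y):
    """Spearman's rs = 1 - 6 * sum(D^2) / (N * (N^2 - 1)) for untied ranks."""
    n = len(rank_x)
    sum_d2 = sum((a - b) ** 2 for a, b in zip(rank_x, rank_y))
    return 1 - 6 * sum_d2 / (n * (n ** 2 - 1))

statistics_marks = [39, 65, 62, 90, 82, 75, 25, 98, 36, 78]
costing_marks = [47, 53, 58, 86, 62, 68, 60, 91, 51, 84]

rx = rank_descending(statistics_marks)
ry = rank_descending(costing_marks)
print(rx)  # [8, 6, 7, 2, 3, 5, 10, 1, 9, 4]
print(ry)  # [10, 8, 7, 2, 5, 4, 6, 1, 9, 3]

rs = spearman_from_ranks(rx, ry)
print(round(rs, 2))  # 0.82
```

Ranking both series from the lowest value instead would change every rank but leave each difference D, and therefore rs, unchanged in magnitude and sign.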

(b) When two or more items are tied for the same rank

When two or more values of a series have same magnitude, it is necessary to rank them as equal.

In such cases, the rank assigned to these equal values is the average of the ranks, which these

values would have got had they slightly differed from each other. For example, if three values stand for the 5th position in a series, they would have got 5th, 6th and 7th positions had they been slightly different. Thus, all the three will get rank $\frac{5 + 6 + 7}{3} = 6$ for the purpose of calculating the coefficient of rank correlation and the next item will be ranked 8.

When equal ranks are assigned to two or more items, an adjustment is made in the above formula for calculating the rank correlation coefficient. The adjustment consists of adding $\frac{1}{12}(m^3 - m)$ to the value of ∑D², where m stands for the number of items with a common rank. This value is added as many times as the number of groups having equal ranks in the two series. The formula for Spearman’s correlation coefficient can, thus, be written as

$$r_s = 1 - \frac{6\left[\sum D^2 + \frac{1}{12}(m_1^3 - m_1) + \frac{1}{12}(m_2^3 - m_2) + \cdots\right]}{N(N^2 - 1)}$$

Illustration: From the following observations, find Spearman’s coefficient of correlation.

| Marks in statistics | 10 | 15 | 23 | 7 | 35 | 55 | 15 | 75 |
|---|---|---|---|---|---|---|---|---|
| Pocket money (Rs) | 35 | 25 | 45 | 25 | 15 | 5 | 25 | 55 |


Figure 1.26: Students with Marks and Pocket Money

Solution: Let X denote the marks in statistics and Y denote the pocket money, and assign ranks to the different values of the X and Y series by taking the lowest value as 1.

Calculation of coefficient of rank correlation

X     Y     Rx     Ry    D² = (Rx - Ry)²
10    35    2      6     16
15    25    3.5    4      0.25
23    45    5      7      4
 7    25    1      4      9
35    15    6      2     16
55     5    7      1     36
15    25    3.5    4      0.25
75    55    8      8      0
                         ∑D² = 81.5

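Here ∑D² = 81.5 and N = 8. In series X there are two items having equal ranks (3.5), therefore m₁ = 2; in series Y there are three items having equal rank (4), therefore m₂ = 3. Putting these values in the tie-adjusted formula, we get

$$r_s = 1 - \frac{6\left[\sum D^2 + \frac{1}{12}(m_1^3 - m_1) + \frac{1}{12}(m_2^3 - m_2)\right]}{N(N^2 - 1)} = 1 - \frac{6\left[81.5 + \frac{1}{12}(2^3 - 2) + \frac{1}{12}(3^3 - 3)\right]}{8(8^2 - 1)} = 1 - \frac{6(81.5 + 0.5 + 2)}{504} = 1 - \frac{504}{504} = 0$$

Thus, there is no correlation between marks in statistics and pocket money.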
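As a rough check of this result, the sketch below starts from the ranks in the table and applies the tie adjustment of (m³ - m)/12 per group of m tied ranks. The function name is illustrative, and the code assumes the ranks have already been assigned.

```python
from collections import Counter

# Ranks copied from the worked table (lowest value ranked 1).
rx = [2, 3.5, 5, 1, 6, 7, 3.5, 8]  # marks in statistics
ry = [6, 4, 7, 4, 2, 1, 4, 8]      # pocket money


def spearman_with_ties(rx, ry):
    """Return (sum of D^2, tie correction, r_s) from two lists of ranks."""
    n = len(rx)
    sum_d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    # One (m^3 - m)/12 term for every group of m equal ranks in either series.
    correction = sum(
        (m ** 3 - m) / 12
        for series in (rx, ry)
        for m in Counter(series).values()
        if m > 1
    )
    rs = 1 - 6 * (sum_d2 + correction) / (n * (n ** 2 - 1))
    return sum_d2, correction, rs


sum_d2, correction, rs = spearman_with_ties(rx, ry)
print(sum_d2, correction, rs)  # 81.5 2.5 0.0
```

The correction of 2.5 is the sum of 0.5 (for m₁ = 2) and 2 (for m₂ = 3), so the adjusted total is 84 and the coefficient is 0.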

1.5.4. Merits and Limitations

Merits

1. The method is easy to use.

2. Even when actual values are given, this method is applied when sample is small and we

want a rough estimate of the relationship between the variables. This method saves time,

as it is more quickly calculated.

3. It is also employed usefully, where extreme values are present or where the population of

the variables under study is not normal and the relationship is non-linear.

Figure 1.28: Merits

Limitations

1. This method cannot be used in case of bivariate frequency table.

2. It can be conveniently used only when N is small (say, up to 30); otherwise the calculation becomes tedious.

3. It is not as accurate as Karl Pearson’s method (applied to original values), as here original

values are not taken into account. The result obtained is only approximate.

Figure 1.29: Limitations


Summary

Under the scatter diagram method, the observed data are plotted on graph paper, taking one variable on the X-axis and the other on the Y-axis. The scatter of the dots so plotted indicates whether the correlation is positive or negative and also gives an idea of the degree of the relationship.

Karl Pearson’s method of calculating coefficient of correlation is the most widely used

method in practice. Also known as product moment coefficient of correlation, it measures

the intensity or magnitude of linear relationship between two variable series on the basis of

covariance of the concerned variables. Coefficient of correlation is a pure number without

any unit.

The correlation coefficient is independent of change of origin and scale of the variables X and Y. The value of the correlation coefficient always lies between -1 and +1. Pearson's coefficient of correlation tells us both the degree and the direction of the relationship between two variables.

Probable error helps in testing the reliability of an observed value of r so far as it depends

upon the condition of random sampling. It is an amount which when added to and

subtracted from the correlation coefficient produces limits within which the population

coefficient of correlation will have 50% chance to lie.

The standard error gives a measure of how well a sample represents the population. When

the sample is representative, the standard error will be small.

Where items cannot be measured in quantitative terms or where the variables do not show

a linear relationship or depart markedly from normality, we apply a method based on ranks

for measuring correlation.
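Two of the points summarised above can be checked numerically: that Pearson's coefficient is unchanged by a change of origin and scale, and that the probable error gives limits around r. The sketch below reuses the marks in statistics and costing from the rank correlation example. The shifted origins (60 and 65) and the scale divisors (5 and 2) are arbitrary assumptions, and the probable error is taken in its usual form, 0.6745(1 - r²)/√N.

```python
from math import sqrt


def pearson_r(x, y):
    """Product moment coefficient: sum(dx*dy) / sqrt(sum(dx^2) * sum(dy^2))."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    cov = sum(a * b for a, b in zip(dx, dy))
    return cov / sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


def probable_error(r, n):
    """Probable error of r, assumed here as 0.6745 * (1 - r^2) / sqrt(N)."""
    return 0.6745 * (1 - r ** 2) / sqrt(n)


x = [39, 65, 62, 90, 82, 75, 25, 98, 36, 78]  # marks in statistics
y = [47, 53, 58, 86, 62, 68, 60, 91, 51, 84]  # marks in costing

# Change of origin and scale (positive divisors chosen arbitrarily).
u = [(a - 60) / 5 for a in x]
v = [(b - 65) / 2 for b in y]

r_xy = pearson_r(x, y)
r_uv = pearson_r(u, v)
pe = probable_error(r_xy, len(x))

print(round(r_xy, 4), round(r_uv, 4))              # the two values agree
print(round(r_xy - pe, 4), round(r_xy + pe, 4))    # limits r - PE and r + PE
```

The invariance holds as written only when both scale factors have the same sign; if exactly one of them is negative, the sign of r is reversed while its magnitude is unchanged.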

Exercise

1.1 What is a time series? What are its important components? Give an example of each component.

1.2 What is meant by analysis of time series? Discuss its importance in business and economics.

1.3 Explain cyclical variations in a time series. How do seasonal variations differ from them?

1.4 What is secular trend? How does it differ from the short-term variations in time series data?

1.5 Explain briefly the additive and multiplicative models of time series. What are their underlying

assumptions? Which of these models is more popular in practice and why?


Glossary

Additive model: A model for the decomposition of time series which assumes that the four

components of time series interact in additive fashion in order to produce the observed values.

Analysis of time series: Analysis of figures comprising a time series for evaluation and

forecasting.

Cyclical variations: Regular but not uniformly periodic short term fluctuations in a time series

caused by business cycles.

Irregular variations: Short term variations in a time series which are purely random and are

the result of unforeseen and unpredictable forces.

Multiplicative model: A model for the decomposition of time series which assumes that the

four components of a time series interact in multiplicative fashion to produce the observed

values.

Seasonal variations: Short term fluctuations in a time series which occur regularly and

periodically within a period of less than one year.

Time series: Data collected over a period of time at regular intervals, and arranged in

chronological sequence.

Trend: General tendency of the data to increase or to decrease or to remain constant over a

long period of time.
