Topic - Chapter 8 - Sampling Distribution and Estimation

Chapter 8. Sampling distribution and estimation

Nguyen Thi Thu Van - August 12, 2023

[Figure: three panels titled Population distribution, Sample distribution, and Sampling distribution. The sample distribution panel shows 20 GMAT scores (n = 20) over score bins from 300 to 800 and "More".]

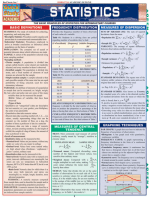

Point estimate. A sample mean $\bar{x}$ calculated from a random sample is a point estimate of the unknown population mean $\mu$.

Sampling error is the difference between an estimate and the corresponding population parameter. For example, for the population mean: $\bar{x} - \mu$.

Sampling distribution of an estimator $\bar{X}$ is a probability distribution based on a large number of samples of size $n$ from a given population.

Estimator (say, $\bar{X}$) is a statistic (a function of the sample) used to estimate the value of an unknown parameter of a population. Random samples vary, so an estimator is a random variable.

Bias is the difference between the expected value of the estimator and the true parameter, for example, for the mean: $E(\bar{X}) - \mu$. An estimator is unbiased if its expected value is the parameter being estimated, i.e., $E(\bar{X}) = \mu$.
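The definitions above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the population is taken to be exponential with mean 1 (chosen because it is skewed), and the function name `simulate_sample_means`, the sample size 30, and the 2000 repetitions are all hypothetical choices, not values from the chapter.

```python
import random
import statistics


def simulate_sample_means(n, num_samples, seed=0):
    """Draw num_samples random samples of size n and return their means.

    Assumption for this illustration: the population is exponential
    with mean 1 (right-skewed, so clearly not normal).
    """
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(n))
        for _ in range(num_samples)
    ]


means = simulate_sample_means(n=30, num_samples=2000)
mu = 1.0  # true mean of the assumed exponential population

# The average of the sample means approximates E(X-bar).
mean_of_means = statistics.fmean(means)
print("mean of sample means:", round(mean_of_means, 3))
# Estimated bias E(X-bar) - mu; it should be close to zero.
print("estimated bias:", round(mean_of_means - mu, 3))
# Spread of the sampling distribution versus the theoretical sigma / sqrt(n).
print("std. dev. of sample means:", round(statistics.stdev(means), 3))
print("sigma / sqrt(n):", round(1.0 / 30 ** 0.5, 3))
```

Each entry of `means` is one point estimate, and the list as a whole approximates the sampling distribution of $\bar{X}$. The difference between any single entry and `mu` is that sample's sampling error.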

Central Limit Theorem states that the sampling distribution of the sample mean $\bar{X}$ is centered at $\mu$ and is approximately normal when $n$ is large (rule of thumb: $n \ge 30$), regardless of the population shape. This theorem also applies to a sample proportion.

Interval estimate. Because samples vary, we need to indicate our uncertainty about the true value of a population parameter. Based on our knowledge of the sampling distribution of $\bar{X}$, we construct a confidence interval (CI) for the unknown parameter $\mu$ by adding and subtracting a margin of error from the sample statistic:

$$\bar{X} \pm z_{\alpha/2} \frac{\sigma}{\sqrt{n}}$$

To calculate a CI, we first think of the confidence level $1 - \alpha$ as a probability statement about the procedure used to compute the confidence interval:

$$P\left(\bar{X} - z_{\alpha/2} \frac{\sigma}{\sqrt{n}} \le \mu \le \bar{X} + z_{\alpha/2} \frac{\sigma}{\sqrt{n}}\right) = 1 - \alpha$$

Once we have taken a random sample and calculated $\bar{x}$, the number $1 - \alpha$ is no longer thought of as a probability but as the level of confidence that the interval contains $\mu$.
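As a minimal sketch of the interval $\bar{x} \pm z_{\alpha/2}\,\sigma/\sqrt{n}$ with known $\sigma$, the following uses only the Python standard library. The function name `z_interval` and the input values (a sample mean of 100, $\sigma = 15$, $n = 36$) are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def z_interval(xbar, sigma, n, confidence=0.95):
    """Confidence interval for a mean when the population sigma is known."""
    alpha = 1 - confidence
    # Upper-tail critical value z_{alpha/2}, e.g. about 1.96 for 95%.
    z = NormalDist().inv_cdf(1 - alpha / 2)
    margin = z * sigma / sqrt(n)  # margin of error
    return xbar - margin, xbar + margin


# Hypothetical inputs, not taken from the chapter.
low, high = z_interval(xbar=100, sigma=15, n=36, confidence=0.95)
print(f"95% CI for mu: ({low:.2f}, {high:.2f})")
```

Raising the confidence level widens the interval, and increasing $n$ narrows it, because the margin of error is proportional to $z_{\alpha/2}/\sqrt{n}$.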

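Confidence interval for a mean $\mu$ and for a proportion $\pi$

| Parameter | Case | Confidence interval |
|---|---|---|
| Mean $\mu$ | Known variance | $\bar{x} \pm z_{\alpha/2} \times \sigma/\sqrt{n}$ |
| Mean $\mu$ | Unknown variance, $n \ge 30$ | $\bar{x} \pm t_{\alpha/2} \times s/\sqrt{n}$ or $\bar{x} \pm z_{\alpha/2} \times s/\sqrt{n}$ |
| Mean $\mu$ | Unknown variance, $n < 30$ | $\bar{x} \pm t_{\alpha/2} \times s/\sqrt{n}$ |
| Proportion $\pi$ | $np \ge 10$ and $n(1 - p) \ge 10$ | $p \pm z_{\alpha/2} \sqrt{p(1 - p)/n}$ |

With unknown variance, possibly use the Student's t distribution (developed by William Sealy Gosset [1876–1937]) with $d.f. = n - 1$, called the degrees of freedom. For $n \ge 30$ either $t$ or $z$ may be used, simply because when $d.f.$ is large, $t \approx z$, with $t$ slightly larger than $z$.

The sample proportion $p = x/n$ may be assumed normal if $np \ge 10$ and $n(1 - p) \ge 10$.

In Excel, NORM.S.INV(α/2) and T.INV(α/2, df) return the lower-tail quantiles, which are negative. The critical values used in the formulas above are their magnitudes: $z_{\alpha/2}$ = NORM.S.INV(1 − α/2) and $t_{\alpha/2}$ = T.INV(1 − α/2, df).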
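The proportion interval and its normality condition can be sketched as follows. The function name `proportion_interval` and the example counts (40 successes out of 100) are hypothetical, and raising an error when the condition fails is a design choice of this sketch, not something the chapter prescribes.

```python
from math import sqrt
from statistics import NormalDist


def proportion_interval(x, n, confidence=0.95):
    """Normal-approximation confidence interval for a proportion."""
    p = x / n  # sample proportion
    # Normality check from the text: np >= 10 and n(1 - p) >= 10.
    if n * p < 10 or n * (1 - p) < 10:
        raise ValueError("normal approximation not justified")
    alpha = 1 - confidence
    z = NormalDist().inv_cdf(1 - alpha / 2)  # upper-tail critical value
    margin = z * sqrt(p * (1 - p) / n)
    return p - margin, p + margin


# Hypothetical data: 40 successes in a sample of 100.
low, high = proportion_interval(x=40, n=100)
print(f"95% CI for pi: ({low:.3f}, {high:.3f})")
```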

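Estimating from a finite population [sampling without replacement, $n > 5\% \times N$]

Mean, known variance:

$$\bar{x} \pm z_{\alpha/2} \frac{\sigma}{\sqrt{n}} \times \sqrt{\frac{N - n}{N - 1}}$$

Mean, unknown variance:

$$\bar{x} \pm t_{\alpha/2} \frac{s}{\sqrt{n}} \times \sqrt{\frac{N - n}{N - 1}}$$

Proportion:

$$p \pm z_{\alpha/2} \sqrt{\frac{p(1 - p)}{n}} \times \sqrt{\frac{N - n}{N - 1}}$$

$\sqrt{\frac{N - n}{N - 1}}$ is called the finite population correction factor (FPCF). This factor reduces the margin of error and provides a more precise interval when $n > 5\% \times N$. Recall that if $n < 5\% \times N$ then the population is effectively infinite.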
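To see how much the correction factor shrinks the margin of error, here is a short sketch. The helper names `fpcf` and `margin_of_error_mean`, and the numbers used ($N = 200$, $n = 50$, $\sigma = 15$), are illustrative assumptions only.

```python
from math import sqrt
from statistics import NormalDist


def fpcf(N, n):
    """Finite population correction factor sqrt((N - n) / (N - 1))."""
    return sqrt((N - n) / (N - 1))


def margin_of_error_mean(sigma, n, N=None, confidence=0.95):
    """Margin of error for a mean with known sigma.

    The correction is applied only when N is given and n > 5% of N,
    following the rule stated in the text.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sigma / sqrt(n)
    if N is not None and n > 0.05 * N:
        margin *= fpcf(N, n)
    return margin


# Hypothetical case: sample of 50 from a population of 200 (25% of N).
print("FPCF:", round(fpcf(200, 50), 4))
print("uncorrected margin:", round(margin_of_error_mean(15, 50), 3))
print("corrected margin:", round(margin_of_error_mean(15, 50, N=200), 3))
```

Because the factor is always below 1 when $n > 1$, the corrected interval is never wider than the uncorrected one.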

To estimate a population mean $\mu$ with a maximum allowable margin of error of $\pm E$, we need a sample size of

$$n = \left(\frac{z_{\alpha/2}\,\sigma}{E}\right)^2$$

To estimate a population proportion $\pi$ with a maximum allowable margin of error of $\pm E$, we need a sample size of

$$n = \left(\frac{z_{\alpha/2}}{E}\right)^2 \times p(1 - p)$$

Note: always round $n$ up to the next higher integer to be conservative.
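The two sample-size formulas, including the round-up rule, can be sketched as below. The function names and the example inputs ($\sigma = 15$, $E = 2$ for the mean; a planning value $p = 0.5$, $E = 0.03$ for the proportion) are assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_mean(sigma, E, confidence=0.95):
    """Smallest n giving a margin of error of at most E for a mean."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return ceil((z * sigma / E) ** 2)  # always round up


def sample_size_proportion(p, E, confidence=0.95):
    """Smallest n giving a margin of error of at most E for a proportion.

    p is a planning value for the proportion; p = 0.5 maximizes
    p(1 - p) and therefore gives the largest required n.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return ceil((z / E) ** 2 * p * (1 - p))


# Hypothetical planning inputs.
print("n for mean:", sample_size_mean(sigma=15, E=2))
print("n for proportion:", sample_size_proportion(p=0.5, E=0.03))
```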
