Customer Churn Analysis - GRP - 11

Uploaded by

Saquib Nazeer

CUSTOMER CHURN ANALYSIS

BY MACHINE LEARNING USING PYTHON

Created By-

SUBHAMAY SADHUKHAN,GURU NANAK INSTITUTE OF TECHNOLOGY, 161430110175 of 2016-17

PRITHWISH DAS,GURU NANAK INSTITUTE OF TECHNOLOGY, 161430110145 of 2016-17

RITWICK DAS,GURU NANAK INSTITUTE OF TECHNOLOGY, 161430110150 of 2016-17

SUMIT SAHA,GURU NANAK INSTITUTE OF TECHNOLOGY, 161430110178 of 2016-17

Customer Churn Analysis 1

Table of Contents

1. Acknowledgement
2. Project Objective
3. Project Scope
4. Data Description
5. Model Building
6. Code
7. Future Scope of Improvements
8. Certificate

Acknowledgement

I take this opportunity to express my profound gratitude and deep regards to my faculty

Mr. Titas Roy Chowdhury for his exemplary guidance, monitoring and constant

encouragement throughout the course of this project. The blessings, help and guidance

given from time to time shall carry me a long way in the journey of life on which I am about to

embark.

I am obliged to my project team members for the valuable information provided by them in

their respective fields. I am grateful for their cooperation during the period of my

assignment.

Subhamay Sadhukhan

Acknowledgement

I take this opportunity to express my profound gratitude and deep regards to my faculty

Mr. Titas Roy Chowdhury for his exemplary guidance, monitoring and constant

encouragement throughout the course of this project. The blessings, help and guidance

given from time to time shall carry me a long way in the journey of life on which I am about to

embark.

I am obliged to my project team members for the valuable information provided by them in

their respective fields. I am grateful for their cooperation during the period of my

assignment.

Prithwish Das

Acknowledgement

I take this opportunity to express my profound gratitude and deep regards to my faculty

Mr. Titas Roy Chowdhury for his exemplary guidance, monitoring and constant

encouragement throughout the course of this project. The blessings, help and guidance

given from time to time shall carry me a long way in the journey of life on which I am about to

embark.

I am obliged to my project team members for the valuable information provided by them in

their respective fields. I am grateful for their cooperation during the period of my

assignment.

Ritwick Das

Acknowledgement

I take this opportunity to express my profound gratitude and deep regards to my faculty

Mr. Titas Roy Chowdhury for his exemplary guidance, monitoring and constant

encouragement throughout the course of this project. The blessings, help and guidance

given from time to time shall carry me a long way in the journey of life on which I am about to

embark.

I am obliged to my project team members for the valuable information provided by them in

their respective fields. I am grateful for their cooperation during the period of my

assignment.

Sumit Saha

Project Scope:

It is no secret that customer retention is a top priority for many

companies.

Acquiring new customers can be several times more expensive than

retaining existing ones.

Furthermore, gaining an understanding of the reasons customers churn

and estimating the risk associated with individual customers are both

powerful components of designing a data-driven retention strategy.

A churn model can be the tool that brings these elements together and

provides insights and outputs that drive decision making across an

organization.

Project objective

Describe the problem:

Simply put, customer churn occurs when customers or subscribers stop

doing business with a company or service. Also known as customer

attrition, customer churn is a critical metric because it is much less

expensive to retain existing customers than it is to acquire new customers

– earning business from new customers means working leads all the way

through the sales funnel, utilizing your marketing and sales resources

throughout the process. Customer retention, on the other hand, is

generally more cost-effective as you’ve already earned the trust and

loyalty of existing customers.

Customer churn impedes growth, so companies should have a defined

method for calculating customer churn in a given period of time. By being

aware of and monitoring churn rate, organizations are equipped to

determine their customer retention success rates and identify strategies

for improvement.

Various organizations calculate customer churn rate in a variety of ways,

as churn rate may represent the total number of customers lost, the

percentage of customers lost compared to the company’s total customer

count, the value of recurring business lost, or the percent of recurring

value lost. Other organizations calculate churn rate for a certain period of

time, such as quarterly periods or fiscal years. One of the most commonly

used methods for calculating customer churn is to divide the number of

customers lost during a specified time period by the total number of

customers the company had at the beginning of that period.
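The commonly used calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name `churn_rate` and the customer counts are assumptions made for the example, not figures from this project.

```python
def churn_rate(customers_at_start, customers_lost):
    """Fraction of the starting customer base that was lost in the period."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


# Hypothetical quarter: 2000 customers at the start, 150 of them lost.
rate = churn_rate(customers_at_start=2000, customers_lost=150)
print(f"Quarterly churn rate: {rate:.1%}")
```

The same function works for any period (month, quarter, fiscal year) as long as both counts refer to the same period.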

Causes of Customer Churn

There are a multitude of issues that can lead customers to leave a

business, but there are a few that are considered to be the leading causes

of customer churn. The first is poor customer service. One study found

that nearly nine out of ten customers have abandoned a business due to a

poor experience. We are living and working in the era of the customer,

and customers are demanding exceptional customer service and

experiences. When they don’t receive it, they flock to competitors and

share their negative experiences on social media: 59% of 25-34-year-olds

share poor customer experiences online. Poor customer service,

therefore, can result in many more customers churning than simply the

one customer who had a poor service experience.

Other causes of customer churn include a poor onboarding process, a lack

of ongoing customer success, natural causes that occur for all businesses

from time to time, a lack of value, low-quality communications, and a lack

of brand loyalty.

Disadvantages of Customer Churn

There is a direct relationship between customer lifetime value and the

ability to grow your business. As such, the higher your customer churn

rate, the lower your chances of growing your business. Even if you have

some of the best marketing campaigns in your industry, your bottom line

suffers if you are losing customers at a high rate, as the cost of acquiring

new customers is so high. Much has been written on the subject of the

cost of retaining customers versus acquiring them, especially because study

after study shows that customer acquisition costs far exceed customer

retention costs. Generally, companies spend seven times more on

customer acquisition than customer retention, and the average global

value of a lost customer is $243. Obviously, customer churn is costly for

businesses.

What is the objective

In order to build a sustainable SaaS business, companies need to focus

their efforts on reducing customer churn. According to the authors of

“Leading on the Edge of Chaos”, reducing customer churn by 5% can

increase profits 25-125%. Therefore to reduce churn, most SaaS

companies perform customer churn analysis. But what is customer churn

analysis and what are its benefits?

Churn Analysis

Customer churn analysis is the study of the customer attrition rate in a

company. This analysis helps SaaS companies identify the causes of

churn and implement effective strategies for retention.

Gainsight understands the negative impact that churn rate can have on

company profits. Named as the "2014 cool vendor for CRM sales" (by

Gartner), Gainsight’s customer intelligence and retention process

automation technology:

o Gathers available customer behavior, transactions, demographics data and usage patterns
o Converts structured and unstructured data/information into meaningful insights
o Utilizes these insights to predict customers who are likely to churn
o Identifies the causes for churn and works to resolve those issues
o Engages with customers to foster relationships
o Implements effective programs for customer retention

Statistics show that acquiring new customers can cost five times

more than retaining existing customers. Gainsight performs

customer churn analysis to reduce churn, control retention and

improve performance.

>>How are we planning to solve:

1. Analyse the data frame.
2. Drop the columns which have high multicollinearity and low contribution towards 'label'.
3. Apply different classification models and note down the score for each model.
4. Select the model with the best score.

Linear Regression Model (LRM)

Regression analysis is another popular technique for predicting customer satisfaction, and it is based on supervised learning. In this model, a data set of past observations is used to predict future values of a numerical target variable from the explanatory variables.

Naive Bayes Model (NBM)

In this model, the probability that a given input sample belongs to a particular class y is calculated from a given set of variables (X1, ..., Xn). The probabilities are calculated with Bayes' theorem, under the assumption that the variables are independent of each other given the class.
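For reference, the standard naive Bayes formulation is written out below. It is assumed here to be the formula the text refers to; the symbols y and X1, ..., Xn follow the paragraph above.

```latex
P(y \mid X_1, \dots, X_n)
  = \frac{P(y)\,\prod_{i=1}^{n} P(X_i \mid y)}{P(X_1, \dots, X_n)},
\qquad
\hat{y} = \arg\max_{y}\; P(y)\,\prod_{i=1}^{n} P(X_i \mid y)
```

The denominator is the same for every class, so only the numerator needs to be compared when choosing the predicted class.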

Decision Tree (DT)

The decision tree is one of the most prominent predictive models used for classifying new observations. Building a decision tree consists of two steps: tree building and tree pruning. In tree building, the training data is recursively partitioned according to the values of the attributes. This process continues until the records in every partition have identical values. Some values may be removed from the data in this process because the data is noisy. In pruning, the branches with the largest estimated error rate are selected and removed. The purpose of tree pruning is to improve predictive accuracy and to reduce the complexity of the decision tree.

Data description

>st - state: State code of each customer.
Data type: string
Value type: categorical
Null percentage: 0

>acclen - account length
Data type: integer
Value type: continuous
Null percentage: 0
5 number statistics: 1, 74, 101, 127, 243
[Figure: the normalized form]

>arcode - area code
Data type: integer
Value type: categorical
Null percentage: 0

>phnum - phone number
Data type: integer
Value type: continuous
Null percentage: 0

>intplan - internet plan
Data type: object
Value type: categorical
Null percentage: 0

>voice - voice plan
Data type: object
Value type: categorical
Null percentage: 0

>nummailmes - number of email messages
Data type: integer
Value type: continuous
Null percentage: 0
5 number statistics: 0, 0, 0, 20, 51

>tdmin - total day time minutes
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 0, 143.7, 179.4, 216.4, 350.8

>tdcal - total day time calls
Data type: integer
Value type: continuous
Null percentage: 0
5 number statistics: 0, 87, 101, 114, 165

>tdchar - total day time charges
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 0, 24.43, 30.5, 36.79, 59.64

>temin - total evening time minutes
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 0, 166.6, 201.4, 235.3, 363.7

>tecahr - total evening time charges
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 0, 14.16, 17.12, 20, 30.91

>tnmin - total night time minutes
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 0, 14.16, 17.12, 20, 30.91

>tncal - total night time calls
Data type: integer
Value type: continuous
Null percentage: 0
5 number statistics: 33, 87, 100, 113, 175

>tnchar - total night time charges
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 1.04, 7.52, 9.05, 10.59, 17.77

>timin - total international minutes
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 0, 8.5, 10.3, 12.1, 20

>tical - total international calls
Data type: integer
Value type: continuous
Null percentage: 0
5 number statistics: 0, 3, 4, 6, 20

>tichar - total international charges
Data type: float
Value type: continuous
Null percentage: 0
5 number statistics: 0, 2.3, 2.78, 3.27, 5.4

>ncsc - number of customer service calls
Data type: float
Value type: categorical
Null percentage: 0

>label - churned?
Data type: object
Value type: categorical
Null percentage: 0
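The "5 number statistics" listed for the continuous columns are the minimum, the three quartiles and the maximum. The sketch below shows one way to compute them in plain Python, using linear interpolation between neighbouring values (the default behaviour of `np.percentile`). The helper names and the sample values are assumptions for illustration only.

```python
def percentile(sorted_values, q):
    """q-th percentile (0-100) of already sorted values, linear interpolation."""
    pos = (len(sorted_values) - 1) * q / 100
    lower = int(pos)
    upper = min(lower + 1, len(sorted_values) - 1)
    frac = pos - lower
    return sorted_values[lower] + (sorted_values[upper] - sorted_values[lower]) * frac


def five_number_summary(values):
    """Return [min, Q1, median, Q3, max] of a list of numbers."""
    ordered = sorted(values)
    return [percentile(ordered, q) for q in (0, 25, 50, 75, 100)]


# Hypothetical account lengths, not taken from the project data.
sample_acclen = [101, 74, 243, 1, 127, 90, 110]
print(five_number_summary(sample_acclen))
```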

Heatmap:

1. From the correlation heatmap we can see that tdmin & tdchar, temin & tecahr, and tnmin & tnchar are collinear.

2. So we drop tnchar, temin and tdchar to reduce multicollinearity.
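The collinearity check read off the heatmap can also be done numerically. The following is a minimal sketch in plain Python that flags column pairs whose Pearson correlation exceeds a threshold. The threshold of 0.95, the sample values and the charge rate of 0.17 per minute are all assumptions made for the example.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def collinear_pairs(columns, threshold=0.95):
    """Return the pairs of column names with |correlation| >= threshold."""
    pairs = []
    for (name_a, a), (name_b, b) in combinations(columns.items(), 2):
        if abs(pearson(a, b)) >= threshold:
            pairs.append((name_a, name_b))
    return pairs


# Hypothetical values: the charge is assumed to be a fixed rate per minute.
tdmin = [120.0, 180.5, 210.0, 95.3, 250.1]
columns = {
    "tdmin": tdmin,
    "tdchar": [0.17 * minutes for minutes in tdmin],
    "tdcal": [110, 85, 101, 97, 120],
}
print(collinear_pairs(columns))
```

Because a charge that is a fixed multiple of the minutes carries no extra information, keeping only one column of each flagged pair is enough.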

Data analysis:

1. From the data analysis we found that there are 4 categorical columns and 16 continuous columns.

2. We drop the following columns, which we don't need because they don't affect the model:

i. st = state
ii. acclen = account length
iii. tdcal = total day time calls
iv. tecal = total evening time calls
v. temin = total evening time minutes
vi. tnchar = total night time charges
vii. tdchar = total day time charges

Output of our project:

From the output, we can see that the decision tree classifier gives the best result for our data set. So the decision tree is selected as the optimum model.

>Confusion Matrix:

The confusion matrix for the optimum model is

[1379 64]

[ 65 159]
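Accuracy, precision and recall can be derived from this matrix. The sketch below assumes the layout used by scikit-learn's `confusion_matrix` (rows are the actual classes, columns are the predicted classes, and the second class is "churned"); the report does not state the layout, so this is an assumption, and the function name `binary_metrics` is made up for the example.

```python
def binary_metrics(matrix):
    """Accuracy, precision and recall from a 2x2 matrix [[TN, FP], [FN, TP]]."""
    (tn, fp), (fn, tp) = matrix
    total = tn + fp + fn + tp
    return {
        "accuracy": (tn + tp) / total,   # share of all predictions that are right
        "precision": tp / (tp + fp),     # share of predicted churners that churned
        "recall": tp / (tp + fn),        # share of actual churners that were found
    }


scores = binary_metrics([[1379, 64], [65, 159]])
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Under this assumed layout the model is right for most customers overall, but it misses a noticeable share of the actual churners, which is why recall is worth reporting next to accuracy.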

Model building

Loading The Data

We will work with a toy dataset emulating the data that an e-

business company (and more precisely an internet market place)

might have.

First, create a new project, then import the two main datasets into

DSS:

o Events are a log of what happened on your website: what page

users see, what product they like or purchase…

o Product is a look-up table containing a product id, and information

about its category and price. Make sure to set product_id column as

bigint in the schema.

As with any data science project, the first step is to explore the data.

Start by opening the events dataset: it contains a user id, a

timestamp, an event type, a product id and a seller id. For instance,

we may want to check the distribution of the event types. From the

column header, just click on Analyze:

Data Attributes and Labels

The initial ingredient for building any predictive pipeline is data. For churn

specifically, historical data is captured and stored in a data warehouse,

depending on the application domain. The process of churn definition and

establishing data hooks to capture relevant events is highly iterative. It is

very important to keep this in mind as the initial churn definition, with its

associated data hooks, may not be applicable or relevant anymore as a

product or a service matures. That’s why it’s essential for data scientists to

not only monitor the performance of the predictive pipeline over time but

also to pay close attention to the alignment of churn definition with the

product’s changes as they might affect who the churners are.

The specific attributes used in a churn model are highly domain dependent.

However, broadly speaking, the most common attributes capture user

behavior with regards to engagement level with a product or service. This

can be thought of as the number of times that a user logs into her/his

account in a week or the amount of time that a user spends on a portal. In

short, frequency and intensity of usage/engagement are among the

strongest signals to predict churn.

Logistic Regression:

Logistic regression is the appropriate regression analysis to conduct

when the dependent variable is dichotomous (binary). Like all

regression analyses, the logistic regression is a predictive analysis.

Logistic regression is used to describe data and to explain the

relationship between one dependent binary variable and one or more

nominal, ordinal, interval or ratio-level independent variables.

Sometimes logistic regressions are difficult to interpret; the Intellectus

Statistics tool easily allows you to conduct the analysis, then in plain

English interprets the output.
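To make the link between the independent variables and the binary outcome concrete, here is a minimal sketch of how a fitted logistic regression turns feature values into a probability. The weights, bias and feature values are invented for illustration and are not results from this project.

```python
from math import exp


def sigmoid(z):
    """Logistic function: maps any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + exp(-z))


def predict_probability(features, weights, bias):
    """Probability of the positive class for one record."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


# Hypothetical model with two features, e.g. service calls and plan flag.
probability = predict_probability(features=[4.0, 1.0], weights=[0.6, 0.9], bias=-3.0)
label = 1 if probability >= 0.5 else 0
print(probability, label)
```

Each weight tells how strongly, and in which direction, its variable moves the log-odds of the positive class, which is what makes the model interpretable.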

Decision Tree Classifier :

Introduction :

The decision tree classifier is a simple and widely used classification technique. It applies a straightforward idea to solve the classification problem: it poses a series of carefully crafted questions about the attributes of the test record. Each time it receives an answer, a follow-up question is asked until a conclusion about the class label of the record is reached.

Build A Decision Tree :

Building an optimal decision tree is the key problem in decision tree classification. In general, many decision trees can be constructed from a given set of attributes. While some of the trees are more accurate than others, finding the optimal tree is computationally infeasible because of the exponential size of the search space.

However, various efficient algorithms have been developed to construct a reasonably accurate, albeit suboptimal, decision tree in a reasonable amount of time. These algorithms usually employ a greedy strategy that grows a decision tree by making a series of locally optimum decisions about which attribute to use for partitioning the data. For example, Hunt's algorithm, ID3, C4.5, CART and SPRINT are greedy decision tree induction algorithms.

Algorithm for Decision Tree Induction :

The decision tree induction algorithm works by recursively selecting the best attribute to split the data and expanding the leaf nodes of the tree until the stopping criterion is met. The best split test condition is chosen by comparing the impurity of the child nodes, so it depends on which impurity measure is used. After building the decision tree, a tree-pruning step can be performed to reduce the size of the decision tree. Decision trees that are too large are susceptible to a phenomenon known as overfitting. Pruning helps by trimming the branches of the initial tree in a way that improves the generalization capability of the decision tree.

Here is an example recursive function [2] that builds the tree by choosing the best dividing criteria for the given data set. It is called with a list of rows and then loops through every column (except the last one, which has the result in it), finds every possible value for that column, and divides the dataset into two new subsets. It calculates the weighted-average entropy for every pair of new subsets by multiplying each set's entropy by the fraction of the items that ended up in each set, and remembers which pair has the lowest entropy. If the best pair of subsets doesn't have a lower weighted-average entropy than the current set, that branch ends and the counts of the possible outcomes are stored. Otherwise, buildtree is called on each set and they are added to the tree. The results of the calls on each subset are attached to the True and False branches of the nodes, eventually constructing an entire tree.

# The helpers entropy, divideset, uniquecounts and decisionnode are not
# shown in this listing; they must be defined before buildtree.
def buildtree(rows, scoref=entropy):
    # rows: list of records whose last element is the class label
    if len(rows) == 0:
        return decisionnode()
    current_score = scoref(rows)

    # Set up some variables to track the best criteria
    best_gain = 0.0
    best_criteria = None
    best_sets = None

    column_count = len(rows[0]) - 1
    for col in range(0, column_count):
        # Generate the set of different values in this column
        column_values = {row[col] for row in rows}

        # Now try dividing the rows up for each value in this column
        for value in column_values:
            (set1, set2) = divideset(rows, col, value)

            # Information gain
            p = float(len(set1)) / len(rows)
            gain = current_score - p * scoref(set1) - (1 - p) * scoref(set2)
            if gain > best_gain and len(set1) > 0 and len(set2) > 0:
                best_gain = gain
                best_criteria = (col, value)
                best_sets = (set1, set2)

    # Create the subbranches, keeping the same scoring function
    if best_gain > 0:
        trueBranch = buildtree(best_sets[0], scoref)
        falseBranch = buildtree(best_sets[1], scoref)
        return decisionnode(col=best_criteria[0], value=best_criteria[1],
                            tb=trueBranch, fb=falseBranch)
    else:
        return decisionnode(results=uniquecounts(rows))
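The listing calls four helpers that are not shown: `decisionnode`, `divideset`, `uniquecounts` and `entropy`. The sketch below is one possible set of definitions, written to match how buildtree uses them; it is an assumption, not the original code of reference [2], and the toy rows are invented.

```python
from math import log2


class decisionnode:
    """One node of the tree: a split (col, value) or a leaf with results."""
    def __init__(self, col=-1, value=None, results=None, tb=None, fb=None):
        self.col = col          # index of the column tested at this node
        self.value = value      # value the column is compared with
        self.results = results  # class counts, set only for leaf nodes
        self.tb = tb            # subtree for rows that satisfy the test
        self.fb = fb            # subtree for rows that do not


def divideset(rows, column, value):
    """Split rows in two: numeric columns by >= value, others by == value."""
    if isinstance(value, (int, float)):
        matches = lambda row: row[column] >= value
    else:
        matches = lambda row: row[column] == value
    set1 = [row for row in rows if matches(row)]
    set2 = [row for row in rows if not matches(row)]
    return set1, set2


def uniquecounts(rows):
    """Count how often each class label (last element of a row) occurs."""
    counts = {}
    for row in rows:
        counts[row[-1]] = counts.get(row[-1], 0) + 1
    return counts


def entropy(rows):
    """Shannon entropy (in bits) of the class labels; 0.0 for an empty set."""
    total = len(rows)
    result = 0.0
    for count in uniquecounts(rows).values():
        p = count / total
        result -= p * log2(p)
    return result


# Toy rows: [plan flag, service calls, label]; values are made up.
rows = [["yes", 4, "churn"], ["no", 1, "stay"], ["no", 0, "stay"], ["yes", 5, "churn"]]
with_plan, without_plan = divideset(rows, 0, "yes")
print(entropy(rows), entropy(with_plan), entropy(without_plan))
```

In this toy data the split on the first column separates the two classes completely, so the entropy of both subsets is zero and the information gain equals the entropy of the full set.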

Naïve Bayes Classifier :

Introduction :

Naive Bayes is a simple technique for constructing classifiers:

models that assign class labels to problem instances, represented

as vectors of feature values, where the class labels are drawn

from some finite set. There is not a single algorithm for training

such classifiers, but a family of algorithms based on a common

principle: all naive Bayes classifiers assume that the value of a

particular feature is independent of the value of any other

feature, given the class variable. For example, a fruit may be

considered to be an apple if it is red, round, and about 10 cm in

diameter. A naive Bayes classifier considers each of these features

to contribute independently to the probability that this fruit is an

apple, regardless of any possible correlations between the color,

roundness, and diameter features.

For some types of probability models, naive Bayes classifiers can

be trained very efficiently in a supervised learning setting. In many

practical applications, parameter estimation for naive Bayes

models uses the method of maximum likelihood; in other words,

one can work with the naive Bayes model without

accepting Bayesian probability or using any Bayesian methods.

Despite their naive design and apparently oversimplified

assumptions, naive Bayes classifiers have worked quite well in

many complex real-world situations. In 2004, an analysis of the

Bayesian classification problem showed that there are sound

theoretical reasons for the apparently implausible efficacy of

naïve Bayes classifiers. Still, a comprehensive comparison with

other classification algorithms in 2006 showed that Bayes

classification is outperformed by other approaches, such

as boosted trees or random forests.

An advantage of naive Bayes is that it only requires a small

amount of training data to estimate the parameters necessary for

classification.
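As an illustration of how little machinery the method needs, here is a minimal naive Bayes sketch for categorical features in plain Python. Note that it estimates the probabilities with Laplace (add-one) smoothing instead of plain maximum likelihood, so that unseen feature values do not produce zero probabilities. The function names and the toy data are assumptions for the example.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count class frequencies and per-class feature value frequencies."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (feature index, label) -> value counts
    feature_values = defaultdict(set)      # feature index -> distinct values seen
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            feature_counts[(i, label)][value] += 1
            feature_values[i].add(value)
    return class_counts, feature_counts, feature_values


def predict(model, row):
    """Return the class with the highest P(y) * product of P(x_i | y)."""
    class_counts, feature_counts, feature_values = model
    total = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = count / total  # prior P(y)
        for i, value in enumerate(row):
            # Laplace-smoothed estimate of P(x_i | y)
            numerator = feature_counts[(i, label)][value] + 1
            denominator = count + len(feature_values[i])
            score *= numerator / denominator
        scores[label] = score
    return max(scores, key=scores.get)


# Toy records: (plan flag, voice flag); values are made up.
rows = [("yes", "no"), ("yes", "no"), ("no", "yes"), ("no", "no")]
labels = ["churn", "churn", "stay", "stay"]
model = train_naive_bayes(rows, labels)
print(predict(model, ("yes", "no")), predict(model, ("no", "yes")))
```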

K-Nearest Neighbors(K-NN) :

Introduction :

The k-nearest neighbors algorithm (k-NN) is a non-parametric method

used for classification and regression. In both cases, the input consists

of the k closest training examples in the feature space. The output

depends on whether k-NN is used for classification or regression.

k-NN is a type of instance-based learning, or lazy learning, where the

function is only approximated locally and all computation is deferred

until classification. The k-NN algorithm is among the simplest of

all machine learning algorithms.

Both for classification and regression, a useful technique can be used

to assign weight to the contributions of the neighbors, so that the

nearer neighbors contribute more to the average than the more

distant ones. For example, a common weighting scheme consists in

giving each neighbor a weight of 1/d, where d is the distance to the

neighbor.

The neighbors are taken from a set of objects for which the class (for k-

NN classification) or the object property value (for k-NN regression) is

known. This can be thought of as the training set for the algorithm,

though no explicit training step is required.

A peculiarity of the k-NN algorithm is that it is sensitive to the local

structure of the data.

Algorithm :

The training examples are vectors in a multidimensional feature space,

each with a class label. The training phase of the algorithm consists

only of storing the feature vectors and class labels of the training

samples.

In the classification phase, k is a user-defined constant, and an

unlabeled vector (a query or test point) is classified by assigning the

label which is most frequent among the k training samples nearest to

that query point.

A commonly used distance metric for continuous variables is Euclidean

distance. For discrete variables, such as for text classification, another

metric can be used, such as the overlap metric (or Hamming distance).

In the context of gene expression microarray data, for example, k-NN

has also been employed with correlation coefficients such as Pearson

and Spearman. Often, the classification accuracy of k-NN can be

improved significantly if the distance metric is learned with specialized

algorithms such as Large Margin Nearest Neighbor or Neighbourhood

components analysis.

A drawback of the basic "majority voting" classification occurs when

the class distribution is skewed. That is, examples of a more frequent

class tend to dominate the prediction of the new example, because

they tend to be common among the k nearest neighbors due to their

large number. One way to overcome this problem is to weight the

classification, taking into account the distance from the test point to

each of its k nearest neighbors. The class (or value, in regression

problems) of each of the k nearest points is multiplied by a weight

proportional to the inverse of the distance from that point to the test

point. Another way to overcome skew is by abstraction in data

representation. For example, in a self-organizing map (SOM), each node

is a representative (a center) of a cluster of similar points, regardless of

their density in the original training data. K-NN can then be applied to

the SOM.
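The difference between plain majority voting and inverse-distance weighting can be seen in a small sketch. It uses Euclidean distance via `math.dist` (Python 3.8 or later); the function name, the small constant that guards against division by zero and the sample points are assumptions for illustration.

```python
from collections import defaultdict
from math import dist


def knn_classify(train_points, train_labels, query, k=3, weighted=True):
    """Classify query by a vote among its k nearest training points."""
    neighbors = sorted(
        (dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )[:k]
    votes = defaultdict(float)
    for distance, label in neighbors:
        if weighted:
            # Weight 1/d; the small constant avoids division by zero.
            votes[label] += 1.0 / (distance + 1e-9)
        else:
            votes[label] += 1.0
    return max(votes, key=votes.get)


# Skewed toy data: one nearby "stay" point, three distant "churn" points.
points = [(2.0, 2.5), (5.0, 5.0), (6.0, 5.0), (5.0, 6.0)]
labels = ["stay", "churn", "churn", "churn"]
query = (2.0, 2.0)
print(knn_classify(points, labels, query, k=3, weighted=False))
print(knn_classify(points, labels, query, k=3, weighted=True))
```

With k = 3 the unweighted vote follows the more frequent class, while the weighted vote follows the single neighbor that is much closer to the query point.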

Parameter selection :

The best choice of k depends upon the data; generally, larger values

of k reduce the effect of noise on the classification, but make

boundaries between classes less distinct. A good k can be selected by

various heuristic techniques (see hyperparameter optimization). The

special case where the class is predicted to be the class of the closest

training sample (i.e. when k = 1) is called the nearest neighbor

algorithm.

The accuracy of the k-NN algorithm can be severely degraded by the

presence of noisy or irrelevant features, or if the feature scales are not

consistent with their importance. Much research effort has been put

into selecting or scaling features to improve classification. A

particularly popular approach is the use of evolutionary algorithms to

optimize feature scaling. Another popular approach is to scale features

by the mutual information of the training data with the training classes.

In binary (two class) classification problems, it is helpful to choose k to

be an odd number as this avoids tied votes. One popular way of

choosing the empirically optimal k in this setting is via the bootstrap

method.

KNN Regression :

In K-NN regression, the k-NN algorithm is used for estimating

continuous variables. One such algorithm uses a weighted average of

the k nearest neighbors, weighted by the inverse of their distance. This

algorithm works as follows:

1. Compute the Euclidean or Mahalanobis distance from the query

example to the labeled examples.

2. Order the labeled examples by increasing distance.

3. Find a heuristically optimal number k of nearest neighbors, based

on RMSE. This is done using cross validation.

4. Calculate an inverse distance weighted average with the k-nearest

multivariate neighbors.
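Steps 1, 2 and 4 above can be sketched as follows. The sketch uses Euclidean distance and takes k as a fixed argument; choosing k by cross validation on RMSE (step 3) is left out, and the function name and sample values are assumptions for illustration.

```python
from math import dist


def knn_regress(train_points, train_targets, query, k=3):
    """Inverse-distance weighted average of the k nearest targets."""
    # Steps 1 and 2: compute distances and order by increasing distance.
    neighbors = sorted(
        (dist(point, query), target)
        for point, target in zip(train_points, train_targets)
    )[:k]
    # A training point identical to the query determines the answer.
    for distance, target in neighbors:
        if distance == 0:
            return target
    # Step 4: inverse distance weighted average.
    weights = [1.0 / distance for distance, _ in neighbors]
    weighted_sum = sum(w * target for w, (_, target) in zip(weights, neighbors))
    return weighted_sum / sum(weights)


# Toy one-dimensional data; values are made up.
points = [(0.0,), (2.0,), (10.0,)]
targets = [10.0, 20.0, 100.0]
print(knn_regress(points, targets, query=(1.0,), k=2))
```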

Finally accepted model

Code

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn import model_selection

from sklearn import linear_model

from sklearn import preprocessing

from sklearn import utils

from sklearn import metrics

from sklearn import tree

from sklearn import feature_selection

from sklearn import neighbors

from sklearn import naive_bayes

df=pd.read_csv("d:/folder/churn_train.txt",sep=", ",engine='python')

df1=pd.read_csv("d:/folder/churn_test.txt",sep=", ",engine='python')

Customer Churn Analysis 61

le=preprocessing.LabelEncoder()

le1=preprocessing.LabelEncoder()

df.info()

df["st"]=le.fit_transform(df['st'])

df["intplan"]=le.fit_transform(df['intplan'])

df["voice"]=le.fit_transform(df['voice'])

df["label"]=le.fit_transform(df['label'])

df.dropna(inplace=True)

Xtrain=df.drop("phnum",axis=1)

Xtrain=Xtrain.drop("label",axis=1)

ytrain=df[['label']]

Xtrain.describe()

ytrain.describe()

ytrain['label'].value_counts()

#feature extraction

df['st'].value_counts()

sns.boxplot(y="st",data=df,x="label")

df['st'].isnull().sum()

Customer Churn Analysis 62

sns.countplot(y='st',data=df,hue='label')

sns.distplot(df['st'])

df['acclen'].value_counts()

sns.boxplot(y="acclen",data=df,x="label")

sns.distplot(df['acclen'])

qr=np.percentile(df['acclen'],[0,25,50,75,100])

qr

Xtrain['intplan'].value_counts()

sns.boxplot(y="intplan",data=df,x="label")

sns.distplot(df['intplan'])

sns.countplot(y='intplan',data=df,hue='label')

Xtrain['voice'].value_counts()

sns.countplot(y='voice',data=df,hue='label')

sns.distplot(df['voice'])

sns.boxplot(y="voice",data=df,x="label")

Xtrain['nummailmes'].value_counts()

sns.boxplot(y="nummailmes",data=df,x="label")

sns.distplot(df['nummailmes'])

Customer Churn Analysis 63

Xtrain['tdmin'].value_counts()

sns.boxplot(y="tdmin",data=df,x="label")

sns.distplot(df['tdmin'])

Xtrain['tdcal'].value_counts()

sns.boxplot(y="tdcal",data=df,x="label")

sns.distplot(df['tdcal'])

Xtrain['tdchar'].value_counts()

sns.boxplot(y="tdchar",data=df,x="label")

sns.distplot(df['tdchar'])

Xtrain['temin'].value_counts()

sns.boxplot(y="temin",data=df,x="label")

sns.distplot(df['temin'])

Xtrain['tecal'].value_counts()

sns.boxplot(y="tecal",data=df,x="label")

sns.distplot(df['tecal'])

Xtrain['tecahr'].value_counts()

sns.boxplot(y="tecahr",data=df,x="label")

sns.distplot(df['tecahr'])

sns.countplot(x='intplan',data=df,hue='label')

Xtrain['tnmin'].value_counts()

sns.boxplot(y="tnmin",data=df,x="label")

sns.distplot(df['tnmin'])

Xtrain['tncal'].value_counts()

sns.boxplot(y="tncal",data=df,x="label")

sns.distplot(df['tncal'])

Xtrain['tnchar'].value_counts()

sns.boxplot(y="tnchar",data=df,x="label")

sns.distplot(df['tnchar'])

Xtrain['timin'].value_counts()

sns.boxplot(y="timin",data=df,x="label")

sns.distplot(df['timin'])

Xtrain['tical'].value_counts()

sns.boxplot(y="tical",data=df,x="label")

sns.distplot(df['tical'])

sns.countplot(x='tical',data=df,hue='label')

Xtrain['tichar'].value_counts()

sns.boxplot(y="tichar",data=df,x="label")

sns.distplot(df['tichar'])

Xtrain['intplan'].value_counts()

sns.boxplot(y="intplan",data=df,x="label")

sns.distplot(df['intplan'])

sns.countplot(x='intplan',data=df,hue='label')

df['ncsc'].value_counts()

sns.boxplot(y="ncsc",data=df,x="label")

sns.distplot(df['ncsc'])

sns.countplot(x='ncsc',data=df,hue='label')
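
The box plots above compare each feature's distribution across the two label values. A numeric counterpart can be sketched as follows. The helper name `summarize_by_label` and the small synthetic frame are illustrative assumptions, not part of the project code; only the column names are taken from the listing.

```python
import numpy as np
import pandas as pd


def summarize_by_label(frame, features, label="label"):
    """Return the quartiles of each feature per label value (hypothetical helper)."""
    # The result is indexed by (label value, quantile), one column per feature.
    return frame.groupby(label)[features].quantile([0.25, 0.5, 0.75])


# Synthetic stand-in for the churn data; column names mirror those used above.
rng = np.random.default_rng(0)
demo = pd.DataFrame({
    "label": rng.integers(0, 2, size=200),
    "tdmin": rng.normal(180, 50, size=200),
    "ncsc": rng.integers(0, 8, size=200),
})
summary = summarize_by_label(demo, ["tdmin", "ncsc"])
print(summary)
```

A feature whose quartiles barely differ between the two label values is a candidate for dropping, which is the kind of judgment the plots are used for here.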

Xtrain=Xtrain.drop("st",axis=1)

Xtrain=Xtrain.drop("acclen",axis=1)

Xtrain=Xtrain.drop("tdcal",axis=1)

Xtrain=Xtrain.drop("tecal",axis=1)

Xtrain=Xtrain.drop("temin",axis=1)

Xtrain=Xtrain.drop("tnchar",axis=1)

Xtrain=Xtrain.drop("tdchar",axis=1)

ml=tree.DecisionTreeClassifier()

ml.fit(Xtrain,ytrain)

print("AUC:",metrics.roc_auc_score(ytrain,ml.predict(Xtrain)))

print("recall:",metrics.recall_score(ytrain,ml.predict(Xtrain)))

df1["st"]=le1.fit_transform(df1['st'])

df1["intplan"]=le1.fit_transform(df1['intplan'])

df1["voice"]=le1.fit_transform(df1['voice'])

df1["label"]=le1.fit_transform(df1['label'])

df1.dropna(inplace=True)
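
The test frame above is encoded with a second encoder that is fitted on the test data itself. If the set of categories differs between the two files, the same string can then map to different integers in train and test. A minimal sketch of one alternative, assuming one encoder per column fitted on the training frame and reused on the test frame; `encode_consistently` and the toy frames are hypothetical names introduced for illustration.

```python
import pandas as pd
from sklearn import preprocessing


def encode_consistently(train, test, columns):
    """Fit one LabelEncoder per column on train and reuse it on test (hypothetical helper)."""
    train, test = train.copy(), test.copy()
    encoders = {}
    for col in columns:
        enc = preprocessing.LabelEncoder()
        train[col] = enc.fit_transform(train[col])
        # transform() raises ValueError if test contains a category unseen in train.
        test[col] = enc.transform(test[col])
        encoders[col] = enc
    return train, test, encoders


# Toy frames standing in for the training and test files.
train_demo = pd.DataFrame({"intplan": ["no", "yes", "no"], "voice": ["yes", "no", "no"]})
test_demo = pd.DataFrame({"intplan": ["yes", "yes"], "voice": ["no", "no"]})
train_enc, test_enc, encoders = encode_consistently(
    train_demo, test_demo, ["intplan", "voice"]
)
print(test_enc)
```

In the toy test frame every `intplan` value is "yes". An encoder fitted on that frame alone would assign it code 0, whereas the encoder fitted on the training frame assigns code 1, matching the training data.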

Xtest=df1.drop("phnum",axis=1)

Xtest=Xtest.drop("label",axis=1)

Xtest=Xtest.drop("st",axis=1)

Xtest=Xtest.drop("acclen",axis=1)

Xtest=Xtest.drop("tdcal",axis=1)

Xtest=Xtest.drop("tecal",axis=1)

Xtest=Xtest.drop("temin",axis=1)

Xtest=Xtest.drop("tnchar",axis=1)

Xtest=Xtest.drop("tdchar",axis=1)

ytest=df1[['label']]

ml=tree.DecisionTreeClassifier()

ml.fit(Xtrain,ytrain)

print("AUC:",metrics.roc_auc_score(ytest,ml.predict(Xtest)))

print("recall:",metrics.recall_score(ytest,ml.predict(Xtest)))

print("precision:",metrics.precision_score(ytest,ml.predict(Xtest)))

print("accuracy:",metrics.accuracy_score(ytest,ml.predict(Xtest)))

confmat=metrics.confusion_matrix(ytest,ml.predict(Xtest))

print(confmat)
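
The AUC values above are computed from hard 0/1 predictions, which evaluates the classifier at a single threshold. Passing class probabilities to `roc_auc_score` uses the full ranking instead. The sketch below illustrates this on synthetic data; the data, the depth limit, and the variable names are assumptions and not the project's results.

```python
from sklearn import metrics, tree
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced stand-in for the churn data (assumption: about 15% positives).
X, y = make_classification(n_samples=1000, n_features=10,
                           weights=[0.85, 0.15], random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, stratify=y, random_state=0)

# A depth limit (an illustrative choice) keeps the leaf probabilities from being only 0 or 1.
clf = tree.DecisionTreeClassifier(max_depth=4, random_state=0).fit(X_tr, y_tr)

hard = clf.predict(X_te)              # 0/1 labels
soft = clf.predict_proba(X_te)[:, 1]  # estimated probability of class 1

auc_hard = metrics.roc_auc_score(y_te, hard)
auc_soft = metrics.roc_auc_score(y_te, soft)

# For binary labels, confusion_matrix is laid out as [[tn, fp], [fn, tp]].
tn, fp, fn, tp = metrics.confusion_matrix(y_te, hard).ravel()
recall = tp / (tp + fn)
print(auc_hard, auc_soft, recall)
```

Unpacking the confusion matrix this way also makes it easy to check the recall figure by hand against `metrics.recall_score`.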

#heatmap of continuous features

df2=df

df2=df2.drop('st',axis=1)

df2=df2.drop('intplan',axis=1)

df2=df2.drop('tichar',axis=1)

df2=df2.drop('voice',axis=1)

df2=df2.drop('label',axis=1)

sns.heatmap(df2.corr())
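
The heatmap shows pairwise correlations among the continuous columns. Listing the pairs above a chosen threshold gives the same information numerically. This is a minimal sketch: the helper `correlated_pairs`, the 0.95 threshold, and the synthetic frame (including the charge rate) are assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def correlated_pairs(frame, threshold=0.95):
    """List column pairs whose absolute Pearson correlation exceeds threshold."""
    corr = frame.corr().abs()
    cols = corr.columns
    pairs = []
    for i in range(len(cols)):
        for j in range(i + 1, len(cols)):  # upper triangle only, skip the diagonal
            if corr.iloc[i, j] > threshold:
                pairs.append((cols[i], cols[j], float(corr.iloc[i, j])))
    return pairs


# Synthetic example: a charge column that is a fixed multiple of minutes (assumed rate).
rng = np.random.default_rng(1)
minutes = rng.normal(180, 50, size=300)
demo = pd.DataFrame({
    "tdmin": minutes,
    "tdchar": 0.17 * minutes,
    "tncal": rng.integers(50, 150, size=300),
})
print(correlated_pairs(demo))
```

When two columns are this strongly correlated, keeping only one of them loses little information, which is one common reason for dropping columns before model fitting.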

def modelstats(Xtrain,Xtest,ytrain,ytest):
    # Fit four classifiers and collect train/test metrics in one table.
    stats=[]
    modelnames=["LR","DecisionTree","KNN","NB"]
    models=list()
    models.append(linear_model.LogisticRegression())
    models.append(tree.DecisionTreeClassifier())
    models.append(neighbors.KNeighborsClassifier())
    models.append(naive_bayes.GaussianNB())
    for name,model in zip(modelnames,models):
        if name=="KNN":
            # Tune the number of neighbours (odd values 5..15) by F1 score.
            k=list(range(5,17,2))
            grid={"n_neighbors":k}
            grid_obj=model_selection.GridSearchCV(estimator=model,param_grid=grid,scoring="f1")
            grid_fit=grid_obj.fit(Xtrain,ytrain)
            # best_estimator_ is already refit on the full training data.
            model=grid_fit.best_estimator_
            name=name+"("+str(grid_fit.best_params_["n_neighbors"])+")"
            print(grid_fit.best_params_)
        else:
            model.fit(Xtrain,ytrain)
        trainprediction=model.predict(Xtrain)
        testprediction=model.predict(Xtest)
        # Metrics on the training data.
        scores=list()
        scores.append(name+"-train")
        scores.append(metrics.accuracy_score(ytrain,trainprediction))
        scores.append(metrics.precision_score(ytrain,trainprediction))
        scores.append(metrics.recall_score(ytrain,trainprediction))
        scores.append(metrics.roc_auc_score(ytrain,trainprediction))
        stats.append(scores)
        # Metrics on the test data.
        scores=list()
        scores.append(name+"-test")
        scores.append(metrics.accuracy_score(ytest,testprediction))
        scores.append(metrics.precision_score(ytest,testprediction))
        scores.append(metrics.recall_score(ytest,testprediction))
        scores.append(metrics.roc_auc_score(ytest,testprediction))
        stats.append(scores)
    colnames=["MODELNAME","ACCURACY","PRECISION","RECALL","AUC"]
    return pd.DataFrame(stats,columns=colnames)

print(modelstats(Xtrain,Xtest,ytrain,ytest))

Conclusion and Future Works

In conclusion, the K-Means combined with Naïve Bayes method demonstrated better sensitivity and accuracy for predicting customer churn in the telecommunications sector. The results obtained after optimizing the parameters of Model A show that increasing the number of clusters for the corresponding attribute improves the model's true positive performance. However, the technique has one shortcoming: a trade-off between correctly predicting true positives and true negatives. The results also reflect the class imbalance problem, which makes it difficult for the classifier to make predictions: the training set of 3,333 instances contains only 14.49% churned customers, while the remaining 85.50% are non-churn instances. This requires further theoretical and experimental investigation of several pertinent issues. One approach to improving the model is to study the interval boundaries used during data discretization; the number of intervals may also affect how well the classifier learns. Lastly, the technique could be improved by adopting different machine learning algorithms, such as support vector machines, decision trees, or Bayesian networks, that can learn from non-linear data samples.
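
The class imbalance described above can be quantified directly, and one common way to offset it is class weighting. The sketch below is not the method used in this report: it assumes scikit-learn's `class_weight="balanced"` option on a decision tree and uses synthetic data with roughly the class ratio quoted above, purely to show how the comparison could be set up.

```python
import numpy as np
from sklearn import metrics, tree
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Synthetic data with roughly the quoted class ratio (assumption, not the real dataset).
X, y = make_classification(n_samples=3333, n_features=12,
                           weights=[0.855, 0.145], random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, stratify=y, test_size=0.3,
                                          random_state=0)

churn_share = float(np.mean(y_tr))  # fraction of positive (churn) instances

results = {}
for weighting in (None, "balanced"):
    # max_depth=5 is an illustrative choice, not a tuned value.
    clf = tree.DecisionTreeClassifier(max_depth=5, class_weight=weighting,
                                      random_state=0)
    clf.fit(X_tr, y_tr)
    pred = clf.predict(X_te)
    results[str(weighting)] = {
        "recall": metrics.recall_score(y_te, pred),
        "precision": metrics.precision_score(y_te, pred, zero_division=0),
    }
print(churn_share, results)
```

Comparing recall and precision side by side makes the trade-off between true positives and true negatives visible for each setting.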

Certificate

This is to certify that Mr. SUBHAMAY SADHUKHAN, GURU NANAK INSTITUTE OF TECHNOLOGY, registration number: 161430110175, has successfully completed a project on ‘Customer Churn Analysis’ using “Machine learning using Python” under the guidance of Mr. Titas Roy Chowdhury.

________________________________

(Mr. Titas Roy Chowdhury)

Globsyn Finishing School

Certificate

This is to certify that Mr. PRITHWISH DAS, GURU NANAK INSTITUTE OF TECHNOLOGY, registration number: 161430110145, has successfully completed a project on ‘Customer Churn Analysis’ using “Machine learning using Python” under the guidance of Mr. Titas Roy Chowdhury.

________________________________

(Mr. Titas Roy Chowdhury)

Globsyn Finishing School

Certificate

This is to certify that Mr. RITWICK DAS, GURU NANAK INSTITUTE OF TECHNOLOGY, registration number: 161430110150, has successfully completed a project on ‘Customer Churn Analysis’ using “Machine learning using Python” under the guidance of Mr. Titas Roy Chowdhury.

________________________________

(Mr. Titas Roy Chowdhury)

Globsyn Finishing School

Certificate

This is to certify that Mr. SUMIT SAHA, GURU NANAK INSTITUTE OF TECHNOLOGY, registration number: 161430110178, has successfully completed a project on ‘Customer Churn Analysis’ using “Machine learning using Python” under the guidance of Mr. Titas Roy Chowdhury.

________________________________

(Mr. Titas Roy Chowdhury)

Globsyn Finishing School

THANK YOU

Customer Churn Analysis 77

You might also like

- The Successful Strategies from Customer Managment ExcellenceFrom EverandThe Successful Strategies from Customer Managment ExcellenceNo ratings yet

- CRM in Religare Securities Ltd.Document71 pagesCRM in Religare Securities Ltd.gu786ruNo ratings yet

- Ishpreet Singh Report On (Reliance Jio) PGDocument68 pagesIshpreet Singh Report On (Reliance Jio) PGmailtoprateekk30No ratings yet

- Lead While Serving: An Integrated Approach to Managing Your Stakeholders and CustomersFrom EverandLead While Serving: An Integrated Approach to Managing Your Stakeholders and CustomersNo ratings yet

- The Six Principles of Service Excellence: A Proven Strategy for Driving World-Class Employee Performance and Elevating the Customer Experience from Average to ExtraordinaryFrom EverandThe Six Principles of Service Excellence: A Proven Strategy for Driving World-Class Employee Performance and Elevating the Customer Experience from Average to ExtraordinaryNo ratings yet

- An Executive’s Guide to Software Quality in an Agile Organization: A Continuous Improvement JourneyFrom EverandAn Executive’s Guide to Software Quality in an Agile Organization: A Continuous Improvement JourneyNo ratings yet

- CSP Multichannel Campaign Management A Complete Guide - 2020 EditionFrom EverandCSP Multichannel Campaign Management A Complete Guide - 2020 EditionNo ratings yet

- EmployeeAttrition AbhinandanDocument76 pagesEmployeeAttrition AbhinandanSaquib NazeerNo ratings yet

- Customer Relationship Management IN Airtel: Bachelor of Business AdministrationDocument77 pagesCustomer Relationship Management IN Airtel: Bachelor of Business AdministrationRohit ThakurNo ratings yet

- Lean Enterprise: The Ultimate Guide for Entrepreneurs. Learn Effective Strategies to Innovate and Maximize the Performance of Your Business.From EverandLean Enterprise: The Ultimate Guide for Entrepreneurs. Learn Effective Strategies to Innovate and Maximize the Performance of Your Business.No ratings yet

- Volkswagen SurVeyDocument73 pagesVolkswagen SurVeySanant Goyal67% (3)

- Customer Satisfaction and Promotional ActivitiesDocument64 pagesCustomer Satisfaction and Promotional ActivitiesvishalNo ratings yet

- Measuring Customer Experience: How to Develop and Execute the Most Profitable Customer Experience StrategiesFrom EverandMeasuring Customer Experience: How to Develop and Execute the Most Profitable Customer Experience StrategiesNo ratings yet

- Project Report ON: "Customer Relationship Management Religare Securities LTD"Document68 pagesProject Report ON: "Customer Relationship Management Religare Securities LTD"Inder ChahalNo ratings yet

- Customer Loyalty Strategies A Complete Guide - 2020 EditionFrom EverandCustomer Loyalty Strategies A Complete Guide - 2020 EditionNo ratings yet

- Customer Engagement Channels A Complete Guide - 2019 EditionFrom EverandCustomer Engagement Channels A Complete Guide - 2019 EditionNo ratings yet

- Customer Retention Strategies A Complete Guide - 2019 EditionFrom EverandCustomer Retention Strategies A Complete Guide - 2019 EditionNo ratings yet

- AnirudhDocument81 pagesAnirudhbalki123No ratings yet

- Global supply-chain finance Complete Self-Assessment GuideFrom EverandGlobal supply-chain finance Complete Self-Assessment GuideNo ratings yet

- Shahnawaz Service Marketing-1Document39 pagesShahnawaz Service Marketing-1Yash RajNo ratings yet

- Knowledge Management for Customer Service A Clear and Concise ReferenceFrom EverandKnowledge Management for Customer Service A Clear and Concise ReferenceNo ratings yet

- Customer Retention in Banking SectorDocument59 pagesCustomer Retention in Banking SectorIsrael Gardner100% (1)

- A Study On Customer Service Towards V Mart PatnaDocument63 pagesA Study On Customer Service Towards V Mart Patnaraunitraman7544100% (1)

- Amway Projject FinalDocument50 pagesAmway Projject FinalVikas BhardwajNo ratings yet

- Customer-Centricity: Putting Clients at the Heart of BusinessFrom EverandCustomer-Centricity: Putting Clients at the Heart of BusinessNo ratings yet

- Angel BrokingDocument98 pagesAngel Brokingravi singhNo ratings yet

- Sales & Communication: Listen to Understand. Empathise to Build Trust.From EverandSales & Communication: Listen to Understand. Empathise to Build Trust.Rating: 5 out of 5 stars5/5 (1)

- A Summer Training ReportDocument59 pagesA Summer Training ReportRahul SharmaNo ratings yet

- Customer Satisfaction in HyundaiDocument82 pagesCustomer Satisfaction in HyundaiAjith Aji100% (2)

- The Financial Advisor's Success Manual: How to Structure and Grow Your Financial Services PracticeFrom EverandThe Financial Advisor's Success Manual: How to Structure and Grow Your Financial Services PracticeNo ratings yet

- Customer Satisfaction Thesis PDFDocument5 pagesCustomer Satisfaction Thesis PDFPaySomeoneToWriteMyPaperSingapore100% (2)

- Global Supply Chain Finance A Complete Guide - 2020 EditionFrom EverandGlobal Supply Chain Finance A Complete Guide - 2020 EditionNo ratings yet

- Managing Service Excellence: The Ultimate Guide to Building and Maintaining a Customer-Centric OrganizationFrom EverandManaging Service Excellence: The Ultimate Guide to Building and Maintaining a Customer-Centric OrganizationRating: 5 out of 5 stars5/5 (2)

- Thesis On Customer Loyalty PDFDocument5 pagesThesis On Customer Loyalty PDFfjncb9rp100% (2)

- Using Information to Develop a Culture of Customer Centricity: Customer Centricity, Analytics, and Information UtilizationFrom EverandUsing Information to Develop a Culture of Customer Centricity: Customer Centricity, Analytics, and Information UtilizationNo ratings yet

- Bsit Readings in Philippine HistoryDocument7 pagesBsit Readings in Philippine HistoryMark Dipad0% (2)

- Hpux Cheatsheet PDFDocument32 pagesHpux Cheatsheet PDFRex TortiNo ratings yet

- Test Flight FaqDocument7 pagesTest Flight FaqBishalGhimireNo ratings yet

- Dspic33Fj32Gp302/304, Dspic33Fj64Gpx02/X04, and Dspic33Fj128Gpx02/X04 Data SheetDocument402 pagesDspic33Fj32Gp302/304, Dspic33Fj64Gpx02/X04, and Dspic33Fj128Gpx02/X04 Data Sheetmikehibbett1No ratings yet

- Tugas Gambar Teknik AutocadDocument6 pagesTugas Gambar Teknik AutocadPrietaOpikasariNo ratings yet

- Qarshi Industries ProjectDocument40 pagesQarshi Industries ProjectZeeshan SattarNo ratings yet

- Matrix Operations C ProgramDocument10 pagesMatrix Operations C ProgramMark Charles TarrozaNo ratings yet

- ACKNOWLEDGEMENTDocument25 pagesACKNOWLEDGEMENTSuresh Raghu100% (1)

- Swept EllipsoidDocument14 pagesSwept EllipsoidkillerghostYTNo ratings yet

- CrackzDocument29 pagesCrackzGabriel TellesNo ratings yet

- Internet ExplorerDocument37 pagesInternet ExplorerNajyar YarNajNo ratings yet

- DB2 9.5 Real-Time Statistics CollectionDocument44 pagesDB2 9.5 Real-Time Statistics Collectionandrey_krasovskyNo ratings yet

- Maskolis TemplateDocument51 pagesMaskolis TemplateNannyk WidyaningrumNo ratings yet

- Psholix Wins Prize For Breakthrough Tech: 3D Without GlassesDocument3 pagesPsholix Wins Prize For Breakthrough Tech: 3D Without GlassesPR.comNo ratings yet

- (ET) PassMark OSForensics Professional 5.1 Build 1003 TORRENT (v5Document7 pages(ET) PassMark OSForensics Professional 5.1 Build 1003 TORRENT (v5Ludeilson RodriguesNo ratings yet

- OS-Lab-10 SolutionDocument5 pagesOS-Lab-10 SolutionKashif UmairNo ratings yet

- Systems Programming With Standard CDocument10 pagesSystems Programming With Standard CNam Le100% (1)

- Digital Thermometer Using C# and ATmega16 Microcontroller - CodeProjectDocument10 pagesDigital Thermometer Using C# and ATmega16 Microcontroller - CodeProjectAditya ChaudharyNo ratings yet

- Mcafee DLP 11.0.400Document215 pagesMcafee DLP 11.0.400hoangcongchucNo ratings yet

- Curriculum Vitae Sukma WardaniDocument3 pagesCurriculum Vitae Sukma Wardanisukma wardaniNo ratings yet

- Canadian Senior Mathematics Contest: The Centre For Education in Mathematics and Computing Cemc - Uwaterloo.caDocument4 pagesCanadian Senior Mathematics Contest: The Centre For Education in Mathematics and Computing Cemc - Uwaterloo.caHimanshu MittalNo ratings yet

- Codigos Autodesk 2015Document3 pagesCodigos Autodesk 2015RubenCH59No ratings yet

- Definition of Basis Values and An In-Depth Look at CMH-17 Statistical AnalysisDocument14 pagesDefinition of Basis Values and An In-Depth Look at CMH-17 Statistical Analysisjuanpalomo74No ratings yet

- Cylinder Mounting Equipment 350 Lbs - Kidde - PN WK-281866-001Document2 pagesCylinder Mounting Equipment 350 Lbs - Kidde - PN WK-281866-001Gustavo SenaNo ratings yet

- Internet Institute RepositoryDocument300 pagesInternet Institute RepositoryAkhila ShajiNo ratings yet

- 02 Laboratory Exercise 1 PDFDocument1 page02 Laboratory Exercise 1 PDFAeirra Mae Mislang ReyesNo ratings yet

- COMSATS University Islamabad (Lahore Campus) : Department of Electrical & Computer EngineeringDocument4 pagesCOMSATS University Islamabad (Lahore Campus) : Department of Electrical & Computer EngineeringAsfand KhanNo ratings yet

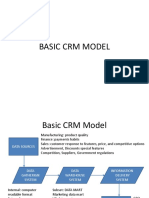

- CRM Model and ArchitectureDocument33 pagesCRM Model and ArchitectureVivek Jain100% (1)

- Note!: LicenseDocument236 pagesNote!: LicenseMAveRicK135No ratings yet

- Final OrganizedDocument70 pagesFinal OrganizedRajLaxmi Book Depot Pune.No ratings yet