Reinforcement Learning

Introduction

Pablo Zometa – Department of Mechatronics – GIU Berlin 1

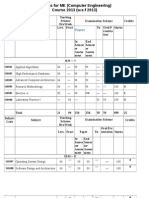

Organization

Teaching staff
▶ Pablo Zometa, Office 6.05
▶ Ali Tarek, Office 6.01

Assessment
▶ 20 % Quizzes (best of 2)
▶ 20 % Assignments
▶ 25 % Midterm
▶ 35 % Final

Other info:
▶ Lecture v1.0


Course Overview

Part I: Reinforcement Learning
▶ Markov Decision Process
▶ Dynamic Programming
▶ Temporal difference and Q-Learning
▶ Neural Networks and Deep Q-Networks

Part II: Optimal Control
▶ Convex Optimization
▶ Linear Quadratic Regulator
▶ Model Predictive Control

Reinforcement Learning textbook (freely available):
Reinforcement Learning: An Introduction, second edition, R. S. Sutton and A. G. Barto

Optimization textbook (freely available):
Convex Optimization, S. Boyd and L. Vandenberghe


Reinforcement Learning (RL) in a Nutshell

Reinforcement learning: learning what actions to take in any particular state to maximize a numerical reward over time.

[Figure: agent/environment loop. The agent chooses action = f(state); the environment returns a reward and the next state (state+).]

RL is typically modelled using the agent/environment framework:
▶ Agent: the learner and action taker
▶ Environment: returns rewards and transitions to a new state, depending on the chosen actions
▶ The boundary between agent and environment is set by what needs to be learned and by the states chosen as representation, not by physical boundaries
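The loop in the figure can be sketched in a few lines of Python. Everything here is an illustrative assumption rather than part of the lecture: the corridor environment, the reward of 1 at the goal, and the hypothetical names `env_step`, `agent_policy` and `run_episode`. The agent only maps a state to an action; the environment alone decides the reward and the next state.

```python
# Minimal sketch of the agent/environment loop (hypothetical toy example).
# Environment: a 1-D corridor with states 0..4; state 4 is the goal.
GOAL = 4


def env_step(state: int, action: int) -> tuple[int, float, bool]:
    """Environment: apply the action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)  # walls at both ends
    reward = 1.0 if next_state == GOAL else 0.0     # reward only at the goal
    return next_state, reward, next_state == GOAL


def agent_policy(state: int) -> int:
    """Agent: action = f(state). A fixed rule here (always step right, +1);
    an RL agent would instead learn this mapping from experience."""
    return +1


def run_episode(policy, start: int = 0, max_steps: int = 20):
    """Run the loop: the agent acts, the environment returns reward and next state."""
    state, total_reward, trajectory = start, 0.0, [start]
    for _ in range(max_steps):
        action = policy(state)                         # agent: choose an action
        state, reward, done = env_step(state, action)  # environment: reward, state+
        trajectory.append(state)
        total_reward += reward
        if done:
            break
    return trajectory, total_reward


print(run_episode(agent_policy))  # ([0, 1, 2, 3, 4], 1.0)
```

Replacing `agent_policy` with a rule that always moves left keeps the agent at state 0 and collects no reward within `max_steps`; that difference in reward is the only feedback an RL agent would get about which mapping is better.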


Motivation

Why reinforcement learning?
▶ Learn from experience: solve a complex "puzzle" (e.g., robot navigation) or build a recommendation system
▶ Use in dynamic environments: an autonomous robot navigating a busy factory floor
▶ Ability to handle uncertainty: stock trading, a game of chess or Go, autonomous driving
▶ Flexibility: from computer games (e.g., Super Mario Bros.) to learning how to walk (e.g., Learn to walk)


Reinforcement Learning (RL)

RL: learn what actions to take in any particular state to maximize a numerical reward:
▶ map a state x to an action a: π(x) = a
▶ which action a to take at state x, i.e., π(x), is not predefined
▶ π(x) must be discovered by experimentation: which action a delivers the highest reward at state x?
▶ after taking action a at x, a nonzero reward (r ≠ 0) may not be received immediately

The two most important distinguishing features of RL:
▶ trial-and-error search for the "best" action, and
▶ delayed reward for an action taken

Unique to RL is the trade-off between
▶ exploration: to discover the best actions to exploit, the agent has to try new actions that may yield better (or worse) results
▶ exploitation: retake actions that have led to positive rewards
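Delayed reward means an action is judged by the rewards that follow it, possibly many steps later. One common way to make this precise, not introduced on this slide, is the discounted return G_t = r_t + γ G_{t+1}, where `rewards[t]` is the reward that follows the action taken at step t. The discount factor γ = 0.9 and the function name `discounted_returns` are assumptions of this sketch.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t = r_t + gamma * G_{t+1} for every step t.

    rewards[t] is the reward that follows the action taken at step t.
    The discount factor gamma is an assumption of this sketch.
    """
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):  # accumulate from the last step backwards
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns


# Only the final step is rewarded, yet the earlier actions also receive
# (discounted) credit for it.
print(discounted_returns([0.0, 0.0, 0.0, 1.0]))  # approximately [0.729, 0.81, 0.9, 1.0]
```

The further an action lies from the reward, the smaller its share of the credit, which is one way an agent can still prefer the action sequence that eventually pays off.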


The RL landscape

Currently, the dominant branch of artificial intelligence is machine learning, which has three main branches:
▶ supervised learning: learning from labeled samples, e.g., linear regression, image classification
▶ unsupervised learning: finding structure hidden in collections of unlabeled data, e.g., clustering
▶ reinforcement learning: trying to maximize a reward signal, e.g., playing a (video) game

Within RL, there are several branches, among them:
▶ Q-learning: a model-free method that builds a table of the expected value (expected cumulative reward) of taking an action at a given state; suited to discrete action spaces
▶ Policy gradient: model-free methods that directly optimize the policy function π(x); they can handle continuous action spaces
▶ Actor-critic: the actor learns the policy π(x), while the critic evaluates how good π(x) is, based on the expected reward of taking a = π(x)
▶ Deep RL: uses deep neural networks to extend these methods
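The Q-learning entry above can be illustrated with a minimal tabular sketch. It assumes a hypothetical 5-state corridor (reward 1 for reaching state 4, otherwise 0), a learning rate α = 0.1, a discount γ = 0.9 and ε-greedy exploration with ε = 0.1; none of these values come from the lecture. The update inside the loop is the standard Q-learning rule Q(x,a) ← Q(x,a) + α[r + γ max_a' Q(x',a') − Q(x,a)]. The method is model-free in the sense that the agent only sees the samples returned by `step`, never the rule inside it.

```python
import random

# Hypothetical toy task: a 1-D corridor with states 0..4; reaching state 4
# ends the episode with reward 1, every other step gives reward 0.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Environment: the agent sees only the returned sample, not this rule."""
    next_state = max(state - 1, 0) if action == 0 else min(state + 1, GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Q-table: q[state][action] estimates the expected discounted return.
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:  # explore: random action
                action = rng.choice(ACTIONS)
            else:  # exploit: greedy action, ties broken at random
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Target r + gamma * max_a' Q(x', a'); no bootstrapping past the goal
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q = q_learning()
greedy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]
print(greedy)  # [1, 1, 1, 1]: move right in every non-goal state
```

Random tie-breaking matters here: with all entries initialised to 0, always picking the first maximal action would make the agent walk left almost every step and rarely reach the reward.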


History of RL: animal learning psychology

Reinforcement learning drew inspiration from psychological learning theories. For instance: Rat basketball.
▶ In 1898, based on experiments with animals escaping from puzzle boxes, Thorndike formulated the Law of Effect: learning by trial and error.
▶ In the 1920s, the Russian physiologist Ivan Pavlov introduced the idea of Pavlovian or classical conditioning: connecting new stimuli to innate reflexes.
▶ In the 1950s, Skinner popularized the idea of operant conditioning: consequences lead to changes in voluntary behaviour.
▶ In 1958, Skinner recognized the effectiveness of shaping behaviour with small intermediate rewards, which reinforce step-wise changes until a desired complex behaviour is learnt.

Classical vs. Operant conditioning.

RL does not attempt to replicate or explain how animals learn.


History of RL: optimal control

In the 1950s, the main mathematical concepts of optimal control (e.g., the linear quadratic regulator) were developed:
▶ minimize a cost (i.e., maximize performance) of a dynamical system over time
▶ the Bellman equation defines a value function recursively, relating the value of a state to the values of its successor states
▶ dynamic programming (DP) was developed as a way to solve the Bellman equation
▶ although efficient for small problems, DP becomes intractable for large ones, because the number of states grows exponentially with the number of state variables (curse of dimensionality)
▶ Bellman also introduced Markov decision processes (MDPs) to model discrete stochastic optimal control problems
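The slides do not write out the Bellman equation. In one standard form for an MDP (assumed notation, not from the slides: state x, action a, reward r(x,a), transition probability p(x' | x, a), discount factor γ ∈ [0, 1)), the optimal value function satisfies the first equation below, and DP in the form of value iteration turns it into the fixed-point update in the second. In optimal control the same recursion appears with a cost minimized instead of a reward maximized.

```latex
V^*(x) = \max_{a} \Big[ r(x,a) + \gamma \sum_{x'} p(x' \mid x, a)\, V^*(x') \Big]

V_{k+1}(x) = \max_{a} \Big[ r(x,a) + \gamma \sum_{x'} p(x' \mid x, a)\, V_k(x') \Big]
```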

In 1989, Watkins treated RL using the MDP formalism. In some sense, RL can be seen as a way to approximately solve the Bellman equation for problems where DP is not feasible.

Both fields, optimal control (OC) and RL, evolved separately and developed different terms for the same concepts (e.g., controller vs. agent, plant vs. environment, control signal vs. action).
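A minimal sketch of DP solving the Bellman equation by value iteration, assuming a hypothetical deterministic corridor (states 0..4, terminal goal at 4, reward 1 for reaching it) and an assumed discount γ = 0.9. Unlike the RL methods above, DP needs the model: `transition` is queried directly for every state and action. The helper names are illustrative only.

```python
# Hypothetical deterministic problem: 1-D corridor, states 0..4, goal 4 is terminal.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left / move right
GAMMA = 0.9         # assumed discount factor


def transition(x, a):
    """Known model (DP needs one): next state and reward for action a in state x."""
    x_next = min(max(x + a, 0), GOAL)
    return x_next, (1.0 if x_next == GOAL else 0.0)


def q_value(v, x, a):
    """r(x, a) + gamma * V(x') for a deterministic transition."""
    x_next, reward = transition(x, a)
    return reward + GAMMA * v[x_next]


def value_iteration(tol=1e-10, max_iters=1000):
    v = [0.0] * N_STATES
    for _ in range(max_iters):
        # Bellman backup V_{k+1}(x) = max_a [r + gamma * V_k(x')]; goal keeps V = 0
        v_new = [0.0 if x == GOAL else max(q_value(v, x, a) for a in ACTIONS)
                 for x in range(N_STATES)]
        converged = max(abs(new - old) for new, old in zip(v_new, v)) < tol
        v = v_new
        if converged:
            break
    return v


def greedy_policy(v):
    """Action attaining the maximum in the Bellman equation, per non-goal state."""
    return [max(ACTIONS, key=lambda a: q_value(v, x, a)) for x in range(GOAL)]


v = value_iteration()
print(v)                 # approximately [0.729, 0.81, 0.9, 1.0, 0.0]
print(greedy_policy(v))  # [1, 1, 1, 1]
```

Every iteration sweeps all states. With d state variables taking n values each, that sweep covers n^d states, which is where the curse of dimensionality makes DP intractable.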


RL: exploration vs exploitation trade-off

Unique to RL is the trade-off between exploration and exploitation:
▶ exploration: to discover the best actions to exploit, the agent has to try new actions that may yield better (or worse) results
▶ Half-Cheetah: exploration
▶ exploitation: retake actions that led to positive rewards in the past
▶ Half-Cheetah: exploitation
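The trade-off is often illustrated on a multi-armed bandit, a single-state simplification not covered on these slides. The sketch below assumes hypothetical reward means, Gaussian reward noise with standard deviation 1, and ε-greedy action selection with ε = 0.1: with probability ε the agent explores a random action, otherwise it exploits the action with the best sample-average reward so far. The function name `epsilon_greedy_bandit` is illustrative.

```python
import random


def epsilon_greedy_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Trial-and-error value estimation on a multi-armed bandit (hypothetical setup).

    true_means are the mean rewards of each action, unknown to the agent;
    each pull returns that mean plus Gaussian noise with standard deviation 1.
    """
    rng = random.Random(seed)
    n = len(true_means)
    estimates = [0.0] * n  # Q(a): sample-average reward observed for action a
    counts = [0] * n       # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(n)  # explore: try any action
        else:
            best = max(estimates)  # exploit: best estimate so far, ties at random
            a = rng.choice([i for i in range(n) if estimates[i] == best])
        reward = rng.gauss(true_means[a], 1.0)
        counts[a] += 1
        estimates[a] += (reward - estimates[a]) / counts[a]  # incremental mean
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.0, 1.0, 3.0])
print(counts)  # most pulls should go to the last action (mean 3.0)
```

With ε = 0 the agent can lock onto whichever action looked best after a few noisy samples; a small ε keeps sampling the others, at the price of occasionally taking a worse action.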

How the reward signal is defined is crucial to how the agent learns to solve a task.

Q: What could be the rewards for the Half-Cheetah example?


Modern Examples

▶ Several RL agents have taught themselves how to play games, e.g., Atari, Super Mario Bros.
▶ AlphaZero learned how to play Go and chess without human instruction, using only data generated by playing against itself, and reached superhuman playing strength.
▶ ChatGPT was fine-tuned (an approach to transfer learning) using both supervised and reinforcement learning techniques (source: Wikipedia).


Perspective: RL and OC for mechatronic systems?

Nowadays, reinforcement learning
▶ is mostly used in software applications: games, chatbots, recommendation systems, etc.
▶ is mostly limited to research when it comes to mechatronic systems.

Currently, approaches based on physical models of the robot and its environment dominate, in particular for simple applications; classical and optimal control are commonly used.

Limitations of RL for complex applications (e.g., learning to walk):
▶ training with the real robot may be dangerous, slow, and expensive
▶ training in simulation may require an extremely accurate model
▶ industrial applications often prefer simple approaches

Still, RL may have an edge as a high-level decision maker in uncertain environments, and may be combined with OC as the low-level controller.
