HW

Uploaded by bsharma013467

1. What is the rate monotonic scheduling algorithm? What are the various assumptions in this algorithm?

Rate Monotonic Scheduling Algorithm

The Rate Monotonic Scheduling Algorithm (RMS) is important to real-time systems designers because it allows one to guarantee that a set of tasks is schedulable. A set of tasks is said to be schedulable if all of the tasks can meet their deadlines. RMS provides a set of rules that can be used to perform a guaranteed schedulability analysis for a task set. This analysis determines whether a task set is schedulable under worst-case conditions and emphasizes the predictability of the system's behavior.

It has been proven that RMS is an optimal static priority algorithm for scheduling independent, preemptible, periodic tasks on a single processor. RMS is optimal in the sense that if a set of tasks can be scheduled by any static priority algorithm, then RMS will be able to schedule that task set. RMS bases its schedulability analysis on the processor utilization level below which all deadlines can be met.

RMS calls for the static assignment of task priorities based upon their period: the shorter a task's period, the higher its priority. For example, a task with a 1 millisecond period has higher priority than a task with a 100 millisecond period. If two tasks have the same period, then RMS does not distinguish between the tasks. However, RTEMS (an open-source real-time operating system) specifies that, among tasks of equal priority, the task that has been ready longest executes first.

RMS's priority assignment scheme does not provide one with exact numeric values for task priorities. For example, consider the following task set and priority assignments:

| Task | Period (in milliseconds) | Priority |
|------|--------------------------|----------|
| 1    | 100                      | Low      |
| 2    | 50                       | Medium   |
| 3    | 50                       | Medium   |
| 4    | 25                       | High     |
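The period-based ordering in the table can be sketched in a few lines of Python. This is a minimal illustration, not RTEMS code. The `Task` class and the `rms_priority_ranks` function are hypothetical names. The ranks are abstract (1 = highest priority) rather than actual RTEMS priority numbers, and the RTEMS rule for breaking ties between equal priorities is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    period_ms: int


def rms_priority_ranks(tasks):
    """Return {task name: rank}, where rank 1 is the highest priority.

    Rate monotonic rule: the shorter the period, the higher the priority.
    Tasks with equal periods share a rank, since RMS does not
    distinguish between them.
    """
    distinct_periods = sorted({t.period_ms for t in tasks})
    rank_of_period = {p: i + 1 for i, p in enumerate(distinct_periods)}
    return {t.name: rank_of_period[t.period_ms] for t in tasks}


if __name__ == "__main__":
    # The task set from the table above.
    task_set = [
        Task("task 1", 100),
        Task("task 2", 50),
        Task("task 3", 50),
        Task("task 4", 25),
    ]
    ranks = rms_priority_ranks(task_set)
    for name, rank in sorted(ranks.items(), key=lambda kv: kv[1]):
        print(f"{name}: rank {rank}")
```

Task 4 gets rank 1, tasks 2 and 3 share rank 2, and task 1 gets rank 3. Any concrete priority numbers that preserve this ordering satisfy RMS.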

RMS only calls for task 1 to have the lowest priority, task 4 to have the highest priority, and tasks 2 and 3 to have an equal priority between those of tasks 1 and 4. The actual RTEMS priorities assigned to the tasks need only adhere to those guidelines.

Many applications have tasks with both hard and soft deadlines. The tasks with hard deadlines are typically referred to as the critical task set, and the soft-deadline tasks as the non-critical task set. The critical task set can be scheduled using RMS, with the non-critical tasks not executing under transient overload. This is done simply by assigning priorities such that the lowest-priority critical task (i.e., the one with the longest period) has a higher priority than the highest-priority non-critical task. RMS may also be used to assign priorities to the non-critical tasks, but it is not necessary. In this instance, schedulability is guaranteed only for the critical task set.

The schedulability analysis rules for RMS were developed based on the following assumptions:

- The requests for all tasks for which hard deadlines exist are periodic, with a constant interval between requests.
- Each task must complete before the next request for it occurs.
- The tasks are independent in that a task does not depend on the initiation or completion of requests for other tasks.
- The execution time for each task without preemption or interruption is constant and does not vary.
- Any non-periodic tasks in the system are special. These tasks displace periodic tasks while executing and do not have hard, critical deadlines.
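Under these assumptions, with each task's deadline equal to its period, the utilization-level analysis is commonly expressed as the Liu and Layland bound. A set of n tasks is guaranteed schedulable under RMS if U = C_1/T_1 + ... + C_n/T_n <= n(2^(1/n) - 1), where C_i is the execution time and T_i the period of task i. The sketch below assumes each task is given as a (C_i, T_i) pair. The function names are hypothetical, and so are the execution times in the example, because the task set above lists periods only.

```python
def utilization(tasks):
    """Total processor utilization U = sum of C_i / T_i."""
    return sum(c / t for c, t in tasks)


def liu_layland_bound(n):
    """RMS utilization bound n * (2**(1/n) - 1) for n periodic tasks."""
    return n * (2 ** (1 / n) - 1)


def passes_rms_utilization_test(tasks):
    """Sufficient, but not necessary, RMS schedulability test.

    tasks: list of (execution_time, period) pairs in the same time unit,
    with each task's deadline equal to its period.
    A False result does not prove the set is unschedulable; it only means
    this simple test cannot guarantee it.
    """
    if not tasks:
        return True
    return utilization(tasks) <= liu_layland_bound(len(tasks))


if __name__ == "__main__":
    # Periods from the example table; execution times are hypothetical.
    task_set = [(10, 100), (5, 50), (5, 50), (2, 25)]
    u = utilization(task_set)
    bound = liu_layland_bound(len(task_set))
    print(f"U = {u:.3f}, bound = {bound:.3f}, passes = {passes_rms_utilization_test(task_set)}")
    # prints: U = 0.380, bound = 0.757, passes = True
```

As n grows, the bound decreases toward ln 2 (about 0.693). A task set whose utilization exceeds the bound may still be schedulable, and an exact analysis such as response-time analysis would be needed to decide it.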

2. Why is task synchronization required in a real-time operating system? Explain.

Most operating systems, including RTOSs, offer a variety of mechanisms for communication and synchronization between tasks. These mechanisms are necessary in a preemptive environment of many tasks. Without them, the tasks might communicate corrupted information or otherwise interfere with each other.

For instance, a task might be preempted while it is in the middle of updating a table of data. If a second task that preempts it reads from that table, it will read a combination of some newly updated data and some data that has not yet been updated. [New Yorkers would call this a mish-mash.] These new and old data areas may be incorrect in combination, or may not even make sense.

An example is a data table containing temperature measurements that begins with the contents 10 C. A task begins updating this table with the new value 99 F, writing into the table character by character. If that task is preempted in the middle of the update, a second task that preempts it could read a partially updated value such as 90 C or 99 C, depending on precisely when the preemption took place. Only 10 C and 99 F are valid readings. The partially updated values are clearly incorrect, and they are caused by delicate timing coincidences that are very hard to debug or reproduce consistently. An RTOS's mechanisms for communication and synchronization between tasks are provided to avoid these kinds of errors.

Synchronization is essential for tasks that share mutually exclusive resources (devices, buffers, etc.). It is also needed to coordinate concurrent tasks that depend on each other (e.g., task A needs a result from task B, so task A cannot proceed until task B produces it). Task synchronization is achieved using two types of mechanisms:

Event Objects

Event objects are used when task synchronization is required without resource sharing. They allow one or more tasks to wait for a specified event to occur. An event object can be in either a triggered or a non-triggered state; the triggered state indicates that the waiting tasks can resume.

Semaphores

A semaphore has an associated resource count and a wait queue. The resource count indicates the availability of the resource, and the wait queue manages the tasks waiting for resources from the semaphore. A semaphore functions like a key that defines whether a task has access to the resource: a task gets access to the resource when it acquires the semaphore. There are three types of semaphores:

- Binary semaphores
- Counting semaphores
- Mutual exclusion (mutex) semaphores
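The temperature-table example can be sketched with Python's `threading` module, using threads as stand-ins for RTOS tasks. This is an illustration under stated assumptions, not RTOS code. Python threads are not real-time tasks, `threading.Lock` stands in for a mutex semaphore, and `TemperatureTable` and `observe_update` are hypothetical names. The writer and the reader both hold the same lock, so the reader can only observe the complete old value or the complete new value, never 90 C or 99 C.

```python
import threading
import time


class TemperatureTable:
    """A shared reading such as "10 C", updated character by character."""

    def __init__(self, initial="10 C"):
        self._chars = list(initial)
        # threading.Lock stands in for an RTOS mutex semaphore.
        self._lock = threading.Lock()

    def write(self, new_value):
        # Assumes new_value has the same length as the current reading.
        # The whole update happens while the lock is held, so a reader that
        # also takes the lock never sees a partially written value.
        with self._lock:
            for i, ch in enumerate(new_value):
                self._chars[i] = ch
                time.sleep(0.001)  # widen the window in which a reader could interfere

    def read(self):
        with self._lock:
            return "".join(self._chars)


def observe_update(table, new_value):
    """Run a writer thread and collect every value a concurrent reader sees."""
    seen = set()
    writer = threading.Thread(target=table.write, args=(new_value,))
    writer.start()
    while writer.is_alive():
        seen.add(table.read())
        time.sleep(0.0005)  # yield so the writer can acquire the lock
    writer.join()
    seen.add(table.read())
    return seen


if __name__ == "__main__":
    print(sorted(observe_update(TemperatureTable(), "99 F")))
```

If the `with self._lock:` lines were removed, the reader could observe the partial values described above. Whether it actually did would depend on thread timing, which is exactly what makes such bugs hard to reproduce.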

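The two mechanisms described above can also be sketched with Python's `threading` module, with threads again standing in for RTOS tasks. This is an illustrative sketch, not an RTOS API:

- `threading.Event` plays the role of an event object. It starts non-triggered, and `set()` triggers it.
- `threading.Semaphore(n)` plays the role of a counting semaphore whose resource count is `n`. A binary semaphore is the special case `n = 1`.
- `threading.Lock` is used as a simple mutex.

The function names and the buffer pool are hypothetical.

```python
import threading
import time


def event_demo():
    """Task A waits on an event object until task B has produced a result."""
    result = {}
    result_ready = threading.Event()  # created in the non-triggered state
    received = []

    def task_a():
        result_ready.wait()  # block until the event is triggered
        received.append(result["value"])

    def task_b():
        result["value"] = 42  # produce the result task A needs
        result_ready.set()  # trigger the event so waiting tasks resume

    a = threading.Thread(target=task_a)
    b = threading.Thread(target=task_b)
    a.start()
    b.start()
    a.join()
    b.join()
    return received


def semaphore_demo(num_buffers=2, num_tasks=5):
    """A counting semaphore lets at most num_buffers tasks use a buffer at once.

    Returns the peak number of tasks that held a buffer simultaneously.
    """
    buffers = threading.Semaphore(num_buffers)  # resource count = num_buffers
    count_lock = threading.Lock()  # mutex protecting the counters below
    in_use = 0
    peak = 0

    def task():
        nonlocal in_use, peak
        with buffers:  # acquire; blocks until a buffer is free
            with count_lock:
                in_use += 1
                peak = max(peak, in_use)
            time.sleep(0.01)  # simulate work on the buffer
            with count_lock:
                in_use -= 1
        # leaving the outer "with" releases the semaphore

    threads = [threading.Thread(target=task) for _ in range(num_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print(event_demo())
    print(semaphore_demo())
```

The event suits the "task A needs a result from task B" case because no resource is shared, only a signal. The semaphore suits a pool of identical resources, where the count records how many are still available.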
You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5795)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Examples of FallaciesDocument2 pagesExamples of FallaciesSinta HandayaniNo ratings yet

- Matthew - Life For Today Bible Commentary - Andrew Wommack PDFDocument231 pagesMatthew - Life For Today Bible Commentary - Andrew Wommack PDFPascal Chhakchhuak100% (2)

- Case Study ICM Solution For MindTreeDocument3 pagesCase Study ICM Solution For MindTreevinodNo ratings yet

- Vistas.1 8 PDFDocument215 pagesVistas.1 8 PDFCool PersonNo ratings yet

- Reading Plan Homework - Animal FarmDocument2 pagesReading Plan Homework - Animal FarmEstefania CorreaNo ratings yet

- NOTES On CAPITAL BUDGETING PVFV Table - Irr Only For Constant Cash FlowsDocument3 pagesNOTES On CAPITAL BUDGETING PVFV Table - Irr Only For Constant Cash FlowsHussien NizaNo ratings yet

- CHEM BIO Organic MoleculesDocument7 pagesCHEM BIO Organic MoleculesLawrence SarmientoNo ratings yet

- Creative Works - Main Report - ABCDDocument65 pagesCreative Works - Main Report - ABCDvenkatesh subbaiyaNo ratings yet

- 3 Articles From The Master - Bill WalshDocument31 pages3 Articles From The Master - Bill WalshRobert Park100% (1)

- Holding Together Federalism Coming Together Federalism: SSPM'SDocument6 pagesHolding Together Federalism Coming Together Federalism: SSPM'SVivek NairNo ratings yet

- ME 457 Experimental Solid Mechanics (Lab) Torsion Test: Solid and Hollow ShaftsDocument5 pagesME 457 Experimental Solid Mechanics (Lab) Torsion Test: Solid and Hollow Shaftsanon-735529100% (2)

- Evaluation of Modulus of Subgrade Reaction (KS) in Gravely Soils Based On SPT ResultsDocument5 pagesEvaluation of Modulus of Subgrade Reaction (KS) in Gravely Soils Based On SPT ResultsJ&T INGEOTECNIA SERVICIOS GENERALES SACNo ratings yet

- Before Tree of LifeDocument155 pagesBefore Tree of LifeAugusto Meloni100% (1)

- Tyler. The Worship of The Blessed Virgin Mary in The Church of Rome: Contrary To Holy Scripture, and To The Faith and Practice of The Church of Christ Through The First Five Centuries. 1844.Document438 pagesTyler. The Worship of The Blessed Virgin Mary in The Church of Rome: Contrary To Holy Scripture, and To The Faith and Practice of The Church of Christ Through The First Five Centuries. 1844.Patrologia Latina, Graeca et Orientalis0% (1)

- Is Understanding Factive - Catherine Z. Elgin PDFDocument16 pagesIs Understanding Factive - Catherine Z. Elgin PDFonlineyykNo ratings yet

- FULLER Thesaurus of Epigrams 1943Document406 pagesFULLER Thesaurus of Epigrams 1943Studentul2000100% (1)

- Truncation Errors and Taylor Series: Numerical Methods ECE 453Document21 pagesTruncation Errors and Taylor Series: Numerical Methods ECE 453Maria Anndrea MendozaNo ratings yet

- View ResultDocument1 pageView ResultHarshal RahateNo ratings yet

- Forging A Field: The Golden Age of Iron Biology: ASH 50th Anniversary ReviewDocument13 pagesForging A Field: The Golden Age of Iron Biology: ASH 50th Anniversary ReviewAlex DragutNo ratings yet

- Prato 1999Document3 pagesPrato 1999Paola LoloNo ratings yet

- AISHE Final Report 2018-19 PDFDocument310 pagesAISHE Final Report 2018-19 PDFSanjay SharmaNo ratings yet

- Full Download Introduction To Operations and Supply Chain Management 3rd Edition Bozarth Test BankDocument36 pagesFull Download Introduction To Operations and Supply Chain Management 3rd Edition Bozarth Test Bankcoraleementgen1858100% (42)

- I. Plate TectonicsDocument16 pagesI. Plate TectonicsChristian GanganNo ratings yet

- ME 261 Numerical Analysis: System of Linear EquationsDocument15 pagesME 261 Numerical Analysis: System of Linear EquationsTahmeed HossainNo ratings yet

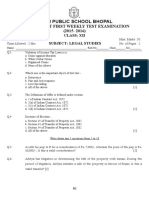

- Legal Studies XII - Improvement First Weekly Test - 2Document3 pagesLegal Studies XII - Improvement First Weekly Test - 2Surbhit ShrivastavaNo ratings yet

- Visual Interpretation QuestionsDocument7 pagesVisual Interpretation Questionssoomro76134No ratings yet

- Anime ListDocument9 pagesAnime ListTeofil MunteanuNo ratings yet

- English A SbaDocument13 pagesEnglish A Sbacarlton jackson50% (2)

- Role EfficacyDocument4 pagesRole EfficacyEkta Soni50% (2)

- The Impact of Culture On The Quality of Internal Audit An Empirical Study.Document22 pagesThe Impact of Culture On The Quality of Internal Audit An Empirical Study.Vaisal AmirNo ratings yet