Quantile Regression Lecture Notes

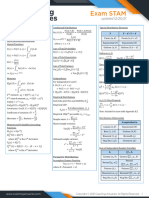

Quantile regression (QR) extends classical regression by modeling the conditional distribution of a response variable Y at different quantiles rather than only at its mean. Introduced by Koenker and Bassett in 1978, QR gives a more complete picture of the relationship between Y and the covariates X by estimating conditional quantile functions through optimization. The quantile function is the inverse of the cumulative distribution function: for a probability τ in (0, 1), it returns the value below which a fraction τ of the distribution lies.
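The two ideas in this summary, the quantile function as an inverse of the distribution function and quantile estimation as an optimization problem, can be illustrated on a plain sample with no covariates. The following is a minimal sketch, not taken from the notes themselves: the function names `check_loss`, `empirical_quantile`, and `argmin_check_loss` and the data in `sample` are hypothetical, and the asymmetric absolute loss used here is the standard "check" loss associated with Koenker and Bassett's formulation.

```python
def check_loss(u, tau):
    """Check (pinball) loss: tau * u for u >= 0, (tau - 1) * u for u < 0."""
    return u * (tau - (1.0 if u < 0 else 0.0))


def empirical_quantile(ys, tau):
    """Smallest sample value y whose empirical CDF F_n(y) is at least tau.

    This is the generalized inverse of the empirical CDF.
    """
    ordered = sorted(ys)
    n = len(ordered)
    for rank, y in enumerate(ordered, start=1):
        if rank / n >= tau:
            return y
    return ordered[-1]


def argmin_check_loss(ys, tau):
    """Sample value q that minimizes the total check loss sum(rho_tau(y - q)).

    Searching only over the observed values is enough here because the
    objective is piecewise linear and attains its minimum at a data point.
    """
    return min(sorted(ys), key=lambda q: sum(check_loss(y - q, tau) for y in ys))


# Hypothetical illustrative data (assumption, not from the lecture notes).
sample = [2, 7, 1, 9, 4, 3, 8, 6, 5]

for tau in (0.25, 0.5, 0.9):
    by_inverse_cdf = empirical_quantile(sample, tau)
    by_optimization = argmin_check_loss(sample, tau)
    print(tau, by_inverse_cdf, by_optimization)
```

For this sample the two routes return the same value at each τ. Quantile regression applies the same loss with the constant q replaced by a function of X, typically a linear one.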

Uploaded by prottoy142000

Copyright © All Rights Reserved
