Dynamic Programming1

Uploaded by Mohammad Bilal Mirza


1

Chapter 15

Dynamic Programming

2

Introduction

Optimization problem: there can be many possible solutions.

Each solution has a value, and we wish to find a solution with the optimal (minimum or maximum) value

Dynamic Programming VS. Divide-and-Conquer

Both solve problems by combining the solutions to sub-problems

The sub-problems of divide-and-conquer do not overlap

The sub-problems of dynamic programming overlap

Sub-problems share sub-sub-problems

Divide-and-conquer solves the common sub-sub-problems repeatedly

Dynamic programming solves every sub-sub-problem once and stores its answer in a table

"Programming" refers to a tabular method

3

Development of a Dynamic-Programming Algorithm

Characterize the structure of an optimal solution

Recursively define the value of an optimal solution

Compute the value of an optimal solution in a bottom-up fashion

Construct an optimal solution from computed information

4

Assembly-Line Scheduling

5

Problem Definition

$e_1, e_2$: time to enter assembly lines 1 and 2

$x_1, x_2$: time to exit assembly lines 1 and 2

$t_{i,j}$: time to transfer from assembly line 1→2 or 2→1 after station $S_{i,j}$

$a_{i,j}$: processing time at station $S_{i,j}$

Time between adjacent stations on the same line is 0

$2^n$ possible solutions

6

7

Step 1

The structure of the fastest way through the factory (from the starting point)

The fastest possible way through $S_{1,1}$ (similar for $S_{2,1}$)

Only one way: enter line 1, which takes time $e_1$

The fastest possible way through $S_{1,j}$ for $j = 2, 3, \ldots, n$ (similar for $S_{2,j}$), where $T_{i,j-1}$ is the fastest time through $S_{i,j-1}$

$S_{1,j-1} \to S_{1,j}$: $T_{1,j-1} + a_{1,j}$

If the fastest way through $S_{1,j}$ is through $S_{1,j-1}$, it must have taken a fastest way through $S_{1,j-1}$

$S_{2,j-1} \to S_{1,j}$: $T_{2,j-1} + t_{2,j-1} + a_{1,j}$

Same as above

An optimal solution contains optimal solutions to sub-problems: optimal substructure (recall divide-and-conquer)

8

$S_{1,1}$: $2 + 7 = 9$

$S_{2,1}$: $4 + 8 = 12$

9

$S_{1,1}$: $2 + 7 = 9$

$S_{2,1}$: $4 + 8 = 12$

$S_{1,2}$ = minimum of

$S_{1,1} + 9 = 9 + 9 = 18$

$S_{2,1} + 2 + 9 = 12 + 2 + 9 = 23$

$S_{2,2}$ = minimum of

$S_{1,1} + 2 + 5 = 9 + 2 + 5 = 16$

$S_{2,1} + 5 = 12 + 5 = 17$

10

$S_{1,2}$: 18

$S_{2,2}$: 16

$S_{1,3}$ = minimum of

$S_{1,2} + 3 = 18 + 3 = 21$

$S_{2,2} + 1 + 3 = 16 + 1 + 3 = 20$

$S_{2,3}$ = minimum of

$S_{1,2} + 3 + 6 = 18 + 3 + 6 = 27$

$S_{2,2} + 6 = 16 + 6 = 22$

11

Step 2

A recursive solution

Define the value of an optimal solution recursively in terms of the optimal solutions to sub-problems

Sub-problem here: finding the fastest way through station $j$ on both lines

$f_i[j]$: fastest possible time to go from the starting point through $S_{i,j}$

The fastest time to go all the way through the factory: $f^*$

$f^* = \min(f_1[n] + x_1,\ f_2[n] + x_2)$

Boundary condition

$f_1[1] = e_1 + a_{1,1}$

$f_2[1] = e_2 + a_{2,1}$

12

Step 2 (Cont.)

A recursive solution (Cont.)

The fastest time to go through $S_{i,j}$ (for $j = 2, \ldots, n$)

$f_1[j] = \min(f_1[j-1] + a_{1,j},\ f_2[j-1] + t_{2,j-1} + a_{1,j})$

$f_2[j] = \min(f_2[j-1] + a_{2,j},\ f_1[j-1] + t_{1,j-1} + a_{2,j})$

$l_i[j]$: the line number whose station $j-1$ is used in a fastest way through $S_{i,j}$ ($i = 1, 2$ and $j = 2, 3, \ldots, n$)

$l^*$: the line whose station $n$ is used in a fastest way through the entire factory
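As an illustration, the recurrence can be transcribed almost literally into a recursive function. This is only a sketch: the function name `f` and the list layout are choices made here, and the data covers just the first three stations, with values read off the worked slides above.

```python
# Data for the first three stations, read off the worked slides.
# Index 0 is line 1 and index 1 is line 2 (an encoding assumed for this sketch).
e = [2, 4]                    # entry times e_1, e_2
a = [[7, 9, 3], [8, 5, 6]]    # a[i][j]: processing time at station j+1 of line i+1
t = [[2, 3], [2, 1]]          # t[i][j]: transfer time after station j+1 of line i+1


def f(i, j):
    """Fastest time from the start through station j (1-based) on line i (1 or 2)."""
    if j == 1:
        return e[i - 1] + a[i - 1][0]              # boundary condition
    other = 2 if i == 1 else 1
    stay = f(i, j - 1) + a[i - 1][j - 1]
    switch = f(other, j - 1) + t[other - 1][j - 2] + a[i - 1][j - 1]
    return min(stay, switch)


print(f(1, 3), f(2, 3))   # prints: 20 22
```

Both terms of each minimum end with the processing time of the station being entered, which is why `a[i - 1][j - 1]` appears in `stay` and in `switch`.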

13

Step 3

Computing the fastest times

You could write a divide-and-conquer recursive algorithm now...

But the running time is $\Theta(2^n)$

$r_i(j)$ = the number of references made to $f_i[j]$ in the recursion

$r_1(n) = r_2(n) = 1$

$r_1(j) = r_2(j) = r_1(j+1) + r_2(j+1)$, hence $r_i(j) = 2^{n-j}$

Observe that for $j \ge 2$, $f_i[j]$ depends only on $f_1[j-1]$ and $f_2[j-1]$

Compute $f_i[j]$ in order of increasing station number $j$ and store $f_i[j]$ in a table

Running time: $O(n)$
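The two claims on this slide can be checked with a small sketch. `count_references` mirrors only the call pattern of the naive recursion, and `fastest_times` fills the table in order of increasing station number. Both function names and the 0-based list layout are assumptions made for this example; the three-station data comes from the worked slides.

```python
from collections import Counter


def count_references(n):
    """Count how often the naive recursion refers to each f_i[j] for n stations."""
    refs = Counter()

    def visit(i, j):
        refs[(i, j)] += 1
        if j > 1:              # f_i[j] refers to f_1[j-1] and f_2[j-1]
            visit(1, j - 1)
            visit(2, j - 1)

    visit(1, n)                # f* refers to f_1[n] and f_2[n] once each
    visit(2, n)
    return refs


def fastest_times(e, a, t):
    """Bottom-up table: each f[i][j] is computed exactly once."""
    n = len(a[0])
    f = [[0] * n for _ in range(2)]
    f[0][0] = e[0] + a[0][0]
    f[1][0] = e[1] + a[1][0]
    for j in range(1, n):
        for i in (0, 1):
            o = 1 - i          # index of the other line
            f[i][j] = min(f[i][j - 1], f[o][j - 1] + t[o][j - 1]) + a[i][j]
    return f


refs = count_references(6)
print([refs[(1, j)] for j in range(1, 7)])   # [32, 16, 8, 4, 2, 1]
print(fastest_times([2, 4], [[7, 9, 3], [8, 5, 6]], [[2, 3], [2, 1]]))
# [[9, 18, 20], [12, 16, 22]]
```

The reference counts double with every step back from station $n$, while the table version does a constant amount of work per entry.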

14

15

16

Step 4

Constructing the fastest way through the factory

line 1, station 6

line 2, station 5

line 2, station 4

line 1, station 3

line 2, station 2

line 1, station 1
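A sketch of how the path can be recovered from a stored table of choices. The function name `fastest_way` and the `prev` table (which plays the role of $l_i[j]$) are naming choices made here. Note the assumption: only the first three stations appear in the worked slides, so the values for stations 4 to 6 and the exit times are assumed for this example, chosen to agree with the path listed above.

```python
def fastest_way(e, x, a, t):
    """Return (f_star, path); path lists (line, station) pairs, both 1-based."""
    n = len(a[0])
    f = [[0] * n for _ in range(2)]
    prev = [[None] * n for _ in range(2)]   # role of l_i[j], with 0-based lines
    for i in (0, 1):
        f[i][0] = e[i] + a[i][0]
    for j in range(1, n):
        for i in (0, 1):
            o = 1 - i
            stay = f[i][j - 1] + a[i][j]
            switch = f[o][j - 1] + t[o][j - 1] + a[i][j]
            f[i][j], prev[i][j] = (stay, i) if stay <= switch else (switch, o)
    # Role of l*: the line whose last station is used.
    line = 0 if f[0][n - 1] + x[0] <= f[1][n - 1] + x[1] else 1
    f_star = f[line][n - 1] + x[line]
    path = []
    for j in range(n - 1, -1, -1):          # walk back from station n
        path.append((line + 1, j + 1))
        if j > 0:
            line = prev[line][j]
    return f_star, path


# Stations 1-3 follow the worked slides; stations 4-6 and x are ASSUMED values.
e, x = [2, 4], [3, 2]
a = [[7, 9, 3, 4, 8, 4], [8, 5, 6, 4, 5, 7]]
t = [[2, 3, 1, 3, 4], [2, 1, 2, 2, 1]]
f_star, path = fastest_way(e, x, a, t)
for line, station in path:
    print(f"line {line}, station {station}")
```

Because the choices are stored while the table is filled, the walk back from station $n$ takes $O(n)$ time and never recomputes a minimum.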

17

Elements of Dynamic Programming

Optimal substructure

Overlapping subproblems

18

Optimal Substructure

A problem exhibits optimal substructure if an optimal

solution contains within it optimal solutions to subproblems

Build an optimal solution from optimal solutions to subproblems

Example

Assembly-line scheduling: the fastest way through station j of either

line contains within it the fastest way through station j-1 on one line

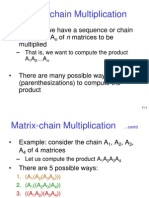

Matrix-chain multiplication: An optimal parenthesization of $A_i A_{i+1} \cdots A_j$ that splits the product between $A_k$ and $A_{k+1}$ contains within it optimal solutions to the problems of parenthesizing $A_i A_{i+1} \cdots A_k$ and $A_{k+1} A_{k+2} \cdots A_j$

19

Common Pattern in Discovering Optimal Substructure

Show that a solution to the problem consists of making a choice. Making the choice leaves one or more subproblems to be solved.

Suppose that for a given problem, the choice that leads to an optimal solution is available.

Given this optimal choice, determine which subproblems ensue and how to best characterize the resulting space of subproblems

Show that the solutions to the subproblems used within the optimal solution to the problem must themselves be optimal, using a cut-and-paste technique and a proof by contradiction

20

Illustration of Optimal Substructure

$A_1 A_2 A_3 A_4 A_5 A_6 A_7 A_8 A_9$

Suppose $((A_1 A_2)(A_3((A_4 A_5)A_6)))\,((A_7 A_8)A_9)$ is optimal

Minimal cost $= \mathrm{Cost}_{A_{1..6}} + \mathrm{Cost}_{A_{7..9}} + p_0 p_6 p_9$

Then $(A_1 A_2)(A_3((A_4 A_5)A_6))$ must be optimal for $A_1 A_2 A_3 A_4 A_5 A_6$

Otherwise, if $(A_1(A_2 A_3))((A_4 A_5)A_6)$ is optimal for $A_1 A_2 A_3 A_4 A_5 A_6$

Then $((A_1(A_2 A_3))((A_4 A_5)A_6))\,((A_7 A_8)A_9)$ will be better than $((A_1 A_2)(A_3((A_4 A_5)A_6)))\,((A_7 A_8)A_9)$

21

Characterize the Space of Subproblems

Rule of thumb: keep the space as small as possible, and then expand it as necessary

Assembly-line scheduling: $S_{1,j}$ and $S_{2,j}$ are enough

Matrix-chain multiplication: how about $A_1 A_2 \cdots A_j$?

$A_1 A_2 \cdots A_k$ and $A_{k+1} A_{k+2} \cdots A_j$: the subproblems need to vary at both ends

Therefore, the subproblems should have the form $A_i A_{i+1} \cdots A_j$

22

Characteristics of Optimal Substructure

How many subproblems are used in an optimal solution to the original problem?

Assembly-line scheduling: 1 ($S_{1,j-1}$ or $S_{2,j-1}$)

Matrix-chain multiplication: 2 ($A_i A_{i+1} \cdots A_k$ and $A_{k+1} A_{k+2} \cdots A_j$)

How many choices do we have in determining which subproblems to use in an optimal solution?

Assembly-line scheduling: 2 ($S_{1,j-1}$ or $S_{2,j-1}$)

Matrix-chain multiplication: $j - i$ (choices for $k$)

Informally, the running time of a dynamic-programming algorithm depends on the number of subproblems overall and how many choices we look at for each subproblem

Assembly-line scheduling: $O(n) \cdot 2 = O(n)$

Matrix-chain multiplication: $O(n^2) \cdot O(n) = O(n^3)$
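The matrix-chain count can be read directly off the loop structure of a bottom-up table fill. The sketch below is a minimal version under assumptions made here: the name `matrix_chain_order`, the dimension list `p` (matrix $A_i$ has shape `p[i-1]` by `p[i]`), and the three-matrix example are illustrative and not taken from the slides.

```python
def matrix_chain_order(p):
    """Return 1-based tables (m, s): minimum costs and the split chosen for each (i, j)."""
    n = len(p) - 1
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):            # O(n) chain lengths
        for i in range(1, n - length + 2):    # O(n) start positions
            j = i + length - 1
            m[i][j] = float("inf")
            for k in range(i, j):             # j - i choices for the split
                q = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if q < m[i][j]:
                    m[i][j], s[i][j] = q, k
    return m, s


m, s = matrix_chain_order([10, 100, 5, 50])
print(m[1][3], s[1][3])   # prints: 7500 2
```

The two outer loops enumerate the $O(n^2)$ subproblems and the inner loop tries the $j - i$ choices for each, which gives the $O(n^3)$ bound. In the example, splitting after $A_2$ costs $5000 + 2500 = 7500$, against $75000$ for the other order.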

23

Dynamic Programming VS. Greedy Algorithms

Dynamic programming uses optimal substructure in a bottom-up fashion

First find optimal solutions to subproblems and, having solved the subproblems, find an optimal solution to the problem

Greedy algorithms use optimal substructure in a top-down fashion

First make a choice (the choice that looks best at the time) and then solve the resulting subproblem

24

Subtleties Need Experience

Sometimes an optimal substructure does not exist

Consider the following two problems in which we are given a directed graph $G = (V, E)$ and vertices $u, v \in V$

Unweighted shortest path: Find a path from $u$ to $v$ consisting of the fewest edges. Such a path must be simple (no cycles).

Optimal substructure? YES

We can find a shortest path from $u$ to $v$ by considering all intermediate vertices $w$, finding a shortest path from $u$ to $w$ and a shortest path from $w$ to $v$, and choosing an intermediate vertex $w$ that yields the overall shortest path

Unweighted longest simple path: Find a simple path from $u$ to $v$ consisting of the most edges.

Optimal substructure? NO. WHY?

25

Unweighted Shortest Path

[Figure: graph on vertices A, B, C, D, E, F, G, H, I]

A→B→E→G→H is optimal for A to H

Therefore, A→B→E must be optimal for A to E; G→H must be optimal for G to H

26

No Optimal Substructure in Unweighted Longest Simple Path

Sometimes we cannot assemble a legal solution to the problem from solutions to subproblems: joining q→s→t→r and r→q→s→t gives q→s→t→r→q→s→t, which is not simple

Unweighted longest simple path is NP-complete: it is unlikely that it can be solved in polynomial time
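The failure can be reproduced by brute force on a four-vertex graph. Note the assumption: the figure is not available here, so the edge set below is hypothetical, chosen to be consistent with the paths q→s→t→r and r→q→s→t named on the slide. The function name `longest_simple_path` is likewise chosen for this sketch.

```python
# Hypothetical directed graph on q, r, s, t (edge set ASSUMED for illustration).
edges = {"q": ["r", "s"], "r": ["q", "t"], "s": ["t"], "t": ["r"]}


def longest_simple_path(u, v, visited=()):
    """Brute force: a longest simple path from u to v as a vertex list, or None."""
    visited = visited + (u,)
    if u == v:
        return [u]
    best = None
    for w in edges[u]:
        if w in visited:
            continue                       # keep the path simple
        rest = longest_simple_path(w, v, visited)
        if rest is not None and (best is None or len(rest) + 1 > len(best)):
            best = [u] + rest
    return best


q_to_t = longest_simple_path("q", "t")
q_to_r = longest_simple_path("q", "r")
r_to_t = longest_simple_path("r", "t")
print(q_to_t, q_to_r, r_to_t)
glued = q_to_r + r_to_t[1:]                # combine the two subproblem answers
print(glued, len(set(glued)) == len(glued))
```

In this graph the longest simple path from q to t has two edges, yet the longest paths for the subproblems q to r and r to t have three edges each, and gluing them repeats vertices. The optimal answers to the subproblems therefore do not combine into a legal answer to the problem.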

27

Independent Subproblems

In dynamic programming, the solution to one subproblem

must not affect the solution to another subproblem

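The subproblems in finding the longest simple path are not independent

A path from q to t through r splits into a path from q to r and a path from r to t

If the first subproblem is solved by q→s→t→r, we can no longer use s and t in the second subproblem ... Sigh!!!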

28

Overlapping SubProblems

The space of subproblems must be small in the sense that a

recursive algorithm for the problem solves the same

subproblems over and over, rather than always generating

new subproblems

Typically, the total number of distinct subproblems is a polynomial in

the input size

Divide-and-conquer is suitable for problems that usually generate brand-new subproblems at each step of the recursion

Dynamic-programming algorithms take advantage of

overlapping subproblems by solving each subproblem once

and then storing the solution in a table where it can be

looked up when needed, using constant time per lookup

29

m[3,4] is computed twice
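The repeated work can be counted by mirroring only the call pattern of the plain recursive procedure for a chain of four matrices. This is a sketch; `count_calls` is a helper name introduced here, and it tracks which subproblems $(i, j)$ are requested without computing any costs.

```python
from collections import Counter


def count_calls(i, j, calls):
    """Record one call for (i, j), then recurse on every split, as the plain recursion does."""
    calls[(i, j)] += 1
    for k in range(i, j):
        count_calls(i, k, calls)
        count_calls(k + 1, j, calls)


calls = Counter()
count_calls(1, 4, calls)
print(calls[(3, 4)], len(calls), sum(calls.values()))   # prints: 2 10 27
```

There are only 10 distinct subproblems for $n = 4$, but the recursion makes 27 calls, and the subproblem $(3, 4)$ is reached twice: once from $(1, 4)$ and once from $(2, 4)$.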

30

Comparison

31

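Recursive Procedure for Matrix-Chain Multiplication

The time to compute $m[1, n]$ with the recursive procedure is at least exponential in $n$

Prove $T(n) = \Omega(2^n)$ using the substitution method

The running time satisfies

$$T(1) \ge 1$$

$$T(n) \ge 1 + \sum_{k=1}^{n-1} \bigl(T(k) + T(n-k) + 1\bigr) \quad \text{for } n > 1$$

Each $T(i)$ with $1 \le i \le n-1$ appears once as $T(k)$ and once as $T(n-k)$, so

$$T(n) \ge 2 \sum_{i=1}^{n-1} T(i) + n$$

Show that $T(n) \ge 2^{n-1}$: the base case is $T(1) \ge 1 = 2^0$, and for $n \ge 2$

$$T(n) \ge 2 \sum_{i=1}^{n-1} 2^{i-1} + n = 2 \sum_{i=0}^{n-2} 2^{i} + n = 2\,(2^{n-1} - 1) + n = (2^n - 2) + n \ge 2^{n-1}$$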
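As a numeric sanity check of the bound, the sketch below evaluates the recurrence with equality, that is $T(1) = 1$ and $T(n) = 1 + \sum_{k=1}^{n-1} (T(k) + T(n-k) + 1)$, and compares it with $2^{n-1}$. Taking equality is an assumption made here to obtain concrete numbers; the slide only states a lower bound.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def T(n):
    """The recurrence for the plain recursion, taken with equality (an assumption)."""
    if n == 1:
        return 1
    return 1 + sum(T(k) + T(n - k) + 1 for k in range(1, n))


for n in range(1, 11):
    assert T(n) >= 2 ** (n - 1)           # the bound shown by substitution

print([T(n) for n in range(1, 6)])        # [1, 4, 13, 40, 121]
```

The values grow roughly threefold per step, comfortably above the $2^{n-1}$ lower bound.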

32

Reconstructing an Optimal Solution

As a practical matter, we often store which choice we made in each subproblem in a table so that we do not have to reconstruct this information from the costs that we stored

Self study the costs of reconstructing optimal solutions in the cases of assembly-line scheduling and matrix-chain multiplication, without $l_i[j]$ and $s[i, j]$ (Page 347)

33

Memoization

A variation of dynamic programming that often offers the

efficiency of the usual dynamic-programming approach

while maintaining a top-down strategy

Memoize the natural, but inefficient, recursive algorithm

Maintain a table with subproblem solutions, but the control structure

for filling in the table is more like the recursive algorithm

Memoization for matrix-chain multiplication

Calls in which $m[i, j] = \infty$: $O(n^2)$ calls

Calls in which $m[i, j] < \infty$: $O(n^3)$ calls

Turns an $\Omega(2^n)$-time algorithm into an $O(n^3)$-time algorithm

34

35

LOOKUP-CHAIN(p, i, j)
  if m[i, j] < ∞
    then return m[i, j]
  if i = j
    then m[i, j] ← 0
    else for k ← i to j-1
      do q ← LOOKUP-CHAIN(p, i, k) + LOOKUP-CHAIN(p, k+1, j) + p_{i-1} p_k p_j
        if q < m[i, j]
          then m[i, j] ← q
  return m[i, j]
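A runnable Python rendering of the procedure above, as a sketch. The wrapper `memoized_matrix_chain`, which initializes every table entry to infinity before the first lookup, is an assumption made here, since the slide shows only LOOKUP-CHAIN; the dimension list in the example is illustrative.

```python
import math


def memoized_matrix_chain(p):
    """Minimum multiplication cost for A_1..A_n, where A_i has shape p[i-1] x p[i]."""
    n = len(p) - 1
    m = [[math.inf] * (n + 1) for _ in range(n + 1)]   # infinity marks "not yet computed"

    def lookup_chain(i, j):
        if m[i][j] < math.inf:             # already solved: constant-time lookup
            return m[i][j]
        if i == j:
            m[i][j] = 0
        else:
            for k in range(i, j):
                q = (lookup_chain(i, k) + lookup_chain(k + 1, j)
                     + p[i - 1] * p[k] * p[j])
                if q < m[i][j]:
                    m[i][j] = q
        return m[i][j]

    return lookup_chain(1, n)


print(memoized_matrix_chain([10, 100, 5, 50]))   # prints: 7500
```

The control flow is still the top-down recursion, but each entry of `m` is filled only once; every later request for the same $(i, j)$ returns from the first `if`.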

Comparison

36

37

Dynamic Programming VS. Memoization

If all subproblems must be solved at least once, a bottom-up dynamic-programming algorithm usually outperforms a top-down memoized algorithm by a constant factor

No overhead for recursion and less overhead for maintaining table

There are some problems for which the regular pattern of table

accesses in the dynamic-programming algorithm can be exploited to

reduce the time or space requirements even further

If some subproblems in the subproblem space need not be

solved at all, the memoized solution has the advantage of

solving only those subproblems that are definitely required

38

Self-Study

Two more dynamic-programming problems

Section 15.4 Longest common subsequence

Section 15.5 Optimal binary search trees
