
Theory of Constrained Optimization (18 pages)

This document discusses the theory of constrained optimization, including:

- Using Lagrange multipliers to convert constrained problems into unconstrained problems by defining a Lagrange function

- The Karush-Kuhn-Tucker (KKT) conditions for problems with equality and inequality constraints, which are necessary conditions for optimality when a constraint qualification holds and are also sufficient when the problem is convex

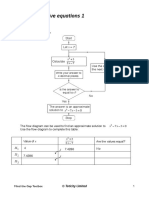

- Solving the KKT equations using an iterative procedure that determines which inequality constraints are active at each step
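
For reference, the Lagrange function and the KKT conditions from the first two bullets are sketched below in one common form. The problem layout (objective $f$, equality constraints $h_i(x) = 0$, inequality constraints $g_j(x) \le 0$) and the sign convention on the multipliers $\lambda$ and $\mu$ are assumptions; the slides themselves may write them differently. With only equality constraints, the conditions reduce to $\nabla_x \mathcal{L} = 0$ and $h(x) = 0$, that is, $n + m$ equations in the $n + m$ unknowns $(x, \lambda)$, which is the "unconstrained" reformulation the first bullet refers to.

```latex
\begin{aligned}
&\min_{x \in \mathbb{R}^n} f(x) \quad \text{s.t.} \quad h_i(x) = 0,\ i = 1,\dots,m, \qquad g_j(x) \le 0,\ j = 1,\dots,p, \\[4pt]
&\mathcal{L}(x, \lambda, \mu) = f(x) + \sum_{i=1}^{m} \lambda_i h_i(x) + \sum_{j=1}^{p} \mu_j g_j(x), \\[4pt]
&\text{KKT at } x^*:\quad \nabla_x \mathcal{L}(x^*, \lambda^*, \mu^*) = 0,\quad h_i(x^*) = 0,\quad g_j(x^*) \le 0,\quad \mu_j^* \ge 0,\quad \mu_j^* \, g_j(x^*) = 0 .
\end{aligned}
```

The last condition (complementary slackness) is what links the KKT system to the active set: a multiplier $\mu_j^*$ can be nonzero only for a constraint that holds with equality at $x^*$.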
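
The iterative procedure in the last bullet is commonly realized as a primal active-set method. The sketch below assumes the simplest setting: a strictly convex quadratic program, minimize ½xᵀQx + cᵀx subject to Ax ≤ b, with Q positive definite and a feasible starting point plus a linearly independent starting working set supplied by the caller. The function names `solve_equality_qp` and `active_set_qp` are hypothetical, not taken from the slides. Each pass solves the KKT system of the equality-constrained subproblem defined by the current working set, then either steps toward that solution (adding any constraint that blocks the step) or releases a constraint whose multiplier is negative.

```python
import numpy as np


def solve_equality_qp(Q, c, A_w, b_w):
    """Solve min 0.5 x^T Q x + c^T x  s.t.  A_w x = b_w via its KKT system.

    Stationarity: Q x + c + A_w^T mu = 0;  feasibility: A_w x = b_w.
    """
    n, m = Q.shape[0], A_w.shape[0]
    if m == 0:
        # No active constraints: the unconstrained minimizer solves Q x = -c.
        return np.linalg.solve(Q, -c), np.zeros(0)
    kkt = np.block([[Q, A_w.T], [A_w, np.zeros((m, m))]])
    sol = np.linalg.solve(kkt, np.concatenate([-c, b_w]))
    return sol[:n], sol[n:]


def active_set_qp(Q, c, A, b, x0, working=(), tol=1e-9, max_iter=100):
    """Primal active-set sketch for min 0.5 x^T Q x + c^T x  s.t.  A x <= b.

    Assumes Q is positive definite, x0 is feasible, and `working` holds
    indices of constraints active at x0 with linearly independent rows.
    Returns the minimizer and the full multiplier vector (mu >= 0).
    """
    Q, c = np.asarray(Q, dtype=float), np.asarray(c, dtype=float)
    A, b = np.asarray(A, dtype=float), np.asarray(b, dtype=float)
    x = np.asarray(x0, dtype=float)
    W = list(working)  # current working set (treated as equalities)
    for _ in range(max_iter):
        idx = np.array(W, dtype=int)
        x_eq, mu_w = solve_equality_qp(Q, c, A[idx], b[idx])
        p = x_eq - x
        if np.linalg.norm(p) < tol:
            # x already minimizes over the working set: check multiplier signs.
            if len(W) == 0 or mu_w.min() >= -tol:
                mu = np.zeros(A.shape[0])
                mu[idx] = mu_w
                return x, mu
            # A negative multiplier means that constraint should be released.
            W.pop(int(np.argmin(mu_w)))
            continue
        # Longest step alpha in [0, 1] that keeps the other constraints satisfied.
        alpha, blocking = 1.0, None
        for i in range(A.shape[0]):
            slope = A[i] @ p
            if i not in W and slope > tol:
                step = (b[i] - A[i] @ x) / slope
                if step < alpha:
                    alpha, blocking = step, i
        x = x + alpha * p
        if blocking is not None:
            W.append(blocking)  # the blocking constraint becomes active
    raise RuntimeError("active-set iteration did not converge")


# Small 2-D example: minimize (x1 - 1)^2 + (x2 - 2.5)^2 under five linear constraints.
Q = 2.0 * np.eye(2)
c = np.array([-2.0, -5.0])
A = np.array([[-1.0, 2.0], [1.0, 2.0], [1.0, -2.0], [-1.0, 0.0], [0.0, -1.0]])
b = np.array([2.0, 6.0, 2.0, 0.0, 0.0])
x_opt, mu_opt = active_set_qp(Q, c, A, b, x0=[2.0, 0.0], working=[2, 4])
print("x* =", x_opt, "mu* =", mu_opt)
```

Started from x0 = (2, 0) with constraints 2 and 4 active, the sketch releases both, then adds constraint 0 when it blocks a step, and stops near x = (1.4, 1.7) with multiplier 0.8 on constraint 0 and zero on the rest, so the returned pair satisfies the KKT conditions for this problem. No anti-cycling safeguard is included, so degenerate problems may need one.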

Uploaded by Bro Edwin

Copyright © All Rights Reserved


