Optimization

Goal:

Want to find the maximum or minimum of a function subject to some constraints.

Formal Statement of Problem:

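Given functions $f, g_1, \dots, g_m$ and $h_1, \dots, h_l$ defined on some domain $\Omega \subset \mathbb{R}^n$, the optimization problem has the form

$$\min_{x \in \Omega} f(x) \quad \text{subject to} \quad g_i(x) \le 0 \;\; \forall i \quad \text{and} \quad h_j(x) = 0 \;\; \forall j$$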

In these notes..

We will derive/state sufficient and necessary conditions for (local) optimality when there are

1. no constraints,
2. only equality constraints,
3. only inequality constraints,
4. equality and inequality constraints.

From this fact Lagrange Multipliers make sense

Remember our constrained optimization problem is

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to} \quad h(x) = 0$$

Define the Lagrangian as

$$L(x, \mu) = f(x) + \mu\, h(x)$$

Note that $L(x^*, \mu^*) = f(x^*)$, since $h(x^*) = 0$.

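Then $x^*$ is a local minimum $\iff$ there exists a unique $\mu^*$ s.t.

1. $\nabla_x L(x^*, \mu^*) = 0$, which encodes $\nabla_x f(x^*) = -\mu^* \nabla_x h(x^*)$ (the sign of $\mu^*$ is unrestricted)
2. $\nabla_\mu L(x^*, \mu^*) = 0$, which encodes the equality constraint $h(x^*) = 0$
3. $y^t \left(\nabla^2_{xx} L(x^*, \mu^*)\right) y \ge 0 \;\; \forall y$ s.t. $\nabla_x h(x^*)^t y = 0$ (a positive definite Hessian tells us we have a local minimum)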
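As a small illustration of the three conditions, the following Python sketch evaluates them at a candidate point. The problem (minimize $x_1 + x_2$ on the circle $x_1^2 + x_2^2 = 2$), the candidate $x^* = (-1, -1)$ with $\mu^* = 1/2$, and all function names are assumptions chosen for illustration; they are not taken from the notes.

```python
# Hypothetical example problem (not from the notes):
#   minimize f(x) = x1 + x2   subject to   h(x) = x1^2 + x2^2 - 2 = 0

def grad_f(x):
    # f is linear, so its gradient is constant
    return (1.0, 1.0)

def h(x):
    return x[0] ** 2 + x[1] ** 2 - 2.0

def grad_h(x):
    return (2.0 * x[0], 2.0 * x[1])

def grad_x_lagrangian(x, mu):
    # Gradient of L(x, mu) = f(x) + mu * h(x) with respect to x
    return tuple(df + mu * dh for df, dh in zip(grad_f(x), grad_h(x)))

def curvature(y, mu):
    # y^t (Hessian_xx L) y; here the Hessian of L is 2 * mu * I
    return 2.0 * mu * (y[0] ** 2 + y[1] ** 2)

x_star, mu_star = (-1.0, -1.0), 0.5

stationarity = grad_x_lagrangian(x_star, mu_star)  # condition 1
constraint = h(x_star)                             # condition 2
y = (1.0, -1.0)  # tangent direction: grad_h(x_star) . y = 0
second_order = curvature(y, mu_star)               # condition 3

print(stationarity, constraint, second_order)
```

For this toy problem the other stationary point, $(1, 1)$ with $\mu = -1/2$, yields a negative curvature value along the same direction, which is how condition 3 separates the minimum from the maximum.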

Summary of optimization with one inequality constraint

Given

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to} \quad g(x) \le 0$$

If $x^*$ corresponds to a constrained local minimum then

Case 1: Unconstrained local minimum occurs in the feasible region.

1. $g(x^*) < 0$
2. $\nabla_x f(x^*) = 0$
3. $\nabla_{xx} f(x^*)$ is a positive semi-definite matrix.

Case 2: Unconstrained local minimum lies outside the feasible region.

1. $g(x^*) = 0$
2. $-\nabla_x f(x^*) = \lambda \nabla_x g(x^*)$ with $\lambda > 0$
3. $y^t \nabla_{xx} L(x^*)\, y \ge 0$ for all $y$ orthogonal to $\nabla_x g(x^*)$.
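The two cases can be seen on a problem with a closed-form answer. The sketch below assumes a hypothetical objective $f(x) = \|x - c\|^2$ and constraint $g(x) = \|x\|^2 - 1 \le 0$ (the unit disc), neither of which appears in the notes. If $c$ lies inside the disc the unconstrained minimum is feasible (Case 1); otherwise the minimum is the point of the circle closest to $c$, with $\lambda = \|c\| - 1$ (Case 2).

```python
import math

def constrained_min_on_disc(c):
    """Minimize |x - c|^2 subject to |x|^2 - 1 <= 0.

    Returns (x_star, lam, case). Illustrative closed form for this toy problem.
    """
    norm_c = math.hypot(c[0], c[1])
    if norm_c < 1.0:
        # Case 1: unconstrained minimum c is strictly feasible, g(c) < 0
        return (c[0], c[1]), 0.0, 1
    # Case 2: constraint is active, x_star is c projected onto the circle.
    # (When norm_c == 1 exactly, lam is 0: a degenerate boundary case.)
    x_star = (c[0] / norm_c, c[1] / norm_c)
    return x_star, norm_c - 1.0, 2

def gradient_balance(c, x, lam):
    """Return -grad f(x) - lam * grad g(x); this vanishes at x_star."""
    grad_f = (2.0 * (x[0] - c[0]), 2.0 * (x[1] - c[1]))
    grad_g = (2.0 * x[0], 2.0 * x[1])
    return tuple(-df - lam * dg for df, dg in zip(grad_f, grad_g))

for c in [(0.5, 0.0), (3.0, 4.0)]:
    x_star, lam, case = constrained_min_on_disc(c)
    print(case, x_star, lam, gradient_balance(c, x_star, lam))
```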

Karush-Kuhn-Tucker conditions encode these conditions

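Given the optimization problem

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to} \quad g(x) \le 0$$

Define the Lagrangian as

$$L(x, \lambda) = f(x) + \lambda\, g(x)$$

Then $x^*$ is a local minimum $\iff$ there exists a unique $\lambda^*$ s.t.

1. $\nabla_x L(x^*, \lambda^*) = 0$
2. $\lambda^* \ge 0$
3. $\lambda^* g(x^*) = 0$
4. $g(x^*) \le 0$
5. Plus positive definite constraints on $\nabla_{xx} L(x^*, \lambda^*)$.

These are the KKT conditions.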
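Conditions 1 to 4 are easy to test numerically at a candidate pair $(x, \lambda)$. The sketch below is one possible checker, not a reference implementation: the function name `kkt_report`, the tolerance, the dictionary keys (common names for the four conditions), and the example problem are all assumptions made for illustration. Condition 5 (the second-order part) is not checked.

```python
def kkt_report(grad_f, g, grad_g, x, lam, tol=1e-9):
    """Check KKT conditions 1-4 for a single constraint g(x) <= 0."""
    # Gradient of L(x, lam) = f(x) + lam * g(x) with respect to x
    grad_L = [df + lam * dg for df, dg in zip(grad_f(x), grad_g(x))]
    return {
        "stationarity": all(abs(v) <= tol for v in grad_L),   # KKT 1
        "dual_feasibility": lam >= -tol,                      # KKT 2
        "complementary_slackness": abs(lam * g(x)) <= tol,    # KKT 3
        "primal_feasibility": g(x) <= tol,                    # KKT 4
    }

# Hypothetical example: minimize (x1 - 2)^2 + x2^2 subject to x1 - 1 <= 0.
def grad_f(x):
    return (2.0 * (x[0] - 2.0), 2.0 * x[1])

def g(x):
    return x[0] - 1.0

def grad_g(x):
    return (1.0, 0.0)

# Candidate on the boundary with multiplier 2: passes all four checks.
good = kkt_report(grad_f, g, grad_g, x=(1.0, 0.0), lam=2.0)
# Unconstrained minimum (2, 0): stationary but infeasible.
bad = kkt_report(grad_f, g, grad_g, x=(2.0, 0.0), lam=0.0)
print(good)
print(bad)
```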

Let’s check what the KKT conditions imply

Case 1 - Inactive constraint:

• When $\lambda^* = 0$ we have $L(x^*, \lambda^*) = f(x^*)$.
• Condition KKT 1 $\implies \nabla_x f(x^*) = 0$.
• Condition KKT 4 $\implies x^*$ is a feasible point.

Case 2 - Active constraint:

• When $\lambda^* > 0$ we have $L(x^*, \lambda^*) = f(x^*) + \lambda^* g(x^*)$.
• Condition KKT 1 $\implies -\nabla_x f(x^*) = \lambda^* \nabla_x g(x^*)$.
• Condition KKT 3 $\implies g(x^*) = 0$.
• Condition KKT 3 also $\implies L(x^*, \lambda^*) = f(x^*)$.
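The same case split suggests a simple solution procedure: try the inactive case first and fall back to the active case if the candidate is infeasible. The sketch below applies this to a hypothetical problem, minimize $(x_1 - a)^2 + (x_2 - b)^2$ subject to $x_1 + x_2 - 1 \le 0$, whose two cases can be solved by hand; the problem and the name `solve_by_cases` are assumptions for illustration.

```python
def solve_by_cases(a, b):
    """Solve min (x1-a)^2 + (x2-b)^2  s.t.  x1 + x2 - 1 <= 0 via the KKT cases.

    Returns (x_star, lam_star, label).
    """
    # Case 1 (lam = 0): KKT 1 gives grad f = 0, so x = (a, b).
    # Accept it only if KKT 4 (feasibility) holds.
    if a + b - 1.0 <= 0.0:
        return (a, b), 0.0, "inactive"
    # Case 2 (lam > 0): KKT 3 forces x1 + x2 = 1, and KKT 1 gives
    # 2(x1 - a) + lam = 0 and 2(x2 - b) + lam = 0.
    # Solving the three equations yields lam = a + b - 1.
    lam = a + b - 1.0
    return (a - lam / 2.0, b - lam / 2.0), lam, "active"

def f(x, a, b):
    return (x[0] - a) ** 2 + (x[1] - b) ** 2

for a, b in [(0.0, 0.0), (2.0, 1.0)]:
    x_star, lam, label = solve_by_cases(a, b)
    g_val = x_star[0] + x_star[1] - 1.0
    # In both cases lam * g(x_star) = 0, so L(x_star, lam) equals f(x_star).
    print(label, x_star, lam, f(x_star, a, b) + lam * g_val)
```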

KKT for multiple equality & inequality constraints

Given the constrained optimization problem

$$\min_{x \in \mathbb{R}^2} f(x)$$

subject to

$$h_i(x) = 0 \text{ for } i = 1, \dots, l \quad \text{and} \quad g_j(x) \le 0 \text{ for } j = 1, \dots, m$$

Define the Lagrangian as

$$L(x, \mu, \lambda) = f(x) + \mu^t h(x) + \lambda^t g(x)$$

Then $x^*$ is a local minimum $\iff$ there exist unique $\mu^*$ and $\lambda^*$ s.t.

1. $\nabla_x L(x^*, \mu^*, \lambda^*) = 0$
2. $\lambda_j^* \ge 0$ for $j = 1, \dots, m$
3. $\lambda_j^* g_j(x^*) = 0$ for $j = 1, \dots, m$
4. $g_j(x^*) \le 0$ for $j = 1, \dots, m$
5. $h(x^*) = 0$
6. Plus positive definite constraints on $\nabla_{xx} L(x^*, \mu^*, \lambda^*)$.
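With several constraints the first five conditions can be summarised as residuals that should all be (close to) zero at a KKT point. The sketch below is a minimal pure-Python version under stated assumptions: the function name `kkt_residuals`, the residual names, and the example problem (minimize $x_1^2 + x_2^2$ subject to $x_1 + x_2 - 2 = 0$ and $1.5 - x_1 \le 0$) are hypothetical and not part of the notes. Condition 6 is not checked.

```python
def kkt_residuals(grad_f, hs, grad_hs, gs, grad_gs, x, mu, lam):
    """Residuals of KKT conditions 1-5; all are zero at a KKT point."""
    # Condition 1: grad_x L = grad f + sum_i mu_i grad h_i + sum_j lam_j grad g_j
    grad_L = list(grad_f(x))
    for coeff, grad in list(zip(mu, grad_hs)) + list(zip(lam, grad_gs)):
        for k, d in enumerate(grad(x)):
            grad_L[k] += coeff * d
    return {
        "stationarity": max(abs(v) for v in grad_L),
        "dual": max((max(0.0, -l) for l in lam), default=0.0),         # cond. 2
        "complementarity": max((abs(l * g(x)) for l, g in zip(lam, gs)),
                               default=0.0),                           # cond. 3
        "inequality": max((max(0.0, g(x)) for g in gs), default=0.0),  # cond. 4
        "equality": max((abs(h(x)) for h in hs), default=0.0),         # cond. 5
    }

# Hypothetical example problem
grad_f = lambda x: (2.0 * x[0], 2.0 * x[1])
hs = [lambda x: x[0] + x[1] - 2.0]
grad_hs = [lambda x: (1.0, 1.0)]
gs = [lambda x: 1.5 - x[0]]
grad_gs = [lambda x: (-1.0, 0.0)]

# Candidate worked out by hand: x = (1.5, 0.5), mu = -1, lam = 2
at_solution = kkt_residuals(grad_f, hs, grad_hs, gs, grad_gs,
                            x=(1.5, 0.5), mu=[-1.0], lam=[2.0])
# Minimum of the equality-only problem: violates the inequality constraint
ignoring_g = kkt_residuals(grad_f, hs, grad_hs, gs, grad_gs,
                           x=(1.0, 1.0), mu=[-2.0], lam=[0.0])
print(at_solution)
print(ignoring_g)
```

Returning residual magnitudes rather than booleans is a design choice: it leaves the tolerance to the caller, which is convenient when the candidate comes from an iterative solver.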

You might also like

- Lagrange PDFDocument22 pagesLagrange PDFTrần Mạnh HùngNo ratings yet

- K TtheoryDocument7 pagesK TtheoryMarcelo AlonsoNo ratings yet

- NLP Lecture NoteDocument9 pagesNLP Lecture NoteVinay DuttaNo ratings yet

- Karush Kuhn TuckerDocument14 pagesKarush Kuhn TuckerAVALLESTNo ratings yet

- MS&E 318 (CME 338) Large-Scale Numerical Optimization: Stanford University, Management Science & Engineering (And ICME)Document6 pagesMS&E 318 (CME 338) Large-Scale Numerical Optimization: Stanford University, Management Science & Engineering (And ICME)JerryNo ratings yet

- Baccari2004 - On The Classical Necessary Second-Order Optimality ConditionsDocument9 pagesBaccari2004 - On The Classical Necessary Second-Order Optimality ConditionsvananhnguyenNo ratings yet

- Lecture 6: Optimality Conditions For Nonlinear ProgrammingDocument28 pagesLecture 6: Optimality Conditions For Nonlinear ProgrammingWei GuoNo ratings yet

- Constrained Optimization Rel. 01Document5 pagesConstrained Optimization Rel. 01alessandropuce85No ratings yet

- Operaciones Repaso de OptimizacionDocument21 pagesOperaciones Repaso de OptimizacionMigu3lspNo ratings yet

- Chapter 3Document7 pagesChapter 3Bedboudi Med LamineNo ratings yet

- LA Opti Assignment 53.1 Lagrangian DualityDocument8 pagesLA Opti Assignment 53.1 Lagrangian DualityGandhimathinathanNo ratings yet

- Lecture3 PDFDocument42 pagesLecture3 PDFAledavid Ale100% (1)

- Lagrangian Opt PDFDocument2 pagesLagrangian Opt PDF6doitNo ratings yet

- Andreani2010 - Constant-Rank Condition and Second-Order Constraint QualificationDocument12 pagesAndreani2010 - Constant-Rank Condition and Second-Order Constraint QualificationvananhnguyenNo ratings yet

- Augmented Lagrangian Methods: M Ario A. T. Figueiredo and Stephen J. WrightDocument54 pagesAugmented Lagrangian Methods: M Ario A. T. Figueiredo and Stephen J. WrightJerryNo ratings yet

- KKT Conditions and Duality: March 23, 2012Document36 pagesKKT Conditions and Duality: March 23, 2012chgsnsaiNo ratings yet

- Karush Kuhn Tucker SlidesDocument45 pagesKarush Kuhn Tucker Slidesmatchman6No ratings yet

- The Method of Lagrange MultipliersDocument4 pagesThe Method of Lagrange MultipliersSon HoangNo ratings yet

- Homework 2: Mathematics For AI: AIT2005Document3 pagesHomework 2: Mathematics For AI: AIT2005Anh HoangNo ratings yet

- Convex Module BDocument29 pagesConvex Module Bchinaski06No ratings yet

- Viscosity Solutions For Stochastic Control Problems SeminarDocument68 pagesViscosity Solutions For Stochastic Control Problems SeminarOpalNo ratings yet

- Slides 01Document139 pagesSlides 01supeng huangNo ratings yet

- Wright SlidesDocument232 pagesWright SlidesKun DengNo ratings yet

- Chapter 4: Derivatives of Logarithmic Function Logarithmic FunctionsDocument19 pagesChapter 4: Derivatives of Logarithmic Function Logarithmic Functionsathia solehahNo ratings yet

- DP NoasDocument10 pagesDP Noasapurva.tudekarNo ratings yet

- Operation ResearchDocument23 pagesOperation ResearchTahera ParvinNo ratings yet

- Non Linear Programming ProblemsDocument66 pagesNon Linear Programming Problemsbits_who_am_iNo ratings yet

- Necessary and Sufficient Conditions For Optimality of Primal-Dual PairsDocument17 pagesNecessary and Sufficient Conditions For Optimality of Primal-Dual PairsSamuel Alfonzo Gil BarcoNo ratings yet

- Quantum MechanicsDocument11 pagesQuantum MechanicsEverly NNo ratings yet

- 16.323 Principles of Optimal Control: Mit OpencoursewareDocument3 pages16.323 Principles of Optimal Control: Mit OpencoursewareMohand Achour TouatNo ratings yet

- F (T, X, X, - . - , X F Is A Function Defined On Some Subset of T R, R, R, RDocument6 pagesF (T, X, X, - . - , X F Is A Function Defined On Some Subset of T R, R, R, RPres Asociatie3kNo ratings yet

- Problems Session 5 PDFDocument5 pagesProblems Session 5 PDFsovvalNo ratings yet

- KKT PDFDocument5 pagesKKT PDFTrần Mạnh HùngNo ratings yet

- Summary Notes On Maximization: KC BorderDocument20 pagesSummary Notes On Maximization: KC BorderMiloje ĐukanovićNo ratings yet

- Theory of Constrained OptimizationDocument18 pagesTheory of Constrained OptimizationBro EdwinNo ratings yet

- Equality Constrained Optimization: Daniel P. RobinsonDocument33 pagesEquality Constrained Optimization: Daniel P. RobinsonJosue CofeeNo ratings yet

- Part II: Lagrange Multiplier Method & Karush-Kuhn-Tucker (KKT) ConditionsDocument5 pagesPart II: Lagrange Multiplier Method & Karush-Kuhn-Tucker (KKT) ConditionsagrothendieckNo ratings yet

- Constrained OptimizationDocument9 pagesConstrained OptimizationananyaajatasatruNo ratings yet

- Karush-Kuhn-Tucker Conditions: Geoff Gordon & Ryan Tibshirani Optimization 10-725 / 36-725Document26 pagesKarush-Kuhn-Tucker Conditions: Geoff Gordon & Ryan Tibshirani Optimization 10-725 / 36-725DalionzoNo ratings yet

- Sec Order ProblemsDocument10 pagesSec Order ProblemsyerraNo ratings yet

- Limits and Continuity 2.2. Limit of A Function and Limit LawsDocument6 pagesLimits and Continuity 2.2. Limit of A Function and Limit Lawsblack bloodNo ratings yet

- Solving Dual Problems: 1 M T 1 1 T R RDocument14 pagesSolving Dual Problems: 1 M T 1 1 T R RSamuel Alfonzo Gil BarcoNo ratings yet

- Algebraic and Transcendental Functions: First Year of Engineering Program Department of Foundation YearDocument37 pagesAlgebraic and Transcendental Functions: First Year of Engineering Program Department of Foundation YearRathea UTHNo ratings yet

- Classical Optimization Theory: Constrained Optimization (Equality Constraints)Document22 pagesClassical Optimization Theory: Constrained Optimization (Equality Constraints)Aswini KnairNo ratings yet

- No Calculators. Closed Book and Notes. Write Your Final Answers in The Box If Provided. You May Use The Back of The PagesDocument7 pagesNo Calculators. Closed Book and Notes. Write Your Final Answers in The Box If Provided. You May Use The Back of The PagesLyonChenNo ratings yet

- Excersices Lagrange MultipliersDocument2 pagesExcersices Lagrange MultipliersAlfanum EricosNo ratings yet

- Linear Programming DualityDocument17 pagesLinear Programming DualityDr-Junaid ShajuNo ratings yet

- CMM 2017 01 17Document6 pagesCMM 2017 01 17enrico.michelatoNo ratings yet

- Lecture17 PDFDocument4 pagesLecture17 PDFreshmeNo ratings yet

- Karush-Kuhn-Tucker (KKT) Conditions: Lecture 11: Convex OptimizationDocument4 pagesKarush-Kuhn-Tucker (KKT) Conditions: Lecture 11: Convex OptimizationaaayoubNo ratings yet

- Slack VariableDocument19 pagesSlack VariabletapasNo ratings yet

- Lecture33 PDFDocument6 pagesLecture33 PDFkkkNo ratings yet

- MA-203: Note On Green's Function: April 19, 2020Document10 pagesMA-203: Note On Green's Function: April 19, 2020Vishal MeenaNo ratings yet

- L31 - Non-Linear Programming Problems - Unconstrained Optimization - KKT ConditionsDocument48 pagesL31 - Non-Linear Programming Problems - Unconstrained Optimization - KKT ConditionsNirmit100% (1)

- Hamilton-Jacobi-Bellman Equations and Optimal Control: !birkh Auser Verlag BaselDocument10 pagesHamilton-Jacobi-Bellman Equations and Optimal Control: !birkh Auser Verlag BaselMaraNo ratings yet

- Mathematics For Economics (ECON 104)Document51 pagesMathematics For Economics (ECON 104)Experimental BeXNo ratings yet

- Lecture 10Document4 pagesLecture 10Tấn Long LêNo ratings yet

- Nonlinear Programming: Chemical Engineering Department National Tsing-Hua University Prof. Shi-Shang Jang May, 2003Document33 pagesNonlinear Programming: Chemical Engineering Department National Tsing-Hua University Prof. Shi-Shang Jang May, 2003Maurice MonjereziNo ratings yet

- The Big Theorem On The Frobenius Method, With ApplicationsDocument34 pagesThe Big Theorem On The Frobenius Method, With Applications斎藤 くんNo ratings yet

- The Definitive Guide To Facilitating Remote Workshops (V1.1)Document76 pagesThe Definitive Guide To Facilitating Remote Workshops (V1.1)pericles9100% (1)

- Critical Chain ConceptsDocument16 pagesCritical Chain ConceptsPrateek GargNo ratings yet

- 9 Tips For Writing Useful Requirements: White PaperDocument5 pages9 Tips For Writing Useful Requirements: White PaperHeidi BartellaNo ratings yet

- TOCICO Expanding The World of TOC Pres PubDocument24 pagesTOCICO Expanding The World of TOC Pres PuballiceyewNo ratings yet

- 2 Kendall Zahora JZ Add 23 TOCPA USA 21 22 March 2016 PDFDocument18 pages2 Kendall Zahora JZ Add 23 TOCPA USA 21 22 March 2016 PDFarstNo ratings yet

- Delay OnePageDocument1 pageDelay OnePagearstNo ratings yet

- Using Strategic and Tactic To Make Toc MainstreamDocument5 pagesUsing Strategic and Tactic To Make Toc MainstreamarstNo ratings yet

- Wardley Maps - Simon WardleyDocument709 pagesWardley Maps - Simon Wardleyarst100% (1)

- To C Powerpoint NotebookDocument42 pagesTo C Powerpoint NotebookarstNo ratings yet

- Learning From ONE EventDocument35 pagesLearning From ONE EventarstNo ratings yet

- CH 10 1 (M)Document8 pagesCH 10 1 (M)aishaNo ratings yet

- Lobatto MethodsDocument17 pagesLobatto MethodsPJNo ratings yet

- Classnotes - Coursework of OptimizationDocument14 pagesClassnotes - Coursework of OptimizationHudzaifa AhnafNo ratings yet

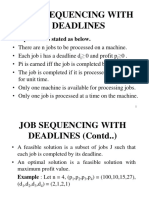

- Job Sequencing With The DeadlineDocument5 pagesJob Sequencing With The DeadlineAbhiNo ratings yet

- CBSE Class 10 Maths Real NumbersDocument1 pageCBSE Class 10 Maths Real Numbersaryan bhanssali100% (1)

- CMR - Transportation ProblemDocument14 pagesCMR - Transportation Problemqry01327No ratings yet

- Finite Element MethodDocument5 pagesFinite Element MethodAhmed ElhewyNo ratings yet

- Lesson 1 Linear ProgrammingDocument77 pagesLesson 1 Linear Programmingasra010110No ratings yet

- Lecture 4: Equality Constrained Optimization: Tianxi WangDocument14 pagesLecture 4: Equality Constrained Optimization: Tianxi Wanggenius_2No ratings yet

- Big M Method: REC, Deapartment of Mathemati Cs 1Document18 pagesBig M Method: REC, Deapartment of Mathemati Cs 1Amarnath ChandranNo ratings yet

- LostFile XLSX 238034304Document566 pagesLostFile XLSX 238034304JHANSEL YAIR MARRUFO CERCADONo ratings yet

- CN 5 Limit of Polynomial and Rational FunctionsDocument2 pagesCN 5 Limit of Polynomial and Rational FunctionsDenise Nicole T. LopezNo ratings yet

- False Position MethodDocument8 pagesFalse Position Methodmuzamil hussainNo ratings yet

- Splines and Piecewise Interpolation: Powerpoints Organized by Dr. Michael R. Gustafson Ii, Duke UniversityDocument17 pagesSplines and Piecewise Interpolation: Powerpoints Organized by Dr. Michael R. Gustafson Ii, Duke UniversityAdil AliNo ratings yet

- Unit 2 (Polynomials) : Exercise 2.1: Multiple Choice Questions (MCQS)Document22 pagesUnit 2 (Polynomials) : Exercise 2.1: Multiple Choice Questions (MCQS)Ivana ShawNo ratings yet

- Digital and Logic Design: Lab-5 Karnaugh-Map, POS & SOPDocument9 pagesDigital and Logic Design: Lab-5 Karnaugh-Map, POS & SOPsaadNo ratings yet

- MA 214: Introduction To Numerical Analysis: Shripad M. Garge. IIT Bombay (Shripad@math - Iitb.ac - In)Document71 pagesMA 214: Introduction To Numerical Analysis: Shripad M. Garge. IIT Bombay (Shripad@math - Iitb.ac - In)nrjttnrjNo ratings yet

- Assignment - 2 Numerical Solutions of Ordinary and Partial Differential EquationsDocument1 pageAssignment - 2 Numerical Solutions of Ordinary and Partial Differential EquationsManish MeenaNo ratings yet

- M33 PDFDocument5 pagesM33 PDFLehoang HuyNo ratings yet

- 5 Linear ProgDocument44 pages5 Linear ProgLeonardo JacobinaNo ratings yet

- Exercise - Types of Algebraic ExpressionsDocument4 pagesExercise - Types of Algebraic ExpressionsNarayanamurthy AmirapuNo ratings yet

- Clicker Question Bank For Numerical Analysis (Version 1.0 - May 14, 2020)Document91 pagesClicker Question Bank For Numerical Analysis (Version 1.0 - May 14, 2020)Mudasar AbbasNo ratings yet

- Linear Programming Simplex in Matrix Form and The Fundamental InsightDocument151 pagesLinear Programming Simplex in Matrix Form and The Fundamental InsightMichael TateNo ratings yet

- Constraint Satisfaction Problems: Artificial Intelligence COSC-3112 Ms. Humaira AnwerDocument15 pagesConstraint Satisfaction Problems: Artificial Intelligence COSC-3112 Ms. Humaira AnwerMUHAMMAD ALINo ratings yet

- Module B SolutionsDocument13 pagesModule B SolutionsQaisar ShafiqueNo ratings yet

- Bab-2 Linear Algebraic EquationsDocument51 pagesBab-2 Linear Algebraic EquationsNata CorpNo ratings yet

- Taylor and Maclaurin SeriesDocument38 pagesTaylor and Maclaurin SeriescholisinaNo ratings yet

- Session 5Document40 pagesSession 5Gilbert AggreyNo ratings yet

- Lecture Notes 3Document79 pagesLecture Notes 3Hussain AldurazyNo ratings yet

- Karush-Kuhn-Tucker (KKT) Conditions: Lecture 11: Convex OptimizationDocument4 pagesKarush-Kuhn-Tucker (KKT) Conditions: Lecture 11: Convex OptimizationaaayoubNo ratings yet