6. Control System Stability - Notes (24 pages)

This document discusses stability analysis of feedback control systems using modern control theory. It provides an outline of topics covered, including an overview of feedback control, state-space analysis, stability definitions, types of stability (internal and bounded-input bounded-output), stability of linear time-invariant systems, and stability analysis using Lyapunov's direct method. Examples are also given to illustrate key concepts like stability definitions, Lyapunov function generation for linear systems, and determining stability conditions.

Uploaded by VeNkat Seshamsetti

Copyright © All Rights Reserved


- Introduction to Modern Control Theory: Provides an introductory overview of the Modern Control Theory course, including contact information and visual illustrations of control applications.

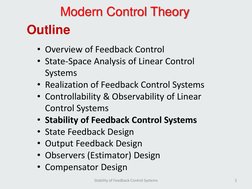

- Outline of Modern Control Theory: Lists the major topics that will be covered in the course, such as feedback control and system design methodologies.

- References: Provides a list of textbooks and resources for further reading and study in Modern Control Systems.

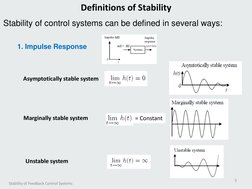

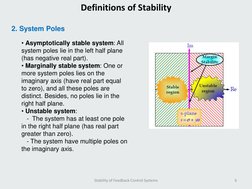

- Stability of Feedback Control Systems: Begins the discussion on control system stability, covering various types of stability and introductory concepts.

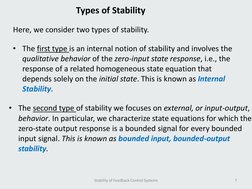

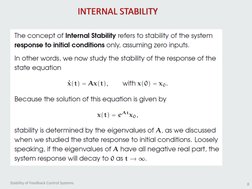

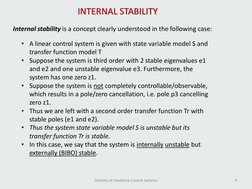

- Internal Stability: Details the concept of internal stability and its relevance to system response to initial conditions.

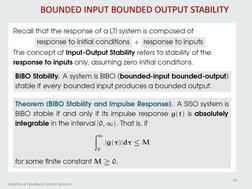

- Bounded Input, Bounded Output Stability: Explores the conditions and implications of bounded input, bounded output (BIBO) stability in control systems.

- Stability of Linear Time Invariant Systems: Analyzes the stability criteria for linear time-invariant systems based on system matrix properties.

- Stability by the Direct Method of Lyapunov: Introduces the direct method of Lyapunov for assessing stability, including theory and applications.
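
The last two topics in the outline connect through a standard result: a linear time-invariant system $\dot{x} = Ax$ is asymptotically stable exactly when every eigenvalue of $A$ has a negative real part, and in that case, for any symmetric positive definite $Q$, the Lyapunov equation $A^T P + P A = -Q$ has a unique symmetric positive definite solution $P$, which gives the Lyapunov function $V(x) = x^T P x$. The following is a minimal sketch of both checks for a 2x2 system, using only the Python standard library. The function names, the example matrix, and the choice $Q = I$ are illustrative assumptions and are not taken from the notes.

```python
from fractions import Fraction


def is_hurwitz_2x2(A):
    """True if both eigenvalues of the 2x2 matrix A have negative real part."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    # Characteristic polynomial: s^2 - trace*s + det.
    # Both roots lie in the open left half-plane iff trace < 0 and det > 0.
    return trace < 0 and det > 0


def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def solve_lyapunov_2x2(A, Q):
    """Solve A^T P + P A = -Q for symmetric P = [[p1, p2], [p2, p3]].

    Q is assumed symmetric. Exact rational arithmetic is used throughout.
    """
    (a, b), (c, d) = [[Fraction(x) for x in row] for row in A]
    q11, q12, q22 = Fraction(Q[0][0]), Fraction(Q[0][1]), Fraction(Q[1][1])
    # Entries (1,1), (1,2), (2,2) of A^T P + P A, written as M @ [p1, p2, p3].
    M = [[2 * a, 2 * c, Fraction(0)],
         [b, a + d, c],
         [Fraction(0), 2 * b, 2 * d]]
    rhs = [-q11, -q12, -q22]
    D = det3(M)
    if D == 0:
        raise ValueError("Lyapunov equation has no unique solution for this A")
    # Cramer's rule: replace column k of M with the right-hand side.
    sol = []
    for k in range(3):
        Mk = [row[:] for row in M]
        for i in range(3):
            Mk[i][k] = rhs[i]
        sol.append(det3(Mk) / D)
    p1, p2, p3 = sol
    return [[p1, p2], [p2, p3]]


def is_positive_definite_2x2(P):
    """Sylvester's criterion for a symmetric 2x2 matrix."""
    return P[0][0] > 0 and P[0][0] * P[1][1] - P[0][1] * P[1][0] > 0


# Illustrative example (not from the notes): eigenvalues of A are -1 and -2.
A_example = [[0, 1], [-2, -3]]
Q_identity = [[1, 0], [0, 1]]
P_example = solve_lyapunov_2x2(A_example, Q_identity)
# P_example is [[5/4, 1/4], [1/4, 1/4]], which is positive definite.
```

For larger systems, a numerical library would normally replace the hand-rolled 2x2 solver. The exact arithmetic here only serves to keep the worked example easy to check by hand.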