Python GTU Study Material Presentations Unit-5 20112020032922AM

Uploaded by Kushal Parmar

Python for Data Science (PDS) (3150713)

Unit-05

Data Wrangling

Prof. Arjun V. Bala

Computer Engineering Department

Darshan Institute of Engineering & Technology, Rajkot

arjun.bala@darshan.ac.in

9624822202

Outline

Looping

Classes in Scikit-learn library

Applications of Data Science

Hashing Trick

Deterministic Selection

Considering Timing and Performance

Parallel Processing

The EDA Approach

Understanding Correlation

Modifying Data Distributions

Classes in Scikit-learn

Understanding how classes work is an important prerequisite for using the Scikit-learn package appropriately.

Scikit-learn is the package for machine learning and data science experimentation favored by most data scientists.

It contains a wide range of well-established learning algorithms, error functions, and testing procedures.

Install: conda install scikit-learn

There are four class types covering all the basic machine-learning functionalities:

Classifying

Regressing

Grouping by clusters

Transforming data

Even though each base class has specific methods and attributes, the core functionalities for data processing and machine learning are guaranteed by common sets of methods and attributes.
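The four class types can be seen directly in the library. The sketch below is only an illustration: it assumes that the mixin classes in sklearn.base (ClassifierMixin, RegressorMixin, ClusterMixin, TransformerMixin) correspond to the four types listed above, and the four estimators are arbitrary picks, not ones named in this unit.

```python
from sklearn.base import ClassifierMixin, RegressorMixin, ClusterMixin, TransformerMixin
from sklearn.cluster import KMeans
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.preprocessing import StandardScaler

# One example estimator per class type (arbitrary choices for illustration)
estimators = {
    "classifying": (LogisticRegression(), ClassifierMixin),
    "regressing": (LinearRegression(), RegressorMixin),
    "grouping by clusters": (KMeans(), ClusterMixin),
    "transforming data": (StandardScaler(), TransformerMixin),
}

# Check that each estimator belongs to the expected class type
roles = {name: isinstance(est, mixin) for name, (est, mixin) in estimators.items()}

# Whatever the type, every estimator exposes a fit() method
has_fit = all(hasattr(est, "fit") for est, _ in estimators.values())

print(roles)
print(has_fit)
```

The shared fit() method is what lets the same workflow apply to every kind of model.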

Prof. Arjun V. Bala #3150713 (PDS) Unit 05 – Data Wrangling 3

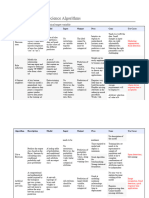

Choosing a correct algorithm in Scikit-learn

[Figure: Scikit-learn algorithm-selection flowchart. From Start, the chart checks whether there are more than 50 samples (if not, get more data), then asks whether you are predicting a category, whether you have labeled data, and whether you are predicting a quantity, and branches further on the number of samples (<10K, <100K). Estimators named in the chart: Classification (SGD Classifier, Linear SVC, kernel approximation, Naïve Bayes for text data, KNeighbors Classifier, SVC, Ensemble Classifiers); Regression (SGD Regressor, Lasso, ElasticNet, RidgeRegressor, SVR(kernel='linear'), SVR(kernel='rbf'), EnsembleRegressor); Clustering (KMeans, MiniBatch KMeans, Spectral Clustering, GMM, MeanShift, VBGMM); Dimensionality Reduction (Randomized PCA, Isomap, Spectral Embedding, LLE, kernel approximation).]


Supervised V/S Unsupervised Learning

Supervised Learning

Supervised learning algorithms are trained using labeled data.

A supervised learning model takes direct feedback to check whether it is predicting the correct output.

The goal of supervised learning is to train the model so that it can predict the output when it is given new data.

Supervised learning can be categorized into Classification and Regression problems.

A supervised learning model generally produces more accurate results.

It includes various algorithms such as Linear Regression, Logistic Regression, Support Vector Machine, Multi-class Classification, Decision Tree, Bayesian Logic, etc.

Unsupervised Learning

Unsupervised learning algorithms are trained using unlabeled data.

An unsupervised learning model does not take any feedback.

The goal of unsupervised learning is to find hidden patterns and useful insights in an unknown dataset.

Unsupervised learning can be classified into Clustering and Association problems.

An unsupervised learning model may give less accurate results than supervised learning.

It includes various algorithms such as Clustering (for example, K-means) and the Apriori algorithm.


Machine Learning Process for Supervised Learning

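[Figure: supervised learning workflow. Collecting data, cleaning data, and splitting data into training data and testing data; the model is trained on the training data and tested on the testing data; the model parameters are updated as needed before deployment.]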


Basic Structure of Scikit-Learn

Step – 01 (import)

from sklearn.family import Model

Example: from sklearn.linear_model import LinearRegression

Step – 02 (create object)

m = Model(parameters)

Example: lr = LinearRegression()

Step – 03 (split data) (Only For Supervised Learning)

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

Step – 04 (Train Model)

m.fit(X_train, y_train) # For Supervised Learning

OR

m.fit(X_train) # For Unsupervised Learning

Step – 05 (Predict) (Only For Supervised Learning)

predictions = m.predict(X_test)

Step – 06 (Evaluate Model)

• Evaluate the model and update the parameters if required.

• The evaluation technique differs for regression, classification, and clustering.

• We will explore all the techniques in later sessions.

Example Model Evaluation for Regression

from sklearn import metrics

print(metrics.mean_squared_error(y_test, predictions))
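The six steps can be put together into one runnable sketch. The data here is synthetic and purely an assumption for illustration (a noise-free line y = 3x + 2), and random_state=0 is added only to make the split repeatable.

```python
import numpy as np
from sklearn import metrics
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic data (assumption for illustration): y = 3x + 2 with no noise
X = np.arange(20, dtype=float).reshape(-1, 1)
y = 3 * X.ravel() + 2

# Step 3: hold out 30% of the rows for testing
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Steps 1, 2 and 4: import, create the model object, train on the training rows
lr = LinearRegression()
lr.fit(X_train, y_train)

# Step 5: predict on rows the model has not seen during training
predictions = lr.predict(X_test)

# Step 6: evaluate; the error is close to zero because the data has no noise
mse = metrics.mean_squared_error(y_test, predictions)
print(mse)
```

Predicting on X_test rather than X_train is what makes the error an honest estimate of how the model behaves on new data.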


Linear Regression (Example) (iPhone Prices)

iPhoneLinReg.py (input data: iphones.csv)

import pandas as pd
import matplotlib.pyplot as plt
from sklearn.linear_model import LinearRegression

# In a Jupyter notebook, run "%matplotlib inline" first to show plots inline.

# Load the data; the 'iphonenumber' and 'price' columns are used below
df = pd.read_csv('iphones.csv')

lr = LinearRegression()
X = df['iphonenumber'].values.reshape(-1, 1)  # scikit-learn expects a 2-D feature array
y = df['price']

lr.fit(X, y)

print(lr.predict([[14]]))  # price for the next iPhone

predicted = lr.predict(X)  # fitted prices for the known models

plt.scatter(df['iphonenumber'], df['price'], c='r')  # actual prices (red)
plt.scatter(df['iphonenumber'], predicted, c='b')    # predicted prices (blue)
plt.show()


Support Vector Regression (SVR) (Example)

Input data: WorldBank.csv

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.svm import SVR

# In a Jupyter notebook, run "%matplotlib inline" first to show plots inline.

df = pd.read_csv('WorldBank.csv')

# Keep the row for the working-age population indicator
pop15 = df[df['Indicator Name'] == 'Population ages 15-64 (% of total population)']

X = np.arange(1960, 2020)   # years 1960 to 2019
y = pop15.values[0][4:-1]   # yearly values: skip the first four columns and the last one

model = SVR()
model.fit(X.reshape(-1, 1), y)

predictPoly = model.predict(X.reshape(-1, 1))

plt.scatter(X, y, c='r')            # actual values (red)
plt.scatter(X, predictPoly, c='b')  # SVR predictions (blue)
plt.show()


Bag of Words Model (Topic from Unit-03)

Before we can perform analysis on textual data, we must tokenize every word within the dataset.

The act of tokenizing the words creates a bag of words.

The creation of a bag of words revolves around Natural Language Processing (NLP).

Before we can do NLP, we should perform the processes specified below:

Remove special characters

Such as HTML tags and special characters

Remove the stop words

Some of the English stop words are a, an, the, are, at, this, is, etc.

Stemming words

Stemming a word means reducing it to its stem by removing affixes (mostly suffixes).

Example: Playing, Plays, and Played all reduce to the stem Play.
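The three preprocessing steps can be sketched in plain Python. Everything named here is hypothetical and simplified for illustration: STOP_WORDS is only the short list from the text above, and strip_suffix is a naive stand-in for a real stemmer, which a production pipeline would take from an NLP library.

```python
import re

# Small illustrative stop-word list (the examples given in the text)
STOP_WORDS = {"a", "an", "the", "are", "at", "this", "is"}


def strip_suffix(word):
    """Naive stemmer for illustration only: drops a few common suffixes."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    text = re.sub(r"<[^>]+>", " ", text)      # remove HTML tags
    text = re.sub(r"[^a-zA-Z\s]", " ", text)  # remove special characters
    tokens = text.lower().split()             # tokenize on whitespace
    tokens = [t for t in tokens if t not in STOP_WORDS]  # remove stop words
    return [strip_suffix(t) for t in tokens]  # stem what is left


tokens = preprocess("<p>This is the team: playing, plays & played!</p>")
print(tokens)
```

The three inflected forms end up as the same token, which is the point of stemming: they are counted as one word in the bag.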


Hashing Trick

Most machine learning algorithms use numeric inputs. If our data contains text instead, we need to convert that text into numeric values first; this can be done using the hashing trick.

For example, suppose our dataset looks like the table below, where the Gender_Numeric column shows the Gender column in numeric form.

Id  Salary  Gender  Gender_Numeric
1   10000   Male    1
2   15000   Female  0
3   12000   Male    1
4   11000   Female  0
5   20000   Male    1

We cannot apply machine learning algorithms to text such as Male/Female; we need numeric values instead. One thing we can do here is assign a number to each word and replace the word with that number.

If we assign 1 to Male and 0 to Female, we can use ML algorithms; the Gender_Numeric column above holds the resulting values.
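The replacement described above can be sketched with pandas. The DataFrame reproduces the table from this slide; the gender_codes dictionary is a name introduced here for illustration.

```python
import pandas as pd

# The example table from the slide
df = pd.DataFrame({
    "Id": [1, 2, 3, 4, 5],
    "Salary": [10000, 15000, 12000, 11000, 20000],
    "Gender": ["Male", "Female", "Male", "Female", "Male"],
})

# Assign a number to each word, then replace the word with that number
gender_codes = {"Male": 1, "Female": 0}
df["Gender_Numeric"] = df["Gender"].map(gender_codes)

print(df)
```

A fixed dictionary works when the set of words is small and known in advance; the next slides deal with free text, where it is not.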


Hashing Trick (Cont.)

In machine learning, feature hashing, also known as the hashing trick, is a fast and space-efficient way of vectorising features, i.e. turning arbitrary features into indices in a vector or matrix.

When dealing with text, one of the most useful solutions provided by the Scikit-learn package is the hashing trick.

Example:

Input                                               Numeric Representation
darshan is the best engineering college in rajkot   1 2 3 4 5 6 7 8
rajkot is famous for engineering                    8 2 9 10 5
college is located in rajkot morbi road             6 2 11 7 8 12 13

Word index:

1 darshan, 2 is, 3 the, 4 best, 5 engineering, 6 college, 7 in, 8 rajkot, 9 famous, 10 for, 11 located, 12 morbi, 13 road

Resulting matrix (one row per input sentence, one column per word index 1 to 13):

1 1 1 1 1 1 1 1 0 0 0 0 0
0 1 0 0 1 0 0 1 1 1 0 0 0
0 1 0 0 0 1 1 1 0 0 1 1 1
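To make the idea of "turning features into indices" concrete, here is a minimal pure-Python sketch of feature hashing. It is not scikit-learn's implementation (the library offers this through its HashingVectorizer class); the helper names hash_index and hash_vectorize, the choice of md5, and the vector size of 16 are all assumptions made for illustration.

```python
import hashlib


def hash_index(token, n_features):
    """Map a token to a column index using a stable hash (md5)."""
    digest = hashlib.md5(token.encode("utf-8")).hexdigest()
    return int(digest, 16) % n_features


def hash_vectorize(text, n_features=16):
    """Count tokens into a fixed-size vector without storing a vocabulary."""
    vector = [0] * n_features
    for token in text.lower().split():
        vector[hash_index(token, n_features)] += 1
    return vector


docs = [
    "darshan is the best engineering college in rajkot",
    "rajkot is famous for engineering",
    "college is located in rajkot morbi road",
]

vectors = [hash_vectorize(doc) for doc in docs]
for vector in vectors:
    print(vector)
```

Unlike the word-index table above, no dictionary of words is kept: the index is computed from the word itself, so new words need no refitting. The trade-off is that two different words can land in the same column (a collision), which becomes less likely as the vector grows.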


Implementing Hashing Trick

hashTrick.py

from sklearn.feature_extraction.text import CountVectorizer

a = ["darshan is the best engineering college in rajkot",
     "rajkot is famous for engineering",
     "college is located in rajkot morbi road"]

countVector = CountVectorizer()

# Learn the vocabulary and count each word per sentence
X_train_counts = countVector.fit_transform(a)

print(countVector.vocabulary_)   # word -> column index
print(X_train_counts.toarray())  # one row per sentence

Output

{'darshan': 2, 'is': 7, 'the': 12, 'best': 0, 'engineering': 3, 'college': 1, 'in': 6, 'rajkot': 10, 'famous': 4, 'for': 5, 'located': 8, 'morbi': 9, 'road': 11}

array([[1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1],
       [0, 0, 0, 1, 1, 1, 0, 1, 0, 0, 1, 0, 0],
       [0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0]],
      dtype=int64)


CountVectorizer

CountVectorizer has many parameters that are very useful when dealing with textual data. Some of the important parameters are:

max_features

Builds a vocabulary that only considers the top max_features terms, ordered by term frequency across the corpus.

stop_words

Removes the stop words. The default is None; we can set it to 'english' if we want to remove English stop words.

ngram_range

The lower and upper boundary of the range of n-values for the different word n-grams or char n-grams to be extracted.

hashTrick.py

from sklearn.feature_extraction.text import CountVectorizer

a = ["darshan is the best engineering college in rajkot",
     "rajkot is famous for engineering",
     "college is located in rajkot morbi road"]

cv1 = CountVectorizer(stop_words="english")  # drop English stop words
cv2 = CountVectorizer(ngram_range=(2, 2))    # two-word n-grams (bigrams) only
X_train_1 = cv1.fit_transform(a)
X_train_2 = cv2.fit_transform(a)

print(cv1.vocabulary_)
print(cv2.vocabulary_)

Output

{'darshan': 2, 'best': 0, 'engineering': 3, ……… , 'morbi': 6, 'road': 8}

{'darshan is': 3, 'is the': 10, 'the best': 15, 'best engineering': 0, ………… , 'is located': 9, 'located in': 11, 'rajkot morbi': 14, 'morbi road': 12}
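The max_features parameter is described above but not exercised in the slide's code, so here is a small sketch on the same three sentences. The variable names cv3 and top_terms are introduced here for illustration.

```python
from sklearn.feature_extraction.text import CountVectorizer

a = ["darshan is the best engineering college in rajkot",
     "rajkot is famous for engineering",
     "college is located in rajkot morbi road"]

# Keep only the two most frequent terms across the corpus
cv3 = CountVectorizer(max_features=2)
cv3.fit(a)

# 'is' and 'rajkot' each occur three times, more often than any other word
top_terms = sorted(cv3.vocabulary_)
print(top_terms)
```

Capping the vocabulary this way keeps the resulting matrix narrow, at the cost of ignoring rarer words.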


timeit (Magic Command in Jupyter Notebook)

We can find the time taken to execute a statement or a cell in a Jupyter Notebook with the help of the timeit magic command.

This command can be used both as a line and cell magic:

In line mode you can time a single-line statement (though multiple ones can be chained using semicolons).

In cell mode, the statement in the first line is used as setup code (executed but not timed) and the body of the cell is timed. The cell body has access to any variables created in the setup code.

Syntax:

Line: %timeit [-n<N> -r<R> [-t|-c] -q -p<P> -o] statement

Cell: %%timeit [-n<N> -r<R> [-t|-c] -q -p<P> -o] setup_code

Here, the -n flag sets the number of loops per run and the -r flag sets the number of repeats.

Example :
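The magic command only works inside IPython or a Jupyter Notebook, so the sketch below uses the standard library's timeit module, which takes the same two settings as the number and repeat arguments. The timed statement is an arbitrary choice for illustration.

```python
import timeit

# Notebook equivalent (IPython only):
#   %timeit -n 1000 -r 3 sum(range(100))

# number = loops per run (-n), repeat = how many runs (-r)
times = timeit.repeat("sum(range(100))", number=1000, repeat=3)

# Each entry is the total time for 1000 loops; report the best run per loop
best = min(times) / 1000
print(f"best of 3: {best:.2e} s per loop")
```

Taking the minimum over the repeats is the usual way to read such timings, since slower runs mostly reflect other activity on the machine.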


Memory Profiler

This is a Python module for monitoring the memory consumption of a process, as well as for line-by-line analysis of memory consumption in Python programs.

Installation: pip install memory_profiler

Usage:

First, load the profiler by running %load_ext memory_profiler in the notebook.

Then prefix each statement whose memory usage you want to monitor with %memit.

Example :
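The %memit magic needs IPython and the memory_profiler package, so it is shown only as comments below. As a stand-in that runs anywhere, the sketch uses the standard library's tracemalloc module instead. This is a different tool: it traces memory allocated by Python objects rather than the memory of the whole process, so its numbers will not match %memit exactly.

```python
import tracemalloc

# Notebook usage described in the text (IPython only):
#   %load_ext memory_profiler
#   %memit data = [i * 2 for i in range(100_000)]

# Stand-in using the standard library: trace Python allocations
tracemalloc.start()
data = [i * 2 for i in range(100_000)]
current, peak = tracemalloc.get_traced_memory()  # bytes held now, bytes at the peak
tracemalloc.stop()

print(f"current: {current / 1024:.0f} KiB, peak: {peak / 1024:.0f} KiB")
```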


Running in Parallel

Most computers today are multicore (two or more processors in a single package), and some have multiple physical CPUs.

One of the most important limitations of Python is that it uses a single core by default. (It was created at a time when single cores were the norm.)

Data science projects require quite a lot of computation.

Part of the scientific aspect of data science relies on repeated tests and experiments on different data matrices.

The data size may also be huge, which takes a lot of processing power.

Using more CPU cores accelerates a computation by a factor that almost matches the number of cores.

For example, having four cores would mean working at best four times faster.

In practice we will not get processing that is four times faster, because assigning the different cores to different processes takes some amount of time.

So parallel processing works best with huge datasets or where extreme processing power is required.


Running in Parallel (Cont.)

In the Scikit-learn library we do not need any special programming to do parallel processing (multiprocessing); we can simply use the n_jobs parameter.

If we set n_jobs to a number greater than 1, it will use that many processors; if we set n_jobs to -1, it will use all available processors in the system.

Let's see an example of how we can achieve parallel processing in the Scikit-learn library.

Using Single Processor

Using Multi Processor
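A minimal sketch of the two settings follows. The dataset is synthetic and the choice of RandomForestClassifier is an assumption made for illustration; it is simply one of the estimators that accepts n_jobs. In a notebook, prefixing each fit call with %timeit would show the difference in running time.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data (assumption for illustration)
X, y = make_classification(n_samples=500, n_features=20, random_state=0)

# Using a single processor
single = RandomForestClassifier(n_estimators=50, n_jobs=1, random_state=0)
single.fit(X, y)

# Using all available processors
multi = RandomForestClassifier(n_estimators=50, n_jobs=-1, random_state=0)
multi.fit(X, y)

# n_jobs changes how the work is scheduled, not what the model learns
acc_single = single.score(X, y)
acc_multi = multi.score(X, y)
print(acc_single, acc_multi)
```

On a dataset this small the parallel version may not be faster at all, which matches the earlier point that parallel processing pays off mainly on large workloads.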


Exploratory Data Analysis (EDA)

Exploratory Data Analysis (EDA) refers to the critical process of performing initial investigations on data so as to discover patterns, spot anomalies, test hypotheses, and check assumptions with the help of summary statistics and graphical representations.

EDA was developed at Bell Labs by John Tukey, a mathematician and statistician who wanted to promote more questions and actions on data based on the data itself.

In one of his famous writings, Tukey said:

“The only way humans can do BETTER than computers is to take a chance of doing WORSE than them.”

The statement above explains why, as a data scientist, your role and tools aren’t limited to automatic learning algorithms but also include manual and creative exploratory tasks.

Computers are unbeatable at optimizing, but humans are strong at discovery by taking unexpected routes and trying unlikely but very effective solutions.


EDA (cont.)

With EDA we:

Describe data

Closely explore data distributions

Understand the relationships between variables

Notice unusual or unexpected situations

Place the data into groups

Notice unexpected patterns within the group

Take note of group differences


EDA - Measuring Central Tendency

We can use many built-in pandas functions to describe the central tendency and spread of numerical data. Some of the functions are:

df.mean()

df.median()

df.std()

df.max()

df.min()

df.quantile(np.array([0,0.25,0.5,0.75,1]))

We can assess how close the data is to a normal distribution using the measures below:

Skewness measures the asymmetry of the data with respect to the mean.

It is negative if the left tail is longer.

It is positive if the right tail is longer.

It is zero if the left and right tails are the same.

Kurtosis shows whether the data distribution, especially the peak and the tails, is of the right shape.

It is positive if the distribution has a marked peak.

It is negative if the distribution is too flat.

It is zero for a normal distribution (pandas reports kurtosis relative to the normal distribution).
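These measures can be tried on two tiny series. The numbers are made up for illustration: one series is symmetric, the other has a long right tail. The skew() and kurt() methods are the pandas functions for skewness and kurtosis.

```python
import pandas as pd

# Made-up data for illustration
symmetric = pd.Series([1, 2, 3, 4, 5])
right_tailed = pd.Series([1, 1, 1, 2, 10])

# Central tendency and spread
print(symmetric.mean(), symmetric.median(), symmetric.std())
print(symmetric.quantile([0, 0.25, 0.5, 0.75, 1]).tolist())

# Skewness: about zero for the symmetric series, positive for the long right tail
skew_symmetric = symmetric.skew()
skew_right = right_tailed.skew()

# Kurtosis: positive here because one extreme value creates a heavy tail
kurt_right = right_tailed.kurt()

print(skew_symmetric, skew_right, kurt_right)
```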


Obtaining the frequencies

We can use the pandas built-in function value_counts() to obtain the frequency of categorical variables.

valueCount.py

print(df["Sex"].value_counts())

Output

male 577
female 314
Name: Sex, dtype: int64

We can also use another pandas built-in function, describe(), to obtain summary statistics of the data.

valueCount.py

print(df.describe())
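Both functions can be tried without the original dataset. The small DataFrame below is made up for illustration; only the column name Sex is taken from the slide.

```python
import pandas as pd

# Made-up data for illustration
df = pd.DataFrame({
    "Sex": ["male", "female", "male", "male", "female"],
    "Age": [22, 38, 26, 35, 27],
})

# Frequency of each category
counts = df["Sex"].value_counts()
print(counts)

# Count, mean, std, min, quartiles and max of the numeric columns
summary = df.describe()
print(summary)
```

By default describe() summarises only the numeric columns, so Sex does not appear in its output here.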


Visualization for EDA

We can use many types of graphs; some of them are listed below:

If we want to show how various data elements contribute towards a whole, we should use a pie chart.

If we want to compare data elements, we should use a bar chart.

If we want to show the distribution of elements, we should use histograms.

If we want to depict groups in elements, we should use boxplots.

If we want to find patterns in data, we should use scatterplots.

If we want to display trends over time, we should use a line chart.

If we want to display geographical data, we should use basemap.

If we want to display a network, we should use networkx.

In addition to all these graphs, we can also use the seaborn library to plot advanced graphs such as:

Countplot

Heatmap

Distplot

Pairplot


Covariance and Correlation

Covariance

Covariance is a measure used to determine how much two variables change in tandem.

The unit of covariance is the product of the units of the two variables.

Covariance is affected by a change in scale. The value of covariance lies between -∞ and +∞.

Pandas has a built-in DataFrame function named cov() to find the covariance.

Correlation

The correlation between two variables is a normalized version of the covariance.

The value of the correlation coefficient is always between -1 and 1.

Once we’ve normalized the metric to the -1 to 1 scale, we can make meaningful statements and compare correlations.

covCorr.py

print(df.cov())
print(df.corr())
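The effect of scale can be checked with a short sketch. The height and weight values are made up for illustration. The same heights are expressed in metres and in centimetres; correlation is the covariance divided by the product of the two standard deviations, so the change of unit cancels out.

```python
import pandas as pd

# Made-up data for illustration
df = pd.DataFrame({
    "height_m": [1.5, 1.6, 1.7, 1.8, 1.9],
    "weight_kg": [50, 58, 63, 72, 80],
})
df["height_cm"] = df["height_m"] * 100  # same heights, different unit

# Covariance changes with the unit of measurement
cov_m = df["height_m"].cov(df["weight_kg"])
cov_cm = df["height_cm"].cov(df["weight_kg"])

# Correlation does not
corr_m = df["height_m"].corr(df["weight_kg"])
corr_cm = df["height_cm"].corr(df["weight_kg"])

print(cov_m, cov_cm)
print(corr_m, corr_cm)
```

This is why correlations can be compared across pairs of variables while covariances cannot.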
