Lecture 1 Introduction To PDC


Parallel and Distributed Computing

CS 3006 (BCS-7A | BDS-7A)

Lecture 1

Danyal Farhat

FAST School of Computing

NUCES Lahore

Introduction to Parallel and Distributed Computing

Motivation for Parallel and Distributed Computing

• Uniprocessors are fast, but:

Some problems require too much computation

Some problems use too much data

Some problems have too many parameters to explore

• For example

Weather simulations, gaming, web servers, code breaking

Need for Parallelism?

• Developing parallel hardware and software has traditionally been

time and effort intensive

• If one is to view this in the context of rapidly improving

uniprocessor speeds, one is tempted to question the need for

parallel computing

• Recent trends in hardware design indicate that uniprocessors may not be able to sustain the rate of realizable performance increments in the future

• This is the result of a number of fundamental physical and computational limitations

• The emergence of standardized parallel programming environments, libraries, and hardware has significantly reduced the time needed to develop parallel solutions

Moore’s Law

• The number of transistors incorporated in a chip approximately doubles every two years (often quoted as every 18 months)
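As a rough worked example of the doubling rule, the sketch below computes the growth factor implied by a fixed doubling period. The function name `growth_factor` and the sample time spans are illustrative assumptions, not figures from the lecture.

```python
def growth_factor(years: float, doubling_period_months: float = 18.0) -> float:
    """Return how many times the transistor count multiplies after `years`,
    assuming it doubles once every `doubling_period_months` months."""
    doublings = (years * 12.0) / doubling_period_months
    return 2.0 ** doublings


# Three years at an 18-month doubling period is two doublings, i.e. 4x.
print(growth_factor(3))

# The same three years at a 24-month period is 1.5 doublings, about 2.83x.
print(round(growth_factor(3, 24), 2))
```

The choice of doubling period matters a great deal over long spans, which is why the exact figure quoted for Moore's Law is worth stating explicitly.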

Moore’s Law (Cont.)

Does doubling the transistors double the speed?

• This was true for single-core chips, but the increase in the number of transistors per processor now comes from multi-core CPUs

• In multi-core CPUs, speed depends on the proper utilization of these parallel resources

Will Moore’s law hold forever?

• Adding multiple cores on a single chip causes heat issues

• Increasing the number of cores may not increase speed, because of inter-process interactions

• Transistors would eventually reach the limits of miniaturization

at atomic levels
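The point that more cores do not automatically mean more speed can be illustrated with a toy cost model. The sketch below assumes that the work divides evenly across cores and that every additional core adds a fixed coordination cost; both the model and the numbers are hypothetical, chosen only to show the shape of the effect.

```python
def speedup(cores: int, serial_time: float = 100.0,
            overhead_per_core: float = 1.0) -> float:
    """Speedup over one core under a toy cost model (an assumption, not a law):
    the work divides evenly, but each extra core adds a fixed interaction cost."""
    parallel_time = serial_time / cores + overhead_per_core * (cores - 1)
    return serial_time / parallel_time


# Speedup first rises with the core count, then falls as overhead dominates.
for p in (1, 2, 10, 100):
    print(p, round(speedup(p), 2))
```

Under these assumed numbers, 10 cores help considerably, while 100 cores are no faster than one, because the interaction cost has grown to match the time saved.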

Need for Parallel and Distributed Computing

• Need for:

Good parallel hardware

Good system software

Good sequential algorithms

Good parallel algorithms

• Parallel and Distributed Systems

Parallel and Distributed Systems

• Parallel and distributed computing is becoming increasingly important

Dual and quad core processors are very common

Up to six and eight cores for each CPU

Multithreading is growing

• Hardware structure or architecture is important for

understanding how much it is possible to speed up beyond a

single CPU

• The capability of compilers to generate efficient code is also very important (e.g., converting a for loop into threads)

• It is always difficult to distinguish between HW and SW

influences
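The idea of converting a for loop into threads can be sketched by hand. The example below is a minimal illustration using Python's standard `concurrent.futures` module; the `square` function and the input data are hypothetical. In CPython the global interpreter lock generally prevents threads from speeding up CPU-bound pure-Python work, so this shows only the structure of the transformation, not a performance gain.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x: int) -> int:
    """Loop body: independent of every other iteration."""
    return x * x


data = list(range(8))

# Sequential version: a plain for loop.
sequential = []
for x in data:
    sequential.append(square(x))

# Threaded version: the same loop body is handed to a pool of worker threads.
# Executor.map preserves input order, so the results line up with `data`.
with ThreadPoolExecutor(max_workers=4) as pool:
    threaded = list(pool.map(square, data))

print(threaded == sequential)
```

The transformation is only safe because the iterations do not depend on one another, which is the property a parallelizing compiler has to establish before it can do the same thing automatically.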

Distributed System vs Distributed Computing

Distributed Systems:

• “A collection of autonomous computers, connected

through a network and distribution middleware.”

• Enables computers to coordinate their activities and to

share the resources of the system

• The system is usually perceived as a single, integrated

computing facility

• Mostly concerned with hardware-based acceleration

Distributed System vs Distributed Computing (Cont.)

Distributed Computing:

• “A specific use of distributed systems to split a large and

complex processing into subparts and execute them in

parallel to increase the productivity.”

• Mainly concerned with software-based acceleration (i.e., designing and implementing algorithms)
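Splitting a large job into subparts and combining the partial results can be sketched as follows. This is a single-machine stand-in: worker threads play the role of the separate computers, and the helper names `split` and `distributed_sum` are hypothetical. In a real distributed system each chunk would be sent to a different node over the network.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, parts):
    """Split `items` into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def distributed_sum(items, workers=4):
    """Sum `items` by summing each chunk separately, then combining."""
    chunks = split(items, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunks))  # one subpart per worker
    return sum(partial_sums)                        # combine step


print(distributed_sum(list(range(1, 101))))  # 5050
```

The pattern has two parts: a decomposition step that produces independent subproblems, and a combine step that merges their results.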

Parallel vs Distributed Computing

Parallel Computing:

• The term is usually used for developing concurrent solutions for the following two types of systems:

Multi-core architectures

Many-core architectures (e.g., GPUs)

Distributed Computing:

• The type of computing mainly concerned with developing algorithms for distributed cluster systems

• Here, distributed means that the computers are geographically separated and do not share any memory

Parallel vs Distributed Computing (Cont.)

Parallel Computing:

• Shared memory

• Multiprocessors

• Processors share logical address spaces

• Processors share physical memory

• Also refers to the study of parallel algorithms

Distributed Computing:

• Distributed memory

• Clusters and networks of workstations

• Processors do not share address space

• No sharing of physical memory

• Study of theoretical distributed algorithms or neural networks
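The contrast between shared memory and distributed memory can be sketched with two small programming styles. In the example below, the shared-memory workers all update one variable under a lock, while the message-passing workers keep private data and communicate only by sending a result. Everything runs as threads on one machine, so the second half merely imitates a distributed-memory system; the function names are hypothetical.

```python
import queue
import threading

# Shared-memory style: every worker updates the same variable, guarded by a lock.
counter = {"value": 0}
lock = threading.Lock()


def shared_worker(amount: int) -> None:
    with lock:
        counter["value"] += amount


def run_shared(amounts):
    counter["value"] = 0
    threads = [threading.Thread(target=shared_worker, args=(a,)) for a in amounts]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter["value"]


# Message-passing style: each worker owns its data and only sends a result.
def message_worker(private_data, outbox: queue.Queue) -> None:
    outbox.put(sum(private_data))


def run_message_passing(partitions):
    outbox = queue.Queue()
    threads = [threading.Thread(target=message_worker, args=(p, outbox))
               for p in partitions]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(outbox.get() for _ in partitions)


print(run_shared([1, 2, 3, 4]))
print(run_message_passing([[1, 2], [3, 4], [5]]))
```

In the first style correctness depends on synchronizing access to shared state; in the second there is no shared state to protect, but every exchange of data has to be made explicit.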

Practical Applications of P&D Computing

Scientific Applications:

• Functional and structural characterization of genes and

proteins

• Applications in astrophysics have explored the evolution of galaxies, thermonuclear processes, and the analysis of extremely large datasets from telescopes

Practical Applications of P&D Computing (Cont.)

Scientific Applications:

• Advances in computational physics and chemistry have

explored new materials, understanding of chemical

pathways, and more efficient processes

Example: The Large Hadron Collider (LHC) at the European Organization for Nuclear Research (CERN) generates petabytes of collision data

• Weather modeling, for example simulating the tracks of natural hazards such as extreme cyclones (storms), and flood prediction

Practical Applications of P&D Computing (Cont.)

Commercial Applications:

• Some of the largest parallel computers power Wall Street!

• Data mining and analysis for optimizing business and marketing decisions

• Large scale servers (mail and web servers) are often

implemented using parallel platforms

• Applications such as information retrieval and search are

typically powered by large clusters

Practical Applications of P&D Computing (Cont.)

Computer Systems Applications:

• Network intrusion detection: a large amount of data needs

to be analyzed and processed

• Cryptography (the art of writing or solving codes) employs parallel infrastructures and algorithms to solve complex codes (e.g., SHA-256)

• Graphics processing – Each pixel represents a binary

• Embedded systems increasingly rely on distributed control algorithms (for example, modern automobiles use embedded systems)
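The cryptography bullet can be made concrete with a small brute-force search. The sketch below splits a keyspace of 4-digit PINs across workers and looks for the PIN whose SHA-256 digest matches a target. The PIN scenario and the function names are hypothetical, and the example uses threads only to show how the keyspace is partitioned; a real attack would run the ranges on separate cores or machines.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def search_range(target_hash: str, start: int, stop: int):
    """Try every 4-digit PIN in [start, stop); return the match or None."""
    for candidate in range(start, stop):
        pin = f"{candidate:04d}"
        if sha256_hex(pin) == target_hash:
            return pin
    return None


def crack_pin(target_hash: str, workers: int = 4):
    """Partition the 10,000 possible PINs into one range per worker."""
    step = 10_000 // workers
    ranges = [(i * step, 10_000 if i == workers - 1 else (i + 1) * step)
              for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(search_range, target_hash, lo, hi)
                   for lo, hi in ranges]
        for future in futures:
            result = future.result()
            if result is not None:
                return result
    return None


print(crack_pin(sha256_hex("4821")))  # 4821
```

Because each range can be searched without reference to the others, this kind of workload parallelizes almost perfectly, which is why code breaking is a standard example of parallel computing.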

Thank You!

You might also like

- Lecture 1Document18 pagesLecture 1wmanjonjoNo ratings yet

- The New Trends of Parallel ProcessingDocument5 pagesThe New Trends of Parallel ProcessingMohamed EL-FayomyNo ratings yet

- Lecture 1Document23 pagesLecture 1Lets clear Jee mathsNo ratings yet

- 2-INTRODUCTION TO PDC - MOTIVATION - KEY CONCEPTS-03-Dec-2019Material - I - 03-Dec-2019 - Module - 1 PDFDocument63 pages2-INTRODUCTION TO PDC - MOTIVATION - KEY CONCEPTS-03-Dec-2019Material - I - 03-Dec-2019 - Module - 1 PDFANTHONY NIKHIL REDDYNo ratings yet

- Week - 01 - Lec1 03 03 2021Document19 pagesWeek - 01 - Lec1 03 03 2021Zainab ShahzadNo ratings yet

- Basics of Parallel Programming: Unit-1Document79 pagesBasics of Parallel Programming: Unit-1jai shree krishnaNo ratings yet

- PDC Lecture No. 1Document21 pagesPDC Lecture No. 1Kashif MehmoodNo ratings yet

- CC Unit 1Document24 pagesCC Unit 1hawih58680No ratings yet

- Rohini 74684926776Document24 pagesRohini 74684926776gynoceNo ratings yet

- 02 - Lecture #2Document29 pages02 - Lecture #2Fatma mansourNo ratings yet

- 10 Parallel ComputingDocument15 pages10 Parallel Computingفيصل محمدNo ratings yet

- L1 IntroductionDocument12 pagesL1 IntroductionKarthik LaxmikanthNo ratings yet

- Pendahuluan Paralel KomputerDocument167 pagesPendahuluan Paralel KomputerBudi SetiawanNo ratings yet

- Introduction To Parallel Computing: Ananth Grama, Anshul Gupta, George Karypis, and Vipin KumarDocument15 pagesIntroduction To Parallel Computing: Ananth Grama, Anshul Gupta, George Karypis, and Vipin Kumarsatish161188No ratings yet

- Cloud Computing Unit-I JNTUH R16Document31 pagesCloud Computing Unit-I JNTUH R16vizierNo ratings yet

- Cluster and Computing Grids: Dr. Smita KacholeDocument31 pagesCluster and Computing Grids: Dr. Smita KacholeSmita SaudagarNo ratings yet

- Introduction To Parallel Computing LLNLDocument44 pagesIntroduction To Parallel Computing LLNLAntônio ArapiracaNo ratings yet

- 11 Introduction To Parallel ComputingDocument14 pages11 Introduction To Parallel ComputinglavNo ratings yet

- NPTEL Cloud Computing NotesDocument26 pagesNPTEL Cloud Computing Notesrupa reddy0% (1)

- Chapter 1 (A)Document27 pagesChapter 1 (A)SABITA RajbanshiNo ratings yet

- Multiprocessors - Parallel Processing Overview: "The Real World Is Inherently Concurrent Yet Our ComputationalDocument78 pagesMultiprocessors - Parallel Processing Overview: "The Real World Is Inherently Concurrent Yet Our ComputationalLyuben DimitrovNo ratings yet

- CCL AssignmentsDocument11 pagesCCL AssignmentsChinmay JoshiNo ratings yet

- Grid ComputingDocument24 pagesGrid ComputingkomalNo ratings yet

- FlynnsDocument41 pagesFlynnsAnonymous FPMwLUw8cNo ratings yet

- BCSE412L - Parallel Computing 01Document27 pagesBCSE412L - Parallel Computing 01aavsgptNo ratings yet

- Computational GridsDocument40 pagesComputational GridsdaiurqzNo ratings yet

- Gridcomputingppt 090724084528 Phpapp01Document30 pagesGridcomputingppt 090724084528 Phpapp01Sudeesh MenonNo ratings yet

- Lecture Week - 3 Amdahl Law 1Document19 pagesLecture Week - 3 Amdahl Law 1imhafeezniaziNo ratings yet

- Cloud ComputingDocument25 pagesCloud ComputingmanikeshNo ratings yet

- Data Parallel ArchitectureDocument17 pagesData Parallel ArchitectureSachin Kumar BassiNo ratings yet

- Introduction To Parallel ComputingDocument14 pagesIntroduction To Parallel ComputingdavNo ratings yet

- Ch1 Computing ParadigmsDocument18 pagesCh1 Computing Paradigmsmba20238No ratings yet

- Technical Seminar Report On: "High Performance Computing"Document14 pagesTechnical Seminar Report On: "High Performance Computing"mit_thakkar_2No ratings yet

- CS0051 - Module 01Document52 pagesCS0051 - Module 01Ron BayaniNo ratings yet

- Areas in CSDocument21 pagesAreas in CSUday KumarNo ratings yet

- Lecture 1 - Overview of Distributed ComputingDocument71 pagesLecture 1 - Overview of Distributed ComputingĐình Vũ TrầnNo ratings yet

- Lecture 1 - Parallel and Distributed ComputingDocument25 pagesLecture 1 - Parallel and Distributed ComputingSibgha IsrarNo ratings yet

- Part 1 - Lecture 1 - Introduction Parallel ComputingDocument33 pagesPart 1 - Lecture 1 - Introduction Parallel ComputingAhmad AbbaNo ratings yet

- CH 1 Intro To Parallel ArchitectureDocument18 pagesCH 1 Intro To Parallel Architecturedigvijay dholeNo ratings yet

- Term Paper Cse 211Document20 pagesTerm Paper Cse 211Nancy GoyalNo ratings yet

- Foundations of Sequential and Parallel Programming: Raising A New Generation of LeadersDocument34 pagesFoundations of Sequential and Parallel Programming: Raising A New Generation of Leadersekechi berniveNo ratings yet

- CC - Unit IDocument28 pagesCC - Unit I20-A0503 soumya anagandulaNo ratings yet

- CS0051 - Module 01 - Subtopic 1Document27 pagesCS0051 - Module 01 - Subtopic 1ronbayani2000No ratings yet

- Parallel Computing TerminologyDocument11 pagesParallel Computing Terminologymaxsen021No ratings yet

- 2 SPCDocument79 pages2 SPCRNo ratings yet

- Computers: - Computers Impact Our Lives in A Huge Number of WaysDocument14 pagesComputers: - Computers Impact Our Lives in A Huge Number of WaysDevendra BhavsarNo ratings yet

- CC Unit-1Document17 pagesCC Unit-1sreeharidas2124No ratings yet

- 532ebdistributed ProcessingDocument53 pages532ebdistributed ProcessingAtul RanaNo ratings yet

- Gopallapuram, Renigunta-Srikalahasti Road, Tirupati: by B. Hari Prasad, Asst - ProfDocument18 pagesGopallapuram, Renigunta-Srikalahasti Road, Tirupati: by B. Hari Prasad, Asst - Profbhariprasad_mscNo ratings yet

- Management Information System: Ghulam Yasin Hajvery University LahoreDocument42 pagesManagement Information System: Ghulam Yasin Hajvery University LahoreBilawal ShabbirNo ratings yet

- 01 Intro Parallel ComputingDocument40 pages01 Intro Parallel ComputingHilmy MuhammadNo ratings yet

- Darshan Institute of Engineering & TechnologyDocument49 pagesDarshan Institute of Engineering & TechnologyDhrumil DancerNo ratings yet

- HWSW Co Design Unit-1notesDocument195 pagesHWSW Co Design Unit-1notesswapna revuriNo ratings yet

- Slide 4Document41 pagesSlide 4dejenedagime999No ratings yet

- Lec 1Document21 pagesLec 1pritam044No ratings yet

- Operating Systems Week4Document30 pagesOperating Systems Week4Yano NettleNo ratings yet

- Introduction To: Parallel DistributedDocument32 pagesIntroduction To: Parallel Distributedaliha ghaffarNo ratings yet

- Slide 4Document41 pagesSlide 4dejenedagime999No ratings yet

- Design and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesFrom EverandDesign and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesNo ratings yet

- Multicore Software Development Techniques: Applications, Tips, and TricksFrom EverandMulticore Software Development Techniques: Applications, Tips, and TricksRating: 2.5 out of 5 stars2.5/5 (2)

- Lecture 5 Principles of Parallel Algorithm DesignDocument30 pagesLecture 5 Principles of Parallel Algorithm Designnimranoor137No ratings yet

- Lecture 3 Flynn's Classical TaxonomyDocument29 pagesLecture 3 Flynn's Classical Taxonomynimranoor137No ratings yet

- Lecture 2 Amdahl's Law and Karp-Flatt MetricDocument14 pagesLecture 2 Amdahl's Law and Karp-Flatt Metricnimranoor137No ratings yet

- Lecture 4 Network Topologies For Parallel ArchitectureDocument34 pagesLecture 4 Network Topologies For Parallel Architecturenimranoor137No ratings yet

- PrinectSignaStation Training PMADocument304 pagesPrinectSignaStation Training PMAremote1 onlineNo ratings yet

- Learn Python The Right WayDocument300 pagesLearn Python The Right WayJimmy FarfánNo ratings yet

- Protrader Presentation WebDocument30 pagesProtrader Presentation WebraviNo ratings yet

- Smith Micro Moh-WPS OfficeDocument3 pagesSmith Micro Moh-WPS OfficeMk AkangbeNo ratings yet

- Regional Group ProjectsDocument3 pagesRegional Group Projectsclasesespiritules44No ratings yet

- 7 PI3070igDocument1 page7 PI3070igGiovanny AntelizNo ratings yet

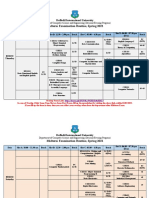

- (CC & DSC) Midterm Exam Routine-CSE-Spring 2022Document7 pages(CC & DSC) Midterm Exam Routine-CSE-Spring 2022Md Minhazur RahmanNo ratings yet

- Unit I VB 6.0 NotesDocument31 pagesUnit I VB 6.0 NotesKamalNo ratings yet

- Roof Con/Truss Con-Manual For BeginnersDocument24 pagesRoof Con/Truss Con-Manual For BeginnersDaniel DincaNo ratings yet

- JavaDocument33 pagesJavags_logic7636No ratings yet

- Pariksha Vani Jeev Vigyan Book PDFDocument130 pagesPariksha Vani Jeev Vigyan Book PDFDHUVADU JAGANATHAM100% (3)

- Protect Your Source Code From Decompiling or Reverse EngineeringDocument4 pagesProtect Your Source Code From Decompiling or Reverse EngineeringYamini ShindeNo ratings yet

- Line-Upsw P032Document4 pagesLine-Upsw P032Almustapha AbdulrahmanNo ratings yet

- US OOAD ProjectsDocument25 pagesUS OOAD ProjectsNgọc NhânNo ratings yet

- Tracker HelpDocument135 pagesTracker HelpiamjudhistiraNo ratings yet

- WWW Ictdemy Com Java Basics Netbeans Ide and Your First Console ApplicationDocument7 pagesWWW Ictdemy Com Java Basics Netbeans Ide and Your First Console ApplicationMark John SalaoNo ratings yet

- PgfplotsDocument263 pagesPgfplotspssrcNo ratings yet

- KEPServerEX Connectivity Guide GE CIMPLICITY PDFDocument10 pagesKEPServerEX Connectivity Guide GE CIMPLICITY PDFHumberto BalderasNo ratings yet

- Dream PinballDocument48 pagesDream PinballUbiritanNo ratings yet

- Read Me!!Document13 pagesRead Me!!Thushianthan KandiahNo ratings yet

- Day 2Document15 pagesDay 2kryptonNo ratings yet

- Basic Fundamental QuestionDocument5 pagesBasic Fundamental Questiontoday computerNo ratings yet

- Notes On Chapters 1, 2, 3, 6, 7, 8 & 10Document32 pagesNotes On Chapters 1, 2, 3, 6, 7, 8 & 10Nicholas RobertsNo ratings yet

- Red - Hat - Virtualization 4.4 Release - Notes en UsDocument97 pagesRed - Hat - Virtualization 4.4 Release - Notes en UsGNo ratings yet

- 4 MP IR Wedge Network CameraDocument3 pages4 MP IR Wedge Network CameraROBERT RAMIREZNo ratings yet

- Human Computer Interaction: University of HargeisaDocument34 pagesHuman Computer Interaction: University of HargeisaAidarusNo ratings yet

- Intel® Core™ I3-9100f Processor (6M Cache, Up To 4.20 GHZ) Specificatii Tehnice ProdusDocument5 pagesIntel® Core™ I3-9100f Processor (6M Cache, Up To 4.20 GHZ) Specificatii Tehnice ProdusNicu MuțNo ratings yet

- Adsm 201Document8 pagesAdsm 201Maurice ZimmermannNo ratings yet

- Chapter 2 Group 2Document46 pagesChapter 2 Group 2Alexandra Tesoro CorillaNo ratings yet

- CygNet 95 Release NotesDocument42 pagesCygNet 95 Release NotesEDUARDO ARMANDO RODRIGUEZ VASQUEZNo ratings yet