
Logistic Regression Techniques

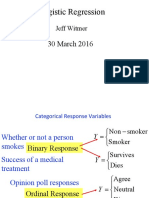

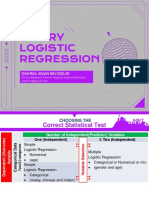

This document gives an overview of logistic regression, compares it with linear regression, and discusses key aspects of conducting a logistic regression analysis. Logistic regression models the probability of a categorical (typically binary) outcome as a function of one or more independent variables, whereas linear regression predicts a continuous, interval-ratio dependent variable. The document also covers how to interpret logistic regression coefficients, how to compare the effects of different variables, how to test hypotheses with Wald and likelihood ratio tests, and how to calculate marginal effects.
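The quantities listed above fit together as follows. This is a minimal sketch in plain Python. It assumes a single predictor and fits the model by Newton-Raphson maximum likelihood. The function names (`fit_logit`, `summarize`, etc.) and the toy data are hypothetical illustrations, not material from the document. Running it shows the odds-ratio reading of a coefficient (exp(b1)), the Wald test (b1 divided by its standard error), the likelihood ratio test against an intercept-only model, and the average marginal effect p(1 - p)·b1.

```python
import math

def sigmoid(z):
    # Logistic (inverse-logit) function: maps a linear predictor to a probability in (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def score_and_info(b0, b1, x, y):
    # Score vector (gradient of the log-likelihood) and entries of the 2x2 Fisher
    # information matrix [[h00, h01], [h01, h11]] for P(y=1|x) = sigmoid(b0 + b1*x)
    g0 = g1 = h00 = h01 = h11 = 0.0
    for xi, yi in zip(x, y):
        p = sigmoid(b0 + b1 * xi)
        w = p * (1.0 - p)
        g0 += yi - p
        g1 += (yi - p) * xi
        h00 += w
        h01 += w * xi
        h11 += w * xi * xi
    return (g0, g1), (h00, h01, h11)

def log_lik(b0, b1, x, y):
    # Bernoulli log-likelihood of the data under the logit model
    total = 0.0
    for xi, yi in zip(x, y):
        p = sigmoid(b0 + b1 * xi)
        total += yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return total

def fit_logit(x, y, iters=100, tol=1e-12):
    # Maximum likelihood via Newton-Raphson: beta <- beta + I(beta)^{-1} * score(beta)
    b0 = b1 = 0.0
    for _ in range(iters):
        (g0, g1), (h00, h01, h11) = score_and_info(b0, b1, x, y)
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + step0, b1 + step1
        if abs(step0) + abs(step1) < tol:
            break
    # Standard error of b1 from the inverse information evaluated at the estimate
    _, (h00, h01, h11) = score_and_info(b0, b1, x, y)
    se_b1 = math.sqrt(h00 / (h00 * h11 - h01 * h01))
    return b0, b1, se_b1

def summarize(x, y):
    b0, b1, se_b1 = fit_logit(x, y)
    # Odds ratio: multiplicative change in the odds of y=1 per one-unit increase in x
    odds_ratio = math.exp(b1)
    # Wald test of H0: b1 = 0; z^2 ~ chi-square(1), two-sided p = erfc(|z| / sqrt(2))
    z = b1 / se_b1
    wald_p = math.erfc(abs(z) / math.sqrt(2.0))
    # Likelihood ratio test vs. the intercept-only model (its MLE is logit(mean(y)))
    ybar = sum(y) / len(y)
    ll_null = log_lik(math.log(ybar / (1.0 - ybar)), 0.0, x, y)
    lr = 2.0 * (log_lik(b0, b1, x, y) - ll_null)
    lr_p = math.erfc(math.sqrt(max(lr, 0.0) / 2.0))  # chi-square(1) upper tail
    # Average marginal effect: sample mean of dP/dx = p(1 - p) * b1
    ame = b1 * sum(sigmoid(b0 + b1 * xi) * (1.0 - sigmoid(b0 + b1 * xi)) for xi in x) / len(x)
    return {"b0": b0, "b1": b1, "se_b1": se_b1, "odds_ratio": odds_ratio,
            "wald_z": z, "wald_p": wald_p, "lr": lr, "lr_p": lr_p, "ame": ame}

if __name__ == "__main__":
    # Hypothetical toy data, made up for illustration only
    x = [0, 1, 2, 3, 4, 5, 6, 7]
    y = [0, 0, 1, 0, 1, 0, 1, 1]
    for name, value in summarize(x, y).items():
        print(f"{name:>10}: {value:.4f}")
```

The Wald and likelihood ratio tests both address H0: b1 = 0. The Wald test uses only the fitted model. The likelihood ratio test also requires the restricted (intercept-only) fit. In small samples the two can disagree. In practice a statistical package would normally be used instead of hand-written Newton steps.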

Uploaded by Huseyin Oztoprak

Copyright © All Rights Reserved

