Robust Background Subtraction Under Rapidly Changing Illumination Conditions

Copy holder

The Department of Electrical Engineering of the Eindhoven University of Technology accepts no responsibility for the contents of M.Sc. theses or practical training reports

Department of Electrical Engineering

Studentenadministratie

Den Dolech 2, 5612 AZ Eindhoven

P.O. Box 513, 5600 MB Eindhoven

The Netherlands

http://w3.ele.tue.nl/nl/

Author

Luc P.J. Vosters

Order issuer

Prof. De Haan

Group/Chair: Electronic Systems

Reference

Series: Master graduation paper, Electrical Engineering

Date

5 November 2009


GRADUATION SYMPOSIUM, 27 OCTOBER 2009


Abstract: Many current background segmentation algorithms can only cope with gradual illumination changes. In this paper, we aim to design an indoor background subtraction method that is also robust to sudden changes over a broad range of lighting conditions. To this end, three state-of-the-art methods, all designed to be robust to sudden illumination changes, are compared and analyzed. Based on this analysis, a new method is proposed that combines elements of the earlier methods and augments them with recursive post-processing to improve temporal consistency. The result is a very robust algorithm that operates at the region and frame level. A complexity analysis shows that the algorithm can run in real time. In our benchmarking, we provide visual results of the algorithms as well as a quantitative performance indication in terms of precision and recall.

Index Terms: Background subtraction, background modeling, Eigen space models, Gaussian mixture models, image change detection, tonal alignment.

I. INTRODUCTION

Ambient intelligence refers to electronic environments that are sensitive and responsive to the presence of people [1]. To respond to people, ambient intelligence systems should extract information about their body posture, gestures, facial expressions, age, gender, focus of attention and objects of interest. A typical context for such an environment is an indoor area in which one or more fixed cameras are installed.

An important element of a computer-vision-based ambient intelligence system is a background subtraction module. This module should differentiate between background pixels, which should be ignored, and foreground pixels, which should be processed for information extraction. The background subtraction module models the background image of a scene captured by a video camera, so that any object that is not present in the background model image is detected and labeled as foreground. Since the foreground mask is processed by other algorithms for information extraction, the background subtraction module should reveal the location and size of the foreground objects in the foreground mask.

To avoid ambiguity, we define foreground as all objects that emerge in the input scene and differ from the modeled background. Foreground objects can be distinguished by their motion, e.g. a person walking into a room, but they can also be motionless, e.g. a person sleeping on a sofa.

We focus on applications with a fixed camera in which the background scene can be modeled by a background image. We assume that differences between an input image and the background model image are caused by foreground objects. However, these background model images are not static. During capture, various lighting conditions and lighting changes can occur that alter the appearance of the background. Furthermore, moving background elements such as shades and curtains, as well as shadows cast by moving objects, can change the appearance of the background. These changes should not be detected as foreground.

In this paper we focus primarily on rapidly changing lighting conditions. Changes in lighting conditions can be either global, affecting all pixels in a scene, or local, affecting only a specific region of the background scene. In a typical indoor environment, illumination changes are caused by:

1) Indoor lighting equipment: Turning multiple light sources on or off in a room causes different lighting conditions to occur. Some parts of the background are covered in shadow while others are highlighted or clipped. These shadows and highlights alter the appearance of the background and can easily be mistaken for structural differences from the background model, which leads to false positives in the detected foreground mask. Switching a light on or off causes a rapid, often global, illumination change in a room.

2) Time of day: The light intensity changes gradually during the day, which can cause a gradual illumination change indoors.

3) Shadows cast by foreground objects: These shadows are local illumination effects that differ from the modeled background and can move. Therefore, they are easily mistaken for foreground objects.

4) Opaque, reflecting or (semi-)transparent objects: Curtains, mirrors, shades and windows can change the lighting conditions by blocking, reflecting or (partly) transmitting light. These objects can alter the appearance of the background globally as well as locally.

Many current background subtraction algorithms can cope with gradual illumination changes but remain vulnerable to sudden illumination changes and different lighting conditions [5]. In this paper we aim to develop a background subtraction algorithm that is robust to rapidly changing illumination conditions. First, we compare three state-of-the-art background subtraction algorithms that are specifically designed to be robust to illumination changes. These methods are described in [3], [4] and [5]. We will refer to them as tonal alignment (TA), Eigenbackground (EB) and statistical illumination (SI), respectively.

TA and EB both operate at frame level [2]. Both try to reconstruct, from the input frame, the background model image under the current lighting conditions. Ideally, the reconstructed background model image contains the illumination effects that altered the appearance of the background scene in the input frame. However, TA can only retrieve global illumination effects. EB is a training-based method that can reconstruct global as well as local illumination effects. Both methods use image differencing to obtain the foreground mask. The problem with image differencing is that the threshold is arbitrary and no pixel dependencies are taken into account, which leads to a suboptimal segmentation.

SI operates at region level. It builds on the shading model [6] to obtain an algorithm that is claimed to be robust to sudden illumination changes [5]. However, this method cannot model local scene changes because it uses only a single background model image. Therefore, local illumination effects that change the appearance of the background, e.g. shadows, highlights and clipping, are often detected as foreground; SI only works for global illumination effects. This is demonstrated in section III.

The problem of TA and SI is their inability to reconstruct rapid local illumination effects. The problem of EB is its thresholding, which is arbitrary and does not take pixel dependencies into account. The method we propose in this paper solves these problems by combining EB and SI. EB is applied to reconstruct, for each input frame, a background model image that contains the local background scene changes which cannot be modeled by the region-based model of SI. A modified form of SI is then applied to obtain the change mask. The statistical framework of this method allows more flexibility in the segmentation than simple image differencing, which improves the segmentation.

Our contributions to SI are a complexity reduction for real-time operation and a spatial likelihood model that is updated at each frame using reliably detected foreground and background pixels, which removes the need for training on ground truth data. Furthermore, we propose a recursive post-filter to obtain spatio-temporal coherence in the foreground mask.

The remainder of the paper is organized as follows. Section II gives a brief overview of existing background subtraction algorithms, focusing on TA, EB and SI. Section III reviews SI [5]. Section IV describes the stages of the developed algorithm. Section V presents comparative experimental results. Finally, section VI concludes the paper with a discussion and future work.

II. PREVIOUS WORK

A. Background Subtraction

Background subtraction is a widely studied research topic in computer vision, and many background modeling algorithms have been developed during the past two decades. With regard to the locality of the background and foreground models used, existing background modeling techniques can be classified into three categories: 1) pixel-based models, 2) region-based models and 3) frame-based models.

Modern pixel-based methods have independent models for each pixel, which are progressively updated over time. In [7] and [8], it was first proposed to model the intensity of each pixel by a Gaussian Mixture Model (GMM) to account for the multimodality of the underlying background probability density function (pdf). Pixel-based models can cope with gradual illumination effects as well as moving background elements such as tree leaves and flowing water. Their main disadvantage is that the model update is relatively slow, which makes it difficult to adjust rapidly to sudden illumination changes. Furthermore, spatial dependencies among pixels are not taken into account. Still, the method described in [7] and [8] has become a standard among background subtraction methods, so we use it as a reference method in the comparisons of section V. We will refer to this method as the Adaptive Background Mixture Model (ABMM).
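For readers unfamiliar with [7] and [8], the sketch below shows a heavily simplified per-pixel mixture update for a single grayscale pixel. It is an illustration only, not the ABMM implementation used in our comparisons. The learning rate `alpha`, the 2.5-standard-deviation match test, the initial and minimum variances and the background weight threshold `bg_weight` are assumed values. For brevity, the separate mean/variance learning rate of [7] is replaced by `alpha`.

```python
import numpy as np

class PixelGMM:
    """Simplified adaptive mixture for one grayscale pixel, in the spirit of [7], [8] (sketch)."""

    def __init__(self, n_components=3, alpha=0.01, init_var=225.0, min_var=4.0, bg_weight=0.7):
        self.alpha = alpha          # learning rate (assumed value)
        self.init_var = init_var    # variance assigned to a new component (assumed)
        self.min_var = min_var      # variance floor against degenerate kernels (assumed)
        self.T = bg_weight          # cumulative weight treated as background (assumed)
        self.w = np.full(n_components, 1.0 / n_components)
        self.mu = np.linspace(0.0, 255.0, n_components)
        self.var = np.full(n_components, init_var)

    def update(self, x):
        """Update the mixture with intensity x and return True if x is classified as background."""
        d = np.abs(x - self.mu) / np.sqrt(self.var)
        matched = d < 2.5                                   # within 2.5 standard deviations
        if matched.any():
            k = int(np.argmin(np.where(matched, d, np.inf)))    # closest matching component
            self.mu[k] += self.alpha * (x - self.mu[k])
            self.var[k] += self.alpha * ((x - self.mu[k]) ** 2 - self.var[k])
            self.var[k] = max(self.var[k], self.min_var)
        else:
            k = int(np.argmin(self.w))                      # replace the least probable component
            self.mu[k], self.var[k] = float(x), self.init_var
        hit = np.zeros_like(self.w)
        hit[k] = 1.0
        self.w = (1.0 - self.alpha) * self.w + self.alpha * hit    # w <- (1 - a) w + a M
        # Rank components by w / sigma; the first ones whose weights reach T model the background
        order = np.argsort(-self.w / np.sqrt(self.var))
        n_bg = int(np.searchsorted(np.cumsum(self.w[order]), self.T)) + 1
        return k in order[:n_bg]
```

Feeding a constant intensity of 100 for a few hundred frames makes the matching component dominant. After that, 100 is classified as background, while a sudden value of 250 is not. The slow growth of the new component's weight is exactly why such models react slowly to sudden illumination changes.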

The second category uses region-based models of the background to exploit the spatial relationships among pixels and improve detection accuracy. The nonparametric kernel density estimation method in [12] uses two kernel density estimators (KDEs), one for the background pdf and one for the foreground pdf. These models are integrated into a Maximum A Posteriori Markov Random Field (MAP-MRF) framework, which enforces spatial context among pixels in the segmentation. This method can deal with dynamic backgrounds. However, since the background and foreground model update is relatively slow, it cannot deal with sudden changes in illumination conditions. Local kernel histogram methods also operate at region level. In [13], spatial dependencies among pixels are captured by comparing kernel histograms of color computed over overlapping square image regions in a reference frame and the input frame. In [14], a local kernel histogram of gradients instead of color is used to obtain robustness to illumination changes.

Frame-based models build a model of the complete background scene at once. Their main advantage is that they model complete areas of a scene. Therefore, frame-based models are better suited than pixel-based or region-based models to modeling large and dynamic changes in the lighting conditions of a room. Two frame-based approaches, EB and TA, are discussed in more detail in the next section.


B. Sudden Illumination Invariant Background Subtraction

This section gives an overview of existing approaches for making background subtraction robust to sudden illumination changes.

In [9], the fusion of texture and color differences between a stored background image and the input frame is used to perform illumination-invariant background subtraction. In textured areas of an image, the ordering among pixels in a neighborhood is preserved even in the presence of heavy photometric distortions [10]. Therefore, texture is regarded as more reliable and robust than intensity. In image areas without texture, however, Li and Leung's method depends mostly on intensity. It is therefore not robust to sudden illumination changes if the input frame does not contain texture.

In [11], texture and intensity are fused in an MRF-based framework which, in addition to spatial dependencies, also includes temporal dependencies among pixels. However, the methods in [9], [10] and [11] rely on only one static background model image. If the background appearance changes significantly due to illumination effects such as shadows, highlights and clipping, these methods fail.

In [14], a local kernel histogram method is described in which the oriented gradient, an illumination-invariant histogram feature, is used instead of color. The oriented gradient consists of a gradient vector and its norm, which indicate the direction and strength of a contour in a textured image area. The computational complexity of this method is low. However, since it depends solely on gradients and takes no color information into account, it does not work well in untextured areas.

In [3], the TA method is proposed. It applies tonal alignment to reconstruct the background image. For each frame, the change detection algorithm of [17] is applied to reliably detect background pixels. This subset of reliable background pixels is used to compute the histogram specification transformation [16], which tonally aligns a fixed background model frame with the current input frame. The foreground mask is obtained by pixel-wise subtraction and thresholding between the input frame and the tonally aligned background model frame. Histogram specification can only reconstruct illumination effects on a global scale. It cannot reconstruct local structural changes in the background such as shadows and highlights. Therefore, this method only works for sudden global illumination effects.
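The tonal alignment step can be sketched as CDF-based histogram specification. The sketch makes the following assumptions:

- Frames are single-channel 8-bit images.
- The boolean mask `reliable` of background pixels is taken as given. In [3] it comes from the change detector of [17].
- The function names `tonal_align` and `foreground_mask` and the difference threshold of 30 are illustrative. They are not taken from [3].

```python
import numpy as np

def tonal_align(background, frame, reliable, levels=256):
    """Map the fixed background model so that its tones match the current frame."""
    src = background[reliable].ravel()   # background tones at reliable pixels
    ref = frame[reliable].ravel()        # the same pixels as seen in the current frame
    src_cdf = np.cumsum(np.bincount(src, minlength=levels)) / src.size
    ref_cdf = np.cumsum(np.bincount(ref, minlength=levels)) / ref.size
    # For each source level, take the first reference level whose CDF reaches the source CDF
    lut = np.searchsorted(ref_cdf, src_cdf, side="left").clip(0, levels - 1)
    return lut.astype(np.uint8)[background]

def foreground_mask(background, frame, reliable, threshold=30):
    """Pixel-wise differencing against the tonally aligned background (threshold is illustrative)."""
    aligned = tonal_align(background, frame, reliable)
    return np.abs(frame.astype(int) - aligned.astype(int)) > threshold
```

Consider a globally darkened frame such as `frame = background // 2`, with all pixels reliable. Here the lookup table reproduces the frame exactly, so no foreground is reported. A local shadow, by contrast, barely changes the global histograms and therefore stays in the difference image. This is the limitation discussed above.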

SI models the background, foreground and shadows of a scene in all three RGB channels with a single mixture of Gaussian distributions. It employs a statistical illumination model, based on the shading model, in which the ratio of intensities between a stored background image and an input image in all three channels is modeled as a GMM to account for illumination effects. The foreground distribution is modeled in color and consists of three-channel RGB Gaussian kernels complemented by a uniform distribution. These foreground kernels model foreground objects occluding the background scene. Furthermore, a spatial likelihood model, trained from ground truth data, is incorporated in the GMM to model pixel dependencies. Finally, the Expectation Maximization (EM) algorithm assigns pixels to the distributions in the GMM and optimizes the distribution parameters [5]. In section III, SI is reviewed and modified.

EB can successfully model global and local variations of illumination effects in the background scene [4]. Instead of using only one background reference image, EB exploits a training set of background images recorded under changing lighting conditions. The frames in the training set are written as column vectors, and the mean and covariance matrix of these vectors are calculated. The covariance matrix is then efficiently decomposed into an Eigen space model. This model describes the range of background appearances observed during the training period, e.g. the different lighting conditions in a room [15].

For each input frame, the corresponding background frame is reconstructed by projecting the input frame onto the Eigen space. The reconstructed background frame is then subtracted from the input frame, and a simple threshold is applied to obtain the foreground mask. [4] also proposes a dynamic pixel-based threshold. It models the difference between a pixel in the input frame and the reconstructed background image by a Gaussian distribution that is updated over time.

Since the Eigenvectors of this Eigen space model are themselves background appearances, the method is called Eigenbackground. A Fourier decomposition can reconstruct any signal with its orthogonal basis functions. The Eigen space decomposition, by contrast, can only reconstruct frames that are (apart from the mean) linear combinations of the Eigenvectors obtained from the background scenes in the training set. Therefore, it is not robust to completely new illumination conditions. However, EB allows a rapid incremental update of the Eigen space model whenever new background model frames emerge in the input video stream [4].

TABLE I
PROS AND CONS OF ILLUMINATION-INVARIANT BACKGROUND SUBTRACTION METHODS

Method | Pros | Cons
Li and Leung [9] | Robust to noise; spatial dependencies modeled | Cannot handle background shadows, highlights and clipping; manual parameter tuning for different sequences
Local kernel histograms [14] | Robust to noise; spatial dependencies modeled | Does not work in untextured image areas; cannot handle background shadows, highlights and clipping
Tonal alignment [3] | Robust to sudden illumination changes | Cannot handle background shadows, highlights and clipping; only for global effects
Statistical illumination model [5] | Parameter optimization by EM; spatial likelihood model; robust to sudden illumination changes | Cannot handle background shadows, highlights and clipping; training on ground truth data required
Eigenbackground [4] | Extremely robust to heavy local and global illumination changes; incremental Eigen space update | Large training set required to cover all possible illumination conditions; can only handle lighting conditions that are linear combinations of the lighting conditions present in the training set
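The Eigenbackground idea can be sketched with a plain SVD of the mean-subtracted training frames. The right singular vectors of that matrix are the eigenvectors of the sample covariance matrix. The sketch assumes grayscale frames, a user-chosen number of eigenvectors `n_eig` and a fixed global threshold. It omits the efficient decomposition, the dynamic per-pixel threshold and the incremental update of [4]. All function names are hypothetical.

```python
import numpy as np

def train_eigenbackground(frames, n_eig):
    """frames: (N, H, W) background training images. Returns the mean and the top eigenvectors."""
    X = frames.reshape(len(frames), -1).astype(float)   # each frame as a row vector
    mean = X.mean(axis=0)
    # Rows of Vt are the eigenvectors of the sample covariance of the training frames
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_eig]

def reconstruct_background(frame, mean, basis):
    """Project the input frame onto the Eigen space and map it back to image space."""
    x = frame.ravel().astype(float) - mean
    coeffs = basis @ x                                   # coordinates in the Eigen space
    return (mean + basis.T @ coeffs).reshape(frame.shape)

def eb_foreground(frame, mean, basis, threshold=30.0):
    """Global threshold on the reconstruction residual (threshold value is illustrative)."""
    background = reconstruct_background(frame, mean, basis)
    return np.abs(frame.astype(float) - background) > threshold
```

Suppose the training frames are scaled versions of one pattern. A single eigenvector then reconstructs an unseen brightness level exactly. An added bright square, by contrast, is largely absent from the reconstruction and is flagged as foreground.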

Table I summarizes the pros and cons of the methods discussed in this section that are designed to be robust to illumination changes.

III. REVIEW OF THE STATISTICAL ILLUMINATION METHOD

As mentioned in section II, SI is based on the shading model [6]. The shading model states that the ratio between a pixel in an input frame and the corresponding pixel in a reference frame is constant. The reference frame is recorded under uniform illumination and without cast shadows. SI models a scene with K different illumination ratios. Following a notation similar to [5], the probability of each illumination ratio $\mathbf{l}_i$ is given by

$$p(\mathbf{l}_i, \mathbf{z}_i \mid \pi, \mu, \Sigma) = \prod_{k=1}^{K} \left( \pi_k \, \mathcal{N}(\mathbf{l}_i \mid \mu_k, \Sigma_k) \right)^{z_i^k}, \tag{1}$$

where:

- $\mathbf{l}_i = [u_i^r / m_i^r, \; u_i^g / m_i^g, \; u_i^b / m_i^b]^T$ contains the illumination ratios between the input image $u$ and the background model image $m$ for pixel $i$, in the R, G and B channels respectively;
- $z_i^k$ is a binary latent variable that indicates to which class of illumination ratios pixel $i$ belongs;
- $\pi_k$ weights the relative importance of mixture component $k$;
- $\mu, \Sigma$ denote all parameters of the $K$ Gaussian kernels $\mathcal{N}$.

Fig. 1 shows the ratio between an input frame and the background model frame.

The foreground is modeled as a mixture of $K_{FG}$ Gaussian kernels. At any time instant, a foreground pixel is equally likely to appear at any location with any color [12]. The mixture is therefore complemented by a uniform distribution, and the probability of observing pixel $\mathbf{u}_i$ becomes

$$p(\mathbf{u}_i, \mathbf{z}_i \mid \pi, \mu, \Sigma) = \prod_{k=K+1}^{K+K_{FG}} \left( \pi_k \, \mathcal{N}(\mathbf{u}_i \mid \mu_k, \Sigma_k) \right)^{z_i^k} \left( \frac{\pi_{K+K_{FG}+1}}{256^3} \right)^{z_i^{K+K_{FG}+1}}. \tag{2}$$

The statistical illumination model can be expressed in pixel color instead of illumination ratio by dividing Eq. 1 by $|J_i|$, the determinant of the Jacobian relating $\mathbf{l}_i$ and $\mathbf{u}_i$:

$$p(\mathbf{u}_i, \mathbf{z}_i \mid \pi, \mu, \Sigma) = \frac{p(\mathbf{l}_i, \mathbf{z}_i \mid \pi, \mu, \Sigma)}{|J_i|}, \tag{3}$$

where $|J_i| = m_i^r m_i^g m_i^b$. Multiplying Eq. 1, divided by $|J_i|$, by Eq. 2 yields the joint likelihood $p(\mathbf{u}, \mathbf{z} \mid \theta)$, where $\theta = \{\pi, \mu, \Sigma\}$ are the GMM parameters.
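The sketch below gives a numerical illustration of Eq. 1 and Eq. 3 for one pixel. It evaluates the background part of the model by summing over the K background kernels, i.e. marginalizing z_i over the background components, and dividing by |J_i| = m_i^r m_i^g m_i^b. The function names are hypothetical. The kernel parameters passed in are caller-chosen placeholders, not values from [5].

```python
import numpy as np

def gaussian_pdf(x, mean, cov):
    """Multivariate normal density N(x | mean, cov)."""
    d = x - mean
    norm = np.sqrt((2.0 * np.pi) ** len(x) * np.linalg.det(cov))
    return float(np.exp(-0.5 * d @ np.linalg.solve(cov, d)) / norm)

def background_color_likelihood(u, m, weights, means, covs):
    """Sum_k pi_k N(l | mu_k, Sigma_k) / |J|, with l = u / m (illumination ratio per channel)."""
    l = u / m                      # illumination ratio vector of Eq. 1
    jac = np.prod(m)               # |J_i| = m_r * m_g * m_b
    mixture = sum(w * gaussian_pdf(l, mu, S) for w, mu, S in zip(weights, means, covs))
    return mixture / jac
```

Doubling both u_i and m_i leaves l_i unchanged but multiplies |J_i| by 8. The color-space likelihood therefore drops by a factor of 8, which is the change-of-variables effect expressed by Eq. 3.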

A. Spatial Likelihood Model

Instead of modeling spatial dependencies in the joint log

likelihood with a prior model on z, the spatial likelihood

model tries to capture spatial dependencies among pixels by

using two features that can be computed very fast from the

luminance channel. The first feature f

i

1

is the normalized cross

correlation (NCC) for each window around pixel i between

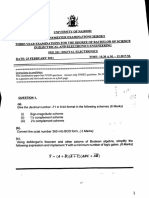

Fig. 1. From left to right from top to bottom: Input frame, ratio between input

and background model frame, fi

1

, fi

2

, background histogram probability and

foreground histogram probability for each pixel.

background model frame and input frame. It is given by

( ) ( )

=

i i i i

i i i

w j w j

j j

w j w j

j j

w j w j

j j

w j

j j

i

m m n u u n

m u m u n

f

2

2

2

2

1

, (4)

where $n$ is the number of pixels in the window centered on pixel $i$. However, because of the normalization in the denominator of Eq. 4, the NCC is meaningless in untextured areas: it cannot distinguish a uniform background region from a uniform foreground region. Therefore, the second feature $f_i^2$ measures the amount of texture. It is given by

$$f_i^2 = \sqrt{ \sum_{j \in w_i} m_j^2 - \frac{1}{n} \left( \sum_{j \in w_i} m_j \right)^2 + \sum_{j \in w_i} u_j^2 - \frac{1}{n} \left( \sum_{j \in w_i} u_j \right)^2 }. \tag{5}$$

Fig. 1 shows $f_i^1$ and $f_i^2$ for each pixel in the input image.

In [5], the background and foreground distributions $p_{BG}(f_i^1, f_i^2 \mid \mathbf{u}_i)$ and $p_{FG}(f_i^1, f_i^2 \mid \mathbf{u}_i)$ are modeled independently by two-dimensional histograms. The histograms are learned from a set of manually segmented images and are denoted by $h_{BG}$ and $h_{FG}$. The background histogram corresponds to the background mixture components $k = 1, \ldots, K$. The foreground histogram corresponds to the foreground mixture components $k = K+1, \ldots, K+K_{FG}+1$.

To achieve real-time performance, integral images [19] can be applied. With integral images, the features in Eq. 4 and 5 can be computed with a complexity that is linear in the number of pixels in the image and constant with respect to the window size. Furthermore, the histogram features of the background frame can be precomputed. Fig. 1 shows, for each pixel in the input frame, the background and foreground probabilities according to the background and foreground histograms.
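The sketch below shows how the two features can be evaluated with integral images (summed-area tables). It assumes grayscale images and a square (2r+1)×(2r+1) window clipped at the image border. The NCC follows Eq. 4. For $f_i^2$, the sketch uses the square root of the summed squared deviations from the two window means. The function names and the small `eps` guarding the NCC denominator in untextured windows are our assumptions.

```python
import numpy as np

def box_sums(img, r):
    """Sum of img over the (2r+1)x(2r+1) window around every pixel, clipped at the borders."""
    h, w = img.shape
    # Summed-area table with a zero row and column prepended
    ii = np.pad(img.astype(float), ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    y0 = np.clip(np.arange(h) - r, 0, h)
    y1 = np.clip(np.arange(h) + r + 1, 0, h)
    x0 = np.clip(np.arange(w) - r, 0, w)
    x1 = np.clip(np.arange(w) + r + 1, 0, w)
    # Four table lookups and three additions/subtractions per pixel, independent of r
    return ii[y1][:, x1] - ii[y0][:, x1] - ii[y1][:, x0] + ii[y0][:, x0]

def spatial_features(m, u, r=2, eps=1e-9):
    """Per-pixel NCC f1 and texture measure f2 between background m and input u."""
    m, u = m.astype(float), u.astype(float)
    n = box_sums(np.ones_like(m), r)                 # number of pixels in each clipped window
    sm, su = box_sums(m, r), box_sums(u, r)
    smm, suu, smu = box_sums(m * m, r), box_sums(u * u, r), box_sums(m * u, r)
    dm = n * smm - sm ** 2                           # n times the sum of squared deviations of m
    du = n * suu - su ** 2                           # the same for u
    f1 = (n * smu - sm * su) / np.sqrt(np.maximum(dm * du, 0.0) + eps)
    f2 = np.sqrt(np.maximum(dm + du, 0.0) / n)       # sqrt of the summed squared deviations
    return f1, f2
```

Each call to `box_sums` costs the same per pixel whatever the value of `r`. This constant cost is what makes the features affordable at video rate. For an input that is an affine brightening of the background, e.g. `u = 2 * m + 5`, `f1` is 1 in every textured window.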

B. Maximum Likelihood Parameter Estimation

Combining the statistical illumination model, the foreground model and the spatial likelihood model, and marginalizing over $\mathbf{z}$, yields the combined likelihood $p(\mathbf{u}, \mathbf{f} \mid \theta)$. The parameters $\theta = \{\pi, \mu, \Sigma\}$ are estimated by maximum likelihood (ML) from Eq. 6. To reduce the computational burden of the model, all pixels are assumed to be independent and identically distributed (i.i.d.). The ML estimate of Eq. 6 then simplifies to Eq. 7, which can be maximized by the EM algorithm.

$$\hat{\theta} = \arg\max_{\theta} \, \log \sum_{\mathbf{z}} p(\mathbf{u}, \mathbf{f}, \mathbf{z} \mid \theta). \tag{6}$$

$$\hat{\theta} = \arg\max_{\theta} \sum_{i} \log \sum_{\mathbf{z}_i} p(\mathbf{u}_i, \mathbf{f}_i, \mathbf{z}_i \mid \theta), \tag{7}$$

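where, with $\mathbf{f}_i = (f_i^1, f_i^2)$,

$$
\begin{aligned}
p(\mathbf{u}_i, \mathbf{f}_i, \mathbf{z}_i \mid \theta) = {} & \prod_{k=1}^{K} \left( \frac{\pi_k \, \mathcal{N}(\mathbf{l}_i \mid \mu_k, \Sigma_k) \, h_{BG}(\mathbf{f}_i)}{|J_i|} \right)^{z_i^k} \\
& \times \prod_{k=K+1}^{K+K_{FG}} \left( \pi_k \, \mathcal{N}(\mathbf{u}_i \mid \mu_k, \Sigma_k) \, h_{FG}(\mathbf{f}_i) \right)^{z_i^k} \left( \frac{\pi_{K+K_{FG}+1} \, h_{FG}(\mathbf{f}_i)}{256^3} \right)^{z_i^{K+K_{FG}+1}}.
\end{aligned}
$$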

The EM algorithm alternates between the E-step and the M-step. In the E-step, the posterior probabilities $p(z_i^k \mid \mathbf{u}_i, \theta^t) = \gamma_{i,k}^t$ are calculated for each pixel $i$ and each mixture component $k$. In the M-step, the parameters are updated to maximize the expected log likelihood. Depending on the initialization, the EM algorithm converges to a local, and possibly global, maximum of the log likelihood. The resulting update equations for the pdf in Eq. 7 are given in appendix Fig. A1. The probability that pixel $i$ belongs to the background is given by $P_i^{BG} = \sum_{k=1}^{K} \gamma_{i,k}$. The segmentation is performed by thresholding $P_i^{BG}$ for all pixels in the image.
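The sketch below shows one EM iteration for the mixture weights. It assumes the per-pixel, per-component terms of Eq. 7 without the weights $\pi_k$ have already been evaluated:

- for background components, the Gaussian density times $h_{BG}(\mathbf{f}_i)$, divided by $|J_i|$;
- for foreground components, the Gaussian density times $h_{FG}(\mathbf{f}_i)$;
- for the last component, the uniform term times $h_{FG}(\mathbf{f}_i)$.

The sketch computes the posteriors $p(z_i^k \mid \cdot)$, called `gamma` here, in the log domain. It then re-estimates $\pi_k$ and thresholds the summed background posterior. The mean and covariance updates of the appendix are omitted. The function names and the threshold of 0.5 are illustrative assumptions.

```python
import numpy as np

def e_step(log_terms, log_pi):
    """log_terms: (N, C) log component terms without pi_k. Returns posteriors gamma of shape (N, C)."""
    a = log_terms + log_pi                                # log(pi_k * term_ik)
    a_max = a.max(axis=1, keepdims=True)                  # log-sum-exp for numerical stability
    log_norm = a_max + np.log(np.exp(a - a_max).sum(axis=1, keepdims=True))
    return np.exp(a - log_norm)

def m_step_weights(gamma):
    """Mixture weights pi_k as the mean posterior over all pixels."""
    return gamma.mean(axis=0)

def segment(gamma, n_bg, threshold=0.5):
    """P_BG is the summed posterior of the first n_bg (background) components."""
    p_bg = gamma[:, :n_bg].sum(axis=1)
    return p_bg < threshold                               # True marks a foreground pixel
```

Working in the log domain avoids underflow when the histogram values and Gaussian densities are small. Without it, the normalization would often divide zero by zero.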

Instead of relying on the i.i.d. assumption of Eq. 7, spatial pixel dependencies can be modeled explicitly by spatial priors. In [12], pixel dependencies in a 4-connected neighborhood are modeled by an Ising model to obtain a MAP-MRF framework. Maximizing the MAP-MRF objective requires graph-cut optimization or iterative approximations such as iterated conditional modes, simulated annealing or mean field. This results in a higher computational complexity, which does not allow real-time operation. The spatial likelihood model of SI, in contrast, models pixel dependencies in textured areas of the image while keeping the computational complexity low.

IV. PROPOSED APPROACH

SI has three undesired properties: 1) the spatial likelihood model requires training on manually segmented ground truth data, 2) it does not operate in real time, and 3) it cannot cope with local illumination effects. In this section:

A. We modify the spatial likelihood model to remove the need for manually segmenting video data.

B. We reduce the complexity of SI to allow real-time operation.

C. We propose a post-processing filter that improves the spatio-temporal consistency of the foreground mask.

D. We combine EB with the modified SI to obtain the proposed algorithm. It is more accurate than EB and SI and more robust to rapid local illumination changes.

A. Modification of the Spatial Likelihood Model

Fig. 2. Improvement of segmentation with the spatial likelihood model. From left to right: segmentation with spatial likelihood model, segmentation without spatial likelihood model, and input frame.

The spatial likelihood model significantly improves the segmentation results because it captures interpixel dependencies and removes false positives, as illustrated in Fig. 2. However, training it requires manually segmenting video data into background and foreground. This is a tedious and time-consuming task, especially because a large training set is needed to cover many possible illumination effects. Furthermore, the histograms do not remain valid if drastic changes in lighting conditions occur. Here we propose to calculate the spatial likelihood model directly from the input frames by reliably detecting background and foreground pixels. For each input frame, the background and foreground histograms are then updated with the histogram features of the reliable background and foreground pixels, respectively.
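The per-frame histogram update could look like the sketch below. The text only states that the histograms are updated with the features of the reliable pixels, so the following are our assumptions:

- The features f_i^1 in [-1, 1] and f_i^2 in [0, f2_max] are quantized into a fixed 2-D grid.
- Boolean masks of reliable background or reliable foreground pixels are given.
- The class name `FeatureHistogram`, the bin counts, `f2_max`, the exponential forgetting factor `rate` and the uniform initialization are illustrative choices.

```python
import numpy as np

class FeatureHistogram:
    """Adaptive 2-D histogram over (f1, f2), usable as h_BG or h_FG (sketch with assumed binning)."""

    def __init__(self, bins=(16, 16), f2_max=100.0, rate=0.1):
        self.edges1 = np.linspace(-1.0, 1.0, bins[0] + 1)       # NCC range
        self.edges2 = np.linspace(0.0, f2_max, bins[1] + 1)     # texture range (assumed cap)
        self.prob = np.full(bins, 1.0 / (bins[0] * bins[1]))    # uniform start: no empty bins
        self.f2_max = f2_max
        self.rate = rate                                         # forgetting factor (assumed)

    def update(self, f1, f2, mask):
        """Blend in the normalized feature histogram of the pixels selected by mask."""
        if not mask.any():
            return
        a = np.clip(f1[mask], -1.0, 1.0)
        b = np.clip(f2[mask], 0.0, self.f2_max)
        new, _, _ = np.histogram2d(a, b, bins=(self.edges1, self.edges2))
        self.prob = (1.0 - self.rate) * self.prob + self.rate * new / new.sum()

    def likelihood(self, f1, f2):
        """Bin probability h(f_i) looked up for every pixel."""
        i = np.clip(np.digitize(f1, self.edges1) - 1, 0, len(self.edges1) - 2)
        j = np.clip(np.digitize(f2, self.edges2) - 1, 0, len(self.edges2) - 2)
        return self.prob[i, j]
```

The histograms are blended rather than replaced. This lets them follow gradual changes while a single frame with few reliable pixels cannot wipe out the model.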

In [17], a template matching method that is robust to sudden illumination changes is proposed. It measures the amount of order preservation between an image template and a window surrounding a pixel in the input image. The ordering of pixels in textured image areas is preserved even in the presence of heavy photometric distortions [10]. This measure is therefore very robust for detecting reliable background pixels in our application.

The order preservation can be determined from the signs of the pixel differences in a neighborhood. If the sign of the difference between two pixels does not change in the presence of lighting changes, their order is preserved. To evaluate the degree of order preservation for pixel $i$, the NCC of the image differences between pixels in a window around $i$ is calculated between the input frame and the background model frame. The NCC returns a value in the range $[-1, 1]$, and values close to 1 indicate strong order preservation. Because of the normalization in the denominator of Eq. 9, the value is independent of the amplitude of the differences between the input frame and the template.

We modify the method in [17] to compare the order of pixels in the input frame with that of the corresponding pixels in the background frame instead of a template. We denote the intensity of a pixel by $F(x_i, y_i)$, where $x_i$ and $y_i$ are the horizontal and vertical image positions of pixel $i$, respectively. $F_{IN}$ and $F_{BG}$ denote the intensities in the input frame and the background model frame. For each pixel $i$, the vectors of image differences $\Delta_{IN}(x_i, y_i)$ and $\Delta_{BG}(x_i, y_i)$ are calculated by

$$
\Delta_{IN}(x_i, y_i) = \begin{bmatrix} F_{IN}(x_i - 1, y_i - 1) - F_{IN}(x_i, y_i) \\ F_{IN}(x_i, y_i - 1) - F_{IN}(x_i, y_i) \\ F_{IN}(x_i + 1, y_i - 1) - F_{IN}(x_i, y_i) \\ F_{IN}(x_i + 1, y_i) - F_{IN}(x_i, y_i) \end{bmatrix}, \qquad
\Delta_{BG}(x_i, y_i) = \begin{bmatrix} F_{BG}(x_i - 1, y_i - 1) - F_{BG}(x_i, y_i) \\ F_{BG}(x_i, y_i - 1) - F_{BG}(x_i, y_i) \\ F_{BG}(x_i + 1, y_i - 1) - F_{BG}(x_i, y_i) \\ F_{BG}(x_i + 1, y_i) - F_{BG}(x_i, y_i) \end{bmatrix}. \tag{8}
$$

The matching function that measures the amount of order preservation of pixel $i$ is then given by

$$MF(x_i, y_i) = \frac{\sum_{(x_j, y_j) \in w_i} \Delta_{BG}(x_j, y_j)^T \Delta_{IN}(x_j, y_j)}{\sqrt{\sum_{(x_j, y_j) \in w_i} \Delta_{BG}(x_j, y_j)^T \Delta_{BG}(x_j, y_j) \; \sum_{(x_j, y_j) \in w_i} \Delta_{IN}(x_j, y_j)^T \Delta_{IN}(x_j, y_j)}}, \tag{9}$$

where $w_i$ is a rectangular window around pixel $i$. As Eq. 8 shows, each pixel has its own unique set of intensity differences. This prevents the intensity difference between the same two pixels from occurring multiple times in the calculation of $MF(x_i, y_i)$.

The matching function of Eq. 9 effectively matches high-contrast regions of the two blocks under comparison, since only large intensity differences contribute significantly to the matching score. High-contrast regions appear near edges and in textured image regions. Uniform or clipped regions in the input frame have no contrast and are therefore unreliable, so they will not be detected as background pixels. Pixels are considered reliable background if their matching score is larger than a threshold.

Fig. 3 shows the reliable background pixels detected after a sudden illumination change. Generally, during illumination changes without clipping, all background pixels in textured regions and near edges are detected as reliable background, while the number of false positives is very low.
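The reliable-background test can be sketched as follows. The sketch makes the following assumptions:

- Frames are grayscale.
- Neighbor differences use the four offsets listed in the hypothetical constant `OFFSETS`. Any set that never contains both a displacement and its opposite uses each pixel pair once, as required above.
- Image borders are handled by edge replication.
- The window radius `r` and the threshold of 0.8 are illustrative.

Window sums use summed-area tables, so the cost does not depend on the window size.

```python
import numpy as np

# Assumed half-neighborhood (dx, dy): it never contains both d and -d,
# so the difference between any two pixels is used only once.
OFFSETS = [(-1, -1), (0, -1), (1, -1), (1, 0)]

def differences(F):
    """Neighbor-minus-center differences for every pixel, shape (4, H, W); borders replicate edges."""
    h, w = F.shape
    P = np.pad(F.astype(float), 1, mode="edge")
    center = P[1:h + 1, 1:w + 1]
    return np.stack([P[1 + dy:h + 1 + dy, 1 + dx:w + 1 + dx] - center for dx, dy in OFFSETS])

def window_sum(img, r):
    """Sum over the (2r+1)x(2r+1) window around each pixel via a summed-area table."""
    h, w = img.shape
    ii = np.pad(img, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    y0, y1 = np.clip(np.arange(h) - r, 0, h), np.clip(np.arange(h) + r + 1, 0, h)
    x0, x1 = np.clip(np.arange(w) - r, 0, w), np.clip(np.arange(w) + r + 1, 0, w)
    return ii[y1][:, x1] - ii[y0][:, x1] - ii[y1][:, x0] + ii[y0][:, x0]

def matching_function(frame, background, r=3, eps=1e-9):
    """Normalized correlation of the difference vectors over the window around each pixel."""
    d_in, d_bg = differences(frame), differences(background)
    num = window_sum((d_bg * d_in).sum(axis=0), r)
    den = np.sqrt(np.maximum(window_sum((d_bg ** 2).sum(axis=0), r)
                             * window_sum((d_in ** 2).sum(axis=0), r), 0.0))
    return num / (den + eps)      # uniform or clipped windows give values near 0

def reliable_background(frame, background, r=3, threshold=0.8):
    """Pixels whose matching score exceeds the (assumed) threshold are reliable background."""
    return matching_function(frame, background, r) > threshold
```

A global gain and offset change such as `0.5 * background + 20` scales every difference vector by the same factor. The score is then 1 everywhere. A flat, clipped frame scores 0, so no pixel is marked reliable.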

Since Eq. 9, like Eq. 4 and 5, represents an NCC, we implemented it efficiently with integral image tables. With integral image tables, the sum of the pixels in a window can be calculated with only 3 additions and 4 table lookups. The numerator of Eq. 9, for example, can then be calculated with only 4 multiplications, 20 additions and 16 table lookups. For an $M \times M$ window, computing it without integral images requires $4M^2$ multiplications, $20M^2$ additions and $8M^2$ table lookups. The complexity with integral images is thus independent of the window size M. The same holds for the products in the denominator.

Foreground pixels of moving objects are detected by a 3-DRS motion estimator [18]. 3-DRS is a fast and efficient motion estimation algorithm that applies recursive search block matching. Here we apply it to generate motion vectors for each pixel in the input image. The sum of absolute differences (SAD) is used as the criterion to select the most likely candidate vector for each block of pixels in the current frame. All blocks for which the magnitude of the motion vector is larger than a threshold are considered foreground. To remove noisy isolated motion vectors and motion vectors that extend beyond object boundaries, a block filter is applied repeatedly. This filter removes all motion vectors that have more than 2 neighbors with zero motion vectors. Experiments indicate that most false positive motion vectors are removed by repeating the block filter 3 times. This procedure removes some non-noisy motion vectors as well. This is not a problem, because the spatial likelihood model can still be updated with the remaining motion vectors to obtain an accurate foreground histogram. To eliminate the block structures and obtain a smoother motion vector field, we apply block erosion [18].
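The motion-vector post-processing described above can be sketched as follows. This is a minimal sketch under assumptions the text does not specify: vectors are given per block as an (H, W, 2) array, "neighbors" means the 4-connected neighbors, blocks outside the frame count as having zero motion, and each pass updates all blocks simultaneously. Block erosion [18] is not included, and the function names are hypothetical.

```python
import numpy as np

def block_filter(vectors, max_zero_neighbours=2, passes=3):
    """Repeatedly zero out motion vectors that have more than
    `max_zero_neighbours` zero-motion neighbours (4-connected, assumed)."""
    v = vectors.copy()
    for _ in range(passes):
        zero = np.all(v == 0, axis=2)
        # Pad with True: blocks outside the frame are treated as zero motion.
        p = np.pad(zero, 1, constant_values=True)
        count = (p[:-2, 1:-1].astype(int) + p[2:, 1:-1]
                 + p[1:-1, :-2] + p[1:-1, 2:])
        remove = ~zero & (count > max_zero_neighbours)
        v[remove] = 0  # clears both vector components of the removed blocks
    return v

def motion_foreground(vectors, threshold=1.0):
    """Blocks whose motion-vector magnitude exceeds the threshold are foreground."""
    magnitude = np.linalg.norm(vectors.astype(float), axis=2)
    return magnitude > threshold
```

With a 4-connected neighbourhood, blocks on the straight edges and convex corners of a compact object keep at most 2 zero neighbours and survive, while an isolated vector has 4 and is removed.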

Fig. 3. Reliable background pixel detection under rapidly changing illumination conditions. The green pixels indicate the reliably detected background.

3-DRS cannot cope with rapid illumination changes because they are treated as motion: motion vectors are undesirably assigned to the pixels that undergo an illumination change. Furthermore, 3-DRS suffers from ghosting effects, which are caused by motion vectors of moving objects that extend into uniform background areas. Therefore, we do not use the results of the motion estimator to update the foreground histogram if the percentage of reliable foreground pixels that overlap the reliable background pixels is larger than 5%. If the number of detected foreground pixels is smaller than 0.5% of the total number of pixels in the input frame, we do not update the foreground histogram either, since in that case only a small fraction of the foreground object is detected. This prevents the foreground histogram from being updated with unreliable foreground pixels detected by 3-DRS. The thresholds of 5% and 0.5% were determined empirically.
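The two gating rules above can be expressed as a small predicate. This sketch assumes boolean masks for the 3-DRS foreground and for the reliable background, and interprets the 5% overlap as a fraction of the detected foreground pixels; the function name is hypothetical.

```python
import numpy as np

def should_update_fg_histogram(fg_mask, reliable_bg_mask,
                               max_overlap=0.05, min_fraction=0.005):
    """Return True if the 3-DRS foreground may be used to update h_FG."""
    n_fg = int(fg_mask.sum())
    # Too few detected pixels: only a small part of the object was found.
    # (This also avoids dividing by zero below.)
    if n_fg < min_fraction * fg_mask.size:
        return False
    # Fraction of detected foreground that collides with reliable background,
    # e.g. caused by illumination changes or ghosting.
    overlap = np.logical_and(fg_mask, reliable_bg_mask).sum() / n_fg
    return bool(overlap <= max_overlap)
```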

After the reliable background and foreground pixels have been determined, the background and foreground histograms of the current frame, $h^*_{BG}$ and $h^*_{FG}$, are calculated. The bins $b_l$ of the spatial likelihood histograms $h_{BG}$ and $h_{FG}$ are then updated according to

$$h_{BG}(b_l) = (1-\alpha_{BG})\,h_{BG}(b_l) + \alpha_{BG}\,h^*_{BG}(b_l), \quad \text{and}$$

$$h_{FG}(b_l) = (1-\alpha_{FG})\,h_{FG}(b_l) + \alpha_{FG}\,h^*_{FG}(b_l), \qquad (10)$$

where $\alpha_{BG}$ and $\alpha_{FG}$ are the learning rate parameters of the background and foreground, respectively. They determine the update speed of the histograms. Initially, $h_{BG}$ and $h_{FG}$ are uniform. If a foreground object is motionless, $h_{FG}$ remains uniform until the object starts moving.
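The histogram update of Eq. 10 is an exponential moving average per bin. The sketch below assumes that the per-pixel bin indices $b_l$ of the reliable pixels have already been computed (their definition precedes this excerpt and is not reproduced) and writes the learning rate as `alpha`; the function names are hypothetical.

```python
import numpy as np

def frame_histogram(bin_indices, n_bins):
    """Normalized histogram h* of the current frame from per-pixel bin indices.
    Returns None when no reliable pixels are available."""
    counts = np.bincount(np.asarray(bin_indices, dtype=int),
                         minlength=n_bins).astype(float)
    total = counts.sum()
    return counts / total if total > 0 else None

def update_histogram(h, h_frame, alpha):
    """Eq. 10: h(b_l) <- (1 - alpha) h(b_l) + alpha h*(b_l).
    The histogram is left unchanged when the frame provides no data."""
    if h_frame is None:
        return h
    return (1.0 - alpha) * h + alpha * h_frame
```

Skipping the update when no reliable pixels exist (rather than blending in a uniform histogram) keeps $h_{FG}$ at its previous value, which is how a motionless object leaves an initially uniform $h_{FG}$ uniform.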

B. Complexity Reduction

The algorithm in [5] does not run in real time at a standard resolution of 640x480 pixels. We therefore reduce its complexity by first downscaling the frame, then applying SI to the downscaled frame, and finally upscaling the segmentation result. There is a trade-off between speed and segmentation accuracy: stronger downscaling causes a larger loss in segmentation accuracy because edges and small details are lost, but it speeds up the segmentation. Since the foreground mask is further processed by other algorithms for information extraction, it is sufficient for our application to indicate the location and size of foreground objects. In our implementation, we therefore downscale by a factor of 5 to allow real-time operation. Furthermore, downscaling improves the signal-to-noise ratio (SNR) of the image, which makes SI more robust to noise.
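The paper does not state which resampling filters are used. The sketch below assumes 5×5 box averaging for downscaling and nearest-neighbour replication for upscaling the mask; the segmentation step in between is left out, and the function names are hypothetical. Averaging 25 pixels with independent noise reduces the noise standard deviation by a factor of 5, which is one way to see the SNR gain mentioned above.

```python
import numpy as np

def downscale_box(img, f=5):
    """Average non-overlapping f x f blocks (grayscale or multi-channel)."""
    H, W = img.shape[:2]
    Hc, Wc = H - H % f, W - W % f  # crop to a multiple of f
    blocks = img[:Hc, :Wc].reshape(Hc // f, f, Wc // f, f, *img.shape[2:])
    return blocks.mean(axis=(1, 3))

def upscale_nearest(mask, f=5):
    """Replicate each low-resolution mask pixel into an f x f block."""
    return np.repeat(np.repeat(mask, f, axis=0), f, axis=1)
```

For a 640x480 frame, this yields a 128x96 image for SI, and the resulting mask is replicated back to 640x480.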

C. Post processing

To improve the performance of SI, a median filter can be applied to the segmented frame before upscaling. Fig. 4 shows that this filter improves the segmentation results by removing false positive detections in the background and false negative detections in foreground objects, which appear as random noise in the segmented image.

Fig. 4. Left: segmentation without post processing. Middle: segmentation with post processing. Right: input frame.

Fig. 5. Left: segmentation with SI. Middle: Input frame. Right: background model frame.

However, this filter

does not remove temporal inconsistencies from the foreground mask. Temporal inconsistencies manifest themselves as flickering, or as the sudden appearance or disappearance of a group of foreground pixels in the foreground mask. To combat temporal inconsistencies and obtain a smooth foreground mask, we propose the recursive spatio-temporal filter described by

$$F_o(\mathbf{r},n)=\begin{cases}1, & F^*(\mathbf{r},n)>thd,\\ 0, & \text{otherwise},\end{cases}$$

where $\mathbf{r}=[x,y]^T$ and, $\forall\,\mathbf{r}\in I_n$,

$$\begin{aligned}F^*(\mathbf{r},n)={}&(1-\lambda)\Big(\sum_{i=-1}^{1}h(i,-1)\,F_o(x+i,y-1,n)+h(-1,0)\,F_o(x-1,y,n)\\&+h(0,0)\,F(x,y,n)+h(1,0)\,F(x+1,y,n)+\sum_{i=-1}^{1}h(i,1)\,F(x+i,y+1,n)\Big)\\&+\lambda\sum_{i=-1}^{1}\sum_{j=-1}^{1}h(i,j)\,F_o(x+i,y+j,n-1),\end{aligned}\qquad(11)$$

where $thd$ is a threshold value in the range [0,1], $F_o(\mathbf{r},n)\in\{0,1\}$ is the output foreground mask at location $\mathbf{r}$ for frame $n$, $I_n$ is the set of all pixel locations in frame $n$, $F\in[0,1]$ denotes the input foreground mask, $h$ is a 3 by 3 filter kernel with normalized weights, and $\lambda\in[0,1]$ is a constant that weighs the contribution of the pixels in the foreground mask of the previous frame $n-1$. The filter is first applied from top left to bottom right. To remove temporal inconsistencies in the opposite direction as well, it is then applied again from bottom right to top left. Eq. 11 shows that both spatial and temporal recursiveness are embedded in our filter, because the filter uses all available output pixels in its aperture. In this way, flickering and holes in foreground objects are removed from the foreground mask. This is demonstrated in section V, where the proposed filter is analyzed.
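The following Python sketch implements one reading of Eq. 11: during a raster scan, already-visited neighbours contribute their output value $F_o$, the current and not-yet-visited neighbours contribute the input $F$, and the full 3×3 neighbourhood of the previous output mask is weighted by λ. Several details are assumptions, not taken from the paper: pixels outside the frame contribute zero, the backward pass takes the binary output of the forward pass as its input, and the kernel is stored as `h[j + 1, i + 1]` (row offset j along y). The function names are hypothetical.

```python
import numpy as np

def _raster_pass(F, F_prev, h, lam, thd):
    """One top-left -> bottom-right pass of the (reconstructed) Eq. 11.

    F      : input foreground mask of frame n, values in [0, 1]
    F_prev : binary output mask F_o of frame n-1
    h      : 3x3 kernel with normalized weights, stored as h[j + 1, i + 1]
    """
    H, W = F.shape
    Fo = np.zeros((H, W), dtype=np.uint8)
    for y in range(H):
        for x in range(W):
            spatial = 0.0
            temporal = 0.0
            for j in (-1, 0, 1):
                for i in (-1, 0, 1):
                    yy, xx = y + j, x + i
                    if not (0 <= yy < H and 0 <= xx < W):
                        continue  # pixels outside the frame contribute zero
                    w = h[j + 1, i + 1]
                    # Already-visited neighbours use the output mask F_o;
                    # the current and not-yet-visited ones use the input F.
                    visited = j < 0 or (j == 0 and i < 0)
                    spatial += w * (Fo[yy, xx] if visited else F[yy, xx])
                    temporal += w * F_prev[yy, xx]
            f_star = (1.0 - lam) * spatial + lam * temporal
            Fo[y, x] = 1 if f_star > thd else 0
    return Fo

def recursive_st_filter(F, F_prev, h, lam=0.4, thd=0.42):
    """Forward pass, then a backward (bottom-right -> top-left) pass."""
    fwd = _raster_pass(F, F_prev, h, lam, thd)
    # Flipping both axes turns the backward scan into a forward raster scan;
    # the kernel is flipped accordingly so each weight stays with its neighbour.
    bwd = _raster_pass(fwd[::-1, ::-1].astype(float), F_prev[::-1, ::-1],
                       h[::-1, ::-1], lam, thd)
    return bwd[::-1, ::-1]
```

With a uniform kernel, $\lambda = 0.4$ and $thd = 0.42$ (the values used in Section V), an isolated foreground pixel with no support in the previous mask is suppressed, while a one-pixel hole inside an object that was foreground in the previous frame is filled.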

D. Proposed Method

SI can cope well with sudden global uniform illumination changes. However, it completely fails when the appearance of the background scene changes significantly due to cast shadows, highlights and clipping. This is illustrated in Fig. 5, which shows an input image whose background is affected by local illumination effects. Ideally, the ratio between the input and background image pixels equals one of the constant illumination ratios in SI. Fig. 5 shows that in the presence of different local lighting conditions this does not hold, and that the pixels in the background have many different illumination ratios. SI cannot model this.

As mentioned in section II, the background reconstruction method of EB can reconstruct all local and global illumination effects that are present in the training set. It exploits multiple background frames in which valuable information about lighting conditions is stored. By combining the modified SI, which only uses one background frame, with EB, we obtain a segmentation method that is robust to heavy photometric changes under different lighting conditions. For each input frame, we let EB reconstruct the background model image separately in each of the three RGB channels. The reconstructed background image is then used in SI. This makes the ratios between the background model frame and the background areas of the input image more constant, which improves the performance of SI because the background pixel ratios fit the background model better. A block diagram of the resulting algorithm is shown in Fig. 6.
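A minimal Eigenbackground reconstruction in the spirit of [4] can be sketched as PCA on the vectorised training frames of one channel, followed by projection of each input frame onto the first k Eigen vectors (k = 10 in Section V). Mean-centring and SVD-based PCA are assumptions: the paper only states that Eigen vectors of the training set are used. The function names are hypothetical.

```python
import numpy as np

def train_eigenbackground(frames, k=10):
    """frames: (N, H, W) training images of one colour channel."""
    X = frames.reshape(len(frames), -1).astype(np.float64)
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions (Eigen vectors) of the centred data.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]

def reconstruct_background(frame, mean, U):
    """Project a frame onto the Eigen space and map it back to image space."""
    x = frame.reshape(-1).astype(np.float64) - mean
    coeffs = U @ x  # coordinates in the k-dimensional Eigen space
    return (mean + U.T @ coeffs).reshape(frame.shape)

def reconstruct_rgb(frame_rgb, models):
    """models: one (mean, U) pair per RGB channel, reconstructed independently."""
    return np.stack([reconstruct_background(frame_rgb[..., c], *models[c])
                     for c in range(3)], axis=-1)
```

The reconstructed image would then serve as the background model frame from which SI computes its pixel ratios.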

The advantage of combining EB with SI is that it allows for more flexibility in classifying a pixel than the pixel-wise differencing and thresholding of EB. Instead of pixel-wise differencing, the proposed method models the background pixel ratios with Gaussian distributions whose parameters are automatically adjusted by the EM algorithm according to the maximum likelihood criterion. Furthermore, SI models the spatial relations among pixels with the spatial likelihood model. The EM algorithm automatically updates the GMM parameters so that they converge to an optimum (in general a local one) of the likelihood function in Eq. 7. This allows for better adaptation to the frame content than the thresholding in [4]. Using EB also improves the reliable background pixel detection. The proposed method thus effectively combines the flexibility and EM parameter updates of SI with the background reconstruction capabilities of EB. The experiments of section V indicate that the proposed method gives better segmentation results than EB or SI individually.
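To illustrate the kind of EM parameter update referred to above, here is a generic EM step for a one-dimensional Gaussian mixture, applied to pixel ratios. It is not Eq. 7: the SI likelihood additionally includes foreground components and the spatial likelihood histograms, which are omitted here. Warm-starting from the previous frame's parameters with a small fixed number of iterations (2 per frame in Section V) is the assumed usage, and the function name is hypothetical.

```python
import numpy as np

def em_step(x, pi, mu, var):
    """One EM iteration for a 1-D Gaussian mixture.

    x: (N,) samples, e.g. ratios input / reconstructed background.
    pi, mu, var: (K,) mixture weights, means and variances.
    """
    xc = x[:, None]
    # E-step: responsibilities r[n, k] proportional to pi_k * N(x_n | mu_k, var_k).
    log_p = (np.log(pi) - 0.5 * np.log(2.0 * np.pi * var)
             - 0.5 * (xc - mu) ** 2 / var)
    log_p -= log_p.max(axis=1, keepdims=True)  # avoid underflow before exp
    r = np.exp(log_p)
    r /= r.sum(axis=1, keepdims=True)
    # M-step: maximum likelihood updates of weights, means and variances.
    nk = r.sum(axis=0)
    pi_new = nk / x.size
    mu_new = (r * xc).sum(axis=0) / nk
    var_new = (r * (xc - mu_new) ** 2).sum(axis=0) / nk + 1e-6  # variance floor
    return pi_new, mu_new, var_new
```

Each call can only increase (or keep) the data likelihood, so a few warm-started iterations per frame let the mixture track gradual changes in the ratio distribution.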

V. EXPERIMENTAL RESULTS

In this section, the performance of TA, SI, EB with fixed and dynamic thresholds, and the proposed method is compared qualitatively and quantitatively in terms of precision and recall. The ABMM of [20] is used as a reference. This method improves on the pixel-based method described in [7] and [8], because it learns faster and adapts more accurately to changing environments. Furthermore, the effectiveness of the modified spatial likelihood model and of the proposed recursive spatio-temporal post-processing filter is demonstrated.

No benchmark sequences are available to measure the

performance of background subtraction algorithms under

rapidly changing illumination conditions. Therefore, we recorded a very challenging test and training sequence with a Logitech webcam. The sequence shows a room with a person, in which rapidly varying illumination conditions are created by randomly switching on different combinations of light sources and by opening and closing shades that block daylight from entering the room.

A. Parameter Settings

We empirically choose the image differencing threshold of the TA method to give the best quantitative segmentation results for the processed sequence. The fixed image differencing threshold for EB is 20% of the largest difference between the input frame and the reconstructed background model frame. The parameters for the dynamic threshold are optimized empirically to obtain the best segmentation results. For EB, SI and the proposed method, we use a downscale factor of 5. We construct an Eigen space model separately for each RGB channel from a training set of 500 frames that captures a diverse range of possible lighting conditions. For each input frame, 10 Eigen vectors are used to reconstruct the background image. Following [5], we use 2 background and 2 foreground components in SI. Experiments with the number of background and foreground components in the proposed method indicate that 1 background and 2 foreground components suffice. The number of EM iterations per frame is determined empirically: we do not observe any noticeable improvement in segmentation beyond two EM iterations per frame. Therefore, we use 2 EM iterations per frame for SI and the proposed method. We empirically choose $\alpha_{BG}$ and $\alpha_{FG}$ as 0.2 and 0.4, respectively.

A median filter is applied as a post-processing step to each foreground mask obtained by the five algorithms. The window size of the median filter is adapted for each method to obtain the best segmentation results in terms of precision and recall. This leads to a window size of 5 by 5 for the proposed method, EB, SI and ABMM, and a window size of 9 by 9 for TA.
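Two of the settings above can be sketched for single-channel frames: EB's fixed threshold at 20% of the largest absolute difference, followed by a 5×5 median filter on the binary mask. Edge-replicated borders and the restriction to odd window sizes are assumptions of this sketch, and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def eb_fixed_threshold(frame, background, fraction=0.2):
    """Foreground where |frame - background| exceeds `fraction` of its maximum."""
    diff = np.abs(frame.astype(float) - background.astype(float))
    return (diff > fraction * diff.max()).astype(np.uint8)

def median_filter_mask(mask, size=5):
    """Median filter of a binary mask with an odd size x size window."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    pad = size // 2
    padded = np.pad(mask, pad, mode="edge")  # replicate border pixels
    windows = sliding_window_view(padded, (size, size))  # (H, W, size, size)
    return (np.median(windows, axis=(-2, -1)) > 0.5).astype(np.uint8)
```

On a binary mask, a pixel survives the median filter only if more than half of its window is foreground, so isolated detections are removed while the interiors of larger blobs are kept.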

B. Analysis

We performed a quantitative analysis at pixel level on 750 frames of an indoor sequence in which illumination conditions change rapidly. The ground truth foreground mask for the quantitative comparison was obtained by manual segmentation of that sequence. The detection rates of the five algorithms are plotted in Fig. A2 in terms of precision and recall per frame, defined by

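$$\text{precision}=\frac{\#\ \text{of True Positives}}{\text{Total}\ \#\ \text{of Detected Positives}}\times 100\%, \quad \text{and}$$

$$\text{recall}=\frac{\#\ \text{of True Positives}}{\text{Total}\ \#\ \text{of Foreground Pixels}}\times 100\%.$$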

Here, precision is the percentage of detected foreground pixels that are true positives, while recall is the percentage of all ground truth foreground pixels that are detected. A recall of 100% can easily be achieved by detecting the whole frame as foreground; however, the precision will then be very low due to the large number of false positives. Conversely, if only 1 pixel is detected and that pixel correctly belongs to the foreground, a precision of 100% is achieved while the recall will be very low. Therefore, both precision and recall are needed for the evaluation.

Fig. 6. Block diagram of the proposed method.
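The two measures can be computed per frame from a detected mask and a ground truth mask. This is a minimal sketch assuming boolean (or 0/1) masks of equal shape; returning NaN when a denominator is zero is an arbitrary choice, and the function name is hypothetical.

```python
import numpy as np

def precision_recall(detected, ground_truth):
    """Per-frame precision and recall in percent."""
    det = np.asarray(detected, dtype=bool)
    gt = np.asarray(ground_truth, dtype=bool)
    tp = np.logical_and(det, gt).sum()  # true positives
    n_detected = det.sum()              # all detected positives
    n_foreground = gt.sum()             # all ground truth foreground pixels
    precision = 100.0 * tp / n_detected if n_detected else float("nan")
    recall = 100.0 * tp / n_foreground if n_foreground else float("nan")
    return float(precision), float(recall)
```

The two extreme cases from the text follow directly: marking the whole frame as foreground gives 100% recall but low precision, and detecting a single correct pixel gives 100% precision but low recall.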

From the plots of precision and recall in Fig. A2 and from Table II, it can be seen that the overall detection accuracy of the proposed method is significantly higher: it achieves the highest average precision and recall. With a standard deviation of only 5.8%, the precision of the proposed method also stays relatively constant compared to the other methods. Together with the high average precision, this means that significantly fewer false positives occur in the foreground mask of our method.

For some intervals in Fig. A2, the recall of TA is slightly higher, which indicates that TA detects more foreground pixels in those intervals. However, the corresponding precision values of our method are still significantly higher. The implication of this can be seen in Fig. A3, where snapshots of the foreground masks of every method are compared. In frames 437 and 747 processed by TA, the recall is higher because more foreground pixels are detected; however, the precision is clearly lower because many of the detected positives belong to the background. For frames 600 to 640, ABMM achieves a higher precision than our method, but its recall is significantly worse. Frame 620 of Fig. A3 illustrates this well: with ABMM, 98% of the positives in the foreground mask are foreground pixels, versus 85% for our method. However, our method detects 70% of all foreground pixels, while ABMM detects only 30%.

From Fig. A2 it can clearly be seen that SI and TA do not work well on our sequence. This is because both methods use only a single background model image to model the background. Therefore, local illumination effects cannot be modeled, and they appear as misdetections in the foreground mask. Frames 202, 253, 592 and 747 in Fig. A3 illustrate this. In frames where the background model image corresponds well to the background scene of the input frame, and where the illumination change only affects the scene globally, TA and SI both perform comparably to the proposed method.

Similar to the proposed method, EB can reconstruct local illumination effects; therefore, local illumination changes are not detected as foreground. This ability to reconstruct local illumination effects is reflected in the precision: EB with dynamic threshold detects about as many foreground pixels as TA, but its precision is 12.6% higher.

[Fig. 7 plots: (a) Precision and (b) Recall versus Frame Number (0-800).]

Fig. 7. Quantitative comparison of applying the recursive spatio-temporal filter (blue line) versus a median filter (red line) on the foreground mask yielded by our proposed method. The optimal window size of the median filter was found to be 5 by 5.

ABMM completely fails when sudden illumination changes occur. Due to the pixel-based nature of ABMM, pixels that undergo a significant change in intensity are considered foreground, since they do not match any background distribution in their GMM. Frames 14, 25 and 437 illustrate this. Furthermore, in ABMM the GMM of each pixel is updated progressively over time. Therefore, pixels of objects that do not move for a number of consecutive frames become part of a background distribution in their GMM, causing them to disappear into the background in the foreground mask.

In Fig. A2(c)-(d) and Table II, the proposed method and EB with fixed and dynamic thresholds are compared. Dynamic instead of fixed thresholding increases the recall of EB from 29.2% to 45.4%; however, its precision drops from 75.8% to 69.6%. Even if dynamic thresholding is applied, the proposed method still outperforms EB.

Table II shows the contribution of the spatial likelihood model in the proposed method. Applying the spatial likelihood model increases the precision by about 6%, while the recall is hardly affected.

For the tested sequence, the proposed method on average detects 57.3% of the foreground pixels with a precision higher than 90%. The quantitative (Fig. A2) and qualitative (Fig. A3) comparisons in this section confirm that the proposed method outperforms the other methods on the tested sequence, with a higher recall and a significantly higher precision. The proposed method thus detects more foreground pixels, with higher accuracy and fewer misdetections, for the tested sequence.

C. Analysis of the Recursive Spatio-Temporal Filter

Here the recursive spatio-temporal filter of Eq. 11 is analyzed and compared with a median filter, both quantitatively and qualitatively. We use $\lambda = 0.4$ and $thd = 0.42$. Fig. 7 plots the precision and recall of the foreground mask of the proposed method, post-processed either with a median filter or with the proposed filter. The average precision and recall are reported in Table II. The window size of the median filter was 5 by 5.

From Table II it can be observed that, compared to median filtering, the recall increases by 5% on average while the precision stays approximately the same. Fig. 7 clearly shows that the recall is higher when our filter is applied. For example, from frame 100 to frame 230 the recall of the median-filtered sequence drops, while the recall of the sequence filtered with the proposed filter even increases slightly. The precision is not affected at all. The spatio-temporal recursiveness of our filter counteracts sudden transitions and therefore yields a spatially and temporally smooth foreground mask, as shown in Fig. A4. If a new foreground object suddenly emerges, the filter needs about 3 frames to converge before the object is fully detected.

VI. CONCLUSIONS AND FUTURE WORK

This paper proposed a fast method for the task of

background subtraction under rapidly changing illumination

conditions. This method is a combination of Eigenbackground

(EB) [4] and the modified statistical illumination model (SI).

SI models the background and foreground distributions with Gaussian mixture model components. Maximum likelihood parameter estimates are obtained by the expectation maximization (EM) algorithm. However, SI cannot deal with local illumination effects such as clipping, highlights and shadows. EB can reconstruct these effects very well, but its fixed or dynamic pixel-based thresholding does not make any assumptions about the foreground distribution when obtaining the foreground mask, resulting in degraded performance. By combining both methods, we benefit from the modeling capabilities and EM parameter updates of SI and the background reconstruction capabilities of EB.

The spatial likelihood model is modified to remove the need for training it on ground truth data, by allowing an online update of the spatial likelihood model in every frame. The modified spatial likelihood model improves the precision of the proposed method by 6% on the tested sequence. Furthermore, we proposed a recursive spatio-temporal filter to obtain a spatially and temporally smooth foreground mask. This post-processing filter improves the recall of our method by 5%.

TABLE II

COMPARISON OF THE AVERAGE AND STANDARD DEVIATION OF THE PRECISION AND RECALL OF THE SEQUENCE USED IN FIG. A2.

| Method | Precision (%), mean ± std | Recall (%), mean ± std |
|---|---|---|
| Proposed (recursive filter) | 91.6 ± 4.7 | 57.3 ± 21.0 |
| Proposed (median filter) | 90.5 ± 5.8 | 52.3 ± 19.4 |
| Proposed (recursive filter, no histograms) | 85.8 ± 8.7 | 59.7 ± 24.5 |
| Proposed (median filter, no histograms) | 85.8 ± 9.9 | 55.4 ± 23.1 |
| Eigenbackground (fixed threshold) [4] | 75.8 ± 16.6 | 29.2 ± 21.5 |
| Eigenbackground (dynamic threshold) [4] | 69.6 ± 21.1 | 45.4 ± 24.9 |
| Statistical Illumination [5] | 61.9 ± 26.0 | 20.6 ± 16.9 |
| Tonal Alignment [3] | 57.0 ± 17.0 | 44.0 ± 21.7 |
| ABMM [20] | 38.1 ± 31.5 | 31.8 ± 23.2 |

We compared the proposed method with a reference method and three state-of-the-art background subtraction methods that claim to be robust to sudden illumination changes. Because no benchmark sequences were available, we conducted the experiments on a challenging test sequence that contains sudden illumination changes over a broad range of lighting conditions. The results indicate that our method significantly outperforms these methods on the tested sequence. With the proposed post-processing filter, an average precision of 91.6% and an average recall of 57.3% are obtained. Furthermore, the precision of the proposed method is more constant, with a standard deviation of about 5%. With the complexity reduction, we achieved an average frame rate of 15 fps, which easily meets the real-time requirements for the application in ambient intelligence systems.

Although we made an honest attempt to test the algorithms on challenging and relevant video material, final conclusions regarding the superiority of the proposed method require generally accepted, and perhaps more diverse, benchmarks that are currently not available.

A. Future Work

The proposed method is very robust under rapidly changing illumination conditions, provided that the appearance of the background in the input frame can be reconstructed by a linear combination of the Eigen vectors obtained from the training set. However, like EB and SI, the proposed method cannot cope with moved background objects: such an object is detected as foreground indefinitely after the movement, since its new location does not correspond to its location in the background model image. Another problem is that for some applications it is complicated to obtain background model images under different illumination conditions. These problems can be tackled by extending the proposed method with an incremental Eigen space update in which each pixel is weighted before updating the Eigen space model [4]. In this way, new appearances of the background are integrated into the Eigen space model during runtime.

Input frames with large foreground objects cause degradation in the Eigenbackground reconstruction. These degradations appear as errors that are spread over the entire reconstructed background image, resulting in inaccurate segmentation [21]. This problem can be circumvented by extending the proposed method with recursive error compensation as described in [21].

The reliable foreground pixel detection in the spatial likelihood model can be improved by using normalized cross correlation (NCC) instead of the sum of absolute differences (SAD) as the block matching criterion in the 3-DRS motion estimator. Owing to the normalization in its denominator, NCC is less sensitive to the amplitude differences between the previous and current frame that are caused by illumination changes.
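A small illustration of this argument, assuming a pure gain change between two frames: the SAD between a block and its 1.5 times brighter copy is large, whereas NCC stays at 1. The NCC shown here has no mean removal, which makes it invariant to a positive gain but not to an additive offset; the function names are hypothetical.

```python
import numpy as np

def sad(a, b):
    """Sum of absolute differences between two blocks."""
    return float(np.abs(a.astype(float) - b.astype(float)).sum())

def ncc(a, b):
    """Normalized cross correlation (without mean removal) between two blocks."""
    a = a.astype(float).ravel()
    b = b.astype(float).ravel()
    den = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / den) if den > 0 else 0.0
```

Scaling one block by a factor g scales the numerator and the denominator of the NCC by the same g, so the score is unchanged, while the SAD grows with |g - 1|.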

ACKNOWLEDGEMENTS

The author would like to express his gratitude for the support provided by C. Shan and T. Gritti. Special thanks go to G. de Haan for his advice and for enabling me to complete my graduation project at Philips Research Laboratories.

REFERENCES

[1] E. Aarts, R. Harwig, and M. Schuurmans, "Ambient Intelligence," in The Invisible Future: The Seamless Integration of Technology into Everyday Life, P. J. Denning, Ed. New York: McGraw-Hill, 2002, pp. 235-250.

[2] K. Toyama, J. Krumm, B. Brumitt, and B. Meyers, "Wallflower: Principles and Practice of Background Maintenance," Proc. ICCV, vol. 1, pp. 255-261, Kerkyra, Greece, September 1999.

[3] L. Di Stefano, F. Tombari, and S. Mattoccia, "Robust and Accurate Change Detection under Sudden Illumination Variations," Proc. ACCV, vol. 1, pp. 103-109, Tokyo, November 2007.

[4] B. Han and R. Jain, "Real-Time Subspace-Based Background Modeling Using Multi-channel Data," Proc. ISVC, Part II, LNCS 4842, pp. 162-172, Lake Tahoe, USA, November 2007.

[5] J. Pilet, C. Strecha, and P. Fua, "Making Background Subtraction Robust to Sudden Illumination Changes," Proc. ECCV, Part IV, LNCS 5305, pp. 567-580, Marseille, France, October 2008.

[6] R. J. Radke, S. Andra, O. Al-Kofahi, and B. Roysam, "Image Change Detection Algorithms: A Systematic Survey," IEEE Transactions on Image Processing, vol. 14, pp. 294-307, 2005.

[7] N. Friedman and S. Russell, "Image Segmentation in Video Sequences: A Probabilistic Approach," Proc. UAI, pp. 175-181, Rhode Island, USA, August 1997.

[8] C. Stauffer and W. Grimson, "Learning Patterns of Activity Using Real-Time Tracking," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, pp. 747-757, August 2000.

[9] L. Li and M. K. H. Leung, "Integrating Intensity and Texture Differences for Robust Change Detection," IEEE Transactions on Image Processing, vol. 11, pp. 105-112, February 2002.

[10] B. Xie, V. Ramesh, and T. Boult, "Sudden Illumination Change Detection Using Order Consistency," Image and Vision Computing, vol. 22, pp. 117-125, 2004.

[11] X. Zhao, W. He, S. Luo, and L. Zhang, "MRF-Based Adaptive Approach for Foreground Segmentation under Sudden Illumination Change," Proc. ICICS, pp. 1-4, Singapore, December 2007.

[12] Y. Sheikh and M. Shah, "Bayesian Modeling of Dynamic Scenes for Object Detection," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, pp. 1778-1792, 2005.

[13] P. Noriega, B. Bascle, and O. Bernier, "Local Kernel Color Histograms for Background Subtraction," Proc. VISAPP, vol. 1, pp. 213-219, INSTICC Press, 2006.

[14] P. Noriega and O. Bernier, "Real Time Illumination Invariant Background Subtraction Using Local Kernel Histograms," Proc. BMVC, vol. 3, pp. 979-988, Edinburgh, September 2006.

[15] N. M. Oliver, B. Rosario, and A. P. Pentland, "A Bayesian Computer Vision System for Modeling Human Interactions," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, pp. 831-843, 2000.

[16] R. Gonzalez and R. Woods, Digital Image Processing, 2nd ed. Upper Saddle River, New Jersey: Prentice Hall, 2002, pp. 88-103.

[17] F. Tombari, L. Di Stefano, and S. Mattoccia, "A Robust Measure for Visual Correspondence," Proc. ICIAP, pp. 376-381, Modena, Italy, September 2007.

[18] G. de Haan, P. W. A. C. Biezen, H. Huijgen, and O. Ojo, "True Motion Estimation with 3-D Recursive Search Block Matching," IEEE Transactions on Circuits and Systems for Video Technology, vol. 3, pp. 368-388, 1993.

[19] D.-M. Tsai and C.-T. Lin, "Fast Normalized Cross Correlation for Defect Detection," Pattern Recognition Letters, vol. 24, pp. 2625-2631, 2003.

[20] P. Kaewtrakulpong and R. Bowden, "An Improved Adaptive Background Mixture Model for Real-time Tracking with Shadow Detection," Proc. AVBSS, Kingston upon Thames, UK, September 2001.

[21] Z. Xu, P. Shi, and I. Gu, "An Eigenbackground Subtraction Method Using Recursive Error Compensation," Proc. PCM, pp. 779-787, Hangzhou, China, September 2006.


APPENDIX

[Fig. A1: E-step and M-step update equations.]

Fig. A1. Update equations for maximizing the log likelihood of Eq. 7 by means of the EM algorithm. P is the number of pixels in a frame and T denotes the transpose of a vector.

[Fig. A2 plots: (a) Precision and (b) Recall per frame (frames 0-800) for Proposed, Eigenbackground [4], Statistical Illumination [5], Tonal Alignment [3] and ABMM [20]; (c) Precision and (d) Recall per frame for Proposed, Eigen Background Dynamic Threshold and Eigen Background Fixed Threshold.]

Fig. A2. (a)-(b) Quantitative comparison of the proposed method, EB [4], SI [5], TA [3] and ABMM [20] in terms of precision and recall per frame. (c)-(d) Quantitative comparison of the proposed method, EB with dynamic threshold and EB with fixed threshold [4].


[Fig. A3 image grid. Columns: Input, Ground-truth, Proposed, EB, SI, TA, ABMM. Rows: frames 14, 25, 202, 253, 380, 437, 592, 620, 644 and 747.]

Fig. A3. Qualitative comparison of the foreground masks yielded by the five methods. From left to right: Input frame, ground truth, our proposed method,

Eigenbackground [4], the Statistical Illumination Model [5], Tonal Alignment [3] and the Adaptive Background Mixture Model [20]. Snapshots of the

foreground masks of all five methods are depicted for some frames of the sequence used in the quantitative comparison of Fig. A2.

Fig. A4. Qualitative comparison of applying a median filter (top) versus the proposed recursive spatio-temporal filter (bottom) on frames 635-641.


- Street Strain CurveDocument2 pagesStreet Strain CurveAnamika YadavNo ratings yet

- Product, Process and Schedule Design II.: Chapter 2 of The Textbook Plan of The LectureDocument43 pagesProduct, Process and Schedule Design II.: Chapter 2 of The Textbook Plan of The LectureSiddhant KumarNo ratings yet

- Log Log PlotDocument4 pagesLog Log Plotmark_torreonNo ratings yet

- Orifice and Jet FlowDocument4 pagesOrifice and Jet FlowTiee TieeNo ratings yet

- Programme Des Cours STANFORD UNIVDocument4 pagesProgramme Des Cours STANFORD UNIVAbu FayedNo ratings yet

- Rman DuplicateDocument13 pagesRman DuplicategmasayNo ratings yet

- G Code WikiDocument19 pagesG Code WikiShaukat Ali ShahNo ratings yet

- Molecular Characterization of B-Cell Epitopes For The Major Fish AllergenDocument11 pagesMolecular Characterization of B-Cell Epitopes For The Major Fish AllergenFede JazzNo ratings yet

- ARCC Module 2 HandoutsDocument30 pagesARCC Module 2 HandoutsChelsieCabansagNo ratings yet

- 21 - M - SC - Computer Science &IT Syllabus (2017-18)Document33 pages21 - M - SC - Computer Science &IT Syllabus (2017-18)Kishore KumarNo ratings yet

- Developing Your Musicianship: Abdullah ShahzadDocument1 pageDeveloping Your Musicianship: Abdullah ShahzadAbdullah ShahzadNo ratings yet

- Data Management - Best PracticesDocument10 pagesData Management - Best PracticesPromoservicios CANo ratings yet

- Starch GelatinizationDocument1 pageStarch GelatinizationFaleh Setia BudiNo ratings yet

- Di Grooved Fittings - 90 ElBOWDocument3 pagesDi Grooved Fittings - 90 ElBOWDaniel Garces DavilaNo ratings yet

- RGH22 Series Readhead Data SheetDocument4 pagesRGH22 Series Readhead Data SheetRui QiaoNo ratings yet

- Capacitance and DielectricsDocument47 pagesCapacitance and DielectricsWael DoubalNo ratings yet

- Heater & Air Conditioning Control System: SectionDocument187 pagesHeater & Air Conditioning Control System: SectionВладиславГолышевNo ratings yet

- DNA Barcoding in TuberoseDocument7 pagesDNA Barcoding in Tuberosemarigolddus22No ratings yet

- User's Manual: DX100/DX200/DX200C/ MV100/MV200 DaqstandardDocument84 pagesUser's Manual: DX100/DX200/DX200C/ MV100/MV200 DaqstandardMilton ManjarresNo ratings yet

- Tugas 2Document5 pagesTugas 2Beard brother IndonesiaNo ratings yet

- Fluid Substitution With Partial SaturationDocument27 pagesFluid Substitution With Partial SaturationElias LiraNo ratings yet

- Test Pensamiento Crítico CornellDocument397 pagesTest Pensamiento Crítico CornellFernando GiraldoNo ratings yet