Digital Image Processing Full Report

Uploaded by Jomin Pjose

A Paper Presentation On DIGITAL IMAGE PROCESSING

Abstract: Over the past dozen years, forensic and medical applications of technology first developed to record and transmit pictures from outer space have changed the way we see things here on earth, including Old English manuscripts. Working together, an electronic camera designed for use with documents and a digital computer can now frequently enhance the legibility of formerly obscure or even invisible texts. The computer first converts the analogue image (in this case a videotape) to a digital image by dividing it into a microscopic grid and numbering each part by its relative brightness. Specific image processing programs can then radically improve legibility, for example by stretching the range of brightness throughout the grid from black to white, emphasizing edges, and suppressing random background noise that comes from the equipment rather than the document. Applied to some of the most illegible passages in the Beowulf manuscript, this new technology shows us some things we had not seen before and forces us to reconsider some established readings.
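The contrast improvement described above works by stretching the brightness range from black to white. The sketch below shows a linear (min-max) stretch. It assumes an 8-bit output range of 0 to 255 and an image stored as a list of rows of grey levels. The function name `stretch_contrast` is hypothetical and does not come from any particular package.

```python
def stretch_contrast(image, out_min=0, out_max=255):
    """Linearly map the darkest pixel to out_min and the brightest to out_max."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A perfectly flat image has no brightness range to stretch.
        return [row[:] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (v - lo) * scale) for v in row] for row in image]

# A faint, low-contrast patch: all values crowded between 100 and 125.
faint = [[100, 110, 120],
         [105, 115, 125],
         [100, 100, 125]]
print(stretch_contrast(faint))  # [[0, 102, 204], [51, 153, 255], [0, 0, 255]]
```

A single outlier pixel can defeat a plain min-max stretch. Practical tools often clip a small percentage of the darkest and brightest values first, but that refinement is not shown here.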

Introduction to Digital Image Processing: Vision allows humans to perceive and understand the world surrounding us. Computer vision aims to duplicate the effect of human vision by electronically perceiving and understanding an image. Giving computers the ability to see is not an easy task. We live in a three-dimensional (3D) world, and when computers try to analyze objects in 3D space, the available visual sensors (e.g., TV cameras) usually give two-dimensional (2D) images; this projection to a lower number of dimensions incurs an enormous loss of information. To simplify the task of computer vision, two levels are usually distinguished: low-level image processing and high-level image understanding.

Low-level methods usually use very little knowledge about the content of images. High-level processing is based on knowledge, goals, and plans of how to achieve those goals, and artificial intelligence (AI) methods are used in many cases. High-level computer vision tries to imitate human cognition and the ability to make decisions according to the information contained in the image.

This course deals almost exclusively with low-level image processing. High-level image processing is discussed in the course Image Analysis and Understanding, which is a continuation of this course.

History: Many of the techniques of digital image processing, or digital picture processing as it was often called, were developed in the 1960s at the Jet Propulsion Laboratory, MIT, Bell Labs, the University of Maryland, and a few other places. They were applied to satellite imagery, wire-photo standards conversion, medical imaging, videophone, character recognition, and photo enhancement. However, processing was fairly expensive with the computing equipment of that era. In the 1970s, digital image processing proliferated as cheaper computers became available.

Imaging is the creation of a film or electronic image of any picture or paper form. It is accomplished by scanning or photographing an object and turning it into a matrix of dots (a bitmap). The meaning of the bitmap is unknown to the computer and is known only to the human viewer. Scanned images of text can be encoded into computer data (ASCII or EBCDIC) with page recognition software (OCR).

Basic Concepts:

A signal is a function depending on some variable with physical meaning. Signals can be:

- one-dimensional (e.g., dependent on time),
- two-dimensional (e.g., images dependent on two co-ordinates in a plane),
- three-dimensional (e.g., describing an object in space),
- or higher-dimensional.

Pattern recognition is a field within the area of machine learning. It can also be defined as "the act of taking in raw data and taking an action based on the category of the data" [1]. In this sense, it is a collection of methods for supervised learning. Pattern recognition aims to classify data (patterns) based either on a priori knowledge or on statistical information extracted from the patterns. The patterns to be classified are usually groups of measurements or observations, defining points in an appropriate multidimensional space.
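The idea that patterns are points in a multidimensional space, classified using statistics learned from labeled examples, can be illustrated with a minimum-distance (nearest-mean) classifier. This classifier is chosen here only as one simple supervised statistical method; the text does not prescribe it. The feature values, labels, and function names below are hypothetical.

```python
import math

def train_means(samples):
    """samples: list of (feature_vector, label). Returns {label: mean feature vector}."""
    sums, counts = {}, {}
    for x, label in samples:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}

def classify(x, means):
    """Assign pattern x to the class whose mean is nearest in Euclidean distance."""
    return min(means, key=lambda lab: math.dist(x, means[lab]))

# Hypothetical two-feature training patterns with known classes (supervised learning).
training = [((1.0, 1.0), "dark"), ((2.0, 1.0), "dark"),
            ((8.0, 9.0), "bright"), ((9.0, 8.0), "bright")]
means = train_means(training)
print(classify((1.5, 2.0), means))  # dark
print(classify((7.0, 7.0), means))  # bright
```

`math.dist` requires Python 3.8 or later.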

Image functions:

The image can be modeled by a continuous function of two or three variables. The arguments are the co-ordinates x, y in a plane, and if images change in time, a third variable t may be added. The image function values correspond to the brightness at image points. The function value can express other physical quantities as well (temperature, pressure distribution, distance from the observer, etc.). Brightness integrates different optical quantities; using brightness as a basic quantity allows us to avoid describing the very complicated process of image formation.
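To turn a continuous image function f(x, y) into a digital image, it is sampled on a grid and each sample is quantized to an integer grey level. The sketch below is a minimal version that makes several assumptions not taken from the text:

- f returns brightness in [0, 1],
- coordinates are normalized to the unit square,
- samples are taken at pixel centres,
- the default is 256 grey levels.

The name `digitize` is hypothetical.

```python
def digitize(f, width, height, levels=256):
    """Sample a continuous image function f(x, y) with values in [0, 1] on a
    width x height grid and quantize each sample to `levels` integer grey levels."""
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            # Sample at the pixel centre, with coordinates normalized to [0, 1).
            x, y = (i + 0.5) / width, (j + 0.5) / height
            value = min(max(f(x, y), 0.0), 1.0)       # clip to the valid brightness range
            row.append(min(int(value * levels), levels - 1))
        image.append(row)
    return image

# A hypothetical brightness function: a horizontal ramp from black to white.
ramp = lambda x, y: x
print(digitize(ramp, 4, 2, levels=4))  # [[0, 1, 2, 3], [0, 1, 2, 3]]
```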

The image on the human eye retina or on a TV camera sensor is intrinsically 2D. We shall call such a 2D image, bearing information about the brightness of points, an intensity image.

The real world, which surrounds us, is intrinsically 3D. The 2D intensity image is the result of a perspective projection of the 3D scene. When 3D objects are mapped into the camera plane by perspective projection, a lot of information disappears, because such a transformation is not one-to-one.
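Perspective projection is not one-to-one, and a short example makes this concrete. The sketch assumes an ideal pinhole camera with focal length f, which the text does not specify. Under that model a 3D point (X, Y, Z) maps to (f·X/Z, f·Y/Z), so every point on the same viewing ray lands on the same image point and its depth is lost.

```python
def project(point, focal_length=1.0):
    """Pinhole perspective projection of a 3D point (X, Y, Z), Z > 0,
    onto the image plane at distance focal_length: x = f*X/Z, y = f*Y/Z."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return (focal_length * X / Z, focal_length * Y / Z)

near = (1.0, 2.0, 4.0)
far = (2.0, 4.0, 8.0)      # twice as far along the same viewing ray
print(project(near), project(far))  # (0.25, 0.5) (0.25, 0.5)
```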

Recognizing or reconstructing objects in a 3D scene from one image is an ill-posed problem. Recovering information lost by perspective projection is only one, mainly geometric, problem of computer vision. The second problem is how to understand image brightness. The only information available in an intensity image is the brightness of the appropriate pixel, which depends on a number of independent factors, such as:

- object surface reflectance properties (given by the surface material, microstructure, and marking),
- illumination properties,
- object surface orientation with respect to the viewer and the light source.
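Because several independent factors combine into a single brightness value, different scenes can produce identical pixels. The sketch below illustrates this under an assumed Lambertian (perfectly matte) surface model, which the text does not state; real surfaces often deviate from it. In this model brightness is albedo × illumination × cos θ, where θ is the angle between the surface normal and the light direction, and the viewer direction plays no role.

```python
import math

def lambertian_brightness(albedo, light_intensity, angle_deg):
    """Brightness of a matte (Lambertian) surface: albedo * illumination * cos(theta),
    where theta is the angle between the surface normal and the light direction."""
    return albedo * light_intensity * max(math.cos(math.radians(angle_deg)), 0.0)

a = lambertian_brightness(albedo=0.8, light_intensity=100.0, angle_deg=60.0)  # bright surface, oblique light
b = lambertian_brightness(albedo=0.4, light_intensity=100.0, angle_deg=0.0)   # darker surface, facing the light
print(round(a, 6), round(b, 6))  # 40.0 40.0
```

From the pixel value alone, the two cases cannot be told apart.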

Digital image properties:

Metric properties of digital images:

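Distance is an important example. The distance between two pixels in a digital image is a significant quantitative measure. For pixels (i, j) and (h, k), the Euclidean distance is defined by Eq. 2.42:

$$D_E\big((i,j),(h,k)\big) = \sqrt{(i-h)^2 + (j-k)^2}$$

City block distance:

$$D_4\big((i,j),(h,k)\big) = |i-h| + |j-k|$$

Chessboard distance, Eq. 2.44:

$$D_8\big((i,j),(h,k)\big) = \max\{|i-h|,\ |j-k|\}$$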
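The three pixel distance measures translate directly into code. Pixels are given here as (row, column) pairs; the function names are illustrative.

```python
import math

def d_euclid(p, q):
    """Euclidean distance D_E."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def d_city_block(p, q):
    """City block distance D_4: moves along rows and columns only."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])

def d_chessboard(p, q):
    """Chessboard distance D_8: diagonal moves cost the same as straight ones."""
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))

p, q = (0, 0), (3, 4)
print(d_euclid(p, q), d_city_block(p, q), d_chessboard(p, q))  # 5.0 7 4
```

For any pair of pixels, D_8 ≤ D_E ≤ D_4.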

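Pixel adjacency is another important concept in digital images. The 4-neighborhood of a pixel consists of the pixels at city block distance 1 from it. The 8-neighborhood consists of the pixels at chessboard distance 1. It will become necessary to consider important sets consisting of several adjacent pixels: regions.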
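The two neighborhoods can be enumerated directly. The sketch below assumes that pixels outside the image simply have no neighbors there; the text does not address image boundaries. The name `neighbors` is hypothetical.

```python
def neighbors(pixel, rows, cols, connectivity=4):
    """Return the in-bounds 4- or 8-neighbours of pixel (i, j)."""
    i, j = pixel
    if connectivity == 4:
        offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]          # city block distance 1
    elif connectivity == 8:
        offsets = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1)
                   if (di, dj) != (0, 0)]                    # chessboard distance 1
    else:
        raise ValueError("connectivity must be 4 or 8")
    return [(i + di, j + dj) for di, dj in offsets
            if 0 <= i + di < rows and 0 <= j + dj < cols]

print(len(neighbors((1, 1), 3, 3, 4)), len(neighbors((1, 1), 3, 3, 8)))  # 4 8
print(neighbors((0, 0), 3, 3, 4))  # [(1, 0), (0, 1)]
```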

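A region is a contiguous set: any two of its pixels can be joined by a path of neighboring pixels lying entirely within the set.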
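Contiguous regions in a binary image can be found by growing each region from a seed pixel through its neighbors. The sketch below uses breadth-first search, which is one common way to label regions; the text does not name a method. Non-zero pixels are assumed to be object pixels, and `label_regions` is a hypothetical name. The choice of 4- or 8-neighborhood changes the answer.

```python
from collections import deque

def label_regions(image, connectivity=4):
    """Label contiguous regions of non-zero pixels with breadth-first search.
    Returns (labels, count); labels[i][j] is 0 for background pixels."""
    if connectivity == 4:
        offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    elif connectivity == 8:
        offsets = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]
    else:
        raise ValueError("connectivity must be 4 or 8")
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for i in range(rows):
        for j in range(cols):
            if image[i][j] and not labels[i][j]:
                count += 1                       # start a new region at this seed
                labels[i][j] = count
                queue = deque([(i, j)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in offsets:
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and image[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

diagonal = [[1, 0, 0],
            [0, 1, 0],
            [0, 0, 1]]
print(label_regions(diagonal, 4)[1], label_regions(diagonal, 8)[1])  # 3 1
```

The same diagonal line counts as three separate regions under the 4-neighborhood but as one region under the 8-neighborhood.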

Contiguity paradoxes of the square grid

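A digital line running diagonally illustrates the paradoxes. Under the 4-neighborhood, the line is not contiguous, yet it still divides the background into two parts. Under the 8-neighborhood, the line is contiguous, yet it does not separate the background on either side of it.

One possible solution to contiguity paradoxes is to treat objects using the 4-neighborhood and the background using the 8-neighborhood (or vice versa). A hexagonal grid solves many problems of square grids: any point in the hexagonal raster has the same distance to all its six neighbors.

The border of a region R is the set of pixels within the region that have one or more neighbors outside R; inner borders and outer borders are distinguished. An edge is a local property of a pixel and its immediate neighborhood: it is a vector given by a magnitude and a direction.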
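The border definition (pixels of R with at least one neighbor outside R) can be sketched as follows. Two assumptions are made here that the text leaves open. First, "neighbor" is taken to mean 4-neighbor. Second, pixels beyond the image edge count as outside R. The function name `inner_border` is hypothetical.

```python
def inner_border(region):
    """Return the set of (i, j) pixels of a binary region that have at least one
    4-neighbour outside the region (pixels beyond the image edge count as outside)."""
    rows, cols = len(region), len(region[0])
    border = set()
    for i in range(rows):
        for j in range(cols):
            if not region[i][j]:
                continue
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                outside = not (0 <= ni < rows and 0 <= nj < cols) or not region[ni][nj]
                if outside:
                    border.add((i, j))
                    break
    return border

square = [[0, 0, 0, 0, 0],
          [0, 1, 1, 1, 0],
          [0, 1, 1, 1, 0],
          [0, 1, 1, 1, 0],
          [0, 0, 0, 0, 0]]
print(sorted(inner_border(square)))  # the 8 pixels around the centre (2, 2)
```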

The edge direction is perpendicular to the gradient direction, which points in the direction of image function growth. Border and edge differ: the border is a global concept related to a region, while an edge expresses local properties of an image function.
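An edge vector is estimated from the local brightness gradient. The sketch below uses central differences, which is one common estimator; the text does not specify one. It also assumes these conventions:

- x runs along columns and y along row indices,
- angles are in degrees,
- the edge direction is taken as the gradient direction minus 90 degrees (the opposite perpendicular would serve equally well).

```python
import math

def gradient(image, i, j):
    """Central-difference estimate of the brightness gradient at interior pixel (i, j).
    Returns (magnitude, gradient_direction_deg, edge_direction_deg)."""
    gx = (image[i][j + 1] - image[i][j - 1]) / 2.0   # change along columns (x)
    gy = (image[i + 1][j] - image[i - 1][j]) / 2.0   # change along row index (y)
    magnitude = math.hypot(gx, gy)
    grad_dir = math.degrees(math.atan2(gy, gx))      # points toward increasing brightness
    edge_dir = (grad_dir - 90.0) % 360.0             # perpendicular to the gradient
    return magnitude, grad_dir, edge_dir

# A vertical step: dark on the left, bright on the right.
step = [[10, 10, 50],
        [10, 10, 50],
        [10, 10, 50]]
print(gradient(step, 1, 1))  # (20.0, 0.0, 270.0)
```

The gradient points right, toward the brighter column, while the edge itself runs vertically.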

Crack edges: four crack edges are attached to each pixel, defined by its relation to its 4-neighbors. The direction of a crack edge is that of increasing brightness and is a multiple of 90 degrees, while its magnitude is the absolute difference between the brightness of the relevant pair of pixels (Fig. 2.9).
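The crack edges of one pixel can be computed from its four 4-neighbors. The sketch assumes an angle convention in which 0 means right, 90 means up, 180 means left, and 270 means down. It reports no direction when the two brightnesses are equal. Both choices are assumptions, and `crack_edges` is a hypothetical name.

```python
def crack_edges(image, i, j):
    """Crack edges between pixel (i, j) and each of its in-bounds 4-neighbours.
    Each entry: neighbour -> (magnitude, direction_deg), where the direction points
    toward the brighter pixel (a multiple of 90 degrees), or None if equal."""
    # Assumed convention: 0 = right, 90 = up, 180 = left, 270 = down.
    steps = {(0, 1): 0, (-1, 0): 90, (0, -1): 180, (1, 0): 270}
    rows, cols = len(image), len(image[0])
    edges = {}
    for (di, dj), angle in steps.items():
        ni, nj = i + di, j + dj
        if not (0 <= ni < rows and 0 <= nj < cols):
            continue
        diff = image[ni][nj] - image[i][j]
        if diff > 0:
            direction = angle                    # brightness increases toward the neighbour
        elif diff < 0:
            direction = (angle + 180) % 360      # brightness increases toward (i, j)
        else:
            direction = None
        edges[(ni, nj)] = (abs(diff), direction)
    return edges

img = [[5, 9],
       [2, 5]]
print(crack_edges(img, 0, 0))  # {(0, 1): (4, 0), (1, 0): (3, 90)}
```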

Topological properties of digital images

Topological properties of images are invariant to rubber sheet transformations: stretching does not change the contiguity of the object parts and does not change the number of holes in regions. One such image property is the Euler-Poincaré characteristic, defined as the difference between the number of regions and the number of holes in them.

The convex hull is used to describe topological properties of objects. The convex hull is the smallest region which contains the object, such that any two points of the region can be connected by a straight line, all points of which belong to the region.
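The Euler-Poincaré characteristic of a binary image can be computed by counting regions and holes. The sketch below makes three choices that are assumptions rather than part of the text:

- Objects use the 8-neighborhood and the background uses the 4-neighborhood, one of the pairings suggested above for avoiding contiguity paradoxes.
- A hole is any background component that does not touch the image edge.
- A background frame is padded around the image so that all outside background forms a single component.

```python
from collections import deque

N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
N8 = N4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]

def _count_components(grid, value, offsets):
    """Count connected components of cells equal to `value` under the given offsets."""
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for i in range(rows):
        for j in range(cols):
            if grid[i][j] == value and not seen[i][j]:
                count += 1
                seen[i][j] = True
                queue = deque([(i, j)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in offsets:
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and grid[ny][nx] == value and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count

def euler_number(image):
    """Euler-Poincare characteristic of a binary image: regions minus holes."""
    cols = len(image[0])
    # Pad with a background frame so all outside background is one component.
    padded = ([[0] * (cols + 2)]
              + [[0] + [1 if v else 0 for v in row] + [0] for row in image]
              + [[0] * (cols + 2)])
    regions = _count_components(padded, 1, N8)
    holes = _count_components(padded, 0, N4) - 1   # subtract the outer background
    return regions - holes

ring = [[1, 1, 1],
        [1, 0, 1],
        [1, 1, 1]]
blobs = [[1, 0, 1],
         [0, 0, 0],
         [1, 0, 1]]
print(euler_number(ring), euler_number(blobs))  # 0 4
```

Stretching the ring in a rubber-sheet fashion would leave one region with one hole, so its characteristic stays 0.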

A scalar function may be sufficient to describe a monochromatic image, while vector functions are used to represent, for example, color images consisting of three component colors.

CONCLUSION

Further, surveillance by humans depends on the quality of the human operator, and many factors, such as operator fatigue and negligence, may lead to degradation of performance. These factors may make an intelligent vision system a better option, as in systems that use gait signatures for recognition and in-vehicle video sensors for driver assistance.

You might also like

- A Survey of Evolution of Image Captioning PDFDocument18 pagesA Survey of Evolution of Image Captioning PDF_mboNo ratings yet

- DSP QuestionsDocument46 pagesDSP QuestionsAnonymous tLOxgFkyNo ratings yet

- BrainGizer BookDocument102 pagesBrainGizer BookRania NabilNo ratings yet

- Data Compression and Huffman AlgorithmDocument18 pagesData Compression and Huffman Algorithmammayi9845_9304679040% (1)

- Artificial Intelligence and Neural Network - HumayunKabirDocument30 pagesArtificial Intelligence and Neural Network - HumayunKabir37Azmain Fieak100% (1)

- Frequency Domain Filtering Image ProcessingDocument24 pagesFrequency Domain Filtering Image ProcessingSankalp_Kallakur_402100% (1)

- Image Enchancement in Spatial DomainDocument117 pagesImage Enchancement in Spatial DomainMalluri LokanathNo ratings yet

- Image ProcessingDocument39 pagesImage ProcessingawaraNo ratings yet

- Literature Review On Single Image Super ResolutionDocument6 pagesLiterature Review On Single Image Super ResolutionEditor IJTSRDNo ratings yet

- Qustionbank1 12Document40 pagesQustionbank1 12Bhaskar VeeraraghavanNo ratings yet

- Module 1:image Representation and ModelingDocument48 pagesModule 1:image Representation and ModelingPaul JoyNo ratings yet

- Min Filter - Matlab Code - Image ProcessingDocument3 pagesMin Filter - Matlab Code - Image Processingaloove660% (2)

- Unit-6bThe Use of Motion in SegmentationDocument4 pagesUnit-6bThe Use of Motion in SegmentationHruday HeartNo ratings yet

- Final 2D Transformations Heran Baker NewDocument64 pagesFinal 2D Transformations Heran Baker NewImmensely IndianNo ratings yet

- 1.5 Image Sampling and QuantizationDocument17 pages1.5 Image Sampling and Quantizationkuladeep varmaNo ratings yet

- Identification of Brain Tumor Using MATLABDocument4 pagesIdentification of Brain Tumor Using MATLABIJMTST-Online JournalNo ratings yet

- 06 - Food Calorie EstimationDocument39 pages06 - Food Calorie Estimationjeeadvance100% (1)

- Heart Beat MonitoringDocument5 pagesHeart Beat MonitoringVishnu Sadasivan100% (1)

- The Matlab NMR LibraryDocument34 pagesThe Matlab NMR Librarysukanya_13No ratings yet

- Enhancement in Spatial DomainDocument25 pagesEnhancement in Spatial DomainKumarPatraNo ratings yet

- Image Segmentation For Object Detection Using Mask R-CNN in ColabDocument5 pagesImage Segmentation For Object Detection Using Mask R-CNN in ColabGRD JournalsNo ratings yet

- Edge Detection and Hough Transform MethodDocument11 pagesEdge Detection and Hough Transform MethodDejene BirileNo ratings yet

- Lab Manual No 01Document28 pagesLab Manual No 01saqib idreesNo ratings yet

- Basics of Image ProcessingDocument38 pagesBasics of Image ProcessingKarthick VijayanNo ratings yet

- Image Analysis With MatlabDocument52 pagesImage Analysis With MatlabSaurabh Malik100% (1)

- A Literature Survey On Applications of Image Processing For Video SurveillanceDocument3 pagesA Literature Survey On Applications of Image Processing For Video SurveillanceInternational Journal of Innovative Science and Research Technology0% (1)

- DSP Lab ManualDocument38 pagesDSP Lab ManualXP2009No ratings yet

- Digital Image Segmentation of Water Traces in Rock ImagesDocument19 pagesDigital Image Segmentation of Water Traces in Rock Imageskabe88101No ratings yet

- Brain Tumor Detection Using Machine Learning TechniquesDocument7 pagesBrain Tumor Detection Using Machine Learning TechniquesYashvanthi SanisettyNo ratings yet

- Brain Tumor Final Report LatexDocument29 pagesBrain Tumor Final Report LatexMax WatsonNo ratings yet

- Hough TransformDocument13 pagesHough TransformAkilesh ArigelaNo ratings yet

- Chapter - 1Document16 pagesChapter - 1Waseem MaroofiNo ratings yet

- Image DeblurringDocument35 pagesImage DeblurringMarco DufresneNo ratings yet

- CE 324 Digital Image ProcessingDocument3 pagesCE 324 Digital Image ProcessingTooba ArshadNo ratings yet

- Image Fusion PresentationDocument33 pagesImage Fusion PresentationManthan Bhatt100% (1)

- ThresholdingDocument38 pagesThresholdingMahi Mahesh100% (1)

- Image Caption Generator Using Deep Learning: Guided by Dr. Ch. Bindu Madhuri, M Tech, PH.DDocument9 pagesImage Caption Generator Using Deep Learning: Guided by Dr. Ch. Bindu Madhuri, M Tech, PH.Dsuryavamsi kakaraNo ratings yet

- EEG Report FinalDocument45 pagesEEG Report FinalSanketNo ratings yet

- DigitalImageFundamentalas GMDocument50 pagesDigitalImageFundamentalas GMvpmanimcaNo ratings yet

- Final New Pro v2.7 New12Document128 pagesFinal New Pro v2.7 New12Manjeet SinghNo ratings yet

- GLCMDocument2 pagesGLCMprotogiziNo ratings yet

- Prediction of Autism Spectrum DisorderDocument25 pagesPrediction of Autism Spectrum DisorderVijay Kumar t.gNo ratings yet

- World's Best DJ in 2017 - Top Best DJ's by Benjo Dhumal - YouTubeDocument2 pagesWorld's Best DJ in 2017 - Top Best DJ's by Benjo Dhumal - YouTubedeepakbhanwala66No ratings yet

- Class XI Unsolved Question&Answers (Part B-Unit-1 To 4)Document13 pagesClass XI Unsolved Question&Answers (Part B-Unit-1 To 4)Deep MakwanaNo ratings yet

- Csi 5155 ML Project ReportDocument24 pagesCsi 5155 ML Project Report77Gorde Priyanka100% (1)

- Pneumonia Disease Detection Using Deep Learning Methods From Chest X-Ray Images: ReviewDocument7 pagesPneumonia Disease Detection Using Deep Learning Methods From Chest X-Ray Images: ReviewWARSE JournalsNo ratings yet

- Project Title: Spatial Domain Image ProcessingDocument11 pagesProject Title: Spatial Domain Image ProcessinganssandeepNo ratings yet

- MC0086 Digital Image ProcessingDocument9 pagesMC0086 Digital Image ProcessingGaurav Singh JantwalNo ratings yet

- Question BankDocument37 pagesQuestion BankViren PatelNo ratings yet

- Epidemiology, Pathophysiology and Symptomatic Treatment of Sciatica: A ReviewDocument12 pagesEpidemiology, Pathophysiology and Symptomatic Treatment of Sciatica: A ReviewPankaj Vatsa100% (1)

- Finite Word Length EffectsDocument9 pagesFinite Word Length Effectsohmshankar100% (2)

- Retinal Report DownloadedDocument56 pagesRetinal Report DownloadedSushmithaNo ratings yet

- Color Video Formation and PerceptionDocument20 pagesColor Video Formation and PerceptiontehazharrNo ratings yet

- Brain Tumor Detection Using Machine LearningDocument9 pagesBrain Tumor Detection Using Machine LearningRayban PolarNo ratings yet

- Pneumonia Detection Using Convolutional Neural Networks (CNNS)Document14 pagesPneumonia Detection Using Convolutional Neural Networks (CNNS)shekhar1405No ratings yet

- Digital Image Processing Full ReportDocument4 pagesDigital Image Processing Full ReportLovepreet VirkNo ratings yet

- Digital Image Processing Full ReportDocument9 pagesDigital Image Processing Full ReportAkhil ChNo ratings yet

- Digital Image ProcessingDocument10 pagesDigital Image ProcessingAnil KumarNo ratings yet

- Digital Image ProcessingDocument10 pagesDigital Image ProcessingAkash SuryanNo ratings yet

- Computer Stereo Vision: Exploring Depth Perception in Computer VisionFrom EverandComputer Stereo Vision: Exploring Depth Perception in Computer VisionNo ratings yet

- Superscripts: 2 2x 2x 2x Subscripts: X X X XDocument1 pageSuperscripts: 2 2x 2x 2x Subscripts: X X X Xammayi9845_930467904No ratings yet

- Simcom 900 GSM Module: GSM/GPRS Modem FeturesDocument3 pagesSimcom 900 GSM Module: GSM/GPRS Modem Feturesammayi9845_930467904No ratings yet

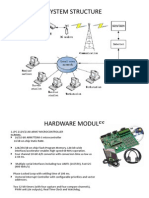

- System StructureDocument3 pagesSystem Structureammayi9845_930467904No ratings yet

- Continued: Detection of A/D ConvertionDocument2 pagesContinued: Detection of A/D Convertionammayi9845_930467904No ratings yet

- Agenda: Phytomonitoring System Recommended Setup Block Diagram Work Done Future Work Plan ReferenceDocument3 pagesAgenda: Phytomonitoring System Recommended Setup Block Diagram Work Done Future Work Plan Referenceammayi9845_930467904No ratings yet

- Work Plan: Month PlansDocument3 pagesWork Plan: Month Plansammayi9845_930467904No ratings yet

- February: Interfacing Multiple Sensors Using Wireless Network and Using Visual Software Display On MonitorDocument3 pagesFebruary: Interfacing Multiple Sensors Using Wireless Network and Using Visual Software Display On Monitorammayi9845_930467904No ratings yet

- Data Acquisition - Why 3G?Document3 pagesData Acquisition - Why 3G?ammayi9845_930467904No ratings yet

- Future Work Plan: Month TasksDocument2 pagesFuture Work Plan: Month Tasksammayi9845_930467904No ratings yet

- Design RequirementsDocument5 pagesDesign Requirementsammayi9845_930467904No ratings yet

- LCD Interfacing With lpc2148: Header File and Variable Declaration Initialization FunctionDocument3 pagesLCD Interfacing With lpc2148: Header File and Variable Declaration Initialization Functionammayi9845_930467904No ratings yet

- Work Flow ChartDocument3 pagesWork Flow Chartammayi9845_930467904No ratings yet

- Work Done Till First ReviewDocument3 pagesWork Done Till First Reviewammayi9845_930467904No ratings yet

- Phytomonitoring System: Data Colection Software SensorsDocument3 pagesPhytomonitoring System: Data Colection Software Sensorsammayi9845_930467904No ratings yet

- Design RequirementsDocument2 pagesDesign Requirementsammayi9845_930467904No ratings yet

- Declaration Certificate Acknowledgement List of Figures List of Tables AcronymsDocument2 pagesDeclaration Certificate Acknowledgement List of Figures List of Tables Acronymsammayi9845_930467904No ratings yet

- New Proj - SynopsisDocument2 pagesNew Proj - Synopsisammayi9845_930467904No ratings yet

- Swami Proj. ProposalDocument1 pageSwami Proj. Proposalammayi9845_930467904No ratings yet

- Figure 1: A Block Diagram of A Basic FilterDocument10 pagesFigure 1: A Block Diagram of A Basic Filterammayi9845_930467904No ratings yet

- Adaptive Noise Cancellation - NewDocument21 pagesAdaptive Noise Cancellation - Newammayi9845_930467904No ratings yet

- List of Tables: Table No. Name of Table Page NoDocument1 pageList of Tables: Table No. Name of Table Page Noammayi9845_930467904No ratings yet

- Improving The Effectiveness of The Median Filter: Kwame Osei Boateng, Benjamin Weyori Asubam and David Sanka LaarDocument13 pagesImproving The Effectiveness of The Median Filter: Kwame Osei Boateng, Benjamin Weyori Asubam and David Sanka Laarammayi9845_930467904No ratings yet

- List of Figures Fig. No. Title of The Figure Page NoDocument2 pagesList of Figures Fig. No. Title of The Figure Page Noammayi9845_930467904No ratings yet