Cache1 2

Uploaded by

Venkat Srinivasan
Memory

Chapter 7

Cache Memories

Memory Challenges

Ideally one desires a huge amount of very fast memory for little

cost, but:

Fast memory is expensive

Cheap memory is slow

The solution on a fixed budget for memory is a hierarchy

A small amount of very fast memory (Think SRAM)

A medium amount of slower memory (Think DRAM)

A large amount of still slower memory (Think Disk)

Comparing:

Technology   Access Time   Cost/GB
SRAM         0.5 - 5 ns    $4,000 - $10,000
DRAM         50 - 70 ns    $100 - $200
Disk         5 - 20 ms     $0.50 - $2

Recall: We used 200ps or 0.2 ns in our pipeline study. Why the difference?

The Memory Wall

Logic vs DRAM speed gap continues to grow

[Figure: clocks per instruction vs. clocks per DRAM access]

Philosophically

How does one UTILIZE the very fast memory effectively?

Think: The Principle of Locality

Temporal Locality (Close in Time)

- Memory that has been accessed recently is likely to be accessed again soon

Spatial Locality (Close in location)

- Memory that is close to memory that has been accessed recently is likely to be accessed soon

Organize memory in blocks

Keep blocks likely to be used soon in the very fast memory

Keep the next most likely blocks in medium fast memory

Keep those not likely to be used soon in slower memory

Hierarchical Memory Organization

Cache memory

What is cache?

A small amount of very high speed memory between the main memory and the CPU

How is it organized?

Organized in a number of uniform sized blocks of memory that have a high likelihood of being used.

How do we rate the performance of the cache?

Based upon hit rates and miss rates

How do we know a block is needed?

An access fails to find the word in the cache

Where does it get placed in the cache?

Likely in place of the last used block

How is it kept current?

When a block in main memory is more likely to be needed, that block replaces a block in the cache.

Should there be Instruction Caches and Data Caches?

Hierarchical Memory Organization

Registers are the fastest

Cache is the fastest Memory - SRAM

DRAM makes good main memory

Disk is best for the rest (majority)

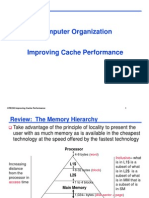

The Memory Hierarchy

Take advantage of the principle of locality to present the user with as much memory as is available in the cheapest technology at the speed offered by the fastest technology

[Figure: Processor, L1$, L2$, Main Memory, Secondary Memory, in order of increasing distance from the processor in access time, drawn with the (relative) size of the memory at each level]

Transfer unit between levels:

Processor and L1$: 4-8 bytes (words)

L1$ and L2$: 8-32 bytes (block)

L2$ and Main Memory: 1 to 4 blocks

Main Memory and Secondary Memory: 1,024+ bytes (disk sector = page)

Inclusive: what is in L1$ is a subset of what is in L2$, which is a subset of what is in MM, which is a subset of what is in SM

The Memory Hierarchy: Pictorially

Temporal Locality (Locality in Time):

Keep most recently accessed data items closer to the processor

Spatial Locality (Locality in Space):

Move blocks consisting of contiguous words to the upper levels

[Figure: data moves to and from the processor through the upper level memory, which holds Blk X; the lower level memory holds Blk Y, and blocks move between the two levels]

The Memory Hierarchy: Terminology

Hit: data is in some block in the upper level (Blk X)

Hit Rate: the fraction of memory accesses found in the upper level

Hit Time: Time to access the upper level which consists of

RAM access time + Time to determine hit/miss


Miss: data is not in the upper level so needs to be retrieved from a block in the lower level (Blk Y)

Miss Rate = 1 - (Hit Rate)

Miss Penalty: Time to replace a block in the upper level + Time to deliver the block to the processor

Hit Time << Miss Penalty

How is the Hierarchy Managed?

registers <-> memory

- by compiler (or programmer?)

cache <-> main memory

- by the cache controller hardware

main memory <-> disks

- by the operating system (virtual memory)

- virtual to physical address mapping assisted by the hardware (TLB)

- by the programmer (files)

Cache

Two questions to answer (in hardware):

Q1: How do we know if a data item is in the cache?

Q2: If it is, how do we find it?

Direct Mapped Caching

For each item of data at the lower level, there is exactly one

location in the cache where it might be - so lots of items at

the lower level must share locations in the upper level

Address mapping:

(block address) modulo (# of blocks in the cache)

First consider block sizes of one word

Caching: A Simple First Example

Cache: four one-word blocks

Index   Valid   Tag   Data
00
01
10
11

Main Memory: sixteen one-word blocks at addresses 0000xx through 1111xx

Two low order bits define the byte in the word (32b words)

Q1: Is it there?

Compare the cache tag to the high order 2 memory address bits to tell if the memory block is in the cache

Q2: How do we find it?

Use the next 2 low order memory address bits, the index, to determine which cache block (i.e., modulo the number of blocks in the cache)

(block address) modulo (# of blocks in the cache)
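The two questions above can be sketched in a few lines of Python. This is only an illustration of the 6-bit toy address in this example; the function name `split_address` and its parameters are hypothetical, not part of the slides.

```python
def split_address(addr, index_bits=2, byte_offset_bits=2):
    """Split a byte address into (tag, index, byte_offset) for a direct mapped cache.

    Assumes one-word blocks and a power-of-two number of cache blocks.
    """
    byte_offset = addr & ((1 << byte_offset_bits) - 1)  # low order bits: byte in the word
    block_address = addr >> byte_offset_bits            # drop the byte offset
    index = block_address % (1 << index_bits)           # block address modulo # of blocks
    tag = block_address >> index_bits                   # remaining high order bits
    return tag, index, byte_offset


tag, index, byte_offset = split_address(0b110110)
print(f"tag={tag:02b} index={index:02b} byte={byte_offset:02b}")
```

For the address 1101 10, the index 01 answers Q2 (which cache block to look in) and the tag 11 answers Q1 (whether the block stored there is the one requested).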

Direct Mapped Cache

Consider the main memory word reference string

0 1 2 3 4 3 4 15

Start with an empty cache - all blocks initially marked as not valid

Access   Result   Index   Block stored after the access
0        miss     00      tag 00, Mem(0)
1        miss     01      tag 00, Mem(1)
2        miss     10      tag 00, Mem(2)
3        miss     11      tag 00, Mem(3)
4        miss     00      tag 01, Mem(4) replaces Mem(0)
3        hit      11      tag 00, Mem(3)
4        hit      00      tag 01, Mem(4)
15       miss     11      tag 11, Mem(15) replaces Mem(3)

8 requests, 6 misses
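The trace for this reference string can be replayed with a small simulation. This is a minimal sketch assuming a four-block direct mapped cache with one-word blocks; `simulate_direct_mapped` is a hypothetical helper name.

```python
def simulate_direct_mapped(references, num_blocks=4):
    """Replay word (block) addresses through a direct mapped cache of one-word blocks."""
    valid = [False] * num_blocks   # all blocks initially marked as not valid
    tags = [0] * num_blocks
    outcomes = []
    for block_address in references:
        index = block_address % num_blocks   # which cache block to check
        tag = block_address // num_blocks    # high order bits kept as the tag
        if valid[index] and tags[index] == tag:
            outcomes.append("hit")
        else:
            outcomes.append("miss")
            valid[index] = True              # install the new block
            tags[index] = tag
    return outcomes


outcomes = simulate_direct_mapped([0, 1, 2, 3, 4, 3, 4, 15])
print(outcomes.count("miss"), "misses out of", len(outcomes), "requests")
```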

MIPS Direct Mapped Cache Example

One word/block, cache size = 1K words

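[Figure: the 32-bit address is split into a 20-bit tag (bits 31-12), a 10-bit index (bits 11-2), and a 2-bit byte offset (bits 1-0). The index selects one of 1024 entries (0 to 1023), each holding a valid bit, a 20-bit tag, and 32 bits of data. Hit is asserted when the selected entry is valid and its tag matches the address tag.]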

What kind of locality are we taking advantage of?

Handling Cache Hits

Read hits (I$ and D$)

- this is what we want; no challenges

Write hits (D$ only)

What is the problem here?

Strategies

allow cache and memory to be inconsistent

- write the data only into the cache block (write-back the cache contents to the next level in the memory hierarchy when that cache block is evicted)

- need a dirty bit for each data cache block to tell if it needs to be written back to memory when it is evicted

require the cache and memory to be consistent

- always write the data into both the cache block and the next level in the memory hierarchy (write-through), so don't need a dirty bit

- writes run at the speed of the next level in the memory hierarchy, so slow! Or can use a write buffer, so only have to stall if the write buffer is full
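To make the trade-off between the two strategies concrete, here is a rough sketch that counts how many writes reach the next memory level under each policy. The function `next_level_writes` is hypothetical, and it assumes one-word blocks, a direct mapped cache, write allocate on a miss, and a final flush of all dirty blocks.

```python
def next_level_writes(stores, num_blocks=4, policy="write-back"):
    """Count writes that reach the next memory level for a list of stored block addresses."""
    if policy == "write-through":
        return len(stores)              # every store also goes to the next level
    tags = [None] * num_blocks
    dirty = [False] * num_blocks        # one dirty bit per cache block
    writes = 0
    for block_address in stores:
        index = block_address % num_blocks
        tag = block_address // num_blocks
        if tags[index] != tag:          # miss: the resident block is evicted
            if dirty[index]:
                writes += 1             # dirty block must be written back first
            tags[index] = tag
        dirty[index] = True             # the store modifies only the cache copy
    return writes + sum(dirty)          # assumption: flush remaining dirty blocks at the end


stores = [0, 0, 0, 4, 4, 1]
print("write-through:", next_level_writes(stores, policy="write-through"))
print("write-back:   ", next_level_writes(stores, policy="write-back"))
```

Repeated stores to the same block are where write-back saves traffic: only the eviction (or final flush) reaches the next level.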

Read / Write Strategies

Read Through: Word read from memory

No Read Through: Word read from cache after block is read from memory

Write Through: Word written to both Cache and Memory

Write Back: Word written only to Cache

Write Allocate: Block is loaded on a write miss, followed by a write hit

Write No Allocate: Block is modified in memory on a write miss but not loaded into the cache

Write Hit Policy    Write Miss Policy
Write Through       Write Allocate
Write Through *     Write No Allocate *
Write Back *        Write Allocate *
Write Back          No Write Allocate

Write Buffer for Write-Through Caching

[Figure: Processor, Cache, write buffer, DRAM]

Write buffer between the cache and main memory

- Processor: writes data into the cache and the write buffer

- Memory controller: writes contents of the write buffer to memory

The write buffer is just a FIFO

- Typical number of entries: 4

- Works fine if store frequency (w.r.t. time) << 1 / DRAM write cycle

Memory system designer's nightmare

- When the store frequency (w.r.t. time) approaches 1 / DRAM write cycle, leading to write buffer saturation

- One solution is to use a write-back cache; another is to use an L2 cache
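Write buffer saturation can be illustrated with a toy timing model. Everything here is an assumption made for illustration: the name `count_stall_cycles`, the cycle counts, and the simplification that memory retires one buffered write every `drain_cycles` cycles in FIFO order.

```python
from collections import deque


def count_stall_cycles(store_cycles, drain_cycles, capacity=4):
    """Return the total processor stall cycles caused by a full write buffer.

    store_cycles: increasing cycle numbers at which stores would issue without stalls.
    drain_cycles: cycles memory needs to retire one buffered write.
    """
    pending = deque()   # completion times of buffered writes, oldest first
    stall = 0
    last_done = 0
    for issue in store_cycles:
        now = issue + stall                    # earlier stalls delay later stores
        while pending and pending[0] <= now:   # drop writes that have already finished
            pending.popleft()
        if len(pending) == capacity:           # buffer full: wait for the oldest entry
            stall += pending[0] - now
            now = pending.popleft()
        last_done = max(now, last_done) + drain_cycles
        pending.append(last_done)
    return stall


print(count_stall_cycles(list(range(0, 200, 20)), drain_cycles=10))  # infrequent stores
print(count_stall_cycles(list(range(10)), drain_cycles=10))          # a store every cycle
```

With stores spaced well beyond the drain time the buffer never fills; with a store every cycle the four entries fill almost immediately and each further store waits on memory.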

Another Reference String Mapping

Consider the main memory word reference string

0 4 0 4 0 4 0 4

Start with an empty cache - all blocks initially marked as not valid

Access   Result   Index   Block stored after the access
0        miss     00      tag 00, Mem(0)
4        miss     00      tag 01, Mem(4) replaces Mem(0)
0        miss     00      tag 00, Mem(0) replaces Mem(4)
4        miss     00      tag 01, Mem(4) replaces Mem(0)
0        miss     00      tag 00, Mem(0) replaces Mem(4)
4        miss     00      tag 01, Mem(4) replaces Mem(0)
0        miss     00      tag 00, Mem(0) replaces Mem(4)
4        miss     00      tag 01, Mem(4) replaces Mem(0)

8 requests, 8 misses

Ping pong effect due to conflict misses - two memory locations that map into the same cache block
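The ping pong effect is easy to reproduce. A minimal sketch, assuming the same four-block direct mapped cache with one-word blocks; `count_misses` is a hypothetical helper.

```python
def count_misses(references, num_blocks=4):
    """Count misses for block addresses in a direct mapped cache of one-word blocks."""
    cache = {}      # index -> tag of the block currently stored there
    misses = 0
    for block_address in references:
        index = block_address % num_blocks
        tag = block_address // num_blocks
        if cache.get(index) != tag:   # empty entry or a different block: miss
            misses += 1
            cache[index] = tag
    return misses


print(count_misses([0, 4] * 4))   # 0 and 4 share index 0 and keep evicting each other
print(count_misses([0, 1] * 4))   # 0 and 1 use different indexes
```

Both strings touch only two distinct words, so the difference in misses comes entirely from where the words map, not from the cache's capacity.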

Sources of Cache Misses

Compulsory (cold start or process migration, first reference):

First access to a block, cold fact of life, not a whole lot you can do about it

If you are going to run millions of instructions, compulsory misses are insignificant

Conflict (collision):

Multiple memory locations mapped to the same cache location

Solution 1: increase cache size or block length

Solution 2: increase associativity

Capacity:

Cache cannot contain all blocks accessed by the program

Solution: increase cache size

What about the relationship between cache size and block length?

Handling Cache Misses

Read misses (I$ and D$)

stall the entire pipeline, fetch the block from the next level in the

memory hierarchy, install it in the cache and send the requested word

to the processor, then let the pipeline resume

Write misses (D$ only)

1. stall the pipeline, fetch the block from the next level in the memory hierarchy, install it in the cache (which may involve having to evict a dirty block if using a write-back cache), write the word from the processor to the cache, then let the pipeline resume

or (normally used in write-back caches)

2. Write allocate: just write the word into the cache updating both the tag and data, no need to check for cache hit, no need to stall

or (normally used in write-through caches with a write buffer)

3. No-write allocate: skip the cache write and just write the word to the write buffer (and eventually to the next memory level), no need to stall if the write buffer isn't full; must invalidate the cache block since it will be inconsistent (now holding stale data)

Multiword Block Direct Mapped Cache

Four words/block, cache size = 1K words

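[Figure: the 32-bit address is split into a 20-bit tag (bits 31-12), an 8-bit index (bits 11-4), a 2-bit block offset (bits 3-2), and a 2-bit byte offset (bits 1-0). The index selects one of 256 entries (0 to 255), each holding a valid bit, a 20-bit tag, and four 32-bit data words. The block offset selects the word within the block; Hit is asserted when the selected entry is valid and its tag matches the address tag.]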

What kind of locality are we taking advantage of?
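The field widths in the two MIPS examples follow directly from the cache size and block size. A sketch of the arithmetic, assuming 32-bit byte addresses, 4-byte words, and power-of-two sizes; `field_widths` is a hypothetical helper.

```python
def field_widths(cache_words, words_per_block, address_bits=32, bytes_per_word=4):
    """Return the number of address bits in each field of a direct mapped cache."""
    num_blocks = cache_words // words_per_block
    byte_offset_bits = (bytes_per_word - 1).bit_length()    # byte within the word
    block_offset_bits = (words_per_block - 1).bit_length()  # word within the block
    index_bits = (num_blocks - 1).bit_length()              # which cache block
    tag_bits = address_bits - index_bits - block_offset_bits - byte_offset_bits
    return {
        "tag": tag_bits,
        "index": index_bits,
        "block_offset": block_offset_bits,
        "byte_offset": byte_offset_bits,
    }


print(field_widths(cache_words=1024, words_per_block=1))
print(field_widths(cache_words=1024, words_per_block=4))
```

Going from one-word to four-word blocks moves two bits from the index to the block offset; the tag stays at 20 bits because the total cache size is unchanged.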

Taking Advantage of Spatial Locality

Let cache block hold more than one word

Reference string 0 1 2 3 4 3 4 15, cache of two two-word blocks

Start with an empty cache - all blocks initially marked as not valid

Access   Result   Index   Block stored after the access
0        miss     0       tag 00, Mem(1) Mem(0)
1        hit      0       tag 00, Mem(1) Mem(0)
2        miss     1       tag 00, Mem(3) Mem(2)
3        hit      1       tag 00, Mem(3) Mem(2)
4        miss     0       tag 01, Mem(5) Mem(4) replaces Mem(1) Mem(0)
3        hit      1       tag 00, Mem(3) Mem(2)
4        hit      0       tag 01, Mem(5) Mem(4)
15       miss     1       tag 11, Mem(15) Mem(14) replaces Mem(3) Mem(2)

8 requests, 4 misses
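The same reference string can be replayed with multiword blocks. A minimal sketch assuming two blocks of two words each (the same total capacity of four words); `simulate_multiword` is a hypothetical helper.

```python
def simulate_multiword(word_references, num_blocks=2, words_per_block=2):
    """Replay word addresses through a direct mapped cache with multiword blocks."""
    tags = [None] * num_blocks    # None marks a block that is not valid
    outcomes = []
    for word_address in word_references:
        block_address = word_address // words_per_block  # neighbours share a block
        index = block_address % num_blocks
        tag = block_address // num_blocks
        if tags[index] == tag:
            outcomes.append("hit")
        else:
            outcomes.append("miss")
            tags[index] = tag     # the whole block is fetched on a miss
    return outcomes


outcomes = simulate_multiword([0, 1, 2, 3, 4, 3, 4, 15])
print(" ".join(outcomes))
print(outcomes.count("miss"), "misses out of", len(outcomes), "requests")
```

The hits on words 1 and 3 come from spatial locality: each was brought in as the neighbour of a word that missed.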

Miss Rate vs Block Size vs Cache Size

Miss rate goes up if the block size becomes a significant fraction

of the cache size because the number of blocks that can be held in

the same size cache is smaller (increasing capacity misses)

Block Size Tradeoff

Larger block sizes take advantage of spatial locality but

If the block size is too big relative to the cache size, the miss rate

will go up

Larger block size means larger miss penalty

- Latency to first word in block + transfer time for remaining words

[Figure: three plots against Block Size. Miss Penalty rises with block size. Miss Rate first falls (exploits spatial locality), then rises (fewer blocks compromises temporal locality). Average Access Time first falls, then rises (increased miss penalty & miss rate).]

In general, Average Memory Access Time = Hit Time + Miss Penalty x Miss Rate
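The average memory access time formula is simple enough to evaluate directly. The numbers below are made up purely for illustration and do not come from the slides; `amat` is a hypothetical function name.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average Memory Access Time = Hit Time + Miss Penalty x Miss Rate."""
    return hit_time + miss_penalty * miss_rate


# Illustrative values only: 1-cycle hit, 5% miss rate, 100-cycle miss penalty.
small_blocks = amat(hit_time=1, miss_rate=0.05, miss_penalty=100)
# A larger block might lower the miss rate but raise the miss penalty.
large_blocks = amat(hit_time=1, miss_rate=0.04, miss_penalty=140)
print(f"small blocks: {small_blocks:.2f} cycles, large blocks: {large_blocks:.2f} cycles")
```

In this made-up case the lower miss rate does not pay for the higher miss penalty, which is the tradeoff the block size plots describe.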

Multiword Block Considerations

Read misses (I$ and D$)

Processed the same as for single word blocks: a miss returns the entire block from memory

Miss penalty grows as block size grows

- Early restart: datapath resumes execution as soon as the requested word of the block is returned

- Requested word first: requested word is transferred from the memory to the cache (and datapath) first

Nonblocking cache: allows the datapath to continue to access the cache while the cache is handling an earlier miss

Write misses (D$)

Can't use write allocate or will end up with a garbled block in the cache (e.g., for 4 word blocks, a new tag, one word of data from the new block, and three words of data from the old block), so must fetch the block from memory first and pay the stall time

Cache Summary

The Principle of Locality:

Programs are likely to access a relatively small portion of the address space at any instant of time

- Temporal Locality: Locality in Time

- Spatial Locality: Locality in Space

Three major categories of cache misses:

Compulsory misses: sad facts of life. Example: cold start misses

Conflict misses: increase cache size and/or associativity. Nightmare scenario: ping pong effect!

Capacity misses: increase cache size

Cache design space

total size, block size, associativity (replacement policy)

write-hit policy (write-through, write-back)

write-miss policy (write allocate, write buffers)

Measuring Cache Performance

Assuming cache hit costs are included as part of the normal CPU

execution cycle, then

CPU time = IC x CPI x CC

= IC x (CPIideal + Memory-stall cycles) x CC

where CPIstall = CPIideal + Memory-stall cycles

Memory-stall cycles come from cache misses (a sum of read-stalls and write-stalls)

Read-stall cycles = reads/program x read miss rate x read miss penalty

Write-stall cycles = (writes/program x write miss rate x write miss penalty) + write buffer stalls

For write-through caches, we can simplify this to

Memory-stall cycles = miss rate x miss penalty

Impacts of Cache Performance

Relative cache penalty increases as processor performance

improves (faster clock rate and/or lower CPI)

The memory speed is unlikely to improve as fast as processor cycle

time. When calculating CPIstall, the cache miss penalty is measured in

processor clock cycles needed to handle a miss

The lower the CPIideal, the more pronounced the impact of stalls

A processor with a CPIideal of 2, a 100 cycle miss penalty, 36% load/store instrs, and 2% I$ and 4% D$ miss rates

Memory-stall cycles = 2% x 100 + 36% x 4% x 100 = 3.44

So CPIstalls = 2 + 3.44 = 5.44

What if the CPIideal is reduced to 1? 0.5? 0.25?

What if the processor clock rate is doubled (doubling the miss

penalty)?
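The what-if questions can be explored by plugging the example's numbers into the stall formula. A small sketch; the function name `cpi_with_stalls` and its parameter names are hypothetical.

```python
def cpi_with_stalls(cpi_ideal, miss_penalty, icache_miss_rate,
                    dcache_miss_rate, load_store_fraction):
    """CPI including memory-stall cycles per instruction."""
    stall = (icache_miss_rate * miss_penalty                            # every instruction is fetched
             + load_store_fraction * dcache_miss_rate * miss_penalty)  # only loads/stores access D$
    return cpi_ideal + stall


for cpi_ideal in (2, 1, 0.5, 0.25):
    total = cpi_with_stalls(cpi_ideal, 100, 0.02, 0.04, 0.36)
    share = (total - cpi_ideal) / total
    print(f"CPIideal={cpi_ideal}: CPIstall={total:.2f}, stall share={share:.0%}")

# Doubling the clock rate doubles the miss penalty measured in cycles.
print(f"200 cycle penalty: CPIstall={cpi_with_stalls(2, 200, 0.02, 0.04, 0.36):.2f}")
```

The stall cycles stay at 3.44 per instruction as CPIideal shrinks, so they make up a growing share of the total CPI.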

Reducing Cache Miss Rates #1

Allow more flexible block placement

In a direct mapped cache a memory block maps to exactly one

cache block

At the other extreme, could allow a memory block to be mapped to any cache block: a fully associative cache

A compromise is to divide the cache into sets each of which

consists of n ways (n-way set associative). A memory block

maps to a unique set (specified by the index field) and can be

placed in any way of that set (so there are n choices)

(block address) modulo (# sets in the cache)
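The set mapping can be sketched for a small cache. This assumes a four-block cache whose blocks are divided evenly into sets; `set_index` is a hypothetical helper.

```python
def set_index(block_address, num_blocks, ways):
    """Return the set a memory block maps to in an n-way set associative cache."""
    num_sets = num_blocks // ways        # each set holds `ways` blocks
    return block_address % num_sets      # (block address) modulo (# sets in the cache)


for ways, label in ((1, "direct mapped"), (2, "2-way set associative"), (4, "fully associative")):
    sets = [set_index(block, num_blocks=4, ways=ways) for block in (0, 4)]
    print(f"{label}: blocks 0 and 4 map to sets {sets}, {ways} choice(s) per set")
```

Blocks 0 and 4 map to the same set in every configuration, but with two or more ways per set both can be resident at once, which avoids the ping pong effect seen in the direct mapped case.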
