Eim Perf Flowchart Final

Uploaded by

Narla Raghu Sudhakar Reddy

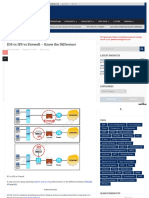

Simplified Siebel EIM Optimization for SQL Server

Visit www.siebelonmicrosoft.com for more information.

1. Read

2. Capture

3. Evaluate

4. Resolve & Optimize

Start at

www.siebelonmicrosoft.com

Get a baseline

Are there any hints in the query?

Yes

Modify the IFB file to remove hints.

Rerun baseline

Put in IFB file: USE INDEX HINTS = FALSE and USE ESSENTIAL HINTS = FALSE

Still have hints?

Yes

Use trace flag 8602

This will disable hints at the optimizer level for all queries even if coded.
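A minimal sketch of switching the trace flag on for the whole server, assuming a load window in which ignoring hints for every query is acceptable. The `-1` argument applies the flag globally rather than to the current connection only.

```sql
-- Enable trace flag 8602 globally so the optimizer ignores index hints.
DBCC TRACEON (8602, -1);

-- Confirm that the flag is active.
DBCC TRACESTATUS (8602);

-- Switch it back off once the load is finished.
DBCC TRACEOFF (8602, -1);
```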

Search for: Siebel Data Loading Best Practices on SQL Server. Paper & PPT.

Turn on SQL Profiler. Capture Events:

RPC:Completed, SQL:BatchCompleted

Query Hints: These will be seen as (INDEX =) in the TextData column of Profiler. Use Ctrl+F to search.

No

Filter on the data loading ID, e.g. SADMIN

Search on: TechNote: Query Repro

Load one batch of 5,000 rows as a sample from the EIM table to Base table

Catalog taking a large percent of load time?

Yes

Configure IFB file for only tables needed

Configure IFB using ONLY BASE TABLES, IGNORE BASE TABLES, ONLY BASE COLUMNS, IGNORE BASE COLUMNS. Read Siebel Technote #409.

This will be seen as a lot of sp_cursorfetch calls before the first EIM table is queried.

No

Search on: TechNote: Disk Layout

Turn off SQL Profiler

Any Reads or Writes > 5K?
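If the Profiler trace was saved to a table, the expensive statements can be listed with a query instead of scrolling through the trace. This is a sketch only: `dbo.EimTrace` is a hypothetical table name, and the column names are the standard Profiler data columns.

```sql
-- dbo.EimTrace is a hypothetical table that the Profiler trace was saved to.
SELECT  LoginName,
        Duration,
        CPU,
        Reads,
        Writes,
        CAST(TextData AS nvarchar(4000)) AS QueryText
FROM    dbo.EimTrace
WHERE   LoginName = 'SADMIN'               -- the data loading ID used in the trace filter
  AND  (Reads > 5000 OR Writes > 5000)     -- the 5K threshold from the flowchart
ORDER BY Reads DESC;
```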

Yes

100% NULL Indexes?

Yes

100% NULL indexes usually will never be used, take up space and hurt perf on loads.

Run Poor Index Script

Look at EIM and base tables. Put indexes back on base tables when done.

Look at DBCC SHOW_STATISTICS
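As an illustration, the statistics for one index can be inspected as follows. The table and index names are placeholders; substitute the index under review.

```sql
-- Table and index names are illustrative only.
DBCC SHOW_STATISTICS ('S_CONTACT', 'S_CONTACT_U1');
```

The output lists the rows sampled, the density values, and the histogram steps. An index whose leading column is entirely NULL would typically show up as a histogram with a single NULL key, which is one way to confirm a candidate from the poor index script before dropping it.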

Drop indexes as needed

Look at EIM and Base tables

Use Enterprise Manager (EM) to generate re-create scripts before dropping.

Consider Only the Clustered Index on EIM table

Search on: TechNote: Memory Addressing for the Siebel Database Server

No

Read Siebel Technote 409 on EIM Perf & Optimization

Long Running Queries

Yes

Check for Disk Queueing

Check for High CPU usage

Check for Memory Pressure

Is Disk the Bottleneck?

Yes

Spread data over more spindles

Separate data from log and tempdb. Read disk slicing note.

Work with disk vendor

Use NT Perfmon.Exe and look at physical and logical disk queueing events.

No

Download Poor Index Stored Procedure

No

CPU the Bottleneck

Yes

Is MAXDOP set to 1?

Yes

Change it in sp_configure

sp_configure 'max degree of parallelism'. Siebel recommends setting MAXDOP to 1.
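A short sketch of the sp_configure change. 'max degree of parallelism' is an advanced option, so advanced options have to be made visible first.

```sql
-- Make advanced options visible to sp_configure.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;

-- Set MAXDOP to 1, the value the flowchart says Siebel recommends.
EXEC sp_configure 'max degree of parallelism', 1;
RECONFIGURE;

-- Display the configured and running values to confirm the change.
EXEC sp_configure 'max degree of parallelism';
```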

No

No

Work with admins and HW vendor to add more CPU

Memory the Bottleneck

Yes

Read Memory Note

Add RAM as needed

Look at memory counters for indications of thrashing.

No

Unpredictable Plan

Yes

SQL Agent Job to Update Statistics

Put only Clustered index on EIM table

Consider reducing number of records in EIM table

Update statistics via a scheduled job. Sample with higher frequency.
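The job step itself can be a plain UPDATE STATISTICS call. The table names below are illustrative, and the sampling rates are assumptions to be tuned for the data volume.

```sql
-- EIM tables change completely between loads, so a full scan is a reasonable starting point.
UPDATE STATISTICS dbo.EIM_CONTACT WITH FULLSCAN;

-- On a large base table, a higher sampling rate than the default may be enough.
UPDATE STATISTICS dbo.S_CONTACT WITH SAMPLE 50 PERCENT;
```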

Run DBCC SHOWCONTIG on Base Tables

Is there Fragmentation ?

Yes

Is EIM table on own file group?

Yes

Use DBCC DBREINDEX

On the base table, leave room on the page by changing the fill factor and pad index on the clustered index

Pack to 100% on static lookup tables

On static data, you will get better perf at 100% full because fewer reads are needed for the same quantity of data.
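The fragmentation check and the two rebuild cases might look like the sketch below. The table names are illustrative and the fill factor of 80 is an assumed value, not a recommendation from the flowchart. DBCC DBREINDEX sets only the fill factor; applying PAD_INDEX would need the index to be re-created with that option, which is not shown here.

```sql
-- Check fragmentation on a base table and all of its indexes.
DBCC SHOWCONTIG ('S_CONTACT') WITH ALL_INDEXES;

-- Rebuild every index on a heavily inserted base table, leaving about 20% free space per leaf page.
DBCC DBREINDEX ('S_CONTACT', '', 80);

-- Rebuild a static lookup table with its pages packed full.
DBCC DBREINDEX ('S_LST_OF_VAL', '', 100);
```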

No

Read disk layout note and data loading presentation.

Yes

Is EIM table being truncated?

No

No

Archive out EIM table

Don't keep more than 100K rows in the EIM table. More than that can cause perf problems if you get a bad plan.

No

Is there a NULL check on a non-NULL column?

Yes

Use Siebel Tools to make column NOT NULL

By making it NOT NULL in Siebel Tools, the query will not generate this check in the WHERE clause.

Check Query Plan

Run the query in Query Analyzer (QA) and look at the plan with SET SHOWPLAN_ALL ON.
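A sketch of capturing the estimated plan in Query Analyzer, assuming the flowchart's setting refers to SET SHOWPLAN_ALL. The SET statement must be the only statement in its batch, hence the GO separators. The SELECT is a placeholder for the statement captured in Profiler.

```sql
SET SHOWPLAN_ALL ON;
GO
-- Placeholder query: paste the statement captured from Profiler here.
SELECT ROW_ID
FROM   dbo.EIM_CONTACT
WHERE  IF_ROW_BATCH_NUM = 100;
GO
SET SHOWPLAN_ALL OFF;
GO
```

While the setting is on, statements are compiled but not executed, and the plan comes back as a result set whose operator column shows the index scans, bookmark lookups, and table spools that the next steps ask about.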

Any Index Scans?

Yes

Update Statistics

Stale statistics, especially in EIM, can cause bad plans.

Add Index to Base Table as Needed

No

Bookmark Lookups?

Yes

Try a covering index.
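As an illustration of a covering index: if a query filters on one column and returns only one or two others, an index containing all of them lets SQL Server answer from the index alone and skip the bookmark lookup. The query, columns, and index name below are hypothetical.

```sql
-- Hypothetical query being tuned:
--   SELECT LAST_NAME, FST_NAME FROM dbo.S_CONTACT WHERE LAST_NAME = 'Smith'

-- Every column the query touches is in the index key, so no bookmark lookup is needed.
CREATE NONCLUSTERED INDEX IX_S_CONTACT_NAME_COVER
    ON dbo.S_CONTACT (LAST_NAME, FST_NAME);
```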

No

Table Spools?

Yes

Index on ORDER BY

No

Is it optimized for everything else?

Yes

Size of Batch Optimized?

Yes

Running Multiple Batches in Parallel?

Yes

If all is optimized, you are done.

No

No

Start Perf Iteration Over Again

Change Batch Size in IFB file and EIM table

Enable row level locking, disable page level locking with sp_indexoption.

This prevents page-level locks and holds row-level locks instead, which helps concurrency when large batch loads hit the same table(s) in parallel.

There is often a sweet spot at which the batch size is optimal for the process. Test different sizes for your process and hardware. The idea is to spread the cost of reading in the repository over as many rows as possible. Start with 20K and go up or down in 5K increments; 100K is usually the maximum batch size.
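A minimal sketch of the sp_indexoption call. The table name is illustrative; passing a table name rather than an index name applies the option to all indexes on that table.

```sql
-- Disallow page-level locks on every index of the EIM table; row locks stay available.
EXEC sp_indexoption 'dbo.EIM_CONTACT', 'DisAllowPageLocks', 'TRUE';

-- To restore the default behaviour after the load:
-- EXEC sp_indexoption 'dbo.EIM_CONTACT', 'DisAllowPageLocks', 'FALSE';
```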

Start two parallel batches at the same time.

Keep trying more batches with more records to find what the max capacity is. Eventually you will start disk queueing and slow the system down.

Is there blocking and lock escalation?

Yes

Insert one dummy row and begin a transaction around it.

Example: Insert into EIM values (999). Begin tran. Update EIM where ROW = 999 (and don't commit it). This will hold a lock and prevent a table-level lock escalation.
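Spelled out as T-SQL, the idea might look like the sketch below. The table and column names are assumptions for illustration, and the dummy row is assumed to have been inserted and committed beforehand. The open transaction keeps a lock on the table from a second session, which is what stops the loading sessions from escalating to a table lock.

```sql
-- Run in a separate session that stays connected for the duration of the load.
-- Assumes a dummy row with ROW_ID = '999' already exists in the EIM table.
BEGIN TRAN;

UPDATE dbo.EIM_CONTACT
SET    IF_ROW_STAT = IF_ROW_STAT      -- no-op change; only the held lock matters
WHERE  ROW_ID = '999';

-- Leave the transaction open while the EIM batches run.
-- When the load is finished, release the lock:
-- ROLLBACK TRAN;
```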

No

If all is optimized, you are done.

You will always have a bottleneck. Usually this comes at some point when your disk cannot keep up with the database. With EIM, this happens when you are running a whole lot of loads in parallel. After a certain point, you cause thrashing and diminishing returns. This is only found through trial and error.
