Professional Documents

Culture Documents

DOI 2010 Meta-Analysis of Heterogeneous Clinical Trials

DOI 2010 Meta-Analysis of Heterogeneous Clinical Trials

Uploaded by

benjaminsidebotto11Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

DOI 2010 Meta-Analysis of Heterogeneous Clinical Trials

DOI 2010 Meta-Analysis of Heterogeneous Clinical Trials

Uploaded by

benjaminsidebotto11Copyright:

Available Formats

Meta-analysis of heterogeneous clinical trials: An empirical example

Suhail A.R. Doi

a,

, Jan J. Barendregt

b

, Ellen L. Mozurkewich

c

a

Clinical Epidemiology Unit, School of Population Health, University of Queensland, Brisbane, Australia

b

Centre for Burden of Disease and Cost Effectiveness, School of Population Health, University of Queensland, Brisbane, Australia

c

Department of Obstetrics and Gynecology, Division of Maternal-Fetal Medicine, University of Michigan Medical School, Ann Arbor, MI, USA

a r t i c l e i n f o a b s t r a c t

Article history:

Received 17 July 2010

Accepted 6 December 2010

Available online 13 December 2010

Meta-analysis of heterogeneous clinical trials is currently sub-optimal. This is because there has

been no improvement in the method of weighted averaging for such studies since the DL method

in 1986. This article presents the argument for the use of situation specific weights to integrate

results from such trials. An empirical example is given with data from a meta-analysis done

10 years earlier. Previously reported data on 21 studies that looked at the effect of working

conditions onpretermbirths were re-analyzed. Several methods were usedtoestimate the overall

effect sizes. Study specic scores were included in the weighting process when combining studies

and it was shown that this model not only was more conservative than the model of DL but also

retains the legitimacy of the pooledeffect size. The inclusionof appropriate study specic scores in

an appropriate meta-analysis model permits the quantication of the variation between studies

based on something tangible as opposed to the randomadjustments made by the randomeffects

model to the pooled effect size. It is important that such differences are recognized by the wider

research community so that meta-analyses remain a valid tool for synthesizing research.

2010 Elsevier Inc. All rights reserved.

Keywords:

Meta-analysis

Random-effect

Quality-effect

Heterogeneity

Working conditions

1. Introduction

Today, meta-analysis is widely used in a wide range of

disciplines in particular epidemiology and evidence-based

medicine where results of some meta analyses have led to

major changes in clinical practice and health care policies.

Meta-analyses combine the result of several studies that

address a set of related research hypotheses using statistical

methods. The basic hypothesis is that the pooled results from

a group of studies can allow a more accurate estimate of an

effect than an individual study since it overcomes the

problem of reduced statistical power in studies with small

sample sizes.

One problem with meta-analyses today is that differences

between trials, such as sources of bias, are not addressed

appropriately by current meta-analysis models [1]. There are

several reasons for such differences which include chance,

different denitions of treatment effects, credibility related

heterogeneity (quality), and nally unexplainable and real

differences [2]. An important explainable difference is

credibility related heterogeneity (quality) and this refers to

the likelihood of the trial design to generate unbiased results

that are sufciently precise and allow application in clinical

practice [3]. Naturally, the aws in the design of individual

studies will have obvious relevance to creating heterogeneity

between trials as well as an inuence on the magnitude of the

meta-analysis results. If the quality of the primary material is

inadequate, this may falsify the conclusions of the review,

regardless of the presence or absence of effect size hetero-

geneity. The need for addressing heterogeneity in trials via

study specic assessment has been obvious for a long time

and the solution involves more than just inserting a random

termbased on effect size heterogeneity [4] as is done with the

random effects model.

The previous studies [58] that have attempted to

investigate incorporation of some study specic component

in the weighting of the overall estimate concluded that

Contemporary Clinical Trials 32 (2011) 288298

Corresponding author. Clinical Epidemiology Unit, School of Population

Health, University of Queensland, Herston Road, QLD4006, Australia. Tel.: +61

404 181134; fax: +61 7 3365 5599.

E-mail address: sardoi@gmx.net (S.A.R. Doi).

1551-7144/$ see front matter 2010 Elsevier Inc. All rights reserved.

doi:10.1016/j.cct.2010.12.006

Contents lists available at ScienceDirect

Contemporary Clinical Trials

j our nal homepage: www. el sevi er. com/ l ocat e/ concl i nt r i al

incorporating such information into weights provided incon-

sistent adjustment of the estimates of treatment effect. While

these authors follow the same assumption we do that studies

with deciencies are less informative and should have less

inuence on overall outcomes, they focused on individual

studies disregarding the fact that a weight change in one

study impacts all other studies in the meta-analysis. Such

attempts therefore did not reduce bias in the pooled estimate,

and may rather have resulted in an increase in bias.

Recently, a study score adjusted model that overcomes

these limitations has been introduced [9,10]. Our objective

now is to present this quality effects (QE) model that

incorporates study specic scores as weights with consider-

ation of the impact on all studies and compare it to the xed

effects (FE) and random effects (RE) models in a meta-

analysis on working conditions and adverse pregnancy

outcome. We also introduce two important updates to the

QE model as originally published.

2. Rationale

In a group of homogenous trials, it is assumed that because

the effect sizes are homogenous, the studies are all estimating

the same target effect (we can call them type A trials). In this

situation, the inverse variance weights of Woolf [11] will

minimize the variance since:

MSE = Expected EstimateTrue

2

= Variance + Bias

2

:

Bias is zero if the underlying true effect size's are equal and

thus minimizing variance is optimal and the weighted

MSE=Variance. It is thought that this inverse variance

weighted analysis tests the null hypothesis that all studies

in the meta-analysis are identical and show no effect of the

intervention under consideration regardless of homogeneity

[4]. This of course requires the assumption that trials are

exchangeable so that if one large trial is null and multiple

small trials show an effect, the large trial essentially conrms

that the null hypothesis is true. Exchangeability however is a

big assumption and therefore if we do not believe the trials

are exchangeable then in this situation we have two

alternatives: Either the trials have been affected by bias

even though underlying true effects are identical (we can call

these type B trials) or the trials represent different underlying

true effects (we can call these type C trials). In the former

case, the trial effect size from a biased trial might seem like it

is coming from a different underlying true effect, thus giving

the same impression as type C trials: that the trials represent

different underlying true effects. In both types B and C trials,

inverse variance weights will not minimize the variance as it

will just exaggerate and create gross bias in these situations.

Furthermore, any set of weights in a type A situation

estimates the same target, but in a type B or C situation

each set of weights estimates a different target. Thus inverse

variance weights in the latter situation just increases bias and

is not optimal for type B or C trials. Thus in type B or C trials

we would want to use situation specic weights.

One such situation specic weight that has been suggested

for type B trials is weighting according to the probability (Q

i

)

of credibility (internal validity or quality) [810] of the

studies making up the meta-analysis. Although this can

correct for distortions due to systematic error, it can also

introduce errors of another type. For example a study of a

small sample, which is not representative of the underlying

population, may get undeserved weightage from a large

underlying population and this can skew the data. It might

thus be informative to weigh according to precision (inverse

variance) and then re-distribute the weights according to

situation specic requirements. In this case importance of

smaller studies will only get upgraded if the larger or more

precise study is deemed poor by its situation specic weight.

This line of thought is not newas this is precisely what the

random effects model attempts to do [12]. The unfortunate

thing however is that the situation specic weight used in

this particular model is an index of the variability of the effect

sizes across trials and the same situation specic weight is

applied to all trials. This creates two problems, rst that these

weights are meaningless and second that they are not study

specic. In order to rectify this situation, an alternative

approach has been proposed in 2008 [9,10]. In the QE model,

both of the draw-backs of the random model type of re-

distribution have been addressed and an example of this

applied to meta-analysis is provided below.

One further consideration, in type C trials, which deal

usually with burden of disease where true differences across

populations are expected, is the fractional population repre-

sented by the effect size in each study. Of course, a study of, for

example, 1000 respondents is equally useful in examining the

mortality ina country with10 millioninhabitants as it wouldbe

in a country with a population of only 1 million. Without

weighting, any gures that combine data for two or more

countries would over-represent smaller countries at the

expense of larger ones. So a population size weight is needed

to make an adjustment to ensure that each country risk is

represented in the pooled estimate proportional to its

population size. Although such weighting has been attempted

previously, it has been improperly applied [13]. The best

method is to assign a proportional weight between zero and 1

of each study in relation to the largest based on the underlying

population size. The population size weight (Pweight) is thus

the proportional weight Psize

i

/Psize

max

. This corrects for the

fact that most studies may have very similar sample sizes, no

matter how large or small their underlying population. These

weights can be multiplied by internal validity weights (also

between zero and 1) to yield modied internal validity weights

that can then be utilized in the QE model (see Appendix 4).

3. Differences in weighting between models

The standard approach frequently used in meta-analysis in

clinical research is termed the inverse variance method or FE

model based on Woolf [11]. The average effect size across all

studies is computed, whereby the weights are equal to the

inverse variance of each study's effect estimator. Larger studies

andstudies withless randomvariationare givengreater weight

than smaller studies. The weights (w) allocated to each of the

studies are then inversely proportional to the square of the

standard error (se), thus for the ith study

w

i

=

1

se

2

i

289 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

which gives greater weight to those studies with smaller

standard errors.

As can be seen above the variability within each study is

used to weight each studies effect in the current approach to

combining them into a weighted average as this minimizes

the variance (assuming each study is estimating the same

target). So if a study reports a higher variance for its effect size

estimate it would get lesser weight in the nal combined

estimate and vice versa. This approach however does not take

into account the innate variability that exists between the

studies arising from differences inherent to the study such as

their protocols and how well they were executed and

conducted. This major limitation has been well recognized

and it gave rise to the random effects (RE) model approach

[12]. Here, a constant is generated from the homogeneity

statistic Q and using this and other study parameters a

random effects variance component (

2

) is generated. The

inverse of the sampling variance plus this constant that

represents the variability across the population effects is then

used as the weight

w

i

=

1

se

2

i

+

2

where w

i

*

is the random effects weight for the ith study.

However, because of the limitations of the RE model,

when used in a meta-analysis of badly designed studies, it

will still result in biased estimates even though there is

statistical adjustment for effect size heterogeneity [4].

Furthermore, such adjustments, based on an articially

inated variance, lead to a widened condence interval,

supposedly to reect effect size uncertainty, but do not have

much clinical relevance [4,14]. Therefore, a new method for

adjusting for heterogeneity can use situation specic para-

meters and in this case we use study specic scores. These are

then rescaled to a probability Q

i

, the judgement of the

probability (0 to 1) that study i is credible or the proportion

(01) that the underlying study i population makes up of the

largest underlying population of interest and so on. From Q

i

a

study specic composite called

Q

i

is generated that takes into

consideration study specic information and its relationship

to other studies to re-distribute inverse variance weights. As

studies increase in quality or are more representative of the

population, re-distribution becomes progressively less and

ceases when all studies are of perfect quality or representa-

tion. This is totally different from the direct adjustment for

quality of xed effects weights reported by several authors

[5,7,8]. With the QE model, rather than use study specic

scores directly, the composite

Q

i

is generated [10]. The QE

weight, w

i

, is then:

w

i

=

Q

i

w

i

:

Detailed aspects of computation of this model are given in

Appendix 1 with an important update for overdispersion

using a correction factor for individual study variances. A

simulation of 10,000 iterations is detailed in Appendix 2 that

compares the properties of QE and RE models under different

levels of systematic error. This further conrms the validity of

the QE model both under varying levels of between study

differences and in comparison to the RE model.

The extent of redistribution of these weights due to non-

credibility can be assessed via a Q-index dened as follows:

Q =

n

1

1Q

i

w

i

n

1

w

i

_

_

_

_

_

_

_

_

_

_

:

The rationale for this index is that it tells us the probability

that the information contained within the studies in the

meta-analysis is non-credible. The higher the Q-index, the

more likely that the information contained is non-credible

and thus, as Q-index increases, the weights in the meta-

analysis come together and the condence interval of the

pooled estimate increases.

4. Results of application of each of these models

Data from a meta-analysis of 21 studies on the association

of working conditions with preterm birth published between

1983 and 1998 are utilized. The studies were those reported

10 years earlier by Mozurkewich et al. [15]. Each study had

evaluated the effect of working conditions on pretermbirth of

women with a control group. Data abstraction and quality

assessment of each study have previously been reported.

These studies represent a mix of casecontrol, cross-sectional

and retrospective cohorts with large asymmetry between

distribution of individuals in experimental and control arms

and while effect sizes are relatively homogenous, the

included studies are far from homogenous as also indicated

by their distribution of quality scores in Table 1.

The xedeffect (FE) model (inverse variance weighted), the

DerSimonianand Laird's RE method (randomre-distribution of

inverse variance weights) [12] and the Doi and Thalib QE

method (study specic re-distribution of inverse variance

weights) [9,10] were used to calculate the overall effect size.

The quality scores and the effect size estimates of the effect of

working conditions on preterm birth are presented in Table 1,

which also shows the study-specic weights, as a proportion of

the sum of weights, for the xed, random and quality effects

models. We rst showed that since effect size heterogeneity

was minimal (

2

=0.0027), the pooled effect size fromall three

models was comparable. However, because of fairly homoge-

nous effect sizes, QE weighting serves to decrease our

condence in the pooled effect size rather than alter the

estimate of the pooled effect size. Incorporation of the quality

scores was alsoassociatedwitha more conservative condence

interval (1.031.44) thanwiththe REmodel (1.121.3) because

with decreasing effect size heterogeneity, the RE model

approaches the FE model. Differences in weight of individual

studies by QE versus RE model ranges from 18.1 to +3.5%

(Table 1, QE weight minus RE weight). The Q-index was 0.62

suggesting this probability of non-credibility of information

within the studies.

We next explored the impact of changes in the effect size

of the largest study because that is the study with the greatest

impact on a meta-analysis. The rationale for doing this is to

create several levels of effect size heterogeneity. When the

odds ratio (OR) for the largest study (study 9 in Table 1,

N=104,262) was biased to different degrees, there were

290 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

ensuing alterations in the random effects variance compo-

nent (

2

).There was a U shaped relationship between the

2

and the odds ratio with the minimum

2

corresponding to an

OR of 1.24 (Fig. 1) and the randomeffect weight of the largest

study (study 9) had a clear decrease with increasing

2

(Fig. 2). There was no change in the QE weight of study 9 with

increase in heterogeneity of effect sizes (Fig. 2), conrming

that effect size heterogeneity has nothing to do with the way

the QE model's pooled estimate is computed.

The relationship between effect size in the biggest study

and its impact on the pooled RE model effect size is depicted

in Fig. 3. An effect size of 1.24 was associated with the least

effect size heterogeneity (

2

) and thus maximum weight of

study 9 (centre vertical line in Fig. 3). Effect size heteroge-

neity progressively increases on either side of this line with

the weight of study 9 decreasing as heterogeneity increased.

Despite these predictable changes in effect size heterogeneity

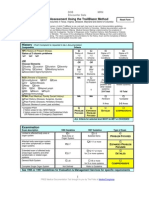

Table 1

Data from 21 studies on the effect of working conditions on preterm births in healthy women along with study specic weight for the xed effects, Der Simonian

Laird random effects model and the Doi Thalib quality effects model.

Study Design N

EXP

/N

CONT

Events

E

EXP

/E

CONT

Quality score

(Q

i

)

OR

(95% CI)

w

i

/w

i

(%)

w

i

/w

i

(%)

w

i

/w

i

(%)

Absolute deviation of

OR from median

1 Case control 87/144 25/48 0.57 0.81

(0.451.44)

0.8 1.7 4.2 0.46

2 Cross-sectional 578/1350 47/65 0.42 1.75

(1.182.58)

1.7 3.6 3.4 0.48

3 Retrospective survey 328/1934 20/89 0.33 1.36

(0.832.23)

1 2.3 2.5 0.09

4 Case control 67/291 29/141 0.71 0.81

(0.481.39)

0.9 2 5.3 0.46

5 Cohort 3518/1700 127/56 0.50 1.1

(0.81.51)

2.5 5.2 4.5 0.17

6 Case control 6245/429 579/26 0.50 1.57

(1.052.35)

1.5 3.4 4 0.3

7 Cohort 472/6628 62/746 0.50 1.2

(0.911.58)

3.3 6.7 4.8 0.07

8 Prospective cohort 258/638 15/24 0.42 1.59

(0.823.06)

0.6 1.3 3 0.32

9 Prospective cohort 32,784/

71,478

1836/

3288

0.33 1.23

(1.161.3)

73 42.2 24.1 0.04

10 Cross-sectional 250/624 15/30 0.50 1.27

(0.672.4)

0.6 1.4 3.6 0

11 Cross-sectional 249/286 22/23 0.25 1.11

(0.62.03)

0.7 1.5 1.8 0.16

12 Cross-sectional 2479/1911 131/104 0.50 0.97

(0.741.26)

3.6 7.3 5 0.3

13 Prospective cohort 72/11 5/0 0.50 3.51

(0.06204)

0 0 3.3 2.24

14 Case control 771/699 127/83 0.64 1.46

(1.091.97)

2.8 5.9 6 0.19

15 Prospective cohort 1113/3107 37/121 0.58 0.85

(0.591.24)

1.8 3.9 4.8 0.42

16 Prospective cohort 287/325 32/42 0.33 0.85

(0.521.38)

1.1 2.4 2.5 0.42

17 Cross-sectional 504/1897 71/214 0.50 1.29

(0.971.72)

3 6.3 4.7 0.02

18 Prospective cohort 79/434 3/21 0.58 0.83

(0.252.73)

0.2 0.4 3.9 0.44

19 Retrospective cohort 56/862 5/52 0.25 1.59

(0.624.07)

0.3 0.7 1.7 0.32

20 Prospective cohort 39/1127 9/189 0.42 1.52

(0.713.22)

0.4 1 2.9 0.25

21 Prospective cohort 122/224 9/13 0.58 1.3

(0.543.1)

0.3 0.8 4 0.03

Pooled OR All 1.22

(1.161.28)

1.2

(1.121.3)

1.22

(1.031.44)

OR, Odds Ratio; w

i

, xed effect weight; w

i

, random effects weight, w

i

, quality effects weight.

0

0.01

0.02

0.03

0.04

0.05

0.06

0.8 1 1.2 1.4 1.6

Biased study 9 OR

2

a

f

t

e

r

b

i

a

s

i

n

s

t

u

d

y

9

Fig. 1. Relationship between effect size of the biggest study and Tau squared

in the RE model. Tau squared was recalculated for various biases in the effect

size of study 9 created by varying the experimental group event rate. Tau

squared was minimal at an OR for study 9 of 1.24.

291 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

and study 9 weight, the effect on the pooled effect size was

unpredictable and bore no relationship to the degree of bias

in the effect size of study 9 (Fig. 3).

In the QE model, regardless of what was the extent of the

effect size bias introduced, weight of study 9 remains similar

and therefore the pooled odds ratio for the meta-analysis

under the QE model was also biased in the same direction

(given the high study precision) but only if the study was

deemed to be of good quality (Fig. 4). However, if the

magnitude of the effect size bias is assumed to increase

(irrespective of direction) with decreases in quality, then

there will be minimization of the effect of this bias on the

pooled estimate in the QE model as when the quality of this

study was progressively decreased, the pooled estimate

gradually returned towards the original estimate of an OR

of approximately 1.22 (Fig. 4).

The way the 95% CI of the overall effect size estimate of the

QE model changes with quality is shown in Figs. 5 and 6.

When study 9 is biased to an OR of 0.96, the QE model pooled

estimate was biased in that direction. However, as its quality

of study 9 was decreased, the pooled estimate returned to its

baseline value albeit with a wider condence interval

suggesting that we should have less condence in this pooled

value (Fig. 5). When effect size heterogeneity was minimal

across studies and study 9 was non-biased (Fig. 6), as

credibility of study 9 was decreased, our condence in the

pooled estimate also decreased (wider condence interval),

despite the pooled effect size remaining constant.

Finally, the weight gain (over inverse variance weight) of

the smaller studies that received additional weight in both

the RE and QE models was plotted against a measure of their

heterogeneity. The measure in the RE model was a deviation

of the study effect size from the median and in the QE model

was the probability that the study was credible. The RE re-

distribution bore no relationship to heterogeneity in effect

size (Fig. 7), but the QE model bore a denite linear

relationship to quality (Fig. 8).

5. Discussion

The inclusion of study specic scores in meta-analyses

permits a better understanding of the variation between

studies. The QE method, just described, can be easily applied

and seems to generate more conservative overall estimates

when there is heterogeneity in quality between studies. This

is evident from the fact that the QE summary had a higher

variance and, consequently, wider condence interval than

the RE summary in this meta-analysis. Thus, RE summaries

are not predictably conservative and this has been a problem

even in their relationship to xed effect summaries [16].

0.8

0.9

1

1.1

1.2

1.3

1.4

1.5

1.6

1.7

1.8

0.7 0.9 1.1 1.3 1.5 1.7

Biased study 9 OR

P

o

o

l

e

d

R

E

M

O

R

a

n

d

9

5

%

C

I

Increasing heterogeneity Increasing heterogeneity

Fig. 3. Relationship between the effect size in the largest study and the

pooled RE model effect size. The pooled RE effect size was recalculated for

various biases in the effect size of study 9 created by varying the

experimental group event rate. The vertical line is the point where tau

squared is minimal and heterogeneity in effect size increases on either side of

the line.

0.8

0.9

1

1.1

1.2

1.3

1.4

1.5

1.6

0 0.2 0.4 0.6 0.8 1

Quality of study 9

P

o

o

l

e

d

Q

E

M

O

R

OR 0.82

OR 0.96

OR 1.1

OR 1.24

OR 1.38

OR 1.53

OR 1.68

Fig. 4. Relationship between quality of the largest study, its effect size bias

and the pooled QE model effect size. The pooled QE effect size was

recalculated for various biases in the quality of study 9 created by varying

this by 0.1 each time as well as for various biases in the effect size of study 9

created by varying the experimental group event rate. The vertical line

represents the true quality of study 9. Regardless of the direction or

magnitude of the effect size bias, the pooled effect size returned to its original

value when quality decrements were deemed to be associated with

increased effect size bias in study 9.

0.8

0.9

1

1.1

1.2

1.3

1.4

1.5

1.6

0.00 0.20 0.40 0.60 0.80 1.00

Probability that study 9 is credible (0-1)

Study 9 OR biased to 0.96

P

o

o

l

e

d

Q

E

M

O

R

a

n

d

9

5

%

C

I

Fig. 5. Relationship between quality and the 95% condence interval of the

QE model pooled effect size when study 9 effect size is biased to 0.96 (OR).

The thin lines are the 95% condence limits on the pooled QE model effect

size (thick line).

0

10

20

30

40

50

0 0.02 0.04 0.06 0.08 0.1

Tau squared

W

e

i

g

h

t

o

f

b

i

g

g

e

s

t

s

t

u

d

y

(

%

)

Fig. 2. Relationship between tau squared and weight allocated to the biggest

study in both the RE and QE models. As tau squared increased, weight

progressively decreased in the RE model (circles) but there was no

signicant impact on the QE model (squares).

292 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

In the RE model, the weight of the larger studies are

redistributed to smaller studies but

2

has a decreasing effect

as study precision declines [14]. The size of

2

is determined

by howheterogeneous the effect sizes are and if

2

is zero, the

RE model defaults to the FE model. If we focus on the largest

study, the bigger its difference from other studies, the bigger

the

2

and the decrease in weight of this study.

2

has a

U-shaped association with effect size in the largest study,

being minimal when the largest study conforms to other

study effect sizes, and as this effect size departs from that of

other studies,

2

increases. The weight of the largest study

then declines as

2

increases. However, while the biggest

individual study weight decrements associated with bigger

2

follow a predictable pattern, the impact of different

2

values

on the pooled estimate is unpredictable. This happens

because while individual study weight changes are predict-

able from

2

, the relationship of weight gain across smaller

studies bears no relationship to which study shows the most

effect size heterogeneity (Fig. 7).

In contrast, with the QE model, the weight of the larger

studies is redistributed to smaller studies only if their

credibility is deemed to be lower. The correction,

i

, is

individualized to each study and increases as study credibility

declines but with an impact proportional to study precision

[9,10]. If

i

is zero for all studies, the QE model defaults to the

FE model. The correction fromone study is redistributed to all

other studies proportional to their credibility. The impact on

the pooled estimate is thus totally driven by what each study

has to offer and maintains a relationship of weight gained

across studies based on heterogeneity in quality (Fig. 8).

If we focus on the largest study again, the bigger its

decrease in credibility, the bigger the penalty applied to this

study by the QE model. Thus a non-credible large study will

not be able to alter estimates of the pooled effect much even if

its effect size is considerably different from the rest of the

studies. But non-credibility also leads to less condence in the

pooled estimate, reected by wider condence intervals. We

have shown in this meta-analysis that if the effect size of the

largest study was biased to an OR of 0.96, the pooled QE

model summary estimate changes in favor of this study only if

its credibility was high. As credibility is decreased the pooled

estimate returns towards its former value. Credibility infor-

mation thus acts as a regulatory mechanism that controls the

impact any one study can have on the pooled estimate.

While this mechanism pre-supposes that non-credibility

leads to bias in the effect-size, this supposition is backed by

clear evidence suggesting that inadequate methodological

reporting correlates with bias in estimation of treatment

effects [1,6,1720]. However, there could be instances where

credibility information does not lead to bias in the estimation

of treatment effects or alternatively where such biases may

have been obscured by the lack of credibility and this meta-

analysis is an example of the latter situation. In such cases, the

QE model is still valid and credibility information results

simply in decreased condence (wider condence intervals)

in the pooled estimate. We do not delete lower quality studies

because every study has something to add to the weighted

estimate and we do not know what the relationship of study

specic scores are to the magnitude nor direction of bias.

However if this weighting is not basedonstudy or goal specic

attributes then the weighted estimate loses meaning. A

sensitivity analysis on the other hand can only tell us that

subgroups are heterogeneous but not what the true estimate

0.9

1

1.1

1.2

1.3

1.4

1.5

1.6

0.00 0.20 0.40 0.60 0.80 1.00

Probability that study 9 is credible (0-1)

Non-biased study 9 OR

P

o

o

l

e

d

Q

E

M

O

R

a

n

d

9

5

%

C

I

Fig. 6. Relationship between quality and the 95% condence interval of the

QE model pooled effect size when study 9 effect size is un-biased. The thin

lines are the 95% condence limits on the pooled QE model effect size (thick

line). As study 9 had minimal effect size heterogeneity, the pooled QE model

effect size did not change with decrements in quality but the condence

interval widened.

0.0

1.0

2.0

3.0

4.0

5.0

0 0.2 0.4 0.6

Deviation of study ES (OR) from the median

A

d

d

i

t

i

o

n

a

l

w

e

i

g

h

t

%

u

n

d

e

r

R

E

m

o

d

e

l

Fig. 7. Relationship between measure of heterogeneity in each study and the

additional weight given (over inverse variance weighting) to each of the

smaller studies under the RE model (study 9 that lost weight was excluded).

Heterogeneity was calculated as the absolute value of the median effect size

across all 21 studies minus the study effect size.

0.0

1.0

2.0

3.0

4.0

5.0

0.20 0.40 0.60 0.80

Probability that study is credible

A

d

d

i

t

i

o

n

a

l

w

e

i

g

h

t

%

u

n

d

e

r

Q

E

m

o

d

e

l

Fig. 8. Relationship between measure of heterogeneity in each study and the

additional weight given (over inverse variance weighting) to each of the

smaller studies under the QE model (study 9 that lost weight was excluded).

Heterogeneity was calculated as the probability that the study was credible.

293 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

is likely to be. In type B studies (systematic error), study

specic scores can lead to the best approximation of the true

effect size while in type C studies (true underlying differ-

ences), using population weights to pool studies can give a

better idea of the population wide estimate. None of these

would be possible with either the RE model or sensitivity

analyses.

While weighting study estimates by their study specic

scores, we must keep in mind that these scores do not tell us

the direction or magnitude of the change in effect size that is

attributable to that score. The QE method of Doi and Thalib

[9], is not constrained by this limitation, because, unlike

previous methods, it does not adjust a study weight directly

but discounts it in relation to all other study weights based on

its quality status. This is exactly what the RE model does too,

the major difference being that the latter adds on weight to

smaller studies without any rationale for doing so and the

process ultimately becomes random. This is because

2

is not

individualized to each study as is

i

in the QE model. This is

demonstrated in Figs. 78 where a gradual increase in weight

of smaller studies with quality is seen but not with effect size

heterogeneity. This also explains why previous attempts to

incorporate study specic scores into weights have failed to

provide sufcient adjustment of the estimates of treatment

effects as they failed to consider heterogeneity individualized

from one study to the next, or worse, even thought of

incorporating study specic scores over the random redistri-

bution in an RE model [5,8].

Greenland suggested more than a decade ago that quality

scoring merges objective information with arbitrary judg-

ments in a manner that can obscure important sources of

heterogeneity among study results [21]. He gave the example

of dietary quality scoring in the Nurses Health Study and states

that the result would likely indicate no diet effects associated

with disease if the effects of important quality items are

confounded within strata of the summary quality score [21].

The problemis to use the information regarding quality in this

way. If we viewedthe diet quality score as the probabilitythat a

Nurses diet is accurately measured (by dividing each score by

the maximumpossible score), we wouldbe able to rank Nurses

by best to worse reliability of dietary information. Even if this

ranking is subjective or poor, we would still be more condent

about diets relationship to disease in high scorers than in low

scorers. This is the correct use of quality scores, but would not

work with conventional meta-analysis models because spread

of precision and effect size take precedence over any

stratication done by quality score [14]. The fact that previous

authors used scores as exclusion criteria or to sequentially

combine trial results using these models would only increase

bias by altering the range of precision and effect size

differences among stratied studies [14]. This is probably

why the reports of stratication of meta-analyses by quality

score report noclear impact onthe pooledestimate [19,2224].

Study specic assessment has not, till now, found an

acceptable means of becoming an important part of meta-

analyses. More than half of published meta-analyses do not

specify in the methods whether and how they would use

study specic assessment in the analysis and interpretation of

results and only about one in a thousand systematic reviews

consider weighting by quality score [25]. This is probably

because of the lack, until now, of an adequate model to do so

and therefore those meta-analyses that had an a priori

conceptualization of quality simply linked it to the interpre-

tation of results or to limit the scope of the review. Although,

there is no gold standard and we still do not knowhowbest to

measure quality, this is not an obstacle to the quality effects

analysis because it works with any quality score [9]. Given

that we have demonstrated that the RE model randomly

adjusts estimates of treatment effects in a meaningless

fashion, it may now be time to switch from observed random

statistical effect size heterogeneity to models that are based

on measured study specic estimates of their heterogeneity.

Appendix 1. The quality effects model computations

The quality adjustor,

i

is given by:

i

=

w

i

w

i

Q

i

N1

where w

i

is the inverse variance weight and Q

i

is the

credibility of study i ranging from zero to 1 and N is the

number of studies in the meta-analysis. This quality adjustor

is then used to compute tau hat. There was a slight error in

the previously published [10] computation of tau hat by the

alternate quality adjustor that allowed in certain situations a

negative weight. This was rectied by computing an adjusted

Q

i

rst as follows:

Q

i

adj =

N

i =1

Q

i

_ _

N

i =1

i

_ _

N1

_

_

_

_

_

_

_

_

_

_

_

_

+ Q

i

if Q

i

Q

i

b 1

Q

i

Otherwise

Tau hat is then given by:

i

=

N

i =1

i

_ _

N

Q

i

adj

N

i =1

Q

i

adj

_

_

_

_

_

_

_

_

_

_

i

:

Tau hat then is used to compute the study specic

variance component Q hat as follows:

Q

i

= Q

i

+

i

w

i

_ _

:

What these equations do is to replace the random effects

variance component with study specic variance components

so that the target this meta-analysis is estimating becomes

meaningful. The nal summary estimate is then given by:

ES

QE

=

w

i

ES

_ _

w

i

=

Q

i

w

i

ES

i

_ _

Q

i

w

i

_ _

where ES is the pooled effect size measure and it has a

variance (v) given by

V

QE

= v

i

w

i

w

i

_ _

2

:

294 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

And thus since w

i

= w

i

Q

i

(where w

i

=

1

v

i

) this reduces to

V

QE

=

Q

2

i

w

i

_ _

Q

i

w

i

_ _ _ _

2

:

However, based on simulation studies, there is signicant

over-dispersion and thus this variance estimate underesti-

mates the true variance and leads to a condence interval

with poor coverage. To rectify this, we added a correction

factor (CF) for over-dispersion based on the Q statistic as

follows:

CF = 1max 0;

Q N1

Q

_ _ _ _

0:25

:

For computation of the variance of the weighted average,

the variance of each study was then inated to the power CF

as follows:

w

i

=

1

v

CF

i

if v

i

b 1 or w

i

=

1

v

2CF

i

if v

i

N 1:

This was then used to compute V

QE

as follows:

V

QE

=

Q

2

i

w

i

_ _

Q

i

w

i

_ _ _ _

2

:

Based on the simulation outlined below in Appendix 2, the

coverage probability of this condence interval is not less than

90% and usually more than the nominal level of 95% for the QE

model even in the presence of substantial heterogeneity.

Assuming the distribution of these estimates is asymptotically

normal, the 95% condence limits are easily obtained by:

95%CI = ES F1:96

v

QE

_

_ _

:

The effect size used in this study was the Ln(OR) with the

se for each study given by:

se =

1

a

+

1

b

+

1

c

+

1

d

_

where a, b, c and d represent the cell counts in a 22 table for

each study. To account for zero outcomes in one of the

studies, a continuity correction was applied by adding 0.5 to

all cell counts [26].

All analyses were done using MetaXL (www.epigear.com)

or an excel spreadsheet available fromthe authors on request.

Appendix 2. Simulation study

This meta-analysis was made up of a fairly homogenous group of studies and thus the comparison above actually compares

essentially a FE and QE model. We therefore decided to simulate what happens when heterogeneity is increased by adding

systematic error to each study. This error needs to be in either direction and of variable magnitude for each study score. We did this

by rst replacing the numbers of events in both arms of the studies by a generation from a binomial distribution with parameters

(N1,p1) and (N2,p2) in the intervention and control arms respectively. N1 and N2 were xed to the same as the original study

data. p2 was generated from a uniform distribution with parameters (0.03, 0.48) which represented the range of control group

event rates in the original meta-analysis. p1 was then computed to deliver an odds ratio of 1.22 which is the odds ratio in the

original QE meta-analysis. This was done by assigning p1 as follows:

p1 =

p2 1:22

1p2 + p2 1:22

:

The standard error (se

i

) for each study thus generated was computed and then inated by a fraction of 0.5 based on each study's

credibility to mimic systematic error as follows:

se

= se

i

+ 0:5 1Q

i

:

A new effect size (LnES

new

) adjusted for systematic error was then generated from a normal distribution with parameters

(Ln1.22, se). P2 generated previously was then used to re-compute p1 that would deliver an odds ratio of exp(LnES

new

) as

follows:

p1

=

p2 exp LnES

new

1p2 + p2 exp LnES

new

:

Anewmeta-analysis was thencomputedwithevents generatedfromabinomial distributionwithparameters (N1,p1) and(N2,p2) in

the intervention and control arms respectively. This meta-analysis was then run 10,000 times using Ersatz simulation software (www.

epigear.com). The simulation was then run two more times, once with decreasing quality with decrease in study size (0.9 for the three

largest studies, then0.8 for the next three andso on) andonce more witha randomnumber betweenzero andone for quality. The results

comparing the QE and RE models are given in the tables below.

295 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

Appendix 3. Overdispersion correction

In a study with overdispersed data, the mean or

expectation structure () is adequate but the variance

structure [

2

()] is inadequate. Individuals in the study can

have the outcome with some degree of dependence on study

specic parameters unrelated to the intervention. If such data

are analyzed as if the outcomes were independent, then

sampling variances tend to be too small giving a false sense of

precision. One approach is to think of the true variance

structure as following the form [()

2

()]; however it is

complex to t such a form. As a simpler approach we suppose

()=c, so that the true variance structure [c

2

()] is some

constant multiplier of the theoretical variance structure. A

common method of estimating c is to use the observed chi

squared goodness of t statistic for the pooled studies divided

by its degrees of freedom [2729]:

c =

2

= df:

If there is no overdispersion or lack of t, c=1 (because

the expected value of the chi squared statistic is equal to its

degrees of freedom) and if there is then cN1. In a meta-

analysis this goodness of t chi squared divided by its df is

equal to H

2

as dened by Higgins [30].

The problem of using the overdispersion parameter as a

constant multiplier of the variances of each study in the meta-

analysis presupposes that for a constant increase in this

parameter, there is a constant increase in variance. This

means that the impact of the parameter is not capped and a

Appendix Table S2.1

Results of 10,000 simulations using original quality scores. True underlying effect size was 1.22.

Parameter QE results RE results

Coverage probability of the condence interval 99.3% 88.1%

Mean pooled estimate (95% CI)

Range

1.237 (1.2321.239)

0.711.94

1.252 (1.2491.254)

0.841.91

Median variance (IQR)

Range

0.033 (0.0170.054)

0.00390.414

0.007 (0.00530099)

0.00120.043

Pooled effect size N1.12 and b1.32 44.97% 55.8%

Pooled effect size b1 6.7% 1.51%

Pooled effect size N1.5 6.8% 3.3%

Median Tau squared (IQR)

Range

0.105 (0.0730.158)

0.00680.825

Median Q-index (IQR) 0.63 (0.620.64)

Appendix Table S2.2

Results of 10,000 simulations using greater quality for bigger studies. True underlying effect size was 1.22.

Parameter QE results RE results

Coverage probability of the condence interval 95.5% 86.4%

Mean pooled estimate (95% CI)

Range

1.22 (1.2241.226)

1.01.48

1.25 (1.2481.252)

0.891.76

Median variance (IQR)

Range

0.0034 (0.00240.0047)

0.00260.0329

0.0036 (0.00290.0044)

0.00340.011

Pooled effect size N1.12 and b1.32 87.28% 69.01%

Pooled effect size b1 0% 0.27%

Pooled effect size N1.5 0% 1.04%

Median Tau squared (IQR)

Range

0.042 (0.0310.055)

00.175

Median Q-index (IQR) 0.13 (0.1290.146)

Appendix Table S2.3

Results of 10,000 simulations using random quality scores. True underlying effect size was 1.22.

Parameter QE results RE results

Coverage probability of the condence interval 99.2% 87.7%

Mean pooled estimate (95% CI)

Range

1.230 (1.2281.233)

0.732.03

1.252 (1.2491.254)

0.871.85

Median variance (IQR)

Range

0.0272 (0.0150.049)

0.00040.7196

0.0067 (0.00490093)

0.00080.0679

Pooled effect size N1.12 and b1.32 56.75% 56.68%

Pooled effect size b1 4.19% 1.4%

Pooled effect size N1.5 4.04% 3.59%

Median Tau squared (IQR)

Range

0.097 (0.0640.148)

0.0031.35

Median Q-index (IQR) 0.499 (0.320.686)

296 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

point is eventually reached where there is over-ination of

the variances for a given level of overdispersion resulting in

overcorrection and condence intervals that are too wide. In

order to reduce the impact of large values of H

2

we can

transform H

2

to its reciprocal and use this to proportionally

inate the variances. Interestingly, Higgins [30] has also

dened an I

2

parameter, which is an index of dispersion that

is restricted between zero (no dispersion) and 1. If we reverse

the I

2

scale (by subtracting it from 1) so that no dispersion

(only sampling error) is now 1 as opposed to zero, then

(1I

2

) is indeed the reciprocal of H

2

. We thus used (1I

2

) as

an exponent to proportionally inate study variances b1. For

variance N1, we used 2 minus this overdispersion parameter

(which reduces to [I

2

+1]) as the ination factor. Additional

re-scaling was done by scaling (1I

2

) to various roots and

using the simulation described above to see the impact on

coverage of the condence interval. The fourth root was

found to result in an acceptable simulated coverage of the

condence interval around 95%. We thus used [(1I

2

)

1/4

] as

the nal overdispersion correction factor. This is also

equivalent to (1/H

2

)

1/4

. This correction was then used to

inate the variances of individual studies resulting in a more

conservative meta-analysis pooled variance. Even if the

accuracy of this approximation is questionable, common

sense suggests that it is better to perform this correction,

implicitly making the (more or less incorrect) assumption

that the distribution of c is approximated well enough by a

2

-distribution with k1 degrees of freedom than not to

perform any correction at all, implicitly making the (certainly

incorrect) assumption that there is no overdispersion in the

data [28]. Finally, it must be pointed out that this adjustment

in the QE model corrects for overdispersion within studies

that affect the precision of the pooled estimate, not for

heterogeneity between studies that affect the estimate itself.

Appendix 4. Quality scores and population impact scores

For a quality effects type of meta-analysis, a reproducible, and effective scheme of quality assessment is required. However, any

quality score can be used with the method and thus we are not constrained to any one method. The scheme we used in the meta-

analysis that we report here was actually the same used in the original meta-analysis and adapted from criteria for quality

evaluation of the internal validity of observational studies dened by Realini and Goldzieher [31]. After study-specic

modication, this system allowed a maximum overall score of 14 for casecontrol studies and 12 for other observational study

designs (cross-sectional studies, prospective and retrospective cohorts). For casecontrol studies, 02 points were assigned based

on each of 7 components:

1) predetermined method for selection of cases and controls,

2) dened work-related exposure ,

3) unbiased data collection,

4) equivalent patient recall (anamnestic equivalence)

5) exclusions unlikely to create bias (avoidance of constrained cases and controls)

6) equal demographic susceptibility and

7) equal clinical susceptibility.

For cohort and cross-sectional studies, 02 points were assigned for each of six parameters which included:

1) equal demographic susceptibility,

2) equal clinical susceptibility

3) adherence monitoring,

4) analysis of dropouts,

5) representativeness of population and

6) prospective versus retrospective cohort.

There are many different quality assessment instruments and most have got parameters that allow us to assess the likelihood

for bias. While there are many different items that relate to study quality, in an attempt to standardize these a Delphi method has

been used [32]. This is so called because the 206 items associated with study quality that were initially listed were reduced to nine

by means of the Delphi consensus technique. This scheme seeks to assess three dimensions of the quality of studies (internal

validity, external validity and statistical analysis), and focuses on experimental trials. Although the importance of such quality

assessment of experimental studies is well established, quality assessment of other study designs in systematic reviews is far less

well developed [33]. The feasibility of creating one quality checklist to apply to various study designs has been explored [34], and

research has gone into developing an instrument to measure the methodological quality of observational studies [35], and a scale

to assess the quality of observational studies in meta-analyses [36]. Nevertheless, there is as yet no consensus on howto synthesize

information about quality from a range of study designs within a systematic review. We now know that a more balanced view of

observational and experimental evidence is necessary [37], and therefore we proposed previously [9] a combination of the

NewcastleOttawa quality assessment scale for observational studies and the Delphi model for experimental studies as a possible

step forwards. The way Q

i

is computed from the score for each study and the additional use of population weights (for burden of

disease studies) is depicted in the table below.

297 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

References

[1] Conn VS, Rantz MJ. Research methods: managing primary study quality

in meta-analyses. Res Nurs Health 2003;26(4):32233.

[2] Bailey KR. Inter-study differences: how should they inuence the

interpretation and analysis of results? Stat Med 1987;6(3):35160.

[3] Verhagen AP, de Vet HC, de Bie RA, Boers M, van den Brandt PA. The art

of quality assessment of RCTs included in systematic reviews. J Clin

Epidemiol 2001;54(7):6514.

[4] Senn S. Trying to be precise about vagueness. Stat Med 2007;26(7):

141730.

[5] Berard A, Bravo G. Combining studies using effect sizes and quality

scores: application to bone loss in postmenopausal women. J Clin

Epidemiol 1998;51(10):8017.

[6] Moher D, Pham B, Jones A, et al. Does quality of reports of randomised

trials affect estimates of intervention efcacy reported in meta-

analyses? Lancet 1998;352(9128):60913.

[7] Leeang M, Reitsma J, Scholten R, et al. Impact of adjustment for quality

on results of metaanalyses of diagnostic accuracy. Clin Chem 2007;53

(2):16472.

[8] Tritchler D. Modelling study quality in meta-analysis. Stat Med 1999;18

(16):213545.

[9] Doi SA, Thalib L. A quality-effects model for meta-analysis. Epidemiology

2008;19(1):94100.

[10] Doi SA, Thalib L. An alternative quality adjustor for the quality effects

model for meta-analysis. Epidemiology 2009;20(2):314.

[11] Woolf B. On estimating the relation between blood group and disease.

Ann Hum Genet 1955;19(4):2513.

[12] DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin

Trials 1986;7(3):17788.

[13] BathamA, Gupta MA, Rastogi P, et al. Calculating prevalence of hepatitis

B in India: using population weights to look for publication bias in

conventional meta-analysis. Indian J Pediatr 2009;76(12):124757.

[14] Al Khalaf MM, Thalib L, Doi SA. Combining heterogenous studies using

the 724 random-effectsmodel is amistake and leads to inconclusive

meta-analyses. J Clin Epidemiol 2011;64(2):11923.

[15] Mozurkewich EL, Luke B, Avni M, Wolf FM. Working conditions and

adverse pregnancy outcome: a meta-analysis. Obstet Gynecol 2000;95

(4):62335.

[16] Poole C, Greenland S. Random-effects meta-analyses are not always

conservative. Am J Epidemiol 1999;150(5):46975.

[17] Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias.

Dimensions of methodological quality associated with estimates of

treatment effects in controlled trials. JAMA 1995;273(5):40812.

[18] Kjaergard LL, Villumsen J, Gluud C. Reported methodologic quality and

discrepancies between large and small randomized trials in meta-analyses.

Ann Intern Med 2001;135(11):9829.

[19] Balk EM, Bonis PA, Moskowitz H, et al. Correlation of quality measures

with estimates of treatment effect in meta-analyses of randomized

controlled trials. JAMA 2002;287(22):297382.

[20] Egger M, Juni P, Bartlett C, Holenstein F, Sterne J. How important are

comprehensive literature searches and the assessment of trial quality in

systematic reviews? Empirical study. Health Technol Assess 2003;7(1):

176.

[21] Greenland S. Invited commentary: a critical look at some popular meta-

analytic methods. Am J Epidemiol 1994;140(3):2906.

[22] Herbison P, Hay-Smith J, Gillespie WJ. Adjustment of meta-analyses on

the basis of quality scores should be abandoned. J Clin Epidemiol

2006;59(12):124956.

[23] Juni P, Witschi A, Bloch R, Egger M. The hazards of scoring the quality of

clinical trials for meta-analysis. JAMA 1999;282(11):105460.

[24] Whiting P, Harbord R, Kleijnen J. No role for quality scores in systematic

reviews of diagnostic accuracy studies. BMC Med Res Methodol 2005;5:

19.

[25] Moja LP, Telaro E, D'Amico R, et al. Assessment of methodological

quality of primary studies by systematic reviews: results of the

metaquality cross sectional study. BMJ 2005;330(7499):1053.

[26] Sweeting MJ, Sutton AJ, Lambert PC. What to add to nothing? Use and

avoidance of continuity corrections in meta-analysis of sparse data. Stat

Med 2004;23(9):135175.

[27] McCullagh P, Nelder JA. Generalized linear models. London: Chapman &

Hall; 1983. edn.

[28] Tjur T. Nonlinear regression, quasi likelihood, and overdispersion in

generalized linear models. Am Stat 1998;52(3):2227.

[29] Lindsey JK. On the use of corrections for overdispersion. Appl Stat

1999;48:55361.

[30] Higgins JP, Thompson SG. Quantifying heterogeneity in a meta-analysis.

Stat Med 2002;21(11):153958.

[31] Realini JP, Goldzieher JW. Oral contraceptives and cardiovascular

disease: a critique of the epidemiologic studies. Am J Obstet Gynecol

1985;152(6 Pt 2):72998.Notes: GENERAL NOTE: PIP: TJ: AMERICAN

JOURNAL OF OBSTETRICS AND GYNECOLOGY.

[32] Verhagen AP, de Vet HC, de Bie RA, et al. The Delphi list: a criteria list for

quality assessment of randomized clinical trials for conducting

systematic reviews developed by Delphi consensus. J Clin Epidemiol

1998;51(12):123541.

[33] Deeks JJ, Dinnes J, D'Amico R, et al. Evaluating non-randomised

intervention studies. Health Technol Assess 2003;7(27):1173 iiix.

Notes: CORPORATE NAME: International Stroke Trial Collaborative

Group CORPORATE NAME: European Carotid Surgery Trial Collabora-

tive Group.

[34] Downs SH, Black N. The feasibility of creating a checklist for the

assessment of the methodological quality both of randomised and non-

randomised studies of health care interventions. J Epidemiol Community

Health 1998;52(6):37784.

[35] SlimK, Nini E, Forestier D, et al. Methodological index for non-randomized

studies (minors): development and validation of a newinstrument. ANZ J

Surg 2003;73(9):7126.

[36] Wells G, Shea B, O'Connell D, et al. The NewcastleOttawa Scale (NOS)

for assessing the quality of nonrandomised studies in meta-analyses.

http://www.ohri.ca/programs/clinical_epidemiology/oxford.htm2007

last accessed 15th June.

[37] Concato J. Observational versus experimental studies: what's the

evidence for a hierarchy? NeuroRx 2004;1(3):3417.

Table S4.1

Hypothetical calculation of Q

i

for use in QE meta-analyses.

a

Study name Points assigned

based on quality

checklist (maximum

possible eg12 points)

Probability that

study is credible

(Qi)

Population at risk

(if applicable and only

for burden of disease

studies)

Population weight Modified Qi based on

population weight

Study A 5 5/12 = 0.42 100,000 100,000/400,000 =

0.25

0.42 0.25 = 0.1

Study B 7 7/12 = 0.58 400,000 400,000/400,000 =

1

0.58 1 = 0.58

Study C 10 10/12 = 0.83 200,000 200,000/400,000 =

0.5

0.83 0.5 = 0.42

a

Shaded portions only in burden of disease (type C) studies.

298 S.A.R. Doi et al. / Contemporary Clinical Trials 32 (2011) 288298

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (844)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5811)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Trailblazer Medicare Audit ToolDocument4 pagesTrailblazer Medicare Audit Tooladultmedicalconsultants100% (10)

- BPQ Questionnaire PDFDocument4 pagesBPQ Questionnaire PDFAymen DabboussiNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Barriers To EbpDocument47 pagesBarriers To Ebpvallal100% (1)

- International Aquafeed - May - June 2016 FULL EDITIONDocument80 pagesInternational Aquafeed - May - June 2016 FULL EDITIONInternational Aquafeed magazineNo ratings yet

- Executive Order - BeswmcDocument3 pagesExecutive Order - BeswmcChristopher Torres85% (26)

- CIS Yuva Bharat Health PolicyDocument7 pagesCIS Yuva Bharat Health PolicyS PNo ratings yet

- History of Physical TherapyDocument11 pagesHistory of Physical Therapyapi-271035341No ratings yet

- Literature ReviewDocument4 pagesLiterature Reviewapi-549799448No ratings yet

- Changes in Adolescents Health 7Document2 pagesChanges in Adolescents Health 7Cristine Joy GardoceNo ratings yet

- Tretman Otpadnih Voda Zenice - MesicDocument9 pagesTretman Otpadnih Voda Zenice - MesicNarcisNo ratings yet

- File Ibu LiaDocument710 pagesFile Ibu LiaArum Nadia HafifiNo ratings yet

- Visitacion Act 1 Tdee Stats ReportDocument3 pagesVisitacion Act 1 Tdee Stats ReportHannah VisitacionNo ratings yet

- Labyrinth It IsDocument45 pagesLabyrinth It IsNana OkujavaNo ratings yet

- Diease LossDocument10 pagesDiease LossGeetha EconomistNo ratings yet

- Commentary: Dr. Brian BudgellDocument6 pagesCommentary: Dr. Brian Budgellsolstar1008No ratings yet

- S - Lession of The Infratemporal Fossa and Parapharyngeal Region, Congenital and Developmental Anomalies - RGDocument38 pagesS - Lession of The Infratemporal Fossa and Parapharyngeal Region, Congenital and Developmental Anomalies - RGanon_744980746No ratings yet

- Ce Registtration CertDocument306 pagesCe Registtration CertMuhammad usman khalidNo ratings yet

- SHAHRANIDocument6 pagesSHAHRANIMishellKarelisMorochoSegarraNo ratings yet

- YBP327 MatKool Media BriefDocument2 pagesYBP327 MatKool Media BriefAngel PigNo ratings yet

- Full Spectrum Health, LLC: 307 E Northern Lights, Ste 201 Anchorage, AK 99503:: 907-229-9766Document4 pagesFull Spectrum Health, LLC: 307 E Northern Lights, Ste 201 Anchorage, AK 99503:: 907-229-9766Tracey WieseNo ratings yet

- Ahme Paper RevisedDocument54 pagesAhme Paper Revisedkassahun meseleNo ratings yet

- Human Spinal Cord Picture C1 To S5 VertebraDocument3 pagesHuman Spinal Cord Picture C1 To S5 Vertebraajjju02No ratings yet

- Earth Essence CBD GummiesDocument6 pagesEarth Essence CBD GummiesketsaadanNo ratings yet

- Physioex Lab Report: Pre-Lab Quiz Results Experiment ResultsDocument4 pagesPhysioex Lab Report: Pre-Lab Quiz Results Experiment ResultscharessaNo ratings yet

- Factors Associated With Compassion Satisfaction, Burnout, andDocument30 pagesFactors Associated With Compassion Satisfaction, Burnout, andpratiwi100% (1)

- Trichiasis: Prepared By:pooja Adhikari Roll No.: 27 SMTCDocument27 pagesTrichiasis: Prepared By:pooja Adhikari Roll No.: 27 SMTCsushma shresthaNo ratings yet

- Why Do We Believe in Conspiracy Theories?Document6 pagesWhy Do We Believe in Conspiracy Theories?Tony A. SnmNo ratings yet

- Biology 2 JournalDocument5 pagesBiology 2 JournalKadymars JaboneroNo ratings yet

- StarHealthAssureInsurancePolicy ProposalFormDocument4 pagesStarHealthAssureInsurancePolicy ProposalFormshirishkanhegaonkar2No ratings yet

- Febrile Seizure CPGDocument7 pagesFebrile Seizure CPGLM N/ANo ratings yet