Professional Documents

Culture Documents

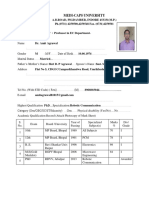

A Review On Optical Interconnects: Babita Jajodia Roll No:11610241

Uploaded by

Babita JajodiaOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

A Review On Optical Interconnects: Babita Jajodia Roll No:11610241

Uploaded by

Babita JajodiaCopyright:

Available Formats

1

A Review on Optical Interconnects

Babita Jajodia Roll no:11610241

Abstract: This paper gives a brief overview of the development of optical interconnects to silicon integrated circuits and discusses the present status of optical computing. It also surveys applications of optical interconnects in present-day systems such as data center networks and high-performance computing. The review starts from the roots in early optical switching phenomena, proceeds through novel semiconductor and quantum-well optical and optoelectronic physics and devices, the first proposals for optical interconnects, and optical computing and photonic switching demonstrators, and ends with hybrid integrations of optoelectronic and silicon circuits. The paper also describes the pros and cons of the various present optical interconnects. The history of optical interconnects is strongly intertwined with the broader field of optics in digital computing and switching.

Index Terms: Optical interconnects, card-to-card interconnects, free-space optics, reconfigurable optical interconnects, computer networks, high-performance computing (HPC), optical interconnections, supercomputing, data center networks.

1 INTRODUCTION

With the advances in VLSI technologies, device densities and speeds have increased to a point where further increases in chip performance are difficult to accomplish. The physical limitations of conventional interconnects make them a major bottleneck for the performance of current VLSI systems and for network communications. Solutions mentioned in the Semiconductor Industry Association (SIA) Roadmap include copper technologies with low-k dielectrics, microwave technologies, and optical technologies for inter- and intra-chip connections. The semiconductor industry initially turned to copper and low-k dielectrics. However, this path does not promise solutions lasting beyond one technology generation: the physical limitations of copper and low-k dielectrics place an upper bound on performance. By contrast, optical technology has the potential to support the performance requirements of networks far into the future.

Efforts to develop optical technology for intra-chip interconnects focus on implementing an emitter-waveguide-receiver system. Digital signals are transmitted through a waveguide in the form of light pulses among multiple locations, on a chip or between chips. Emitter and receiver implementation is accomplished with either silicon [1] or non-silicon components [2]; the former yields a Si-based optical emitter-receiver system. Factors determining whether optical interconnect is viable for future technologies include area, power, and speed requirements. Based on the investigations of Miller, optical interconnect has clear advantages in surface-area requirements, as replacing long metal lines with waveguides reduces the total area of the emitter-receiver system [3].

Chip speeds have levelled off recently, and multicore architectures have helped fill the gap. Further innovations will be required with the increased use of specialised computing elements or accelerators (e.g., GPUs). Interconnect bandwidth requirements continue to scale at all physical levels of the system. Optical circuit-switched networks may have a role to play in large-scale computing systems, as a more efficient means to move large amounts of data between compute nodes or to allow reconfigurability in the network topology.

Several parameters must be rethought in designing optical interconnects. Storage links were the first to utilize optical interconnects. As the price of optics continued to drop, the longer links were replaced with optics, which relieved significant cable congestion in these systems. By assessing the total system bandwidth requirements, one can determine the total optics cost and power in relation to the machine targets. To achieve cost goals, careful rethinking of optical interconnects for low-cost, high-volume manufacturing will be required. Furthermore, to achieve the lowest-power links, optics modules will need to be situated close to the signal source, requiring very dense modules, of order 1 Tbps/cm². Locating optics closer to the signal source reduces electrical link power, but optimizing the optics packaging there will require much denser and more integrated optical modules, and therefore greater cooperation between systems providers and optical interconnect suppliers.
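The budgeting step described above (deriving total optics cost and power from the total system bandwidth) can be sketched in a few lines. This is only an illustrative sketch: the function and field names are hypothetical, and the channel rate, energy per bit, and cost per Gbps used in the example are assumed values, not figures from this paper. Only the 1 Tbps/cm² density default comes from the text.

```python
import math
from dataclasses import dataclass


@dataclass
class OpticsBudget:
    channels: int      # number of optical channels needed
    power_w: float     # total optical-link power in watts
    cost_usd: float    # total optics cost in US dollars
    area_cm2: float    # module footprint at the assumed density


def optics_budget(total_bw_gbps, channel_rate_gbps, energy_pj_per_bit,
                  cost_usd_per_gbps, density_tbps_per_cm2=1.0):
    """Rough optics totals for a machine; all inputs are illustrative assumptions."""
    channels = math.ceil(total_bw_gbps / channel_rate_gbps)
    # 1 pJ/bit at 1 Gbit/s equals 1 mW, so multiply and convert mW to W.
    power_w = total_bw_gbps * energy_pj_per_bit * 1e-3
    cost_usd = total_bw_gbps * cost_usd_per_gbps
    # Density is in Tbps per cm^2 (1 Tbps/cm^2 is the order quoted in the text).
    area_cm2 = (total_bw_gbps / 1000.0) / density_tbps_per_cm2
    return OpticsBudget(channels, power_w, cost_usd, area_cm2)


if __name__ == "__main__":
    # Hypothetical machine: 1 Pbps aggregate, 25 Gbps channels, 10 pJ/bit, $1 per Gbps.
    print(optics_budget(1_000_000, 25, 10.0, 1.0))
```

Comparing the resulting power and cost against the machine targets shows quickly whether the per-channel assumptions are affordable at system scale.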

2 PREFERENCE OF OPTICAL INTERCONNECTS OVER ELECTRICAL INTERCONNECTS

Electrical interconnects become much more difficult to design successfully as data rates begin to exceed 10 Gbps, due to frequency-dependent losses, crosstalk, and frequency resonance effects. Optical interconnects do not suffer such strong signal integrity degradation, and provide additional benefits, including reduced cable bulk, smaller connector size, and reduced electromagnetic interference. Electrical technologies are, moreover, not suitable for future high-throughput interconnects because of fundamental limitations in electric power consumption, heat dissipation, transmission latency, and electromagnetic interference. Optical interconnects offer higher bandwidth than electrical interconnects, as their bandwidth is limited only by the optical modulators and receivers. Optical interconnects also have very low attenuation, and hence no repeaters are required.

To compare optical and electrical interconnects, the following metrics related to performance and energy dissipation are considered:
1) Delay: This metric is more relevant for clock distribution and control signal transfer.
2) Bandwidth Density: This metric is relevant for heavy data transfer applications.
3) Bandwidth Density/Delay: This metric is relevant in those applications that are sensitive to both delay and bandwidth.
4) Energy Dissipation: This metric is generally important for all applications.

Due to the benefits of optical interconnects, there has been a steadily increasing use of optics in these large systems, so much so that the number of optical channels in a single high-performance computing (HPC) system today can be on par with the world-wide volume of parallel optical interconnects just a few years ago. To respond to the severe requirements of future systems that are approaching one exaflop, optical interconnects will need to continue to improve in four major areas: 1) power, 2) cost, 3) density, and 4) reliability.

In these large computing systems, the switching functionality of the network is a major contributor to power and cost. Over the years, there have been many demonstrations of optical circuit switching as a more efficient alternative to electrical packet-switched networks. Optical circuit-switched networks may have a role to play in large-scale computing systems, as a more efficient means to move large amounts of data between compute nodes or to allow reconfigurability in the network topology.
One reason for potential energy savings in optical interconnects is that optical devices, being fundamentally quantum devices, can act as efficient impedance transformers between the high impedance of small electronic devices and the low impedance of classical wave propagation. The idea of using light beams to replace wires now dominates all long-distance communications and is progressively taking over in networks over shorter distances. Modern fiber optics has provided the network system designer with the tools to provide bandwidth at will and over any distance on the planet [4]. The demand for bandwidth in the Internet is doubling every 100 days, and e-commerce is still a nascent business.

The case for incorporating optics outside the box can be stated as follows. The LAN (local area network) industry has developed standards for links connecting computers to the network and to storage devices. Ethernet has evolved from 10-Mbps electrical specifications through 1-Gbps Gigabit Ethernet, which specifies both electrical and optical interfaces. A plethora of vendors are now looking at providing an array of solutions. All such solutions are based upon 850-nm, short-wavelength vertical-cavity surface-emitting lasers (VCSELs), since these devices are in mass production (millions per year), reliable, easily coupled to fiber, and easy to drive.

The case for incorporating optics inside the box (i.e., into the computer system itself) can be stated as follows: find an application where the increasing bandwidth demand of the link makes optics the preferred engineering choice, then make the interconnect a standard to drive up volume, increase the supplier base, and lower costs.

3 HISTORY OF OPTICAL INTERCONNECTS

Modern thinking about the use of optics in digital computing dates from the 1960s. The laser had been invented and the semiconductor diode laser in its earliest form had been demonstrated. Nonlinear optics was an exciting emerging field. The idea of using lasers or other nonlinear optical devices as the logic devices in computing was examined, but the first analyses concluded that optical logic devices were not a good substitute for transistors in general-purpose computing machines because they took too much energy. This introduced the idea of using optics for communications instead. The idea of using optics inside computers was not yet taken seriously, however, because wires were still capable of delivering the required performance. The optics research community was nevertheless still driven by the idea that optics could be made faster than any electrical transistor, and it showed that nonlinear optics is capable of making logic devices much faster than any electrical device; the nonlinear effects in semiconductors also became clearly understood. Optical bistability thus increased the interest in the field of optics from the mid 1970s to the late 1980s. The main developments were as follows:

1) Resonant structures were used, which reduced the operating energy below that of electronic devices.
2) Semiconductor bistable devices required only one piece of semiconductor with plane-parallel surfaces (e.g., [5]).
3) Particularly enhanced optical properties were found in semiconductor quantum well structures, which showed very strong absorption peaks and relatively easy saturation of those peaks; this led to further interest in semiconductor optical switching devices [6], [7], [8].
4) The interconnection advantage emerged as a reason for the use of optics in computing.
5) Optical interconnection was still restricted to interconnecting optical logic devices, because at that time there was no practical way of getting information in the form of light out of electrical circuits at high densities and high speeds.
6) Some early works used liquid crystal spatial light modulators to demonstrate elementary optical computers that exploited the two-dimensional (2-D) parallelism of optics [9].
7) The optical interconnection of very-large-scale-integration (VLSI) electronics was proposed; this proposal successfully anticipated many subsequent developments and was arguably the start of the field of optical interconnects.
8) There was, however, no good way of obtaining large numbers of optical outputs from silicon integrated circuits (ICs).
9) Subsequent research addressed light emission from silicon, although silicon, because of its indirect band gap, is fundamentally a poor light emitter and not a very good light modulator.
10) A new modulation effect, the quantum-confined Stark effect, was discovered, which allowed high-speed optical modulators. This effect was important for both optical computing and optical interconnects for the following reasons:
   a) It allowed low-energy devices.
   b) The changes in absorption were large enough to make a modulator or switch that would work for light beams propagating perpendicular to the surface, allowing 2-D arrays.
   c) It had high enough yields to allow large arrays of devices to be made.
11) Work on optical computers based on simple bistability died out, as optical bistability was found to be impractical for making optical logic systems.
12) The parallelism of optical arrays made them interesting for practical applications.
13) Experimental switching machines for telecommunication switching constructed at Bell Laboratories demonstrated the feasibility of dense free-space optical array interconnections.
14) Another important development during this time was the demonstration of viable vertical-cavity surface-emitting lasers (VCSELs). Much work has since been done in turning them into practical systems, especially for low-cost optical fiber connections, and they are also candidates for optical interconnects to silicon chips.
15) An important development in low-power VCSELs was the demonstration of oxide-confined devices with potentially much lower thresholds [10].
16) More recently, smaller arrays of VCSELs have also been successfully bonded to silicon circuits.
17) Although transistors get faster as they are scaled down, wires do not scale to keep up with them. The performance of an electrically interconnected system cannot be improved either by miniaturizing the whole system or by making it larger; it is necessary to change the interconnect technology. This limit is known as the architectural aspect ratio limit [11].
18) Optical interconnects avoid this problem altogether because they do not have the resistive loss physics that gives rise to this behavior.
19) The Semiconductor Industry Association began to publish roadmaps for the performance and technologies of future generations of silicon technology [12]. These roadmaps showed substantial problems for interconnects on silicon chips, with no known solution after approximately 2006 for interconnects to keep pace with the desired performance. Interconnects off the chip, with their greater lengths, are likely a much larger problem.

The issue of where optics might make sense as a solution to these problems has been analyzed in some detail in [13].
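The aspect-ratio limit on electrical wiring mentioned in this section can be made concrete with a small sketch. It assumes the commonly quoted form B ≲ B0 · A / L², where A is the total cross-sectional area of the wiring, L is its length, and B0 is a constant of order 10^15 to 10^16 bit/s depending on the type of line. Neither the formula nor the values of B0 are stated in this paper; they are order-of-magnitude assumptions used for illustration, and the function name and example dimensions are hypothetical.

```python
def max_electrical_bit_rate(area_m2, length_m, b0_bits_per_s=1e15):
    """Approximate upper bound on aggregate bit rate of electrical wiring.

    Assumed form: B <= B0 * A / L**2, with A the total wiring cross-section
    and L the interconnect length. B0 is an order-of-magnitude assumption.
    """
    return b0_bits_per_s * area_m2 / length_m ** 2


if __name__ == "__main__":
    # Hypothetical backplane: 10 cm x 1 mm of wiring cross-section, 1 m long.
    area = 0.10 * 0.001          # m^2
    print(max_electrical_bit_rate(area, 1.0))

    # Shrinking every dimension by 10x leaves the bound unchanged,
    # because the area scales as s**2 and so does L**2.
    s = 0.1
    print(max_electrical_bit_rate(area * s ** 2, 1.0 * s))
```

The second call illustrates why neither miniaturizing the whole system nor enlarging it helps: the bound depends only on the ratio A / L², so only a change of interconnect technology raises it.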

4 PARAMETERS FOR IMPROVEMENT IN OPTICAL INTERCONNECTS

The parameters which should be considered for improvement in optical interconnects are categorised as follows.

4.1 Density

Future optical interconnects may require even denser optics, such as might be obtained by flip-chip assemblies employing greater numbers of parallel channels. An optimised solution requires detailed analysis of the trade-offs among packaging integration, channel integration, margin, cooling, and data rate.

4.2 Power Consumption

The power of a link must account not only for the optical link itself but also for the electrical parts of the link leading to and from the optics. There is still much room for improvement in optical links by employing many of the same signal-enhancing techniques which are employed in electrical links today, such as pre-distortion, equalization, and decision feedback equalization. For example, the power consumption of a 20 Gbps optical link can be reduced through the use of pre-distortion [14].

4.3 Cost

The costs associated with an optical link include the bill of materials, assembly and fabrication costs, and test and adjustment for any yield fallout. The bill of materials may include such items as substrates, lenses, laser and photodiode arrays, micro-controller, driver and receiver chips, connectors (both optical and electrical), fiber cabling, and heat sinks. Assembly and fabrication costs and considerations may include assembly throughput rate and equipment costs, single versus multipart (e.g., panel or wafer level) output, active versus passive alignment, manual versus automated assembly, tolerances versus yield, and final assembly costs for the system. Finally, test and yield cost-related issues include tester time and equipment cost, built-in self-test, and bit error rate requirements versus test time.

An optimised solution requires detailed analysis of the trade-offs among base manufacturing cost, channel integration, yield, reliability, and data rate. These cost tradeoffs need to be considered and balanced in each of these categories. For example, the issue of active versus passive alignment has been a long-standing tradeoff in the industry. From a parts cost and complexity point of view, passive alignment often requires tighter-tolerance parts or additional alignment structures, while active alignment parts are simpler but require a longer and more intensive assembly process, with, therefore, higher fabrication costs.

One area that clearly needs attention is test cost. As module bandwidth and channel count increase and the level of integration of optics in systems increases, the cost of test is becoming a larger portion of the overall costs. Modules with self-test capability, or which can be acceptably tested in phases, partially at the optics supplier and partially as part of system assembly (without incurring high rework costs or yield fallout), will be needed.

4.4 Reliability

In addition, reliability of optical components will require continued improvement. Due to the sheer numbers of optics modules in these large systems, even small failure rates can cause network failure, which, if not managed carefully, can cause large jobs to stop, requiring a rerun from the last checkpoint. Reliability can be managed by a combination of low component fail rates, the use of redundant network topologies that provide alternate routing paths in the event of a link fail, and channel sparing, which can be used as a failover to allow the link to continue operation. Attention will need to be given to balancing not only single optical channel fail rates with sparing but also to minimizing the multichannel failure rate and single-point-of-failure scenarios for the entire link. The trend toward water cooling can reduce operating temperatures and, therefore, help to lower laser fail rates.

4.5 Competing With Copper

For optics to compete with copper at shorter and shorter link distances, there are a number of areas for increased focus. Historically, increasing channel data rates has improved cost, power, and density, and that trend will continue with data rates at least to 25 Gbps if not beyond, with the caveat that the power cost of multiplexing slower microprocessor and switch on-chip data rates will begin to rise, limiting the overall benefit of higher data rates. Higher parallelism in optics modules (i.e., more channels per module) will help amortize packaging costs and allow more area-efficient packaging, but too high a channel count may outpace the volume market, contributing to higher costs. As discussed previously, integrating optics much closer to the signal source will eliminate excess electrical link power, but can result in modules that are too highly customized to gain volume acceptance in the marketplace or that require excessive development expense to integrate and test. Development of semi-customizable or standard building blocks for optical links can possibly mitigate the integration issue, however.

5 PRACTICAL BENEFITS OF OPTICAL INTERCONNECTS

The idea of changing the technology used for interconnects to silicon chips in computing and switching systems is a radical one, and the resulting practical benefits of optical interconnects are as follows [3].

5.1 Design Simplification

1) Absence of electromagnetic wave phenomena (impedance matching, crosstalk, and inductance difficulties such as inductive voltage drops on pins and wires).
2) Distance independence of performance of optical interconnects.
3) Frequency independence of optical interconnects.

5.2 Architectural Advantages

1) Larger synchronous zones.
2) Architectures with large numbers of long high-speed connections (i.e., avoiding the architectural aspect ratio scaling limit of electrical interconnects).
3) Regular interconnections of large numbers of crossing wires (useful, for example, in some switching and signal processing architectures).
4) Ability to have 2-D interconnects directly out of the area of the chip rather than from the edge.
5) Avoidance of the necessity of an interconnect hierarchy (once in the form of light, the signal can be sent very long distances without changing its form or amplifying or reshaping it, even on physically thin connections).

5.3 Timing

1) Predictability of the timing of signals.
2) Precision of the timing of the clock signal.
3) Removal of timing skew in signals.
4) Reduction in power and area for clock distribution.

5.4 Other Physical Benefits

1) Reduction of power dissipation in interconnects.
2) Voltage isolation.
3) High interconnect density, especially for longer off-chip interconnects.
4) Possible non-contact, parallel testing of chip operation.
5) Option of using short optical pulses for synchronization and improved circuit performance.
6) Possibility of wavelength-division-multiplexed interconnects without the use of any electrical multiplexing circuitry.

6 HIGH PERFORMANCE COMPUTING

High-performance computing systems are of steadily growing interest as a way to provide new levels of computational capability for an increasing range of applications [15]. These range from geophysical data processing to drug discovery, multiscale modeling, environment and climate modeling, and the analysis of huge datasets made available through our increasingly connected world. The growing use of and dependence on optical interconnects to meet the scaling bandwidth (BW) demands of these systems has given rise to computercom as a distinct market segment, alongside the traditional datacom and telecom markets. Interconnect BW requirements continue to scale at all physical levels of the system. Typical server interconnects include:

1) A core-to-core bus on a single chip.
2) Chip-to-chip (for CPU-to-cache or CPU-to-CPU communications).
3) Chip-to-memory on card.
4) CPU-to-CPU.
5) Cluster fabric between cards and racks.
6) CPU node to storage (typically rack to rack).
7) LAN/WAN links (local/wide area networks) which go beyond the immediate computing or data center building [16].

More recently, the IBM Power 775 has made use of a fiber cable optical backplane within the rack as well as for the rack-to-rack cluster fabric. For cluster fabric in particular, clever networking topologies can mitigate BW costs while maintaining reasonable performance, although not without tradeoffs. For example, mesh or torus networks can require many fewer interconnects, but will need more hops and, thus, have longer latency to pass data between more distant nodes. The IBM Power 775 supercomputer is an example of a two-stage all-to-all network (also known as a dragonfly) [17], [18], which is able to provide low-latency, high-BW connectivity between random nodes in the system. This provides a high-performance network suitable for a large range of workloads.

6.1 Technologies Of Interest For HPC

This section describes a number of optical technologies which are of high interest for fulfilling the future requirements of high-performance computing (HPC). Some of these technologies are briefly introduced below.

6.1.1 VCSEL/Fiber Technology

Historically, low-cost optical interconnects for datacom, and now computercom, have been based on multimode fibers and VCSEL technology [19], [20]. Rack-to-rack cluster fabric in particular has made good use of parallel optical modules employing these technologies. In comparison to single-mode technology, more commonly used in telecom, multimode technology is more alignment tolerant. Multimode VCSELs provide a cheaper and easier-to-test light source than edge-emitting single-mode lasers; furthermore, they are easy to make in compact arrays and are more power efficient as well. So, although multimode fiber has distance limitations due to path differences between the various modes (typically in the range of 100 m to a few hundred meters, getting worse with higher data rates), it is still the right choice for these primarily short datacom and computercom links. As the incumbent, this technology already enjoys a low-cost manufacturing infrastructure, and one that still has room for development. This technology will continue to improve, with higher data rates, lower cost, lower power, and more compact modules. In addition, longer wavelengths enjoy a slight photodetector responsivity advantage (producing more current per unit optical power) and more relaxed eye-safety power limits. There is some disadvantage in the use of these longer wavelengths with polymer waveguides, as the losses tend to be greater at these wavelengths.

To further reduce overall costs of optical links, not only must the cost of the transceivers be reduced, but also the cost of fiber connectors, cabling, and fiber management. Higher data rates will help reduce these costs to some degree, but further reduction may be required. One way to accomplish this is to use multiple multimode cores in a single fiber to achieve much higher data rates. Ultimately, this technology may be limited by the heterogeneous nature of the packaging integration required and the burgeoning costs of fiber and fiber management, but at present there is still much room for improvement.

6.1.2 VCSEL/Optical PCB Technology

To make further gains in cost and level of packaging integration, and to compete with copper at on-card distances, optical PCB technology based on polymer waveguide integration with VCSELs may provide the right combination of low-cost manufacturing, module density, and semi-customizable integration [21], [22]. The vision for this technology is to provide an optics technology with the characteristics of electrical PCB technology. PCBs are based on low-cost mass manufacturing methods, yet are customizable for a particular user's needs. An optical PCB would mitigate fiber management problems within the card and provide high-density optical transceiver integration close to the processing chips. In order to facilitate a transition to this technology, we anticipate that early offerings would be in the form of an easily replaceable waveguide-on-flexible-substrate assembly, mounted above the board, similar to a fiber ribbon. However, as the technology matures, the polymer waveguides would be incorporated on or within the PCB. While very promising, this technology still has some hurdles to overcome, including improvement in polymer and connector losses and the establishment of an infrastructure to allow widespread use of the technology.

6.1.3 Silicon Photonics Technology

Finally, silicon photonics is a promising technology which has been studied since the mid 1980s as a platform for optical communications. The technology utilizes single-mode fiber in combination with unmodulated lasers and silicon-based modulators and detectors. This technology may offer the ultimate in integration capability as well as low cost by utilizing mature CMOS fabrication to produce highly integrated assemblies with most elements fabricated directly in CMOS. In addition, by greatly reducing the costs of introducing wavelength division multiplexing (WDM) capability, which allows multiple wavelengths on the same fiber, the costs of fiber cabling and connectors can be amortized over much greater BW per fiber. The technology has matured significantly over the years, with commercial products available today in active cable form. The technology requires single-mode fiber and longer wavelengths, in order to take advantage of already developed continuous wave (CW) telecommunications single-mode lasers which operate in a region where Si is transparent; Si-photonics-based transceivers are therefore incompatible with the shorter-wavelength, multimode technology based on VCSELs.

Laser light sources can be packaged onto the chip or located at a convenient location off-chip and coupled to the chip via fiber. The on-chip location offers the convenience of more integrated and potentially lower cost packaging, but the laser will be exposed to a more challenging thermal environment. The off-chip location offers a separated environment for the laser, such that the temperature (and therefore wavelength) can be more accurately controlled. A lower temperature environment may help improve laser reliability as well. In addition, one might conceive of high-power off-chip lasers which could be split among many transceivers, thereby amortizing the cost of the laser and the laser packaging and cooling across many more optical channels.

Packaging is another area that is often overlooked in discussions of Si photonics. While the Si photonics chip itself may be of relatively low cost, the coupling of the chip to fiber and the addition of a CW laser can add substantial cost. Packaging which meets single-mode tolerances can be considerably more expensive than packaging which meets multimode tolerances. In addition to the cost of the laser, an optical isolator may be required, as single-mode edge-emitting lasers are more sensitive to reflected light than multimode VCSELs, and the amount of optical feedback must generally be quite low. Finally, one must consider total power consumption for the Si photonics links. While there is significant potential for very low power optical links (e.g., modulator performance in the fJ/bit range), designs with a practical balance of performance and temperature tolerance and a proper accounting for all power sources (including temperature control, CW laser, and any control or clocking logic) may find that this potential advantage is significantly eroded.

6.1.4 Passive Components and Cabling

In addition to the active transceiver technologies, linking these transceivers across cards, boards, or racks will require passive connectors and cabling. In the case of fiber-based VCSEL links, these rely on well-developed parallel fiber ribbon and connectors, e.g., MPO (Multi-fiber Push On), which have been used for many years for these multimode links. Connector losses are typically no more than 0.5 dB, including misalignment tolerances. For polymer-waveguide-based links, longer connections (e.g., 1 m between boards) will require improved losses if polymer waveguides are to be used; however, the use of a waveguide-to-fiber connector also allows use of lower loss fiber for these links. Si photonics technology will require single-mode fiber and connectors. These connectors can typically have 0.25 dB additional loss (over multimode) due to the tighter alignment tolerances required, although lower loss components are available at higher costs. In addition, greater care must be taken during assembly to prevent contamination from ambient particulates, which can more easily degrade single-mode fiber connections than multimode connections, as dust can more easily occlude the smaller single-mode core.
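The connector-loss figures above feed directly into a link power budget. The following is a minimal sketch of such a budget; the function name is hypothetical, and the transmit power, receiver sensitivity, connector count, link length, and fiber attenuation values in the example are assumptions chosen only for illustration. The 0.5 dB multimode connector loss and the 0.25 dB single-mode penalty are the figures quoted in the text.

```python
def link_margin_db(tx_power_dbm, rx_sensitivity_dbm, n_connectors,
                   connector_loss_db, length_m, attenuation_db_per_km):
    """Remaining optical margin in dB after connector and fiber losses."""
    connector_loss = n_connectors * connector_loss_db
    fiber_loss = (length_m / 1000.0) * attenuation_db_per_km
    return tx_power_dbm - connector_loss - fiber_loss - rx_sensitivity_dbm


if __name__ == "__main__":
    # Assumed link: 0 dBm launch power, -10 dBm receiver sensitivity,
    # 4 connectors, 50 m of fiber (all illustrative values).
    multimode = link_margin_db(0.0, -10.0, 4, 0.5, 50.0, 3.0)
    # Single-mode connectors: 0.5 dB + 0.25 dB additional loss each.
    single_mode = link_margin_db(0.0, -10.0, 4, 0.75, 50.0, 0.4)
    print(f"multimode margin:   {multimode:.2f} dB")
    print(f"single-mode margin: {single_mode:.2f} dB")
```

With several connectors in series, the per-connector penalty of single-mode links accumulates, which is why connector count matters as much as fiber length at these short distances.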

7 OPTICAL INTERCONNECTS FOR GREEN HIGH PERFORMANCE COMPUTING

7.1 Overview

The importance of green Information and Communication Technology (ICT) is growing, especially in high-performance computing (HPC) and data center architectures, where power usage effectiveness matters. HPC performance is growing rapidly, improving by a factor of roughly 10 every four years, or about 1000 every ten years, and it is predicted to reach one Exaflops in 2018 [23]. At the same time, energy consumption has to be reduced. Therefore, new technologies will be needed to meet the requirements of next-generation HPC systems. To keep up with increasing demands for higher bandwidth at lower power consumption, optical interconnects using parallel optical links, called active optical cables (AOCs), have been recognized as a promising solution. Arrayed vertical-cavity surface-emitting lasers (VCSELs) are widely used in this application, and 850-nm VCSELs have been used so far. On the other hand, intermediate-wavelength VCSELs have been intensively investigated to meet the urgent requirements for higher speed, lower power consumption, and higher reliability, which will be indispensable in HPC systems of 10 Petaflops or more [24].

7.2 Approach for Lower Power Dissipation

VCSELs with InGaAs/GaAs strained-layer quantum wells (SL-QW) and an emission wavelength of 980–1060 nm are expected to exhibit better reliability, higher modulation speed, and lower power consumption. Lower power dissipation has been achieved for InGaAs VCSELs. The power dissipation $P_{diss}$ is given as

$$P_{diss} = I_f V_f - P_{out} = I_f (V_{th} + I_f R_d) - P_{out}$$

where $I_f$ is the forward current, $V_f$ is the corresponding voltage, $P_{out}$ is the light output power at $I_f$, $V_{th}$ is the threshold voltage, and $R_d$ is the series resistance. The approaches used to achieve low power dissipation are as follows:

1) Low-bias operation.
2) Small turn-on voltage.
3) Small series resistance.
4) High output power.

Large differential gain and a smaller energy gap are attributed to the InGaAs QWs (1060 nm). A smaller number of heterojunctions and an optimised position of the standing wave are attributed to the DIC structure.

Low power dissipation can be attributed mainly to the introduction of InGaAs SL-QWs and a VCSEL structure called double intra-cavity (DIC). InGaAs 1060-nm Double-green VCSEL arrays can play an important role in next-generation power-efficient HPC systems and data centers, meeting the indispensable requirements of power consumption, speed, and reliability. In particular, the reliability test results, verified using a large number of VCSELs, encourage promotion of the new standard as the signal source for a 100-GbE parallel solution.
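As a rough numerical illustration of the power-dissipation expression in Section 7.2, the following Python sketch evaluates P_diss = I_f (V_th + I_f R_d) − P_out. The function name and the operating-point values (2 mA, 1.2 V, 100 Ω, 0.5 mW) are illustrative assumptions, not measurements reported in [24].

```python
def vcsel_power_dissipation(i_f: float, v_th: float, r_d: float, p_out: float) -> float:
    """Return dissipated power in watts: P_diss = I_f * (V_th + I_f * R_d) - P_out."""
    v_f = v_th + i_f * r_d      # forward voltage at the bias current
    return i_f * v_f - p_out    # electrical input power minus optical output power


if __name__ == "__main__":
    # Hypothetical operating point (assumed values, for illustration only).
    p = vcsel_power_dissipation(i_f=2e-3, v_th=1.2, r_d=100.0, p_out=0.5e-3)
    print(f"P_diss = {p * 1e3:.2f} mW")
```

The expression makes the listed approaches visible: a lower bias current, a smaller turn-on voltage, a smaller series resistance, and a higher output power each reduce the result.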

8 OPTICAL INTERCONNECTS FOR DATA CENTERS

8.1 Overview

Data centers are experiencing an exponential increase in the amount of network traffic that they have to sustain, due to cloud computing and several emerging web applications such as streaming video and social networking. To handle this network load, large data centers are required, with thousands of servers interconnected by high-bandwidth switches. Current data center networks, based on electronic packet switches, consume excessive power to handle the increased communication bandwidth of emerging applications. Optical interconnects have recently gained attention as a promising solution offering high throughput, low latency, and reduced energy consumption compared to current networks based on commodity switches. As more and more processing cores are integrated into a single chip, the communication requirements between racks in data centers will keep increasing significantly [25]. While peak performance will continue to increase rapidly, the budget for the total allowable power consumption that the data center can afford is increasing at a much slower rate (2x every 4 years) due to several thermal dissipation issues. One of the most challenging issues in the design and deployment of a data center is power consumption. Therefore, a reduction in the power consumption of the network devices has a significant impact on the overall power consumption of the data center site. The power consumption of data centers also has a major impact on the environment. To meet this increased communication bandwidth demand while containing power consumption, new interconnection schemes must be developed that can provide high throughput, reduced latency, and low power consumption. According to one report, all-optical networks could provide up to 75% energy savings in data centers [26]. Especially in large data centers used in enterprises, power-efficient, high-bandwidth, and low-latency interconnects are of paramount importance, and there is significant interest in deploying optical interconnects in these data centers.

The present scenario of using optical interconnects for data centers can be summarised as follows [27]:

Optical networks have been widely used in recent years in long-haul telecommunication networks, providing high throughput, low latency, and low power consumption. Several schemes have been presented for exploiting the high bandwidth of light, such as Time Division Multiplexing (TDM) and Wavelength Division Multiplexing (WDM). In the case of WDM, the data are multiplexed onto separate wavelengths that traverse the fiber simultaneously, providing significantly higher bandwidth. Optical telecommunication networks have evolved from traditional opaque networks toward all-optical (i.e. transparent) networks.

In opaque networks, the optical signal carrying traffic undergoes an optical-electronic-optical (OEO) conversion at every routing node. But as the size of opaque networks increased, network designers had to face several issues such as higher cost, heat dissipation, power consumption, and operation and maintenance cost. On the other hand, all-optical networks provide higher bandwidth, reduced power consumption, and reduced operation cost using optical cross-connects and reconfigurable optical add/drop multiplexers (ROADMs). Currently, optical technology is utilized in data centers only for point-to-point links, in the same way as point-to-point optical links were used in older telecommunication networks (opaque networks). These links are based on low-cost multi-mode fibers (MMF) for short-reach communication. Current telecommunication networks use transparent optical networks, in which switching is performed in the optical domain, to meet the high communication bandwidth demand. In the near future, higher-bandwidth transceivers are going to be adopted (for 40 Gbps and 100 Gbps Ethernet), such as 4x10 Gbps QSFP modules with four 10 Gbps parallel optical channels and CXP modules with 12 parallel 10 Gbps channels. Most current data centers are based on commodity switches for the interconnection network. The network is usually a canonical fat-tree 2-tier or 3-tier architecture [28]. The main advantage of this architecture is that it can be scaled easily and that it is fault tolerant (e.g. a top-of-rack (ToR) switch is usually connected to two or more aggregate switches). The main drawback of these architectures is the high power consumption of the ToR, aggregate, and core switches (due to O/E and E/O transceivers and the electronic switch fabrics) and the high number of links that are required. While several research efforts try to increase the available bandwidth of data centers based on commodity switches (e.g. using modified TCP or Ethernet enhancements), the overall improvements are constrained by the bottlenecks of the current technology.
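The aggregate rates of the parallel modules mentioned above follow directly from the lane count multiplied by the per-lane rate. A minimal Python sketch, in which the `ParallelModule` class is a hypothetical helper introduced only for illustration and the lane counts and rates are those quoted in the text:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelModule:
    """A parallel optical module: several lanes at the same per-lane rate."""
    name: str
    lanes: int
    lane_rate_gbps: float

    @property
    def aggregate_gbps(self) -> float:
        # Aggregate bandwidth is the number of lanes times the per-lane rate.
        return self.lanes * self.lane_rate_gbps


# Lane counts and per-lane rates as quoted in the text.
QSFP = ParallelModule("QSFP", lanes=4, lane_rate_gbps=10.0)
CXP = ParallelModule("CXP", lanes=12, lane_rate_gbps=10.0)

if __name__ == "__main__":
    for module in (QSFP, CXP):
        print(f"{module.name}: {module.aggregate_gbps:.0f} Gbps aggregate")
```

With these figures the QSFP module matches a 40 Gbps link, and the 12-lane CXP module offers 120 Gbps of raw lane capacity, which is more than a 100 Gbps link requires.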

The basic optical modules that are utilized for the implementation of optical interconnects targeting data centers are as follows [29]:

1) Splitter and combiner.
2) Coupler.
3) Arrayed-Waveguide Grating (AWG).
4) Wavelength Selective Switch (WSS).
5) Micro-Electro-Mechanical Systems switches (MEMS switches).
6) Semiconductor Optical Amplifier (SOA).
7) Tunable Wavelength Converters (TWC).

8.2 Network Flow Characteristics for Data Centers

In order to design a high-performance network for a data center, a clear understanding of the data center traffic characteristics is required. Data centers can be categorized into three classes:

1) University campus data centers.
2) Private enterprise data centers.
3) Cloud-computing data centers.

Some traffic characteristics (e.g. average packet size) are common to all data centers, while others (e.g. applications and traffic flow) differ considerably between the data center categories. The network traffic characteristics can be described as follows:

1) Applications: The applications running in data centers depend on the data center category. In campus data centers the majority of the traffic is HTTP traffic. On the other hand, in private data centers and in data centers used for cloud computing the traffic is dominated by HTTP, HTTPS, LDAP, and database (e.g. MapReduce) traffic.
2) Traffic flow locality: The traffic flow locality significantly affects the design of the network topology. In cases of high inter-rack communication traffic, high-speed networks are required between the racks, while low-cost commodity switches can be used inside the rack.
3) Traffic flow size and duration: If a traffic flow lasts several seconds, then an optical device with a high reconfiguration time can absorb the reconfiguration overhead in order to provide higher bandwidth.
4) Concurrent traffic flows: The number of concurrent traffic flows per server is also very important to the design of the network topology. If the number of concurrent flows can be supported by the number of optical connections, then optical networks can provide a significant advantage over networks based on electronic switches.
5) Packet size: Packet sizes in data centers exhibit a bimodal pattern, with most packet sizes clustering around 200 and 1400 bytes. This is because packets are either small control packets or parts of large files that are fragmented to the maximum packet size of Ethernet networks (about 1500 bytes).
6) Link utilization: According to traffic studies, in all kinds of data centers the link utilization inside the rack and at the aggregate level is quite low, while the utilization at the core level is quite high. The link utilization shows that higher-bandwidth links are required especially in the core network, while the current 1 Gbps Ethernet networks inside the rack can sustain future network demands.

8.3 Optical Interconnect Schemes for Data Center Networks

The optical interconnect schemes that have recently been proposed for data center networks are as follows:

1) c-Through: part-time optics in data centers.
2) Helios: a hybrid optical electrical switch.
3) DOS: a scalable optical switch.
4) Proteus data center network.
5) Petabit optical switch.
6) The OSMOSIS project.
7) Space-Wavelength architecture.
8) E-RAPID.
9) The IRIS project.
10) Bidirectional photonic network.
11) Commercial optical interconnects:
    Polatis: While all of the above schemes are proposed by universities or industrial research centers, there is also a commercially available optical interconnect for data centers, provided by Polatis Inc.
    Intune Networks: Intune Networks has developed the Optical Packet Switch and Transport (OPST) technology, which is based on their fast tunable optical transmitters.

8.4 Comparison of the Features of Optical Interconnect Schemes for Data Centers

The schemes above can be compared qualitatively on the following features:

8.4.1 Technology

The majority of the optical interconnects are all-optical, while only the c-Through and Helios schemes are hybrid. The hybrid schemes offer the advantage of an incremental upgrade of an operating data center with commodity switches, reducing the cost of the upgrade. On the other hand, the hybrid schemes do not provide a viable solution for future data center networks, whereas all-optical schemes provide a long-term solution that can sustain the increased bandwidth with low latency and reduced power consumption. But as these schemes require the total replacement of the commodity switches, they must provide significantly better characteristics in order to justify the increased cost of the replacement.

8.4.2 Scalability

A major requirement for the adoption of optical networks in data centers is scalability. Optical networks need to scale easily to a large number of nodes (e.g. ToR switches), especially in warehouse-scale data centers. The hybrid schemes that are based on circuit switches have limited scalability, since they are constrained by the number of optical ports of the switch. The Petabit and IRIS architectures, although based on a central switch, can be scaled efficiently to a large number of nodes by adopting a Clos network. Finally, the commercially available schemes have low scalability, since they are based on modules with a limited number of ports.

8.4.3 Connectivity

Another major distinction between the optical interconnects is whether they are based on circuit or packet switching.
Circuit switches are usually based on optical MEMS switches, which have an increased reconfiguration time. Furthermore, circuit-based optical networks target data centers where the average number of concurrent traffic flows in the servers can be covered by the number of circuit connections in the optical switches. On the other hand, packet-based optical switches are similar to the current networks used in data centers. An interesting exception is the Proteus architecture, which, although based on circuit switching, provides all-to-all communication through the use of multiple hops when two nodes are not directly connected. The Petabit architecture seems to combine efficiently the best features of electronics and optics.

8.4.4 Capacity

Besides scalability in terms of the number of nodes, the proposed schemes must also be easy to upgrade to higher capacities per node. The circuit-switched architectures that are based on MEMS switches (c-Through, Helios and Proteus) can easily be upgraded to 40 Gbps, 100 Gbps or higher bit rates, since MEMS switches can support any data rate. Therefore, in these architectures the maximum capacity per node is determined by the data rate of the optical transceivers. The DOS, Petabit and IRIS architectures are all based on tunable wavelength converters for switching. Therefore, the maximum capacity per node is constrained by the maximum supported data rate of the TWC (currently up to 160 Gbps).

8.4.5 Routing

Routing of packets in data center networks is quite different from Internet routing (e.g. OSPF), in order to take advantage of the network capacity. Routing algorithms can significantly affect the performance of the network; therefore, efficient routing schemes must be deployed in optical networks. In the case of the hybrid schemes (c-Through and Helios), the electrical network is based on a tree topology, while the optical network is based on direct links between the nodes.

8.4.6 Cost and Power Consumption

The cost of network devices is a significant issue in the design of a data center. However, many architectures presented in this study are based on optical components that are not commercially available, so it is difficult to compare their cost. The c-Through, Helios and Proteus schemes are based on readily available optical modules, so their cost is significantly lower than that of other schemes that require special optical components designed especially for these networks. If the cost of the optical interconnect is the same as that of the current switches, the return on investment (ROI) can be achieved even if the optical interconnects consume 0.8 times the power of the commodity switches.

8.4.7 Prototypes

The high cost of optical components prohibits the implementation of fully operational prototypes. However, in some cases prototypes have been implemented that show either a proof of concept or a complete system. The Helios architecture has been fully implemented, since it is based on a commercially available optical circuit switch that is used in telecommunication networks.
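The qualitative comparison in Section 8.4 can be captured in a small lookup structure. The Python sketch below encodes only what the text states for the six schemes it characterizes explicitly (hybrid or all-optical, and MEMS-based or TWC-based switching). The class and function names are hypothetical, and the 160 Gbps constant is the TWC limit quoted in Section 8.4.4.

```python
from dataclasses import dataclass

TWC_MAX_GBPS = 160.0  # maximum TWC data rate quoted in Section 8.4.4


@dataclass(frozen=True)
class Scheme:
    name: str
    hybrid: bool          # True: hybrid electrical/optical; False: all-optical
    switch_element: str   # "MEMS" (circuit switching) or "TWC"


SCHEMES = {
    s.name: s
    for s in (
        Scheme("c-Through", hybrid=True, switch_element="MEMS"),
        Scheme("Helios", hybrid=True, switch_element="MEMS"),
        Scheme("Proteus", hybrid=False, switch_element="MEMS"),
        Scheme("DOS", hybrid=False, switch_element="TWC"),
        Scheme("Petabit", hybrid=False, switch_element="TWC"),
        Scheme("IRIS", hybrid=False, switch_element="TWC"),
    )
}


def max_capacity_per_node_gbps(scheme_name: str, transceiver_gbps: float) -> float:
    """Per-node capacity limit implied by Section 8.4.4.

    MEMS switches are data-rate agnostic, so the transceiver sets the limit;
    TWC-based schemes are additionally capped by the converter's data rate.
    """
    scheme = SCHEMES[scheme_name]
    if scheme.switch_element == "MEMS":
        return transceiver_gbps
    return min(transceiver_gbps, TWC_MAX_GBPS)


if __name__ == "__main__":
    for name in SCHEMES:
        print(name, max_capacity_per_node_gbps(name, transceiver_gbps=400.0))
```

The 400 Gbps transceiver rate in the usage loop is an arbitrary assumed value, chosen only to show where the TWC cap becomes the binding limit.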

9 HIGH SPEED RECONFIGURABLE FREE-SPACE CARD-TO-CARD INTERCONNECTS

9.1 Overview

Multi-channel on-chip and on-board interconnection has improved steadily. However, the capacity of interconnection between cards and racks has not kept pace. Conventionally, copper-based cables are used for data transmission between cards and racks. However, electrical technologies are not suitable for future high-throughput interconnects because of fundamental limitations, including electric power consumption, heat dissipation, transmission latency, and electromagnetic interference. To overcome the bandwidth limitation of electrical card-to-card interconnects, the use of parallel short-range optical links has been proposed and studied, and most of the reported optical interconnect schemes are based on polymer waveguides and multi-mode fiber (MMF) ribbons. In particular, fully integrated bidirectional parallel optical interconnect structures have been employed. However, such point-to-point interconnection schemes are inherently non-reconfigurable, and their flexibility in dynamically interconnecting electronic cards is very limited.

The problems with the previously proposed structures are as follows:

1) Low link-selection efficiency.
2) A considerably complicated tuning mechanism.
3) A limited tuning range.
4) Low bit rates that are insufficient for future-generation card-to-card interconnects.

9.2 Recently Proposed Architecture for High-Speed Free-Space Reconfigurable Card-to-Card Optical Interconnects [30]

The recently proposed and demonstrated concept of a novel high-speed free-space reconfigurable card-to-card optical interconnect architecture offers flexibility and high speed simultaneously. The proposed architecture employs VCSEL and photodiode (PD) arrays in conjunction with MEMS-based steering mirror arrays that serve as the link-selection block. Conventional optical interconnects employ VCSEL and PIN-PD arrays operating at the same wavelength. The same approach is adopted for the realization of reconfigurable free-space optical interconnects because:

1) It is cost-effective.
2) It eliminates the need for complex circuitry for precise control of the wavelength of the VCSEL elements.
3) It increases the aggregate bit rate.

The architecture of the proposed concept can be summarised as follows:

A dedicated optical interconnect module is integrated onto each card (typically a PCB). This optical interconnect module mainly consists of a VCSEL array, a PD array, two microlens arrays, and two MEMS-based steering mirror arrays. At the transmitter side, the electrical signal from the attached card first modulates the VCSEL optical beam, and the modulated optical beam is then collimated by the associated microlens. To minimize the VCSEL beam divergence, the distance between the VCSEL and the microlens is made equal to the focal length of the microlens. Because the VCSEL beams propagate in free space, severe crosstalk is induced; it is suppressed by using a receiver MEMS steering mirror array with a large spacing between the elements. Experiments were also carried out to investigate the feasibility of reconfiguring the proposed optical interconnect architecture among different cards.

The trade-offs or limiting factors of the proposed architecture are:

Since the VCSEL beams propagate in free space, their diameter expands as the transmission distance increases. Therefore, severe inter-channel crosstalk is induced, leading to degraded BER performance. This crosstalk can be suppressed by using a receiver MEMS steering mirror array with a large spacing between the elements. The major limiting factors in the proposed architecture are the optical beam divergence in free space and the optical crosstalk induced by adjacent channels. The trade-off between bit error rate (BER) performance and the MEMS mirror spacing has also been investigated: if the channel spacing is reduced, the BER performance and receiver sensitivity become worse.

9.3 Future Prospects and Solutions to the Proposed Architecture

The most important future prospect of this reconfigurable card-to-card optical interconnect architecture is that it can easily be scaled up to highly dense 3-D parallel optical interconnects. The BER discrepancy between channels increases with the horizontal distance, as the impact of inter-channel crosstalk becomes more pronounced. To mitigate the impact of crosstalk, the pitch of the MEMS-based steering mirror array at the receiver side can be increased. To achieve the same BER performance, a larger received power is required as the horizontal distance increases. To attain dense optical interconnects, however, it is more favorable to use an array with a small pitch, so density and crosstalk must be traded off against each other.
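The divergence-driven crosstalk trade-off discussed in this section can be illustrated with the standard Gaussian-beam model, in which the beam radius grows as w(z) = w0 sqrt(1 + (z / z_R)^2) with Rayleigh range z_R = π w0² / λ. This is a textbook idealization and not the analysis carried out in [30]. The 850 nm wavelength, 100 µm collimated beam waist, 30 cm link length, and the simple "neighbouring spots touch" criterion are all assumptions chosen only for illustration.

```python
import math


def rayleigh_range(w0_m: float, wavelength_m: float) -> float:
    """Rayleigh range z_R = pi * w0^2 / lambda of an ideal Gaussian beam."""
    return math.pi * w0_m ** 2 / wavelength_m


def beam_radius(z_m: float, w0_m: float, wavelength_m: float) -> float:
    """1/e^2 beam radius after propagating z metres from the waist."""
    z_r = rayleigh_range(w0_m, wavelength_m)
    return w0_m * math.sqrt(1.0 + (z_m / z_r) ** 2)


def beams_overlap(z_m: float, w0_m: float, wavelength_m: float, pitch_m: float) -> bool:
    """Crude crosstalk indicator: do neighbouring 1/e^2 spots touch at distance z?"""
    return 2.0 * beam_radius(z_m, w0_m, wavelength_m) >= pitch_m


if __name__ == "__main__":
    wavelength = 850e-9   # assumed VCSEL wavelength
    w0 = 100e-6           # assumed collimated beam waist radius
    z = 0.30              # assumed card-to-card distance
    w = beam_radius(z, w0, wavelength)
    print(f"beam radius at {z:.2f} m: {w * 1e6:.0f} um")
    for pitch in (250e-6, 1e-3, 2e-3):
        print(f"pitch {pitch * 1e3:.2f} mm -> overlap: "
              f"{beams_overlap(z, w0, wavelength, pitch)}")
```

Under these assumed values the spot grows to several times its initial size over the link, so only the largest pitch keeps neighbouring spots apart, which mirrors the tension between dense arrays and low crosstalk described above.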

10 REQUIREMENT OF VERTICAL CAVITY SURFACE EMITTING LASER (VCSEL) IN OPTICAL INTERCONNECTS

The requirement for Vertical Cavity Surface Emitting Lasers (VCSELs) in optical interconnects can be summarized as follows:

Since CPUs are projected to run at many GHz in the next decade, but not faster, board ASICs will run at comparable rates, and area VCSEL links can provide the interconnection bandwidth required in the coming decade. VCSEL links are required for datacom applications, i.e. where low-power operation is required. VCSEL links can also be used as a very high-performance replacement for current large edge connectors. This can improve the system noise margin, because crosstalk and ground noise coupling become more difficult to control in traditional connectors as edge rates increase. An additional advantage is galvanic isolation, which leads to a natural hot-plug capability. Some success in optical monolithic integration (lasers and lenses, lasers and receivers) has already been achieved, and a number of efforts have successfully integrated CMOS with VCSEL devices. It should be clear that this is not a fundamental limitation, since multi-gigahertz silicon VLSI is tractable. But there are still two problems related to the use of VCSEL links. The first is designing and building an array of VCSELs, receivers, or hybrids of lasers and photodetectors on single assemblies that can modulate at many gigahertz with suitable electrical isolation and minimal crosstalk. A potentially more difficult problem is maintaining the alignment of free-space links.

11 CONCLUSION

From the above discussion, we have an overall picture of interconnects for computing machines for the next ten years. Ironically, free-space optical interconnects, which have yet to be commercialized, are the most promising for use inside the system to connect boards over the entire board area. What remains is a concerted effort by industry to develop products and a standard whereby these products will be used. The paper also discusses the use of optical interconnects for high-performance computing. The above discussion also gives an overall survey of the optical networks targeting data centers and provides a qualitative comparison of the features of these schemes, such as connectivity, performance, scalability, and technology. The use of optical interconnects in data centers helps to achieve a shorter return-on-investment (ROI) time frame. Data centers also play a key role in financial markets such as stock exchanges, where the added value of high-bandwidth, low-latency optical interconnects is even more significant, because low-latency communication has a major impact on stock exchange transactions.

Plasmonics is a new technology for manipulating and routing light at sub-micron length scales in metallic nanostructures embedded in a dielectric. Many researchers have highlighted its applicability to intra-chip communication. However, because of an order-of-magnitude size mismatch between nanoelectronic components and the wavelength of light, the use of optical interconnects is restricted to the global interconnect level. Improved performance of computer systems has been achieved, in large part, by downscaling the IC minimum feature size. This allows the basic IC building block, the transistor, to operate at a higher frequency, performing more computations per second. However, downscaling of the minimum feature size also results in tighter packing of the wires on a microprocessor, which increases parasitic capacitance and signal propagation delay. Consequently, the delay due to communication between the parts of a chip becomes comparable to the computation delay itself. This phenomenon, known as the interconnect bottleneck, is becoming a major problem in high-performance computer systems.
The interconnect bottleneck can be addressed by using optical interconnects to replace long metallic interconnects. Optics is in widespread use in long-distance communications; still, it has not yet been widely used in chip-to-chip or on-chip interconnections, because such links (in the cm or m range) are not yet industry-manufacturable, owing to costly and not yet fully mature technologies. As optical interconnections move from computer network applications to chip-level interconnections, new requirements for high connection density and alignment reliability have become critical for the effective utilization of these links. There are still many materials, fabrication, and packaging challenges in integrating optical and electronic technologies. With the idea of optical interconnects, the prognosis for the use of optics in digital computing and switching is more optimistic and realistic now than it likely has been at any point in the past. There are many challenges, including difficult issues such as packaging, cost, and reliability, and simply getting used to joining silicon circuits with optical links (e.g., receiver circuit issues). Computercom is an emerging market with a need for very short links, many less than 10 m, with very high aggregate bandwidth and very high demands for low cost, low power, high reliability, and high density. Tightly integrated optics packaging will be required to achieve these goals, along with a broad view to optimize technology across system requirements and close cooperation between system providers and component suppliers. There is a growing acceptance, and even hope, in the mainstream electronics community that optics may be successfully incorporated at some point in the not too distant future. That hope is doubtless shared by the optics community, which has shown an unflagging optimism that the days of optics as a major part of information processing would finally arrive.

REFERENCES

[1] N. Akil, S. E. Kerns, D. V. Kerns, Jr. Hoffmann, and J. P. Charles, "Photon generation by silicon diodes in avalanche breakdown," Appl. Phys. Lett., vol. 73, no. 7, pp. 871–872, 1998.
[2] A. V. Krishnamoorthy and D. A. B. Miller, "Scaling optoelectronic-VLSI circuits into the 21st century: a technology roadmap," IEEE Journal of Selected Topics in Quantum Electronics, vol. 2(I), pp. 55–76, April 1996.
[3] D. A. B. Miller, "Physical reasons for optical interconnection," Int. J. Optoelectron., vol. 11, no. 3, pp. 155–158, 1997.
[4] M. J. Yadlowsky, E. M. Deliso, and V. Silva, "Optical fibers and amplifiers for WDM systems," Proc. IEEE, vol. 85, p. 1765, 1997.
[5] D. A. B. Miller, S. D. Smith, and A. Johnston, "Optical bistability and signal amplification in a semiconductor crystal: Application of new low-power nonlinear effects in InSb," Appl. Phys. Lett., vol. 35, pp. 658–660, 1979.
[6] R. Dingle, W. Wiegmann, and C. H. Henry, "Quantized states of confined carriers in very thin AlGaAs-GaAs-AlGaAs heterostructures," Phys. Rev. Lett., vol. 33, pp. 827–830, 1974.
[7] R. Dingle and C. H. Henry, "Quantum effects in heterostructure lasers," U.S. Patent 3 982 207.
[8] D. A. B. Miller, D. S. Chemla, D. J. Eilenberger, P. W. Smith, A. C. Gossard, and W. T. Tsang, "Large room-temperature optical nonlinearity in GaAs/GaAlAs multiple quantum well structures," Appl. Phys. Lett., vol. 41, pp. 679–681, 1982.
[9] B. K. Jenkins, A. A. Sawchuk, T. C. Strand, R. Forchheimer, and B. H. Soffer, "Sequential optical logic implementation," Appl. Opt., vol. 23, pp. 3455–3464, 1984.
[10] D. G. Deppe, D. L. Huffaker, K. Kumar, and T. J. Rogers, "Native-oxide defined ring contact for low-threshold vertical-cavity lasers," Appl. Phys. Lett., vol. 65, pp. 97–99, 1994.
[11] D. A. B. Miller and H. M. Ozaktas, "Limit to the bit-rate capacity of electrical interconnects from the aspect ratio of the system architecture," J. Parallel Distrib. Comput., vol. 41, pp. 42–52, 1997.
[12] International Technology Roadmap for Semiconductors, Semiconductor Industry Association Std., 1999.
[13] D. A. B. Miller, "Rationale and challenges for optical interconnects to electronic chips," Proc. IEEE, vol. 88, pp. 728–749, 2000.
[14] C. Schow, "Transmitter pre-distortion for simultaneous improvements in bit-rate, sensitivity, jitter, and power efficiency in 20 Gb/s CMOS-driven VCSEL links," Nat. Fiber Opt. Eng. Conf./Opt. Fiber Conf. Expo, pp. 1–3, 2011.
[15] M. A. Taubenblatt, "Optical interconnects for high-performance computing," IEEE Journal of Lightwave Technology, vol. 30, no. 4, February 15, 2012.
[16] A. Benner, M. Ignatowski, J. Kash, D. Kuchta, and M. Ritter, "Exploitation of optical interconnects in future server architectures," IBM J. Res. Develop., vol. 49, pp. 755–775, 2005.
[17] B. Arimilli, R. Arimilli, V. Chung, S. Clark, W. Denzel, T. H. B. Drerup, J. Joyner, J. Lewis, L. Jian, N. Nan, and R. Rajamony, "The PERCS high-performance interconnect," 18th IEEE Annu. Symp. High Perform. Interconnects, pp. 75–82, 2010.
[18] J. Kim, W. J. Dally, S. Scott, and D. Abts, "Technology-driven, highly-scalable dragonfly topology," Proc. 35th Annu. Int. Symp. Comput. Archit., pp. 77–88, 2008.
[19] P. Pepeljugoski, S. E. Golowich, P. K. A. J. Ritger, and A. Risteski, "Modeling and simulation of the next generation multimode fiber," J. Lightw. Technol., vol. 21, no. 5, pp. 1242–1255, May 2003.
[20] P. Pepeljugoski, M. J. Hackert, J. S. Abbott, S. E. Swanson, S. E. Golowich, A. J. Ritger, P. Kolesar, and Y. P. Pleunis, "Development of system specification for laser optimized 50-um multimode fiber for multigigabit short-wavelength LANs," J. Lightw. Technol., vol. 21, no. 5, pp. 1256–1275, May 2003.
[21] F. E. Doany, B. G. Lee, C. L. Schow, C. K. Tsang, C. Baks, Y. Kwark, R. John, J. U. Knickerbocker, and J. A. Kash, "Terabit/s-class 24-channel bidirectional optical transceiver module based on TSV Si carrier for board-level interconnects," in Proc. Electron. Compon. Technol. Conf., Jun. 2010, pp. 58–65.
[22] D. Jubin, R. Dangel, N. Meier, T. F. Horst, R. B. J. Weiss, and B. J. Offrein, "Polymer waveguide-based multilayer optical connector," in Proc. Int. Soc. Opt. Eng., vol. 7607, pp. 76070K-1–76070K-9, 2010.
[23] [Online]. Available: http://www.top500.org/
[24] A. Kasukawa, "VCSEL technology for green optical interconnects," IEEE Photonics Journal, vol. 4, no. 2, pp. 642–646, April 2012.
[25] L. Schares, D. M. Kuchta, and A. F. Benner, "Optics in future data center networks," in Symposium on High-Performance Interconnects, pp. 104–108, 2010.
[26] "Vision and roadmap: Routing telecom and data centers toward efficient energy use," Vision and Roadmap Workshop on Routing Telecom and Data Centers, 2009.
[27] C. Kachris and I. Tomkos, "A survey on optical interconnects for data centers," IEEE Communications Surveys & Tutorials, accepted for publication, 2012.
[28] U. Hoelzle and L. A. Barroso, The Datacenter as a Computer: An Introduction to the Design of Warehouse-Scale Machines, 1st ed. Morgan and Claypool Publishers, 2009.
[29] I. Papadimitriou, C. Papazoglou, and A. S. Pomportsis, "Optical switching: Switch fabrics, techniques, and architectures," Journal of Lightwave Technology, vol. 21, no. 2, p. 384, Feb. 2003.
[30] K. Wang, A. Nirmalathas, C. Lim, E. Skadas, and K. Alameh, "High-speed reconfigurable free-space card-to-card optical interconnects," IEEE Photonics Journal, vol. 4, no. 5, pp. 1407–1419, October 2012.


- VLSI Beyond CMOS: Nano, Single Electron & Spintronic DevicesDocument4 pagesVLSI Beyond CMOS: Nano, Single Electron & Spintronic Devicesশেখ আরিফুল ইসলামNo ratings yet

- Comparative Study of Modern Optical Data Centers DesignDocument5 pagesComparative Study of Modern Optical Data Centers DesignDr. Arunendra SinghNo ratings yet

- Optical ComputingDocument20 pagesOptical Computingrakeshranjanlal100% (2)

- TTM Immonen08 11Document4 pagesTTM Immonen08 11Santosa Valentinus YusufNo ratings yet

- Research Papers On Silicon PhotonicsDocument6 pagesResearch Papers On Silicon Photonicsjvfmdjrif100% (1)

- Optical Computing Technology: The Future of Faster ComputingDocument24 pagesOptical Computing Technology: The Future of Faster ComputingSai KrishnaNo ratings yet

- Wavelet Video TransformationDocument12 pagesWavelet Video TransformationKarthik BollepalliNo ratings yet

- Psota ATAC BARC 1-07Document2 pagesPsota ATAC BARC 1-07melwyn_thomasNo ratings yet

- Optical switching technologies and their advantagesDocument8 pagesOptical switching technologies and their advantagesneedanazeemaNo ratings yet

- Optical Computing (TDMA)Document9 pagesOptical Computing (TDMA)Channa AshanNo ratings yet

- OPTICAL COMPUTERS - The future of faster computingDocument25 pagesOPTICAL COMPUTERS - The future of faster computingVikas YadavNo ratings yet

- CHAMELEON: CHANNEL Efficient Optical Network-on-ChipDocument7 pagesCHAMELEON: CHANNEL Efficient Optical Network-on-ChipMilad EslaminiaNo ratings yet

- 06982195Document13 pages06982195JubinNo ratings yet

- Opt NetDocument0 pagesOpt NetKOUTIEBOU GUYNo ratings yet

- Optical Computing Seminar ReportDocument25 pagesOptical Computing Seminar ReportSeema DesaiNo ratings yet

- Optical Computing: A.Divyajyothi ECE ROLL NO: 096L1A0405Document19 pagesOptical Computing: A.Divyajyothi ECE ROLL NO: 096L1A0405Sarthi GNo ratings yet

- Optical Technologies Survey for Future Datacenter NetworksDocument18 pagesOptical Technologies Survey for Future Datacenter Networksjtsk2No ratings yet

- Introducing Neuromorphic SpikingDocument24 pagesIntroducing Neuromorphic Spikingrama chaudharyNo ratings yet

- Research Paper Topics On Optical Fiber CommunicationDocument5 pagesResearch Paper Topics On Optical Fiber Communicationgz8qarxzNo ratings yet

- Communication Technologies Augmenting Power TransmissionDocument54 pagesCommunication Technologies Augmenting Power TransmissionChaitanyaVigNo ratings yet

- Optical Computing: 2. Research TrendsDocument14 pagesOptical Computing: 2. Research TrendsSajeshp SajeshNo ratings yet

- Laser CommunicationDocument84 pagesLaser CommunicationKaushal VermaNo ratings yet

- NIST Optical Computing SeminarDocument24 pagesNIST Optical Computing SeminarShreyas As100% (1)

- Advances in Analog and RF IC Design for Wireless Communication SystemsFrom EverandAdvances in Analog and RF IC Design for Wireless Communication SystemsGabriele ManganaroRating: 1 out of 5 stars1/5 (1)

- Signal Integrity: From High-Speed to Radiofrequency ApplicationsFrom EverandSignal Integrity: From High-Speed to Radiofrequency ApplicationsNo ratings yet

- Telecommunications For Power Utilities Customer StoriesDocument8 pagesTelecommunications For Power Utilities Customer StoriesKelly chatNo ratings yet

- A Study On Consumer Perception Towards Adoption of 4G PDFDocument11 pagesA Study On Consumer Perception Towards Adoption of 4G PDFInaaNo ratings yet

- 3rd Generation Partnership Project Technical Specification Group Terminals Characteristics of The USIM Application (3G TS 31.102 Version 1.0.0)Document97 pages3rd Generation Partnership Project Technical Specification Group Terminals Characteristics of The USIM Application (3G TS 31.102 Version 1.0.0)Navneet6No ratings yet

- RF Based Remote Control System For Home AppliancesDocument4 pagesRF Based Remote Control System For Home AppliancesMukesh BadgalNo ratings yet

- Pakistan Telecom - Flare MagzineDocument102 pagesPakistan Telecom - Flare Magzineanwarulhaq100% (10)

- Advantech Satellite's Technical Guide to DVB-RCS/S2 VSAT SolutionsDocument34 pagesAdvantech Satellite's Technical Guide to DVB-RCS/S2 VSAT Solutionsgamer08100% (1)

- Psa Psic MswordDocument332 pagesPsa Psic MswordPio CampolivasNo ratings yet

- IT Applications in Dairy IndustryDocument194 pagesIT Applications in Dairy IndustryGanesh JadhavNo ratings yet

- Indian T.V IndustryDocument34 pagesIndian T.V IndustryPrachi Jain0% (1)

- E-Terragridcom E-DXC Brochure Brochure ENGDocument2 pagesE-Terragridcom E-DXC Brochure Brochure ENGsefovaraja100% (2)

- G-PON Amendment Adds Best Practices for 2.488 Gbps DownstreamDocument12 pagesG-PON Amendment Adds Best Practices for 2.488 Gbps DownstreamAlexander PischulinNo ratings yet

- Khyber Pakhtunkhwa Digital Policy 2018-2023Document23 pagesKhyber Pakhtunkhwa Digital Policy 2018-2023Insaf.PKNo ratings yet

- Csi S 270553 I C S: Ection Dentification FOR Ommunications YstemsDocument16 pagesCsi S 270553 I C S: Ection Dentification FOR Ommunications YstemsYogeshSharmaNo ratings yet

- Wirless Digital CommunicationDocument4 pagesWirless Digital CommunicationStalin BabuNo ratings yet

- High Speed Serial Interface: Technical Overview and ApplicationsDocument16 pagesHigh Speed Serial Interface: Technical Overview and ApplicationsRahul TiwariNo ratings yet

- Ethiopias New Investment Framework The RegulationDocument12 pagesEthiopias New Investment Framework The RegulationaschalewNo ratings yet

- Chapter 8 - Protocol Architecture - Computer NetworksDocument12 pagesChapter 8 - Protocol Architecture - Computer Networkschandushar1604100% (3)

- ONLINE ADVERTISING - Its's Scope & Impact On Consumer Buying BehaviourDocument90 pagesONLINE ADVERTISING - Its's Scope & Impact On Consumer Buying BehaviourSami Zama50% (2)

- The 10th TSSA Program Book v1.0 Final Version PDFDocument19 pagesThe 10th TSSA Program Book v1.0 Final Version PDFAyu Rosyida zainNo ratings yet

- Communication Systems Haykin Solution PDFDocument2 pagesCommunication Systems Haykin Solution PDFLane0% (1)

- Communication Switching System PDFDocument2 pagesCommunication Switching System PDFShayNo ratings yet

- Communication System TC-307: Lecture 1, Week 1 Course Instructor: Nida NasirDocument65 pagesCommunication System TC-307: Lecture 1, Week 1 Course Instructor: Nida NasirUmer farooqNo ratings yet

- Porter'S Five Forces Analysis of Indian Telecom Industry: I. Buyer PowerDocument6 pagesPorter'S Five Forces Analysis of Indian Telecom Industry: I. Buyer PowerRahul SinghNo ratings yet

- BX10 Integrated Service Platform Instruction Manual V2.1 (无标)Document63 pagesBX10 Integrated Service Platform Instruction Manual V2.1 (无标)Phạm Ngọc Tân100% (1)

- Apple S Notice of Opposition V Super Healthy Kids IncDocument548 pagesApple S Notice of Opposition V Super Healthy Kids IncKamal NaraniyaNo ratings yet

- Handbook Basic Ict ConceptsDocument39 pagesHandbook Basic Ict ConceptsjerahNo ratings yet

- Medicaps Application form-DrAmitAgrawalDocument6 pagesMedicaps Application form-DrAmitAgrawalDrAmit AgrawalNo ratings yet

- Gartner - BuySmart - Contract ReviewsDocument29 pagesGartner - BuySmart - Contract ReviewsgpppNo ratings yet

- Communication SystemsDocument28 pagesCommunication SystemsMarina TepordeiNo ratings yet

- Iti Limited PDFDocument7 pagesIti Limited PDFhemant kumarNo ratings yet