A Reply to "A Critique to 'Using Rasch Analysis to Create and Evaluate a Measurement Instrument for Foreign Language Classroom Speaking Anxiety'"

Matthew T. Apple

Ritsumeikan University

In his response to my paper concerning the use of the Rasch model for creating and evaluating a measurement instrument for foreign language classroom speaking anxiety (Apple, 2013), Dr. Panayides makes some interesting observations; however, there also appear to be several points of misinterpretation of the study results. The initial issue is his opening assertion that my paper was designed to show advantages of the Rasch model over classical test theory (CTT) models as well as item response theory (IRT) models. In fact, the paper was designed only to demonstrate the advantages of the Rasch model for Japan-based classroom teachers of English. I made no mention whatsoever of other IRT models. I also did not set the Rasch model against all CTT methods; I merely demonstrated that simple descriptive statistics were not as informative or useful as Rasch analysis when creating and evaluating questionnaires.

JALT Journal, Vol. 35, No. 2, November 2013

The main argument of Panayides's critique is that, because the correlation of mean raw scores and Rasch logits was not the expected 1.0, I had somehow miscalculated the raw scores or had not used Rasch analysis properly. As a point of clarification, I did fail to clearly indicate the difference in N-size between the mean scores in Table 1 (p. 14), which was produced to compare raw score results, and the Rasch item analysis in Table 2 (p. 15). Whereas Table 1 with traditional mean scores showed the original N-size of 172, Table 2 with Rasch item analysis showed the reduced N-size of 152, following the removal of 20 persons whose responses systematically misfit the model. The descriptive statistics were meant to show what a researcher with no knowledge of the Rasch model would have done. The researcher would not have known that 20 persons' responses misfit the model, because merely summing up raw scores from questionnaire items and then averaging them does not provide a measure of person fit.
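
To illustrate why summed raw scores carry no information about person fit, here is a minimal Python sketch. It is not the analysis from the original study: it assumes a dichotomous Rasch model rather than a model for Likert categories, and the item difficulties, the two response patterns, and the function names (`rasch_prob`, `ability_for_raw_score`, `outfit_mnsq`) are all hypothetical. Both respondents have the same raw score, and therefore the same ability estimate, but only the outfit mean-square separates the consistent pattern from the aberrant one.

```python
import math

# Hypothetical item difficulties in logits, ordered from easiest to hardest to endorse.
ITEM_DIFFICULTIES = [-2.0, -1.0, 0.0, 1.0, 2.0]


def rasch_prob(theta, b):
    """Probability of endorsing an item under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def ability_for_raw_score(raw_score, difficulties, lo=-6.0, hi=6.0):
    """Find theta whose expected score equals the raw score (bisection).

    Valid only for non-extreme raw scores (0 < raw_score < number of items).
    """
    for _ in range(60):
        mid = (lo + hi) / 2.0
        expected = sum(rasch_prob(mid, b) for b in difficulties)
        if expected < raw_score:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def outfit_mnsq(responses, theta, difficulties):
    """Outfit mean-square: the average squared standardized residual."""
    total = 0.0
    for x, b in zip(responses, difficulties):
        p = rasch_prob(theta, b)
        total += (x - p) ** 2 / (p * (1.0 - p))
    return total / len(responses)


consistent = [1, 1, 1, 0, 0]  # endorses the easy items only
aberrant = [0, 0, 1, 1, 1]    # endorses the hard items only

for label, pattern in (("consistent", consistent), ("aberrant", aberrant)):
    raw = sum(pattern)
    theta = ability_for_raw_score(raw, ITEM_DIFFICULTIES)
    fit = outfit_mnsq(pattern, theta, ITEM_DIFFICULTIES)
    print(f"{label}: raw score = {raw}, theta = {theta:.2f}, outfit = {fit:.2f}")
```

Outfit values well above 1.0 indicate responses that are less predictable than the model expects; the cutoff a researcher applies for removing persons is a separate judgment that this sketch does not address.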

The issue in the correlation-based argument is the assertion that, because both traditional mean scores based on classical test theory (CTT) and the Rasch model use the raw score as a sufficient statistic for the estimation of item difficulty, raw scores from Likert-type category data ought to correlate highly with Rasch logits. As Panayides states, for a typical Rasch model-based analysis of testing data, "[the] sufficient statistic for estimating item difficulty is simply the sum or count of the correct responses for an item over all persons." However, there are two important points to be made regarding the use of raw scores and the Rasch model.

First, there is a crucial distinction between CTT and the Rasch model, which Bond and Fox (2007) have made explicit:

To the extent that the data fit the Rasch model's specifications for measurement [emphasis added], then N is the sufficient statistic. (Bond & Fox, 2007, p. 267)

Data obtained from a well-established questionnaire with a large N-size (N > 1000) may indeed show good item fit. However, the data in my paper were obtained from a newly created measurement instrument, which had many misfitting items; the N-size, while adequate, was not large and the participants were not well targeted by the items, which may have introduced measurement error. Additionally, data from a test, which has correct answers, and a Likert category scale, which has no correct answers, are necessarily different. The frequency of responses to the Likert response categories for each item may adversely affect item fit and thus measurement of the construct (Linacre, 1999). Traditional raw scores take neither fit nor measurement error into account, nor do they consider differences in Likert category utility.

Second, the relatively low correlation of traditional mean scores to Rasch logits demonstrates that Rasch logits based on Rasch model analysis are not simply another type of descriptive statistic. The Rasch model is not a model of observed responses, but a model of the probability of the observed responses (Wilson, 2005, p. 90). In other words, the Rasch model attempts to predict the likelihood that a questionnaire respondent will give the same answer to similar items on a future iteration of the questionnaire. Raw scores are descriptions that do not take item or person fit, measurement error, or Likert category scale functioning into account. Rasch logits are the result of attempting to model probabilistic item responses as a function of the level of endorsability of the construct for both respondents and items.
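
The idea of modeling the probability of a response, rather than the response itself, can be sketched in Python as follows. This is an illustration under stated assumptions, not the estimation procedure used in the study: it assumes a rating scale formulation of the Rasch model with thresholds shared across items, and the function name `rating_scale_probs`, the threshold values, and the person and item locations are all hypothetical.

```python
import math


def rating_scale_probs(theta, item_location, thresholds):
    """Category probabilities under a rating scale Rasch model.

    theta: person location in logits.
    item_location: item endorsability in logits.
    thresholds: step thresholds shared by all items (one fewer than the
        number of response categories).
    """
    # Running sums of (theta - item_location - tau); the lowest category is fixed at 0.
    cumulative = [0.0]
    for tau in thresholds:
        cumulative.append(cumulative[-1] + (theta - item_location - tau))
    numerators = [math.exp(value) for value in cumulative]
    denominator = sum(numerators)
    return [n / denominator for n in numerators]


# Hypothetical values: a four-category Likert item with three thresholds.
THRESHOLDS = [-1.5, 0.0, 1.5]

for theta in (-2.0, 0.0, 2.0):
    probs = rating_scale_probs(theta, item_location=0.0, thresholds=THRESHOLDS)
    print(theta, [round(p, 3) for p in probs])
```

Each respondent is thus described by a full distribution over the response categories that depends on the distance between person and item in logits, which is why logits need not line up perfectly with averaged category codes.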

As I mentioned in my discussion on the drawbacks of traditional statistics (pp. 7-8), there are two problematic assumptions with averaging or adding raw scores such as participant responses to Likert-scale items on a questionnaire in order to create an item mean score. First, Likert-type category data are not true interval data. With interval data, the distances between each adjoining pair of data points are required to be equal. Although the distances between points on a Likert scale may look equal on the surface, they may actually vary from person to person and item to item. Second, because Likert-type category data are ordinal and not interval, such data are not additive. Different questionnaire respondents may have very different perceptions of the distinction between a "strongly agree" and an "agree" for one item. Adding a 1 from one person's response to a 2 from another person's response doesn't really equal 3. Averaging the two responses doesn't really equal 1.5, either. Likewise, a response of a 3 on one item by one person is not necessarily the same as a 3 on another item by the same person. The use of mathematical averages ignores the possibility that responses from different people on the same items or the same person on different items may represent very different perceptions of the intended Likert categories. Thus, any correlation of means, based on raw scores, to Rasch logits of data from a Likert category scale seems of questionable value.
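
The point about additivity can be checked with a small Python sketch using invented responses (not data from the study). Two hypothetical items are scored on a four-category scale. Under the usual equal-spacing codes, item A has the higher mean; under another order-preserving coding, in which the top category is assumed to lie further from its neighbor, item B has the higher mean. Ordinal data alone give no basis for preferring one coding over the other.

```python
from statistics import mean

# Invented responses (category labels 1-4) from four respondents to two items.
item_a = [2, 2, 2, 2]
item_b = [1, 1, 1, 4]

# Two codings that both preserve the category order.
equal_spacing = {1: 1, 2: 2, 3: 3, 4: 4}
unequal_spacing = {1: 1, 2: 2, 3: 3, 4: 6}  # assumed wider gap before the top category


def item_mean(responses, coding):
    """Mean of the responses after mapping each category to its coded value."""
    return mean(coding[r] for r in responses)


for name, coding in (("equal", equal_spacing), ("unequal", unequal_spacing)):
    print(name, item_mean(item_a, coding), item_mean(item_b, coding))
```

Because the ranking of the two item means depends on an arbitrary choice of spacing, a ranking based on raw mean scores cannot be assumed to reflect the ordering of the items on the underlying construct.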

Additionally, I stated in the conclusion (p. 23) that not only did several items on the questionnaire need revision, but that, indeed, several studies had already used revised versions of the anxiety questionnaire. Each use of the questionnaire with new participant samples required new Rasch analysis for validation; this construct has proved valid and reliable for Japan-based samples. Readers are invited to review Apple (2011) and Hill, Falout, and Apple (2013) for further information.

Finally, Panayides claims that I implied that item order change is common practice in using the Rasch models. I did not imply this; I did, however, suggest that reliance on raw scores to judge item difficulties in a questionnaire may lead to erroneous conclusions about which items are more difficult than others to endorse, because raw scores do not take item fit or measurement error into account. I thank Dr. Panayides, and I thank the editors of JALT Journal for giving me this opportunity to respond to the issues raised and give clarifications. I hope this exchange of ideas will encourage JALT Journal readers to learn more about the use of Rasch model analysis for second and foreign language teaching and research.

References

Apple, M. (2011). The Big Five personality traits and foreign language speaking confidence among Japanese EFL students (Doctoral dissertation). Available from ProQuest Dissertations and Theses database. (UMI No. 3457819)

Apple, M. (2013). Using Rasch analysis to create and evaluate a measurement instrument for foreign language classroom speaking anxiety. JALT Journal, 35, 5-28.

Bond, T. G., & Fox, C. M. (2007). Applying the Rasch model: Fundamental measurement in the human sciences. Mahwah, NJ: Erlbaum.

Hill, G., Falout, J., & Apple, M. (2013). Possible L2 selves for students of science and engineering. In N. Sonda & A. Krause (Eds.), JALT2012 Conference Proceedings (pp. 210-220). Tokyo: JALT.

Linacre, J. (1999). Investigating rating scale category utility. Journal of Outcome Measurement, 3, 103-122.

Wilson, M. (2005). Constructing measures: An item response modeling approach. Mahwah, NJ: Erlbaum.

You might also like

- Likert Scale DissertationDocument7 pagesLikert Scale DissertationPaperWritersForCollegeUK100% (1)

- Analyzing and Interpreting Data From LikertDocument3 pagesAnalyzing and Interpreting Data From LikertAnonymous FeGW7wGm0No ratings yet

- The Hierarchy Consistency Index: Evaluating Person Fit For Cognitive Diagnostic AssessmentDocument33 pagesThe Hierarchy Consistency Index: Evaluating Person Fit For Cognitive Diagnostic AssessmentianchongNo ratings yet

- Research ArticleDocument14 pagesResearch ArticleZahoor AhmadNo ratings yet

- Rasch AnalysisDocument4 pagesRasch AnalysisFlora Mae Masangcay DailisanNo ratings yet

- Equidistance of Likert-Type Scales and Validation of Inferential Methods Using Experiments and SimulationsDocument13 pagesEquidistance of Likert-Type Scales and Validation of Inferential Methods Using Experiments and SimulationsIsidore Jan Jr. RabanosNo ratings yet

- Rasch AnalysisDocument5 pagesRasch AnalysisAashimaAggarwalNo ratings yet

- Survey Data Quality - RaschDocument42 pagesSurvey Data Quality - RaschsetyawarnoNo ratings yet

- QTPR ThesisDocument5 pagesQTPR Thesiscrystalalvarezpasadena100% (2)

- RM AssumptionsDocument7 pagesRM AssumptionsWahyu HidayatNo ratings yet

- The Likert Scale Used in Research On Affect - A Short Discussion of Terminology and Appropriate Analysing MethodsDocument11 pagesThe Likert Scale Used in Research On Affect - A Short Discussion of Terminology and Appropriate Analysing MethodsSingh VirgoNo ratings yet

- Likert ScaleDocument10 pagesLikert Scalelengers poworNo ratings yet

- Likert Scales: How To (Ab) Use Them Susan JamiesonDocument8 pagesLikert Scales: How To (Ab) Use Them Susan JamiesonFelipe Santana LopesNo ratings yet

- The Theory and Practice of Item Response TheoryDocument466 pagesThe Theory and Practice of Item Response Theoryjuangff2No ratings yet

- A Rasch Analysis On Collapsing Categories in Item - S Response Scales of Survey Questionnaire Maybe It - S Not One Size Fits All PDFDocument29 pagesA Rasch Analysis On Collapsing Categories in Item - S Response Scales of Survey Questionnaire Maybe It - S Not One Size Fits All PDFNancy NgNo ratings yet

- Description: Tags: MismatchDocument2 pagesDescription: Tags: Mismatchanon-75363No ratings yet

- Do Data Characteristics Change According To The Number of Scale Points Used ? An Experiment Using 5 Point, 7 Point and 10 Point Scales.Document20 pagesDo Data Characteristics Change According To The Number of Scale Points Used ? An Experiment Using 5 Point, 7 Point and 10 Point Scales.Angela GarciaNo ratings yet

- 01 A Note On The Usage of Likert Scaling For Research Data AnalysisDocument5 pages01 A Note On The Usage of Likert Scaling For Research Data AnalysisFKIANo ratings yet

- JKMIT 1.2 Final Composed 2.42-55 SNDocument15 pagesJKMIT 1.2 Final Composed 2.42-55 SNFauzul AzhimahNo ratings yet

- Theories Related To Tests and Measurement FinalDocument24 pagesTheories Related To Tests and Measurement FinalMargaret KimaniNo ratings yet

- Rasch Analysis An IntroductionDocument3 pagesRasch Analysis An IntroductionBahrouniNo ratings yet

- Item Response TheoryDocument14 pagesItem Response TheoryCalin Moldovan-Teselios100% (1)

- Item Response TheoryDocument11 pagesItem Response TheoryKerdid SimbolonNo ratings yet

- Thesis On Scale DevelopmentDocument6 pagesThesis On Scale Developmentbsfp46eh100% (1)

- BradleyDocument15 pagesBradleyImam Tri WibowoNo ratings yet

- Bondvalidity PDFDocument16 pagesBondvalidity PDFagraarimbawa12No ratings yet

- Likert PDFDocument4 pagesLikert PDFDody DarwiinNo ratings yet

- Analyzing Data Measured by Individual Likert-Type ItemsDocument5 pagesAnalyzing Data Measured by Individual Likert-Type ItemsCheNad NadiaNo ratings yet

- Classical Test TheoryDocument8 pagesClassical Test TheoryChinedu Bryan ChikezieNo ratings yet

- Using Likert Type Data in Social Science Research: Confusion, Issues and ChallengesDocument14 pagesUsing Likert Type Data in Social Science Research: Confusion, Issues and Challengesanon_284779974No ratings yet

- 3 Ways To Measure Employee EngagementDocument12 pages3 Ways To Measure Employee Engagementsubbarao venkataNo ratings yet

- Item Response Theory For Psychologists PDFDocument2 pagesItem Response Theory For Psychologists PDFDavid UriosteguiNo ratings yet

- Cred PVD lfs003Document24 pagesCred PVD lfs003Amir SofuanNo ratings yet

- Literature Review On Likert ScaleDocument5 pagesLiterature Review On Likert Scalefvgj0kvd100% (1)

- Likert Scales and Data Analyses + QuartileDocument6 pagesLikert Scales and Data Analyses + QuartileBahrouniNo ratings yet

- Literature Review by KothariDocument8 pagesLiterature Review by Kotharixwrcmecnd100% (1)

- List of Research Papers in StatisticsDocument8 pagesList of Research Papers in Statisticsgpnovecnd100% (1)

- Article On Projective TechniquesDocument5 pagesArticle On Projective TechniquesSergio CortesNo ratings yet

- Ex Post Facto ResearchDocument26 pagesEx Post Facto ResearchPiyush Chouhan100% (1)

- Chapter 4 Thesis Using Likert ScaleDocument6 pagesChapter 4 Thesis Using Likert Scaleafaydoter100% (2)

- Do Data Characteristics Change According To The Number of Scale Points Used ? An Experiment Using 5 Point, 7 Point and 10 Point ScalesDocument20 pagesDo Data Characteristics Change According To The Number of Scale Points Used ? An Experiment Using 5 Point, 7 Point and 10 Point ScalesMah Zeb SyyedNo ratings yet

- Likert Scale Research PDFDocument4 pagesLikert Scale Research PDFMoris HMNo ratings yet

- Psychometric Evaluation of A Knowledge Based Examination Using Rasch AnalysisDocument4 pagesPsychometric Evaluation of A Knowledge Based Examination Using Rasch AnalysisMustanser FarooqNo ratings yet

- J Ajp 2016 08 016Document7 pagesJ Ajp 2016 08 016Tewfik SeidNo ratings yet

- Statistics For The Behavioral Sciences 10th Edition Gravetter Solutions ManualDocument13 pagesStatistics For The Behavioral Sciences 10th Edition Gravetter Solutions Manualjerryperezaxkfsjizmc100% (15)

- Literature Review On Descriptive StatisticsDocument5 pagesLiterature Review On Descriptive Statisticstug0l0byh1g2100% (1)

- Likert Scales, Levels of Measurement and The 'Laws' of Statistics PDFDocument8 pagesLikert Scales, Levels of Measurement and The 'Laws' of Statistics PDFLoebizMudanNo ratings yet

- Rma Statistical TreatmentDocument5 pagesRma Statistical Treatmentarnie de pedroNo ratings yet

- Correlation of StatisticsDocument6 pagesCorrelation of StatisticsHans Christer RiveraNo ratings yet

- An Item Response Curves Analysis of The Force Concept InventoryDocument9 pagesAn Item Response Curves Analysis of The Force Concept InventoryMark EichenlaubNo ratings yet

- A Tutorial and Case Study in Propensity Score Analysis-An Application To Education ResearchDocument13 pagesA Tutorial and Case Study in Propensity Score Analysis-An Application To Education Researchapi-273239645No ratings yet

- Statistical Tools Used in ResearchDocument15 pagesStatistical Tools Used in ResearchKatiexeIncNo ratings yet

- How To Write Chapter 3 Research PaperDocument8 pagesHow To Write Chapter 3 Research Paperhnpawevkg100% (1)

- Example of Meta Analysis Research PaperDocument6 pagesExample of Meta Analysis Research Paperfvgczbcy100% (1)

- Likert Scales and Data AnalysesDocument4 pagesLikert Scales and Data AnalysesPedro Mota VeigaNo ratings yet

- Meta Analysis Homework EffectivenessDocument5 pagesMeta Analysis Homework Effectivenessafetygvav100% (1)

- What Is Likert ScaleDocument5 pagesWhat Is Likert ScaleSanya GoelNo ratings yet

- A Fundamental Conundrum in Psychology's Standard Model of Measurement and Its Consequences For Pisa Global Rankings.Document10 pagesA Fundamental Conundrum in Psychology's Standard Model of Measurement and Its Consequences For Pisa Global Rankings.Unity_MoT100% (1)

- Steps Quantitative Data AnalysisDocument4 pagesSteps Quantitative Data AnalysisShafira Anis TamayaNo ratings yet

- Forgetting Our Personal Past: Socially Shared Retrieval-Induced Forgetting of Autobiographical MemoriesDocument16 pagesForgetting Our Personal Past: Socially Shared Retrieval-Induced Forgetting of Autobiographical MemorieslucianaeuNo ratings yet

- Trends in Violence Research: An Update Through 2013: EditorialDocument7 pagesTrends in Violence Research: An Update Through 2013: EditoriallucianaeuNo ratings yet

- Council For Innovative ResearchDocument8 pagesCouncil For Innovative ResearchlucianaeuNo ratings yet

- Resilience Under Fire: Perspectives On The Work of Experienced, Inner City High School Teachers in The United StatesDocument14 pagesResilience Under Fire: Perspectives On The Work of Experienced, Inner City High School Teachers in The United StateslucianaeuNo ratings yet

- 1 s2.0 S0022096511001330 Main PDFDocument8 pages1 s2.0 S0022096511001330 Main PDFlucianaeuNo ratings yet

- Acta Psychologica: Alan Scoboria, Giuliana Mazzoni, Josée L. Jarry, Daniel M. BernsteinDocument8 pagesActa Psychologica: Alan Scoboria, Giuliana Mazzoni, Josée L. Jarry, Daniel M. BernsteinlucianaeuNo ratings yet

- Content ServerDocument11 pagesContent ServerlucianaeuNo ratings yet

- Speculating On Everyday Life: The Cultural Economy of The QuotidianDocument16 pagesSpeculating On Everyday Life: The Cultural Economy of The QuotidianlucianaeuNo ratings yet

- Acta Psychologica: Kimberley A. Wade, Robert A. Nash, Maryanne GarryDocument7 pagesActa Psychologica: Kimberley A. Wade, Robert A. Nash, Maryanne GarrylucianaeuNo ratings yet

- 1 s2.0 S0148296315003409 MainDocument9 pages1 s2.0 S0148296315003409 MainlucianaeuNo ratings yet

- Content ServerDocument17 pagesContent ServerlucianaeuNo ratings yet

- 2446 Lee Julie Anne Rm-04Document13 pages2446 Lee Julie Anne Rm-04lucianaeuNo ratings yet

- Teacher Diversity: Do Male and Female Teachers Have Different Self-Efficacy and Job Satisfaction?Document17 pagesTeacher Diversity: Do Male and Female Teachers Have Different Self-Efficacy and Job Satisfaction?lucianaeuNo ratings yet

- Self-Efficacy, Effort, Job Performance, Job Satisfaction, and Turnover Intention: The Effect of Personal Characteristics On Organization PerformanceDocument5 pagesSelf-Efficacy, Effort, Job Performance, Job Satisfaction, and Turnover Intention: The Effect of Personal Characteristics On Organization PerformancelucianaeuNo ratings yet

- How To Disassemble Dell Inspiron 17R N7110 - Inside My LaptopDocument17 pagesHow To Disassemble Dell Inspiron 17R N7110 - Inside My LaptopAleksandar AntonijevicNo ratings yet

- MK3000L BrochureDocument2 pagesMK3000L BrochureXuân QuỳnhNo ratings yet

- Contoh RPHDocument2 pagesContoh RPHAhmad FawwazNo ratings yet

- Franchise Model For Coaching ClassesDocument7 pagesFranchise Model For Coaching ClassesvastuperceptNo ratings yet

- Environmental Accounting From The New Institutional Sociology Theory Lens: Branding or Responsibility?Document16 pagesEnvironmental Accounting From The New Institutional Sociology Theory Lens: Branding or Responsibility?abcdefghijklmnNo ratings yet

- Why Are You Applying For Financial AidDocument2 pagesWhy Are You Applying For Financial AidqwertyNo ratings yet

- Rwanda National Examinations Council Organisational Chart DeputyDocument1 pageRwanda National Examinations Council Organisational Chart DeputymugobokaNo ratings yet

- Mathchapter 2Document12 pagesMathchapter 2gcu974No ratings yet

- Wood Support-Micro Guard Product DataDocument13 pagesWood Support-Micro Guard Product DataM. Murat ErginNo ratings yet

- Professional Education LET Reviewer 150 Items With Answer KeyDocument14 pagesProfessional Education LET Reviewer 150 Items With Answer KeyAngel Salinas100% (1)

- Curs FinalDocument8 pagesCurs FinalandraNo ratings yet

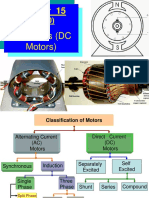

- Chapter 15.1.2.3 DC Drives PPT II Spring 2012Document56 pagesChapter 15.1.2.3 DC Drives PPT II Spring 2012Muhammad Saqib Noor Ul IslamNo ratings yet

- A Level Biology A Core Practical 10 - Ecology InvestigationDocument6 pagesA Level Biology A Core Practical 10 - Ecology InvestigationAlfred SangNo ratings yet

- Question Bank 1st UnitDocument2 pagesQuestion Bank 1st UnitAlapati RajasekharNo ratings yet

- Modern Guide To Plo ExtractDocument24 pagesModern Guide To Plo ExtractSteve ToddNo ratings yet

- HFAss 6Document1 pageHFAss 6Taieb Ben ThabetNo ratings yet

- Glazing Risk AssessmentDocument6 pagesGlazing Risk AssessmentKaren OlivierNo ratings yet

- A Review of Charging Algorithms For Nickel Andlithium - BattersehDocument9 pagesA Review of Charging Algorithms For Nickel Andlithium - BattersehRaghavendra KNo ratings yet

- All About Immanuel KantDocument20 pagesAll About Immanuel KantSean ChoNo ratings yet

- Automate Premium ApplicationDocument180 pagesAutomate Premium ApplicationpaulvaihelNo ratings yet

- Ooip Volume MbeDocument19 pagesOoip Volume Mbefoxnew11No ratings yet

- Improving Orientation With Yoked PrismDocument2 pagesImproving Orientation With Yoked PrismAlexsandro HelenoNo ratings yet

- Numerical Study of Depressurization Rate During Blowdown Based On Lumped Model AnalysisDocument11 pagesNumerical Study of Depressurization Rate During Blowdown Based On Lumped Model AnalysisamitNo ratings yet

- Transportation Research Part C: Xiaojie Luan, Francesco Corman, Lingyun MengDocument27 pagesTransportation Research Part C: Xiaojie Luan, Francesco Corman, Lingyun MengImags GamiNo ratings yet

- PrestressingDocument14 pagesPrestressingdrotostotNo ratings yet

- Ramana Cell: +91 7780263601 ABAP Consultant Email Id: Professional SummaryDocument4 pagesRamana Cell: +91 7780263601 ABAP Consultant Email Id: Professional SummaryraamanNo ratings yet

- 2 Wheel Drive Forklift For Industry WarehousesDocument5 pages2 Wheel Drive Forklift For Industry WarehousesSuyog MhatreNo ratings yet

- Pugh ChartDocument1 pagePugh Chartapi-92134725No ratings yet

- 20years Cultural Heritage Vol2 enDocument252 pages20years Cultural Heritage Vol2 enInisNo ratings yet

- T2 Homework 2Document3 pagesT2 Homework 2Aziz Alusta OmarNo ratings yet