International Journal of Engineering Research & Technology (IJERT)

ISSN: 2278-0181

Vol. 2 Issue 8, August - 2013

Lyrics Generation Using N-Gram Technology

Shivaji Arjun Kakad¹, Prof. A. R. Babhulgaonkar², Prof. Y. N. Patil³

¹,³ Department of Computer Engineering, ² Department of Information Technology,

Dr. BAT University, Lonere, MH, India

Abstract- In this paper we use the N-gram model from Natural Language Processing to predict the next word. The user gives a single word as input and the system produces lyrics for it; in this way we generate the lyrics of a song. To do this we use several techniques, such as N-grams, smoothing and discounting. We use a corpus of words and predict a word from the previous one word, two words, three words, and so on. We also describe other algorithms for assigning words to classes based on the frequency of their co-occurrence with other words.

Keywords- Corpus, Discounting, Lyrics, N-gram model, Natural Language Processing, Smoothing.

INTRODUCTION

Nowadays the use of Natural Language Processing is increasing rapidly. Given the beginning of a sentence, a reader can usually guess that a very likely next word is call, or international, or phone, but probably not the. We formalize this idea of word prediction with probabilistic models called N-grams, which predict the next word from the previous N-1 words; similarly, (N-1)-grams predict the next word from the previous N-2 words, and so on. Such statistical models of word sequences are also called language models or LMs. Computing the probability of the next word will turn out to be closely related to computing the probability of a sequence of words.

In a number of natural language processing tasks, we face the problem of recovering a string of English words after it has been garbled by passage through a noisy channel. To tackle this problem successfully, we must be able to estimate the probability with which any particular string of English words will be presented as input to the noisy channel. In this paper, we discuss a method for making such estimates. We also discuss the related topic of assigning words to classes according to statistical behavior in a large body of text. We first review the concept of a language model and give a definition of n-gram models. In the next section, we look at the subset of n-gram models in which the words are divided into classes. We show that for n = 2 the maximum likelihood assignment of words to classes is equivalent to the assignment for which the average mutual information of adjacent classes is greatest. Finding an optimal assignment of words to classes is computationally hard, but we describe two algorithms for finding a suboptimal assignment. We apply mutual information to two other forms of word clustering. First, we use it to find pairs of words that function together as a single lexical entity. Then, by examining the probability that two words will appear within a reasonable distance of one another, we use it to find classes that have some loose semantic coherence. In describing our work, we draw freely on terminology and notation from the mathematical theory of communication. The reader who is unfamiliar with this field or who has allowed his or her facility with some of its concepts to fall into disrepair may profit from a brief perusal of Feller (1950) and Gallager (1968). In the first of these, the reader should focus on conditional probabilities and on Markov chains; in the second, on entropy and mutual information.

Whether estimating probabilities of next words or of whole sequences, the N-gram model is one of the most important tools in speech and language processing. N-grams are essential in any task in which we have to identify words in noisy, ambiguous input. In speech recognition, for example, the input speech sounds are very confusable and many words sound extremely similar.

Using the n-gram algorithm we can predict the next word from the previous words. In general, the accuracy of the output increases as we increase the number of previous words used, provided the corpus is large enough to give reliable counts for the longer histories. Here we have used one previous word, i.e. the bigram model, to get the output.


Probabilities are based on counting things. Before we do calculations for probabilities, we need to decide what we are going to count. Counting of things in natural language is based on a corpus (plural corpora), an on-line collection of text or speech. Let's look at two popular corpora, Brown and Switchboard. The Brown Corpus is a 1 million word collection of samples from 500 written texts from different genres (newspaper, novels, non-fiction, academic, etc.), assembled at Brown University.
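As a minimal sketch of this counting step, the Python fragment below counts word tokens and word types. The corpus string `toy_corpus` and the helper `count_words` are hypothetical names chosen for illustration, and plain whitespace tokenization is assumed; a real corpus such as Brown would need more careful tokenization.

```python
from collections import Counter

# Hypothetical toy corpus used only for illustration (not taken from the paper).
toy_corpus = "i have to go to mumbai i have to go"

def count_words(text):
    """Return a Counter of word tokens using lowercase whitespace tokenization."""
    return Counter(text.lower().split())

unigram_counts = count_words(toy_corpus)
num_tokens = sum(unigram_counts.values())   # total number of running words
num_types = len(unigram_counts)             # number of distinct words (vocabulary size V)
```

Here `num_tokens` is the number of running words and `num_types` is the vocabulary size, the two quantities that the later probability estimates rely on.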

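To compute the probability of an entire sequence, one thing we can do is to decompose it using the chain rule of probability:

$$P(X_1 \ldots X_n) = P(X_1)\,P(X_2 \mid X_1)\,P(X_3 \mid X_1^2) \ldots P(X_n \mid X_1^{n-1}) = \prod_{k=1}^{n} P(X_k \mid X_1^{k-1})$$

Applying the chain rule to words, we get:

$$P(w_1^n) = P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_1^2) \ldots P(w_n \mid w_1^{n-1}) = \prod_{k=1}^{n} P(w_k \mid w_1^{k-1})$$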

RELATED WORK

In this section we explain the background of the Natural Language Processing model that we have used, i.e. the N-gram model.


2.1 OVERVIEW

Here we introduce cleverer ways of estimating the probability of a word w given a history h, or the probability of an entire word sequence W. Let's start with a little formalizing of notation.

In order to represent the probability of a particular random variable $X_i$ taking on the value "the", or $P(X_i = \text{the})$, we will use the simplification $P(\text{the})$. We'll represent a sequence of N words either as $w_1 \ldots w_n$ or $w_1^n$. For the joint probability of each word in a sequence having a particular value, $P(X = w_1, Y = w_2, Z = w_3, \ldots)$, we'll use $P(w_1, w_2, \ldots, w_n)$. Now we compute probabilities of entire sequences like $P(w_1, w_2, \ldots, w_n)$.

2.2 N-GRAM MODEL

The intuition of the N-gram model is that instead of computing the probability of a word given its entire history, we will approximate the history by just the last few words.

The bigram model, for example, approximates the probability of a word given all the previous words, $P(w_n \mid w_1^{n-1})$, by the conditional probability given only the preceding word, $P(w_n \mid w_{n-1})$. This assumption that the probability of a word depends only on the previous word is called a Markov assumption. The N-gram model is one of the most famous models for prediction of the next word. Markov models are the class of probabilistic models that assume that we can predict the probability of some future unit without looking too far into the past. We can generalize the bigram (which looks one word into the past) to the trigram (which looks two words into the past) and thus to the N-gram (which looks N-1 words into the past). Thus the general equation for this N-gram approximation to the conditional probability of the next word in a sequence is:

$$P(w_n \mid w_1^{n-1}) \approx P(w_n \mid w_{n-N+1}^{n-1})$$

Probability of complete word sequence (bigram case):

$$P(w_1^n) \approx \prod_{k=1}^{n} P(w_k \mid w_{k-1})$$

For the general case, Maximum Likelihood Estimation (MLE) of the N-gram parameters:

$$P(w_n \mid w_{n-N+1}^{n-1}) = \frac{C(w_{n-N+1}^{n-1}\, w_n)}{C(w_{n-N+1}^{n-1})}$$

[Figure 1: Source-Target Model. The source model Pr(W) and the target model Pr(X|W) combine as Pr(W) * Pr(X|W) = Pr(W,X).]
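The bigram case (N = 2) of the MLE formula can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation: the function names `train_bigram_mle` and `bigram_mle` and the toy token list are hypothetical.

```python
from collections import Counter

def train_bigram_mle(tokens):
    """Return (unigram_counts, bigram_counts) for a list of tokens."""
    unigram_counts = Counter(tokens)
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    return unigram_counts, bigram_counts

def bigram_mle(prev, word, unigram_counts, bigram_counts):
    """MLE estimate P(word | prev) = C(prev word) / C(prev); 0.0 if prev is unseen."""
    if unigram_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, word)] / unigram_counts[prev]

# Hypothetical toy data, used only to exercise the functions.
tokens = "i have to go to mumbai i have to go".split()
uni, bi = train_bigram_mle(tokens)
p_have_given_i = bigram_mle("i", "have", uni, bi)   # C(i have) / C(i)
p_go_given_to = bigram_mle("to", "go", uni, bi)     # C(to go) / C(to)
```

Using the unigram count as the denominator follows the formula directly. One consequence is that the final token of the corpus has no successor, so the probabilities conditioned on that word sum to slightly less than one unless an end-of-sequence marker is added.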


The following table shows bigram statistics from a piece of a bigram grammar from the Berkeley Restaurant Project. Note that the majority of the values are zero. In fact, we have chosen the sample words to cohere with each other; a matrix selected from a random set of seven words would be even more sparse.

[Table: rows and columns are the words i, have, to, go, mumbai.]
Table 1 Bigram probabilities for five words.

2.3 UNIGRAM, BIGRAM, TRIGRAM

In the unigram model, we use only the word itself for prediction, i.e. zero previous words. The bigram model uses one previous word and the trigram model uses two previous words. In general, an N-gram model uses N-1 previous words. The accuracy of prediction depends on the value of N.

So we have to increase N to predict the next word more accurately. Using this technique we have implemented a module for lyrics generation by predicting the next word.

[Figure 2: N-Gram Models. Labels: Root; Unigram Counts N[u]; Bigram Counts N[u,v]; Trigram Counts N[u,v,w].]

Word     Count
i        2533
have     927
to       2417
go       746
mumbai   158
..       ..
Table 2 Number of counts in corpus for each word.

2.4 MARKOV MODEL

A bigram model is also called a first-order Markov model because it has one element of memory (one token in the past). Markov models are essentially weighted FSAs, i.e., the arcs between states have probabilities. The states in the FSA are words. Markov models are also used in POS (Part Of Speech) tagging, in which a POS tag is assigned to each word in the sentence.

EVALUATION OF N-GRAM

The correct way to evaluate the performance of a language model is to embed it in an application and measure the total performance of the application. Such end-to-end evaluation, also called in vivo evaluation, is the only way to know if a particular improvement in a component is really going to help the task at hand. Thus for speech recognition, we can compare the performance of two language models by running the speech recognizer twice, once with each language model, and seeing which gives the more accurate transcription. Below we describe some concepts related to our work.

3.1 ENTROPY

Natural language can be viewed as a sequence of random variables (a stochastic process), where each random variable corresponds to a word. Based on certain theorems and assumptions (stationarity) about the stochastic process, we have

$$H(L) = \lim_{n \to \infty} -\frac{1}{n} \log p(w_1 w_2 \ldots w_n)$$

Strictly, we only have an estimate $q(w_1 w_2 \ldots w_n)$, which is based on our language model, and we don't know what the true probability of the words, $p(w_1 w_2 \ldots w_n)$, is.
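The limit in the entropy formula cannot be evaluated directly, but the quantity inside it can be computed for a finite word sequence under a model estimate q. The sketch below does this for a bigram model. The probability table `toy_q`, the start symbol `<s>` and the function name are assumptions made for illustration, and base-2 logarithms are assumed so that the result is in bits per word.

```python
import math

def per_word_neg_log_prob(words, q, start="<s>"):
    """Return -(1/n) * log2 q(w1..wn) for a bigram model stored as q[(prev, word)]."""
    total_log2 = 0.0
    prev = start
    for word in words:
        # A missing bigram raises KeyError; a smoothed model would avoid this.
        total_log2 += math.log2(q[(prev, word)])
        prev = word
    return -total_log2 / len(words)

# Hypothetical bigram probabilities, chosen so the arithmetic is easy to follow.
toy_q = {
    ("<s>", "i"): 0.5,
    ("i", "have"): 0.25,
    ("have", "to"): 0.5,
    ("to", "go"): 0.25,
}
bits_per_word = per_word_neg_log_prob(["i", "have", "to", "go"], toy_q)
```

The log probabilities of the four bigrams are -1, -2, -1 and -2, so the sequence costs 6 bits in total, or 1.5 bits per word.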


3.2 CROSS ENTROPY

Cross entropy is used when we don't know the true probability distribution that generated the observed data (natural language). The cross entropy $H(p, q)$ provides an upper bound on the entropy $H(p)$. We have

$$H(p, q) = \lim_{n \to \infty} -\frac{1}{n} \log q(w_1 w_2 \ldots w_n)$$

This formula is extremely similar to the formula for entropy. Therefore, we often say entropy to mean cross entropy.

3.3 PERPLEXITY

Unfortunately, end-to-end evaluation is often very expensive; evaluating a large speech recognition test set, for example, takes hours or even days. Thus we would like a metric that can be used to quickly evaluate potential improvements in a language model. Perplexity is the most common evaluation metric for N-gram language models. Perplexity is defined as

$$PP(W) = 2^{H(W)}$$

where $H(W)$ is the cross entropy of the sequence W. With base-2 logarithms, this quantity is the same as

$$PP(W) = q(w_1 w_2 \ldots w_n)^{-\frac{1}{n}}$$

A model that uses more context typically assigns a higher probability to the test data and so has a lower perplexity. Therefore, for perplexity: Trigram < Bigram < Unigram.

SMOOTHING ALGORITHMS

There are various smoothing algorithms in natural language processing. These techniques are useful in the N-gram model: they are used when zero counts in the corpus lead to zero probabilities and to overflow or underflow problems in the calculations.

4.1 ADD-ONE SMOOTHING

One way to smooth is to add a count of one to every bigram. In order for the result to still be a probability distribution, all probabilities need to sum to one, so we add the number of word types to the denominator (i.e., we added one to every type of bigram, so we need to account for all our numerator additions). This smoothing is therefore called add-one smoothing.

$$P^*(w_n \mid w_{n-1}) = \frac{C(w_{n-1}\, w_n) + 1}{C(w_{n-1}) + V}$$

where V is the total number of word types, i.e. the vocabulary size.

4.2 BACK OFF

Only use a lower-order model when data for the higher-order model is unavailable (i.e. the count is zero). Recursively back off to weaker models until data is available. This smoothing is called back off.

4.3 INTERPOLATION

Interpolation is another smoothing technique. It linearly combines the estimates of N-gram models of increasing order:

$$\hat{P}(w_n \mid w_{n-2}, w_{n-1}) = \lambda_1 P(w_n \mid w_{n-2}, w_{n-1}) + \lambda_2 P(w_n \mid w_{n-1}) + \lambda_3 P(w_n)$$

where $\sum_i \lambda_i = 1$. Learn proper values for the $\lambda_i$ by training to (approximately) maximize the likelihood of a held-out dataset.

DISCOUNTING ALGORITHMS

Another way of viewing smoothing is as discounting: lowering non-zero counts to get the probability mass we need for the zero-count items. The discounting factor can be defined as the ratio of the smoothed count to the MLE count. Jurafsky and Martin show that add-one smoothing can discount probabilities by a factor of 8.

Discounting reduces the probability mass of seen events and redistributes the subtracted amount to unseen events. Laplace (add-one) smoothing is a type of discounting method; it is simple, but doesn't work well in practice. Better discounting options are 1) Good-Turing, 2) Witten-Bell, 3) Kneser-Ney. Advanced smoothing techniques are usually a mixture of [discounting + back off] or [discounting + interpolation].
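Add-one smoothing and the discount ratio can be sketched together in Python. The function names and the toy counts are hypothetical. The adjusted count c* = (c + 1) * C(prev) / (C(prev) + V) used below is the usual way of expressing the add-one estimate as a count, so that the discount is the ratio c* / c.

```python
def add_one_prob(bigram_count, prev_count, vocab_size):
    """Add-one estimate P*(w | prev) = (C(prev w) + 1) / (C(prev) + V)."""
    return (bigram_count + 1) / (prev_count + vocab_size)

def discount_ratio(bigram_count, prev_count, vocab_size):
    """Ratio of the add-one adjusted count c* to the original count c (requires c > 0)."""
    adjusted = (bigram_count + 1) * prev_count / (prev_count + vocab_size)
    return adjusted / bigram_count

# Hypothetical toy values: C(prev w) = 3, C(prev) = 8, V = 4.
p_seen = add_one_prob(3, 8, 4)      # (3 + 1) / (8 + 4)
p_unseen = add_one_prob(0, 8, 4)    # (0 + 1) / (8 + 4)
d = discount_ratio(3, 8, 4)         # c* = 4 * 8 / 12, divided by c = 3
```

With these values the seen bigram keeps a probability of 1/3 instead of the MLE value 3/8, and each unseen bigram receives 1/12. The discount ratio is below one, which reflects the mass moved from seen to unseen events.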


5.1 WITTEN-BELL DISCOUNTING

Instead of simply adding one to every n-gram, estimate the probability of an unseen bigram $w_{i-1} w_i$ from how likely $w_{i-1}$ is to start any bigram. Words that begin lots of different bigrams lead to higher unseen-bigram probabilities. Non-zero bigrams are discounted in essentially the same manner as zero-count bigrams.

Here we use this algorithm for the calculation of bigram probabilities.
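A minimal sketch of bigram Witten-Bell discounting is given below, using the common textbook formulation rather than the authors' code. For a context word with N bigram tokens, T seen bigram types and Z = V - T unseen types, a seen bigram gets c / (N + T) and each unseen bigram gets T / (Z (N + T)). The function name, the toy corpus, the uniform fallback for a context that was never seen, and the requirement that queried words belong to `vocab` are all assumptions.

```python
from collections import Counter, defaultdict

def witten_bell_bigram(tokens, vocab):
    """Return a function prob(prev, word) using Witten-Bell discounting for bigrams."""
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    followers = defaultdict(set)      # distinct word types seen after each word
    context_total = Counter()         # number of bigram tokens starting with each word
    for (prev, word), count in bigram_counts.items():
        followers[prev].add(word)
        context_total[prev] += count

    def prob(prev, word):
        n = context_total[prev]                 # N(prev)
        t = len(followers.get(prev, ()))        # T(prev): seen bigram types
        z = len(vocab) - t                      # Z(prev): unseen bigram types
        if n == 0:
            return 1.0 / len(vocab)             # assumption: uniform for an unseen context
        count = bigram_counts[(prev, word)]
        if count > 0:
            return count / (n + t)              # discounted probability of a seen bigram
        return t / (z * (n + t))                # equal share of the reserved mass
    return prob

# Hypothetical toy corpus; every queried word is assumed to be in vocab.
tokens = "i have to go to mumbai i have to go".split()
vocab = set(tokens)
prob = witten_bell_bigram(tokens, vocab)
p_seen = prob("to", "go")       # seen twice after "to"
p_unseen = prob("to", "i")      # never seen after "to"
```

In this toy corpus the word "to" starts three bigram tokens of two types, so the seen bigrams share 3/5 of the mass and the three unseen bigrams share the remaining 2/5 equally.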

IMPLEMENTATION

During the implementation of the N-gram model we ran into underflow issues, caused by the large number of zeros in the database. To deal with the zeros, we smoothed Table 1 using the add-one smoothing algorithm explained in the previous section; the result is shown in Table 4.

Always handle probabilities in log space to avoid underflow. Notice that multiplication in linear space becomes addition in log space, which is good because addition is faster than multiplication. Therefore we change the multiplication into an addition, as given below:

$$p_1 \cdot p_2 \cdot p_3 \cdot p_4 = \exp(\log p_1 + \log p_2 + \log p_3 + \log p_4)$$
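The effect of working in log space can be seen in a short Python sketch. The list `probs` is a hypothetical set of one hundred small word probabilities; multiplying them directly underflows in double precision, while the sum of their logarithms stays finite.

```python
import math

def product_in_log_space(probabilities):
    """Return the log of the product of the probabilities by summing their logs."""
    return sum(math.log(p) for p in probabilities)

probs = [1e-5] * 100                        # hypothetical: 100 small word probabilities
naive_product = math.prod(probs)            # underflows to 0.0 in double precision
log_product = product_in_log_space(probs)   # finite: about 100 * log(1e-5)
```

Note that `math.log` raises an error for a probability of exactly zero, which is one more reason to smooth the probability table before scoring sequences.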

In the implementation of the N-gram model we have created four tables: the count of each word, the count of each word after the previous word, the unsmoothed probabilities of each word, and finally the probabilities after smoothing.

The following table shows the count of each word after the previous word.

[Table: rows and columns are the words i, have, to, go, mumbai. Non-zero counts by row: i: 11, 1090, 14; have: 786; to: 13, 866; go: 471, 45; mumbai: 81.]
Table 3 Number of counts with previous word.

The problem with the above table is that it contains a large number of zeros, so underflow occurs while calculating probabilities.

[Table: rows and columns are the words i, have, to, go, mumbai.]
Table 4 Probabilities after smoothing.

This is the last table, which shows the probability of a word given the previous words. The number of previous words depends on the order of the N-gram model. Using this table we can find the next word, and with it we have implemented a model for lyrics generation that is based entirely on the above model.

Output Window 1 shows where to insert the input.

Here we have shown two output windows: one for getting the input and another for generating the output. During processing we have used the above four tables and the n-gram model to generate the lyrics of a song.
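The generation step can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes a hypothetical toy corpus, chooses each next word by sampling in proportion to the bigram counts of the previous word, and uses a fixed random seed so that runs are repeatable. The names `build_bigram_table` and `generate_lyrics` are made up for this example.

```python
import random
from collections import Counter, defaultdict

def build_bigram_table(tokens):
    """Map each word to a Counter of the words that follow it in the corpus."""
    table = defaultdict(Counter)
    for prev, word in zip(tokens, tokens[1:]):
        table[prev][word] += 1
    return table

def generate_lyrics(start_word, table, length=8, seed=0):
    """Generate up to `length` words, sampling each next word by its bigram count."""
    rng = random.Random(seed)
    words = [start_word]
    while len(words) < length:
        followers = table.get(words[-1])
        if not followers:                 # dead end: the last word has no known successor
            break
        candidates = list(followers.keys())
        weights = list(followers.values())
        words.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(words)

# Hypothetical toy corpus standing in for a lyrics corpus.
corpus = "i have to go to mumbai i have to go".split()
table = build_bigram_table(corpus)
line = generate_lyrics("i", table, length=6)
```

Sampling by count keeps the output varied; always taking the most frequent successor would be a simpler alternative, but it tends to loop through the same few words.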

Output Window 2 shows the generated song for a word.

CONCLUSION

Thus, in this paper, we have created the lyrics of new songs using the n-gram technique. We focused on the applicability of n-gram co-occurrence measures for the prediction of the next word. The N-gram model is one of the most famous models for prediction of the next word. In the model, the n-gram reference distribution is simply defined as the product of the probabilities of selecting lexical entries with machine learning features of word and POS N-grams. For our application you have to insert two words as input: one is the song title and the other is the starting word of the song. The application then creates the lyrics of the song for the word that you have entered.

ACKNOWLEDGEMENT

The author would like to thank Dr. A. R. Babhulgaonkar for his guidance on the N-gram model, with which we have implemented this work. We would also like to thank Dr. A. W. Kiwelekar, head of the Department of Computer Engineering, and the other staff.

REFERENCES

[1] Daniel Jurafsky (Stanford University) and James H. Martin (University of Colorado at Boulder). Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition. Second Edition.

[2] Thorsten Brants, Ashok C. Popat, Peng Xu, Franz J. Och, and Jeffrey Dean. 2007. Large language models in machine translation.

[3] Marcello Federico and Mauro Cettolo. 2007. Efficient handling of n-gram language models for statistical machine translation. In Proceedings of the Second Workshop on Statistical Machine Translation.

[4] Marcello Federico and Mauro Cettolo. 2007. Efficient handling of n-gram language models for statistical machine translation. In Proceedings of the Second Workshop on Statistical Machine Translation.

[5] David Guthrie and Mark Hepple. 2010. Storing the web in memory: space efficient language models with constant time retrieval. In Proceedings of the Conference on Empirical Methods in Natural Language Processing.

[6] Boulos Harb, Ciprian Chelba, Jeffrey Dean, and Sanjay Ghemawat. 2009. Back-off language model compression. In Proceedings of Interspeech.

[7] Zhifei Li and Sanjeev Khudanpur. 2008. A scalable decoder for parsing-based machine translation with equivalent language model state maintenance. In Proceedings of the Second Workshop on Syntax and Structure in Statistical Translation.

[8] Abby Levenberg and Miles Osborne. 2009. Stream-based randomised language models for SMT. In Proceedings of the Conference on Empirical Methods in Natural Language Processing.

[9] Paolo Boldi and Sebastiano Vigna. 2005. Codes for the world wide web. Internet Mathematics.

You might also like

- Guidelines Colleges Library PDFDocument4 pagesGuidelines Colleges Library PDFbhaveshlibNo ratings yet

- Welcome To Andhra University, Visakhapatnam, India - 530 003Document3 pagesWelcome To Andhra University, Visakhapatnam, India - 530 003bhaveshlibNo ratings yet

- Consolidated LMC Data 22.02.2017Document31 pagesConsolidated LMC Data 22.02.2017bhaveshlibNo ratings yet

- Gharda Institute of Technology Mail - LokrajyaDocument1 pageGharda Institute of Technology Mail - LokrajyabhaveshlibNo ratings yet

- Fees and Charges For Debit CardDocument2 pagesFees and Charges For Debit CardSanjeev SharmaNo ratings yet

- Internet - Boon or Bane?: in FavorDocument4 pagesInternet - Boon or Bane?: in FavorbhaveshlibNo ratings yet

- Data Scientists Earning More Than CAs, Engineers - The Economic TimesDocument2 pagesData Scientists Earning More Than CAs, Engineers - The Economic TimesbhaveshlibNo ratings yet

- Naac Git LibraryDocument3 pagesNaac Git LibrarybhaveshlibNo ratings yet

- Branding and Marketing Your LibraryDocument7 pagesBranding and Marketing Your LibrarybhaveshlibNo ratings yet

- Week1-27687 636130119690314304Document1 pageWeek1-27687 636130119690314304bhaveshlibNo ratings yet

- TestsDocument3 pagesTestsbhaveshlibNo ratings yet

- NPTEL SheduleDocument10 pagesNPTEL ShedulebhaveshlibNo ratings yet

- Librarianship in Comfort ZoneDocument1 pageLibrarianship in Comfort ZonebhaveshlibNo ratings yet

- All Dept-Indian Journals List-2016Document814 pagesAll Dept-Indian Journals List-2016bhaveshlibNo ratings yet

- E&tc CBGSDocument1 pageE&tc CBGSbhaveshlibNo ratings yet

- SR No. Name of StudentDocument6 pagesSR No. Name of StudentbhaveshlibNo ratings yet

- Raviraj Enterprises: Date: 23/02/2016 To, Gharda Institute of Technology ChiplunDocument1 pageRaviraj Enterprises: Date: 23/02/2016 To, Gharda Institute of Technology ChiplunbhaveshlibNo ratings yet

- Civil Engineering Semester VII (List of Recommended Books As Per Mumbai University Syllabus)Document2 pagesCivil Engineering Semester VII (List of Recommended Books As Per Mumbai University Syllabus)bhaveshlibNo ratings yet

- Gmail - LIPS 2016 - Conference Announcement and Call For PaperDocument4 pagesGmail - LIPS 2016 - Conference Announcement and Call For PaperbhaveshlibNo ratings yet

- A Rebuke To India's Prime Minister Narendra ModiDocument3 pagesA Rebuke To India's Prime Minister Narendra ModibhaveshlibNo ratings yet

- Conference SNDTDocument8 pagesConference SNDTbhaveshlibNo ratings yet

- Scan 0001Document2 pagesScan 0001bhaveshlibNo ratings yet

- Quote For GITDocument3 pagesQuote For GITbhaveshlibNo ratings yet

- Chemical Engineers ScopeDocument3 pagesChemical Engineers ScopebhaveshlibNo ratings yet

- Survey Manet VanetDocument7 pagesSurvey Manet VanetbhaveshlibNo ratings yet

- Survey Paper Is 2013Document6 pagesSurvey Paper Is 2013bhaveshlibNo ratings yet

- Design, Development and Testing of An Impeller ofDocument6 pagesDesign, Development and Testing of An Impeller ofbhaveshlibNo ratings yet

- PP 172 181 SameerDocument10 pagesPP 172 181 SameerbhaveshlibNo ratings yet

- Cost - Reduaction - in File - Manufacturing PDFDocument5 pagesCost - Reduaction - in File - Manufacturing PDFbhaveshlibNo ratings yet

- Visualization Based Sequential Pattern Text MiningDocument6 pagesVisualization Based Sequential Pattern Text MiningInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Solve Me If You CanDocument6 pagesSolve Me If You CanSujithNo ratings yet

- Feature Blast: Cyberstation and Web - Client V1.9Document6 pagesFeature Blast: Cyberstation and Web - Client V1.9Ricardo Liberona UrzuaNo ratings yet

- Delphi Com API Do WindowsDocument21 pagesDelphi Com API Do WindowsWeder Ferreira da SilvaNo ratings yet

- Lua Short Ref 51Document7 pagesLua Short Ref 51MaxiJaviNo ratings yet

- xv6 Rev5Document87 pagesxv6 Rev5Hirvesh MunogeeNo ratings yet

- MCA211 Software TestingDocument2 pagesMCA211 Software Testingbksharma7426No ratings yet

- Opencv TutorialsDocument451 pagesOpencv TutorialsAnto PadaunanNo ratings yet

- Programming in C VivaDocument14 pagesProgramming in C VivaMarieFernandes100% (1)

- Infoplc Net Sitrain 06 SymbolicDocument14 pagesInfoplc Net Sitrain 06 SymbolicBijoy RoyNo ratings yet

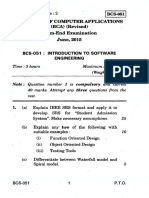

- BCS 051Document2 pagesBCS 051AnkitSinghNo ratings yet

- r05410408 Digital Image ProcessingDocument5 pagesr05410408 Digital Image ProcessingandhracollegesNo ratings yet

- 1 Sem M.tech SyllabusDocument9 pages1 Sem M.tech SyllabusAbhay BhagatNo ratings yet

- Introduction To Software EngineeringDocument25 pagesIntroduction To Software EngineeringAayush MalikNo ratings yet

- TE050 Systems Integration Test ScriptDocument9 pagesTE050 Systems Integration Test Scriptnahor02No ratings yet

- LinuxGym AnswersDocument16 pagesLinuxGym AnswersNhânHùngNguyễn100% (1)

- Exam 2004Document20 pagesExam 2004kib6707No ratings yet

- IT Sample PaperDocument12 pagesIT Sample PaperTina GroverNo ratings yet

- An Overview of Data Management: Creating The Great Business LeadersDocument90 pagesAn Overview of Data Management: Creating The Great Business LeadersVelisa Diani PutriNo ratings yet

- Manage DataDocument259 pagesManage Datamiguel m.No ratings yet

- Amazon Web Services (AWS) : OverviewDocument6 pagesAmazon Web Services (AWS) : Overviewplabita borboraNo ratings yet

- Using Nest SQL Queries in Maximo 7.1Document2 pagesUsing Nest SQL Queries in Maximo 7.1jverly1901No ratings yet

- CitectSCADA v7.0 - Cicode Reference GuideDocument862 pagesCitectSCADA v7.0 - Cicode Reference GuidePatchworkFishNo ratings yet

- Quiz 4Document5 pagesQuiz 4Surendran RmcNo ratings yet

- HFM FAQsDocument14 pagesHFM FAQsSyed Layeeq Pasha100% (1)

- X7 Access Control System User Manual 20121115 PDFDocument15 pagesX7 Access Control System User Manual 20121115 PDFSudesh ChauhanNo ratings yet

- p181 - Mars Exploration RoverDocument13 pagesp181 - Mars Exploration Roverjnanesh582No ratings yet

- Dce SyllabusDocument3 pagesDce SyllabusGajanan BirajdarNo ratings yet

- Fanuc CNC Programing Manual PDFDocument2 pagesFanuc CNC Programing Manual PDFJonna50% (2)

- 3 D Analysis Tools ConversionDocument12 pages3 D Analysis Tools ConversionShadi FadhlNo ratings yet

- CISSP Aide-Mémoire - Table of ContentsDocument7 pagesCISSP Aide-Mémoire - Table of ContentsecityecityNo ratings yet