International Journal of Signal Processing, Image Processing and Pattern Recognition

Vol. 6, No. 4, August, 2013

Human-computer Interaction using Pointing Gesture based on an Adaptive Virtual Touch Screen

Pan Jing1 and Guan Ye-peng1, 2

1. School of Communication and Information Engineering, Shanghai University

2. Key Laboratory of Advanced Displays and System Application, Ministry of Education

ypguan@shu.edu.cn

Abstract

Among the various hand gestures, the pointing gesture is highly intuitive and does not require any a priori assumptions. A major problem for pointing gesture recognition is the difficulty of tracking the pointing fingertip and the unreliability of direction estimation. A novel real-time method is developed for pointing gesture recognition using Kinect-based depth images and skeletal point tracking. An adaptive virtual touch screen is constructed instead of estimating the pointing direction. When a user stands at a certain distance from a large screen and performs pointing gestures, he interacts with the virtual touch screen as if it were right in front of him. The proposed method is suitable for both large and small pointing gestures, and it is not subject to users' characteristics or environmental changes. Comparative experiments show that the proposed approach is robust and efficient in realizing human-computer interaction based on pointing gesture recognition.

Keywords: Pointing gesture; Fingertip tracking; HCI; Kinect; Virtual touch screen

1. Introduction

Human-computer interaction (HCI) is a hot topic in the information technology age. Operators provide commands to, and get results from, an interactive computing system that involves one or more interfaces. With the development of graphical operator interfaces, operators with varying levels of computer skills have been able to use a wide variety of software applications. Recent advancements in HCI provide more intuitive and natural ways to interact with computing devices.

There are many HCI modalities, including facial expression, body posture, hand gesture and speech recognition. Among them, hand gesture is intuitive and easy to learn. Wang et al. [1] proposed an automatic system that executes hand gesture spotting and recognition simultaneously based on Hidden Markov Models (HMM). Suk et al. [2] proposed a new method for recognizing hand gestures in a continuous video stream using a dynamic Bayesian network (DBN) model. Van den Bergh et al. [3] introduced a real-time hand gesture interaction system based on a Time-of-Flight (ToF) camera. Due to the diversity of hand gestures, recognition remains limited. By comparison, the pointing gesture, which is the simplest hand gesture and easier to recognize, has attracted much attention. Park and Lee [4] presented a 3D pointing gesture recognition algorithm based on a cascade hidden Markov model (HMM) and a particle filter for mobile robots. Kehl and Gool [5] proposed a multi-view method to estimate the 3D directions of one or both arms. Michael et al. [6] set up two orthogonal cameras, one in top view and one in side view, to detect hand regions, track the finger pointing features, and estimate the pointing directions in 3D space. Most previous pointing gesture recognition methods use the results of face and hand tracking [7] to recognize the pointing direction. However, the recognition rate is limited by unreliable face and hand detection and tracking in 3D space. Another difficult problem is recognizing small pointing gestures, for which the estimated direction is usually wrong.

Body tracking or skeleton tracking with an ordinary camera is not easy and takes extensive development time. Surveys of body and skeleton tracking show that detecting the bone joints of the human body is still a major problem, since the depth of the human cannot be determined with a typical camera. Some researchers have used more than one video camera to detect and determine the depth of the object, but the consequence is that the cost increases and processing slows down due to the increased amount of data. Fortunately, the Kinect sensor makes it possible to acquire the depth and skeleton of operators. The Kinect combines an infrared projector and an infrared camera, used together for depth detection, with one standard visual-spectrum camera used for visual recognition.

Taking both the robustness of a recognition system and the cost of cameras into consideration, some researchers have used the Kinect sensor instead, which provides a higher resolution at a lower cost. The Microsoft Kinect sensor combines depth and RGB cameras in one compact sensor [8, 9]. It is robust to all colors of clothing and to background noise, and provides an easy way for real-time operator interaction [10-14]. There are some freely available libraries that can be used to collect and process data from a Kinect sensor, including skeletal tracking [15, 16].

This paper makes two main contributions. First, a new method to detect the pointing fingertip is proposed; the fingertip, instead of the head-hand line, is used to recognize pointing gestures and interact with a large screen. Second, a scalable and flexible virtual touch screen is constructed, which adjusts adaptively as the operator moves and as the pointing arm extends or contracts. Comparative experimental results show that the developed method is robust and efficient in recognizing pointing gestures and realizing HCI.

The rest of the paper is organized as follows. Section 2 describes the pointing gesture recognition method. Section 3 introduces the construction of the virtual touch screen. Experimental results are given in Section 4, and conclusions are drawn in Section 5.

2. Pointing Gesture Recognition

The input to the proposed method is a depth image acquired by the Kinect sensor, together with its accompanying skeletal map. When several people stand in front of the Kinect, the first task is to determine the real operator. The horizontal view is limited to a certain range, so anyone standing outside this range is ignored. The user standing closest to the Kinect sensor is deemed the operator, and is tracked until he is obscured by another person or leaves the scene.
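The operator selection rule can be illustrated with a minimal Python sketch. The representation of each tracked user as an (x, z) pair, the function name `select_operator` and the range limit `max_abs_x` are assumptions made for illustration; the paper does not specify the range value or the data layout.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical representation: each tracked user is an (x, z) pair in metres,
# where x is the horizontal offset from the sensor axis and z the distance to it.
User = Tuple[float, float]


def select_operator(users: Sequence[User], max_abs_x: float = 1.0) -> Optional[int]:
    """Return the index of the closest user inside the horizontal range, or None."""
    best_index = None
    best_z = float("inf")
    for index, (x, z) in enumerate(users):
        if abs(x) > max_abs_x:  # outside the accepted horizontal range: ignored
            continue
        if z < best_z:  # keep the user nearest to the sensor
            best_index, best_z = index, z
    return best_index


users = [(0.2, 2.5), (-0.1, 1.8), (1.6, 1.0)]
operator = select_operator(users)
```

In this example the third user is nearest to the sensor but stands outside the assumed horizontal range, so the second user is selected.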

2.1. Pointing Hand Segmentation

Human hand tracking and locating is carried out by continuously processing RGB images and depth images of an operator who is performing the pointing gesture to interact with a large screen. The RGB images and depth images are captured by the Kinect sensor, which is fixed in front of the operator. Microsoft Kinect has the ability to track the movements of 24 distinct skeletal joints on the human body, of which the head, hand and elbow joints are used in this experiment.

When the operator holds out a hand to perform a pointing gesture, that hand is closer to the sensor than the other one. The 3D coordinates of the pointing hand joint can be obtained from the skeletal map.

$$H_p = \begin{cases} H_r, & z_r < z_l \\ H_l, & \text{else} \end{cases} \tag{1}$$

where $H_p$ is the pointing hand, $H_r$ refers to the right hand and $H_l$ refers to the left hand, and $z_r$ and $z_l$ are the z coordinates of the right and left hand, respectively.
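As a small illustration of (1), the following Python sketch picks the pointing hand from two joint positions. The tuple layout (x, y, z) and the function name are assumptions for illustration only.

```python
from typing import Tuple

Point3D = Tuple[float, float, float]  # assumed layout: (x, y, z)


def select_pointing_hand(right_hand: Point3D, left_hand: Point3D) -> Tuple[str, Point3D]:
    """Apply Eq. (1): the hand with the smaller z (closer to the sensor) is the pointing hand."""
    z_r, z_l = right_hand[2], left_hand[2]
    if z_r < z_l:
        return "right", right_hand
    # The "else" branch of Eq. (1), which also covers equal depths.
    return "left", left_hand


side, joint = select_pointing_hand((0.3, 0.1, 1.4), (-0.2, -0.3, 1.9))
```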

To capture the hand motion used for controlling the large screen, the hand needs to be separated from the depth image. A depth image records the depth of every pixel of the RGB image. The depth value of the hand joint is obtained through skeleton-to-depth conversion and is taken as a segmentation threshold, which is used to separate the hand region $H_d(i, j)$ from the raw depth image.

$$H_d(i, j) = \begin{cases} 255, & d(i, j) < T_d + e \\ 0, & \text{else} \end{cases}, \quad d(i, j) \in D;\ i = 1, 2, \ldots, m;\ j = 1, 2, \ldots, n \tag{2}$$

where $d(i, j)$ is a pixel of the depth image $D$, $T_d$ is the depth of the pointing hand's joint, $e$ is a small margin around the threshold $T_d$, $m$ is the width of $D$, and $n$ is the height of $D$.
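The thresholding in (2) can be sketched in a few lines of Python with NumPy. This is only an illustration under stated assumptions: the depth image is taken to be a 2D array of depth values, and the function name `segment_hand` is hypothetical.

```python
import numpy as np


def segment_hand(depth: np.ndarray, t_d: float, e: float) -> np.ndarray:
    """Eq. (2): 255 where the depth is below T_d + e, 0 elsewhere."""
    return np.where(depth < t_d + e, 255, 0).astype(np.uint8)


# Toy 2x2 depth image; the hand joint is assumed to lie at depth 1000.
depth = np.array([[900, 1500],
                  [1010, 2000]])
mask = segment_hand(depth, t_d=1000, e=20)
```

Depth maps frequently encode missing readings with a special value such as 0; if that applies, those pixels would pass the test above and may need to be masked out separately.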

2.2. Pointing Fingertip Detection

Most existing methods for pointing gesture recognition use the operator's hand-arm motion to determine the pointing direction. To study hand gestures further, some approaches for fingertip detection have been proposed. In this paper, the pointing fingertip [17-19], which is more precise, is detected instead of the hand to interact with the large screen. The hand's minimum bounding rectangle makes it easy to extract the pointing fingertip. The rules for pointing fingertip detection, in particular for the index fingertip, are as follows:

(1) Extract the tracked hand region and its minimum bounding rectangle using the skeletal information of the hand and the corresponding elbow.

(2) When the operator's hand is pointing to the left, the pointing hand joint's coordinate in the horizontal direction (x coordinate) is less than the corresponding elbow's.

In this case, if the hand joint's coordinate in the vertical direction (y coordinate) is also less than the elbow's and the width of the minimum bounding rectangle is less than its height, the index fingertip moves along the bottom edge of the bounding rectangle.

If the pointing hand joint's x coordinate is larger than the corresponding elbow's and the width of the minimum bounding rectangle is less than its height, the index fingertip moves along the top edge of the bounding rectangle. If the rectangle's width is larger than its height, the index fingertip moves along the left edge of the bounding rectangle.

(3) When the operator's hand is pointing to the right, the pointing hand joint's x coordinate is larger than the corresponding elbow's, and the distinguishing rule is the same as in step (2).

The rules above locate the pointing fingertip in the depth image, and its 3D coordinates can be obtained through depth-to-skeleton conversion.
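Once the rules have named the edge of the bounding rectangle on which the fingertip lies, locating it in the segmented mask is straightforward. The Python sketch below shows only this last step and leaves the choice of edge to the caller. It assumes an axis-aligned bounding rectangle and, when several hand pixels touch the edge, takes their mean position; both choices, like the function name `fingertip_on_edge`, are assumptions rather than details given in the paper.

```python
import numpy as np


def fingertip_on_edge(mask: np.ndarray, edge: str):
    """Return (row, col) of the hand pixel lying on the given bounding-rectangle edge."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None  # empty mask: no hand region
    if edge == "top":
        on_edge = rows == rows.min()
    elif edge == "bottom":
        on_edge = rows == rows.max()
    elif edge == "left":
        on_edge = cols == cols.min()
    elif edge == "right":
        on_edge = cols == cols.max()
    else:
        raise ValueError("edge must be 'top', 'bottom', 'left' or 'right'")
    # Assumption: average the pixels touching the edge to get a single point.
    return int(round(float(rows[on_edge].mean()))), int(round(float(cols[on_edge].mean())))


mask = np.zeros((5, 5), dtype=np.uint8)
mask[1:4, 2:4] = 255  # palm block
mask[2, 0:2] = 255    # finger extending to the left
tip = fingertip_on_edge(mask, "left")
```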

2.3. Pointing Fingertip Tracking

In some cases, the fingertip is detected incorrectly because of interference from the surrounding environment. To avoid false detections, a tracking technique is introduced to track the feature points. A Kalman filter [20-22] is used to record the motion trajectory of the detected fingertip, and the features of pointing gestures (including the fingertip's position and speed) can be extracted through this tracking method.

The motion of the pointing fingertip can be described by a linear dynamic model consisting of a state vector $x(k)$ and a state transition matrix $\Phi(k)$. The state vector, which contains the position and velocity in all three dimensions, is given by (3).

$$x(k) = [x, y, z, v_x, v_y, v_z]^T \tag{3}$$

where $x$, $y$, $z$ are the image coordinates of the detected fingertip, and $v_x$, $v_y$, $v_z$ are its displacements.

The Kalman filter model assumes that the true state at time $k$ evolves from the state at time $(k-1)$ according to (4).

$$x(k) = \Phi(k)\,x(k-1) + W(k) \tag{4}$$

where $\Phi(k)$ is the state transition model, which is applied to the previous state $x(k-1)$, and $W(k)$ is the process noise, which is assumed to be drawn from a white Gaussian noise process with covariance $Q(k)$.

At time $k$, an observation (or measurement) $z(k)$ of the true state $x(k)$ is made according to (5).

$$z(k) = H(k)\,x(k) + V(k) \tag{5}$$

where $H(k)$ is the observation model, which maps the true state space into the observed space, and $V(k)$ is the observation noise, which is assumed to be zero-mean Gaussian white noise with covariance $R(k)$.

In our method, the Kalman filter is initialized with six states and three measurements; the measurements correspond directly to $x$, $y$, $z$ in the state vector.
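A filter with six states and three measurements can be sketched in Python with NumPy as follows. This is a generic constant-velocity Kalman filter written for illustration, not the authors' implementation: the class name, the noise levels `q` and `r`, the initial covariance and the one-frame time step are all assumptions.

```python
import numpy as np


class FingertipKalman:
    """Constant-velocity Kalman filter: states (x, y, z, vx, vy, vz), measurements (x, y, z)."""

    def __init__(self, q: float = 1e-2, r: float = 1.0):
        self.phi = np.eye(6)
        self.phi[:3, 3:] = np.eye(3)  # position += velocity over one frame
        self.h = np.hstack([np.eye(3), np.zeros((3, 3))])  # only position is observed
        self.q = q * np.eye(6)  # assumed process noise covariance Q
        self.r = r * np.eye(3)  # assumed measurement noise covariance R
        self.x = np.zeros(6)  # initial state estimate
        self.p = 1e3 * np.eye(6)  # large initial uncertainty

    def step(self, z) -> np.ndarray:
        """Run one predict/update cycle and return the filtered position."""
        # Predict with the state transition model.
        x_pred = self.phi @ self.x
        p_pred = self.phi @ self.p @ self.phi.T + self.q
        # Update with the new measurement.
        s = self.h @ p_pred @ self.h.T + self.r
        k = p_pred @ self.h.T @ np.linalg.inv(s)
        self.x = x_pred + k @ (np.asarray(z, dtype=float) - self.h @ x_pred)
        self.p = (np.eye(6) - k @ self.h) @ p_pred
        return self.x[:3]


kf = FingertipKalman()
for frame in range(30):
    # Noise-free fingertip moving one unit per frame along x.
    position = kf.step([float(frame), 0.0, 0.0])
```

After a few frames the velocity part of the state approaches the true per-frame displacement, which is what allows an isolated false detection to be smoothed out.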

2.4. Pointing Gestures Recognition

Human motion is a continuous sequence of gestures and non-gestures without clear-cut boundaries. Gesture recognition refers to detecting and extracting meaningful gestures from an input video. How to recognize the pointing gesture is crucial, since the recognition procedure is performed only for detected pointing gestures. When a person makes a pointing gesture, the whole motion can be separated into three phases: non-gesture (the hands and arms drop naturally, so it is unnecessary to find the fingertip), move-hand (the pointing hand is moving and its direction is changing), and point-to (the hand is approximately stationary). Among the three phases, only the point-to phase, that is, the corresponding pointing gesture, is relevant to target selection.

To recognize pointing gestures, the three phases must be distinguished. When the operator moves his hand, the velocity at time $t$ is estimated by (6).

$$v_x = x_t - x_{t-1}, \quad v_y = y_t - y_{t-1}, \quad v_z = z_t - z_{t-1} \tag{6}$$

where $x$, $y$, $z$ represent the position of the pointing fingertip in 3D space. If $v_x$, $v_y$ and $v_z$ all stay within a small range $v_T$, the fingertip is approximately stationary. Let $n$ be the number of consecutive frames for which this stationary state has lasted, with initial value 0:

$$n = \begin{cases} n + 1, & |v_x| < v_T \ \&\ |v_y| < v_T \ \&\ |v_z| < v_T \\ 0, & \text{else} \end{cases} \tag{7}$$

where & is the logical AND operation. When $n$ reaches a certain amount, the operator is taken to be pointing at the interaction target.
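The frame counter in (6) and (7) can be sketched in Python as follows. The class name `PointToDetector` and its parameters are hypothetical, and the comparison is made on the magnitude of each displacement, which is an assumption about how the range $v_T$ is meant to be applied.

```python
from typing import Optional, Tuple

Point3D = Tuple[float, float, float]


class PointToDetector:
    """Counts consecutive near-stationary frames, following Eqs. (6) and (7)."""

    def __init__(self, v_t: float, n_required: int):
        self.v_t = v_t  # displacement threshold v_T
        self.n_required = n_required  # frames needed to declare the point-to phase
        self.previous: Optional[Point3D] = None
        self.n = 0

    def update(self, position: Point3D) -> bool:
        """Feed one fingertip position; return True once the point-to phase is reached."""
        if self.previous is None:
            self.previous = position
            return False
        # Eq. (6): per-axis displacement between consecutive frames.
        displacement = [cur - prev for cur, prev in zip(position, self.previous)]
        self.previous = position
        # Eq. (7): increment while every axis stays below v_T, otherwise reset.
        if all(abs(v) < self.v_t for v in displacement):
            self.n += 1
        else:
            self.n = 0
        return self.n >= self.n_required


detector = PointToDetector(v_t=4.0, n_required=3)
track = [(0, 0, 0), (1, 0, 0), (2, 1, 0), (2, 1, 1), (50, 1, 1), (50, 1, 1)]
flags = [detector.update(p) for p in track]
```

In this toy track the fourth position completes three consecutive small displacements, and the jump at the fifth position resets the counter.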


3. Virtual Touch Screen Construction

3.1. Kinect Coordinate System

When an operator stands in front of the Kinect sensor and interacts with a large screen, the Kinect coordinate system is defined as shown in Figure 1. Axis X points upward, axis Y points rightward and axis Z is perpendicular to the plane of the sensor. The Kinect can capture the depth of any object in its workspace.

3.2. Virtual Touch Screen

Many researchers focus on the human body skeleton, so the hand-arm or hand-eye line is commonly used to determine the pointing direction. In this paper, a virtual touch screen is constructed for human-computer interaction, which does not need to estimate the pointing direction.

The concept of a virtual touch screen was introduced in [23]. There, the distance between the operator and the virtual touch screen remains unchanged; that is, the arm's length is assumed to be constant. Evidently, this is not suitable for different operators. The virtual touch screen constructed in this paper is scalable and flexible. The z coordinate of the virtual screen is defined as the z coordinate of the user's pointing fingertip. The screen therefore moves forward or backward as the pointing arm extends or contracts, which is more natural and suitable for different operators.

The 3D coordinates of the head and the pointing index fingertip are used to construct a virtual touch screen, as shown in Figure 1. Note that the large screen and the Kinect are in the same plane, which means the screen's z coordinate is zero. Assume the large screen is divided into $m \times n$ modules, the x coordinates of the vertical demarcation points are $x_1, x_2, \ldots, x_m$, and the y coordinates of the horizontal demarcation points are $y_1, y_2, \ldots, y_n$. Suppose the corresponding values on the virtual screen are $x_i'$, $y_j'$, $i = 1, 2, \ldots, m$, $j = 1, 2, \ldots, n$.

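[Figure 1: the large screen display model with demarcation points (x1, y1), (x2, y1), ..., (xm-1, y1), (xm, y1) and (x1, y2), ..., (x1, yn-1), (x1, yn); the virtual touch screen with the corresponding points (x1', y1'), ..., (xm', y1'); the center of the face; and the Kinect origin (0, 0, 0) with axis X.]

Figure 1. The Virtual Touch Screen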

$$\frac{x_h - x_i'}{x_h - x_i} = \frac{y_h - y_j'}{y_h - y_j} = \frac{z_h - z'}{z_h - z} \tag{8}$$

where $z$ is 0, $z'$ equals the pointing fingertip's z coordinate $z_f$, and $x_h$, $y_h$ and $z_h$ are the 3D coordinates of the operator's head joint.

The corresponding coordinates on the virtual touch screen can be computed as (9) and (10):

$$x_i' = \frac{(x_h - x_i)(z_f - z_h)}{z_h} + x_h \tag{9}$$

$$y_j' = \frac{(y_h - y_j)(z_f - z_h)}{z_h} + y_h \tag{10}$$

The virtual touch screen moves forward or backward with the movement of the pointing fingertip as well as with the operator's motion. If the operator's pointing fingertip stays in one block of the virtual screen for a while, the corresponding block on the large screen is triggered.
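The construction of the virtual screen and the block lookup can be illustrated with the Python sketch below. It projects each large-screen boundary (at z = 0) onto the plane of the fingertip as seen from the head, using the similar-triangle proportion of (8), and then finds the block that contains the fingertip. Several things are assumptions for illustration: the function names, the use of k + 1 sorted boundary values to delimit k blocks along each axis, the numeric layout in the example, and the requirement that the fingertip lies between the head and the screen.

```python
from bisect import bisect_right
from typing import Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def to_virtual(coord: float, head_coord: float, z_h: float, z_f: float) -> float:
    """Map one large-screen coordinate (plane z = 0) onto the virtual screen (plane z = z_f)."""
    return (head_coord - coord) * (z_f - z_h) / z_h + head_coord


def locate_block(fingertip: Point3D, head: Point3D,
                 x_bounds: Sequence[float],
                 y_bounds: Sequence[float]) -> Optional[Tuple[int, int]]:
    """Return (column, row) of the virtual-screen block containing the fingertip, or None."""
    x_f, y_f, z_f = fingertip
    x_h, y_h, z_h = head
    # Boundaries are assumed sorted in ascending order, with 0 < z_f < z_h.
    vx = [to_virtual(x, x_h, z_h, z_f) for x in x_bounds]
    vy = [to_virtual(y, y_h, z_h, z_f) for y in y_bounds]
    if not (vx[0] <= x_f < vx[-1] and vy[0] <= y_f < vy[-1]):
        return None  # fingertip is outside the virtual screen
    return bisect_right(vx, x_f) - 1, bisect_right(vy, y_f) - 1


# Hypothetical layout: a 2.4 x 1.3 screen split into 3 columns and 2 rows.
x_bounds = [-1.2, -0.4, 0.4, 1.2]
y_bounds = [0.0, 0.65, 1.3]
head = (0.0, 0.5, 2.0)
block = locate_block((0.2, 0.6, 1.5), head, x_bounds, y_bounds)
```

With the fingertip at z = 0 the mapping returns the large-screen coordinate itself, and as the fingertip approaches the head the virtual screen shrinks towards the head position, which is the adaptive behaviour described above.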

4. Experimental Results and Analysis

The experimental evaluation is based on real-world sequences obtained by a Kinect sensor. Kinect for Xbox is compatible with Microsoft Windows 7. The system is developed with OpenNI in Microsoft Visual Studio 2010 using C++ and OpenCV. The proposed method runs on a computer equipped with an Intel Core i3-2120 CPU and 4 GB of RAM. On this system, the average frame rate is 30 Hz.

4.1. Experimental Environment

The real experimental environment is shown in Figure 2. An area is drawn on the wall and divided into six modules, which together are taken as the large screen. To make the experimental results intuitive, a lamp is fixed at the center of each module, six lamps in total. Whether the lamp being pointed at turns on or off is used to test the accuracy of pointing gesture recognition.

The distance between the operator and the Kinect sensor is within 0.8 m to 3.5 m; the large screen's width is 2.4 m and its height is 1.3 m. The demarcation points (x1, y1), ..., (x6, y6) are measured manually. Pointing gestures are recorded from seven volunteers with different heights and postures.

Figure 2. Experimental Environment

4.2. Recognition of Pointing Gestures

Accurate pointing hand detection is a prerequisite for pointing fingertip extraction. The 3D coordinates of the hand joint are obtained from the skeletal image, and its position in the depth image is computed through skeleton-to-depth conversion. The depth value of the hand joint is taken as the threshold $T_d$, and the hand region is extracted from the depth image according to (2). Tests show that setting $e$ to 20 is enough to segment the pointing hand completely.

Some non-hand regions are detected by mistake; these false detection blocks, which do not contain the pointing hand joint, must be removed as follows.

$$H_R = \{R \mid R \ni J_H\} \tag{11}$$

where $R$ refers to the separable regions obtained by threshold segmentation, and $J_H$ is the pointing hand joint.
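One simple way to keep only the region that contains the hand joint is a flood fill started at the joint's pixel. The Python sketch below illustrates this idea under assumptions: 4-connectivity, a binary mask as produced by (2), and a hypothetical function name `keep_hand_region`. It is not taken from the paper, which does not state how the regions are separated.

```python
from collections import deque

import numpy as np


def keep_hand_region(mask: np.ndarray, joint_rc) -> np.ndarray:
    """Keep the 4-connected region of `mask` that contains the joint pixel (row, col)."""
    out = np.zeros_like(mask)
    r0, c0 = joint_rc
    if mask[r0, c0] == 0:
        return out  # the joint is not on any segmented region
    height, width = mask.shape
    out[r0, c0] = 255
    queue = deque([(r0, c0)])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            inside = 0 <= rr < height and 0 <= cc < width
            if inside and mask[rr, cc] and not out[rr, cc]:
                out[rr, cc] = 255  # mark as visited and as part of the hand region
                queue.append((rr, cc))
    return out


mask = np.array([[255, 255, 0, 0],
                 [0, 0, 0, 255],
                 [0, 0, 0, 255]], dtype=np.uint8)
hand = keep_hand_region(mask, (0, 1))
```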

The first row of Figure 3 shows the RGB image and its corresponding depth image, and the second row shows the detected hand region.

The pointing fingertip is extracted with the method described in Section 2.2, as shown in Figure 4. The position of the pointing fingertip in 3D space is obtained through depth-to-skeleton conversion.

Figure 3. Hand Segmentation

Figure 4. Pointing Fingertip Detection with Different Pointing Gestures. (a) Right Hand is Performing Pointing Gesture. (b) Left Hand is the Pointing Hand

As described in Section 2.4, (6) and (7) are used to determine whether the operator performs a pointing gesture. When the operator is pointing at one lamp, his or her fingertip is approximately stationary. According to some statistics, the hand trembling range is within 0 to 4 mm. In addition, many experiments have been done to choose a favorable $n$ for effective pointing gesture recognition.

$$R_p = \frac{M_r}{M_a} \tag{12}$$

where $R_p$ is the pointing gesture recognition rate, $M_r$ is the number of frames recognized as pointing gestures and $M_a$ is the actual number of pointing frames.

Figure 5. Pointing Gesture Recognition Results. (a) Pointing Gesture Recognition Rates with Different n. (b) Execution Time with Different n

It can be seen from Figure 5(a) that setting $v_T$ to 4 mm is applicable. When $n$ is less than three, the recognition is over-sensitive; when $n$ is larger than three, some pointing gestures are not recognized. Based on this analysis, $n$ is chosen as 3, and the program running time is about 98 ms, as shown in Figure 5(b), which satisfies the real-time requirement. If the displacements of the pointing fingertip stay within 0 to 4 mm for 3 consecutive frames, the operator is taken to be pointing at one target.

4.3. Target Selection

Traditionally, most existing methods for target selection use the head-hand line to estimate the position of the pointing target. In this paper, when a pointing gesture is recognized, a scalable and movable virtual touch screen is constructed. If the pointing fingertip is located in one module of the virtual screen, the corresponding lamp on the wall turns on. Figure 6 is a simulation image, which shows that when the operator's fingertip touches module 2 on the virtual screen, the corresponding lamp number 2 on the large screen turns on.

Figure 6. Experimental Results of Target Selection

$$R_c = \frac{N_c}{N_p} \times 100\% \tag{13}$$

where $R_c$ is the correct target selection rate, $N_p$ is the number of times one target is pointed at and $N_c$ is the number of correct target selections.

During the experiment, we found that the pointing fingertip is sometimes detected incorrectly, which directly affects the results of target selection. Therefore, as described in Section 2.3, a Kalman filter is applied to record the motion trajectory of the pointing fingertip and decrease false detections.

Experimental results without and with the Kalman filter are given in Table 1 and Table 2, respectively. A confusion matrix is used to summarize the results of target selection. Each column of the matrix represents the recognized target, each row represents the actual target, and each entry is the average selection rate. L1, L2, L3, L4, L5, L6 refer to the six lamps fixed on the wall, as shown in Figure 6.

Table 1. Results of Target Selection without Kalman Filter

Actual \ Recognized   L1       L2       L3       L4       L5       L6
L1                    96.9%    3.1%     0        0        0        0
L2                    2.2%     97.8%    0        0        0        0
L3                    0        0        99.0%    1.0%     0        0
L4                    0        0        0.4%     99.6%    0        0
L5                    0        0        0        0        96.9%    3.1%
L6                    0        0        0        0        2.2%     97.8%

Table 2. Results of Target Selection with Kalman Filter

Actual \ Recognized   L1       L2       L3       L4       L5       L6
L1                    98.4%    1.6%     0        0        0        0
L2                    0.5%     99.5%    0        0        0        0
L3                    0        0        99.6%    0.4%     0        0
L4                    0        0        0        100%     0        0
L5                    0        0        0        0        98.4%    1.6%
L6                    0        0        0        0        0.5%     99.5%
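The diagonal entries of Table 1 and Table 2 are the correct selection rates for the individual lamps. As a small worked example, the Python sketch below averages them; the variable names are chosen for illustration, and the values are copied from the two tables.

```python
# Diagonal entries (correct selection rates in %) for lamps L1..L6.
without_kalman = [96.9, 97.8, 99.0, 99.6, 96.9, 97.8]  # Table 1
with_kalman = [98.4, 99.5, 99.6, 100.0, 98.4, 99.5]    # Table 2


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


average_without = mean(without_kalman)
average_with = mean(with_kalman)
improvement = average_with - average_without
```

The averages come out at about 98.0% without the Kalman filter and about 99.2% with it, a gain of roughly 1.2 percentage points.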

To further analyze the proposed method, another six volunteers performed HCI based on pointing gestures to control the lamps on the wall. The performance of the proposed method is compared with that of the methods developed by Yamamoto et al. [7] and Cheng and Takatsuka [23]. The comparison results are given in Table 3, which shows that the proposed method achieves the highest correct target selection rate.

Table 3. Comparisons of Correct Target Selection Rate in Different Methods

Method            Average Rc (%)
Method in [7]     90.1%
Method in [23]    94.8%
Proposal          99.2%

5. Conclusions

A new 3D real-time method is developed for HCI based on pointing gesture recognition. The proposed method uses a Kinect sensor and a flexible virtual touch screen for interacting with a large screen. Because it relies on the depth and skeletal maps generated by the Kinect sensor, the method is unaffected by illumination and surrounding environmental changes. In addition, target selection depends only on the position of the operator's pointing fingertip, so the method is suitable for both large and small pointing gestures as well as for different operators.

Given the wide application of speech recognition in HCI, our further work will focus on combining speech and visual multimodal features to improve the recognition rate.

Acknowledgements

This work is supported in part by the Natural Science Foundation of China (Grant No. 11176016, 60872117), and the Specialized Research Fund for the Doctoral Program of Higher Education (Grant No. 20123108110014).

References

[1] M. Elmezain, A. Al-Hamadi and B. Michaelis, "Hand trajectory-based gesture spotting and recognition using HMM", Proceedings of International Conference on Image Processing, (2009), pp. 3577-3580.

[2] H.-I Suk, B.-K. Sin and S.-W. Lee, "Hand gesture recognition based on dynamic Bayesian network framework", Pattern Recognition, vol. 9, no. 43, (2010), pp. 3059-3072.

[3] M. Van Den Bergh and L. Van Gool, "Combining RGB and ToF cameras for real-time 3D hand gesture interaction", Proceedings of 2011 IEEE Workshop on Applications of Computer Vision, (2011), pp. 66-72.

[4] C.-B. Park and S.-W. Lee, "Real-time 3D pointing gesture recognition for mobile robots with cascade HMM and particle filter", Image and Vision Computing, vol. 1, no. 29, (2011), pp. 51-63.

[5] R. Kehl and L. V. Gool, "Real-time pointing gesture recognition for an immersive environment", Proceedings of 6th IEEE International Conference on Automatic Face and Gesture Recognition, (2004), pp. 577-582.

[6] J. R. Michael, C. Shaun and J. Y. Li, "A multi-gesture interaction system using a 3-D iris disk model for gaze estimation and an active appearance model for 3-D hand pointing", IEEE Transactions on Multimedia, vol. 3, no. 13, (2011), pp. 474-486.

[7] Y. Yamamoto, I. Yoda and K. Sakaue, "Arm-pointing gesture interface using surrounded stereo cameras system", Proceedings of International Conference on Pattern Recognition, vol. 4, (2004), pp. 965-970.

[8] M. Van Den Bergh, D. Carton and R. De Nijs, "Real-time 3D hand gesture interaction with a robot for understanding directions from humans", Proceedings of IEEE International Workshop on Robot and Human Interactive Communication, (2011), pp. 357-362.

[9] Y. Li, "Hand gesture recognition using Kinect", Proceedings of IEEE 3rd International Conference on Software Engineering and Service Science, (2012), pp. 196-199.

[10] M. Raj, S. H. Creem-Regehr, J. K. Stefanucci and W. B. Thompson, "Kinect based 3D object manipulation on a desktop display", Proceedings of ACM Symposium on Applied Perception, (2012), pp. 99-102.

[11] A. Sanna, F. Lamberti, G. Paravati, E. A. Henao Ramirez and F. Manuri, "A Kinect-based natural interface for quadrotor control", Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, 78 LNICST, (2012), pp. 48-56.

[12] N. Villaroman, D. Rowe and B. Swan, "Teaching natural operator interaction using OpenNI and the Microsoft Kinect sensor", Proceedings of ACM Special Interest Group for Information Technology Education Conference, (2011), pp. 227-231.

[13] S. Lang, M. Block and R. Rojas, "Sign language recognition using Kinect", Lecture Notes in Computer Science, (2012), pp. 394-402.

[14] L. Cheng, Q. Sun, H. Su, Y. Cong and S. Zhao, "Design and implementation of human-robot interactive demonstration system based on Kinect", Proceedings of 24th Chinese Control and Decision Conference, (2012), pp. 971-975.

[15] J. Ekelmann and B. Butka, "Kinect controlled electro-mechanical skeleton", Proceedings of IEEE Southeast Conference, (2012), pp. 1-5.

[16] C. Sinthanayothin, N. Wongwaen and W. Bholsithi, "Skeleton tracking using Kinect sensor and displaying in 3D virtual scene", International Journal of Advancements in Computing Technology, vol. 11, no. 4, (2012), pp. 213-223.

[17] G. L. Du, P. Zhang, J. H. Mai and Z. L. Li, "Markerless Kinect-based hand tracking for robot teleoperation", International Journal of Advanced Robotic Systems, vol. 1, no. 9, (2012), pp. 1-10.

[18] J. L. Raheja, A. Chaudhary and K. Singal, "Tracking of fingertips and centers of palm using Kinect", Proceedings of 3rd International Conference on Computational Intelligence, Modeling and Simulation, (2011), pp. 248-252.

[19] N. Miyata, K. Yamaguchi and Y. Maeda, "Measuring and modeling active maximum fingertip forces of a human index finger", Proceedings of IEEE International Conference on Intelligent Robots and Systems, (2007), pp. 2156-2161.

[20] N. Li, L. Liu and D. Xu, "Corner feature based object tracking using adaptive Kalman filter", Proceedings of International Conference on Signal, (2008), pp. 1432-1435.

[21] M. S. Benning, M. McGuire and P. Driessen, "Improved position tracking of a 3D gesture-based musical controller using a Kalman filter", Proceedings of 7th International Conference on New Interfaces for Musical Expression, (2007), pp. 334-337.

[22] X. Li, T. Zhang, X. Shen and J. Sun, "Object tracking using an adaptive Kalman filter combined with mean shift", Optical Engineering, vol. 2, no. 49, (2010), pp. 020503-1-020503-3.

[23] K. Cheng and M. Takatsuka, "Estimating virtual touch screen for fingertip interaction with large displays", Proceedings of ACM International Conference Proceeding Series, (2006), pp. 397-400.


- Depth N Clor For SegDocument7 pagesDepth N Clor For SegSushilKesharwaniNo ratings yet

- Finger Tracking in Real Time Human Computer InteractionDocument12 pagesFinger Tracking in Real Time Human Computer InteractionPronadeep Bora89% (9)

- Optimized Hand Gesture Based Home Automation For FeeblesDocument11 pagesOptimized Hand Gesture Based Home Automation For FeeblesIJRASETPublicationsNo ratings yet

- Vision Based Hand Gesture RecognitionDocument6 pagesVision Based Hand Gesture RecognitionAnjar TriyokoNo ratings yet

- Gesture Controlled Virtual Mouse Using AIDocument8 pagesGesture Controlled Virtual Mouse Using AIIJRASETPublicationsNo ratings yet

- Ijcse V1i2p2 PDFDocument9 pagesIjcse V1i2p2 PDFISAR-PublicationsNo ratings yet

- Hand Gesture Recognition System For Image Process IpDocument4 pagesHand Gesture Recognition System For Image Process IpAneesh KumarNo ratings yet

- SSRN Id4359350Document21 pagesSSRN Id4359350YAOZHONG ZHANGNo ratings yet

- Full TextDocument6 pagesFull TextglidingseagullNo ratings yet

- Enabling Cursor Control Using On Pinch Gesture Recognition: Benjamin Baldus Debra Lauterbach Juan LizarragaDocument15 pagesEnabling Cursor Control Using On Pinch Gesture Recognition: Benjamin Baldus Debra Lauterbach Juan LizarragaBuddhika PrabathNo ratings yet

- Gesture Based Interaction in Immersive Virtual RealityDocument5 pagesGesture Based Interaction in Immersive Virtual RealityEngineering and Scientific International JournalNo ratings yet

- Real Time Finger Tracking and Contour Detection For Gesture Recognition Using OpencvDocument4 pagesReal Time Finger Tracking and Contour Detection For Gesture Recognition Using OpencvRamírez Breña JosecarlosNo ratings yet

- Research Article: Hand Gesture Recognition Using Modified 1$ and Background Subtraction AlgorithmsDocument9 pagesResearch Article: Hand Gesture Recognition Using Modified 1$ and Background Subtraction AlgorithmsĐào Văn HưngNo ratings yet

- Gesture Volume Control Project SynopsisDocument5 pagesGesture Volume Control Project SynopsisVashu MalikNo ratings yet

- Ricky JacobDocument1 pageRicky JacobManojkumar VsNo ratings yet

- The Kinect Sensor in Robotics Education: Michal Tölgyessy, Peter HubinskýDocument4 pagesThe Kinect Sensor in Robotics Education: Michal Tölgyessy, Peter HubinskýShubhankar NayakNo ratings yet

- A Robust Static Hand Gesture RecognitionDocument18 pagesA Robust Static Hand Gesture RecognitionKonda ChanduNo ratings yet

- Vision-Based Hand Gesture Recognition For Human-Computer InteractionDocument4 pagesVision-Based Hand Gesture Recognition For Human-Computer InteractionRakeshconclaveNo ratings yet

- Fast Hand Feature Extraction Based On Connected Component Labeling, Distance Transform and Hough TransformDocument2 pagesFast Hand Feature Extraction Based On Connected Component Labeling, Distance Transform and Hough TransformNishant JadhavNo ratings yet

- Cheng2019 Article JointlyNetworkANetworkBasedOnCDocument15 pagesCheng2019 Article JointlyNetworkANetworkBasedOnCVladimirNo ratings yet

- Hand Gesture Recognition For Hindi Vowels Using OpencvDocument5 pagesHand Gesture Recognition For Hindi Vowels Using OpencvInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Wieldy Finger and Hand Motion Detection For Human Computer InteractionDocument5 pagesWieldy Finger and Hand Motion Detection For Human Computer InteractionerpublicationNo ratings yet

- Hand Gesture Recognition Techniques For Human Computer Interaction Using OpencvDocument6 pagesHand Gesture Recognition Techniques For Human Computer Interaction Using Opencvmayur chablaniNo ratings yet

- Real-Time Robotic Hand Control Using Hand GesturesDocument5 pagesReal-Time Robotic Hand Control Using Hand GesturesmenzactiveNo ratings yet

- A Survey On Gesture Pattern Recognition For Mute PeoplesDocument5 pagesA Survey On Gesture Pattern Recognition For Mute PeoplesRahul SharmaNo ratings yet

- Wireless Cursor Controller Hand Gestures For Computer NavigationDocument8 pagesWireless Cursor Controller Hand Gestures For Computer NavigationIJRASETPublicationsNo ratings yet

- Hand Gesture Recognistion Based On Shape Parameters Using Open CVDocument5 pagesHand Gesture Recognistion Based On Shape Parameters Using Open CVlambanaveenNo ratings yet

- Valvulas de Controle Ari Stevi Pro 422 462 - 82Document20 pagesValvulas de Controle Ari Stevi Pro 422 462 - 82leonardoNo ratings yet

- Mixer SoundDocument12 pagesMixer SoundRebeca FernandezNo ratings yet

- 18a.security GSM & CDMADocument18 pages18a.security GSM & CDMAwcdma123No ratings yet

- Model ICB 100-800 HP Boilers: Performance DataDocument4 pagesModel ICB 100-800 HP Boilers: Performance DatasebaversaNo ratings yet

- Reb500 Test ProceduresDocument54 pagesReb500 Test ProceduresaladiperumalNo ratings yet

- Model 3410: Shear Beam Load CellsDocument4 pagesModel 3410: Shear Beam Load CellsIvanMilovanovicNo ratings yet

- Quiz 3 Database Foundation Sesi 1 PDFDocument4 pagesQuiz 3 Database Foundation Sesi 1 PDFfajrul saparsahNo ratings yet

- Suva Exemplu Metoda-E BriciDocument35 pagesSuva Exemplu Metoda-E BricisilvercristiNo ratings yet

- Theory and Applications of HVAC Control Systems A Review of Model Predictive Control MPCDocument13 pagesTheory and Applications of HVAC Control Systems A Review of Model Predictive Control MPCKhuleedShaikhNo ratings yet

- Oxmetrics ManualDocument238 pagesOxmetrics ManualRómulo Tomé AlvesNo ratings yet

- CC 9045 Manual enDocument20 pagesCC 9045 Manual enKijo SupicNo ratings yet

- Boq ChandigarhDocument2 pagesBoq ChandigarhVinay PantNo ratings yet

- SIRIM QAS Intl. Corporate ProfileDocument32 pagesSIRIM QAS Intl. Corporate ProfileHakimi BobNo ratings yet

- ResumeDocument3 pagesResumedestroy0012No ratings yet

- Comparative Study of Bioethanol and Commercial EthanolDocument21 pagesComparative Study of Bioethanol and Commercial Ethanolanna sophia isabelaNo ratings yet

- Transformer Losses CalculationDocument4 pagesTransformer Losses Calculationwinston11No ratings yet

- Refractories: A Guide to Materials and UsesDocument4 pagesRefractories: A Guide to Materials and UsesMeghanath AdkonkarNo ratings yet

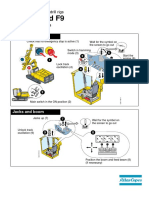

- Start The Engine For ROC F6 and F9Document2 pagesStart The Engine For ROC F6 and F9aaronNo ratings yet

- Cooling Water System : Daily Log Sheet Date: .. Shift TimeDocument4 pagesCooling Water System : Daily Log Sheet Date: .. Shift TimeNata ChaNo ratings yet

- Apxvll13 CDocument2 pagesApxvll13 C1No ratings yet

- MET 473-3 Mechatronics - 3-Pneumatics and Hydraulic Systems-2Document16 pagesMET 473-3 Mechatronics - 3-Pneumatics and Hydraulic Systems-2Sudeera WijetungaNo ratings yet

- ch05 HW Solutions s18Document7 pagesch05 HW Solutions s18Nasser SANo ratings yet

- Rice Milling: Poonam DhankharDocument9 pagesRice Milling: Poonam DhankharWeare1_busyNo ratings yet

- 2 Port USB 2.0 Cardbus: User's ManualDocument28 pages2 Port USB 2.0 Cardbus: User's Manualgabi_xyzNo ratings yet

- Technical Guidline On Migration TestingDocument30 pagesTechnical Guidline On Migration Testingchemikas8389No ratings yet

- Simple and Inexpensive Microforge: by G. HilsonDocument5 pagesSimple and Inexpensive Microforge: by G. Hilsonfoober123No ratings yet

- Ormulary: Photographers'Document3 pagesOrmulary: Photographers'AndreaNo ratings yet

- System Analysis, System Development and System ModelsDocument56 pagesSystem Analysis, System Development and System Modelsmaaya_ravindra100% (1)

- Valvula Solenoide Norgren v40Document4 pagesValvula Solenoide Norgren v40Base SistemasNo ratings yet