Professional Documents

Culture Documents

Implementing a waveform recovery algorithm on FPGAs using a zonal method (Hudgin

Uploaded by

manuales1000Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Implementing a waveform recovery algorithm on FPGAs using a zonal method (Hudgin

Uploaded by

manuales1000Copyright:

Available Formats

Implementation of a waveform recovery algorithm on FPGAs

using a zonal method (Hudgin)

J.J. Daz1,a , Alberto Davila-Gonzalez2 , L.F. Rodrguez-Ramos1 , J.M. Rodrguez-Ramos2 , Y.Martn1 ,

and J. Piqueras1

1

2

Instituto de Astrofisica de Canarias, Santa Cruz de Tenerife 38205, Spain

University of La Laguna, Tenerife, Spain

Abstract. The advent of Extremely Large Telescopes impose the existence of wavefront correctors to achieve

diffraction limited images. The light collection area is more evidently affected by atmospheric turbulences and

image distortion is more relevant than in smaller telescopes. AO system where mostly used to improve the performances while now turn to be a real need. Though the existence of a wavefront reconstructor is mandatory for

the next generation of telescopes, the requirements imposed for such large apertures are to be examined and the

new challenges must be faced. The multiplication factor, in terms of information to be processed and number of

actuators to be controlled, in conjunction with the fast response imposed by the atmosphere turbulence variation,

determine a new dimension of the technical system. This paper shows the performances of a zonal reconstruction

algorithm (Hudgin) for waveform recovery implemented on FPGAs.

1 Introduction

Changes in the diffraction index of the media, due to variations in parameters affecting the atmosphere,

produce wave front distortions. These aberrations affect the spatial resolution of the objects and disperse the photons in a bigger area on the detector making it less effective in the detection of faint

object. Today, and next generation telescope developments, claim for the detection of faint objects

while obtaining the best spatial resolution possible. If with a 10 m telescope, located in an observatory

with optimum observing conditions, a 0.4 arcsec structure can be resolved, when this resolution is

improved down to 0.04 arcsec, the resulting system performance is similar to what could be obtained

in a 100 m telescope under the same atmospheric conditions with no wavefront correction. This gives

an idea of the limitations introduced by the atmosphere and the importance of AO systems in the next

generation of telescopes where diameters from 30 to 100 meters are the goal. Today most observatories

provide AO facilities to allow a better spatial resolution as an additional feature. For next generation

of telescopes the huge diameters make it mandatory. The lost in spatial resolution, which apparently

results in an effective reduction of the total size of the telescope, is unacceptable as every portion of the

collecting area has to be effective for scientific and economic reasons. From now on the existence of

AO system is a need of the telescope and all ongoing projects consider the development of this as part

of the activities to be carried out to provide a successful facility. The size of these new infrastructures

poses additional requirements to the AO systems. There is a scale factor problem, now a larger area

of the waveform has to be sampled and better sampling resolution is required, but also an increase

in the system overall performance that results in faster response is mandatory. To adapt the existing

hardware to achieve the new requirements is not straightforward. The increase in calculation power

is not obviously achieved by multiplying the existing HW. Extrapolating the total volume and power

consumption of existing systems this turns to be a real limitation that prompts for new solutions. High

performance, compact in size, reliability and low power consumption are parameters that guide the

design of the new generation of AO systems.

a

e-mail: jdg@iac.es

Adaptive Optics for ELTs

Fig. 1. AO System components

2 AO SYSTEM FUNCTIONAL DESCRIPTION

The objective of an AO system that we consider here is the correction of the wavefront that reaches the

telescope with aberrations due to distortions introduced by the atmosphere along the light path. The

AO system requires three functions: To detect the wavefront, determine what waveform should have

reached the telescope in absence of a distorting media such as the atmosphere, and generate all the

required steps to produce a correction to compensate for the aberrations. This divides the AO system

in three main subsystems:

The waveform sensor: Detects the light entering the telescope

The waveform detection: Using the information obtained from the waveform sensor determines

the local variations of the waveform

The waveform recovery algorithm: Using the information provided by the local variations of the

incoming waveform recovers the information of the waveform that should have reached the telescope in absence of distortion

The calculation of the actuations required by the compensating component: Once the waveform

information is known then the compensation parameters that need to be applied to the compensator

are derived.

An active component: Usually a deformable mirror that may adapt to the required shape to compensate from the aberration.

3 AO SYSTEM HARDWARE

3.1 Sensor description

A Shark-Harmann sensor is a well known waveform sensor that uses an array of microlenses in

front of a detector to project subapertures of the waveform on it. The image of every single subaperture

follow into a section of the detector and, in the case of absence of distortion, a single light point would

be projected into the corresponding section of the detector. The effect of the aberration results in a

displacement of such projection producing dx-dy displacements values for each subaperture.

The number of microlenses that compose the array determines the spatial sampling of the waveform. Every subaperture is projected into a section of the detector where the displacement has to be

J.J. Daz et al.: Performance of a modal zone wavefront recovery algorithm (Hudgin)

Fig. 2. Shack-Hartmann Sensor

Fig. 3. Projection of subapertures onto the detector for a Shack-Hartmann sensor.

computed. The number of pixels corresponding to a subaperture determines the accuracy in resolving

the displacement of the subaperture projection dx-dy.

3.2 Estimation of the number of subapertures

For Extremely Large Telescopes (ELTs), with collecting apertures from 30 to 100 meters, the size

of the focal plane is bigger than for present telescopes. Even if the spatial sampling resolution is to

be maintained, the total number of subapertures will increase with a power of two factor. It is thus

advisable to assume that the number of apertures required to obtain a reasonable spatial sampling is in

the order of 32 * 32, which means that a 32 * 32 or larger microlenses array will be used.

3.3 Resolution. Number of pixels per subaperture

Also, to allow a better resolution in the gradient of the waveform in a certain subaperture, as a result

of the atmospheric distortion and indicated by the displacement of the projection of every projection on

the detector, the number of pixels per subaperture should be increased. To be on a safe side a number

of 32 * 32 pixels per subaperture is considered.

3.4 DX-DY matrix calculation

After the detection of photons using the Shack-Hartmann sensor, the gradient of the waveform

for each subaperture needs to be computed. Two matrixes DX and DY, with the dx and dy values

corresponding to the deviations of the centroid from the nominal position for every subaperture, are

obtained. Different methods have been used:

Adaptive Optics for ELTs

Fig. 4. The Hudgin Formula.

3.4.1 Centroiding or Gravity method

This method uses the well known gravity or weight method where every pixel contributes with a

factor corresponding to the total signal received multiplied by a factor representing the distance in x

or y to the nominal position in the non distorted waveform.

3.4.2 Image Correlation

Also image correlation techniques are applied to each subaperture to compare consecutive images

and to determine the DX and DY matrixes.

3.5 Waveform Recovery

Once the information of the local variations, gradients, of the waveform for every subaperture

is known, then the next step is to obtain the value of the waveform starting from the information

contained in the DX and DY matrixes. This method is the objective of this work and will be detailed.

3.6 Transformations and Deformable Mirror actuation

Once the waveform received is known, then the correction that needs to be applied to obtain a

non distorted waveform has to be calculated and the physical signals to be applied to the correcting

element, normally a deformable mirror, generated. This is the last process in the sequence that has

to be repeated with a time response fast enough to produce the correction before the distortion of the

incoming waveform changes significantly.

4 THE HUDGIN METHOD

4.1 Theory

Hudgin provides an iterative mathematical method that allows the reconstruction of a bidimensional function from the information contained in the local variations in both directions. The algorithm

is represented by the formula:

(M) = 1/4((M1)

+ (M1)

+ (M1)

+ (M1)

+ 1 + 2 + 3 + 4 )

1

2

3

4

M represents the algorithm iteration index

The phase values of neighboring subapertures are represented by 1 , 2 , 3 , 4

And the gradients are represented by 1 , 2 , 3 , 4

The Hudgin method, as is based on local variations of the function, does not allow the recovery of

the continuum value of the function. This is not necessary for our purpose as our need is to correct for

the distortions.

4.2 Numerical simulations

J.J. Daz et al.: Performance of a modal zone wavefront recovery algorithm (Hudgin)

Fig. 5. Waveform reconstruction from an image generated following the Kolmogorof statistics.From Top left to

bottom right. Initial waveform, DX-DY matrices and comparison of original and recovered function with error

for non border correction (middle) and with border correction (bottom)

Some numerical simulations have been performed to understand the capabilities of this method

and the feasibility to implement it in FPGAs where the floating point arithmetic is not efficient.

4.3 Accuracy vs number of iterations

The accuracy of the final result depends on the number of iterations performed. This directly affects

the time required to obtain a result that matches, with reasonable error, to the actual waveform reaching

the sensor. The actual number of iteration cycles may depend on the characteristics of the waveform

function. It has been found that, with little difference, for Gaussian and Kolmogorov like waveforms

iterations in the order of 500 give results with error results below 10-3 being the error in the order of

10-2 for about 200 iterations. More than 500 iterations will not produce significant error reduction and

thus this figure can be considered as the required number of iterations for a complete recovery of the

incoming waveform.

4.4 Singularities in the borders of the aperture

There is also a practical problem that has to be faced when applying the method to a limited area.

Hudgin applies to infinite surfaces, and this is the case of light, but as our aperture is limited we have

to work with limited waveforms to a certain area. We have to face the contour problems and the fact

that there are missing neighbors in the borders of the aperture. It has been assumed that the missing

subapertures required to complete the algorithm for the contour have the same value as the value of the

aperture of interest. It has proven to be a convenient assumption as the numerical simulations provided

good results

4.5 Numerical simulations

The actual application will deal with atmospheric like distorted waveforms. To validate the method

it is required to know the initial waveform to compare it with the result obtained after running the

method. Then it is necessary to synthesize atmospheric like waveforms. Assuming that Kolmogorov

statistics represents a valid model we used waveforms using this statistics. The result was that the

method works well for this type of waveforms.

5 Algorithm implementation in FPGAs. Feasibility.

5.1 Fix ponint vs floating point arithmetic.

Once the validity of the algorithm to recover waveforms as those likely to be produced by the

atmosphere has been proved, the next step is to provide a physical implementation of the algorithm

into a HW able to perform the required calculations in the time frame imposed by the application.

As mentioned before, the total time available to correct from waveform distortion, from the image

Adaptive Optics for ELTs

Fig. 6. Differences between Floating point and fixed point arithmetic simulations.

acquisition till compensation, has to be less than 10 ms. It is then advisable to make this computation,

and all the computations and actions required to compensate the aberrations, in the minimum time

possible. This work pretends to produce dedicated HW to make this calculations at the fastest speed

possible. The circuits will be implemented in FPGA. The HW inside the FPGA is rather efficient if

calculations are made in fixed point arithmetic. First it has to be demonstrated that the results, obtained

with this arithmetic follows the results in the previous simulations with floating point arithmetic. A

couple of assumptions have been made to provide relevant results:

Number of subapertures: We assume that the maximum number of subapertures is 256 * 256. This

parameter determines the maximum spatial information of the waveform. The spatial resolution.

Number of pixels per subaperture: We assume that the maximum number of pixels corresponding to the area of the detector associated to each subaperture is 256 * 256. This determines the

maximum resolution in the calculations of the dx and dy values. We then require 16 bits where 8

bits determine the subaperture while the other 8 bits indicate the position of the centroid, dx or dy,

within a particular subaperture. These numbers are defined in excess and will give a worse case in

terms of performance as the calculations will be more than those required by a real system.

The results obtained using Fixed and Floating point arithmetic have been compared and the error

found is in the order of 10-3. This is in the order of magnitude of the error in the waveform recovered

for floating point arithmetic and thus it can be concluded that the use of fixed point arithmetic will not

pose any significant error. The HW implementation on FPGA of the circuits to perform the Hudgin

algorithm is therefore feasible.

5.2 Design architecture

The circuit architecture has been design to facilitate parallelism. To calculate the new phase matrix

there will be as many circuits as files of subapertures operating in parallel. All the phases of a particular

column of subapertures is calculated in parallel

5.3 Memory

The values corresponding to the initial differences dx and dy will be supplied in practice by a

previous circuit that, using the centroiding, correlation or any other method will calculate them. As this

is out of the scope of our circuit, these initial values are considered as available and stored in memory

to be supplied to our circuit. There will be another memory, the phase memory, that is initially blank

and will be updated with the phase recovered as a result of our algorithm. This memory is updated

each iteration.

5.4 State Machine

The process flow is controlled using a state machine that performs the same operations in every

iteration cycle. The steps followed for every calculation consist of:

J.J. Daz et al.: Performance of a modal zone wavefront recovery algorithm (Hudgin)

Fig. 7. Memory architecture with DX-DY and Phase values.

Read the dx, dy and phase values: Required for the calculations of a particular subaperture. As the

state machine is based on a column index step, a line phase calculator requires 1 phase value, 2 dx

and 2 dy values. This means that the values of 3 consecutive columns are required to obtain the

phase corresponding to the aperture under consideration. The result of the first column of phases

is calculated after the data from the 2 first columns, plus the assumed values for column -1, are

available.

Operate the results: Once the dx, dy and phase values are available then the operations can be

performed. There are a couple of singularities:

The subapertures in the borders do not have neighbours and the missing values for the phase

are considered to have the same value as the phase to of the point under consideration during

the previous iteration

There is no chance to make any operation till the values of the 2 first columns are received.

Once the first 2 columns are read then the phase of the first singular column is calculated

assuming that there is a column 0 whose phase values equals the phase values of column 1.

Once this singular section has been covered then a new column of phases will be updated for

every new column of subapertures that is read.

To update the phase matrix: Once the new value of the phase has been calculated, before reading a

new column of phases, the new values of the column are updated in the phase memory.

5.5 Resources

The circuit has been described using VHDL as the behavioral description and planned for implementation on an FPGA. No assumptions of a particular FPGA architecture or custom resources have

been taken into account and thus further optimization is possible by customizing the components according to the particular FPGA resources. To provide the results and make an estimation of the benefits

given by this hardware approach the circuit has been implemented into a VIRTEX 5 FPGA from Xilinx. It is considered for 32 * 32 subapertures and uses 16 bit resolution. The results are shown in Fig

9.

6 Accuracy results

The testing system was designed to allow introducing the same DX, DY matrixes as used in the mathematical computations. The phase matrix was recovered and stored in a file after the simulation of a

circuit run. This resulting matrix was compared to the results obtained numerically. The same result

was obtained from both methods for equal number of iterations and different input waveforms. This

validates the calculations performed by the circuit inside the FPGA.

Adaptive Optics for ELTs

Virtex5

xc5vlx30-3ff324

Flip Flops

LUTs

Block RAM

Slices

Memory (KB)

Used

Available

% Used

4700

9357

48

2787

1710

51840

51840

96

12960

34654

9%

18%

50%

21%

49%

Fig. 8. Memory architecture with DX-DY and Phase values.

Fig. 9. Post Place and Route simulation results.

7 Time performance

A key parameter, that indicated the need of a HW implementation was the time required to run the

algorithm. The system post place and route simulations were done and the results are shown in Fig

10. The result, obtained implementing the circuit in this particular FPGA but with no optimization to

accommodate the circuits to the particular FPGA resources, for:

With a 100 MHz master clock

A full run with 10 iterations

For 32 * 32 sub-apertures and 16 bit resolution

The total time required is 45sec which means roughly 4.5sec per iteration.

If the error of the waveform recovered by the algorithm is acceptable after 200 iterations, then 0,9

ms are required to obtain the initial waveform. This implementation makes it an ideal choice for AO

systems such as those required by ELTs, where the number of phase sub-apertures 128*128 or even

256 *256 is considered.

The time performance of this circuit is not a limitation factor in the AO system

A proper reception sequence of the local variations of the wavefront allows processing in parallel

while still receiving the remaining information.

To allow a convenient sequence of local variation information the corresponding circuit and Phase

sensor architecture need to be considered from the design phase.

8 Comparison with previous implementations

The fastest implementation known for this algorithm is based on GNUs and the performance of this

implementation on FPGAs is about 2 orders of magnitude faster.

J.J. Daz et al.: Performance of a modal zone wavefront recovery algorithm (Hudgin)

9 Optimization

The optimization effort to improve time performance and resource consumption has been limited and

there is still margin to improve the results but with the present results it can be concluded that using the

proper HW architecture of the AO system components a noticeable improvement in time performance

could be obtained. Some of these key factors are mentioned.

10 Parallelism

It is advisable to run the algorithms in parallel and apply the corrections to different sections of the

waveform as soon as they are available. The waveform variations take place along time and the fastesr

the correction the better the result.

11 Detector Architecture

Referring only to the detector needs in terms of speed there is nothing new in this aspect. It is relevant

that the processing of the DX-DY matrices can only been calculated once all the pixels corresponding

to the subaperture are available. This indicates that the ideal detector architecture would provide an

output channel per aperture and all this channels, al least those channels corresponding to subapertures

in the same column of subapertures, are read and delivered with no delay. As this may imply too many

outputs, the configurations should be done in such a way that the pixels corresponding to a same

column are read at the same time with no dead times.

12 DX-DY HW Improvements

If the Hudgin algorithm is to be used then the subsystem used to calculate the difference matrixes

should deliver the DX and DY values column after column to facilitate the process as planned. Every

new column will provide enough information to start running the algorithm to recover the phase of

every aperture in the column.

13 Waveform recovery

Finally the Hudgin method would run as described in this paper but iterating not the full matrix of

apertures at a time but every column of subapertures independently. This would produce a column of

recovered phases after the iterations of such column of subapertures is finished.

14 Conclusions

* The time performance of this circuit is not a limitation factor in the AO system

* A proper reception sequence of the local variations of the wavefront allows processing in parallel

while still receiving the remaining information.

* To allow a convenient sequence of local variation information the corresponding circuit and

Phase sensor architecture need to be considered from the design phase.

Acknowledgments

This work has been partially funded by the Spanish Programa Nacional I+D+i (Project DPI 200607906) of the Ministerio de Educacion y Ciencia, and also by the European Regional Development

Fund (ERDF).

Adaptive Optics for ELTs

References

1. J.M. Hernandez, Atmospheric wavefront phase recovery using specialized hardware: GPUs and

FPGAs, SPIE (2005)

2. J.M.R. Ramos, Deteccion de frente de onda. Aplicacion a tecnicas de alta resolucion espacial y

alineamiento de superficies o pticas segmentadas (2005)

3. Y. Martn, Fixed-point vs Floating-point arithmetic comparison for adaptative optics real time

control computation (????)

You might also like

- Imaging Radar Simulation in Realistic Environment Using Shooting and Bouncing Rays TechniqueDocument7 pagesImaging Radar Simulation in Realistic Environment Using Shooting and Bouncing Rays TechniqueAsd DsaNo ratings yet

- Calibration For Increased Accuracy of The Range Imaging Camera SwissrangerDocument6 pagesCalibration For Increased Accuracy of The Range Imaging Camera SwissrangerEgemet SatisNo ratings yet

- Design of Linear Array Transducer Using Ultrasound Simulation Program Field-IIDocument7 pagesDesign of Linear Array Transducer Using Ultrasound Simulation Program Field-IIinventyNo ratings yet

- IWEX: Direct Ultrasonic Imaging of Subsurface DefectsDocument5 pagesIWEX: Direct Ultrasonic Imaging of Subsurface DefectsIndigo WaveNo ratings yet

- Application of LasersDocument9 pagesApplication of LasersAlexander DeckerNo ratings yet

- muller2020 (1)Document4 pagesmuller2020 (1)AHMADNo ratings yet

- Block-Matching Sub-Pixel Motion Estimation From Noisy, Under-Sampled Frames - An Empirical Performance EvaluationDocument10 pagesBlock-Matching Sub-Pixel Motion Estimation From Noisy, Under-Sampled Frames - An Empirical Performance Evaluationjuan.vesaNo ratings yet

- Paper Velocimeter SPIE RS07Document12 pagesPaper Velocimeter SPIE RS07Mounika ReddyNo ratings yet

- An UWB Through-The-Wall Radar With 3D ImagingDocument4 pagesAn UWB Through-The-Wall Radar With 3D ImagingMarc SchortgenNo ratings yet

- Opposite Phased Transducer Opt For Sizing of DefectsDocument12 pagesOpposite Phased Transducer Opt For Sizing of DefectsprabuNo ratings yet

- Michelson InterferometerDocument5 pagesMichelson InterferometerJiya KhanNo ratings yet

- Ultra-Wideband Microwave Imaging Via Space-Time BeamformingDocument27 pagesUltra-Wideband Microwave Imaging Via Space-Time BeamformingRobin Nojibur RahmanNo ratings yet

- Industrial and Measurement Applications of LasersDocument7 pagesIndustrial and Measurement Applications of LasersShaik RoshanNo ratings yet

- Radio Occultation Signal Analysis: ACE-Scientific Support StudyDocument38 pagesRadio Occultation Signal Analysis: ACE-Scientific Support StudySetyperNo ratings yet

- Era Paper1Document6 pagesEra Paper1john_j7No ratings yet

- Unit 1Document42 pagesUnit 1manojmuthyalaNo ratings yet

- FOL Unit 4Document7 pagesFOL Unit 4Sudhar SanNo ratings yet

- A Design of An Acousto-Optical SpectrometerDocument4 pagesA Design of An Acousto-Optical Spectrometeranasrl2006No ratings yet

- An Automatic Atmospheric Correction Algorithm For visible/NIR ImageryDocument9 pagesAn Automatic Atmospheric Correction Algorithm For visible/NIR ImageryVladimirodipostovNo ratings yet

- Spatial Sampling Issues in FK Analysis of Surface WavesDocument11 pagesSpatial Sampling Issues in FK Analysis of Surface WavesStefano VignaNo ratings yet

- Front-Vs Back-Illuminated CCD Cameras For Photometric Surveys: A Noise Budget AnalysisDocument4 pagesFront-Vs Back-Illuminated CCD Cameras For Photometric Surveys: A Noise Budget Analysisvideo76No ratings yet

- Multifocal AntennaDocument5 pagesMultifocal AntennaLazni NalogNo ratings yet

- Sensor Posicion Luz Art - DesbloqueadoDocument6 pagesSensor Posicion Luz Art - DesbloqueadoAngel SanchezNo ratings yet

- Experimental Investigation of Factors Affecting Stability of Interferometric Measurements With Ground Based Noise Waveform SARDocument5 pagesExperimental Investigation of Factors Affecting Stability of Interferometric Measurements With Ground Based Noise Waveform SARkudriashovvladimirNo ratings yet

- Detection of Water Leaks in Supply Pipes With Active Microwave GPR Sensors and Electromagnetic ModellingDocument3 pagesDetection of Water Leaks in Supply Pipes With Active Microwave GPR Sensors and Electromagnetic ModellingSanjay Kumar BeheraNo ratings yet

- Zhang2019-Teori RUmus IMUDocument5 pagesZhang2019-Teori RUmus IMUAlfi FajriNo ratings yet

- RCS VnaDocument12 pagesRCS VnaMarius CheroiuNo ratings yet

- PhysRevLett 131 053803Document6 pagesPhysRevLett 131 053803Marco SIMONENo ratings yet

- SPECTRALDocument6 pagesSPECTRALlogu_thalirNo ratings yet

- Absolute Phase and Amplitude Mapping of Surface Acoustic WavesDocument4 pagesAbsolute Phase and Amplitude Mapping of Surface Acoustic WavesEvilásio SouzaNo ratings yet

- Development of Fiber Optics Sensor For Partial Discharge MeasurementDocument37 pagesDevelopment of Fiber Optics Sensor For Partial Discharge MeasurementPintu PatelNo ratings yet

- 23541-Evaluation of A New Tool For Heliostat Field Flux Mapping-1Document8 pages23541-Evaluation of A New Tool For Heliostat Field Flux Mapping-1tiemplesNo ratings yet

- SAR image range resolution using MUSIC algorithmDocument5 pagesSAR image range resolution using MUSIC algorithmletiendung_dtvt7119No ratings yet

- Two-Wavelength Phase-Sensitive OTDR Sensor Using Perfect Periodic Correlation Codes For Measurement Range Enhancement, Noise Reduction and Fading CompensationDocument15 pagesTwo-Wavelength Phase-Sensitive OTDR Sensor Using Perfect Periodic Correlation Codes For Measurement Range Enhancement, Noise Reduction and Fading CompensationmanelNo ratings yet

- Ask A Demonstrator If You Are Unsure How To Use The Apparatus. Hand in Answers To DR - Waters at The End of The SessionDocument3 pagesAsk A Demonstrator If You Are Unsure How To Use The Apparatus. Hand in Answers To DR - Waters at The End of The SessionRoy VeseyNo ratings yet

- Anderson 2002 0129Document12 pagesAnderson 2002 0129Particle Beam Physics LabNo ratings yet

- Models - Roptics.czerny Turner MonochromatorDocument18 pagesModels - Roptics.czerny Turner MonochromatorHIPOLITO ARTURO RIVEROS GUEVARANo ratings yet

- b2p1 PDFDocument7 pagesb2p1 PDFEni RahayuNo ratings yet

- Basic RF Technic and Laboratory Manual: Dr. Haim Matzner&Shimshon Levy April 2002Document14 pagesBasic RF Technic and Laboratory Manual: Dr. Haim Matzner&Shimshon Levy April 2002progressksbNo ratings yet

- An Overview of Microwave Sensor TechnologyDocument8 pagesAn Overview of Microwave Sensor Technology박철승No ratings yet

- Automation of The Z-Scan Experiment Using LabVIEWDocument13 pagesAutomation of The Z-Scan Experiment Using LabVIEWulaganathan100% (1)

- Differentiating Walls From Corners Using The Amplitude of Ultrasonic EchoesDocument13 pagesDifferentiating Walls From Corners Using The Amplitude of Ultrasonic EchoesQassem MohaidatNo ratings yet

- ACC Seminar Report SummaryDocument22 pagesACC Seminar Report SummaryLatha DevarajNo ratings yet

- Interferometric OSEM Sensor Development: LIGO Laboratory / LIGO Scientific CollaborationDocument11 pagesInterferometric OSEM Sensor Development: LIGO Laboratory / LIGO Scientific CollaborationRakesh NayakNo ratings yet

- 2000 - Calibration of Displacement Sensors Up To 300um With Nanometre Accuracy and Direct Traceability To A Primary Standard of LengthDocument9 pages2000 - Calibration of Displacement Sensors Up To 300um With Nanometre Accuracy and Direct Traceability To A Primary Standard of Lengthamin342No ratings yet

- Broadband Fluxgate MagnetometerDocument8 pagesBroadband Fluxgate MagnetometerJose Roberto0% (1)

- Maximum Likelihood Estimation of Array Element Location and MVDR Beamforming Simulation and Experimental ResultsDocument5 pagesMaximum Likelihood Estimation of Array Element Location and MVDR Beamforming Simulation and Experimental ResultsMayssa RjaibiaNo ratings yet

- Measurement of Solar Extinction in Tower Plants With Digital CamerasDocument8 pagesMeasurement of Solar Extinction in Tower Plants With Digital CamerasUmer JamalNo ratings yet

- A Microcellular Ray-Tracing PropagationDocument14 pagesA Microcellular Ray-Tracing PropagationDenis MonteiroNo ratings yet

- Atmospheric Aberrations in Coherent Laser Systems: Snowmass, July 12, 2007 Aniceto BelmonteDocument40 pagesAtmospheric Aberrations in Coherent Laser Systems: Snowmass, July 12, 2007 Aniceto BelmonteImad Ha AliNo ratings yet

- Sakai Chapter 3Document20 pagesSakai Chapter 3Ronnel Mendoza VasquezNo ratings yet

- Advanced Beam Analysis For Integrated ModellingDocument10 pagesAdvanced Beam Analysis For Integrated ModellingnrmaheshNo ratings yet

- Embedded System For Distance Measurement and Surface Discrimination Applications - VFDocument7 pagesEmbedded System For Distance Measurement and Surface Discrimination Applications - VFShahjad PathanNo ratings yet

- Measuring Secondary Flow in a Two-Stage Turbine Rig Using Tunnelling VelocimetryDocument8 pagesMeasuring Secondary Flow in a Two-Stage Turbine Rig Using Tunnelling VelocimetryMarcelo Funes-GallanziNo ratings yet

- Time-Of-Flight (ToF) Measurement Using Pulse LasersDocument22 pagesTime-Of-Flight (ToF) Measurement Using Pulse Lasersengin kavakNo ratings yet

- Control flux distribution on solar tower receiverDocument15 pagesControl flux distribution on solar tower receivergldn1gldnNo ratings yet

- Topics On Optical and Digital Image Processing Using Holography and Speckle TechniquesFrom EverandTopics On Optical and Digital Image Processing Using Holography and Speckle TechniquesNo ratings yet

- Software Radio: Sampling Rate Selection, Design and SynchronizationFrom EverandSoftware Radio: Sampling Rate Selection, Design and SynchronizationNo ratings yet

- CertificateDocument1 pageCertificatemanuales1000100% (1)

- Jup 232556Document1 pageJup 232556manuales1000No ratings yet

- Meade DSI Pro II FixDocument15 pagesMeade DSI Pro II Fixmanuales1000No ratings yet

- AstromedidaDocument1 pageAstromedidamanuales1000No ratings yet

- USBDocument1 pageUSBmanuales1000No ratings yet

- ADH User GuideDocument30 pagesADH User GuideraflyNo ratings yet

- Google Earth Satellites 2Document1 pageGoogle Earth Satellites 2manuales1000No ratings yet

- PROYECTO1Document3 pagesPROYECTO1manuales1000No ratings yet

- Goo Earth Sat 2Document1 pageGoo Earth Sat 2manuales1000No ratings yet

- Google Earth SatellitesDocument1 pageGoogle Earth Satellitesmanuales1000No ratings yet

- 2222Document1 page2222manuales1000No ratings yet

- ADH User GuideDocument30 pagesADH User GuideraflyNo ratings yet

- PROYECTO1Document3 pagesPROYECTO1manuales1000No ratings yet

- Datos AstrometricaDocument1 pageDatos Astrometricamanuales1000No ratings yet

- Certificado LunarDocument1 pageCertificado Lunarmanuales1000No ratings yet

- TAN2012001 Getting Started FlyCap2.3 ARMDocument5 pagesTAN2012001 Getting Started FlyCap2.3 ARMmanuales1000No ratings yet

- Our Star - The Sun: National Aeronautics and Space AdministrationDocument2 pagesOur Star - The Sun: National Aeronautics and Space Administrationmanuales1000No ratings yet

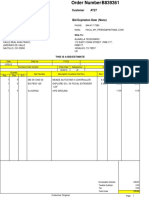

- Order for Telescope AccessoriesDocument1 pageOrder for Telescope Accessoriesmanuales1000No ratings yet

- Your Name to Mars CertificateDocument1 pageYour Name to Mars Certificatemanuales1000No ratings yet

- AutoDocument111 pagesAutomanuales1000100% (1)

- Exoplanet Observing For AmateursDocument170 pagesExoplanet Observing For AmateursMika'il al-AlmanyNo ratings yet

- Meade DSI Pro II FixDocument15 pagesMeade DSI Pro II Fixmanuales1000No ratings yet

- Baranec SmallApertureLaserAdaptiveOptics TEC OIRDocument10 pagesBaranec SmallApertureLaserAdaptiveOptics TEC OIRmanuales1000No ratings yet

- Nasa Technical Report TR R-277Document68 pagesNasa Technical Report TR R-277ConspiratorialPlanet.comNo ratings yet

- The Size, Shape, Density, and Albedo of Ceres From Its Occultation of BD +8 Deg 471Document37 pagesThe Size, Shape, Density, and Albedo of Ceres From Its Occultation of BD +8 Deg 471manuales1000No ratings yet

- Adaptative OpticsDocument11 pagesAdaptative Opticsmanuales1000No ratings yet

- PROYECTO1Document3 pagesPROYECTO1manuales1000No ratings yet

- SAS 2012 ProceedingsDocument246 pagesSAS 2012 Proceedingsmanuales1000No ratings yet

- Megrez 80Document12 pagesMegrez 80manuales1000No ratings yet

- (EMERSON) Loop CheckingDocument29 pages(EMERSON) Loop CheckingDavid Chagas80% (5)

- 10 Consulting Frameworks To Learn For Case Interview - MConsultingPrepDocument25 pages10 Consulting Frameworks To Learn For Case Interview - MConsultingPrepTushar KumarNo ratings yet

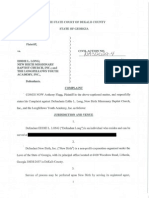

- Anthony Flagg's Complaint Against Eddie LongDocument23 pagesAnthony Flagg's Complaint Against Eddie LongRod McCullom100% (1)

- Bid Document PDFDocument125 pagesBid Document PDFAzharudin ZoechnyNo ratings yet

- MT R 108 000 0 000000-0 DHHS B eDocument68 pagesMT R 108 000 0 000000-0 DHHS B eRafal WojciechowskiNo ratings yet

- Successful Organizational Change FactorsDocument13 pagesSuccessful Organizational Change FactorsKenneth WhitfieldNo ratings yet

- Axtraxng™: Networked Access Control Management Software V27.XDocument2 pagesAxtraxng™: Networked Access Control Management Software V27.XChiluvuri VarmaNo ratings yet

- Nammo Bulletin 2014Document13 pagesNammo Bulletin 2014Dmitry Karpov0% (1)

- Lesson 3 - Materials That Undergo DecayDocument14 pagesLesson 3 - Materials That Undergo DecayFUMIKO SOPHIA67% (6)

- Spain Price List With VatDocument3 pagesSpain Price List With Vatsanti647No ratings yet

- 2 - Nested IFDocument8 pages2 - Nested IFLoyd DefensorNo ratings yet

- Terminología Sobre Reducción de Riesgo de DesastresDocument43 pagesTerminología Sobre Reducción de Riesgo de DesastresJ. Mario VeraNo ratings yet

- DEFCON ManualDocument13 pagesDEFCON Manualbuyvalve100% (1)

- 16 BPI V FernandezDocument1 page16 BPI V FernandezAngelica Joyce BelenNo ratings yet

- HetNet Solution Helps Telcos Improve User Experience & RevenueDocument60 pagesHetNet Solution Helps Telcos Improve User Experience & RevenuefarrukhmohammedNo ratings yet

- Rust Experimental v2017 DevBlog 179 x64 #KnightsTableDocument2 pagesRust Experimental v2017 DevBlog 179 x64 #KnightsTableIngrutinNo ratings yet

- Sugar Milling Contract DisputeDocument3 pagesSugar Milling Contract DisputeRomy IanNo ratings yet

- MMPX 403 Parametr ListDocument30 pagesMMPX 403 Parametr ListOğuz Kağan ÖkdemNo ratings yet

- Library Management System (Final)Document88 pagesLibrary Management System (Final)Ariunbat Togtohjargal90% (30)

- Sap Ewm - Erp Initial SetupDocument3 pagesSap Ewm - Erp Initial SetupVAIBHAV PARAB80% (5)

- DX133 DX Zero Hair HRL Regular 200 ML SDS 16.04.2018 2023Document6 pagesDX133 DX Zero Hair HRL Regular 200 ML SDS 16.04.2018 2023Welissa ChicanequissoNo ratings yet

- Amended ComplaintDocument38 pagesAmended ComplaintDeadspinNo ratings yet

- Parasim CADENCEDocument166 pagesParasim CADENCEvpsampathNo ratings yet

- Whats The Average 100 M Time For An Olympics - Google SearchDocument1 pageWhats The Average 100 M Time For An Olympics - Google SearchMalaya KnightonNo ratings yet

- A Dream Takes FlightDocument3 pagesA Dream Takes FlightHafiq AmsyarNo ratings yet

- TransformerDocument50 pagesTransformerبنیاد پرست100% (8)

- BC Specialty Foods DirectoryDocument249 pagesBC Specialty Foods Directoryjcl_da_costa6894No ratings yet

- Design of Shear Walls Using ETABSDocument97 pagesDesign of Shear Walls Using ETABSYeraldo Tejada Mendoza88% (8)

- Czar Alexander IIDocument11 pagesCzar Alexander IIMalachy ChinweokwuNo ratings yet

- Draft of The English Literature ProjectDocument9 pagesDraft of The English Literature ProjectHarshika Verma100% (1)