Unit-III/ Computer Networking Truba College of Science & Tech.

Bhopal

Medium Access Control: In any broadcast network, the key issue is how to determine who gets to use the channel when there is competition for it. To make this point clearer, consider a conference call in which six people, on six different telephones, are all connected so that each one can hear and talk to all the others. It is very likely that when one of them stops speaking, two or more will start talking at once, leading to chaos. In a face-to-face meeting, chaos is avoided by external means; for example, people raise their hands to request permission to speak. Where only a single channel is available, determining who should go next is much harder. Broadcast channels are sometimes referred to as multi-access channels or random access channels.

LAN and WAN

i) Static Channel Allocation in LAN and MAN

ii) Dynamic Channel Allocation in LAN and MAN

As the data link layer is overloaded, it is split into the MAC and LLC sublayers. The MAC sublayer is the bottom part of the data link layer. Medium access control is often used as a synonym for multiple access protocol, since the MAC sublayer provides the protocol and control mechanisms that are required for a certain channel access method. This unit deals with broadcast networks and their protocols.

In any broadcast network, the key issue is how to determine who gets to use the channel when there is competition for it. When only a single channel is available, determining who should get access to the channel for transmission is a very complex task. Many protocols for solving the problem are known, and they form the contents of this unit.

This unit provides an insight into channel access control mechanisms that make it possible for several terminals or network nodes to communicate within a multipoint network. The MAC sublayer is especially important in local area networks (LANs), many of which use a multi-access channel as the basis for communication. WANs, in contrast, use point-to-point links.

To get a head start, let us define LANs and WANs.

Definition: A Local Area Network (LAN) is a network of systems spread over a small geographical area, for example a network of computers within a building or a small campus. A LAN is usually owned by the same organization within which it is set up. It has high data rates (the rates at which data are transferred from one system to another), on the scale of Mbps, because the systems it spans are physically close to each other.

Definition: A Wide Area Network (WAN) typically spans a set of countries and, because of the distances involved, has lower data rates, traditionally less than 1 Mbps. A WAN may be owned by multiple organizations, since the distance it spans is spread over several countries.

i) Static Channel Allocation in LAN and MAN

Prepared By: Ms. Nandini Sharma (CSE Deptt.) Page 1


Before turning to the methods of channel allocation, we need to understand the problem they address, which is described below:

The channel allocation problem

We can classify channels as static and dynamic. A static channel is one where the number of users is stable and the traffic is not bursty. When the number of users on the channel keeps varying, the channel is considered dynamic; the traffic on such channels also keeps varying. For example, in most computer systems the data traffic is extremely bursty: peak-to-mean traffic ratios of 1000:1 are common.

· Static channel allocation

The usual way of allocating a single channel among multiple users is frequency division multiplexing (FDM). If there are N users, the bandwidth is split into N equal-sized portions, one per user. FDM is a simple and efficient technique for a small number of users. However, when the number of senders is large and continuously varying, or the traffic is bursty, FDM is not suitable.

The same arguments that apply to FDM also apply to time division multiplexing (TDM). Since none of the static channel allocation methods works well with bursty traffic, we explore dynamic channel allocation.
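The weakness of static allocation can be made concrete with a standard queueing argument. The model below is an assumption brought in for illustration and is not part of these notes: frames arrive at a total rate of λ frames/s (Poisson arrivals), frame lengths are exponentially distributed with mean 1/μ bits, and the channel has capacity C bits/s, so the shared channel behaves like an M/M/1 queue.

```latex
% Mean delay T on one shared channel of capacity C (M/M/1 queue):
T = \frac{1}{\mu C - \lambda}

% FDM: N subchannels, each with capacity C/N and arrival rate \lambda/N:
T_{\mathrm{FDM}} = \frac{1}{\mu (C/N) - (\lambda/N)}
                 = \frac{N}{\mu C - \lambda}
                 = N\,T
```

Under these assumptions, splitting the channel statically into N pieces makes the mean delay N times worse than letting all frames queue for the whole channel, which is one way to see why FDM and TDM handle bursty traffic poorly.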

· Dynamic channel allocation in LANs and MANs

Before discussing the channel allocation problem, that is, the multiple access methods, we state the assumptions we are using so that the analysis becomes simple.

Assumptions:

1. The Station Model:

The model consists of N independent stations (users). Stations are sometimes called terminals. The probability of a frame being generated in an interval of length Δt is λΔt, where λ is a constant that defines the arrival rate of new frames. Once a frame has been generated, the station is blocked and does nothing until the frame has been successfully transmitted.

2. Single Channel Assumption:

A single channel is available for all communication. All stations can transmit on it and all can receive from it. As far as the hardware is concerned, all stations are equivalent, although the software or protocols used may assign priorities to them.

3. Collisions:

If two frames are transmitted simultaneously, they overlap in time and the resulting signal is distorted or garbled. This event is called a collision. We assume that all stations can detect collisions. A collided frame must be retransmitted later. We assume that there are no errors other than those caused by collisions.


4. Continuous Time

By the continuous time assumption we mean that frame transmission on the channel can begin at any instant. There is no master clock dividing time into discrete intervals.

5. Slotted Time

Under the slotted time assumption, time is divided into discrete slots or intervals. Frame transmission on the channel begins only at the start of a slot. A slot may contain 0, 1, or more frames, corresponding to an idle slot, a successful transmission, or a collision, respectively.

6. Carrier Sense

Using this facility, the stations can sense the channel, i.e. they can tell if the channel is in use before trying to use it. If the channel is sensed as busy, no station will attempt to transmit on it until it goes idle.

7. No Carrier Sense:

This assumption implies that this facility is not available to the stations, i.e. the stations cannot tell if the channel is in use before trying to use it. They just go ahead and transmit. Only after transmitting the frame do they determine whether the transmission was successful or not.

The first assumption states that the stations are independent and that work is generated at a constant rate. It also assumes that each station has only one program or user, so while the station is blocked no new work is generated. The single channel assumption is the heart of this station model and this unit. The collision assumption is also basic. Two alternative assumptions about time are discussed; for a given system only one of them holds, i.e. the channel is either continuous-time or slotted. Likewise, a channel may or may not be sensed by the stations. Generally, stations on wired LANs can sense the channel, but wireless networks cannot sense it effectively. Stations on wired carrier-sense networks can also terminate their transmission prematurely if they discover a collision, whereas in wireless networks collision detection is rarely done.

ALOHA Protocols

In the 1970s, Norman Abramson and his colleagues at the University of Hawaii devised a new and elegant method to solve the channel allocation problem. Their work, which has been extended by many researchers since then, is called the ALOHA system and uses ground-based radio broadcasting. The basic idea is applicable to any system in which uncoordinated users are competing for the use of a shared channel.

Pure or Un-slotted Aloha

The ALOHA network was created at the University of Hawaii in 1970 under the leadership of Norman Abramson. The Aloha protocol is an OSI layer 2 protocol for LANs with a broadcast topology.


The first version of the protocol was basic:

· If you have data to send, send the data

· If the message collides with another transmission, try resending it later

Figure 3.1: Pure ALOHA

Figure 3.2: Vulnerable period for the node: frame

The Aloha protocol is an OSI layer 2 protocol used for LANs. A user is assumed to be always in one of two states: typing or waiting. The station transmits a frame and checks the channel to see if the transmission was successful. If so, the user sees the reply and continues to type. If the frame transmission is not successful, the user waits and the frame is retransmitted over and over until it has been successfully sent.

Let the frame time denote the amount of time needed to transmit a standard fixed-length frame. We assume that there is an infinite population of users generating new frames according to a Poisson distribution with a mean of N frames per frame time.

· If N > 1, the users are generating frames at a higher rate than the channel can handle, and nearly every frame will suffer a collision.

· Hence, for reasonable throughput, the range for N is 0 < N < 1.


· Whenever there are collisions, the retransmitted frames are added to the new frames offered for transmission.

Let us consider the probability of k transmission attempts per frame time, where the attempts include new frames as well as retransmissions. This total traffic is also assumed to be Poisson, with mean G per frame time, so that G ≥ N.

· At low load (N ≈ 0) there are few collisions and hence few retransmissions, so G ≈ N.

· At high load there are many collisions and retransmissions, and hence G > N.

· Under all loads, the throughput S is the offered load G times the probability $P_0$ of a successful transmission:

$S = G P_0$

The probability that k frames are generated during a given frame time is given by the Poisson distribution

$P[k] = \frac{G^k e^{-G}}{k!}$

so the probability of zero frames in one frame time is $e^{-G}$. A frame is vulnerable for two frame times (figure 3.2), and the mean number of frames generated in an interval two frame times long is 2G. The Poisson parameter therefore becomes 2G, and the probability that no other frame is generated during the vulnerable period is

$P_0 = e^{-2G}$

Hence the throughput is

$S = G P_0 = G e^{-2G}$

The maximum occurs at G = 0.5, giving S = 1/(2e) ≈ 0.184, i.e. 18.4%. This means that about 81.6% of the total available bandwidth is essentially wasted, on collisions or idle time.
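The value G = 0.5 quoted above can be checked by differentiating the pure ALOHA throughput expression with respect to the offered load. This is a short worked step added for illustration, using only the symbols S and G already defined.

```latex
S(G) = G e^{-2G}

\frac{dS}{dG} = e^{-2G} - 2G e^{-2G} = (1 - 2G)\, e^{-2G}

\frac{dS}{dG} = 0 \;\Longrightarrow\; G = \tfrac{1}{2},
\qquad
S_{\max} = \tfrac{1}{2} e^{-1} = \frac{1}{2e} \approx 0.184
```

The derivative is positive for G < 1/2 and negative for G > 1/2, so this stationary point is the maximum.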

Slotted or Impure ALOHA

An improvement to the original Aloha protocol was Slotted Aloha. In 1972, Roberts published a method to double the throughput of pure ALOHA by using discrete time slots. His proposal was to divide time into discrete slots, each corresponding to one frame time. This approach requires the users to agree on the slot boundaries. To achieve synchronization, one special station emits a pip at the start of each interval, like a clock. The maximum throughput of slotted ALOHA thus increases to 36.8%.

The throughput for pure and slotted ALOHA systems is shown in figure 3.3. A station can send only at the beginning of a time slot, and thus collisions are reduced: the vulnerable period shrinks from two frame times to one. The mean number of arrivals during the vulnerable period is therefore G, so the Poisson parameter becomes G, giving $P_0 = e^{-G}$ and $S = G e^{-G}$. The maximum throughput, $1/e \approx 0.368$, is reached for G = 1.
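The two throughput curves can be tabulated with a few lines of Python. This is a minimal sketch: it uses S = G·e^(-2G) for pure ALOHA and S = G·e^(-G) for slotted ALOHA (the expression implied by a Poisson parameter of G and a maximum of 36.8% at G = 1); the function names are chosen here for illustration.

```python
import math


def pure_aloha_throughput(g):
    """Throughput S = G * e^(-2G): vulnerable period of two frame times."""
    return g * math.exp(-2 * g)


def slotted_aloha_throughput(g):
    """Throughput S = G * e^(-G): vulnerable period of one frame time."""
    return g * math.exp(-g)


if __name__ == "__main__":
    # Print both throughputs for a few offered loads G (attempts per frame time).
    for g in (0.25, 0.5, 1.0, 2.0):
        print(f"G={g:4.2f}  pure={pure_aloha_throughput(g):.3f}  "
              f"slotted={slotted_aloha_throughput(g):.3f}")
```

Evaluating the functions at G = 0.5 and G = 1 reproduces the 18.4% and 36.8% maxima, and shows that the slotted peak is exactly twice the pure peak.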


Figure 3.3: Throughput versus offered load traffic

With Slotted Aloha, a centralized clock sends out small clock tick packets to the outlying

stations. Outlying stations are allowed to send their packets immediately after receiving a clock

tick. If there is only one station with a packet to send, this guarantees that there will never be a

collision for that packet. On the other hand if there are two stations with packets to send, this

algorithm guarantees that there will be a collision, and the whole of the slot period up to the

next clock tick is wasted. With some mathematics, it is possible to demonstrate that this

protocol does improve the overall channel utilization, by reducing the probability of collisions

by a half.
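The slot-by-slot behaviour described above (no sender: idle slot, one sender: success, two or more: collision) can also be checked by simulation. The sketch below is an illustration under stated assumptions, not a model of any real network: the number of transmission attempts in each slot is drawn from a Poisson distribution with mean G, and `poisson_sample` and `simulate_slotted_aloha` are names invented here.

```python
import math
import random


def poisson_sample(rng, mean):
    """Draw one Poisson-distributed integer (Knuth's multiplication method)."""
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def simulate_slotted_aloha(offered_load, slots, seed=1):
    """Return the fraction of slots that carry exactly one frame."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(slots):
        attempts = poisson_sample(rng, offered_load)
        if attempts == 1:          # 0 = idle slot, 2 or more = collision
            successes += 1
    return successes / slots


if __name__ == "__main__":
    for load in (0.5, 1.0, 2.0):
        measured = simulate_slotted_aloha(load, 100_000)
        print(f"G={load}: simulated S={measured:.3f}, "
              f"formula G*e^-G={load * math.exp(-load):.3f}")
```

With enough slots the simulated fraction of successful slots should settle close to G·e^(-G), peaking near G = 1.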

It should be noted that Aloha's characteristics are still not much different from those experienced today by Wi-Fi and similar contention-based systems that have no carrier sense capability. There is a certain amount of inherent inefficiency in these systems. It is typical to see the throughput of these types of networks break down significantly as the number of users and message burstiness increase. For these reasons, applications which need highly deterministic load behavior often use token-passing schemes (such as token ring) instead of contention systems.

For instance, ARCNET, a token-passing network, is very popular in embedded applications. Nonetheless, contention-based systems also have significant advantages, including ease of management and speed in initial communication. Slotted Aloha is used on low-bandwidth tactical satellite communications networks by the US military, subscriber-based satellite communications networks, and contactless RFID technologies.

3.3 LAN Protocols

With slotted ALOHA, the best channel utilization that can be achieved is 1/e. This is hardly surprising since, with stations transmitting at will without paying attention to what other stations are doing, there are bound to be many collisions. In LANs, it is possible for stations to detect what other stations are doing and adapt their behavior accordingly. These networks can achieve a better utilization than 1/e.

CSMA Protocols:

Protocols in which stations listen for a carrier (a transmission) and act accordingly are

called Carrier Sense Protocols. "Multiple Access" describes the fact that multiple nodes

send and receive on the medium. Transmissions by one node are generally received by all


other nodes using the medium. Carrier Sense Multiple Access (CSMA) is

a probabilistic Media Access Control (MAC) protocol in which a node verifies the absence of

other traffic before transmitting on a shared physical medium, such as an electrical bus, or a

band of electromagnetic spectrum.

The following three protocols discuss the various implementations of the above discussed

concepts:

i) Protocol 1. 1-persistent CSMA:

When a station has data to send, it first listens to the channel to see if anyone else is transmitting. If the channel is busy, the station waits until it becomes idle. When the station detects an idle channel, it transmits a frame. If a collision occurs, the station waits a random amount of time and starts all over again.

The protocol is so called because the station transmits with a probability of 1 whenever it finds the channel idle.

ii) Protocol 2. Non-persistent CSMA:

In this protocol, a conscious attempt is made to be less greedy than in the 1-persistent CSMA protocol. Before sending, a station senses the channel. If no one else is sending, the station begins doing so itself. However, if the channel is already in use, the station does not continuously sense the channel for the purpose of seizing it immediately upon detecting the end of the previous transmission. Instead, it waits for a random period of time and then repeats the algorithm. Intuitively, this algorithm should lead to better channel utilization but longer delays than 1-persistent CSMA.

iii) Protocol 3. p - persistent CSMA

It applies to slotted channels and the working of this protocol is given below:

When a station becomes ready to send, it senses the channel. If it is idle, it transmits with a

probability p. With a probability of q = 1 – p, it defers until the next slot. If that slot is also

idle, it either transmits or defers again, with probabilities p and q. This process is repeated until

either the frame has been transmitted or another station has begun transmitting. In the latter

case, it acts as if there had been a collision. If the station initially senses the channel busy, it

waits until the next slot and applies the above algorithm.
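The difference between the three CSMA variants comes down to what a ready station does at the moment it senses the channel. The following is a minimal sketch of that single decision; the function name, the action labels and the default value of p are all assumptions made for illustration, and collision handling is left out.

```python
import random


def csma_decision(strategy, channel_idle, p=0.1, rng=random):
    """Return the action a ready station takes after sensing the channel.

    Actions (labels invented for this sketch):
      "transmit"      send the frame now
      "keep_sensing"  keep listening and send as soon as the channel is idle
      "wait_random"   stop sensing, sleep a random time, then sense again
      "defer_slot"    wait for the next slot and run the rule again
    """
    if strategy == "1-persistent":
        # Transmits with probability 1 as soon as the channel is idle.
        return "transmit" if channel_idle else "keep_sensing"
    if strategy == "non-persistent":
        # Less greedy: a busy channel is not watched continuously.
        return "transmit" if channel_idle else "wait_random"
    if strategy == "p-persistent":
        # Slotted channel: send with probability p, defer with q = 1 - p.
        if not channel_idle:
            return "defer_slot"
        return "transmit" if rng.random() < p else "defer_slot"
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    for name in ("1-persistent", "non-persistent", "p-persistent"):
        print(name, "| idle:", csma_decision(name, True),
              "| busy:", csma_decision(name, False))
```

A full simulation would call this rule once per sensing step and add the random wait after a collision; only the sensing decision is shown here.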

CSMA/CD Protocol

In computer networking, Carrier Sense Multiple Access with Collision Detection (CSMA/CD) is a network control protocol in which a carrier sensing scheme is used. A transmitting station that detects another signal while transmitting a frame stops transmitting that frame, transmits a jam signal, and then waits for a random time interval. The random time interval, also known as the "backoff delay", is determined using the truncated binary exponential backoff algorithm. This delay is used before trying to send that frame


again. CSMA/CD is a modification of pure Carrier Sense Multiple Access (CSMA).

Collision detection is used to improve CSMA performance by terminating transmission as

soon as a collision is detected, and reducing the probability of a second collision on retry.

Methods for collision detection are media dependent, but on an electrical bus such as Ethernet,

collisions can be detected by comparing transmitted data with received data. If they differ,

another transmitter is overlaying the first transmitter’s signal (a collision), and transmission

terminates immediately. The collision recovery algorithm is the truncated binary exponential backoff algorithm, which determines the waiting time before retransmission. If the number of collisions for a frame reaches 16, the frame is considered unrecoverable.
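Truncated binary exponential backoff can be sketched in a few lines. The limit of 16 collisions comes from the text; the other two constants are assumptions taken from classic 10 Mbit/s Ethernet and are not stated in these notes: the random range stops doubling after 10 collisions, and one slot time is 51.2 microseconds. The function name is hypothetical.

```python
import random

ATTEMPT_LIMIT = 16     # from the text: give up once collisions reach 16
BACKOFF_LIMIT = 10     # assumption: the range stops doubling after 10 collisions
SLOT_TIME_US = 51.2    # assumption: slot time of classic 10 Mbit/s Ethernet


def backoff_slots(collisions, rng=random):
    """Return how many slot times to wait after the given collision count.

    `collisions` is the number of collisions this frame has suffered so far
    (1 for the first collision). Returns None when the frame is abandoned.
    """
    if collisions >= ATTEMPT_LIMIT:
        return None
    exponent = min(collisions, BACKOFF_LIMIT)
    # Pick uniformly from 0 .. 2^exponent - 1 slot times.
    return rng.randrange(2 ** exponent)


if __name__ == "__main__":
    for n in (1, 2, 3, 10, 15, 16):
        slots = backoff_slots(n)
        if slots is None:
            print(f"collision {n}: frame abandoned")
        else:
            print(f"collision {n}: wait {slots} slots "
                  f"({slots * SLOT_TIME_US:.1f} microseconds)")
```

Doubling the range after each collision spreads the retries of competing stations over a longer interval, which is what lowers the chance of a second collision on retry.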

CSMA/CD can be in any one of the following three states, as shown in figure 3.4:

1. Contention period

2. Transmission period

3. Idle period

Figure 3.4: States of CSMA / CD: Contention, Transmission, or Idle

A jam signal is sent which will cause all transmitters to back off by random intervals, reducing

the probability of a collision when the first retry is attempted. CSMA/CD is a layer 2 protocol

in the OSI model. Ethernet is the classic CSMA/CD protocol.

Collision Free Protocols

Although collisions do not occur with CSMA/CD once a station has unambiguously seized the channel, they can still occur during the contention period. These collisions adversely affect system performance, especially when the cable is long and the frames are short. In addition, CSMA/CD is not universally applicable. In this section, we examine some protocols that resolve the contention for the channel without any collisions at all, not even during the contention period.

In the protocols to be described, we assume that there exist exactly N stations, each with a unique address from 0 to N-1 "wired" into it. We assume that the propagation delay is negligible.


i) A Bit Map Protocol

In this method, each contention period consists of exactly N slots. If station 0 has a frame to

send, it transmits a 1 bit during the zeroth slot. No other station is allowed to transmit during

this slot. Regardless of what station 0 is doing, station 1 gets the opportunity to transmit a 1

during slot 1, but only if it has a frame queued. In general, station j may announce that it has a

frame to send by inserting a 1 bit into slot j. After all N stations have passed by, each station

has complete knowledge of which stations wish to transmit. At that point, they begin

transmitting in a numerical order.

Since everyone agrees on who goes next, there will never be any collisions. After the last ready

station has transmitted its frame, an event all stations can monitor, another N bit contention

period is begun. If a station becomes ready just after its bit slot has passed by, it is out of luck

and must remain silent until every station has had a chance and the bit map has come around

again.

Protocols like this in which the desire to transmit is broadcast before the actual transmission

are called Reservation Protocols.
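One round of the bit-map protocol can be sketched as follows. This is an illustrative sketch only: `bitmap_round` is a name invented here, and the list `ready` stands in for "station j has a frame queued".

```python
def bitmap_round(ready):
    """Run one contention period plus transmission period.

    `ready[j]` is True when station j has a frame queued.
    Returns (bitmap, order): the N reservation bits and the order in
    which stations then transmit their frames.
    """
    # Contention period: station j writes a 1 into slot j if it has a frame.
    bitmap = [1 if has_frame else 0 for has_frame in ready]
    # Transmission period: stations that reserved a slot send in numerical order.
    order = [station for station, bit in enumerate(bitmap) if bit == 1]
    return bitmap, order


if __name__ == "__main__":
    bitmap, order = bitmap_round([False, True, False, True, True])
    print("reservation bits:", bitmap)
    print("transmission order:", order)
```

Because every station sees the same N bits, all of them compute the same transmission order, which is why no collisions can occur.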

ii) Binary Countdown

A problem with the basic bit-map protocol is that the overhead is 1 bit per station, so it does not scale well to networks with thousands of stations. We can do better by using binary station addresses.

A station wanting to use the channel now broadcasts its address as a binary bit string, starting

with the high-order bit. All addresses are assumed to be of the same length. The bits in each

address position from different stations are Boolean ORed together. We call this protocol

as Binary countdown, which was used in Datakit. It implicitly assumes that the transmission

delays are negligible so that all stations see asserted bits essentially simultaneously.

To avoid conflicts, an arbitration rule must be applied: as soon as a station sees that a high-

order bit position that is 0 in its address has been overwritten with a 1, it gives up.

Example: If stations 0010, 0100, 1001, and 1010 are all trying to get the channel for transmission, in the first bit time they transmit 0, 0, 1, and 1 respectively. These are ORed together to form a 1. Stations 0010 and 0100 see the 1 and know that a higher numbered station is competing for the channel, so they give up for the current round. Stations 1001 and 1010 continue.

The next bit is 0, and both stations continue. The next bit is 1, so station 1001 gives up. The winner is station 1010 because it has the highest address. After winning the bidding, it may now transmit a frame, after which another bidding cycle starts.

This protocol has the property that higher numbered stations have a higher priority than lower

numbered stations, which may be either good or bad depending on the context.
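The arbitration rule can be traced with a short sketch that replays the example above. The function name `binary_countdown` and the representation of addresses as integers are assumptions made for illustration; the list of addresses must be non-empty and the addresses unique.

```python
def binary_countdown(addresses, width):
    """Return the address that wins one bidding cycle.

    `addresses` are the stations that want the channel; `width` is the
    common address length in bits.
    """
    contenders = list(addresses)
    # Bits are sent starting with the high-order bit.
    for bit in range(width - 1, -1, -1):
        # The channel ORs together the bits sent by all remaining contenders.
        channel_bit = 0
        for address in contenders:
            channel_bit |= (address >> bit) & 1
        if channel_bit == 1:
            # A station that sent 0 but sees 1 on the channel gives up.
            contenders = [a for a in contenders if (a >> bit) & 1]
    return contenders[0]


if __name__ == "__main__":
    winner = binary_countdown([0b0010, 0b0100, 0b1001, 0b1010], width=4)
    print(f"winner: {winner:04b}")
```

The winner is always the highest address among the contenders, which is the priority property noted above.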

iii) Limited Contention Protocols

Until now we have considered two basic strategies for channel acquisition in a cable network:


contention, as in CSMA, and collision-free methods. Each strategy can be rated by how well it does with respect to two important performance measures: delay at low load and channel efficiency at high load.

Under conditions of light load, contention (i.e. pure or slotted ALOHA) is preferable due to its

low delay. As the load increases, contention becomes increasingly less attractive, because the

overhead associated with channel arbitration becomes greater. Just the reverse is true for

collision free protocols. At low load, they have high delay, but as the load increases, the

channel efficiency improves.

It would be more beneficial if we could combine the best features of contention and collision

free protocols and arrive at a protocol that uses contention at low load to provide low delay,

but uses a collision free technique at high load to provide good channel efficiency. Such

protocols can be called Limited Contention protocols.

iv) Adaptive Tree Walk Protocol

A simple way of performing the necessary channel assignment is to use the algorithm devised by the US Army for testing soldiers for syphilis during World War II. The Army took a blood sample from each of N soldiers. A portion of each sample was poured into a single test tube, and this mixed sample was then tested for antibodies. If none were found, all the soldiers in the group were declared healthy. If antibodies were present, two new mixed samples were prepared, one from soldiers 1 through N/2 and one from the rest. The process was repeated recursively until the infected soldiers were identified.

For the computerized version of this algorithm, let us assume that stations are arranged as the leaves of a binary tree, as shown in figure 3.5 below:

Figure 3.5: A tree for four stations

In the first contention slot following a successful frame transmission, slot 0, all stations are permitted to try to acquire the channel. If one of them does so, fine. If there is a collision, then during slot 1 only those stations falling under node 2 in the tree may compete. If one of them acquires the channel, the slot following the frame is reserved for those stations under node 3. If, on the other hand, two or more stations under node 2 want to transmit, there will be a collision during slot


1, in which case it is node 4’s turn during slot 2.

In essence, if a collision occurs during slot 0, the entire tree is searched, depth first to locate all

ready stations. Each bit slot is associated with some particular node in a tree. If a collision

occurs, the search continues recursively with the node’s left and right children. If a bit slot is

idle or if only one station transmits in it, the searching of its node can stop because all ready

stations have been located.

When the load on the system is heavy, it is hardly worth the effort to dedicate slot 0 to node 1,

because that makes sense only in the unlikely event that precisely one station has a frame to

send.

At what level in the tree should the search begin? Clearly, the heavier the load, the farther

down the tree the search should begin.
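The depth-first search over the tree can be sketched as a recursive probe. The sketch makes several assumptions that are not in the text: stations are numbered 0 to N-1 with N a power of two, tree nodes are numbered so that node n has children 2n and 2n+1 (node 1 is the root, matching the use of nodes 2, 3 and 4 above), and the search always starts at the root. The function name `tree_walk` is hypothetical.

```python
def tree_walk(ready, num_stations):
    """Resolve one contention by searching the station tree depth first.

    `ready` is the set of station numbers that have a frame to send.
    Returns a list of (node, outcome, station) tuples, one per slot, where
    outcome is "idle", "success" or "collision".
    """
    log = []

    def probe(node, low, high):
        # Stations low .. high-1 are the leaves under this node.
        contenders = [s for s in range(low, high) if s in ready]
        if not contenders:
            log.append((node, "idle", None))
        elif len(contenders) == 1:
            log.append((node, "success", contenders[0]))
        else:
            log.append((node, "collision", None))
            middle = (low + high) // 2
            probe(2 * node, low, middle)        # left child first
            probe(2 * node + 1, middle, high)   # then right child

    probe(1, 0, num_stations)
    return log


if __name__ == "__main__":
    for slot, entry in enumerate(tree_walk({2, 3}, num_stations=4)):
        print("slot", slot, entry)
```

Starting the search lower in the tree under heavy load, as the text suggests, would correspond to calling `probe` on the nodes of a deeper level instead of on node 1; that refinement is not implemented here.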

3.4 IEEE 802 standards for LANs

IEEE has standardized a number of LANs and MANs under the name IEEE 802. A few of the standards are listed in figure 3.6. The most important of the survivors are 802.3 (Ethernet) and 802.11 (wireless LAN). These two standards have different physical layers and different MAC sublayers but converge on the same logical link control sublayer, so they present the same interface to the network layer.

IEEE No     Name        Title
802.3       Ethernet    CSMA/CD Networks (Ethernet)
802.4       -           Token Bus Networks
802.5       -           Token Ring Networks
802.6       -           Metropolitan Area Networks
802.11      WiFi        Wireless Local Area Networks
802.15.1    Bluetooth   Wireless Personal Area Networks
802.15.4    ZigBee      Wireless Sensor Networks
802.16      WiMAX       Wireless Metropolitan Area Networks

Figure 3.6: List of IEEE 802 Standards for LAN and MAN

Ethernets

Ethernet was originally based on the idea of computers communicating over a shared coaxial

cable acting as a broadcast transmission medium. The methods used show some similarities to

radio systems, although there are major differences, such as the fact that it is much easier to

detect collisions in a cable broadcast system than a radio broadcast. The common cable

providing the communication channel was likened to the ether and it was from this reference

the name "Ethernet" was derived.

From this early and comparatively simple concept, Ethernet evolved into the complex

networking technology that today powers the vast majority of local computer networks. The

coaxial cable was later replaced with point-to-point links connected together by hubs and/or


switches in order to reduce installation costs, increase reliability, and enable point-to-point management and troubleshooting. StarLAN was the first step in the evolution of Ethernet from a coaxial cable bus to a hub-managed, twisted-pair network.

Above the physical layer, Ethernet stations communicate by sending each other data packets,

small blocks of data that are individually sent and delivered. As with other IEEE 802 LANs,

each Ethernet station is given a single 48-bit MAC address, which is used both to specify the

destination and the source of each data packet. Network interface cards (NICs) or chips

normally do not accept packets addressed to other Ethernet stations. Adapters generally come

programmed with a globally unique address, but this can be overridden, either to avoid an

address change when an adapter is replaced, or to use locally administered addresses.
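The addressing rules in this paragraph can be illustrated with a small sketch. The helper names are invented for this example. Two facts beyond the text are relied on: the second-least-significant bit of the first octet of a MAC address marks it as locally administered, and the all-ones address is the broadcast address. Multicast addresses and promiscuous mode are deliberately ignored.

```python
BROADCAST = b"\xff" * 6


def parse_mac(text):
    """Convert 'aa:bb:cc:dd:ee:ff' (or with dashes) into 6 raw bytes."""
    octets = [int(part, 16) for part in text.replace("-", ":").split(":")]
    if len(octets) != 6 or any(not 0 <= octet <= 255 for octet in octets):
        raise ValueError(f"expected six octets, got {text!r}")
    return bytes(octets)


def is_locally_administered(mac):
    """True if the address was assigned locally rather than burned in."""
    return bool(mac[0] & 0x02)


def accepts(nic_mac, destination):
    """Simplified NIC filter: accept own address and broadcast only."""
    return destination == nic_mac or destination == BROADCAST


if __name__ == "__main__":
    nic = parse_mac("00:1a:2b:3c:4d:5e")
    print("locally administered:", is_locally_administered(nic))
    print("accepts own address:", accepts(nic, nic))
    print("accepts other station:", accepts(nic, parse_mac("00:1a:2b:3c:4d:5f")))
```

The filter in `accepts` corresponds to the statement that a network interface normally does not accept packets addressed to other stations.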

The most widely used early Ethernets ran at a data rate of 10 Mbps. Table 3.1 gives the medium used, the maximum segment length, the number of nodes per segment, and the advantages of each.

Table 3.1 Different 10 Mbps Ethernets used

Name       Cable Type     Max Segment Length   Nodes per Segment   Advantages
10Base5    Thick coax     500 m                100                 Original cable; now obsolete
10Base2    Thin coax      185 m                30                  No hub needed
10Base-T   Twisted pair   100 m                1024                Cheapest system
10Base-F   Fibre optics   2000 m               1024                Best between buildings

Fast Ethernet

Fast Ethernet is a collective term for a number of Ethernet standards that carry traffic at the nominal rate of 100 Mbit/s, against the original Ethernet speed of 10 Mbit/s. Of the 100 megabit Ethernet standards, 100BASE-TX is by far the most common and is supported by the vast majority of Ethernet hardware currently produced. Full-duplex Fast Ethernet is sometimes referred to as "200 Mbit/s", though this is somewhat misleading, as that level of improvement will only be achieved if traffic patterns are symmetrical. Fast Ethernet was introduced in 1995 and remained the fastest version of Ethernet for three years before being superseded by Gigabit Ethernet.

A fast Ethernet adaptor can be logically divided into a medium access controller (MAC) which

deals with the higher level issues of medium availability and a physical layer interface (PHY).

The MAC may be linked to the PHY by a 4 bit 25 MHz synchronous parallel interface known

as MII. Repeaters (hubs) are also allowed and connect to multiple PHYs for their different

interfaces.
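The MII figures quoted above are consistent with the 100 Mbit/s line rate, as a one-line check shows. This is simple arithmetic added for illustration, assuming one 4-bit transfer per clock cycle in a given direction.

```latex
4\ \text{bits/cycle} \times 25 \times 10^{6}\ \text{cycles/s}
  = 100 \times 10^{6}\ \text{bit/s}
  = 100\ \text{Mbit/s}
```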

· 100BASE-T is any of several Fast Ethernet standards for twisted pair cables, including:


· 100BASE-TX (100 Mbit/s over two-pair Cat5 or better cable),

· 100BASE-T4 (100 Mbit/s over four-pair Cat3 or better cable, defunct),

· 100BASE-T2 (100 Mbit/s over two-pair Cat3 or better cable, also defunct).

The segment length for a 100BASE-T cable is limited to 100 meters. Most networks had to be

rewired for 100-megabit speed whether or not they had supposedly been CAT3 or CAT5 cable

plants. The vast majority of common implementations or installations of 100BASE-T are done

with 100BASE-TX.

100BASE-TX is the predominant form of Fast Ethernet, and runs over two pairs of category

5 or above cable. A typical category 5 cable contains 4 pairs and can therefore support two

100BASE-TX links. Each network segment can have a maximum distance of 100 metres. In its

typical configuration, 100BASE-TX uses one pair of twisted wires in each direction, providing

100 Mbit/s of throughput in each direction (full-duplex).

The configuration of 100BASE-TX networks is very similar to 10BASE-T. When used to

build a local area network, the devices on the network are typically connected to

a hub or switch, creating a star network. Alternatively it is possible to connect two devices

directly using a crossover cable.

In 100BASE-T2, the data is transmitted over two copper pairs, 4 bits per symbol. First, a 4 bit

symbol is expanded into two 3-bit symbols through a non-trivial scrambling procedure based

on a linear feedback shift register.

100BASE-FX is a version of Fast Ethernet over optical fibre. It uses two strands of multi-mode

optical fibre for receive (RX) and transmit (TX). Maximum length is 400 metres for half-

duplex connections or 2 kilometers for full-duplex.

100BASE-SX is a version of Fast Ethernet over optical fibre. It uses two strands of multi-mode

optical fibre for receive and transmit. It is a lower cost alternative to using 100BASE-FX,

because it uses short wavelength optics which are significantly less expensive than the long

wavelength optics used in 100BASE-FX. 100BASE-SX can operate at distances up to 300

meters.

100BASE-BX is a version of Fast Ethernet over a single strand of optical fibre (unlike

100BASE-FX, which uses a pair of fibres). Single-mode fibre is used, along with a special

multiplexer which splits the signal into transmit and receive wavelengths.

Gigabit Ethernet

Gigabit Ethernet (GbE or 1 GigE) is a term describing various technologies for

transmitting Ethernet packets at a rate of a gigabit per second, as defined by the IEEE 802.3-

2005 standard. Half duplex gigabit links connected through hubs are allowed by the

specification but in the marketplace full duplex with switches is the norm.

Gigabit Ethernet was the next iteration, increasing the speed to 1000 Mbit/s. The initial

standard for gigabit Ethernet was standardized by the IEEE in June 1998 as IEEE 802.3z.

802.3z is commonly referred to as 1000BASE-X (where -X refers to either -CX, -SX, -LX, or -

ZX).

IEEE 802.3ab, ratified in 1999, defines gigabit Ethernet transmission over unshielded twisted

pair (UTP) category 5, 5e, or 6 cabling and became known as 1000BASE-T. With the

ratification of 802.3ab, gigabit Ethernet became a desktop technology as organizations could

utilize their existing copper cabling infrastructure.

Initially, gigabit Ethernet was deployed in high-capacity backbone network links (for instance,

on a high-capacity campus network). Fibre gigabit Ethernet has recently been overtaken by 10

gigabit Ethernet which was ratified by the IEEE in 2002 and provided data rates 10 times that

of gigabit Ethernet. Work on copper 10 gigabit Ethernet over twisted pair has been completed,

but as of July 2006, the only available adapters for 10 gigabit Ethernet over copper

require specialized cabling that uses InfiniBand connectors and is limited to 15 m. However,

the 10GBASE-T standard specifies the use of traditional RJ-45 connectors and a longer maximum

cable length. The different Gigabit Ethernet variants are listed in Table 3.2.

Table 3.2 Different Gigabit Ethernets

Name           Medium
1000BASE-T     unshielded twisted pair
1000BASE-SX    multi-mode fibre
1000BASE-LX    single-mode fibre
1000BASE-CX    balanced copper cabling
1000BASE-ZX    single-mode fibre

IEEE 802.3 Frame format

Preamble | SOF | Destination Address | Source Address | Length | Data | Pad | Checksum

Figure 3.7: Frame format of IEEE 802.3

· Preamble field

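In IEEE 802.3 each frame starts with a preamble of 7 bytes, each containing the bit pattern

“10101010” (the earlier DIX Ethernet format used 8 such bytes and no separate SOF field). The

preamble is encoded using Manchester encoding, so at 10 Mbps the bit pattern produces a 10 MHz

square wave for 5.6 microseconds (6.4 microseconds for the 8-byte DIX preamble), which allows

the receiver’s clock to synchronize with the sender’s clock.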

· Address field

The frame contains two addresses, one for the destination and another for the sender. The

length of address field is 6 bytes. The MSB of destination address is ‘0’ for ordinary addresses

and ‘1’ for group addresses. Group addresses allow multiple stations to listen to a single

address. When a frame is sent to a group of users, all stations in that group receive it. This type

of transmission is referred to as multicasting. The address consisting of all ‘1’ bits is reserved

for broadcasting.

· SOF: This field is 1 byte long and is used to indicate the start of the frame.

· Length:

This field is 2 bytes long. It is used to specify the length, in bytes, of the data that is

present in the frame. Thus the combination of the SOF and the length field is used to mark the

end of the frame.

· Data :

The length of this field ranges from zero to a maximum of 1500 bytes. This is the place where

the actual message bits are to be placed.

· Pad:

When a transceiver detects a collision, it truncates the current frame, which means that stray

bits and pieces of frames appear on the cable all the time. To make it easier to distinguish valid

frames from garbage, Ethernet specifies that a valid frame must be at least 64 bytes long, from

the destination address to the checksum, including both. Since the two addresses, the length and

the checksum take 18 bytes, the data field must be at least 46 bytes long. But if there is no data

to be transmitted and only an acknowledgement is to be sent, the frame is shorter than the

minimum specified for a valid frame. Hence the pad field is provided: if the data field is less

than 46 bytes, the pad field fills out the frame so that the data and pad fields together total

46 bytes. If the data field is 46 bytes or more, the pad field is not used.

· Checksum:

It is 4 bytes long. It contains a 32-bit hash code of the data. If some data bits are in error, then the

checksum will be wrong and the error will be detected. It uses the CRC method and it is used only

for error detection and not for forward error correction.
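
The pad and checksum rules above can be sketched in Python. This is only a minimal illustration under stated assumptions, not a full 802.3 implementation: the names build_frame and checksum_ok are hypothetical, the preamble and SOF are left out, the pad bytes are assumed to be zeros, and zlib.crc32 stands in for the frame checksum (it is a 32-bit CRC; the byte order used when appending it is an assumption of this sketch).

```python
import struct
import zlib

MIN_DATA_PLUS_PAD = 46   # data + pad must total at least 46 bytes
MAX_DATA = 1500          # upper limit of the data field


def build_frame(dst: bytes, src: bytes, data: bytes) -> bytes:
    """Assemble destination, source, length, data, pad and checksum."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("each address field is 6 bytes long")
    if len(data) > MAX_DATA:
        raise ValueError("the data field is limited to 1500 bytes")
    # Pad only when the data field is shorter than 46 bytes.
    pad = b"\x00" * max(0, MIN_DATA_PLUS_PAD - len(data))
    body = dst + src + struct.pack("!H", len(data)) + data + pad
    # Assumed byte order for the 4-byte checksum: little-endian.
    checksum = struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)
    return body + checksum


def checksum_ok(frame: bytes) -> bool:
    """Recompute the CRC over everything except the last 4 bytes."""
    body, received = frame[:-4], frame[-4:]
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF) == received


# A 3-byte acknowledgement is padded up to the 64-byte minimum frame.
frame = build_frame(b"\xff" * 6, b"\x02\x00\x00\x00\x00\x01", b"ack")
print(len(frame), checksum_ok(frame))
```

Flipping any single bit of the frame makes checksum_ok return False, which is error detection only: the receiver learns that the frame is damaged but cannot repair it.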

Fibre Optic Networks

Fibre optics is becoming increasingly important, not only for wide area point-to-point links,

but also for MANs and LANs. Fibre has high bandwidth, is thin and lightweight, is not

affected by electromagnetic interference from heavy machinery, power surges or lightning, and

has excellent security because it is nearly impossible to wiretap without detection.

FDDI (Fibre Distributed Data Interface)

It is a high performance fibre optic token ring LAN running at 100 Mbps over distances up to

200 km with up to 1000 stations connected. It can be used in the same way as any of the 802

LANs, but with its high bandwidth, another common use is as a backbone to connect copper

LANs.

FDDI – II is a successor of FDDI modified to handle synchronous circuit switched PCM data

for voice or ISDN traffic, in addition to ordinary data.

FDDI uses multimode fibres. It also uses LEDs rather than lasers because FDDI may

sometimes be used to connect directly to workstations.

The FDDI cabling consists of two fibre rings, one transmitting clockwise and the other

transmitting counter clockwise. If either one breaks, the other can be used as a backup.

FDDI defines two classes of stations A and B. Class A stations connect to both rings. The

cheaper class B stations only connect to one of the rings. Depending on how important fault

tolerance is, an installation can choose class A or class B stations, or some of each.

Wireless LANs

Although Ethernet is widely used, it is about to get some competition. Wireless LANs are

increasingly popular, and more and more office buildings, airports and other public places are

being outfitted with them. Wireless LANs can operate in one of two configurations: with a base

station and without a base station. Consequently, the 802.11 LAN standard takes this into

account and makes provision for both arrangements.

802.11 Protocol Stack

The protocols used by all the 802 variants, including Ethernet, have a certain commonality of

structure. A partial view of the 802.11 protocol stack is given in diagram. The physical layer

corresponds to the OSI physical layer fairly well, but the data link layer in all the 802 protocols

is split into two or more sub layers. In 802.11, the MAC sub layer determines how the channel

is allocated, that is, who gets to transmit next. Above it is the LLC sublayer, whose job it is to

hide the differences between the different 802 variants and make them indistinguishable as far

as the network layer is concerned.

The 1997 802.11 standard specifies three transmission techniques allowed in the physical layer.

The infrared method uses much the same technology as television remote controls do. The

other two use short-range radio, using techniques called FHSS and DSSS. Both of these use a

part of the spectrum that does not require licensing. Radio-controlled garage door openers also

use this piece of the spectrum, so your notebook computer may find itself in competition with

your garage door. Cordless telephones and microwave ovens also use this band.

Diagram: Part of the 802.11 protocol stack

802.11 Physical Layer

The purpose of this section is to explain the basic ideas lying at the foundation of the

technologies adopted by the IEEE 802.11 standards for wireless communications at the physical

layer. It is intended for readers who work with or administer devices complying with these

standards and who want to know their principles of operation, in the belief that such

knowledge helps to make educated decisions about the related equipment and to choose and

use the available hardware more efficiently.

Using Radio Waves For Data Transmission

Designing a wireless high speed data exchange system is not a trivial task. Neither is the

development of a standard for wireless local area networks. The major problems at the

physical layer, caused by the nature of the chosen medium, are:

bandwidth allocation;

external interference;

reflection.

802.11 First Standard For Wireless LANs

The Institute of Electrical and Electronics Engineers (IEEE) released IEEE 802.11 in June 1997.

The standard defined the physical and MAC layers of wireless local area networks (WLANs).

The physical layer of the original 802.11 standardized three wireless data exchange techniques:

Infrared (IR);

Frequency hopping spread spectrum (FHSS);

Direct sequence spread spectrum (DSSS).

The 802.11 radio WLANs operate in the 2.4 GHz (2.4 to 2.483 GHz) unlicensed Radio

Frequency (RF) band. The maximum isotropic transmission power in this band allowed by

the FCC in the US is 1 W, but 802.11 devices are usually limited to 100 mW.

The physical layer in 802.11 is split into Physical Layer Convergence Protocol (PLCP) and the

Physical Medium Dependent (PMD) sub layers. The PLCP prepares/parses data units

transmitted/received using various 802.11 media access techniques. The PMD performs the

data transmission/reception and modulation/demodulation directly accessing air under the

guidance of the PLCP. The 802.11 MAC layer is affected to a great extent by the nature of

the medium. For example, it implements fragmentation of PDUs, which is relatively complex for a

layer-2 protocol.

802.11 MAC Sub layer Protocol

It is quite different from that of Ethernet due to the inherent complexity of the wireless

environment compared to that of a wired system. With Ethernet, a station just waits until the

ether goes silent and starts transmitting. If it does not receive a noise burst back within the first

64 bytes, the frame has almost assuredly been delivered correctly. With wireless, this situation

does not hold.

To start with, there is the hidden station problem mentioned earlier and illustrated again in Fig.

(a) & (b).

Since not all stations are within radio range of each other, transmissions going on in one part of

a cell may not be received elsewhere in the same cell. In this example, station C is transmitting

to station B. If A senses the channel, it will not hear anything and falsely conclude that it may

now start transmitting to B.

In addition, there is an inverse problem, the exposed station problem, shown in diagram (b). Here B

wants to send to C, so it listens to the channel. When it hears a transmission, it falsely concludes

that it may not send to C, even though A may be transmitting to D. In addition, most radios are

half duplex, meaning that they cannot transmit and listen for noise bursts at the same time on a

single frequency. As a result of these problems, 802.11 does not use CSMA/CD, as Ethernet

does.
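
The hidden and exposed station problems can be reproduced with a small range model. The sketch below is an illustration only: the station positions (D, A, B, C on a line, one unit apart), the radio range of one unit, and the function names are all assumptions made for the example, not part of 802.11.

```python
# Hypothetical layout: four stations on a line, radio range of one unit.
POSITIONS = {"D": 0, "A": 1, "B": 2, "C": 3}
RADIO_RANGE = 1


def in_range(x, y):
    """Two stations hear each other only if they are close enough."""
    return abs(POSITIONS[x] - POSITIONS[y]) <= RADIO_RANGE


def senses_busy(listener, active_senders):
    """Carrier sense: the listener hears any active sender within range."""
    return any(in_range(listener, s) for s in active_senders if s != listener)


def received_ok(sender, receiver, active_senders):
    """Reception works if the sender reaches the receiver and no other
    active sender is also within range of the receiver."""
    if not in_range(sender, receiver):
        return False
    others = [s for s in active_senders if s not in (sender, receiver)]
    return not any(in_range(o, receiver) for o in others)


# Hidden station: C is sending to B. A hears nothing, so it would transmit,
# but its frame would collide with C's frame at B.
print(senses_busy("A", {"C"}), received_ok("A", "B", {"A", "C"}))

# Exposed station: A is sending to D. B hears A and stays silent,
# although a frame from B to C would have arrived without interference.
print(senses_busy("B", {"A"}), received_ok("B", "C", {"A", "B"}))
```

In both cases carrier sensing at the sender gives the wrong answer, because what matters is interference at the receiver, not at the sender.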

To deal with this problem, 802.11 supports two modes of operation:

Distributed Coordination Function (DCF)

Point Coordination Function (PCF)

The protocol starts when A decides it wants to send data to B. It begins by sending an RTS

frame to B to request permission to send it a frame. When B receives this request, it may

decide to grant permission, in which case it sends a CTS frame back. Upon receipt of the CTS,

A now sends its frame and starts an ACK timer. Upon correct receipt of the data frame, B

responds with an ACK frame, terminating the exchange. If A’s ACK timer expires before the

ACK gets back to it, the whole protocol is run again.

Now let us consider this exchange from the viewpoints of C and D. C is within range of A, so

it may receive the RTS frame. If it does, it realizes that someone is going to send data soon, so

for the good of all it desists from transmitting anything until the exchange is completed. From

the information provided in the RTS request, it can estimate how long the sequence will take,

including the final ACK, so it asserts a kind of virtual channel busy for itself, indicated by

NAV (Network Allocation Vector) in the diagram. D does not hear the RTS, but it does hear the

CTS, so it also asserts the NAV signal for itself. Note that the NAV signals are not transmitted;

they are just internal reminders to keep quiet for a certain period of time.
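
The way C and D work out how long to stay quiet can be sketched as simple arithmetic. This is a hedged illustration: the frame durations and the short gap between frames are hypothetical numbers chosen for the example, and the function names are not taken from the standard.

```python
def nav_after_rts(cts, data, ack, gap):
    """Quiet time for a station that overhears the RTS,
    counted from the end of the RTS."""
    return gap + cts + gap + data + gap + ack


def nav_after_cts(data, ack, gap):
    """Quiet time for a station that overhears only the CTS,
    counted from the end of the CTS."""
    return gap + data + gap + ack


# Hypothetical durations in microseconds.
RTS, CTS, DATA, ACK, GAP = 20, 14, 300, 14, 10

rts_end = RTS
cts_end = rts_end + GAP + CTS

# C hears the RTS, D hears only the CTS.
c_quiet_until = rts_end + nav_after_rts(CTS, DATA, ACK, GAP)
d_quiet_until = cts_end + nav_after_cts(DATA, ACK, GAP)
print(c_quiet_until, d_quiet_until)   # both 378
```

Although C and D start their timers at different moments, both NAVs expire at the same instant, just after the final ACK.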

To deal with the problem of noisy channels, 802.11 allows frames to be fragmented into smaller

pieces, each with its own checksum. The fragments are individually numbered and acknowledged using

a stop-and-wait protocol.
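
Fragmentation with per-fragment checksums and stop-and-wait acknowledgement can be sketched as follows. This is a simplified model, not the 802.11 procedure itself: the function names, the fragment size, the retry limit and the noisy channel are hypothetical, and zlib.crc32 is used as a stand-in checksum. The receiver is modelled inside the sender loop: a fragment counts as acknowledged when it arrives with a matching checksum.

```python
import zlib


def fragment(frame: bytes, size: int):
    """Split a frame into numbered fragments, each with its own checksum."""
    pieces = [frame[i:i + size] for i in range(0, len(frame), size)]
    return [(seq, piece, zlib.crc32(piece)) for seq, piece in enumerate(pieces)]


def send_stop_and_wait(fragments, channel, max_tries=5):
    """Send one fragment at a time and resend it until it is acknowledged."""
    received = []
    transmissions = 0
    for seq, piece, crc in fragments:
        for _ in range(max_tries):
            transmissions += 1
            arrived = channel(seq, piece)
            if zlib.crc32(arrived) == crc:
                received.append(arrived)   # intact: receiver sends an ACK
                break
        else:
            raise RuntimeError(f"fragment {seq} was never acknowledged")
    return b"".join(received), transmissions


def make_noisy_channel(corrupt_first_try_of):
    """Hypothetical channel that damages the first attempt of chosen fragments."""
    seen = set()

    def channel(seq, piece):
        if seq in corrupt_first_try_of and seq not in seen:
            seen.add(seq)
            return bytes([piece[0] ^ 0xFF]) + piece[1:]   # flip the first byte
        return piece

    return channel


frame = bytes(range(100))
data, sent = send_stop_and_wait(fragment(frame, 32), make_noisy_channel({1}))
print(data == frame, sent)
```

Only the damaged fragment is sent again, which is the point of fragmenting on a noisy channel: one bad burst costs a short retransmission instead of the whole frame.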

Broadband Wireless

Wireless broadband is high-speed Internet and data service delivered through a wireless local

area network (WLAN) or wireless wide area network (WWAN).

As with other wireless services, wireless broadband may be either fixed or mobile. A fixed

wireless service provides wireless Internet for devices in relatively permanent locations, such

as homes and offices. Fixed wireless broadband technologies include LMDS (Local Multipoint

Distribution System) and MMDS (Multichannel Multipoint Distribution Service) systems for

broadband microwave wireless transmission direct from a local antenna to homes and

businesses within a line-of-sight radius. The service is similar to that provided through digital

subscriber line (DSL) or cable modem but the method of transmission is wireless.

The 802.16 Protocol Stack

The general structure is similar to that of the other 802 networks, but with more sub layers.

The bottom sub layer deals with transmission. Traditional narrow-band radio is used with

conventional modulation schemes. Above the physical transmission layer comes a

convergence sublayer to hide the different technologies from the Data Link Layer.

The DLL consists of three sublayers. The bottom one deals with privacy and security, which is

far more crucial for public outdoor networks than for private indoor networks. It manages

encryption, decryption and key management.

Next comes the MAC sub layer common part. This is where the main protocols, such as

channel management, are located. The model is that the base station controls the system. It can

schedule the downstream channels very efficiently and plays a major role in managing the

upstream channel as well.

The service-specific convergence sublayer takes the place of the logical link sublayer in the

other 802 protocols. Its function is to interface to the network layer. A complication here is that

802.16 was designed to integrate seamlessly with both datagram protocols and ATM. The

problem is that packet protocols are connection-less and ATM is connection oriented.

The 802.16 Frame Structure

All MAC frames begin with a generic header. The header is followed by an optional payload

and an optional checksum (CRC). The payload is not needed in control frames, for example those

requesting channel slots. The checksum is also optional because of the error correction in the

physical layer and the fact that no attempt is ever made to retransmit real-time frames.

Bluetooth

In 1994, the L. M. Ericsson company became interested in connecting its mobile phones to other devices

without cables. Together with four other companies (IBM, Intel, Nokia and Toshiba), it

formed a SIG (Special Interest Group) to develop a wireless standard for interconnecting computing and

communication devices and accessories using short-range, low-power, inexpensive wireless

radios. The project was named Bluetooth, after Harald Blaatand (Bluetooth) II, a Viking king who unified

Denmark and Norway, also without cables.

In July 1999 the Bluetooth SIG issued a 1500-page specification of

V1.0. Shortly thereafter, the IEEE standards group looking at wireless personal area networks,

802.15, adopted the Bluetooth document as a basis and began hacking on it.

Bluetooth Architecture

The basic unit of a Bluetooth system is a piconet, which consists of a master node and up to

seven active slave nodes within a distance of 10 meters. Multiple piconets can exist in the

same room and can even be connected via a bridge node. An interconnected collection of piconets

is called a scatternet.

In addition to the seven active slave nodes in a piconet, there can be up to 255 parked nodes in

the net. These are devices that the master has switched to a low-power state to reduce the drain

on their batteries. In parked state, a device cannot do anything except respond to an activation

or beacon signal from the master. There are also two intermediate power states, hold and sniff,

but these will not concern us here.
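
The membership limits of a piconet can be captured in a few lines. The class below is a hypothetical model written for illustration, not part of any Bluetooth API; it only enforces the two limits stated above (seven active slaves, 255 parked nodes) and ignores the hold and sniff states.

```python
MAX_ACTIVE = 7     # active slaves per piconet
MAX_PARKED = 255   # parked nodes per piconet


class Piconet:
    def __init__(self, master):
        self.master = master
        self.active = []
        self.parked = []

    def join(self, device):
        """Add a device as an active slave if there is room."""
        if len(self.active) >= MAX_ACTIVE:
            raise RuntimeError("piconet already has seven active slaves")
        self.active.append(device)

    def park(self, device):
        """The master switches an active slave to the parked state."""
        if len(self.parked) >= MAX_PARKED:
            raise RuntimeError("piconet already has 255 parked nodes")
        self.active.remove(device)
        self.parked.append(device)

    def activate(self, device):
        """The master wakes a parked node, which needs a free active place."""
        if len(self.active) >= MAX_ACTIVE:
            raise RuntimeError("piconet already has seven active slaves")
        self.parked.remove(device)
        self.active.append(device)


net = Piconet("phone")
for i in range(7):
    net.join(f"device-{i}")
net.park("device-0")      # frees one active place
net.join("headset")       # an eighth device can now become active
print(len(net.active), len(net.parked))
```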

The reason for the master/slave design is that the designers intended to facilitate the

implementations of complete Bluetooth chips for under $5. The consequence of this decision is

that the slaves are fairly dumb, basically just doing whatever the master tells them to do. At its

heart, a piconet is a centralized TDM system, with the master controlling the clock and

determining which devices get to communicate in which time slot. All communication is

between the master and a slave; direct slave-slave communication is not possible.
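
A centralized TDM system of this kind can be sketched as a master that hands out time slots. The round-robin policy and the function names below are assumptions made for the example; the text only says that the master decides which device communicates in which slot. The second function shows the consequence of the master/slave rule: traffic between two slaves has to travel as two separate transfers through the master.

```python
from itertools import cycle


def tdm_schedule(slaves, slots):
    """The master assigns each time slot to one slave (round-robin assumed)."""
    order = cycle(slaves)
    return [(slot, next(order)) for slot in range(slots)]


def relay(master, src, dst):
    """Slave-to-slave traffic is carried as two master-slave transfers."""
    return [(src, master), (master, dst)]


print(tdm_schedule(["headset", "mouse"], 4))
print(relay("phone", "headset", "mouse"))
```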

Bluetooth Application

The Bluetooth Protocol Stack

The basic Bluetooth protocol architecture as modified by the 802 committee is shown as

above.

The bottom layer is the physical radio layer, which corresponds fairly well to the physical layer

in the OSI and 802 models. It deals with radio transmission and modulation. Many of the

concerns here have to do with the goal of making the system inexpensive so that it can become

a mass market item.

The baseband layer is somewhat analogous to the MAC sublayer but also includes elements of

the physical layer. It deals with how the master controls time slots and how these slots are

grouped into frames.

Next comes a layer with a group of somewhat related protocols. The Link manager handles the

establishment of logical channels between devices, including power management,

authentication and quality of service. The L2CAP shields the upper layer from the details of

transmission. The audio and control protocols deal with audio and control respectively.

The next layer contains a mix of different protocols. The RFcomm, telephony and

service discovery protocols are native.

The top layer is where the application and profiles are located. They make use of the protocols

in lower layers to get their work done. Each application has its own dedicated subset of the

protocols. Specific devices, such as a headset, usually contain only those protocols needed by

that application and no others.

Prepared By: Ms. Nandini Sharma (CSE Deptt.) Page 24

You might also like

- Simulation of Digital Communication Systems Using MatlabFrom EverandSimulation of Digital Communication Systems Using MatlabRating: 3.5 out of 5 stars3.5/5 (22)

- Ams200 PDFDocument4 pagesAms200 PDFJair Jesús Cazares Rojas100% (2)

- Chapter-The Medium Access Control Sublayer: The Channel Allocation ProblemDocument8 pagesChapter-The Medium Access Control Sublayer: The Channel Allocation Problemkirti bhandageNo ratings yet

- 9.d CN Unit 4Document47 pages9.d CN Unit 4SK Endless SoulNo ratings yet

- Computer Networks UNIT-4 Syllabus: The Medium Access Control Sublayer-The Channel Allocation Problem-StaticDocument47 pagesComputer Networks UNIT-4 Syllabus: The Medium Access Control Sublayer-The Channel Allocation Problem-StaticCDTP G.P. NARENDRANAGARNo ratings yet

- Medium Access ControlDocument10 pagesMedium Access ControlVijay ParuchuriNo ratings yet

- Unit 3: Local Area NetworkDocument139 pagesUnit 3: Local Area NetworkRuchika TakkerNo ratings yet

- Unit2A FinalDocument74 pagesUnit2A FinalAkhila VeerabommalaNo ratings yet

- Module 2 Part 4Document53 pagesModule 2 Part 4leemong335No ratings yet

- CN - CHPT 5Document7 pagesCN - CHPT 5Deepak ChaudharyNo ratings yet

- 21CS52 PPT 2Document26 pages21CS52 PPT 2Manju p sNo ratings yet

- CN U3Document106 pagesCN U3NAMAN JAISWALNo ratings yet

- Computer NetworksDocument15 pagesComputer NetworksJeena Mol AbrahamNo ratings yet

- MAC SublayerDocument92 pagesMAC SublayerAlready ATracerNo ratings yet

- Static and Dynamic Channel AllocationDocument6 pagesStatic and Dynamic Channel AllocationGauriNo ratings yet

- CN Lecture 12 - Data Link Layer - 15 March 2023Document23 pagesCN Lecture 12 - Data Link Layer - 15 March 2023Swapna GuptaNo ratings yet

- Multiple Access Protocols: Multiple Access Method Allows Several Terminals Connected To The SameDocument31 pagesMultiple Access Protocols: Multiple Access Method Allows Several Terminals Connected To The Samemanish saraswatNo ratings yet

- Module2 2Document27 pagesModule2 2Anurag DavesarNo ratings yet

- Dynamic Channel Allocation in Lans and MansDocument9 pagesDynamic Channel Allocation in Lans and MansJing DelfinoNo ratings yet

- Lec4 WWW Cs Sjtu Edu CNDocument134 pagesLec4 WWW Cs Sjtu Edu CNAUSTIN ALTONNo ratings yet

- Medium Access Control Sublayer (Chapter 4) - CSHub PDFDocument15 pagesMedium Access Control Sublayer (Chapter 4) - CSHub PDFSpeed PianoNo ratings yet

- Medium Access Control Sublayer (Chapter 4) - CSHub PDFDocument15 pagesMedium Access Control Sublayer (Chapter 4) - CSHub PDFSpeed PianoNo ratings yet

- Introduction To NetworkingDocument17 pagesIntroduction To NetworkingJesse JeversNo ratings yet

- Unit-3: 1. Static Channel Allocation in Lans and MansDocument30 pagesUnit-3: 1. Static Channel Allocation in Lans and Mans09 rajesh DoddaNo ratings yet

- Random Access Career Sense Protocol A Comparative Analysis of Non Persistent and 1 Persistent CSMADocument5 pagesRandom Access Career Sense Protocol A Comparative Analysis of Non Persistent and 1 Persistent CSMAEditor IJRITCCNo ratings yet

- Carrier-Sence Multiprle Access (CSMA) Protocols: Leonidas Georgiadis February 13, 2002Document18 pagesCarrier-Sence Multiprle Access (CSMA) Protocols: Leonidas Georgiadis February 13, 2002pradeep singhNo ratings yet

- A Paper On EthernetDocument4 pagesA Paper On Ethernetaayushahuja1991No ratings yet

- IPU MCA Advance Computer Network Lecture Wise Notes (Lec04 (Standard Ethernet) )Document6 pagesIPU MCA Advance Computer Network Lecture Wise Notes (Lec04 (Standard Ethernet) )Vaibhav JainNo ratings yet

- Chapter4 MediumAccessControlSublayerDocument159 pagesChapter4 MediumAccessControlSublayersunilsmcsNo ratings yet

- Carrier-Sense Multiple Access CSMA ProtocolsDocument19 pagesCarrier-Sense Multiple Access CSMA Protocols4052-SRINJAY PAL-No ratings yet

- Pure ALOHADocument6 pagesPure ALOHASatya BaratamNo ratings yet

- Aloha Protocol Aloha ProtocolDocument16 pagesAloha Protocol Aloha Protocolsantosh.parsaNo ratings yet

- Unit2 Part 2 Notes Sem5 Communication NetworkDocument17 pagesUnit2 Part 2 Notes Sem5 Communication Network054 Jaiganesh MNo ratings yet

- A Multichannel CSMA MAC Protocol For Multihop Wireless NetworksDocument5 pagesA Multichannel CSMA MAC Protocol For Multihop Wireless Networksashish88bhardwaj_314No ratings yet

- Multiple Access Protocols in Computer NetworkDocument4 pagesMultiple Access Protocols in Computer Networkansuman jenaNo ratings yet

- Power Allocation For OFDM-based Cognitive Radio Systems Under Outage ConstraintsDocument5 pagesPower Allocation For OFDM-based Cognitive Radio Systems Under Outage ConstraintsBac NguyendinhNo ratings yet

- The Medium Access Control SublayerDocument159 pagesThe Medium Access Control SublayerAlaa Dawood SalmanNo ratings yet

- Computer NetworksDocument109 pagesComputer Networksshahenaaz3No ratings yet

- Module 2Document121 pagesModule 2geles73631No ratings yet

- 3.1 MAC ProtocolDocument48 pages3.1 MAC ProtocolVENKATA SAI KRISHNA YAGANTINo ratings yet

- Data Link Layer-III Multiple Access, Random Access, and ChannelizationDocument18 pagesData Link Layer-III Multiple Access, Random Access, and Channelizationimtiyaz beighNo ratings yet

- Computer Communication and NetworkingDocument32 pagesComputer Communication and NetworkingVankesh MathraniNo ratings yet

- CR ECE Unit 4Document88 pagesCR ECE Unit 4deepas dineshNo ratings yet

- Links in The Path. The Data Link Layer, Which Is Responsible For Transferring ADocument5 pagesLinks in The Path. The Data Link Layer, Which Is Responsible For Transferring AZihnil Adha Islamy MazradNo ratings yet

- CN - Chapter 4 - Class 1, 2Document4 pagesCN - Chapter 4 - Class 1, 2himanshuajaypandeyNo ratings yet

- Lecture06 Mac CsmaDocument12 pagesLecture06 Mac CsmaDr. Pallabi SaikiaNo ratings yet

- Computer Networks Long AnswersDocument32 pagesComputer Networks Long AnswersthirumalreddyNo ratings yet

- What Is A MAC Address?Document20 pagesWhat Is A MAC Address?peeyushNo ratings yet

- Unit2 1.MAC ProtocolDocument40 pagesUnit2 1.MAC Protocolharsharewards1No ratings yet

- Unit 5 DCN Lecture Notes 55 67Document19 pagesUnit 5 DCN Lecture Notes 55 67arun kaushikNo ratings yet

- VJJVJVJDocument7 pagesVJJVJVJAnirudh KawNo ratings yet

- Cat 1 Exam Notes - CN Unit 2Document46 pagesCat 1 Exam Notes - CN Unit 2Deepan surya RajNo ratings yet

- Seminar Report On SDMADocument13 pagesSeminar Report On SDMAdarpansinghalNo ratings yet

- CN NotesDocument109 pagesCN Notesanujgargeya27No ratings yet

- The Medium Access ControlDocument100 pagesThe Medium Access ControlRahulNo ratings yet

- MAC Presentation1Document50 pagesMAC Presentation1srinusirisalaNo ratings yet

- The Medium Access Control SublayerDocument70 pagesThe Medium Access Control Sublayerayushi vermaNo ratings yet

- Module 3 2Document23 pagesModule 3 2SINGH NAVINKUMARNo ratings yet

- Ch12 Multiple AccessDocument42 pagesCh12 Multiple AccessShubham JainNo ratings yet

- RAVIKANT Paper 1 SuveyDocument8 pagesRAVIKANT Paper 1 SuveyDeepak SharmaNo ratings yet

- MAC ProtocolDocument36 pagesMAC ProtocolHrithik ReignsNo ratings yet

- Microwave Rngineering Short Answer QuestionsDocument2 pagesMicrowave Rngineering Short Answer QuestionsrahulNo ratings yet

- What Is Electronics?: SubjectsDocument11 pagesWhat Is Electronics?: SubjectsrahulNo ratings yet

- A Building Has 100 Floors. One of The Floors Is The Highest Floor An Egg Can Be Dropped From Without BreakingDocument10 pagesA Building Has 100 Floors. One of The Floors Is The Highest Floor An Egg Can Be Dropped From Without BreakingrahulNo ratings yet

- Getting The Most Out of Microsoft EdgeDocument16 pagesGetting The Most Out of Microsoft EdgerahulNo ratings yet

- Rahul Voter SlipDocument1 pageRahul Voter SliprahulNo ratings yet

- Wireless Notice Board Using Zigbee: Under The Guidance ofDocument9 pagesWireless Notice Board Using Zigbee: Under The Guidance ofrahulNo ratings yet

- BibliographyDocument1 pageBibliographyrahulNo ratings yet

- Project Progress Report - Review #01 Title: Rfid Based Smart Trolley With Automatic Billing SystemDocument2 pagesProject Progress Report - Review #01 Title: Rfid Based Smart Trolley With Automatic Billing SystemrahulNo ratings yet

- ZigbeeDocument18 pagesZigbeerahulNo ratings yet

- Zigbee TutorialDocument9 pagesZigbee TutorialrahulNo ratings yet

- Project Guidelines 2011Document10 pagesProject Guidelines 2011rahulNo ratings yet

- Zigbee Based Wireless Notice Board Batch No: C09 Guide:-Mrs - Vilasini RajaDocument2 pagesZigbee Based Wireless Notice Board Batch No: C09 Guide:-Mrs - Vilasini RajarahulNo ratings yet

- BPP of Goods Receipt MIS-MMMDocument9 pagesBPP of Goods Receipt MIS-MMMmeddebyounesNo ratings yet

- SA 516 Gr. 70Document3 pagesSA 516 Gr. 70GANESHNo ratings yet

- Class-XII - Chemistry Worksheet-1 Aldehyde, Ketone and Carboxylic AcidsDocument3 pagesClass-XII - Chemistry Worksheet-1 Aldehyde, Ketone and Carboxylic AcidsSameer DahiyaNo ratings yet

- Assignment 1 Geme 521Document6 pagesAssignment 1 Geme 521Raggar P CammNo ratings yet

- Transactional Behavior Verification in Business Process As A Service ConfigurationDocument91 pagesTransactional Behavior Verification in Business Process As A Service ConfigurationselbalNo ratings yet

- Attendance Management Software User ManualV1.3Document266 pagesAttendance Management Software User ManualV1.3Mark Christopher Guardaquivil OlegarioNo ratings yet

- 450 SXS-F 2008: Spare Parts Manual: EngineDocument28 pages450 SXS-F 2008: Spare Parts Manual: EnginecharlesNo ratings yet

- Pages From Deplazes - 2005 - Constructing - Architecture PDFDocument1 pagePages From Deplazes - 2005 - Constructing - Architecture PDFGonzalo De la ParraNo ratings yet

- Metodos de Reparacion Mindray DC-6Document24 pagesMetodos de Reparacion Mindray DC-6Khalil IssaadNo ratings yet

- Spreadsheet Risk ManagementDocument9 pagesSpreadsheet Risk ManagementesperryNo ratings yet

- Procuct Data Guide (Five Star Products)Document192 pagesProcuct Data Guide (Five Star Products)ccorp0089No ratings yet

- Operational Manual: Pistol Fort - 28 Caliber 5.7x28 MMDocument8 pagesOperational Manual: Pistol Fort - 28 Caliber 5.7x28 MMluca ardenziNo ratings yet

- F2000 - Afm Sup11 Rev04 - 20100712Document36 pagesF2000 - Afm Sup11 Rev04 - 20100712rjohnson3773No ratings yet

- Also Known As Pitched or Peaked RoofDocument2 pagesAlso Known As Pitched or Peaked RoofMarvin AmparoNo ratings yet

- EnableThinner Stronger Collation Shrink Films Help Brand Owners Deliver Bundled Products Securely enDocument2 pagesEnableThinner Stronger Collation Shrink Films Help Brand Owners Deliver Bundled Products Securely enSajib BhattacharyaNo ratings yet

- SG10 ICT Chapter6Document36 pagesSG10 ICT Chapter6Rasika JayawardanaNo ratings yet

- Control Theory TutorialDocument112 pagesControl Theory Tutorialananda melania prawesti100% (1)

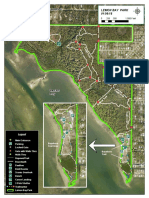

- Lemon Bay Park Florida Map 2018Document1 pageLemon Bay Park Florida Map 2018Kilty ONealNo ratings yet

- Certificado SofarDocument3 pagesCertificado SofarJeff DanceNo ratings yet

- Design and Fabrication OF Solar Light TubesDocument35 pagesDesign and Fabrication OF Solar Light TubesMelquir jakrajNo ratings yet

- "Open Sesame": By: Ysms Lecturer: Sir Aliff Farhan Bin Mohd YaminDocument85 pages"Open Sesame": By: Ysms Lecturer: Sir Aliff Farhan Bin Mohd YaminFakhrurrazi HizalNo ratings yet

- Module-01 Anthrophometrics PDFDocument2 pagesModule-01 Anthrophometrics PDFAndrew LadaoNo ratings yet

- Student Chapter Report: Diego Sebastián Sica (President UBA SPIE SC) 22Document1 pageStudent Chapter Report: Diego Sebastián Sica (President UBA SPIE SC) 22Willy MerloNo ratings yet

- Wartsila SP B Navy OPVDocument4 pagesWartsila SP B Navy OPVodvasquez100% (1)

- Troubleshooting Oven Repair GuideDocument18 pagesTroubleshooting Oven Repair GuideMonete FlorinNo ratings yet

- 2016 Kitchen ManualDocument93 pages2016 Kitchen ManualAdnan Ul HaqNo ratings yet

- Hello, From Sotja Interiors Bali - 27 NOV 2017Document174 pagesHello, From Sotja Interiors Bali - 27 NOV 2017Ibrahim Amir HasanNo ratings yet

- Brochure UNIPVDocument20 pagesBrochure UNIPVPurushoth KumarNo ratings yet