
Brown 2005 - BRJ 29 2 Summer PDF


Equity of Literacy-Based Math Performance Assessments for English Language Learners

Clara Lee Brown

The University of Tennessee, Knoxville

Abstract

This article reports findings from a study that investigated math achievement differences between English language learners (ELLs) and fully English proficient (FEP) students on a literacy-based performance assessment (LBPA). LBPAs have been assumed to be superior to standardized multiple-choice assessments, but it has not been determined whether LBPAs are appropriate for measuring the math achievement of ELLs. The most salient characteristic of LBPAs is that students read multi-level questions and explain in writing how they solve math problems. LBPAs therefore place great literacy demands upon students. Because most ELLs have underdeveloped literacy skills in English, these demands put ELLs at a great disadvantage. Analysis revealed that socioeconomic status (SES) had a significant impact on all students, but the impact was larger on FEP students than on ELLs: High-SES FEP students outperformed high-SES ELLs, but there was no significant difference between low-SES ELLs and low-SES FEP students. High SES is generally associated with greater cognitive academic language proficiency because of non-school factors such as a print-rich environment. High-SES ELLs did not do as well as high-SES FEP students because they lacked academic English; the nature of the examination masked their true abilities. The finding of no difference between low-SES ELLs and low-SES FEP students, however, may reflect the fact that neither group had the advantage of high cognitive academic language proficiency. The FEP students’ only “advantage” was superior conversational English, which is of little use for performing academic tasks. This article concludes that LBPAs, together with the current assessment-driven accountability system, seriously undermine equal treatment for ELLs.

Equity of Literacy-Based Math Assessments 337

Introduction

It has long been recognized that a substantial achievement gap exists between language-minority students and native speakers of English (August & Hakuta, 1997; Silver, Smith, & Nelson, 1995). A significant gap in math scores, in particular, has caused widespread concern among educators (Khisty, 1997; Secada, Fennema, & Adajian, 1995). Moreover, language-minority students are less likely to be represented in math-related majors in higher education, which affects their career opportunities and lifetime earnings (Bernardo, 2002; Cuevas, 1984; Torres & Zeidler, 2001). Math achievement thus appears to play a significant role in the academic and social stratification of minorities (Khisty, 1995; Secada, 1992). For this reason, English language learner (ELL) students’ math achievement, or lack thereof, should be explored in light of the new ways in which ELL students are being assessed.

Under the standards-based reform movement initiated in the late 1980s, the National Council of Teachers of Mathematics (NCTM) published Curriculum and Evaluation Standards for School Mathematics (1989), specifying what students should know and be able to do. NCTM called for a curriculum built on problem solving and higher order thinking to replace the traditional approach based on arithmetic and isolated facts. The 1989 NCTM standards also stressed the importance of mathematical literacy, especially students’ ability to communicate mathematically, that is, to read, write, and discuss mathematics.

While this curriculum movement was taking place, various states created new assessment programs that reflected the tenets of NCTM’s new math curriculum: understanding concepts rather than algorithms, critical thinking, problem solving, and communicating mathematically. As a result, states such as Connecticut, Kentucky, Maryland, Vermont, and Wisconsin created literacy-based performance assessments (LBPAs) in content areas such as math (National Council of Teachers of Mathematics, 1995). The strength of LBPAs lies in asking students to solve real-life problems by applying higher order and critical thinking skills grounded in conceptual understanding, and then to explain in writing how they solved the problems. LBPAs go beyond traditional multiple-choice standardized testing: In LBPAs, such as National Assessment of Educational Progress testing, math questions are open ended. However, the percentage of open-ended questions differs from state to state. In Maryland, all math questions are open ended and highly literacy based. They require students to read rather lengthy, multiple-part questions and to write responses describing how they solved the problems (see sample questions in Appendixes A and B).

Although they are timely and appropriate for preparing all students for the 21st century’s era of information and high technology, the new assessments have drawbacks, especially for evaluating the math achievement of ELL students. One salient characteristic of ELL students is that their academic English is below grade level, sometimes several grades below. ELL students thus face a double disadvantage: They have to learn math in a language they have not yet fully developed, and they must take a test that requires them to communicate mathematical concepts in writing in that same language. Under the new LBPAs, the achievement gap between ELL students and fully English proficient (FEP) students will likely be widened, not narrowed (Madden, Slavin, & Simons, 1995). Students from language-minority backgrounds tend to fall further behind their counterparts on performance-based assessments than on standardized assessments (Shavelson, Baxter, & Pine, 1992).

338 Bilingual Research Journal, 29: 2 Summer 2005

The need for strong math skills has never been greater. The No Child Left Behind Act (2002) requires all states to assess students’ math achievement every year from third grade through eighth grade (Olson, 2002). Under the No Child Left Behind Act, ELL students are lumped together with their peers in an accountability system that not only fails to provide a level playing field but also puts them at a severe disadvantage. Thus, the following critical issues emerge:

1. How equitable are LBPAs for ELL students? According to NCTM’s standards, the new math goes beyond algorithms and rote calculation; students are now taught to reason mathematically and to communicate their reasoning (Madden et al., 1995). This is indeed an improvement. If math is taught in a way that emphasizes mathematical thinking and problem solving, assessment must reflect this by measuring students’ ability to apply what they know to solve authentic problems. We do not know, however, how this type of assessment will affect ELL students.

2. Today’s higher curriculum standards, a result of the Education Summit of 1989 (for a history of the standards movement in the United States, see Mid-Continent Research for Education and Learning, n.d.), push for equitable assessment and aim to guard against unfairness. How do we reconcile these lofty intentions with the apparent inappropriateness of such assessment for ELL students?

To date, no empirical studies have examined the relationship between ELL students’ English proficiency and their math achievement as measured by LBPAs. This knowledge gap warrants an inquiry into fair and accurate assessment for these students. Based on an analysis of the test scores of third graders who took one of the statewide LBPAs, this article argues that math testing through LBPAs severely undermines the opportunity for ELL students to be equitably assessed. The article also proposes changes to assessment policy that would uphold the integrity of the new math curriculum while protecting assessment equity for ELL students.


Review of Related Literature

Second-Language Proficiency and Academic Achievement

Cummins (1980, 1981) has provided a much-needed framework for the field of bilingual and English as a Second Language (ESL) education. His work explains why ELL students’ academic achievement cannot be assessed in the same manner as that of their FEP counterparts: He asserts that oral fluency should not be mistaken for competence in academic settings.

Cummins theorizes that there are two distinct kinds of language proficiency, which he terms basic interpersonal communicative skills (BICS) and cognitive academic language proficiency (CALP). Basic conversational language ability is acquired rapidly: ELL students take only 1 to 2 years to become proficient in conversational English (see also Hakuta, Butler, & Witt, 1999). In contrast, reaching grade-level academic English can take far longer, as long as 5 to 7 years. Academic English is necessary for tasks that are context reduced, such as reading a textbook chapter that describes different math functions.

Second-Language Proficiency and Math Achievement

What makes math such a difficult subject for ELL students? First, ELL students must filter their math knowledge, which is a language all its own, through a second language, English. In this sense, math becomes a “third” language. Students thus face an extra challenge as they attempt to learn cognitively demanding, highly abstract mathematical concepts while they are still learning English (Chamot & O’Malley, 1994).

Second, math learning is cumulative. For example, students must know how to add and subtract before they can learn to multiply and divide, and they must learn multiplication and division before learning ratios. In addition, as students progress in math, content and textbooks become more difficult. Thus, as ELL students proceed to higher grades, they face increasingly greater challenges in keeping up or catching up with their counterparts. As a result, the achievement gap widens.

Third, math vocabulary is technical, narrowly defined, and not commonly used in daily settings (Cuevas, 1984). Krussel (1998) views language as an essential part of the math construct because language is an indispensable tool in math. It comes as no surprise that ELL students are not successful at solving word problems loaded with difficult and unfamiliar vocabulary (Abedi & Lord, 2001; Solano-Flores & Trumbull, 2003). For ELL students who are just learning English, terms such as least common denominator, ratio, or quotient have little meaning. In most cases, the concept itself is new, and, in addition, words may be used in ways that differ considerably from their uses in ordinary language (product, for instance, means the result of multiplication rather than something made or sold).

Fourth, the syntax, or language structure, used in math is highly complex and very specific. Math uses syntactic features that many students find cumbersome and that can be especially confusing for ELL students. For example, comparatives (e.g., higher than, greater than, as much as), the passive voice (e.g., X is added to Y), and reversed ways of stating known and unknown variables (e.g., X is 2 less than Y; the correct equation is X = Y − 2, not X − 2 = Y) can exacerbate confusion (Chamot & O’Malley, 1994, p. 230).
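A worked instance makes the reversal concrete. Taking Y = 10 purely as an illustrative value (it does not come from the source), the correct translation and the word-order translation of “X is 2 less than Y” yield different answers:

```latex
\begin{aligned}
\text{Correct translation: } & X = Y - 2 = 10 - 2 = 8 \\
\text{Word-order translation: } & X - 2 = Y \;\Rightarrow\; X = Y + 2 = 10 + 2 = 12
\end{aligned}
```

A student who writes the terms in the order in which they are spoken therefore arrives at an answer that is off by 4.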

Cuevas (1984) and Carey, Fennema, Carpenter, and Franks (1995) point out that, unlike the language of literary narratives, mathematical expressions have little redundancy. This makes it extremely hard for ELL students to comprehend what they read in math textbooks, which lack the built-in contextual cues found in language arts materials.

The following example illustrates how the structure of word problems can lead ELL students to misunderstand the question. A bilingual student in ninth-grade Algebra I wrote “X3 > N” as an answer to “The number of nickels in my pocket is three times more than the number of dimes” (Mestre, 1988, p. 205). Mestre attributed the incorrect response to the absence of the word equal in the word problem: The student interpreted “more than” as a statement of inequality. Abedi and Lord (2001) reported that ELL students achieved slightly higher scores on a modified math test written in simpler language with less complex sentence structure. They concluded that ELL students’ math performance was confounded by their language skills.

Fifth, ELL students’ reading skills affect their math performance. Previous studies show high correlations between math and reading scores. McGhan (1995) reported a correlation of .84 between fourth graders’ reading comprehension and math test scores for 139 school districts in Michigan. Beyond the difficulties posed by math vocabulary and style of expression, ELL students process information more slowly than their counterparts do because they read more slowly (Abedi, 2004; Bernhardt, 1991; Oller & Perkins, 1978).
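Squaring a correlation gives the proportion of variance that two measures share, which helps put McGhan’s figure in perspective. This is the standard interpretation of r², not a computation reported in the source:

```latex
r = .84 \quad\Rightarrow\quad r^2 = (.84)^2 = .7056 \approx .71
```

Roughly 71% of the variance in the math scores was therefore shared with reading comprehension scores. The correlation alone does not establish which skill drives the other.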

Sixth, according to Chamot and O’Malley (1994), mathematical procedures are culturally bound: Different cultures use different approaches to solve problems, or they use symbols differently. Midobuche (2001) shows how the same division problem is solved differently in two countries (p. 501):

Long division as solved in the United States:

     495
  3)1485
   -12
     28
    -27
      15
     -15
       0

Short division as solved in Mexico:

     495
  3)1485
     28
      15
       0
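Both procedures compute the same quotient and differ only in what is written down. The Python sketch below makes this explicit. It relies on one assumption drawn from the figure: in the short form, each product is subtracted mentally, so only the brought-down partial dividends and the final remainder are recorded. The function names are hypothetical and chosen for illustration.

```python
def division_steps(dividend: int, divisor: int):
    """Digit-by-digit division.

    Returns (quotient, remainder, steps); each step is a pair
    (partial_dividend, product_subtracted).
    """
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects a non-negative dividend and a positive divisor")
    steps = []
    quotient_digits = []
    current = 0
    for digit in str(dividend):
        current = current * 10 + int(digit)   # bring down the next digit
        q = current // divisor
        if quotient_digits or q:              # skip leading zeros of the quotient
            quotient_digits.append(str(q))
            steps.append((current, q * divisor))
        current -= q * divisor                # remainder carried to the next digit
    quotient = int("".join(quotient_digits) or "0")
    return quotient, current, steps


def us_long_layout(dividend: int, divisor: int):
    """Lines written below the dividend in U.S.-style long division."""
    quotient, remainder, steps = division_steps(dividend, divisor)
    lines = []
    for i, (partial, product) in enumerate(steps):
        if i > 0:
            lines.append(str(partial))        # remainder with the next digit brought down
        lines.append(f"-{product}")           # product written out, then subtracted
    lines.append(str(remainder))
    return quotient, lines


def short_layout(dividend: int, divisor: int):
    """Lines written in short division: products are subtracted mentally."""
    quotient, remainder, steps = division_steps(dividend, divisor)
    lines = [str(partial) for partial, _ in steps[1:]]
    lines.append(str(remainder))
    return quotient, lines


print(us_long_layout(1485, 3))
print(short_layout(1485, 3))
```

Running both functions on 1485 ÷ 3 reproduces the two columns shown above. The arithmetic is identical in both cases; only the written record differs.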


Even the ways numbers are read differ across cultures. Korean groups digits by ten thousands rather than by thousands, so 200,000 (“two hundred thousand”) is read as “twenty ten-thousands,” that is, “twenty man”; man (pronounced m-ah-n) means ten thousand in Korean.
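The difference comes down to the size of the digit groups: English names groups of 10³, whereas Korean names groups of 10⁴. A minimal Python sketch, assuming only the units man (10⁴) and eok (10⁸) are needed (larger units are omitted), rewrites a number in the Korean grouping. The function name is hypothetical, and the sketch always writes the count explicitly (for example, “1 man”), even where spoken Korean would drop it.

```python
def korean_grouping(n: int) -> str:
    """Express n in myriad (10**4) groups using the units eok and man.

    Units above eok (10**8) are not handled, so very large n simply
    yield an eok count above 9999.
    """
    if n < 0:
        raise ValueError("non-negative integers only")
    eok, rest = divmod(n, 10**8)     # hundreds of millions
    man, ones = divmod(rest, 10**4)  # ten-thousands and the remainder
    parts = []
    if eok:
        parts.append(f"{eok} eok")
    if man:
        parts.append(f"{man} man")
    if ones or not parts:
        parts.append(str(ones))
    return " ".join(parts)


print(f"{200_000:,}")              # English-style grouping by thousands
print(korean_grouping(200_000))    # Korean-style grouping by ten thousands
```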

Seventh, not only is the way math problems are solved culturally specific, but the way math questions are interpreted can also be socioculturally bound (Solano-Flores & Trumbull, 2003; Stanley & Spafford, 2002). Solano-Flores and Trumbull (2003) reported that, for the sentence “[Sam’s] mother has only $1.00 bills,” ELL students misunderstood the word “only,” interpreting the sentence to mean that Sam’s mother had only one dollar (p. 4). Solano-Flores and Trumbull argued that this misinterpretation might be related to socioeconomic status (SES): Students from low-SES backgrounds may have a more “survival-oriented” perspective and may project their concerns onto the way they interpret the problem, since for them having limited funds would not be unusual (p. 5).

Eighth, beyond cultural differences in how problem solving is approached, students cannot solve math word problems if they are unfamiliar with the cultural context of mainstream society or with cultural knowledge that is taken for granted. For instance, ELL students might not understand a word problem that refers to a Mardi Gras parade. ELL students may thus be handicapped with respect to both language and context.

Based on the foregoing discussion, one can easily understand why ELL students find math challenging. To complicate matters, many teachers wrongly believe that math is not about language, but only about symbols and numbers (Bransford, 2000). They therefore assume that ELL students can perform competitively in math (Collier, 1987; Tsang, 1988). This is a myth: Abedi (2004) reports gaps between ELL students and FEP students on several types of math tests, although the gap is smallest in computational math. In fact, many studies have demonstrated that ELL students lag far behind on word problems, and their struggle with the problem-solving aspects of math has been attributed to their less developed academic English proficiency (Abedi, 2004; Abedi, Hofstetter, & Lord, 2004; Abedi & Lord, 2001; Brenner, 1998; Khisty, 1997; Kopriva & Saez, 1997; Myers & Milne, 1988; Olivares, 1996; Solano-Flores & Trumbull, 2003).

Abedi (2004; Abedi, Leon, & Mirocha, 2003) reports that the performance difference between ELL students and FEP students was greater for tests of analytical math containing linguistically complex items than for tests of computational math. ELL students performed as well as native speakers only on some tests of math calculation. In a recent study of Filipino bilingual students whose first language was either Filipino or English, higher scores were reported when the mathematical word problems were written in the students’ native language (Bernardo, 2002). These findings indicate that second-language proficiency is strongly correlated with mathematical problem-solving skills. ELL students’ poor performance on math problem-solving tasks can therefore result from their level of English proficiency, which can mask their mathematical knowledge. Although ELL students can keep up with the low-level mechanical aspects of math, on many tests they must go beyond mere arithmetic. On LBPAs, ELL students face increasingly tougher challenges (Abedi, 2004; Romberg, 1992).

Literacy-Based Performance Assessments

LBPAs require students to use writing to demonstrate what they know and can do. LBPAs come in various forms across all content areas. For example, essay assessments in language arts are considered LBPAs because students must demonstrate their competence in particular writing genres. Portfolios, which showcase selected samples of students’ written work over a certain period, are classified as LBPAs. So are open-ended, literacy-based mathematics assessments that ask students to explain in writing how they solved problems (Kopriva & Saez, 1997). By definition, then, all math problems that ask students to justify their answers are LBPAs. This includes some of the word problems in the National Assessment of Educational Progress mathematics test (see Appendix A for an example).

The degree of difficulty and complexity in word problems differs starkly between multiple-choice tests and LBPAs. Whereas a word problem on a multiple-choice test may require a single answer, word problems in LBPAs pose a set of related questions that require multiple steps to solve. For example, students may first have to perform algebraic calculations to gather data. Second, they might have to use the data to construct a graph. Third, they may have to analyze the graph to find a trend. Fourth, they could be required to make a prediction about a real-life situation based on the trend they discovered. Fifth, they might be asked to discuss the final result in writing. As a result, word problems in LBPAs require higher level reading skills than multiple-choice tests do, in addition to writing skills. LBPAs thus demand higher literacy skills.

LBPAs offer some important advantages over multiple-choice tests. LBPAs (a) can present a better picture of students’ progress over time; (b) can show comprehensively what students know and can do; (c) require students to apply what they have learned to solve problems in authentic situations; and (d) engage students actively in the assessment process by having them set their own goals and reflect on their own work (Lachat, 1999; Moya & O’Malley, 1994).

Although LBPAs may appear superior to multiple-choice tests, their use in large-scale, statewide assessments raises several critical issues for the nation’s fastest growing student population: students whose native language is not English. As previously mentioned, the high language demands of LBPAs put ELL students at a great disadvantage as they try to express what they know in their weaker language (LaCelle-Peterson & Rivera, 1997; McKay, 2000; Short, 1993).


Fairness becomes an issue when LBPAs fail to measure ELL students’ academic achievement accurately: Do their low scores reflect a lack of content knowledge, or do they result from insufficient English skills? Further, little research has been conducted on LBPAs to show whether a performance difference exists between ELL students and their FEP peers, or to test the assumed superiority of LBPAs over multiple-choice tests for ELL students.

The Study

This study focused on the achievement gap in math between ELL students and FEP students on the Maryland School Performance Assessment Program (MSPAP), using test scores from the year 2000.¹ The MSPAP was chosen because the Maryland State Department of Education (MSDE) created a unique LBPA. The MSPAP differed from assessments used in other states in the following ways:

1. One of the MSPAP content areas (the math communication subskill) specifically measures students’ ability to communicate mathematical knowledge in writing, thus challenging students to go well beyond mere mathematical calculation;

2. This open-ended test asks students to construct written responses throughout the entire testing program;

3. The entire math portion of the MSPAP consists exclusively of higher level word problems (see sample test items in Appendix B);

4. Multi-procedure questions in math word problems require a high level of reading comprehension; and

5. Connections between reading and writing across the curriculum reflect the most salient characteristics of the LBPAs (see http://www.mdk12.org/mspp/mspap/what-is-mspap for a detailed description of the MSPAP).

The MSPAP is given in Grades 3, 5, and 8. Third graders were chosen for this study because Grade 3 has more ELL students than the later tested grades: Young ELL students tend to exit ESL programs rather quickly. (Note that ELL students who have exited ESL programs are no longer coded as ELL students; they are reclassified as part of the FEP population. It is thus highly probable that reclassified former ELL students were part of the FEP pool when the sample was drawn.)

This study posed three research questions about achievement differences in math between ELL students and FEP students of the same SES, as measured by Free and Reduced Meals (FARMs) status. SES was held constant within each comparison group to minimize its influence on the test scores, since SES is known to be the most influential determinant of student achievement (Fernández & Nielsen, 1986). The research questions were:


1. Is there a significant difference between the mean scores of third-grade ELL students and FEP students within the same FARMs status in math?

2. Is there a significant difference between the mean scores of third-grade ELL students and FEP students within the same FARMs status on the math communication subskill?

3. Which predictor variables (reading, writing, language usage, FARMs, gender, and ethnicity) account for the most variance in third-grade ELL students’ and FEP students’ math scores?

Research Questions 1 and 2 tested the null hypotheses of no achievement difference between ELL students and FEP students of the same SES, in math overall and on the math communication subskill, respectively. The third research question compared the roles of language-related predictors with that of SES for the two groups. Gender and ethnicity were included as additional predictor variables to further explain ELL students’ math achievement.

Instrumentation

MSPAP, a criterion-referenced test, assesses students’ achievement levels in six content areas: reading, writing, language usage, math, science, and social studies. It is constructed so that scores from multiple content areas can be cross-sectionally compared within a grade. The scaled scores, which range from 350 to 700, are designed to have a mean of 500 and a standard deviation of 50 (see http://www.mdk12.org/mspp/mspap/what-is-mspap for a detailed description of the MSPAP, including administration and scoring).
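Because the scale is anchored at a mean of 500 and a standard deviation of 50, any scaled score can be read as a distance from the scale mean in standard-deviation units. The Python sketch below does this for two group means from the Results (Table 2). The percentile function additionally assumes that scaled scores are approximately normally distributed. The source does not state this, so the percentiles are only a rough interpretive aid. The function names are hypothetical.

```python
from statistics import NormalDist

SCALE_MEAN = 500           # MSPAP scaled-score mean, as stated in the text
SCALE_SD = 50              # MSPAP scaled-score standard deviation
SCALE_MIN, SCALE_MAX = 350, 700


def to_z(score: float) -> float:
    """Standardize a scaled score against the stated scale mean and SD."""
    if not SCALE_MIN <= score <= SCALE_MAX:
        raise ValueError(f"scaled scores range from {SCALE_MIN} to {SCALE_MAX}")
    return (score - SCALE_MEAN) / SCALE_SD


def approx_percentile(score: float) -> float:
    """Percentile rank, ASSUMING approximately normal scaled scores."""
    return 100 * NormalDist().cdf(to_z(score))


# Group means taken from Table 2 (non-FARMs students).
for label, mean in [("ELL non-FARMs", 517.29), ("FEP non-FARMs", 562.31)]:
    print(label, round(to_z(mean), 2), round(approx_percentile(mean), 1))
```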

Sampling

Test scores of third graders from all 25 Maryland school districts were selected, excluding students who received special education services (language variables and exceptionalities related to special education have confounding effects on test scores). Random sampling of ELL students and stratified random sampling of FEP students by FARMs status were planned in order to keep the SES variable constant. However, random sampling of ELL students was not performed because of a condition the MSDE imposed on the author, owing to the small percentage (1.1%) of ELL students among MSPAP participants; instead, all eligible ELL students were retained. The resulting sample comprised four subgroups: (a) ELL students with FARMs, (b) FEP students with FARMs, (c) ELL students without FARMs, and (d) FEP students without FARMs. Information about participants’ prior educational backgrounds or formal schooling was not available in the MSDE data set.

In 2000, a total of 65,536 third-grade students took the MSPAP; 742 of

them were identified as ELL students and the rest (64,794) as FEP students.

From the 742 ELL students, 90 students coded as special education were

excluded. From the remaining 652 ELL students, 492 (n1) students were

Equity of Literacy-Based Math Assessments 345

identified as having complete test scores in math. Among the 492 ELL students,

there were 260 ELL students coded with FARMs status and 232 ELL students

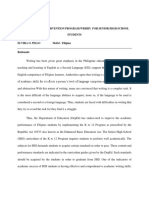

with non-FARMs status (see Figure 1 for a description of the sampling

process). The ELL student group included 2 American Indians (.4%), 168

Asian Americans (34.1%), 48 African Americans (9.8%), 56 non-Hispanic

Whites (11.4%), and 218 Hispanics (44.3%).

The same procedures were applied to the FEP group. First, 9,291 students coded as special education were excluded. Second, of the remaining 55,503 students, 53,025 were identified as having taken the math portion of the MSPAP. Third, to match the ELL group, 260 FARMs students were randomly selected from the 17,244 FEP students identified with FARMs status. Fourth, 232 non-FARMs students were randomly selected from the 35,781 FEP students identified with non-FARMs status. Table 1 contains demographics of the third graders selected for the study.
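Once the ELL counts in each FARMs stratum were fixed, the FEP sample became a matched stratified random sample: within each stratum, as many FEP students were drawn (without replacement) as there were ELL students. The Python sketch below checks that the reported counts are internally consistent and then performs such a draw. It assumes a hypothetical record format of (student_id, farms) pairs with made-up IDs and an arbitrary seed. The function name stratified_match is likewise hypothetical.

```python
import random
from collections import Counter

# Counts from the text; the assertions check that the reported totals agree.
TOTAL, ELL_TOTAL = 65_536, 742
FEP_TOTAL = TOTAL - ELL_TOTAL                  # FEP students who took the MSPAP
FEP_NON_SPECIAL_ED = FEP_TOTAL - 9_291         # after excluding special education
FEP_FARMS, FEP_NON_FARMS = 17_244, 35_781      # FEP students with complete math scores
ELL_STRATA = {True: 260, False: 232}           # FARMs status -> number of ELL students

assert FEP_TOTAL == 64_794
assert FEP_NON_SPECIAL_ED == 55_503
assert FEP_FARMS + FEP_NON_FARMS == 53_025
assert ELL_TOTAL - 90 == 652 and sum(ELL_STRATA.values()) == 492


def stratified_match(pool, strata_sizes, seed=None):
    """Draw strata_sizes[s] records, without replacement, from each stratum s.

    pool: list of (student_id, farms) tuples (a hypothetical record format).
    """
    rng = random.Random(seed)
    sample = []
    for farms, k in strata_sizes.items():
        stratum = [rec for rec in pool if rec[1] == farms]
        sample.extend(rng.sample(stratum, k))  # simple random sample within the stratum
    return sample


# Hypothetical FEP pool with the reported stratum sizes (IDs are made up).
fep_pool = [(i, True) for i in range(FEP_FARMS)] + [
    (FEP_FARMS + i, False) for i in range(FEP_NON_FARMS)
]
fep_sample = stratified_match(fep_pool, ELL_STRATA, seed=2000)
print(len(fep_sample), Counter(farms for _, farms in fep_sample))
```

Matching stratum by stratum guarantees that the two groups have identical FARMs distributions (52.8% and 47.2%, as in Table 1). This is what allows each ELL versus FEP comparison to be made within a single SES level.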

Data Analysis

Independent samples t-tests were used to answer the first two research questions, which investigated performance differences between ELL students and FEP students on the overall math examination and on the math communication subskill. For the third research question, multiple linear regression analysis was employed to determine which predictor variable accounted for the largest proportion of the variance in the criterion variable, math achievement.
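In multiple linear regression, the proportion of variance explained (R²) and the overall F test are linked by a standard formula: F = (R² / k) / ((1 − R²) / (n − k − 1)), with k predictors and n cases. The Python sketch below implements both quantities under that textbook definition. Applied to the values reported in the Results for ELL students (R² = .503, four predictors, 492 students), it gives F(4, 487) ≈ 123.22, which agrees with the reported 123.21 up to the rounding of R². The function names are hypothetical.

```python
def r_squared(observed, predicted):
    """Proportion of variance in `observed` accounted for by `predicted`."""
    mean = sum(observed) / len(observed)
    ss_total = sum((y - mean) ** 2 for y in observed)
    ss_residual = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    return 1 - ss_residual / ss_total


def overall_f(r2: float, k: int, n: int):
    """Overall F statistic and degrees of freedom for k predictors and n cases."""
    df1, df2 = k, n - k - 1
    return (r2 / df1) / ((1 - r2) / df2), df1, df2


f, df1, df2 = overall_f(0.503, k=4, n=492)
print(f"F({df1}, {df2}) = {f:.2f}")
```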

Figure 1. Overview of the sampling process.

Entire data set (65,536)
ELLs (742) → special education (90) excluded → non-special education (652) → complete scores (492) → FARMs (260), non-FARMs (232)
FEPs (64,794) → special education (9,291) excluded → non-special education (55,503) → complete scores (53,025) → FARMs (17,244), non-FARMs (35,781) → randomly selected FARMs (260), non-FARMs (232)
Merged final data set (984)

Note. “Complete” denotes complete test scores, and “non-complete” denotes incomplete test scores.


Table 1

Free and Reduced Meals (FARMs) Status, Gender, and Ethnicity of English Language Learners (ELLs) and Fully English Proficient (FEP) Students

                                    ELLs            FEPs
Variable    Category                n1      %       n2      %
FARMs       Yes                     260     52.8    260     52.8
            No                      232     47.2    232     47.2
Gender      Male                    246     50      224     46
            Female                  246     50      268     54
Ethnicity   American Indian         2       .4      2       .4
            Asian American          168     34.1    16      3.3
            African American        48      9.8     206     41.9
            Non-Hispanic White      56      11.4    251     51
            Hispanic                218     44.3    17      3.5
Grand total                         492     100     492     100

The .05 level of significance was chosen for the study. However, when the same statistical procedure was performed more than once, the alpha level was adjusted to a more conservative .01 to lower the chance of committing a Type I error, that is, concluding that a difference is significant when no true difference exists. For Research Questions 1 and 2, a multivariate analysis of variance (MANOVA) was conducted in addition to the t-tests.

Results

Achievement Differences in Math

Using a 2 × 2 factorial design, preliminary analyses of interaction effects between FARMs and ELL status were performed before investigating the main effect of ELL status within each FARMs status. The analysis revealed a significant interaction between ELL status and FARMs status, indicating that the FARMs variable had different effects for ELL students and FEP students (F [1, 980] = 52.23, p < .001). FARMs status was associated with lower scores for FEP students, relative to non-FARMs status, but it had a smaller effect on ELL scores. Consequently, the test score gap between FEP and ELL students was significantly smaller among FARMs-status students. These findings warranted further analysis of the main effects. The main effects of both ELL and FARMs status were significant (ELL status, F [1, 980] = 102.31, p < .001; FARMs status, F [1, 980] = 331.72, p < .001). In addition, the analysis of variance (ANOVA) effect sizes indicate that ELL status explained 10% (η² = .10) and FARMs status 25% (η² = .25) of the variance in math scores. The mean math scores of both groups can be found in Table 2.
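The interaction described above can be read directly from the four cell means reported in Table 2. The Python sketch below computes the descriptive interaction contrast in two equivalent ways: as the difference between the FEP minus ELL gaps at the two FARMs levels, and as the difference between the non-FARMs minus FARMs drops for the two language groups. It does not reproduce the F test, which requires the individual scores.

```python
# Cell means for math scaled scores, copied from Table 2.
means = {
    ("ELL", "FARMs"): 488.78,
    ("FEP", "FARMs"): 496.27,
    ("ELL", "non-FARMs"): 517.29,
    ("FEP", "non-FARMs"): 562.31,
}

# FEP-minus-ELL gap within each FARMs level.
gap_non_farms = means[("FEP", "non-FARMs")] - means[("ELL", "non-FARMs")]
gap_farms = means[("FEP", "FARMs")] - means[("ELL", "FARMs")]

# Non-FARMs-minus-FARMs difference within each language group.
ses_effect_fep = means[("FEP", "non-FARMs")] - means[("FEP", "FARMs")]
ses_effect_ell = means[("ELL", "non-FARMs")] - means[("ELL", "FARMs")]

# The 2 x 2 interaction contrast: the same value either way it is computed.
interaction = gap_non_farms - gap_farms
assert abs(interaction - (ses_effect_fep - ses_effect_ell)) < 1e-9

print(round(gap_non_farms, 2), round(gap_farms, 2), round(interaction, 2))
```

The gap of about 45 points among non-FARMs students shrinks to about 7.5 points among FARMs students. Put the other way, FARMs status is associated with a much larger drop for FEP students (about 66 points) than for ELL students (about 28.5 points).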

The first research question asked whether there was a significant difference in math between the mean scores of ELL students and FEP students with the same FARMs status. An independent samples t-test indicated no significant difference between ELL students and FEP students identified with FARMs status: The difference fell just short of statistical significance (p = .057), with a small effect size (d = .17). There was, however, a significant difference in math between ELL and FEP non-FARMs students, with a large effect size (t [462] = -13.70, p < .01, d = 1.27 [see Table 2]). Thus, low-SES students in both groups performed similarly, but FEP students from high-SES backgrounds outperformed ELL students from high-SES backgrounds.

Table 2

Means, Standard Deviations, and t-Test Results on Math

            ELLs                   FEPs
Group       n1    M1       SD      n2    M2       SD      t (df)         p         d
Non-FARMs   232   517.29   44.82   232   562.31   22.27   -13.70 (462)   < .001*   1.27
FARMs       260   488.78   45.81   260   496.27   43.80   -1.91 (518)    .057      .17

Note. ELLs = English language learners; FEPs = fully English proficient students; FARMs = Free and Reduced Meals; d = effect size.
*p < .001, two-tailed.
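The t and d values in Table 2 can be recomputed from the summary statistics alone. The Python sketch below assumes a pooled-variance (Student’s) independent samples t-test and Cohen’s d based on the pooled standard deviation. The reported degrees of freedom (462 and 518, i.e., n1 + n2 − 2) are consistent with that choice. With the means, SDs, and group sizes from Table 2, the sketch gives t = -13.70 and |d| = 1.27 for non-FARMs students, and |t| = 1.91 and |d| = .17 for FARMs students. The t values are negative because they are computed as ELL minus FEP and the ELL means are lower. The function name is hypothetical.

```python
from math import sqrt


def pooled_t_and_d(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t (pooled variance) and Cohen's d.

    Signs follow group 1 minus group 2 (here, ELLs minus FEPs).
    """
    df = n1 + n2 - 2
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df)
    t = (m1 - m2) / (pooled_sd * sqrt(1 / n1 + 1 / n2))
    d = (m1 - m2) / pooled_sd
    return t, df, d


# Summary statistics from Table 2: (M, SD, n) for ELLs, then for FEPs.
rows = {
    "Non-FARMs": ((517.29, 44.82, 232), (562.31, 22.27, 232)),
    "FARMs": ((488.78, 45.81, 260), (496.27, 43.80, 260)),
}
for label, ((m1, s1, n1), (m2, s2, n2)) in rows.items():
    t, df, d = pooled_t_and_d(m1, s1, n1, m2, s2, n2)
    print(f"{label}: t({df}) = {t:.2f}, d = {abs(d):.2f}")
```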


Math Communication Subskill

Exactly the same steps used for the first research question were taken for the second. Before the main effect of ELL status on the math communication subskill was investigated, preliminary analyses of interaction effects between FARMs and ELL status revealed a significant interaction, which required further analyses of main effects (F [1, 685] = 24.54, p < .001). The main effects of both ELL and FARMs status were significant (ELL status, F [1, 685] = 23.84, p < .001; FARMs status, F [1, 685] = 107.60, p < .001). The ANOVA effect sizes indicated that ELL status explained 3% (η² = .03) and FARMs status 14% (η² = .14) of the variance in the math communication subskill.

The achievement-difference patterns identified for Research Question 1 were repeated for Research Question 2, which asked whether there was a significant difference between ELL students and FEP students on the math communication subskill. An independent samples t-test indicated no significant group difference between ELL students and FEP students identified with FARMs status. Among non-FARMs students, however, there was a significant difference between ELL and FEP students on the math communication measure (t [319] = -7.66, p < .01, d = .85 [see Table 3]). The mean difference between non-FARMs ELL students and FEP students on the math communication subskill was substantial. Once again, ELL students and FEP students from low-SES backgrounds performed similarly, but FEP students from high-SES families outperformed ELL students from high-SES families. For high-SES ELL students, higher SES did not appear to offset the effect of ELL status on the math communication subskill.

Table 3

Means, Standard Deviations, and t-Test Results on the Math Communication Subskill

                English language learners    Fully English proficient
Group           n1     M1       SD           n2     M2       SD          t (df)          p        d
Non-FARMs       153    507.78   71.60        168    560.15   49.85       -7.66 (319)     .000*    .85
FARMs           185    478.92   75.95        185    478.55   76.95       -.047 (366)     .96      .004

Note. d = effect size. FARMs = free and reduced meals. The n for the math communication subskill test is smaller than that for the math test because fewer students were assessed on the subskill part.

*p < .001, two-tailed.

Predictors in Math Achievement

Before multiple linear regression was conducted, the significance of all predictor variables was determined. Two-tailed t-tests indicated that reading, writing, language usage, and FARMs were significant predictor variables, while ethnicity and gender were not, for both ELL and FEP student groups (t = 10.80, 2.56, 5.9, 4.3 for ELL students and t = 9.00, 4.12, 2.54, 10.42 for FEP students for reading, writing, language usage, and FARMs, respectively, all significant, p < .05; for gender, t = .03 for ELL students and t = .53 for FEP students; for ethnicity, t = .70 for ELL students and t = 1.68 for FEP students, not significant). Following the principle of parsimony, gender and ethnicity were therefore removed from the full regression model, because they did not contribute significantly to explaining the variance in total math scores for either ELL or FEP students.

For the restricted model for ELL students, the remaining predictor variables explained 50.3% of the total variance in math achievement (R² = .503, F [4, 487] = 123.21, p < .05); for FEP students, the remaining predictor variables explained 64% of the total variance in math achievement (R² = .64, F [4, 487] = 217.88, p < .05). The standardized regression equations for ELL students and FEP students are as follows (see Table 4):

Y′₁ (ELLs) = .41 reading + .26 language usage - .16 FARMs + .11 writing

Y′₂ (FEPs) = -.38 FARMs + .32 reading + .17 writing + .11 language usage
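The R² values for the restricted models can be checked against their overall F tests, because for a regression with k predictors R² = (F × k) / (F × k + df_error), where df_error = n - k - 1. The sketch assumes n = 492 for each group, the total of the non-FARMs and FARMs sample sizes in Table 2. The function name is hypothetical.

```python
def r_squared_from_f(f, k, df_error):
    """R^2 implied by the overall F test of a regression with k predictors."""
    return (f * k) / (f * k + df_error)


k = 4                 # reading, writing, language usage, FARMs
n = 232 + 260         # assumed group size: non-FARMs + FARMs rows of Table 2
df_error = n - k - 1  # 487, matching F [4, 487]

print(f"ELL: R^2 = {r_squared_from_f(123.21, k, df_error):.3f}")
print(f"FEP: R^2 = {r_squared_from_f(217.88, k, df_error):.3f}")
```

The ELL value reproduces R² = .503, and the FEP value (about .642) rounds to the reported .64.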

As these findings indicate, FARMs status was not only a significant but also a strong predictor of math achievement for FEP students: it was their strongest predictor (β = -.38), followed by reading skills (β = .32). For ELL students, FARMs status was a statistically significant predictor as well. It was, however, only the third strongest predictor for this group (β = -.16), ranking behind reading and language usage (see Table 4). Notably, reading was a stronger predictor for ELL students (β = .41) than it was for FEP students (β = .32). Correlations among significant variables for ELL and FEP students can be found in Table 5.

Table 4

Summary of Regression for Variables Predicting English Language Learner (ELL) Students’ and Fully English Proficient (FEP) Students’ Math Achievement in the Maryland School Performance Assessment Program

Predictor                  B         Beta     t         p
ELL students
  Reading                  .44       .41      10.91     .000*
  Writing                  .12       .11      2.54      .011*
  Language usage           .24       .26      6.0       .000*
  Free and reduced meals   -14.85    -.16     -4.76     .000*
FEP students
  Reading                  .34       .32      9.09      .000*
  Writing                  .18       .17      4.08      .000*
  Language usage           .09       .11      2.50      .013*
  Free and reduced meals   -36.90    -.38     -11.30    .000*

*p < .05.

MANOVA Results of Math and Math Communication Subskill

As previously mentioned, a MANOVA was performed as an additional test to reduce the measurement error for Research Questions 1 and 2. A preliminary analysis of the interaction between ELL status and FARMs status revealed a significant interaction on the mean scores of math and the math communication subskill (Wilks’s Λ = .95, p < .01 [see Figure 2]). Main effects were therefore analyzed further. Among FARMs students, the MANOVA indicated that the overall difference between ELL and FEP students was not statistically significant (Wilks’s Λ = .99, F [2, 365] = 1.78, p = .17 [see Table 6]); ELL status explained only 1% of the variance associated with the dependent variables (η² = .01). Among non-FARMs students, however, the MANOVA revealed a significant group difference (Wilks’s Λ = .71, F [2, 318] = 66.16, p < .01 [see Table 7]). ELL status explained almost 30% of the variance associated with the dependent variables, math and math communication skills (η² = .29). Table 8 reports descriptive statistics.


Table 5

Pearson Correlation Coefficients for Reading, Writing, Language Usage, and Free and Reduced Meals (FARMs) for English Language Learners (ELLs) and Fully English Proficient (FEP) Students

          Math          Reading       Writing       Usage         FARMs
          ELLs   FEPs   ELLs   FEPs   ELLs   FEPs   ELLs   FEPs   ELLs   FEPs
Math      1.00   1.00   .61*   .66*   .52*   .63*   .56*   .60*   -.30*  -.68*
Reading                 1.00   1.00   .50*   .56*   .46*   .56*   -.17*  -.49*
Writing                               1.00   1.00   .66*   .74*   -.21*  -.54*
Usage                                               1.00   1.00   -.20*  -.51*
FARMs                                                             1.00   1.00

Note. * indicates the correlation is significant at the .01 level, two-tailed.

[Figure 2: two panels plotting estimated marginal means of math scores (left) and of math communication skill scores (right) against FARMs status (0, 1); vertical axes range from about 480 to 560.]

Figure 2. Interaction between free and reduced meals (FARMs) and English language learner (ELL) status on math and the math communication subskill.

Note. “0” indicates non-FARMs group, and “1” indicates FARMs status. The broken lines refer to the ELL students, the solid lines refer to the FEP students.


Table 6

Summary of MANOVA of Free and Reduced Meals Students on Math and Communication Subskill

Effect                            Wilks's Λ   F        Hypothesis df   Error df   p      η²
English language learner status   .99         1.78     2.00            365.00     .17    .01

Table 7

Summary of MANOVA of Non–Free and Reduced Meals Students on Math and Communication Subskill

Effect                            Wilks's Λ   F        Hypothesis df   Error df   p      η²
English language learner status   .71         66.16    2.00            318.00     .00*   .29

*p < .01.
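For a comparison of two groups on two dependent variables, as in Tables 6 and 7, Wilks's Λ and the F ratio are related exactly by F = ((1 - Λ) / Λ) × (df_error / df_hypothesis), and the multivariate η² reduces to 1 - Λ. The sketch below inverts that relation using the F values and degrees of freedom from the tables. It is a consistency check under these textbook formulas, not a reconstruction of the software the author used, and the function name is hypothetical.

```python
def wilks_lambda_from_f(f, df_hyp, df_error):
    """Invert F = ((1 - lam) / lam) * (df_error / df_hyp), the exact
    relation for a two-group MANOVA (one between-groups degree of freedom)."""
    return df_error / (df_error + f * df_hyp)


# (label, F, hypothesis df, error df) from Tables 6 and 7
tables = [
    ("Table 6 (FARMs)", 1.78, 2, 365),
    ("Table 7 (non-FARMs)", 66.16, 2, 318),
]

for label, f, df_hyp, df_error in tables:
    lam = wilks_lambda_from_f(f, df_hyp, df_error)
    eta_sq = 1 - lam  # multivariate eta squared when s = min(p, groups - 1) = 1
    print(f"{label}: Wilks's lambda = {lam:.2f}, eta^2 = {eta_sq:.2f}")
```

This prints Λ = .99 with η² = .01 for Table 6 and Λ = .71 with η² = .29 for Table 7, matching the tabled values.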

Table 8

Group Means on Math and Communication Subskill of Non–Free and Reduced Meals Students

                 English language learners     Fully English proficient
Measure          n1     M        SD            n2     M        SD
Math             153    517.71   44.68         168    561.76   21.44
Communication    153    507.78   71.60         168    560.15   49.85

Note. The sample size from the MANOVA is different from that of the t-test described in the text. For the MANOVA, only subjects with both math and communication subskill scores were utilized.


Discussion

SES emerged as an important factor in this study. SES, of course, has a strong impact on student achievement (Fernández & Nielsen, 1986; Krashen & Brown, 2005; Lytton & Pyryt, 1998; Secada, 1992; Tate, 1997). High SES generally results in greater cognitive academic language proficiency (CALP), consisting of superior knowledge of subject matter and aspects of academic language that are similar in the first and second languages (Krashen, 1996). Children from higher-income families are exposed to more print and have a wider range of school-relevant experiences. As a result, they gain more school-relevant knowledge at home. CALP makes a powerful contribution to math achievement on LBPAs in particular, because this test format demands mastery of academic language. Both non-FARMs groups in this study, FEP and ELL students, had these advantages.

A likely explanation for the finding that high-SES ELL students did not do

as well as high-SES FEP students is that their true ability was masked by their

less developed academic-language proficiency in English. We predict that

over time, high-SES ELL students will do quite well, as they have the same

advantages as high-SES FEP students, and only need to acquire academic

English. In other words, high-SES ELL students’ competence in math cannot

be fully demonstrated due to the language barriers built into the assessment

despite the advantage of having high SES. The language of the test is too

hard for them to understand, and the demands placed on their writing

competence are excessive.

FARMs status made less of a difference for ELL students than for FEP students, and FARMs ELL students and FARMs FEP students performed similarly. Both of these groups share the disadvantages that all low-SES students do: lack of background knowledge as well as lack of academic language. The only advantage the FARMs FEP students had over the FARMs ELL students was their superior competence in conversational English, which is of little use for performing academic tasks (Cummins, 1996; Saville-Troike, 1984).

Clearly, ELL students need more time to develop grade-level academic

English before they are required to take large-scale high-stakes tests. A math

test that requires high-level reading skills to understand the questions and

requires mathematical communication through writing seems to be highly

inappropriate for assessing ELL students’ achievement in math (Kopriva &

Saez, 1997). Rather, such tests can create even greater obstacles for them; not

only are their scores lower, but such students, no matter how well prepared

they are and how well they understand the material, are often “pegged” as low

performers with the educational stigma that so often accompanies such

labeling.

Under the heightened accountability policy mandated by No Child Left Behind (2002), funding often depends on assessment scores. Because of such high-stakes assessments, districts with larger proportions of ELL students will regard these students as burdensome (Olson, 2002). Thus, LBPAs, together with assessment-driven accountability, can seriously threaten assessment equity for ELL students.

In establishing initial baseline data for “adequate yearly progress” (AYP), NCLB forces all ELL students to take tests regardless of their English proficiency. This is not a sound policy. Newly revised guidelines exempting ELL students for only 1 year are not nearly enough (Dobbs, 2004).

Even for those with high levels of CALP, the tests are inaccurate, and for

those with low levels of CALP, they are, in addition, unfair and cruel. Blindly

throwing ELL students into the accountability system without considering

their unique needs constitutes treatment that is neither equal nor equitable.

The results also clearly suggest that ELL students should not be treated

as a homogeneous group. Those with high-SES backgrounds have, most

likely, an excellent chance of success in school after they acquire sufficient

academic language, but it is likely that those who are from low-SES backgrounds

will face serious problems. Treating ELL students as a uniform group will not

accurately portray their true performance and will result in widening gaps in

academic achievement (Stevens, Butler, & Castellon-Wellington, 2000).

Educational Implications

Although this study is not comprehensive, its results illustrate a critical

aspect of how test formats could affect ELL students’ math achievement. The

American Educational Research Association (2000), on its Web site, expressed

its position regarding high-stakes testing by asserting that “appropriate

attention [should be given] to language difference among examinees” because

when the test scores of the ELL students are adversely affected by their

linguistic proficiencies, those scores cannot be considered an accurate

measurement of true ability.

Unfortunately, an assessment program created with good intentions can

jeopardize assessment equity for ELL students. Thus, policymakers must create

mechanisms that allow ELL students to be tested alternatively. One available

alternative, portfolio assessment, can show yearly progress and would free schools and teachers to redirect their energy from “teaching to the test” toward helping students expand their knowledge.

In addition, implementing an assessment alternative such as portfolio

assessments would be the most meaningful way to include ELL students in

the accountability system. Portfolio assessments would help establish

accountability by allowing all ELL students to take part in the assessment

process, beginning from their first day of school. Then, achieving “adequate

yearly progress” would not be merely a federal mandate but a tangible and

meaningful goal for all stakeholders.


Furthermore, while we are waiting for alternative measures for ELL

students, the results of this study call for exemption provisions for high-

stakes standardized tests to be extended from the current 1 year to at least 3

years, allowing ELL students time to improve their competence in academic

English. (For data on the amount of time necessary to develop sufficient

academic English to do class work in the mainstream and to be able to take

high-stakes tests, see Krashen, 2001.)

This is not a plan, however, to keep ELL students out of the accountability

loop. As noted above, accountability for the first 3 years of the ELL students’

school careers can be measured, hopefully through portfolio assessment,

which can give us a picture of both their subject matter and language

development.

Others (Abedi, 2004; Abedi et al., 2004; Abedi et al., 2003; Abedi & Lord,

2001) propose a different solution: modification of tests to make them more

comprehensible for ELL students, that is, simplifying the language of the

tests. These efforts, however, have produced only modest improvements in comprehensibility (Abedi et al., 2004).

Apple (1995) succinctly states that educational policy needs to recognize

“the winners and losers” of educational practices (p. 331). Because LBPAs have been used in large-scale statewide assessments for a relatively short time, it is necessary to investigate who the winners and who the losers are. Regardless, meaningful and equitable assessment of ELL students in systemwide testing is critical. Without assessment that allows ELL students to be tested equitably, these students will be perpetual losers in a system in which they do not receive a fair chance.

References

Abedi, J. (2004). The No Child Left Behind Act and English language

learners: Assessment and accountability issues. Educational Researcher,

33(1), 4–14.

Abedi, J., Hofstetter, C., & Lord, C. (2004). Assessment accommodations

for English language learners: Implications for policy-based empirical

research. Review of Educational Research, 74(1), 1–28.

Abedi, J., Leon, S., & Mirocha, J. (2003). Impact of student language background on content-based performance: Analyses of extant data (CSE

Tech. Rep. No. 603). Los Angeles: University of California, National

Center for Research on Evaluation, Standards, and Student Testing.

Abedi, J., & Lord, C. (2001). The language factor in mathematics tests.

Applied Measurement in Education, 14(3), 219–234.


American Educational Research Association. (2000). AERA position

statements. Retrieved May 31, 2005, from http://www.aera.net/policyandprograms/?id=378

Apple, M. W. (1995). Taking power seriously: New directions in equity in

mathematics education and beyond. In W. G. Secada, E. Fennema, & L. B.

Adajian (Eds.), New directions for equity in mathematics education

(pp. 329–348). New York: Cambridge University Press.

August, D., & Hakuta, K. (Eds.). (1997). Improving schooling for

language-minority children: A research agenda. Washington, DC:

National Academy Press.

Bernardo, A. B. (2002). Language and mathematical problem solving among

bilinguals. The Journal of Psychology, 136(3), 283–297.

Bernhardt, E. B. (1991). Reading development in a second language:

Theoretical, empirical, & classroom perspectives. Norwood, NJ: Ablex.

Bransford, J. D. (Ed.). (2000). How people learn: Brain, mind, experience,

and school. Washington, DC: National Academy Press.

Brenner, M. E. (1998). Development of mathematical communication in

problem solving groups by language minority students. Bilingual

Research Journal, 22(2–4), 149–163.

Carey, D. A., Fennema, E., Carpenter, T. P., & Franks, M. L. (1995). Equity

and mathematics education. In W. G. Secada, E. Fennema, & L. B.

Adajian (Eds.), New directions for equity in mathematics education

(pp. 93–125). New York: Cambridge University Press.

Chamot, A. U., & O’Malley, J. M. (1994). The CALLA handbook: Implementing the cognitive academic language learning approach. New

York: Longman.

Collier, V. (1987). Age and rate of acquisition of second language for

academic purposes. TESOL Quarterly, 21(4), 617–641.

Cuevas, G. J. (1984). Mathematics learning in English as a second language.

Journal for Research in Mathematics Education, 15(2), 134–144.

Cummins, J. (1980). Psychological assessment of immigrant children: Logic

or intuition? Journal of Multilingual and Multicultural Development,

1(2), 97–111.

Cummins, J. (1981). Four misconceptions about language proficiency in

bilingual education. NABE Journal, 5(3), 31–45.

Cummins, J. (1996). Negotiating identities: Education for empowerment in

a diverse society. Ontario, CA: California Association for Bilingual Education.

Dobbs, M. (2004, March 30). More changes made to “No Child” rules.

Washington Post, p. 1.


Fernández, R. M., & Nielsen, F. (1986). Bilingualism and Hispanic

scholastic achievement: Some baseline results. Social Science Research,

15(1), 43–70.

Hakuta, K., Butler, Y. G., & Witt, D. (1999). How long does it take English

learners to attain proficiency? (Policy Report No. 2000-1). The University

of California Linguistic Minority Research Institute.

Khisty, L. L. (1995). Making inequality: Issues of language and meanings in

mathematics teaching with Hispanic students. In W. G. Secada, E. Fennema,

& L. B. Adajian (Eds.), New directions for equity in mathematics education (pp. 279–297). New York: Cambridge University Press.

Khisty, L. L. (1997). Making mathematics accessible to Latino students:

Rethinking instructional practice. In J. Trentacosta & M. J. Kenney (Eds.),

1996 yearbook multicultural and gender equity in mathematics

classroom: The gift of diversity (pp. 92–101). Reston, VA: National Council

of Teachers of Mathematics.

Kopriva, R., & Saez, S. (1997). Guide to scoring LEP student responses to

open-ended mathematics items. Washington, DC: The Council of Chief

State School Officers.

Krashen, S. (1996). Under attack: The case against bilingual education.

Century City, CA: Language Education Associates.

Krashen, S. (2001). How many children remain in bilingual education “too

long”? Some recent data. NABE News, 24, 15–17.

Krashen, S., & Brown, C. L. (2005). The ameliorating effects of high socioeconomic status: A secondary analysis. Bilingual Research Journal,

29(1), 185–196.

Krussel, L. (1998). Teaching the language of mathematics. The Mathematics

Teacher, 91(5), 436–441.

LaCelle-Peterson, M., & Rivera, C. (1997). Is it real for all kids? A framework for equitable assessment policies for English language learners.

Harvard Educational Review, 64(1), 55–75.

Lachat, M. A. (1999). Standards, equity and cultural diversity (No.

RJ 96006401). Providence, RI: Northeast and Islands Regional Educational

Laboratory at Brown University.

Lytton, H., & Pyryt, M. (1998). Predictors of achievement in basic skills:

A Canadian effective schools study. Canadian Journal of Education,

23(3), 281–301.

Madden, N., Slavin, R., & Simons, K. (1995). Mathwings: Effects on student

mathematics performance (Research No. R-117-D40005). Baltimore:

Center for Research on the Education of Students Placed At Risk.


McGhan, B. (1995). MEAP: Mathematics and the reading connection.

Retrieved March 23, 2005, from http://comnet.org/cspt/essays/mathread.htm

McKay, P. (2000). On ESL standards for school-age learners. Language

Testing, 17(2), 185–214.

Mestre, J. P. (1988). The role of language comprehension in mathematics

and problem solving. In R. R. Cocking & J. P. Mestre (Eds.), Linguistic

and cultural influences on learning mathematics (pp. 201–240). Hillsdale,

NJ: Lawrence Erlbaum Associates.

Mid-Continent Research for Education and Learning. (n.d.). Purpose of this work. Retrieved May 31, 2005, from http://www.mcrel.org/standards-benchmarks/docs/purpose.asp

Midobuche, E. (2001). Building cultural bridges between home and the

mathematics classroom. Teaching Children Mathematics, 7(9), 500–502.

Moya, S. S., & O’Malley, J. M. (1994). A portfolio assessment model for

ESL. The Journal of Educational Issues of Language Minority Students,

13, 13–36.

Myers, D. E., & Milne, A. M. (1988). Effects of home language and primary

language on mathematics achievement. In R. R. Cocking & J. P. Mestre

(Eds.), Linguistic and cultural influences on learning mathematics

(pp. 259–293). Hillsdale, NJ: Lawrence Erlbaum Associates.

National Council of Teachers of Mathematics. (1995). Assessment standards

for school mathematics. Reston, VA: Author.

No Child Left Behind Act, Pub. L. No. 107-110 (2002).

Olivares, R. A. (1996). Communication in mathematics for students with

limited English proficiency. In P. C. Elliot & M. J. Kenney (Eds.), 1996

yearbook communication in mathematics K-12 and beyond (pp. 219–

230). Reston, VA: National Council of Teachers of Mathematics.

Oller, J. W., & Perkins, K. (1978). Language in education: Testing the tests.

Rowley, MA: Newbury House.

Olson, L. (2002). States scramble to rewrite language-proficiency exams.

Retrieved December 4, 2002, from http://www.edweek.org/ew/ew_printstory.cfm?slug=14lep.h22

Romberg, T. A. (1992). Further thoughts on the standards: A reaction to

Apple. Journal for Research in Mathematics Education, 23(5), 432–437.

Saville-Troike, M. (1984). What really matters in second language learning

for academic achievement? TESOL Quarterly, 18(2), 199–219.


Secada, W. G. (1992). Race, ethnicity, social class, language, and achievement

in mathematics. In D. A. Grouws (Ed.), Handbook of research on

mathematics teaching and learning: A project of the National Council

of Teachers of Mathematics (pp. 623–660). New York: Macmillan.

Secada, W. G., Fennema, E., & Adajian, L. B. (Eds.). (1995). New directions

for equity in mathematics education. New York: Cambridge University

Press.

Shavelson, R., Baxter, G., & Pine, J. (1992). Performance assessments:

Political rhetoric and measurement reality. Educational Researcher,

21(4), 22–27.

Short, D. J. (1993). Assessing integrated language and content instruction.

TESOL Quarterly, 27(4), 627–656.

Silver, E. A., Smith, M. S., & Nelson, B. S. (1995). The QUASAR project:

Equity concerns meet mathematics education reform in the middle school.

In W. G. Secada, E. Fennema, & L. B. Adajian (Eds.), New directions for

equity in mathematics education (pp. 9–56). New York: Cambridge

University Press.

Solano-Flores, G., & Trumbull, E. (2003). Examining language in context:

The need for new research and practice paradigms in the testing of

English-language learners. Educational Researcher, 32(2), 3–13.

Stanley, C., & Spafford, C. (2002). Cultural perspectives in mathematics

planning efforts. Multicultural Education, 10(1), 40–42.

Stevens, R. A., Butler, F. A., & Castellon-Wellington, M. (2000). Academic

language and content assessment: Measuring the progress of English

language learners. Los Angeles: CRESST/University of California.

Tate, W. F. (1997). Race-ethnicity, SES, gender, and language proficiency

trends in mathematics achievement: An update. Journal for Research in

Mathematics Education, 28(6), 652–679.

Torres, H. N., & Zeidler, D. L. (2001). The effects of English language

proficiency and scientific reasoning skills on the acquisition of science

content knowledge by Hispanic English language learners and native

English language speaking students. Retrieved April 8, 2003, from http://unr.edu/homepage/crowther/ejse/ejsev6n2.html#top

Tsang, S.-L. (1988). The mathematics achievement characteristics of Asian-

American students. In R. R. Cocking & J. P. Mestre (Eds.), Linguistic

and cultural influences on learning mathematics (pp. 123–136). Hillsdale,

NJ: Lawrence Erlbaum Associates.


Acknowledgments

I would like to thank the three anonymous Bilingual Research Journal

reviewers, Stephen Krashen, Pamela Guandique, and Amos Hatch for their

valuable comments on an earlier version of this paper.

Endnote

1

MSDE no longer uses the MSPAP to test students. The MSPAP did not

comply with the NCLB Act (2002) because it did not provide individual student

report cards. The MSDE developed a Maryland School Assessment that

consists of multiple-choice and constructed response items. The new test in

math, however, retains questions that require students to respond in writing,

in addition to multiple-choice items.


Appendix A

2003 National Assessment of Educational Progress Grade 4

Math Item: Apply a Linear Relationship and Justify Answer

20. The table below shows how the chirping of a cricket is related to the

temperature outside. For example, a cricket chirps 144 times each minute

when the temperature is 76°.

Number of Chirps Per Minute    Temperature
144                            76°
152                            78°
160                            80°
168                            82°
176                            84°

What would be the number of chirps per minute when the temperature

outside is 90° if this pattern stays the same?

Answer: ________________________

Explain how you figured out your answer.

________________________________________________

________________________________________________

________________________________________________

________________________________________________

________________________________________________

Did you use the calculator on this question?

yes no

Note. Retrieved from http://nces.ed.gov/nationsreportcard/ITMRLS/qtab.asp


Appendix B

Maryland School Performance Assessment Program Sample

Math Proportion Item Released to the Public

Step A

The zoo planner wants to have a small information center. They want to

cover the floors with tiles. Design a repeating pattern that could be used on

the floor in the information center. Show your work on the grid below.

Step B

Write a sentence or two explaining the pattern you chose.

Information Center

GO ON

________________________________________________

________________________________________________

________________________________________________

________________________________________________

________________________________________________

Note. This item is reconstructed based on the information available on the Maryland

State Department of Education Web site: http://www.mdk12.org/share/publicrelease/

plan_task.pdf



- Elvira O. Pillo Practical Research - Maed - FilipinoDocument20 pagesElvira O. Pillo Practical Research - Maed - FilipinoAR IvleNo ratings yet

- Article 11Document7 pagesArticle 11api-278240286No ratings yet

- SolorzanoDocument2 pagesSolorzanoapi-265063585No ratings yet

- Lit Review Final DraftDocument8 pagesLit Review Final Draftmelijh1913No ratings yet

- Differentiated Writing Interventions For High-Achieving Urban African American Elementary StudentsDocument35 pagesDifferentiated Writing Interventions For High-Achieving Urban African American Elementary Studentsjose martinNo ratings yet

- Grammatical Competence of First Year EngDocument54 pagesGrammatical Competence of First Year Engciedelle arandaNo ratings yet

- Understanding EFL Learners' Errors in Language Knowledge in Ongoing AssessmentDocument8 pagesUnderstanding EFL Learners' Errors in Language Knowledge in Ongoing AssessmentvaldezjwNo ratings yet

- 7.chp - 5 JSJSJHDJHDJDDocument12 pages7.chp - 5 JSJSJHDJHDJDAliya ShafiraNo ratings yet

- CeldtDocument7 pagesCeldtapi-377713383No ratings yet

- Dialect, Idiolect and Register Connection With StylisticsDocument10 pagesDialect, Idiolect and Register Connection With StylisticsEmoo jazzNo ratings yet

- Model Strategies in Bilingual EducationDocument47 pagesModel Strategies in Bilingual EducationAdrianaNo ratings yet

- Bailey Wolf - The Challenge of Assessing Language ProficiencyDocument8 pagesBailey Wolf - The Challenge of Assessing Language ProficiencyDana CastleNo ratings yet

- Why Aren ElsDocument16 pagesWhy Aren ElszaimankbNo ratings yet

- Final Draft Action ResearchDocument27 pagesFinal Draft Action Researchapi-305488606No ratings yet

- Pablo Gonzalez Abraldes Garcia - Senior Project DraftDocument9 pagesPablo Gonzalez Abraldes Garcia - Senior Project Draftapi-670174976No ratings yet

- Success of English Language Learners Barriers and StrategiesDocument49 pagesSuccess of English Language Learners Barriers and StrategiesRosejen MangubatNo ratings yet

- Assessing English-Language LearnersDocument12 pagesAssessing English-Language LearnersAshraf Mousa100% (2)

- 1626 3459 1 PBDocument12 pages1626 3459 1 PBnatalia dwiyantiNo ratings yet

- Improving Adequate Yearly Progress For English Language LearnersDocument9 pagesImproving Adequate Yearly Progress For English Language Learnersamanysabry350No ratings yet

- WebDocument32 pagesWebSARANYANo ratings yet

- TUGAS BIOSTATISTIK (Winda)Document3 pagesTUGAS BIOSTATISTIK (Winda)FiyollaNo ratings yet

- Problem of Points2Document5 pagesProblem of Points2Keith BoltonNo ratings yet

- MATHS Complex NumberDocument16 pagesMATHS Complex NumberDaksh AgarwalNo ratings yet

- Lecture 5Document31 pagesLecture 5Ela Man ĤămměŕşNo ratings yet

- PQM 2010Document2 pagesPQM 2010Harshita MehtonNo ratings yet

- LeaP Math G5 Week 2 Q3Document4 pagesLeaP Math G5 Week 2 Q3REYMARK DE TOBIONo ratings yet

- A Review of Optimization Approach To Power Flow Tracing in A Deregulated Power SystemDocument14 pagesA Review of Optimization Approach To Power Flow Tracing in A Deregulated Power SystemAZOJETE UNIMAIDNo ratings yet

- Real Analysis: Dr. Samir Kumar BhowmikDocument25 pagesReal Analysis: Dr. Samir Kumar BhowmikRoksana IslamNo ratings yet

- Applied Mathematics QuestionDocument3 pagesApplied Mathematics QuestionSourav KunduNo ratings yet

- KMS Skema Jawapan Pre PSPMDocument5 pagesKMS Skema Jawapan Pre PSPMnaderaqistina23No ratings yet

- Bitmanip-1 0 0Document61 pagesBitmanip-1 0 0best124612No ratings yet

- Fundamentals of Probability. 6.436/15.085: Birth-Death ProcessesDocument6 pagesFundamentals of Probability. 6.436/15.085: Birth-Death Processesavril lavingneNo ratings yet

- MCQ Test On Unit 4.1 - Attempt ReviewDocument3 pagesMCQ Test On Unit 4.1 - Attempt ReviewDemo Account 1No ratings yet

- Quiz 1Document3 pagesQuiz 1SadiNo ratings yet

- MA1301 Chapter 2Document104 pagesMA1301 Chapter 2Pulipati Shailesh AvinashNo ratings yet

- Assignment #1 Math 21-1Document1 pageAssignment #1 Math 21-1Aihnee OngNo ratings yet

- CSD 205 - Design and Analysis of AlgorithmsDocument44 pagesCSD 205 - Design and Analysis of Algorithmsinstance oneNo ratings yet

- Linear Programming - Graphical MethodDocument8 pagesLinear Programming - Graphical MethodjeromefamadicoNo ratings yet

- Critical Book Report Differential EquationDocument3 pagesCritical Book Report Differential EquationAte Malem Sari GintingNo ratings yet

- Effective Factors Increasing The Students Interest in Mathematics in The Opinion of Mathematic Teachers of ZahedanDocument9 pagesEffective Factors Increasing The Students Interest in Mathematics in The Opinion of Mathematic Teachers of ZahedanVimala Dewi KanesanNo ratings yet

- CH 05Document81 pagesCH 05Whats UPNo ratings yet

- Fundamental Classes 6Document11 pagesFundamental Classes 6rahul rastogiNo ratings yet

- Numpy-Long Answer QuestionsDocument3 pagesNumpy-Long Answer QuestionsPonnalagu R NNo ratings yet

- Module 2 Part 2Document8 pagesModule 2 Part 2student07No ratings yet