INOI 2018 Competitive Programming Guide

658 views, 34 pages

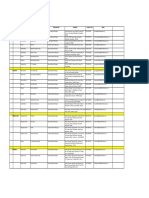

This guide to competitive programming is aimed at students preparing for the Indian National Olympiad in Informatics (INOI). It opens by stating its purpose and scope, then surveys the algorithms and data structures in the INOI syllabus, including sorting, searching, graphs, and dynamic programming. It favors hands-on problem solving over extensive theory, directs readers to online judge systems for practice, and includes code examples for linear and binary search.

Uploaded by Himanshu Ranjan

Copyright © All Rights Reserved
