Professional Documents

Culture Documents

FPGA Prototyping in Verification Flows Application Note

Uploaded by

skarthikpriyaOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

FPGA Prototyping in Verification Flows Application Note

Uploaded by

skarthikpriyaCopyright:

Available Formats

FPGA Prototyping in Verification Flows

Application Note

Introduction

Hardware-assisted verification environments often make use of FPGAs to prototype the

ASIC in order to provide a faster alternative to simulation and allow software development to

proceed in parallel with hardware design. This application note addresses the factors that

should be taken into account in such a flow in order to maximize the effectiveness of the

overall verification environment.

FPGA Prototyping

Hardware-assisted SoC verification flows include acceleration, emulation or prototyping as

part of the overall verification strategy in order to enable much higher effective simulation

speeds allowing for software and system verification prior to device tape-out. Applications

where a representative subset of the device functionality can be ported to an FPGA which is

connected to real-world interfaces or laboratory test equipment providing stimulus and

response benefit from the following main advantages:

• Faster “simulation” speed: much closer to real-time operation enabling much more

data to be processed and if controlled correctly perhaps more corner cases to be

stimulated.

• Realistic system environment: where possible the device can be proven to interface

to other known system components and external interfaces.

• Software development platform: the improved performance allows concurrent

development of the software with the evolving hardware.

It is important to stress that FPGA prototyping does not replace simulation-based

verification, but rather it is a technique to improve the overall effectiveness of the

verification. The main reasons for this are that the FPGA is not the final product and that the

FPGA prototyping environment lacks many essential features provided by simulation-based

verification, in particular:

• Different physical implementation: The FPGA has a different netlist, layout, clock-

tree, reset distribution, memory structures, I/O characteristics, power management,

performance, clock frequency, clock domains, etc.

• Unimplemented or modified modules: where possible the RTL differences should

be minimised to improve maintenance but typically custom cells are missing,

analogue modules are not available or implemented in a separate prototype chip with

performance considerations, often the full design will not fit in a single FPGA and

needs partitioned across several FPGAs, etc.

Copyright © 2006 Verilab 1 CONFIDENTIAL

• FPGA debug environment is poor: in particular the controllability and observability

of events inside the FPGA is inadequate even with the most advanced probing

techniques. If the prototype environment happens to generate interesting corner cases

which cause observable failures these are very time consuming to debug. If the

prototype environment does not cause failures, it is difficult to know if it really

generated appropriate interesting corner cases or realistic stress conditions. In other

words functional coverage is difficult to implement and measure in an FPGA

prototyping environment.

• Defect tracing to FPGA, hardware or software: since the FPGA prototyping

environment is being used to debug a printed circuit board (with sockets, connectors,

crosstalk, etc.), evolving software and a representative part of the device at the same

time, it can be difficult and time consuming to trace the cause of incorrect behaviour.

• FPGA turnaround time: for a complex SoC being targeted to an FPGA

implementation, the back-end processes of synthesis, placement, static timing

analysis, and bit-stream generation is likely to consume a considerable amount of

time (of the order 4 to 8 hours is common for some highly utilized high-speed

implementations). This means repetitive rebuilds to solve minor issues or connect up

a different set of debug signals is prohibitive.

• Process, voltage and temperature: any operations on the FPGA prototype represent

a limited subset of the overall device environmental operation conditions (and a

different physical implementation) – a single point on the PVT curve. It may be

possible to perform limited stressing of the voltage and temperature but it will never

be possible to emulate the process tolerances for the final chip. Most design teams

care about reliable operation over the entire specified environmental and functional

operational conditions.

Simulation Environment

Most of the essential features for an effective verification environment that are not met by the

FPGA prototyping solution are the corresponding strengths of simulation-based verification

flows. Creating an appropriate symbiosis between the two and understanding the relationship

is key to maximizing the effectiveness of the overall solution. The particular strengths of

simulation in this context are:

• Debug environment: digital simulators and supporting tools are the perfect debug

environment. All the signals in the design are readily accessible. Once a defect has

been reproduced in this environment the debugging can be performed extremely

efficiently. For this reason it is recommended the that simulation environment be

designed with the capability of executing the same tests performed on the FPGA in

order to reproduce failing scenarios and enable effective – this has implications for

both the testbench design and the test code structuring.

• Controllability and observability: simulation provides excellent control and

observability of signals. High-level verification languages use constrained-random

stimulus generation to target corner case operation and stress conditions. Assertion-

based verification can be used to check intended operation at many levels of the

device including internal interfaces, clock-domain crossings, state machines and

Copyright © 2006 Verilab 2 CONFIDENTIAL

external protocols. Temporal expressions, protocol monitors and assertions also

provide self-checking functionality throughout all simulation runs.

• Functional coverage: functional coverage using high-level verification languages

and, to a lesser extent, assertion-based verification, provides detailed feedback on

which features of the design have been appropriately stimulated and checked. Code

coverage can also be measured effectively in the simulation environment to provide

another metric to assess verification completeness.

• Turnaround time: validating a fix to the RTL in the simulation environment

typically takes a very short time: with make based compile flow and deterministic

constrained-random testbench generation techniques the new RTL can be subjected to

the failing scenario in a matter of minutes. Gate-level defects that are undetectable in

other stages of the flow take considerably longer to turn around.

• Gate-level simulations: where necessary, back annotated gate-level simulations can

be used to validate static timing analysis, detect reset issues, check for incorrectly

synthesized code (together with equivalence checking) and, most importantly, check

for dynamic timing defects like clock glitches. These simulations can be performed

using best and worst case process/voltage/temperature conditions.

Verification Plan

The key to managing the workload distribution between the simulation and FPGA based

components of the verification environment is the verification plan. In particular a properly

maintained verification plan enables the team to:

• Target specific features for system test on FPGA: the effectiveness of the overall

solution is improved by identifying which features are slow to simulate, or hard to

emulate, in the simulation environment and targeting these for validation in the

FPGA. Likewise the majority of the features which do not fall into this category can

be targeted for simulation – ensuring fewer defects propagate to the FPGA which will

help contain the difficult debugging situation. There will of course be a large overlap

of features stimulated in both cases, but the main point here is to focus on where

things are meant to be verified and proven.

• Identify functional coverage: the verification plan should identify the functional

coverage goals for the simulation environment and where the corresponding gaps

should be. Some inferred coverage should be possible for low risk items, however key

features identified for validation by FPGA alone should have appropriate coverage

mechanisms built-in to the FPGA implementation.

• Analyze risk and make appropriate decisions: verification is seldom completed in

time for tape-out. It is important to assess the priority and risk associated with every

feature to enable informed decisions and avoid “killer” bugs.

Copyright © 2006 Verilab 3 CONFIDENTIAL

Regressions

All aspects of the verification environment should have the capability to perform regressions,

in other words all tests must be repeatable to ensure that when defects are introduced during

development they will be detected. A requirement for effective regression is that the

verification environment must be self checking; since the FPGA prototype forms part of the

verification environment solution it follows that tests ran on the FPGA must also be self

checking. A one-off lab test executed on the FPGA to see if something “works” is about as

useful as a simulation that requires the user to look at the waveform output to determine

success or failure.

Improving Effectiveness

The following items are suggestions of things to consider for improving the overall

effectiveness of a verification environment that includes both FPGA prototype and

simulation components:

• Design for verification: in many applications it is possible to implement functional

operational modes which enable many other features of the design to be simulated in

a much faster way. For example in telecoms applications where most of the

functionality is related to header (overhead) processing rather than data (payload)

content, it is possible to define much shorter frame modes which keep all the headers

and associated operations like justification, parity and CRC calculations intact. In

such cases the simulations are performed primarily on the verification modes and the

FPGA used for normal operation.

• Synthesizable assertions: functional checks can be synthesized into the FPGA to

detect critical protocol violations deep inside the hierarchy or measure functional

performance characteristics such as FIFO fill levels and arbitration fairness. Although

this logic is redundant, in that it is not a requirement of the specification, including it

can save a huge amount of effort when debugging problems or trying to replicate

failures in the simulation environment.

• Functional coverage measurement: functional coverage monitors can be

synthesized into the FPGA to ensure the system tests manage to generate appropriate

scenarios. In general the majority of the functional coverage should be collected in the

simulation environment; however coverage holes, particularly related to features

targeted for validation in the FPGA, can be implemented using embedded counters

and state sequencers. Care is required to ensure the accuracy and relevance of this

coverage since it consumes FPGA resources. Such coverage can be extracted at the

end of a test using techniques such as uploading and post processing the FPGA

configuration chain, using dedicated scan chains for the coverage accessed via a

JTAG TAP controller, or using the FPGA debug probing infrastructure.

Mark Litterick, Verification Consultant, Verilab GmbH. mark.litterick@verilab.com

Copyright © 2006 Verilab 4 CONFIDENTIAL

You might also like

- App Depedent TestingDocument10 pagesApp Depedent TestingSumit RajNo ratings yet

- Key Concerns For Verifying Socs: Figure 1: Important Areas During Soc VerificationDocument5 pagesKey Concerns For Verifying Socs: Figure 1: Important Areas During Soc Verificationsiddu. sidduNo ratings yet

- Protyping and EmulationDocument49 pagesProtyping and EmulationAbdur-raheem Ashrafee Bepar0% (1)

- DFT Advantages Goals Types Cons Assignment 1Document12 pagesDFT Advantages Goals Types Cons Assignment 1senthilkumarNo ratings yet

- Chip-Level FPGA Verification: How To Use A VHDL Test Bench To Perform A System Auto TestDocument11 pagesChip-Level FPGA Verification: How To Use A VHDL Test Bench To Perform A System Auto TestMitesh KhadgiNo ratings yet

- EmulationDocument37 pagesEmulationsabareeNo ratings yet

- Emulation MethodologyDocument7 pagesEmulation MethodologyRamakrishnaRao SoogooriNo ratings yet

- EEL 5722C Field-Programmable Gate Array Design: Lecture 19: Hardware-Software Co-SimulationDocument23 pagesEEL 5722C Field-Programmable Gate Array Design: Lecture 19: Hardware-Software Co-SimulationArun MNo ratings yet

- Challenges of SoCc VerificationDocument6 pagesChallenges of SoCc Verificationprodip7No ratings yet

- DFT Notes PrepDocument13 pagesDFT Notes PrepBrijesh S D100% (2)

- Fast SimulationDocument5 pagesFast Simulationsunil3679No ratings yet

- Verification TechniquesDocument11 pagesVerification Techniquesdu.le0802No ratings yet

- Embedded Jtag Boundary Scan TestDocument9 pagesEmbedded Jtag Boundary Scan TestAurelian ZaharescuNo ratings yet

- Performance Testing TestRailDocument26 pagesPerformance Testing TestRailfxgbizdcsNo ratings yet

- Design-for-Testability Verification 10X Faster with Hardware EmulationDocument3 pagesDesign-for-Testability Verification 10X Faster with Hardware EmulationRod SbNo ratings yet

- 10 Hymel Conger AbstractDocument2 pages10 Hymel Conger AbstractTamiltamil TamilNo ratings yet

- Ebook Performance TestingDocument44 pagesEbook Performance TestinglugordonNo ratings yet

- Pre Silicon Validation WhitepaperDocument3 pagesPre Silicon Validation WhitepaperSrivatsava GuduriNo ratings yet

- Paper Wcas RevisedDocument4 pagesPaper Wcas RevisedJoão Luiz CarvalhoNo ratings yet

- DFT Basics: Detection Strategies and TypesDocument5 pagesDFT Basics: Detection Strategies and TypessenthilkumarNo ratings yet

- WP 01208 Hardware in The LoopDocument9 pagesWP 01208 Hardware in The LoopPaulo RogerioNo ratings yet

- DVCon Europe 2015 TA1 5 PaperDocument7 pagesDVCon Europe 2015 TA1 5 PaperJon DCNo ratings yet

- Final - Volume 3 No 4Document105 pagesFinal - Volume 3 No 4UbiCC Publisher100% (1)

- 1.1 Testability and Design For Test (DFT)Document29 pages1.1 Testability and Design For Test (DFT)spaulsNo ratings yet

- OVM-UVM End Burn ChurnDocument9 pagesOVM-UVM End Burn ChurnSamNo ratings yet

- Debugging Tools ForDocument10 pagesDebugging Tools ForVijay Kumar KondaveetiNo ratings yet

- RAC System Test Plan Outline 11gr2 v2 0Document39 pagesRAC System Test Plan Outline 11gr2 v2 0Dinesh KumarNo ratings yet

- Developing A Rapid Prototyping Method Using A Matlab/Simulink/Fpga Development Environment To Enable Importing Legacy CodeDocument7 pagesDeveloping A Rapid Prototyping Method Using A Matlab/Simulink/Fpga Development Environment To Enable Importing Legacy Codebeddar antarNo ratings yet

- Development of JTAG Verification IP in UVM MethodologyDocument3 pagesDevelopment of JTAG Verification IP in UVM MethodologyKesavaram ChallapalliNo ratings yet

- Essential Guide to Dynamic SimulationDocument6 pagesEssential Guide to Dynamic SimulationMohammad Yasser RamzanNo ratings yet

- PLC Programming & Implementation: An Introduction to PLC Programming Methods and ApplicationsFrom EverandPLC Programming & Implementation: An Introduction to PLC Programming Methods and ApplicationsNo ratings yet

- Factory Acceptance Testing Ensures Equipment Meets SpecsDocument8 pagesFactory Acceptance Testing Ensures Equipment Meets Specspsn_kylm100% (2)

- Testing of Embedded System: Version 2 EE IIT, Kharagpur 1Document18 pagesTesting of Embedded System: Version 2 EE IIT, Kharagpur 1Sunil PandeyNo ratings yet

- Verification Ch3Document27 pagesVerification Ch3Darshan Iyer NNo ratings yet

- Incisive Enterprise PalladiumDocument7 pagesIncisive Enterprise PalladiumSamNo ratings yet

- Introduction To Co-Simulation: CPSC689-603 Hardware-Software Codesign of Embedded SystemsDocument22 pagesIntroduction To Co-Simulation: CPSC689-603 Hardware-Software Codesign of Embedded SystemsMuni Sankar MatamNo ratings yet

- Questa VisualizerDocument2 pagesQuesta VisualizerVictor David SanchezNo ratings yet

- Test Driven Development of Embedded SystemsDocument7 pagesTest Driven Development of Embedded Systemsjpr_joaopauloNo ratings yet

- RAC System Test Plan Outline 11gr2 v2 4Document39 pagesRAC System Test Plan Outline 11gr2 v2 4xwNo ratings yet

- Design For Testability of FPGA BlocksDocument7 pagesDesign For Testability of FPGA BlocksscholarankurNo ratings yet

- Lec 3 Tester Foundation Level CTFLDocument80 pagesLec 3 Tester Foundation Level CTFLPhilip WagihNo ratings yet

- uMaster_e-JTAGDocument4 pagesuMaster_e-JTAGZemeteNo ratings yet

- Testing FPGA Designs Requires Advanced VerificationDocument4 pagesTesting FPGA Designs Requires Advanced VerificationNitu VlsiNo ratings yet

- 00966777Document9 pages00966777arthy_mariappan3873No ratings yet

- DFT Basics and TypesDocument17 pagesDFT Basics and TypessenthilkumarNo ratings yet

- CVAX and Rigel Development ProcessDocument23 pagesCVAX and Rigel Development ProcessMat DalgleishNo ratings yet

- Design Methodologies and Tools for Developing Complex SystemsDocument16 pagesDesign Methodologies and Tools for Developing Complex SystemsElias YeshanawNo ratings yet

- Performance of Java Application - Part 1Document9 pagesPerformance of Java Application - Part 1manjiri510No ratings yet

- MatterDocument47 pagesMatterDevanand T SanthaNo ratings yet

- Testing of Embedded SystemsDocument18 pagesTesting of Embedded Systemsnagabhairu anushaNo ratings yet

- Testing System Clocks With Boun - AssetDocument10 pagesTesting System Clocks With Boun - AssetcarlosNo ratings yet

- FPGA Test Time Reduction Through A Novel Interconnect Testing SchemeDocument9 pagesFPGA Test Time Reduction Through A Novel Interconnect Testing SchemeCherupalli AkshithaNo ratings yet

- Next Generation Factory Acceptance Test: April 2012Document7 pagesNext Generation Factory Acceptance Test: April 2012Anuradha ChathurangaNo ratings yet

- Performance Testing: Key ConsiderationsDocument9 pagesPerformance Testing: Key ConsiderationssahilNo ratings yet

- Faulttolerancech5 150426005118 Conversion Gate02Document24 pagesFaulttolerancech5 150426005118 Conversion Gate02Sofiene GuedriNo ratings yet

- HW SW Lecture15 SimumlationDocument22 pagesHW SW Lecture15 SimumlationAkshay DoshiNo ratings yet

- NI LabVIEW For CompactRIO Developer's Guide-85-117Document33 pagesNI LabVIEW For CompactRIO Developer's Guide-85-117MANFREED CARVAJAL PILLIMUENo ratings yet

- Module-5 STDocument43 pagesModule-5 STRama D NNo ratings yet

- Embedded Systems Design with Platform FPGAs: Principles and PracticesFrom EverandEmbedded Systems Design with Platform FPGAs: Principles and PracticesRating: 5 out of 5 stars5/5 (1)

- Instructors: Dr. Phillip JonesDocument44 pagesInstructors: Dr. Phillip JonesskarthikpriyaNo ratings yet

- NBA QP FORMAT - Cycle Test 01 - III Yr - EHOSDocument6 pagesNBA QP FORMAT - Cycle Test 01 - III Yr - EHOSskarthikpriyaNo ratings yet

- MPC University LAB QuestionsDocument4 pagesMPC University LAB QuestionsskarthikpriyaNo ratings yet

- Cpre 288 - Introduction To Embedded Systems: Instructors: Dr. Phillip JonesDocument89 pagesCpre 288 - Introduction To Embedded Systems: Instructors: Dr. Phillip JonesskarthikpriyaNo ratings yet

- Cla1 - EhosDocument4 pagesCla1 - EhosskarthikpriyaNo ratings yet

- Conference ContentDocument2 pagesConference ContentskarthikpriyaNo ratings yet

- Water 1Document1 pageWater 1skarthikpriyaNo ratings yet

- Short Channel Effects and ScalingDocument23 pagesShort Channel Effects and ScalingskarthikpriyaNo ratings yet

- VLSI Design Course ECE Professional CoreDocument2 pagesVLSI Design Course ECE Professional CoreskarthikpriyaNo ratings yet

- 18CSC202J Set2Document1 page18CSC202J Set2skarthikpriyaNo ratings yet

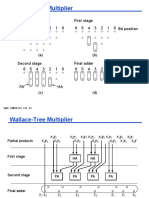

- Wallace-Tree Multiplier: 6 5 4 3 2 1 0 6 5 4 3 2 1 0 Partial Products First Stage Bit PositionDocument2 pagesWallace-Tree Multiplier: 6 5 4 3 2 1 0 6 5 4 3 2 1 0 Partial Products First Stage Bit PositionskarthikpriyaNo ratings yet

- Short Channel Effects and ScalingDocument23 pagesShort Channel Effects and ScalingskarthikpriyaNo ratings yet

- M.E. Computer Science and Engineering Curriculum and SyllabusDocument99 pagesM.E. Computer Science and Engineering Curriculum and SyllabusskarthikpriyaNo ratings yet

- Conference ContentDocument2 pagesConference ContentskarthikpriyaNo ratings yet

- Verilog Quiz1Document2 pagesVerilog Quiz1skarthikpriyaNo ratings yet

- 18CSC202J Set1Document1 page18CSC202J Set1skarthikpriyaNo ratings yet

- The Interntional Womens Day 1Document1 pageThe Interntional Womens Day 1Karthik SekharNo ratings yet

- UML - Deployment Diagrams - Tutorialspoint PDFDocument3 pagesUML - Deployment Diagrams - Tutorialspoint PDFskarthikpriyaNo ratings yet

- Configure Serial Communication InterfaceDocument31 pagesConfigure Serial Communication InterfaceskarthikpriyaNo ratings yet

- Optimize CPU time with 8279 keyboard display controllerDocument37 pagesOptimize CPU time with 8279 keyboard display controllerNihar PandaNo ratings yet

- 18CSC202J Set1 Even Reg NoDocument1 page18CSC202J Set1 Even Reg NoskarthikpriyaNo ratings yet

- UML Component Diagram TutorialDocument3 pagesUML Component Diagram TutorialskarthikpriyaNo ratings yet

- 18CSC202J Set2 ODD Reg NoDocument1 page18CSC202J Set2 ODD Reg NoskarthikpriyaNo ratings yet

- SCS1202 - Object Oriented Programming Unit 2 Classes and ObjectsDocument17 pagesSCS1202 - Object Oriented Programming Unit 2 Classes and ObjectsakjissNo ratings yet

- M.E. Computer Science and Engineering Curriculum and SyllabusDocument99 pagesM.E. Computer Science and Engineering Curriculum and SyllabusskarthikpriyaNo ratings yet

- UML Component Diagram TutorialDocument3 pagesUML Component Diagram TutorialskarthikpriyaNo ratings yet

- CDC Question TemplateDocument4 pagesCDC Question TemplateskarthikpriyaNo ratings yet

- Optimize CPU time with 8279 keyboard display controllerDocument37 pagesOptimize CPU time with 8279 keyboard display controllerNihar PandaNo ratings yet

- Configure Serial Communication InterfaceDocument31 pagesConfigure Serial Communication InterfaceskarthikpriyaNo ratings yet

- Xilinx SDKDocument11 pagesXilinx SDKskarthikpriyaNo ratings yet

- Manual Mimaki Cg60sriiiDocument172 pagesManual Mimaki Cg60sriiirobert landonNo ratings yet

- LOAD MANNA How to Download and Install the Loadmanna AppDocument35 pagesLOAD MANNA How to Download and Install the Loadmanna AppFred Astair Nieva Santelices100% (1)

- Fx-3650P User ManualDocument61 pagesFx-3650P User ManualWho Am INo ratings yet

- Ethical Hacking Course - ISOEH and BEHALA COLLEGE - PDFDocument4 pagesEthical Hacking Course - ISOEH and BEHALA COLLEGE - PDFLn Amitav BiswasNo ratings yet

- Geo5 Rock StabilityDocument8 pagesGeo5 Rock StabilityAlwin Raymond SolemanNo ratings yet

- Datasheet 125vdcDocument2 pagesDatasheet 125vdcRenato AndradeNo ratings yet

- Safe use and storage of toolsDocument4 pagesSafe use and storage of toolsMM Ayehsa Allian SchückNo ratings yet

- Mechanical BIM Engineer ResumeDocument24 pagesMechanical BIM Engineer ResumerameshNo ratings yet

- Mlacp Server SupportDocument26 pagesMlacp Server SupporttoicantienNo ratings yet

- FinalpayrollsmsdocuDocument127 pagesFinalpayrollsmsdocuapi-271517277No ratings yet

- Ul MCCBDocument112 pagesUl MCCBbsunanda01No ratings yet

- Na U4m11l01 PDFDocument10 pagesNa U4m11l01 PDFEzRarezNo ratings yet

- Husnain Sarfraz: Q No 1: Explain The Value of Studying ManagementDocument4 pagesHusnain Sarfraz: Q No 1: Explain The Value of Studying ManagementRadey32167No ratings yet

- 01 Analyze The Power Regulator CircuitDocument29 pages01 Analyze The Power Regulator Circuiterick ozoneNo ratings yet

- Estimate Distance Measurement Using Nodemcu Esp8266 Based On Rssi TechniqueDocument5 pagesEstimate Distance Measurement Using Nodemcu Esp8266 Based On Rssi TechniqueBrendo JustinoNo ratings yet

- EECS3000-Topic 3 - Privacy IssuesDocument5 pagesEECS3000-Topic 3 - Privacy Issuesahmadhas03No ratings yet

- Communication Protocols List PDFDocument2 pagesCommunication Protocols List PDFSarahNo ratings yet

- Hitachi Travelstar 5K100Document2 pagesHitachi Travelstar 5K100Toni CuturaNo ratings yet

- Siemens SIMATIC Step 7 Programmer's HandbookDocument57 pagesSiemens SIMATIC Step 7 Programmer's HandbookEka Pramudia SantosoNo ratings yet

- CXW01 - Campus Switching Fundamentals - Lab GuideDocument19 pagesCXW01 - Campus Switching Fundamentals - Lab Guidetest testNo ratings yet

- Tutorial 2Document3 pagesTutorial 2Stephen KokNo ratings yet

- 6 Strategies For Prompt EngineeringDocument9 pages6 Strategies For Prompt EngineeringSyed MurtazaNo ratings yet

- CV Yaumil FirdausDocument3 pagesCV Yaumil FirdausYaumil FirdausNo ratings yet

- Pointers: M. Usman GhousDocument34 pagesPointers: M. Usman GhousMalik Farrukh RabahNo ratings yet

- Netwrix Auditor For Windows File Servers: Quick-Start GuideDocument24 pagesNetwrix Auditor For Windows File Servers: Quick-Start GuidealimalraziNo ratings yet

- Makex Brochure MainDocument12 pagesMakex Brochure MainAtharv BalujaNo ratings yet

- CYANStart Service Manual ENG 20130110Document34 pagesCYANStart Service Manual ENG 20130110Юрий Скотников50% (6)

- Design and Implementation of Oinline Course Registration and Result SystemDocument77 pagesDesign and Implementation of Oinline Course Registration and Result SystemAdams jamiu67% (3)

- List of Companies in Bangalore LocationDocument13 pagesList of Companies in Bangalore LocationKaisar HassanNo ratings yet

- EEM424 Introduction to Design of ExperimentsDocument45 pagesEEM424 Introduction to Design of ExperimentsNur AfiqahNo ratings yet