Conv - Forward: Int Int

Uploaded by

Dharshinipriya Rajasekar
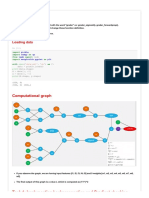

# GRADED FUNCTION: conv_forward

import numpy as np

# Note: zero_pad() and conv_single_step() are called below but are not defined in this document.

def conv_forward(A_prev, W, b, hparameters):
    """
    Implements the forward propagation for a convolution function

    Arguments:
    A_prev -- output activations of the previous layer, numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)
    W -- Weights, numpy array of shape (f, f, n_C_prev, n_C)
    b -- Biases, numpy array of shape (1, 1, 1, n_C)
    hparameters -- python dictionary containing "stride" and "pad"

    Returns:
    Z -- conv output, numpy array of shape (m, n_H, n_W, n_C)
    cache -- cache of values needed for the conv_backward() function
    """

    ### START CODE HERE ###

    # Retrieve dimensions from A_prev's shape (≈1 line)
    (m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape

    # Retrieve dimensions from W's shape (≈1 line)
    (f, f, n_C_prev, n_C) = W.shape

    # Retrieve information from "hparameters" (≈2 lines)
    stride = hparameters['stride']
    pad = hparameters['pad']

    # Compute the dimensions of the CONV output volume using the formula given above.
    # Hint: use int() to floor. (≈2 lines)
    n_H = int((n_H_prev - f + 2*pad)/stride) + 1
    n_W = int((n_W_prev - f + 2*pad)/stride) + 1

    # Initialize the output volume Z with zeros. (≈1 line)
    Z = np.zeros((m, n_H, n_W, n_C))

    # Create A_prev_pad by padding A_prev
    A_prev_pad = zero_pad(A_prev, pad)

    for i in range(m):                      # loop over the batch of training examples
        a_prev_pad = A_prev_pad[i]          # select ith training example's padded activation
        for h in range(n_H):                # loop over vertical axis of the output volume
            for w in range(n_W):            # loop over horizontal axis of the output volume
                for c in range(n_C):        # loop over channels (= #filters) of the output volume

                    # Find the corners of the current "slice" (≈4 lines)
                    # Each window is f wide and starts at a multiple of the stride.
                    vert_start = h * stride
                    vert_end = vert_start + f
                    horiz_start = w * stride
                    horiz_end = horiz_start + f

                    # Use the corners to define the (3D) slice of a_prev_pad (≈1 line)
                    a_slice_prev = a_prev_pad[vert_start:vert_end, horiz_start:horiz_end, :]

                    # Convolve the (3D) slice with the correct filter W and bias b,
                    # to get back one output neuron. (≈1 line)
                    Z[i, h, w, c] = conv_single_step(a_slice_prev, W[:,:,:,c], b[:,:,:,c])

    ### END CODE HERE ###

    # Making sure your output shape is correct
    assert(Z.shape == (m, n_H, n_W, n_C))

    # Save information in "cache" for the backprop
    cache = (A_prev, W, b, hparameters)

    return Z, cache
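conv_forward calls two helpers, zero_pad and conv_single_step, that are not shown in this document. The sketch below is one plausible pair of definitions, assuming zero_pad pads only the height and width axes with zeros and conv_single_step returns the sum of the elementwise product of a slice and a filter plus a scalar bias. These bodies are assumptions for illustration, not the original author's code.

```python
import numpy as np

def zero_pad(X, pad):
    # Assumed behavior: pad the height and width axes of a
    # (m, n_H, n_W, n_C) batch with `pad` zeros on each side.
    return np.pad(X, ((0, 0), (pad, pad), (pad, pad), (0, 0)),
                  mode="constant", constant_values=0)

def conv_single_step(a_slice_prev, W, b):
    # Assumed behavior: multiply the slice and the filter elementwise,
    # sum every entry, then add the (single-element) bias as a scalar.
    return np.sum(a_slice_prev * W) + np.asarray(b).item()

# Small sanity check on hand-computable inputs.
X = np.ones((2, 3, 3, 1))
print(zero_pad(X, 1).shape)  # (2, 5, 5, 1)
print(conv_single_step(np.ones((2, 2, 3)),
                       np.full((2, 2, 3), 2.0),
                       np.array([[[1.0]]])))  # 25.0
```

With helpers of this kind defined before the call, the test cell that follows can run end to end.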

In [42]:

np.random.seed(1)

A_prev = np.random.randn(10,4,4,3)

W = np.random.randn(2,2,3,8)

b = np.random.randn(1,1,1,8)

hparameters = {"pad": 2, "stride": 1}

Z, cache_conv = conv_forward(A_prev, W, b, hparameters)

print("Z's mean =", np.mean(Z))

print("cache_conv[0][1][2][3] =", cache_conv[0][1][2][3])
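As a quick check on the output-size formula used in conv_forward, the sketch below evaluates it for the test inputs above (4x4 input, 2x2 filter, pad 2, stride 1) and for a stride of 2. The function name conv_output_size is hypothetical and only restates the formula from the code.

```python
def conv_output_size(n_prev, f, pad, stride):
    # floor((n_prev - f + 2*pad) / stride) + 1, using integer division to floor
    return (n_prev - f + 2 * pad) // stride + 1

print(conv_output_size(4, 2, 2, 1))  # 7
print(conv_output_size(4, 2, 2, 2))  # 4
```

For the test cell, this gives n_H = n_W = 7, so Z should have shape (10, 7, 7, 8).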
