What Do Data Really Mean?

Research Findings, Meta-Analysis, and Cumulative

Knowledge in Psychology

Frank L. Schmidt College of Business, University of Iowa

How should data be interpreted to optimize the possibilities for cumulative scientific knowledge? Many believe that traditional data interpretation procedures based on statistical significance tests reduce the impact of sampling error on scientific inference. Meta-analysis shows that the significance test actually obscures underlying regularities and processes in individual studies and in research literatures, leading to systematically erroneous conclusions. Meta-analysis methods can solve these problems, and have done so in some areas. However, meta-analysis represents more than merely a change in methods of data analysis. It requires major changes in the way psychologists view the general research process. Views of the scientific value of the individual empirical study, the current reward structure in research, and even the fundamental nature of scientific discovery may change.

American Psychologist, October 1992, Vol. 47, No. 10, 1173-1181. Copyright 1992 by the American Psychological Association, Inc. 0003-066X/92/$2.00

Frederick A. King served as action editor for this article. An earlier and shorter version was presented as an invited address at the Second Annual Convention of the American Psychological Society, Dallas, TX, June 8, 1990. I would like to thank John Hunter, Deniz Ones, Michael Judiesch, and C. Viswesvaran for helpful comments on a draft of this article. Any errors remaining are mine.

Correspondence concerning this article should be addressed to Frank L. Schmidt, Department of Management and Organization, College of Business, University of Iowa, Iowa City, IA 52242.

Many today are disappointed in the progress that psychology has made in this century. Numerous explanations have been advanced for why progress has been slow. Faulty philosophy of science assumptions have been cited (e.g., excessive emphasis on logical positivism; Glymour, 1980; Schlagel, 1979; Suppe, 1977; Toulmin, 1979). The negative influence of behaviorism has been discussed and debated (e.g., Koch, 1964; Mackenzie, 1977; McKeachie, 1976; Schmidt, Hunter, & Pearlman, 1981). This article focuses on a reason that has been less frequently discussed: the methods psychologists (and other social scientists) have traditionally used to analyze and interpret their data, both in individual studies and in research literatures. This article advances three arguments: (a) Traditional data analysis and interpretation procedures based on statistical significance tests militate against the discovery of the underlying regularities and relationships that are the foundation for scientific progress; (b) meta-analysis methods can solve this problem, and they have already begun to do so in some areas; and (c) meta-analysis is not merely a new way of doing literature reviews. It is a new way of thinking about the meaning of data, requiring that we change our views of the individual empirical study and perhaps even our views of the basic nature of scientific discovery.

Traditional Methods Versus Meta-Analysis

Psychology and the social sciences have traditionally relied heavily on the statistical significance test in interpreting the meaning of data, both in individual studies and in research literatures. Following the lead of Fisher (1932), null hypothesis significance testing has been the dominant data analysis procedure. The prevailing decision rule, as Oakes (1986) has demonstrated empirically, has been this: If the statistic (t, F, etc.) is significant, there is an effect (or a relation); if it is not significant, then there is no effect (or relation). (See also Cohen, 1990, 1992.) These prevailing interpretational procedures have focused heavily on the control of Type I errors, with little attention being paid to the control of Type II errors. A Type I error (alpha error) consists of concluding there is a relation or an effect when there is not. A Type II error (beta error) consists of the opposite: concluding there is no relation or effect when there is. Alpha levels have been controlled at the .05 or .01 levels, but beta levels have by default been allowed to climb to high levels, often in the 50%-80% range (Cohen, 1962, 1988, 1990; Schmidt, Hunter, & Urry, 1976). To illustrate this, let us look at an example from a hypothetical area of experimental psychology.

Suppose the research question is the effect of a certain drug on learning, and suppose the actual effect of a particular dosage is .50 of a standard deviation increase in the amount learned. An effect size of .50, considered medium size by Cohen (1988), corresponds to the difference between the 50th and 69th percentiles in a normal distribution. With an effect size of this magnitude, 69% of the experimental group would exceed the mean of the control group if both were normally distributed. Many reviews of various literatures have found relations of this general magnitude (Hunter & Schmidt, 1990b). Now suppose a large number of studies are conducted on this dosage, each with 15 rats in the experimental group and 15 in the control group.

Figure 1 shows the distribution of effect sizes (d values) expected under the null hypothesis. All variability around the mean value of zero is due to sampling error. To be significant at the .05 level (with a one-tailed test), the effect size must be .62 or larger. If the null hypothesis is true, only 5% will be that large or larger. In analyzing
their data, researchers typically focus only on the information in Figure 1. Most believe that their significance test limits the probability of an error to 5%.

Figure 1
The Null Distribution of d Values in a Series of Experiments
[Figure: normal curve of d values centered on 0, horizontal axis from -.6 to .6; Total N = 30; SE = .38; upper tail of 5% marked. Required for significance: d_c = 1.645(.38) = .62 (one-tailed test, alpha = .05).]

Actually, in this example the probability of a Type I error is zero, not 5%. Because the actual effect size is always .50, the null hypothesis is always false, and therefore there is no possibility of a Type I error. One cannot falsely conclude there is an effect when in fact there is an effect. When the null hypothesis is false, the only kind of error that can occur is a Type II error: failure to detect the effect that is present. The only type of error that can occur is the type that is not controlled. A reviewer of this article asked for more elaboration on this point. In any given study, the null hypothesis is either false or not false in the population in question. If the null is false (as in this example), there is a nonzero effect in the population. A Type I error consists of concluding there is an effect when no effect exists. Because an effect does, in fact, exist here, it is not possible to make a Type I error (Hunter & Schmidt, 1990b, pp. 23-31).

Figure 2 shows not only the irrelevant null distribution but also the actual distribution of observed effect sizes across these studies. The mean of this distribution is the true value of .50, but because of sampling error there is substantial variation in observed effect sizes. Again, to be significant, the effect size must be .62 or larger. Only 37% of studies conducted will obtain a significant effect size; thus statistical power for these studies is only .37. That is, the true effect size of the drug is always .50; it is never zero. Yet it is only detected as significant in 37% of the studies. The error rate in this research literature is 63%, not 5% as many would mistakenly believe.

Figure 2
Statistical Power in a Series of Experiments
[Figure: null distribution and actual distribution of observed d values, horizontal axis from -.6 to 1.1; N_E = N_C = 15; Total N = 30; δ = .50; SE = .38. Required for significance: d_c = .62 (one-tailed test, alpha = .05). Statistical power = .37; Type II error rate = 63%; Type I error rate = 0%.]

Most researchers in experimental psychology would traditionally have used analysis of variance (ANOVA) to analyze these data. This means the significance test would be two-tailed rather than one-tailed, as in our example. With a two-tailed test (i.e., one-way ANOVA), statistical power is even lower: .26 instead of .37. The Type II error rate (and hence the overall error rate) would be 74%. Also, this example assumes use of a z test; any researchers not using ANOVA would probably use a t test. For a one-tailed t test with alpha equal to .05 and degrees of freedom of 28, the effect size (d value) must be .65 to be significant. (The t value must be at least 1.70, instead of the 1.645 required for the z test.) With the t test, statistical power would also be lower: .35 instead of .37. Thus both commonly employed alternative significance tests would yield even lower statistical power.

Furthermore, the studies that are significant yield distorted estimates of effect sizes. The true effect size is always .50; all departures from .50 are due solely to sampling error. But the minimum value required for significance is .62. The obtained d value must be .12 above its true value (24% larger than its real value) to be significant. The average of the significant d values is .89, which is 78% larger than the true value.

In any study in this example that by chance yields the correct value of .50, the conclusion under the prevailing decision rule will be that there is no relationship. That is, it is only the studies that are by chance quite inaccurate that lead to the correct conclusion that a relationship exists.

How would this body of studies be interpreted as a research literature? There are two interpretations that would have traditionally been frequently accepted. The first is based on the traditional voting method (Hedges & Olkin, 1980; Light & Smith, 1971). Using this method, one would note that 63% of the studies found "no rela-
Figure 3
Meta-Analysis of the Drug Studies of Figure 1

I. Compute Actual Variance of Effect Sizes ($S_\delta^2$)
   1. $S_d^2 = .1444$ (Observed Variance of d Values)
   2. $S_e^2 = .1444$ (Variance Predicted from Sampling Error)
   3. $S_\delta^2 = S_d^2 - S_e^2$
   4. $S_\delta^2 = .1444 - .1444 = 0$ (True Variance of δ Values)

II. Compute Mean Effect Size ($\bar{\delta}$)
   1. $\bar{d} = .50$ (Mean Observed d Value)
   2. $\bar{\delta} = .50$
   3. $SD_\delta = 0$

III. Conclusion: There is only one effect size, and its value is .50 standard deviation.
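The numbers in Figures 1 to 3 can be reproduced with a few lines of arithmetic. The sketch below is illustrative only and is not the article's own procedure: it assumes a z test on normally distributed d values, takes δ = .50 and SE = .38 from the figures, and uses hypothetical helper names (`critical_d`, `power`, `true_variance`) that do not appear in the original.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, SD 1


def critical_d(se, alpha=0.05, two_tailed=False):
    """Smallest observed d that reaches significance under a z test."""
    tail = alpha / 2 if two_tailed else alpha
    return STD_NORMAL.inv_cdf(1 - tail) * se


def power(delta, se, alpha=0.05, two_tailed=False):
    """Probability that an observed d is significant when the true effect is delta."""
    observed = NormalDist(mu=delta, sigma=se)  # sampling distribution of d
    d_crit = critical_d(se, alpha, two_tailed)
    p = 1 - observed.cdf(d_crit)
    if two_tailed:
        p += observed.cdf(-d_crit)  # significant, but in the wrong direction
    return p


def true_variance(observed_var, sampling_var):
    """Observed variance minus sampling error variance, floored at zero."""
    return max(observed_var - sampling_var, 0.0)


# Values read from Figures 1 to 3 (assumed here, not recomputed from raw data).
DELTA, SE = 0.50, 0.38
OBSERVED_VARIANCE = 0.1444

one_tailed = power(DELTA, SE)
two_tailed = power(DELTA, SE, two_tailed=True)
residual = true_variance(OBSERVED_VARIANCE, SE ** 2)

print(f"critical d, one-tailed: {critical_d(SE):.3f}")
print(f"power, one-tailed: {one_tailed:.2f}")
print(f"power, two-tailed: {two_tailed:.2f}")
print(f"Type II error rate, one-tailed: {1 - one_tailed:.0%}")
print(f"estimated variance of population effect sizes: {residual:.4f}")
```

Under these assumptions the critical value comes out near .625 (shown as .62 in the figures), power rounds to .37 one-tailed and .26 two-tailed, and nothing is left of the observed variance once sampling error is subtracted, which is the conclusion of Figure 3.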

tionship." Because this is a majority of the studies, the conclusion would be that no relation exists. It is easy to see that this conclusion is false, yet many reviews in the past were conducted in just this manner (Hedges & Olkin, 1980). The second interpretation reads as follows: In 63% of the studies, the drug had no effect. However, in 37% of the studies, the drug did have an effect. Research is needed to identify the moderator variables (interactions) that cause the drug to have an effect in some studies but not in others. For example, perhaps the strain of rat used or the mode of injecting the drug affects study outcomes. This interpretation is also completely erroneous.

How would meta-analysis interpret these studies? Different approaches to meta-analysis use somewhat different quantitative procedures (Bangert-Drowns, 1986; Glass, McGaw, & Smith, 1981; Hedges & Olkin, 1985; Hunter, Schmidt, & Jackson, 1982; Hunter & Schmidt, 1990b; Rosenthal, 1984, 1991). I illustrate this example using the methods presented by Hunter et al. (1982) and Hunter and Schmidt (1990b). Figure 3 shows that meta-analysis reaches the correct conclusion. Meta-analysis first computes the variance of the observed d values. Next, it uses the standard formula for the sampling error variance of d values (e.g., see Hunter & Schmidt, 1990b, chap. 7) to determine how much variance would be expected in observed d values from sampling error alone. The amount of real variance in population d values (δ values) is estimated as the difference between the two. In our example, this difference is zero, indicating correctly that there is only one population value. This single population value is estimated as the average observed value, which is .50 here, the correct value. If the number of studies is large, the average d value will be close to the true (population) value because sampling errors are random and hence average out to zero. Note that these meta-analysis methods do not rely on statistical significance tests. Only effect sizes are used, and significance tests are not used in analyzing the effect sizes.

The data in this example are hypothetical. However, if one accepts the validity of basic statistical formulas for sampling error, one will have no reservations about this example. But the same principles do apply to real data, as shown next by an example from personnel selection. Table 1 shows observed validity coefficients from 21 studies of a single clerical test and a single measure of job performance. Each study has N = 68 (the median N in the literature in personnel psychology), and every study is a random draw (without replacement) from a single larger validity study with 1,428 subjects. The correlation in the large study (uncorrected for measurement error, range restriction, or other artifacts) is .22 (Schmidt, Ocasio, Hillery, & Hunter, 1985).

The validity is significant in eight (or 38%) of these studies, for an error rate of 62%. The traditional conclusion would be that this test is valid in 38% of the organizations, and invalid in the rest, and that in organizations where it is valid, its mean observed validity is .33 (which is 50% larger than its real value). Meta-analysis of these validities indicates that the mean is .22 and all variance in the coefficients is due solely to sampling error. The meta-analysis conclusions are correct; the traditional conclusions are false.

Reliance on statistical significance testing in psychology and the social sciences has long led to frequent
The result is a further increase in the Type II error rate—

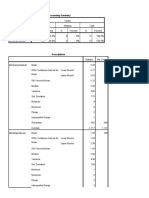

Table 1 an average increase of 17%.

21 Validity Studies (N = 68 Each) These examples of meta-analysis have examined only

the effects of sampling error. There are other statistical

Study Observed validity Study Observed validity

and measurement artifacts that cause artifactual variation

1 .04 12 .11 in effect sizes and correlations across studies—for ex-

2 .14 13 .21 ample, differences between studies in measurement error,

3 .31* 14 .37* range restriction, and dichotomization of measures. Also,

4 .12 15 .14 in meta-analysis, mean d values and mean correlations

5 .38* 16 .29* must be corrected for attenuation due to such artifacts

6 .27* 17 .26* as measurement error and dichotomization of measures.

7 .15 18 .17 These artifacts are beyond the scope of this presentation

8 .36* 19 .39* but are covered in detail elsewhere (Hunter & Schmidt,

9 .20 20 .22 1990a, 1990b). The purpose here is only to demonstrate

10 .02 21 .21

11

that traditional data analysis and interpretation methods

.23

logically lead to erroneous conclusions and to demon-

• p < .05, two-tailed. strate that meta-analysis can solve these problems.

Applications of Meta-Analysis in Industrial-

serious errors in interpreting the meaning of data (Hunter

Organizational (IO) Psychology

& Schmidt, 1990b, pp. 29-42,483-484), errors that have Meta-analysis methods have been applied to a variety of

systematically retarded the growth of cumulative knowl- research literatures in I/O psychology. The following are

edge. Yet it has been remarkably difficult to wean social some examples: (a) correlates of role conflict and role

science researchers away from their entrancement with ambiguity (Fisher & Gittelson, 1983; Jackson & Schuler,

significance testing (Oakes, 1986). One can only hope that 1985); (b) relation of job satisfaction to absenteeism

lessons from meta-analysis will finally stimulate change. (Hackett & Guion, 1985; Terborg & Lee, 1982); (c) re-

But time after time, even in recent years, I and my re- lation between job performance and turnover (McEvoy

search colleagues have seen researchers who have been & Cascio, 1987); (d) relation between job satisfaction and

taught to understand the deceptiveness of significance job performance (Iaffaldono & Muchinsky, 1985; Petty,

testing sink back, in weak moments, into the nearly in- McGee, & Cavender, 1984); (e) effects of nonselection

curable habit of reliance on significance testing. I have organizational interventions on employee output and

occasionally done it myself. The psychology of addiction productivity (Guzzo, Jette, & Katzell, 1985); (f) effects

to significance testing would be a fascinating research of realistic job previews on employee turnover, perfor-

area. This addiction must be overcome; but for most re- mance, and satisfaction (McEvoy & Cascio, 1985; Pre-

searchers, it will not be easy. mack & Wanous, 1985); (g) evaluation of Fiedler's theory

In these examples, the only type of error that is con- of leadership (Peters, Harthe, & Pohlman, 1985); and (h)

trolled—Type I error—is the type that cannot occur. It accuracy of self-ratings of ability and skill (Mabe & West,

is likely that, in most areas of research, as time goes by 1982). These applications have been to both correlational

and researchers gain a better and better understanding of and experimental literatures. Sufficient meta-analyses

the processes they are studying, it is less and less frequently have been published in I/O psychology that a review of

the case that the null hypothesis is "true," and more and meta-analytic studies in this area has now been published.

more likely that the null is false. Thus Type I error de- This lengthy review (Hunter & Hirsh, 1987) reflects the

creases in importance, and Type II error increases in im- fact that this literature is now quite large. It is noteworthy

portance. This means that researchers should be paying that the review devotes considerable space to the devel-

increasing attention to Type II error and to statistical opment and presentation of theoretical propositions; this

power as time goes by. However, a recent review in Psy- is possible because the clarification of research literatures

chological Bulletin (Sedlmeier & Gigerenzer, 1989) con- produced by meta-analysis provides a basis for theory

cluded that the average statistical power of studies in one development that previously did not exist.

APA journal had declined from 46% to 37% over a 22- Although there have now been about 50 such pub-

year period, despite the earlier appeal by Cohen (1962) lished applications of meta-analysis in I/O psychology,

for attention to statistical power. Only two of the 64 ex- the most frequent application to date has been the ex-

periments reviewed even mentioned statistical power, and amination of the validity of employment tests and other

none computed estimates of power. The review concluded methods used in personnel selection. Meta-analysis has

that the decline in power was due to increased use of been used to test the hypothesis of situation-specific va-

alpha-adjusted procedures (such as the Newman-Keuls, lidity. In personnel selection it had long been believed

Duncan, and Scheffe procedures). That is, instead of at- that validity was specific to situations; that is, it was be-

tempting to reduce the Type II error rate, researchers had lieved that the validity of the same test for what appeared

been imposing increasingly stringent controls on Type I to be the same job varied from employer to employer,

errors—which probably cannot occur in most studies. region to region, across time periods, and so forth

1176 October 1992 • American Psychologist

(Schmidt et al., 1976). In fact, it was believed that the same test could have high validity (i.e., a high correlation with job performance) in one location or organization and be completely invalid (i.e., have zero validity) in another. This belief was based on the observation that obtained validity coefficients and statistical significance levels for similar or identical tests and jobs varied substantially across different studies conducted in different settings; that is, it was based on findings similar to those in Table 1. This variability was explained by postulating that jobs that appeared to be the same actually differed in important ways in the traits and abilities required to perform them. This belief led to a requirement for local (or situational) validity studies. It was held that validity had to be estimated separately for each situation by a study conducted in the setting; that is, validity findings could not be generalized across settings, situations, employers, and the like (Schmidt & Hunter, 1981). In the late 1970s and throughout the 1980s, meta-analyses of validity coefficients, called validity generalization studies, were conducted to test whether validity might not in fact be generalizable (Callender & Osburn, 1981; Hirsh, Northrop, & Schmidt, 1986; Pearlman, Schmidt, & Hunter, 1980; Schmidt, Gast-Rosenberg, & Hunter, 1980; Schmidt & Hunter, 1977; Schmidt, Hunter, Pearlman, & Shane, 1979). If all or most of the study-to-study variability in observed validities was due to artifacts, then the traditional belief in situational specificity of validity would be seen to be erroneous, and the conclusion would be that validity findings generalized.

To date, meta-analysis has been applied to over 500 research literatures in employment selection, each one representing a predictor-job performance combination. Several slightly different computational procedures for estimating the effects of artifacts have been evaluated and have been found to yield very similar results and conclusions (Callender & Osburn, 1980; Raju & Burke, 1983; Schmidt et al., 1980). In addition to ability and aptitude tests, predictors studied have included nontest procedures, such as evaluations of education and experience (McDaniel, Schmidt, & Hunter, 1988), interviews (McDaniel, Whetzel, Schmidt, & Mauer, 1991), and biodata scales (Rothstein, Schmidt, Erwin, Owens, & Sparks, 1990). In many cases, artifacts accounted for all variance in validities across studies; the average amount of variance accounted for by artifacts has been 80%-90% (Schmidt, Hunter, Pearlman, & Hirsh, 1985; Schmidt et al., in press). As an example, consider the relation between quantitative ability and overall job performance in clerical jobs (Pearlman et al., 1980). This substudy was based on 453 correlations computed on a total of 39,584 people. Seventy-seven percent of the variance of the observed validities was traceable to artifacts, leaving a negligible variance of .019. The mean validity was .47. Thus, integration of this large amount of data leads to the general (and generalizable) principle that the correlation between quantitative ability and clerical performance is approximately .47, with very little (if any) true variation around this value. Like other similar findings, this finding shows that the old belief that validities are situationally specific is erroneous (Schmidt & Hunter, 1981).

Today, many organizations, including the federal government, the U.S. Employment Service, and some large corporations, use validity generalization findings as the basis of their selection-testing programs. Validity generalization has been included in standard texts (e.g., Anastasi, 1982, 1988), in the Standards for Educational and Psychological Testing (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 1985), and in the Principles for the Validation and Use of Personnel Selection Procedures (APA, 1987). Proposals have been made to include validity generalization in the federal government's Uniform Guidelines on Employee Selection Procedures when this document is revised in the near future to reflect changes in the recently enacted Civil Rights Act of 1991. A recent report by the National Academy of Sciences (Hartigan & Wigdor, 1989) devotes a full chapter (chap. 6) to validity generalization and endorses its methods and assumptions.

Role of Meta-Analysis in Theory Development

The major task in any science is the development of theory. A good theory is simply a good explanation of the processes that actually take place in a phenomenon. For example, what actually happens when employees develop a high level of organizational commitment? Does job satisfaction develop first and then cause the development of commitment? If so, what causes job satisfaction to develop, and how does it have an effect on commitment? As another example, how do higher levels of mental ability cause higher levels of job performance: only by increasing job knowledge, or also by directly improving problem solving on the job? The social scientist is essentially a detective; his or her job is to find out why and how things happen the way they do. But to construct theories, one must first know some of the basic facts, such as the empirical relations among variables. These relations are the building blocks of theory. For example, if there is a high and consistent population correlation between job satisfaction and organization commitment, this will send theory development in particular directions. If the correlation between these variables is very low and consistent, theory development will branch in different directions. If the relation is known to be highly variable across organizations and settings, the theory developer will be encouraged to advance interactive or moderator-based theories. Meta-analysis provides these empirical building blocks for theory. Meta-analytic findings reveal what it is that needs to be explained by the theory.

Theories are causal explanations. The goal in every science is explanation, and explanation is always causal. In the behavioral and social sciences, the methods of path analysis (e.g., see Hunter & Gerbing, 1982; Kenny, 1979; Loehlin, 1987) can be used to test causal theories when the data meet the assumptions of the method. The relationships revealed by meta-analysis, the empirical
building blocks for theory, can be used in path analysis to test causal theories even when all the delineated relationships are observational rather than experimental. Experimentally determined relationships can also be entered into path analyses along with observationally based relations. It is necessary only to transform d values to correlations (Hunter & Schmidt, 1990b, chap. 7). Thus path analyses can be "mixed." Path analysis can be a useful tool for reducing the number of theories that could possibly be consistent with the data, sometimes to a very small number, and sometimes to only one theory (Hunter, 1988). Every such reduction in the number of possible theories is an advance in understanding.

A recent study (Schmidt, Hunter, & Outerbridge, 1986) is an example of this. We applied meta-analysis to studies reporting correlations among the following variables: General mental ability, job knowledge, job performance capability, supervisory ratings of job performance, and length of experience on the job. General mental ability was measured using standardized group intelligence tests; job knowledge was assessed by content-valid written measures of facts, principles, and methods needed to perform the particular job; job performance capability was measured using work samples that simulated or reproduced important tasks from the job, as revealed by job analysis; and job experience was a coding of months on the job in question. These correlations were corrected for measurement error, and the result was the matrix of meta-analytically estimated correlations shown in Table 2. We used these correlations to test a theory of the joint impact of general mental ability and job experience on job knowledge and job performance capability on the basis of methods described in Hunter and Gerbing (1982). Path analysis results are shown in Figure 4. These results indicate that the major impact of general mental ability on job performance capability (measured by the work sample) is indirect: Higher ability leads to increased acquisition of job knowledge, which in turn has a strong effect on job performance capability [(.46)(.66) = .30]. This indirect effect of ability is almost 4 times greater than the direct effect of ability on performance capability. The pattern for job experience is similar: The indirect effect through the acquisition of job knowledge is much greater than the direct effect on performance capability. The analysis also reveals that supervisory ratings of job performance are more heavily determined by employee job knowledge than by employee performance capabilities as measured by the work sample measures. I do not want to dwell at length on the findings of this study. The point is that this study is an example of a two-step process that uses meta-analysis in theory development:

Step 1. Use meta-analysis to obtain precise estimates of the relations among variables. Meta-analysis averages out sampling error deviations from correct values, and it corrects mean values for distortions due to measurement error and other artifacts. Meta-analysis results are not affected by the problems that logically distort the results and interpretations of significance tests.

Step 2. Use the meta-analytically derived estimates of relations in path analysis to test theories.

The Broader Impact of Meta-Analysis

Some have proposed that meta-analysis is nothing more than a new, more quantitative method of conducting literature reviews (Guzzo, Jackson, & Katzell, 1986). If this were true, then its impact could be fully evaluated by
Table 2
Original Correlation Matrix, Correlation Matrix Reproduced From Path Model, and the Difference Matrix

Variable                          A       K       P       S       E

Original matrix
Ability (A)                      --     0.46    0.38    0.16    0.00
Job knowledge (K)               0.46     --     0.80    0.42    0.57
Performance capability (P)      0.38    0.80     --     0.37    0.56
Supervisory ratings (S)         0.16    0.42    0.37     --     0.24
Job experience (E)              0.00    0.57    0.56    0.24     --

Reproduced matrix
Ability (A)                      --     0.46    0.38    0.19    0.00
Job knowledge (K)               0.46     --     0.80    0.41    0.57
Performance capability (P)      0.38    0.80     --     0.36    0.56
Supervisory ratings (S)         0.19    0.41    0.36     --     0.24
Job experience (E)              0.00    0.57    0.56    0.24     --

Difference matrix (original minus reproduced)
Ability (A)                      --     0.00    0.00   -0.03    0.00
Job knowledge (K)               0.00     --     0.00    0.01    0.00
Performance capability (P)      0.00    0.00     --     0.01    0.00
Supervisory ratings (S)        -0.03    0.01    0.01     --     0.00
Job experience (E)              0.00    0.00    0.00    0.00     --
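The fit check summarized in Table 2, and the indirect effect quoted in the text as (.46)(.66) = .30, can be sketched as follows. This is a minimal illustration and not the path-analysis procedure of Hunter and Gerbing (1982): the dictionaries simply copy the off-diagonal entries of Table 2, the two path coefficients are the ones quoted in the text, and all variable names are hypothetical.

```python
# Off-diagonal correlations from Table 2, keyed by variable pair.
# A = ability, K = job knowledge, P = performance capability,
# S = supervisory ratings, E = job experience.
original = {
    ("A", "K"): 0.46, ("A", "P"): 0.38, ("A", "S"): 0.16, ("A", "E"): 0.00,
    ("K", "P"): 0.80, ("K", "S"): 0.42, ("K", "E"): 0.57,
    ("P", "S"): 0.37, ("P", "E"): 0.56,
    ("S", "E"): 0.24,
}
reproduced = {
    ("A", "K"): 0.46, ("A", "P"): 0.38, ("A", "S"): 0.19, ("A", "E"): 0.00,
    ("K", "P"): 0.80, ("K", "S"): 0.41, ("K", "E"): 0.57,
    ("P", "S"): 0.36, ("P", "E"): 0.56,
    ("S", "E"): 0.24,
}

# Difference matrix: original minus reproduced, rounded to two decimals
# to match the precision of the table.
difference = {
    pair: round(original[pair] - reproduced[pair], 2) for pair in original
}
largest_misfit = max(abs(value) for value in difference.values())

# Indirect effect of ability on performance capability through job knowledge,
# using the two path coefficients quoted in the text.
path_ability_to_knowledge = 0.46
path_knowledge_to_performance = 0.66
indirect_effect = path_ability_to_knowledge * path_knowledge_to_performance

for pair, value in difference.items():
    if value != 0:
        print(f"{pair[0]}-{pair[1]}: original minus reproduced = {value:+.2f}")
print(f"largest absolute difference: {largest_misfit:.2f}")
print(f"indirect effect of A on P via K: {indirect_effect:.2f}")
```

Only the three correlations involving supervisory ratings differ between the two matrices, and none by more than .03, which is the sense in which the path model reproduces the meta-analytic correlations.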


Figure 4
Path Model and Path Coefficients
[Figure: path diagram linking General Mental Ability (A), Job Experience (E), Job Knowledge (K), Performance Capability (P), and Supervisory Ratings of Job Performance (S).]

The commonly held belief that research progress will be made if only we "let the data speak" is sadly erroneous. Because of the effects of artifacts such as sampling error and measurement error, it would be more accurate to say that data come to us encrypted, and to understand their meaning we must first break the code. Doing this requires meta-analysis. Therefore any individual study must be considered only a single data point to be contributed to a future meta-analysis. Thus the scientific status and value of the individual study is necessarily reduced.

The result has been a shift of the focus of scientific discovery from the individual primary study to the meta-analysis, creating a major change in the relative status of reviews. Journals that formerly published only primary studies and refused to publish reviews are now publishing meta-analytic reviews in large numbers. In the past, research reviews were based on the narrative-subjective method, and they had limited status and gained little credit for one in academic raises or promotions. The rewards went to those who did primary research. Perhaps this was appropriate because it can be seen in retrospect that such reviews often contributed little to cumulative knowledge (Glass et al., 1981; Hedges & Olkin, 1985). Not only is this no longer the case, but there has been a far more important development. Today, many discoveries and advances in cumulative knowledge are being made not by those who do primary research studies but by those who use meta-analysis to discover the latent meaning of existing research literatures. It is possible for a behavioral or social scientist today with the needed training and skills to make major original discoveries and contributions without conducting primary research studies, simply by mining the information in accumulated research literatures. This process is well under way today.

merely examining the differences in conclusions of meta- The I/O psychology and organizational behavior research

analytic and traditional literature reviews. These differ- literatures— the ones with which I am most familiar—

ences are major and important, and they show that the are rapidly being mined. This is apparent not only in the

conclusions of narrative reviews, based on traditional in- number of meta-analyses being published but also—and

terpretations of statistical significance tests, are frequently perhaps more importantly—in the shifting pattern of ci-

very erroneous. However, meta-analysis is much more tations in the literature and in textbooks from primary

than a new method for conducting reviews. The realities studies to meta-analyses. The same is true in education,

revealed about data and research findings by the principles social psychology, medicine, finance, marketing, and other

of meta-analysis require major changes in our views of areas (Hunter & Schmidt, 1990b, chap. 1).

the individual empirical study, the nature of cumulative The meta-analytic process of cleaning up and mak-

research knowledge, and the reward structure in the re- ing sense of research literature not only reveals the cu-

search enterprise. mulative knowledge that is there but also prevents the

Meta-analysis has explicated the critical role of sam- diversion of valuable research resources into truly un-

pling error, measurement error, and other artifacts in de- needed research studies. Meta-analysis applications have

termining the observed findings and statistical power of revealed that there are questions for which additional re-

individual studies. In doing so, it has revealed how little search would waste scientifically and socially valuable re-

information there is in any single study. It has shown sources. For example, as of 1980, 882 studies based on a

that, contrary to widespread belief, no single primary total sample of 70,935 had been conducted relating mea-

study can resolve an issue or answer a question. Consid- sures of perceptual speed to the job performance of cler-

eration of meta-analysis principles suggests that there is ical workers. Based on these studies, our meta-analytic

a strong cult of overconfident empiricism in the behavioral estimate of this correlation is .47 (Sp2 = .05; Pearlman et

and social sciences, that is, an excessive faith in data as al., 1980). For other abilities, there were often 200-300

the direct source of scientific truths and an inadequate cumulative studies. Clearly, further research on these re-

appreciation of how misleading most social science data lationships is not the best use of available resources.

are when accepted at face value and interpreted naively. Only meta-analytic integration of findings across


studies can control chance and other statistical and measurement artifacts and provide a trustworthy foundation for conclusions. And yet meta-analysis is not possible unless the needed primary studies are conducted. Is it possible that meta-analysis will kill the incentive and motivation to conduct primary research studies? In new research areas, this potential problem is not of much concern. The first study conducted on a question contains 100% of the available research information, the second contains roughly 50%, and so on. Thus, the early studies in any area have a certain status. But the 50th study contains only about 2% of the available information, the 100th, about 1%. Will we have difficulty motivating researchers to conduct the 50th or 100th study? The answer will depend on the future reward system in the behavioral and social sciences. What will that reward structure be? What should it be?

One possibility (not necessarily desirable) is that we will have a two-tiered research enterprise. One group of researchers will specialize in conducting individual studies. Another group will apply complex and sophisticated meta-analysis methods to those cumulative studies and will make the scientific discoveries. Such a structure raises troubling questions. How would these two groups be rewarded? What would be their relative status in the overall research enterprise? Would this be comparable to the division of labor in physics between experimental and theoretical physicists? Experimental physicists conduct the studies, and theoretical physicists interpret their meaning. This analogy may be very appropriate: Hedges (1987) has found that theoretical physicists (and chemists) use methods that are "essentially identical" to meta-analysis. In fact, a structure similar to this already exists in some areas of I/O psychology. A good question is this: Is it the wave of the future?

One might ask, Why can't the primary researchers also conduct the meta-analyses? This can happen, and in some research areas it has happened. But there are worrisome trends that militate against this outcome in the longer term. Mastering a particular area of research often requires all of an individual's time and effort. Research at the primary level is often complex and time consuming, leaving little time or energy to master new quantitative methods such as meta-analysis. A second consideration is that, as they are refined and improved, meta-analysis methods are becoming increasingly elaborated and abstract. In my experience, many primary researchers already felt in the early 1980s that meta-analysis methods were complex and somewhat forbidding. In retrospect, those methods were relatively simple. Our 1982 book on meta-analysis (Hunter et al., 1982) was 172 pages long. Our 1990 book (Hunter & Schmidt, 1990b) is 576 pages long. The meta-analysis book by Hedges and Olkin (1985) is perhaps even more complex statistically and is 325 pages long. Improvements in accuracy of meta-analysis methods are mandated by the scientific need for precision. Yet increases in precision come only at the price of increased complexity and abstractness, and this makes the methods more difficult for generalists to master and apply.

Perhaps the ideal solution would be for the meta-analyst to work with one or two primary researchers in conducting the meta-analysis. Knowledge of the primary research area is desirable, if not indispensable, in applying meta-analysis optimally. But this solution avoids the two-tiered scientific enterprise for only one small group of primary researchers. For the rest, the reality would be the two-tiered structure.

Conclusion

Traditional procedures for data analysis and interpretation in individual studies and in research literatures have hampered the development of cumulative knowledge in psychology. These procedures, based on the statistical significance test and the null hypothesis, logically lead to erroneous conclusions because they overestimate the amount of information contained in individual studies and ignore Type II errors and statistical power. Meta-analysis can solve these problems. But meta-analysis is more than just a new way of conducting research reviews: It is a new way of viewing the meaning of data. As such, it leads to a different view of individual studies and an altered concept of scientific discovery, and it may lead to changed roles in the research enterprise.

REFERENCES

American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. Washington, DC: American Psychological Association.

American Psychological Association, Division of Industrial and Organizational Psychology (Division 14). (1987). Principles for the validation and use of personnel selection procedures (3rd ed.). College Park, MD: Author.

Anastasi, A. (1982). Psychological testing (5th ed.). New York: Macmillan.

Anastasi, A. (1988). Psychological testing (6th ed.). New York: Macmillan.

Bangert-Drowns, R. L. (1986). Review of developments in meta-analytic method. Psychological Bulletin, 99, 388-399.

Callender, J. C., & Osburn, H. G. (1980). Development and test of a new model for validity generalization. Journal of Applied Psychology, 65, 543-558.

Callender, J. C., & Osburn, H. G. (1981). Testing the constancy of validity with computer generated sampling distributions of the multiplicative model variance estimates: Results for petroleum industry validation research. Journal of Applied Psychology, 66, 274-281.

Cohen, J. (1962). The statistical power of abnormal-social psychological research: A review. Journal of Abnormal and Social Psychology, 65, 145-153.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Erlbaum.

Cohen, J. (1990). Things I have learned (so far). American Psychologist, 45, 1304-1312.

Cohen, J. (1992). Statistical power analysis. Current Directions in Psychological Science, 1, 98-101.

Fisher, C. D., & Gittelson, R. (1983). A meta-analysis of the correlates of role conflict and ambiguity. Journal of Applied Psychology, 68, 320-333.

Fisher, R. A. (1932). Statistical methods for research workers (4th ed.). Edinburgh, Scotland: Oliver & Boyd.

Glass, G. V., McGaw, B., & Smith, M. L. (1981). Meta-analysis in social research. Beverly Hills, CA: Sage.

Glymour, C. (1980). The good theories do. In E. B. Williams (Ed.), Construct validity in psychological measurement (pp. 13-21). Princeton, NJ: Educational Testing Service.

Guzzo, R. A., Jackson, S. E., & Katzell, R. A. (1986). Meta-analysis analysis. In L. L. Cummings & B. M. Staw (Eds.), Research in organizational behavior (Vol. 9). Greenwich, CT: JAI Press.


Guzzo, R. A., Jette, R. D., & Katzell, R. A. (1985). The effects of psychologically based intervention programs on worker productivity: A meta-analysis. Personnel Psychology, 38, 275-292.

Hackett, R. D., & Guion, R. M. (1985). A re-evaluation of the absenteeism-job satisfaction relationship. Organizational Behavior and Human Decision Processes, 35, 340-381.

Hartigan, J. A., & Wigdor, A. K. (1989). Fairness in employment testing: Validity generalization, minority issues, and the General Aptitude Test Battery. Washington, DC: National Academy Press.

Hedges, L. V. (1987). How hard is hard science, how soft is soft science: The empirical cumulativeness of research. American Psychologist, 42, 443-455.

Hedges, L. V., & Olkin, I. (1980). Vote counting methods in research synthesis. Psychological Bulletin, 88, 359-369.

Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. San Diego, CA: Academic Press.

Hirsh, H. R., Northrop, L., & Schmidt, F. L. (1986). Validity generalization results for law enforcement occupations. Personnel Psychology, 39, 399-420.

Hunter, J. E. (1988). A path analytic approach to analysis of covariance. Unpublished manuscript, Michigan State University, Department of Psychology.

Hunter, J. E., & Gerbing, D. W. (1982). Unidimensional measurement, second order factor analysis and causal models. In B. M. Staw & L. L. Cummings (Eds.), Research in organizational behavior (Vol. 4). Greenwich, CT: JAI Press.

Hunter, J. E., & Hirsh, H. R. (1987). Applications of meta-analysis. In C. L. Cooper & I. T. Robertson (Eds.), International review of industrial and organizational psychology 1987 (pp. 321-357). New York: Wiley.

Hunter, J. E., & Schmidt, F. L. (1990a). Dichotomization of continuous variables: The implications for meta-analysis. Journal of Applied Psychology, 75, 334-349.

Hunter, J. E., & Schmidt, F. L. (1990b). Methods of meta-analysis: Correcting error and bias in research findings. Newbury Park, CA: Sage.

Hunter, J. E., Schmidt, F. L., & Jackson, G. B. (1982). Meta-analysis: Cumulating research findings across studies. Beverly Hills, CA: Sage.

Iaffaldano, M. T., & Muchinsky, P. M. (1985). Job satisfaction and job performance: A meta-analysis. Psychological Bulletin, 97, 251-273.

Jackson, S. E., & Schuler, R. S. (1985). A meta-analysis and conceptual critique of research on role ambiguity and role conflict in work settings. Organizational Behavior and Human Decision Processes, 36, 16-78.

Kenny, D. A. (1979). Correlation and causality. New York: Wiley.

Koch, S. (1964). Psychology and emerging conceptions of knowledge as unitary. In T. W. Wann (Ed.), Behaviorism and phenomenology. Chicago: University of Chicago Press.

Light, R. J., & Smith, P. V. (1971). Accumulating evidence: Procedures for resolving contradictions among different research studies. Harvard Educational Review, 41, 429-471.

Loehlin, J. C. (1987). Latent variable models: An introduction to factor, path, and structural analysis. Hillsdale, NJ: Erlbaum.

Mabe, P. A., III, & West, S. G. (1982). Validity of self evaluations of ability: A review and meta-analysis. Journal of Applied Psychology, 67, 280-296.

Mackenzie, B. D. (1977). Behaviorism and the limits of scientific method. Atlantic Highlands, NJ: Humanities Press.

McDaniel, M. A., Schmidt, F. L., & Hunter, J. E. (1988). A meta-analysis of the validity of methods of rating training and experience in personnel selection. Personnel Psychology, 41, 283-314.

McDaniel, M. A., Whetzel, D. L., Schmidt, F. L., & Mauer, S. (1991). The validity of employment interviews: A review and meta-analysis. Manuscript submitted for publication.

McEvoy, G. M., & Cascio, W. F. (1985). Strategies for reducing employee turnover: A meta-analysis. Journal of Applied Psychology, 70, 342-353.

McEvoy, G. M., & Cascio, W. F. (1987). Do poor performers leave? A meta-analysis of the relation between performance and turnover. Academy of Management Journal, 30, 744-762.

McKeachie, W. J. (1976). Psychology in America's bicentennial year. American Psychologist, 31, 819-833.

Oakes, M. (1986). Statistical inference: A commentary for the social and behavioral sciences. New York: Wiley.

Pearlman, K., Schmidt, F. L., & Hunter, J. E. (1980). Validity generalization results for tests used to predict job proficiency and training success in clerical occupations. Journal of Applied Psychology, 65, 373-406.

Peters, L. H., Hartke, D., & Pohlman, J. (1985). Fiedler's contingency theory of leadership: An application of the meta-analysis procedures of Schmidt and Hunter. Psychological Bulletin, 97, 274-285.

Petty, M. M., McGee, G. W., & Cavender, J. W. (1984). A meta-analysis of the relationship between individual job satisfaction and individual performance. Academy of Management Review, 9, 712-721.

Premack, S., & Wanous, J. P. (1985). Meta-analysis of realistic job preview experiments. Journal of Applied Psychology, 70, 706-719.

Raju, N. S., & Burke, M. J. (1983). Two new procedures for studying validity generalization. Journal of Applied Psychology, 68, 382-395.

Rosenthal, R. (1984). Meta-analytic procedures for social research. Beverly Hills, CA: Sage.

Rosenthal, R. (1991). Meta-analytic procedures for social research (2nd ed.). Newbury Park, CA: Sage.

Rothstein, H. R., Schmidt, F. L., Erwin, F. W., Owens, W. A., & Sparks, C. P. (1990). Biographical data in employment selection: Can validities be made generalizable? Journal of Applied Psychology, 75, 175-184.

Schlagel, R. H. (1979, September). Revaluation in the philosophy of science: Implications for method and theory in psychology. Invited address at the 87th Annual Convention of the American Psychological Association, New York.

Schmidt, F. L., Gast-Rosenberg, I., & Hunter, J. E. (1980). Validity generalization results for computer programmers. Journal of Applied Psychology, 65, 643-661.

Schmidt, F. L., & Hunter, J. E. (1977). Development of a general solution to the problem of validity generalization. Journal of Applied Psychology, 62, 529-540.

Schmidt, F. L., & Hunter, J. E. (1981). Employment testing: Old theories and new research findings. American Psychologist, 36, 1128-1137.

Schmidt, F. L., Hunter, J. E., & Outerbridge, A. N. (1986). Impact of job experience and ability on job knowledge, work sample performance, and supervisory ratings of job performance. Journal of Applied Psychology, 71, 432-439.

Schmidt, F. L., Hunter, J. E., & Pearlman, K. (1981). Task differences as moderators of aptitude test validity in selection: A red herring. Journal of Applied Psychology, 66, 166-185.

Schmidt, F. L., Hunter, J. E., Pearlman, K., & Hirsh, H. R. (1985). Forty questions about validity generalization and meta-analysis. Personnel Psychology, 38, 697-798.

Schmidt, F. L., Hunter, J. E., Pearlman, K., & Shane, G. S. (1979). Further tests of the Schmidt-Hunter Bayesian validity generalization procedure. Personnel Psychology, 32, 257-381.

Schmidt, F. L., Hunter, J. E., & Urry, V. E. (1976). Statistical power in criterion-related validation studies. Journal of Applied Psychology, 61, 473-485.

Schmidt, F. L., Law, K., Hunter, J. E., Rothstein, H. R., Pearlman, K., & McDaniel, M. A. (in press). Refinements in validity generalization procedures: Implications for the situational specificity hypothesis. Journal of Applied Psychology.

Schmidt, F. L., Ocasio, B. P., Hillery, J. M., & Hunter, J. E. (1985). Further within-setting empirical tests of the situational specificity hypothesis in personnel selection. Personnel Psychology, 38, 509-524.

Sedlmeier, P., & Gigerenzer, G. (1989). Do studies of statistical power have an effect on the power of studies? Psychological Bulletin, 105, 309-316.

Suppe, F. (Ed.). (1977). The structure of scientific theories. Urbana: University of Illinois Press.

Terborg, J. R., & Lee, T. W. (1982). Extension of the Schmidt-Hunter validity generalization procedure to the prediction of absenteeism behavior from knowledge of job satisfaction and organizational commitment. Journal of Applied Psychology, 67, 280-296.

Toulmin, S. E. (1979, September). The cult of empiricism. In P. F. Secord (Chair), Psychology and philosophy. Symposium conducted at the 87th Annual Convention of the American Psychological Association, New York.


- Need For Closure Scale Manual CodeDocument4 pagesNeed For Closure Scale Manual CodeBlayel FelihtNo ratings yet

- Machine Learning 10601 Recitation 8 Oct 21, 2009: Oznur TastanDocument46 pagesMachine Learning 10601 Recitation 8 Oct 21, 2009: Oznur TastanS AswinNo ratings yet

- Stat-Q2 1Document8 pagesStat-Q2 1Von Edrian PaguioNo ratings yet

- Deprivation, Ill-Health and The Ecological Fallacy: University of Liverpool, UKDocument16 pagesDeprivation, Ill-Health and The Ecological Fallacy: University of Liverpool, UKwalter31No ratings yet

- CH5019 Mathematical Foundations of Data Science Test 8 QuestionsDocument4 pagesCH5019 Mathematical Foundations of Data Science Test 8 QuestionsLarry LautorNo ratings yet

- DocumentDocument7 pagesDocumentvasanthi_cyberNo ratings yet

- Chapter 8 Tools Used in Gat Audit 2Document123 pagesChapter 8 Tools Used in Gat Audit 2Jeya VilvamalarNo ratings yet

- Probe Part 7Document27 pagesProbe Part 7udaywal.nandiniNo ratings yet

- Projects Prasanna Chandra 7E Ch4 Minicase SolutionDocument3 pagesProjects Prasanna Chandra 7E Ch4 Minicase SolutionJeetNo ratings yet

- Stat Slides 5Document30 pagesStat Slides 5Naqeeb Ullah KhanNo ratings yet

- (Anderson) The Asymptotic Distributions of Autoregressive CoefficientsDocument24 pages(Anderson) The Asymptotic Distributions of Autoregressive CoefficientsDummy VariablesNo ratings yet

- Hyp DataDocument3 pagesHyp DataSubhaNo ratings yet

- Portfolio Math CFA ReviewDocument3 pagesPortfolio Math CFA ReviewDionizioNo ratings yet

- Case Processing SummaryDocument5 pagesCase Processing SummarySiti NuradhawiyahNo ratings yet

- SEM Back To Basics MuellerDocument19 pagesSEM Back To Basics Muellerkuldeep_0405No ratings yet

- Time Series ForecastingDocument52 pagesTime Series ForecastingAniket ChauhanNo ratings yet

- Bum2413 - Applied StatisticsDocument9 pagesBum2413 - Applied StatisticsSyada HagedaNo ratings yet

- MI2036 Chap2Document163 pagesMI2036 Chap2Long Trần VănNo ratings yet

- (Maths) - Normal DistributionDocument4 pages(Maths) - Normal Distributionanonymous accountNo ratings yet

- Foo PDFDocument7 pagesFoo PDFfodsffNo ratings yet

- Zakharov 2017Document4 pagesZakharov 2017JesusDavidDeantonioPelaezNo ratings yet

- Submitted To: Mrs. Geetika Vashisht College of Vocational Studies University of DelhiDocument36 pagesSubmitted To: Mrs. Geetika Vashisht College of Vocational Studies University of Delhisanchit nagpalNo ratings yet

- 3.2 The Following Set of Data Is From A Sample of N 6 7 4 9 7 3 12 A. Compute The Mean, Median, and ModeDocument10 pages3.2 The Following Set of Data Is From A Sample of N 6 7 4 9 7 3 12 A. Compute The Mean, Median, and ModeSIRFANI CAHYADINo ratings yet

- MGT646Document8 pagesMGT646AMIRUL RAJA ROSMANNo ratings yet

- Rainfall Drought Simulating Using StochaDocument13 pagesRainfall Drought Simulating Using StochaNela MuetzNo ratings yet

- Adaptive Traitor Tracing With Bayesian Networks (Slides)Document33 pagesAdaptive Traitor Tracing With Bayesian Networks (Slides)philip zigorisNo ratings yet

- Assignment Report - Predictive Modelling - Rahul DubeyDocument18 pagesAssignment Report - Predictive Modelling - Rahul DubeyRahulNo ratings yet

- Comm 215.MidtermReviewDocument71 pagesComm 215.MidtermReviewJose Carlos BulaNo ratings yet

- Cheat Sheet Quantitative Methods in Finance Nova Cheat Sheet Quantitative Methods in Finance NovaDocument3 pagesCheat Sheet Quantitative Methods in Finance Nova Cheat Sheet Quantitative Methods in Finance Novabassirou ndao0% (1)

- ETM 421Hw3Document3 pagesETM 421Hw3Waqas SarwarNo ratings yet

- Weibull Distribution Illustration in ExcelDocument4 pagesWeibull Distribution Illustration in ExcelBijli VadakayilNo ratings yet