Chapter 15

Interpreting a Result That Is (Or Is Not) Statistically Significant

Essential Biostatistics. Copyright 2016 Oxford University Press.

Interpreting Results That Are “Statistically Significant”

Let’s consider a simple scenario: comparing enzyme activity in cells incubated with a new drug to enzyme activity in control cells. Your scientific hypothesis is that the drug increases enzyme activity, so the null hypothesis is that there is no difference. You run the analysis, and indeed, the enzyme activity is higher in the treated cells. You run a t test and find that the P value is less than 0.05, so you conclude the result is statistically significant. Below are seven possible explanations for why this happened.

Explanation 1: The drug had a substantial effect

Scenario: The drug really does substantially increase the activity of the enzyme you are measuring.

Discussion: This is what everyone thinks when they see a small P value in this situation. But it is only one of seven possible explanations.

Explanation 2: The drug had a trivial effect

Scenario: The drug actually has a very small effect on the enzyme you are studying even though your experiment yielded a P value of less than 0.05.

Discussion: The small P value just tells you that the difference is large enough to be surprising if the drug really didn’t affect the enzyme at all. It does not imply that the drug has a large treatment effect. A small P value with a small effect will happen with some combination of a large sample size and low variability. It will also happen if the treated group happens to have measurements a bit above their true average and the control group happens to have measurements a bit below their true average, causing the effect observed in your experiment to be larger than the true effect.
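The first mechanism in the discussion above (a large sample size combined with low variability) can be checked with a little arithmetic. The sketch below is only an illustration, not the book's method: it assumes a hypothetical true difference of 1 unit of enzyme activity, a standard deviation of 10 units in both groups, and it uses a normal (z) approximation in place of the t test so that it runs with the Python standard library alone. The function name `two_sided_p` and every number in it are assumptions chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_sided_p(diff, sd, n_per_group):
    """Two-sided P value for a difference between two group means.

    Uses a z approximation (known, equal SD in both groups), which is
    close to the unpaired t test only when n_per_group is large.
    """
    se = sd * sqrt(2.0 / n_per_group)      # standard error of the difference
    z = abs(diff) / se
    return 2.0 * (1.0 - NormalDist().cdf(z))

# Hypothetical numbers: the same trivial 1-unit difference (SD = 10)
# observed with increasingly large samples.
for n in (10, 100, 1000, 10000):
    print(f"n per group = {n:>5}: P = {two_sided_p(1.0, 10.0, n):.4f}")
```

The observed difference is identical in every row; only the sample size changes. A P value below 0.05 therefore says nothing, by itself, about whether the effect is large.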

Explanation 3: There was a Type I error or false discovery

Scenario: The drug really doesn’t affect enzyme expression at all. Random sampling just happened to give you higher values in the cells treated with the drug and lower levels in the control cells. Accordingly, the P value was small.

Discussion: This is called a Type I error or false discovery. If the drug really has no effect on the enzyme activity (if the null hypothesis is true) and you choose the traditional 0.05 significance level (α = 5%), you’ll expect to make a Type I error 5% of the time. Therefore, it is easy to think that you’ll make a Type I error 5% of the time when you conclude that a difference is statistically significant. However, this is not correct. You’ll understand why when you read about the false discovery rate (the fraction of “statistically significant” conclusions that are wrong) in the next chapter.
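The "5% of the time" statement refers only to experiments in which the null hypothesis is true. A small simulation can make that concrete. The sketch below is an assumption-laden illustration: it draws both groups from the same Gaussian population (mean 0, SD 1), uses 10 values per group, and replaces the t test with a z test that treats the SD as known, so only the standard library is needed. The function name `simulate_null_experiments` is hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

def simulate_null_experiments(n_experiments=5000, n_per_group=10,
                              alpha=0.05, seed=1):
    """Fraction of experiments declared significant when the null is true."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    se = sqrt(2.0 / n_per_group)   # SD is assumed known and equal to 1
    hits = 0
    for _ in range(n_experiments):
        # Treated and control values come from the same population.
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        z = (fmean(treated) - fmean(control)) / se
        if abs(z) > z_crit:
            hits += 1
    return hits / n_experiments

print(simulate_null_experiments())   # should land close to 0.05
```

This number is the chance of a significant result given that the null hypothesis is true. It is not the fraction of significant results that are false discoveries, which also depends on how often the null hypothesis is true in the first place.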

Explanation 4: There was a Type S error

Scenario: The drug (on average) decreases enzyme activity, which you would only know if you repeated the experiment many times. In this particular experiment, however, random sampling happened to give you high enzyme activity in the drug-treated cells and low activity in the control cells, and this difference was large enough, and consistent enough, to be statistically significant.

Discussion: Your conclusion is backward. You concluded that the drug (on average) significantly increases enzyme activity when in fact the drug decreases enzyme activity. You’ve correctly rejected the null hypothesis that the drug does not influence enzyme activity, so you have not made a Type I error. Instead, you have made a Type S error, because the sign (plus or minus, increase or decrease) of the actual overall effect is opposite to the results you happened to observe in one experiment. Type S errors are rare but not impossible. They are also referred to as Type III errors.
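How rare a Type S error is depends on how large the true effect is relative to the noise in the experiment. The sketch below gives a rough sense of this under stated assumptions: the estimated effect is taken to be normally distributed around the true effect with a known standard error (a z test rather than a t test), and the effect sizes in the loop are hypothetical. The function name `type_s_rate` is an illustrative choice.

```python
from statistics import NormalDist

def type_s_rate(true_effect, se, alpha=0.05):
    """Among statistically significant results, the fraction with the wrong sign.

    Assumes the estimate is normally distributed around true_effect with
    standard error se. The sign is undefined when true_effect is 0.
    """
    nd = NormalDist()
    z_crit = nd.inv_cdf(1.0 - alpha / 2.0)
    shift = true_effect / se
    p_sig_positive = 1.0 - nd.cdf(z_crit - shift)   # significant, estimate > 0
    p_sig_negative = nd.cdf(-z_crit - shift)        # significant, estimate < 0
    p_wrong = p_sig_positive if true_effect < 0 else p_sig_negative
    return p_wrong / (p_sig_positive + p_sig_negative)

# Hypothetical true effects (a decrease), in units of the standard error.
for effect in (-0.25, -0.5, -1.0, -3.0):
    print(f"true effect = {effect:+.2f} SE: Type S rate = {type_s_rate(effect, 1.0):.4f}")
```

Under these assumptions, wrong-sign significant results become vanishingly rare once the true effect is a few standard errors from zero, and they are most likely in small, noisy experiments.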


Explanation 5: The experimental design was poor

Scenario: The enzyme activity really did go up in the tubes with the added drug. However, the reason for the increase in enzyme activity had nothing to do with the drug but rather with the fact that the drug was dissolved in an acid (and the cells were poorly buffered) and the controls did not receive the acid (due to bad experimental design). So the increase in enzyme activity was actually due to acidifying the cells and had nothing to do with the drug itself. The statistical conclusion was correct (adding the drug did increase the enzyme activity), but the scientific conclusion was completely wrong.

Discussion: Statistical analyses are only a small part of good science. That is why it is so important to design experiments well, to randomize and blind when possible, to include necessary positive and negative controls, and to validate all methods.

Explanation 6: The results cannot be interpreted due to ad hoc multiple comparisons

Scenario: You actually ran this experiment many times, each time testing a different drug. The results were not statistically significant for the first 24 drugs tested but the results with the 25th drug were statistically significant.

Discussion: These results would be impossible to interpret, as you’d expect some small P values just by chance when you do many comparisons. If you know how many comparisons were made (or planned), you can correct for multiple comparisons. But here, the design was not planned, so a rigorous interpretation is impossible. We’ll return to this topic in Chapter 17.
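To see how easily chance alone produces a small P value across 25 tests, consider the case where none of the 25 drugs does anything. The short calculation below assumes the 25 comparisons are independent and each uses a 0.05 threshold; the function name `prob_at_least_one_significant` is a hypothetical helper.

```python
def prob_at_least_one_significant(n_tests, alpha=0.05):
    """Chance of one or more P < alpha when every null hypothesis is true.

    Assumes the n_tests comparisons are independent.
    """
    return 1.0 - (1.0 - alpha) ** n_tests

for k in (1, 5, 25):
    print(f"{k:>2} comparisons: {prob_at_least_one_significant(k):.3f}")
```

Under these assumptions, the chance of at least one "statistically significant" result among 25 ineffective drugs is about 72%, so a single hit on the 25th drug is not surprising.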

Explanation 7: The results cannot be interpreted due to dynamic sample size

Scenario: You first ran the experiment in triplicate, and the result (n = 3) was not statistically significant. Then you ran it again and pooled the results (so now n = 6), and the results were still not statistically significant. So you ran it again, and finally, the pooled results (with n = 9) were statistically significant.

Discussion: The P value you obtain from this approach simply cannot be interpreted. P values can only be interpreted at face value when the sample size, the experimental protocol, and all the data manipulations and analyses were planned in advance. Otherwise, you are P-hacking, and the results cannot be interpreted. We’ll return to this topic in Chapter 18.
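A simulation can show why testing at n = 3, then 6, then 9 and stopping at the first significant result inflates the false positive rate. The sketch below is an illustration under assumptions: n is taken to be the number of values per group, both groups are drawn from the same Gaussian population (so the null hypothesis is true), and the two-sided 5% critical values of t are hard-coded from a standard t table rather than computed. The names `significant` and `false_positive_rate` are hypothetical.

```python
import random
from math import sqrt
from statistics import fmean, variance

# Two-sided 5% critical values of t from a standard table, keyed by
# degrees of freedom (2n - 2 for n = 3, 6, 9 values per group).
T_CRIT = {4: 2.776, 10: 2.228, 16: 2.120}

def significant(treated, control):
    """Unpaired (pooled-variance) t test at the 5% level, equal group sizes."""
    n = len(treated)
    pooled_var = (variance(treated) + variance(control)) / 2.0
    t = (fmean(treated) - fmean(control)) / sqrt(pooled_var * 2.0 / n)
    return abs(t) > T_CRIT[2 * n - 2]

def false_positive_rate(peek, n_sims=5000, seed=1):
    """Null is true. If peek, test at n = 3, 6, 9 and count any 'hit'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        treated = [rng.gauss(0.0, 1.0) for _ in range(9)]
        control = [rng.gauss(0.0, 1.0) for _ in range(9)]
        looks = (3, 6, 9) if peek else (9,)
        if any(significant(treated[:n], control[:n]) for n in looks):
            hits += 1
    return hits / n_sims

print("fixed n = 9:        ", false_positive_rate(peek=False))
print("peek at n = 3, 6, 9:", false_positive_rate(peek=True))
```

With a fixed sample size the rate should stay near 5%, while the peeking strategy gives chance more than one opportunity to cross the threshold, so its rate comes out noticeably higher.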


Bottom line: When a P value is small, consider all the possibilities

Be cautious when interpreting small P values. Don’t make the mistake of instantly believing explanation 1 above without also considering the possibility that the true explanation is one of the other six possibilities listed. Read on to see how you need to be just as wary when interpreting a high P value.

Interpreting Results That Are “Not Statistically Significant”

“Not significantly different” does not mean “no difference”

A large P value means that a difference (or correlation, or association) as large as what you observed would happen frequently as a result of random sampling. But this does not necessarily mean that the null hypothesis of no difference is true or that the difference you observed is definitely the result of random sampling.

Vickers (2010) tells a great story that illustrates this point:

The other day I shot baskets with [the famous basketball player] Michael Jordan (remember that I am a statistician and never make things up). He made 7 straight free throws; I made 3 and missed 4 and then (being a statistician) rushed to the sideline, grabbed my laptop, and calculated a P value of .07 by Fisher’s exact test. Now, you wouldn’t take this P value to suggest that there is no difference between my basketball skills and those of Michael Jordan, you’d say that our experiment hadn’t proved a difference.
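The P value in the story can be reproduced from the counts it gives (7 made and 0 missed versus 3 made and 4 missed). The sketch below is one common way to compute a two-sided Fisher’s exact test, summing the probabilities of all tables with the same margins that are no more likely than the observed table. The function name `fisher_exact_two_sided` is a hypothetical helper, and the quoted source does not say which two-sided convention was used.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the probabilities of all tables with the same margins that are
    no more probable than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):  # probability that the top-left cell equals x
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # The small tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lo, hi + 1)
               if prob(x) <= p_obs * (1 + 1e-9))

# Jordan: 7 made, 0 missed. Vickers: 3 made, 4 missed.
print(round(fisher_exact_two_sided(7, 0, 3, 4), 3))   # 0.07
```

With only 7 shots each, even a perfect record against 3 of 7 does not reach the 0.05 threshold, which is the point of the story: the experiment is too small to prove a difference that obviously exists.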

A high P value does not prove the null hypothesis. And deciding not to

reject the null hypothesis is not the same as believing that the null hypothesis

15-Motulsky-Chap15.indd 98 29/04/15 7:49 AM

c h a p t e r 15 • Interpreting a Result That Is (Or Is Not) Statistically Significant 99

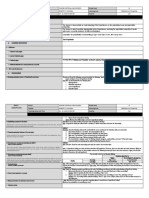

CONTROLS HYPERTENSION

Number of subjects 17 18

Mean receptor number (receptors/platelet) 263 257

Standard deviation 87 59

Table 15.1. Number of α2 -adrenergic receptors on the platelets isolated

from the blood of controls and people with hypertension.

is definitely true. The absence of evidence is not evidence of absence (Altman &

Bland, 1995).

Example: α2-Adrenergic receptors on platelets

Epinephrine, acting through α2-adrenergic receptors, makes blood platelets stickier and thus helps blood clot. Motulsky, O’Connor, and Insel (1983) counted these receptors and compared people with normal and high blood pressure. The idea was that the adrenergic signaling system might be abnormal in people with high blood pressure (hypertension). We were most interested in the effects on the heart, blood vessels, kidney, and brain but obviously couldn’t access those tissues in people, so we counted the receptors on platelets instead. Table 15.1 shows the results.

The results were analyzed using a test called the unpaired t test, also called the two-sample t test. The average number of receptors per platelet was almost the same in both groups, so of course, the P value was high: specifically, 0.81. If the two populations were Gaussian with identical means, you’d expect to see a difference as large as or larger than that observed in this study in 81% of studies of this size.

Clearly, these data provide no evidence that the mean receptor number differs in the two groups. When my colleagues and I published this study over 30 years ago, we stated that the results were not statistically significant and stopped there, implying that the high P value proves that the null hypothesis is true. But that was not a complete way to present the data. We should have interpreted the confidence interval.

The 95% CI for the difference between group means extends from -45 to 57 receptors per platelet. To put this in perspective, the average number of α2-adrenergic receptors per platelet is about 260. We can therefore rewrite the confidence interval as extending from -45/260 to 57/260, which is from -17.3% to 21.9%, or approximately plus or minus 20%.
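The interval quoted above can be reproduced from the summary statistics in Table 15.1. The sketch below assumes the standard pooled-variance (unpaired t test) formula and takes the difference as controls minus hypertension, which matches an interval centered on +6. The critical value 2.0345 for 33 degrees of freedom is hard-coded from a t table rather than computed, and the function and variable names are illustrative.

```python
from math import sqrt

# Summary statistics from Table 15.1
n_c, mean_c, sd_c = 17, 263.0, 87.0   # controls
n_h, mean_h, sd_h = 18, 257.0, 59.0   # hypertension

# Two-sided 95% critical value of t for 33 degrees of freedom (from a t table).
T_CRIT_DF33 = 2.0345

def ci_for_difference():
    """95% CI for (control mean - hypertension mean), pooled-variance t."""
    df = n_c + n_h - 2
    pooled_var = ((n_c - 1) * sd_c**2 + (n_h - 1) * sd_h**2) / df
    se = sqrt(pooled_var * (1.0 / n_c + 1.0 / n_h))
    diff = mean_c - mean_h
    return diff - T_CRIT_DF33 * se, diff + T_CRIT_DF33 * se

low, high = ci_for_difference()
print(f"95% CI: {low:.0f} to {high:.0f} receptors per platelet")
print(f"As a fraction of 260: {low / 260:.1%} to {high / 260:.1%}")
```

The interval is wide relative to the observed difference of 6 receptors per platelet because the standard error of the difference is about 25, roughly four times the difference itself.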

It is only possible to properly interpret the confidence interval in a scientific context. Here are two alternative ways to think about these results:

• A 20% change in receptor number could have a huge physiological impact. With such a wide confidence interval, the data are inconclusive, because you could have these data with no difference, substantially more receptors, or substantially fewer receptors on platelets of people with hypertension.

• The confidence interval convincingly shows that the true difference is unlikely to be more than 20% in either direction. This experiment counts receptors on a convenient tissue (blood cells) as a marker for other organs, and we know that the number of receptors per platelet varies a lot from individual to individual. For these reasons, we’d only be intrigued by the results (and want to pursue this line of research) if the receptor number in the two groups differed by at least 50%. Here, the 95% CI extended about 20% in each direction. Therefore, we can reach a solid negative conclusion that the receptor number in individuals with hypertension does not change or that any such change is physiologically trivial and not worth pursuing.

The difference between these two perspectives is a matter of scientific judgment. Would a difference of 20% in receptor number be scientifically relevant? The answer depends on scientific (physiological) thinking. Statistical calculations have nothing to do with it. Statistical calculations are only a small part of interpreting data.

Five explanations for “not statistically significant” results

Let’s continue the simple scenario from the first part of this chapter. You compare cells incubated with a new drug to control cells and measure the activity of an enzyme, and you find that the P value is large enough (greater than 0.05) for you to conclude that the result is not statistically significant. Below are five possible explanations for why this happened.

Explanation 1: The drug did not affect the enzyme you are studying

Scenario: The drug did not induce or activate the enzyme you are studying, so the enzyme’s activity is the same (on average) in treated and control cells.

Discussion: This is, of course, the conclusion everyone jumps to when they see the phrase “not statistically significant.” However, four other explanations are possible.

Explanation 2: The drug had a trivial effect

Scenario: The drug may actually affect the enzyme but by only a small amount.

Discussion: This explanation is often forgotten.

Explanation 3: There was a Type II error

Scenario: The drug really did substantially affect enzyme expression. Random sampling just happened to give you some low values in the cells treated with the drug and some high levels in the control cells. Accordingly, the P value was large, and you conclude that the result is not statistically significant.

Discussion: How likely are you to make this kind of Type II error? It depends on how large the actual (or hypothesized) difference is, on the sample size, and on the experimental variation. We’ll return to this topic in Chapter 18.
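The three ingredients named in the discussion (size of the difference, sample size, and variation) can be combined into an approximate Type II error rate. The sketch below is an illustration under assumptions: it uses a normal (z) approximation with a known, equal SD in both groups instead of the t test, and the true difference of 5 units and SD of 10 units are hypothetical numbers. The function name `power` is an illustrative choice.

```python
from math import sqrt
from statistics import NormalDist

def power(true_diff, sd, n_per_group, alpha=0.05):
    """Approximate power of a two-sided comparison of two means.

    z approximation: assumes a known SD that is equal in both groups.
    """
    nd = NormalDist()
    z_crit = nd.inv_cdf(1.0 - alpha / 2.0)
    shift = abs(true_diff) / (sd * sqrt(2.0 / n_per_group))
    # Chance the test statistic lands beyond either critical value.
    return (1.0 - nd.cdf(z_crit - shift)) + nd.cdf(-z_crit - shift)

# Hypothetical scenario: true difference of 5 units, SD of 10 units.
for n in (3, 10, 30, 100):
    pw = power(true_diff=5.0, sd=10.0, n_per_group=n)
    print(f"n per group = {n:>3}: power = {pw:.2f}, "
          f"Type II error rate = {1 - pw:.2f}")
```

Under these assumptions, a real effect of half a standard deviation is missed most of the time with a handful of replicates, so a "not statistically significant" result from a small experiment is weak evidence of no effect.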

Explanation 4: The experimental design was poor

Scenario: In this scenario, the drug really would increase the activity of the enzyme you are measuring. However, the drug was inactivated, because it was dissolved in an acid. Since the cells were never exposed to the active drug, of course the enzyme activity didn’t change.

Discussion: The statistical conclusion was correct (adding the drug did not increase the enzyme activity), but the scientific conclusion was completely wrong.

Explanation 5: The results cannot be interpreted due to dynamic sample size

Scenario: In this scenario, you hypothesized that the drug would not work, and you really want the experiment to validate your prediction (maybe you have made a bet on the outcome). You first ran the experiment three times, and the result (n = 3) was statistically significant. Then you ran it three more times, and the pooled results (now with n = 6) were still statistically significant. Then you ran it four more times, and finally, the results (with n = 10) were not statistically significant. This n = 10 result (not statistically significant) is the one you present.

Discussion: The P value you obtain from this approach simply cannot be interpreted.

Lingo

As already mentioned in Chapter 14, beware of the word significant. Its use in statistics as part of the phrase statistically significant is very different from the ordinary use of the term to mean important or notable. Whenever you see the word significant, make sure you understand the context.

If you are writing scientific papers, the best way to avoid the ambiguity is to never use the word significant. You can write about whether a P value is greater or less than a preset threshold without using that word. And there are lots of alternative words to use to describe the clinical, physiological, or scientific impact of a finding.

Common Mistakes

Mistake: Believing that a “statistically significant” result proves an effect is real

As this chapter points out, there are several reasons why a result can be statistically significant.

Mistake: Believing that a “not statistically significant” result proves no effect is present

As this chapter points out, there are several reasons why a result can be not statistically significant.

Mistake: Believing that if a difference is “statistically significant,” it must have a large physiological or clinical impact

If the sample size is large, a difference can be statistically significant but also so tiny that it is physiologically or clinically trivial.

Q&A

Is this chapter trying to tell me that it isn’t enough to determine if a result is, or is not, statistically significant, and that instead I actually have to think?

Yes!

CHAPTER SUMMARY

• A conclusion of statistical significance can arise in seven different situations:
    • There really is a substantial effect. This is what everyone thinks of when they hear “statistically significant.”
    • There is a trivial effect.
    • There is a Type I error or false discovery. In other words, there truly is zero effect, but random sampling caused an apparent effect.
    • There is a Type S error. In other words, the effect is real, but random sampling led you to see an effect in this one experiment that is opposite in direction to the true effect.
    • The study design is poor.
    • The results cannot be interpreted due to ad hoc multiple comparisons.
    • The results cannot be interpreted due to ad hoc sample size selection.

• The word “significant” is often misinterpreted. Avoid using it. When reading papers that include the word “significant,” make sure you know what the author meant.

• “Not significantly different” does not mean “no difference.” Absence of evidence is not evidence of absence.

• A conclusion of “not statistically significant” can arise in five different situations:
    • There is no effect. In other words, the null hypothesis is true.
    • The effect is too small to detect in this study.
    • There is a Type II error. In other words, random sampling prevented you from seeing a true effect.
    • The study design is poor.
    • The results cannot be interpreted due to ad hoc sample size selection.
