
Book Review

Paradoxes in Probability Theory

Reviewed by Olle Häggström

Notices of the AMS, Volume 60, Number 3, March 2013, pages 329–331
DOI: http://dx.doi.org/10.1090/noti958

Paradoxes in Probability Theory
William Eckhardt
Springer, September 2012
Paperback, 94 pages, US$39.95
ISBN-13: 978-9400751392

Olle Häggström is professor of mathematics at Chalmers University of Technology in Gothenburg, Sweden. His email address is olleh@chalmers.se.

William Eckhardt’s recent book, Paradoxes in Probability Theory, has an appetizing format with just xi+79 pages. Some might say it’s a booklet rather than a book. Call it what you will, this thought-provoking text treats the following seven delightful problems or paradoxes.

The Doomsday Argument

Let a given human being’s birth rank be defined as the number of human beings born up to and including his or her birth. Consider the scenario that humanity has a bright future, with a population of billions thriving for tens of thousands of years or more. In such a scenario, our own birth ranks will be very small as compared to the “typical” human. Can we thus conclude that Doomsday is near?

The Betting Crowd

You are in a casino together with a number of other people. All of you bet on the roll of a pair of fair dice not giving double sixes; either all of you win or all of you lose, depending on the outcome of this single roll. You seem to have probability 35/36 of winning, but there’s a catch. The casino first invites one person to play this game. If the casino wins, then the game is played no more; otherwise, another ten people are invited to play. If the casino wins this time, play closes; otherwise, one hundred new players are invited. And so on, with a tenfold increase in the number of players in each round. This ensures that, when the whole thing is over, more than 90 percent of all players will have lost. Now what is your probability of winning?

The Simulation Argument

Assume that computer technology continues to develop to the extent that eventually we are able to run, at low cost, detailed simulations of our entire planet, down to the level of atoms or whatever is needed. Then future historians will likely run plenty of simulations of world history during those interesting transient times of the early twenty-first century. Hence the number of people living in the real physical world in 2012 will be vastly outnumbered by the number of people who believe themselves to do so but actually live in computer simulations run in the year 2350 or so. Can we thus conclude that we probably live in a computer simulation?

Newcomb’s Paradox

An incredibly intelligent donor, perhaps from outer space, has prepared two boxes for you: a big one and a small one. The small one (which might as well be transparent) contains $1,000. The big one contains either $1,000,000 or nothing. You have a choice between accepting both boxes or just the big box. It seems obvious that you should accept both boxes (because that gives you an extra $1,000 irrespective of the content of the big box), but here’s the catch: The donor has tried to predict whether you will pick one box or two boxes. If the prediction is that you pick just the big box, then it contains $1,000,000, whereas if the prediction is that you pick both boxes, then the big box is empty. The donor has exposed a large number of people before you to the same experiment and predicted correctly 90 percent of the time, regardless of whether subjects chose one box or two.¹ What should you do?

¹ Eckhardt forgets to mention this last condition (“regardless of…”), but it is clear that he intended it.

The Open Box Problem

This is the same as Newcomb’s Paradox except that you get to see the contents of the big box before deciding.
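The two figures quoted in the Betting Crowd formulation above (a 35/36 chance per roll, and more than 90 percent of all players losing) can both be checked with exact arithmetic. The following is a minimal sketch, not taken from the book or the review: the function names are hypothetical, and it only reproduces the arithmetic of the stated setup without deciding which probability a player should adopt.

```python
from fractions import Fraction

# Probability that one roll of two fair dice is NOT double sixes,
# i.e. the chance that the players in any single round win.
P_PLAYERS_WIN_ROUND = Fraction(35, 36)


def players_in_round(n: int) -> int:
    """Number of new players invited in round n (1, 10, 100, ...)."""
    return 10 ** (n - 1)


def loser_fraction(final_round: int) -> Fraction:
    """Fraction of all players who lost, if the casino first wins in `final_round`.

    Everyone invited in the final round loses; everyone in earlier rounds has won.
    """
    losers = players_in_round(final_round)
    winners = sum(players_in_round(n) for n in range(1, final_round))
    return Fraction(losers, losers + winners)


def prob_game_ends_in_round(n: int) -> Fraction:
    """Probability that the casino's first win (double sixes) comes in round n."""
    return P_PLAYERS_WIN_ROUND ** (n - 1) * (1 - P_PLAYERS_WIN_ROUND)


if __name__ == "__main__":
    # Whatever round the game stops in, the losers are more than 90 percent.
    for n in range(1, 6):
        print(n, loser_fraction(n), prob_game_ends_in_round(n))
```

For example, `loser_fraction(2)` is 10/11: the single first-round player won and the ten second-round players lost. The fraction stays above 9/10 for every stopping round, even though each individual roll favors the players with probability 35/36.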

The Hadron Collider Card Game

Physicists at CERN are looking for a hitherto undetected particle X.² A radical physical theory Y has been put forth³ that particle X can in principle be produced, except that “something in the future is trying by any means available to prevent the production of [particle X].” A way to test theory Y is as follows. Prepare and shuffle a deck with a million cards, including one ace of spades. Pick one card at random from the deck after having made international agreements to abandon the search for particle X provided the card picked turns out to be the ace of spades. If the ace of spades is picked, this can be seen as evidence in favor of theory Y. Does this make sense?

² In Eckhardt’s account, X is the Higgs boson, which unfortunately (for his problem formulation) has been detected since the time of writing.

³ It really has; see [NN].

The Two-Envelopes Problem

Two envelopes are prepared, one with a positive amount of money and the other with twice that amount. The envelopes are shuffled, and you get to pick one and open it. You may then decide whether you wish to keep that amount or switch to the other envelope. If the amount you observe is X, then the amount in the other envelope is either X/2 or 2X, with probability 1/2 each, for an expectation of \( \frac{X/2 + 2X}{2} = \frac{5X}{4} \), which is greater than X, so it seems that you should switch envelopes. But this is true regardless of the value of X, so it seems that you have incentive to switch even before you open the envelope. This, however, seems to clash with an obvious symmetry between the two well-shuffled envelopes. What is going on here?

Of these seven paradoxes, two are new, whereas the other five are known from the literature, such as the Simulation Argument, which was put forth by Bostrom [B] in 2003 and has been the topic of intense discussion in the philosophy literature ever since.⁴ The two new ones, the Betting Crowd and the Open Box Problem, were invented by Eckhardt, mainly as pedagogical vehicles to help one think more clearly about the other paradoxes.

⁴ Even I have found reason to discuss it in an earlier book review in the Notices [Hä].

Eckhardt has no ambition to provide complete coverage of the literature on the five previously known paradoxes. Rather, the task he sets himself is to resolve them, once and for all, and the review of previous studies that he does provide is mostly to set the stage for his own solutions. He claims to be successful in his task but realizes that not everyone will agree about that: in the introductory paragraph of his chapter on Newcomb’s Paradox, he writes that “there exist a variety of arguments both for and against one-boxing but, in keeping with the design of this book, I search for an incontrovertible argument. (Of course it will be controverted.)” The book is a pleasure to read, not so much for Eckhardt’s solutions (which, indeed, I find mostly controvertible) but for the stimulus it provides for thinking about the problems.

Eckhardt is a financial trader with a background in mathematical logic, a keen interest in philosophy, and a few academic publications in the subject prior to the present one. Hence it is not surprising that he may have a different view of what is meant by probability theory, as compared to an academic mathematician and probabilist such as I, who considers the title Paradoxes in Probability Theory to be a bit of a misnomer. To me, probability theory is the study of internal properties of given probability models (or classes of probability models) satisfying Kolmogorov’s famous axioms from 1933 [Ko], the focus being on calculating or estimating probabilities or expectations of various events or quantities in such models. In contrast, issues about how to choose a probability model suitable for a particular real-world situation (or for a particular philosophical thought experiment) are part of what we may call applied probability but not of probability theory proper. This is not to say that we probabilists shouldn’t engage in such modeling issues (we should!), only that when we do so we step outside the realms of probability theory.

In this strict sense of probability theory, all seven problems treated by Eckhardt fall outside of it. Take for instance the Two-Envelopes Problem, which to the untrained eye may seem to qualify as a probability problem. But it doesn’t for the following reason. Write Y and 2Y for the two amounts put in the envelopes. No probability distribution for Y is specified in the problem, whereas in order to determine whether you increase your expected reward by changing envelopes when you observe X = $100 (say), you need to know the distribution of Y or at least the ratio \( P(Y = 50)/P(Y = 100) \).

So a bit of modeling is needed. The first thing to note (as Eckhardt does) is that the problem formulation implicitly assumes that the symmetry \( P(X = Y) = P(X = 2Y) = \tfrac{1}{2} \) remains valid if we condition on X (i.e., looking in the envelope gives no clue about whether we have picked the larger or the smaller amount). This assumption leads to a contradiction: if \( P(Y = y) = q \) for some \( y > 0 \) and \( q > 0 \), then the assumption implies that \( P(Y = 2^k y) = q \) for all integers k, leading to an improper probability distribution whose total mass sums to ∞ (and it is easy to see that giving Y a continuous distribution doesn’t help). Hence the uninformativeness assumption must

be abandoned. Some of the paradoxicality can be retained in the following way. Fix \( r \in (0, 1) \) and give Y the following distribution:

\[ P(Y = 2^k) = (1 - r)\, r^k \quad \text{for } k = 0, 1, 2, \ldots \]

For \( r > \tfrac{1}{2} \), it will always make sense (in terms of expected amount received) to switch envelopes upon looking in one. Eckhardt moves quickly from the general problem formulation to analyzing this particular model. I agree with him that the behavior of the model is a bit surprising. Note, however, that \( r > \tfrac{1}{2} \) implies \( E[Y] = \infty \), so the paradox can be seen as just another instance of the familiar phenomenon that if I am about to receive a positive reward with infinite expected value, I will be disappointed no matter how much I get.

Consider next Newcomb’s Paradox. Here, Eckhardt advocates one-boxing, i.e., selecting the big box only. The usual argument against one-boxing is that since the choice has no causal influence on the content of the big box, one-boxing is sure to lose $1,000 compared to two-boxing no matter what the big box happens to contain. On the other hand, one-boxers and two-boxers alike seem to agree that, if the observed correlation between choice and content of the big box reflected a causal effect of the choice on the box content, then one-boxing would be the right choice. Eckhardt’s argument for one-boxing, in the absence of such causality, is an appeal to what he calls the Coherence Principle, which says that decision problems that can be put in outcome alignment should be played in the same way. Here outcome alignment is a particular case of what probabilists call a coupling [L]. Two decision problems with the same set of options to choose from are said to be outcome alignable if they can be constructed on the same probability space in such a way that any given choice yields the same outcome for the two problems. Eckhardt tweaks the original problem formulation NP to produce a causal variant \( \mathrm{NP}_c \), where the choice does influence the box content causally in such a way that a one-boxer gets the $1,000,000 with probability 0.9, and a two-boxer gets it with probability 0.1. He also stipulates the same probabilities for NP and notes that NP and \( \mathrm{NP}_c \) can be coupled into outcome alignment. Since everyone agrees that one-boxing is the right choice in \( \mathrm{NP}_c \), we get from the Coherence Principle that one-boxing is the right choice also in NP.

The trouble with Eckhardt’s solution, in my opinion, is that his stipulation of the probabilities in NP for getting the million dollars, given one-boxing or two-boxing, glosses over the central difficulty of Newcomb’s Paradox. Suppose I find myself facing the situation given in the problem formulation. If I accept that I have a 0.9 probability of getting the million in case of one-boxing and a 0.1 probability in case of two-boxing, then the decision to one-box is a no-brainer. But why should I accept that those conditional probabilities apply to me just because they arise as observed frequencies in a large population of other people? It seems that most people would resist such a conclusion and that there are (at least) two psychological reasons for this. One is our intuitive urge to believe in something called free will, which prevents even the most superior being from reliably predicting whether we will one-box or two-box.⁵ The other is our notorious inability to take into account base rates (population frequencies) in judging uncertain features of ourselves, including success rates of future tasks [Ka]. The core issue in Newcomb’s Paradox is whether we should simply overrule these cognitive biases and judge, based on past frequencies, our conditional probabilities of getting the $1,000,000 given one-boxing or two-boxing to be as stipulated by Eckhardt or if there are other, more compelling, rational arguments to think differently.

⁵ This intuitive urge is so strong that, in a situation like this, it tends to overrule a more intellectual insight into the problem of free will, such as my own understanding (following, e.g., Hofstadter [Ho] and Harris [Har]) of the intuitively desirable notion of free will as being simply incoherent.

The story is mostly the same with the other problems and paradoxes treated in this book. The real difficulty lies in translating the problem into a fully specified probability model. Once that is done, the analysis becomes more or less straightforward. My main criticism of Eckhardt’s book is that he tends to put too little emphasis on the first step (model specification) and too much on the second (model analysis). The few hours needed to read the book are nevertheless a worthwhile investment.

References

[B] N. Bostrom (2003), Are we living in a computer simulation? Philosophical Quarterly 53, 243–255.
[Hä] O. Häggström (2008), Book review: Irreligion, Notices of the American Mathematical Society 55, 789–791.
[Har] S. Harris (2012), Free Will, Free Press, New York.
[Ho] D. R. Hofstadter (2007), I Am a Strange Loop, Basic Books, New York.
[Ka] D. Kahneman (2011), Thinking, Fast and Slow, Farrar Straus Giroux, New York.
[Ko] A. N. Kolmogorov (1933), Grundbegriffe der Wahrscheinlichkeitsrechnung, Springer, Berlin.
[L] T. Lindvall (1992), Lectures on the Coupling Method, Wiley, New York.
[NN] H. B. Nielsen and M. Ninomiya (2008), Search for effect of influence from future in Large Hadron Collider, International Journal of Modern Physics A 23, 919–932.
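Once a model is fully specified, the two analyses discussed in the review reduce to short calculations, and it may help to see them written out. The sketch below is an illustration only, with hypothetical function names that appear in neither the book nor the review. It assumes the geometric model \( P(Y = 2^k) = (1 - r) r^k \) for the Two-Envelopes Problem, and for Newcomb’s Paradox it simply accepts the stipulated 0.9 and 0.1 probabilities, which is exactly the step the review calls into question.

```python
from fractions import Fraction


def prob_y(k: int, r: Fraction) -> Fraction:
    """P(Y = 2**k) = (1 - r) * r**k for k = 0, 1, 2, ..."""
    return (1 - r) * r ** k


def expected_other_given_x(k: int, r: Fraction) -> Fraction:
    """E[amount in the other envelope | observed X = 2**k], by Bayes' rule.

    The envelopes hold Y and 2Y, and each is picked with probability 1/2.
    """
    x = Fraction(2) ** k
    # Case 1: we hold the smaller amount (Y = x); the other envelope holds 2x.
    w_smaller = prob_y(k, r) / 2
    # Case 2: we hold the larger amount (Y = x/2); the other envelope holds x/2.
    # This case is impossible when x = 1, because Y is at least 1.
    w_larger = prob_y(k - 1, r) / 2 if k >= 1 else Fraction(0)
    return (w_smaller * 2 * x + w_larger * x / 2) / (w_smaller + w_larger)


def partial_mean_y(n_terms: int, r: Fraction) -> Fraction:
    """Sum of 2**k * P(Y = 2**k) over k < n_terms.

    These partial sums grow without bound in n_terms when r >= 1/2.
    """
    return sum((Fraction(2) ** k * prob_y(k, r) for k in range(n_terms)), Fraction(0))


def newcomb_expected_payoffs(p_correct: Fraction) -> tuple[Fraction, Fraction]:
    """Expected payoffs (one-box, two-box), ASSUMING the stipulated probabilities apply."""
    one_box = p_correct * 1_000_000
    two_box = 1_000 + (1 - p_correct) * 1_000_000
    return one_box, two_box


if __name__ == "__main__":
    r = Fraction(3, 4)
    for k in range(5):
        # With r > 1/2 the expected content of the other envelope exceeds x = 2**k.
        print(2 ** k, expected_other_given_x(k, r))
    print(newcomb_expected_payoffs(Fraction(9, 10)))
```

With r = 3/4 and an observed amount of 8, the expected content of the other envelope is 64/7, which is more than 8; with r = 1/2 it is exactly 8, and with r = 1/4 it is less. Under the stipulated Newcomb probabilities, one-boxing has expected payoff $900,000 against $101,000 for two-boxing, which is the sense in which the decision becomes a "no-brainer" once those probabilities are accepted.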

You might also like

- What Is Random?: Chance and Order in Mathematics and LifeFrom EverandWhat Is Random?: Chance and Order in Mathematics and LifeRating: 3.5 out of 5 stars3.5/5 (2)

- Two-Envelope Paradox. Two Indistinguishable Envelopes, 1 and 2, AreDocument21 pagesTwo-Envelope Paradox. Two Indistinguishable Envelopes, 1 and 2, Areimp.michele1341No ratings yet

- The Godelian Puzzle BookDocument3 pagesThe Godelian Puzzle BookJulia0% (1)

- Genius 2 Genius Manifest: A Word From The EditorDocument12 pagesGenius 2 Genius Manifest: A Word From The EditorPascalNo ratings yet

- God Created The IntegersDocument3 pagesGod Created The IntegersAnnie50% (2)

- History of ProbabilityDocument17 pagesHistory of ProbabilityonlypingNo ratings yet

- Paradoxes, Ancient Philosophy and ExistencialismDocument7 pagesParadoxes, Ancient Philosophy and ExistencialismAlice Ivanova OlivaNo ratings yet

- Duelling Idiots and Other Probability PuzzlersFrom EverandDuelling Idiots and Other Probability PuzzlersRating: 4.5 out of 5 stars4.5/5 (3)

- Maths Hacks 100 Clever Ways To Help You Understand and Remember The Most Important Theories (PDFDrive)Document236 pagesMaths Hacks 100 Clever Ways To Help You Understand and Remember The Most Important Theories (PDFDrive)NickOlNo ratings yet

- Models of the Mind: How Physics, Engineering and Mathematics Have Shaped Our Understanding of the BrainFrom EverandModels of the Mind: How Physics, Engineering and Mathematics Have Shaped Our Understanding of the BrainRating: 3.5 out of 5 stars3.5/5 (4)

- Virtual Interiorities: The Myth of Total VirtualityFrom EverandVirtual Interiorities: The Myth of Total VirtualityVahid VahdatNo ratings yet

- Zen0 G. Swijtink : D'Alembert and The Maturity of Chances+Document23 pagesZen0 G. Swijtink : D'Alembert and The Maturity of Chances+Manticora FabulosaNo ratings yet

- Riddles in Mathematics: A Book of ParadoxesFrom EverandRiddles in Mathematics: A Book of ParadoxesRating: 4 out of 5 stars4/5 (11)

- Probability and StatisticsDocument28 pagesProbability and StatisticsElmerNo ratings yet

- Jupyter Notebook ViewerDocument32 pagesJupyter Notebook ViewerThomas Amleth BottiniNo ratings yet

- PARADOXESDocument7 pagesPARADOXESAlice Ivanova OlivaNo ratings yet

- IESS Probability History ofDocument4 pagesIESS Probability History ofAdda Cletus WepariNo ratings yet

- In Pursuit of Zeta-3: The World's Most Mysterious Unsolved Math ProblemFrom EverandIn Pursuit of Zeta-3: The World's Most Mysterious Unsolved Math ProblemRating: 3 out of 5 stars3/5 (1)

- Digital Dice: Computational Solutions to Practical Probability ProblemsFrom EverandDigital Dice: Computational Solutions to Practical Probability ProblemsRating: 5 out of 5 stars5/5 (5)

- Games for Your Mind: The History and Future of Logic PuzzlesFrom EverandGames for Your Mind: The History and Future of Logic PuzzlesRating: 4 out of 5 stars4/5 (1)

- Introduction To Probability With Mathematica - Hastings Sec1.1Document6 pagesIntroduction To Probability With Mathematica - Hastings Sec1.1Δημητρης ΛουλουδηςNo ratings yet

- Virtual Interiorities: Senses of Place and SpaceFrom EverandVirtual Interiorities: Senses of Place and SpaceDave GottwaldNo ratings yet

- On The Origins of Certain Arguments in J.M. Keynes Theory of ProbabilityDocument7 pagesOn The Origins of Certain Arguments in J.M. Keynes Theory of Probabilitymaximilian.oberbauerNo ratings yet

- Puzzles and Paradoxes: Fascinating Excursions in Recreational MathematicsFrom EverandPuzzles and Paradoxes: Fascinating Excursions in Recreational MathematicsRating: 4 out of 5 stars4/5 (1)

- The Philosophy of Set Theory: An Historical Introduction to Cantor's ParadiseFrom EverandThe Philosophy of Set Theory: An Historical Introduction to Cantor's ParadiseRating: 3.5 out of 5 stars3.5/5 (2)

- Probability and Combinatorics - Olivia KucanDocument3 pagesProbability and Combinatorics - Olivia Kucanapi-221860675No ratings yet

- The Mystery of Prime NumbersDocument5 pagesThe Mystery of Prime NumbershjdghmghkfNo ratings yet

- Klerkx Curriculum AnalysisDocument10 pagesKlerkx Curriculum Analysisapi-253618062No ratings yet

- Nonplussed!: Mathematical Proof of Implausible IdeasFrom EverandNonplussed!: Mathematical Proof of Implausible IdeasRating: 4 out of 5 stars4/5 (8)

- Koyre - Liar PDFDocument20 pagesKoyre - Liar PDFDjepetoNo ratings yet

- Mathematics And The World's Most Famous Maths Problem: The Riemann HypothesisFrom EverandMathematics And The World's Most Famous Maths Problem: The Riemann HypothesisNo ratings yet

- The Seven Follies of Science [2nd ed.] A popular account of the most famous scientific impossibilities and the attempts which have been made to solve them.From EverandThe Seven Follies of Science [2nd ed.] A popular account of the most famous scientific impossibilities and the attempts which have been made to solve them.No ratings yet

- New Light Through Old Windows: Exploring Contemporary Science Through 12 Classic Science Fiction TalesFrom EverandNew Light Through Old Windows: Exploring Contemporary Science Through 12 Classic Science Fiction TalesNo ratings yet

- Virtual Interiorities: When Worlds CollideFrom EverandVirtual Interiorities: When Worlds CollideGregory Turner-RahmanNo ratings yet

- Enigmatic Terra Al-Jabr - EngDocument103 pagesEnigmatic Terra Al-Jabr - EngEnriqueRamosNo ratings yet

- DieterleDocument22 pagesDieterlerebe53No ratings yet

- The Primal CodeDocument17 pagesThe Primal CodeRobert West100% (1)

- Alexander Golovnev, Alexander S. Kulikov, Vladimir v. Podolskii, Alexander Shen - Discrete Mathematics For Computer Science-Leanpub - Com (2022) - 5Document56 pagesAlexander Golovnev, Alexander S. Kulikov, Vladimir v. Podolskii, Alexander Shen - Discrete Mathematics For Computer Science-Leanpub - Com (2022) - 5Chirag SutharNo ratings yet

- Mathematical Association of America Math HorizonsDocument3 pagesMathematical Association of America Math HorizonsFurkan Bilgekagan ErtugrulNo ratings yet

- Puzzles 1Document3 pagesPuzzles 1YogapaNo ratings yet

- Module 1 Discrete MathDocument56 pagesModule 1 Discrete MathKerwin TantiadoNo ratings yet

- Introduction of The Architecture of The ImaginationDocument21 pagesIntroduction of The Architecture of The ImaginationIván RodríguezNo ratings yet

- Doob, The Development of Rigor in Mathematical Probability (1900-1950) PDFDocument11 pagesDoob, The Development of Rigor in Mathematical Probability (1900-1950) PDFFardadPouranNo ratings yet

- Book Review: Anthropic Bias: Observation Selection Effects in Science and Philosophy. byDocument5 pagesBook Review: Anthropic Bias: Observation Selection Effects in Science and Philosophy. byvbaumhoffNo ratings yet

- Brandub Print-And-Play: Assembly InstructionsDocument5 pagesBrandub Print-And-Play: Assembly InstructionsVictor M GonzalezNo ratings yet

- Asalto Print and PlayDocument3 pagesAsalto Print and PlayEduardo CostaNo ratings yet

- Cyningstan: Traditional Board GamesDocument4 pagesCyningstan: Traditional Board GamesEduardo CostaNo ratings yet

- Cyningstan: Nine Men'S MorrisDocument3 pagesCyningstan: Nine Men'S MorrisEduardo CostaNo ratings yet

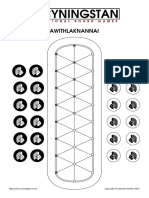

- Awithlaknannai Print PlayDocument2 pagesAwithlaknannai Print PlayEduardo CostaNo ratings yet

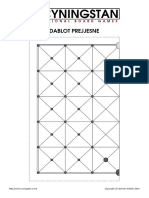

- Dablot Prejjesne Print PlayDocument4 pagesDablot Prejjesne Print PlayEduardo CostaNo ratings yet

- Cyningstan: Three Men'S MorrisDocument2 pagesCyningstan: Three Men'S MorrisEduardo CostaNo ratings yet

- Alquerque Print and PlayDocument2 pagesAlquerque Print and PlayEduardo CostaNo ratings yet

- WariDocument2 pagesWariWkrscribdNo ratings yet

- HorseshoeDocument2 pagesHorseshoeWkrscribdNo ratings yet

- Cyningstan: 5 & 6 Men'S MorrisDocument2 pagesCyningstan: 5 & 6 Men'S MorrisEduardo CostaNo ratings yet

- Fox and GeeseDocument2 pagesFox and GeeseWkrscribd100% (2)

- SenetDocument2 pagesSenetWkrscribdNo ratings yet

- TablutDocument2 pagesTablutWkrscribdNo ratings yet

- Mathematical Association of America Is Collaborating With JSTOR To Digitize, Preserve and Extend Access To The American Mathematical MonthlyDocument2 pagesMathematical Association of America Is Collaborating With JSTOR To Digitize, Preserve and Extend Access To The American Mathematical MonthlyEduardo CostaNo ratings yet

- AlquerqueDocument2 pagesAlquerqueDeniver VukelicNo ratings yet

- Power of A PointDocument142 pagesPower of A PointRoberto HernándezNo ratings yet

- 50 Great Maths WebsitesDocument11 pages50 Great Maths WebsitesSRIDHAR SUBRAMANIAM100% (1)

- Twenty Problems in ProbabilityDocument13 pagesTwenty Problems in ProbabilityGopi KrishnaNo ratings yet

- Nine Mens MorrisDocument2 pagesNine Mens MorrisLudwigStinsonNo ratings yet

- American Mathematical Monthly Volume 69 Issue 3 1962 (Doi 10.2307 - 2311065) Sondow, Jonathan Just, Erwin Schaumberger, Norman Manheimer, - E1478Document3 pagesAmerican Mathematical Monthly Volume 69 Issue 3 1962 (Doi 10.2307 - 2311065) Sondow, Jonathan Just, Erwin Schaumberger, Norman Manheimer, - E1478Eduardo CostaNo ratings yet

- Project IdeasDocument34 pagesProject Ideasimec_coordinator73530% (1)

- 50 Diophantine Equations Problems With SolutionsDocument6 pages50 Diophantine Equations Problems With Solutionsbvarici100% (2)

- 40 Functional EquationsDocument2 pages40 Functional EquationsToaster97No ratings yet

- Celtic Tiles 1Document1 pageCeltic Tiles 1Eduardo CostaNo ratings yet

- Pin The Bow Tie On The Skeleton Game TemplateDocument1 pagePin The Bow Tie On The Skeleton Game TemplateEduardo CostaNo ratings yet

- Celtic Counter: These Knots Are All Different. Count The Number of Loops in Each OneDocument76 pagesCeltic Counter: These Knots Are All Different. Count The Number of Loops in Each OneEduardo CostaNo ratings yet

- SolutionsDocument5 pagesSolutionsPhillipe El HageNo ratings yet

- Hoffman and Kunze PDFDocument137 pagesHoffman and Kunze PDFMarcus Vinicius Sousa SousaNo ratings yet

- Two Steps From Hell Full Track ListDocument13 pagesTwo Steps From Hell Full Track ListneijeskiNo ratings yet

- Krautkrämer Ultrasonic Transducers: For Flaw Detection and SizingDocument48 pagesKrautkrämer Ultrasonic Transducers: For Flaw Detection and SizingBahadır Tekin100% (1)

- Concrete Batching and MixingDocument8 pagesConcrete Batching and MixingIm ChinithNo ratings yet

- APQP TrainingDocument22 pagesAPQP TrainingSandeep Malik100% (1)

- 332-Article Text-1279-1-10-20170327Document24 pages332-Article Text-1279-1-10-20170327Krisdayanti MendrofaNo ratings yet

- 07 Indian WeddingDocument2 pages07 Indian WeddingNailah Al-FarafishahNo ratings yet

- Final Research Proposal-1Document17 pagesFinal Research Proposal-1saleem razaNo ratings yet

- Relative Plate Motion:: Question # Difference Between Relative Plate Motion and Absolute Plate Motion and ApparentDocument9 pagesRelative Plate Motion:: Question # Difference Between Relative Plate Motion and Absolute Plate Motion and ApparentGet TipsNo ratings yet

- B. Concept of The State - PeopleDocument2 pagesB. Concept of The State - PeopleLizanne GauranaNo ratings yet

- Cost ContingenciesDocument14 pagesCost ContingenciesbokocinoNo ratings yet

- Opinion - : How Progressives Lost The 'Woke' Narrative - and What They Can Do To Reclaim It From The Right-WingDocument4 pagesOpinion - : How Progressives Lost The 'Woke' Narrative - and What They Can Do To Reclaim It From The Right-WingsiesmannNo ratings yet

- IIT JEE Maths Mains 2000Document3 pagesIIT JEE Maths Mains 2000Ayush SharmaNo ratings yet

- Say. - .: Examining Contemporary: Tom Daley's Something I Want To Celebrity, Identity and SexualityDocument5 pagesSay. - .: Examining Contemporary: Tom Daley's Something I Want To Celebrity, Identity and SexualityKen Bruce-Barba TabuenaNo ratings yet

- Mendezona vs. OzamizDocument2 pagesMendezona vs. OzamizAlexis Von TeNo ratings yet

- Emasry@iugaza - Edu.ps: Islamic University of Gaza Statistics and Probability For Engineers ENCV 6310 InstructorDocument2 pagesEmasry@iugaza - Edu.ps: Islamic University of Gaza Statistics and Probability For Engineers ENCV 6310 InstructorMadhusudhana RaoNo ratings yet

- Orthogonal Curvilinear CoordinatesDocument16 pagesOrthogonal Curvilinear CoordinatesstriaukasNo ratings yet

- Moorish Architecture - KJDocument7 pagesMoorish Architecture - KJKairavi JaniNo ratings yet

- Krok1 EngDocument27 pagesKrok1 Engdeekshit dcNo ratings yet

- Alternative Forms of BusOrgDocument16 pagesAlternative Forms of BusOrgnatalie clyde matesNo ratings yet

- Tutorial-Midterm - CHEM 1020 General Chemistry - IB - AnsDocument71 pagesTutorial-Midterm - CHEM 1020 General Chemistry - IB - AnsWing Chi Rainbow TamNo ratings yet

- WaveDocument5 pagesWaveArijitSherlockMudiNo ratings yet

- Test Bank For Marketing 12th Edition LambDocument24 pagesTest Bank For Marketing 12th Edition Lambangelascottaxgeqwcnmy100% (39)

- Contoh Writing AdfelpsDocument1 pageContoh Writing Adfelpsmikael sunarwan100% (2)

- A Case Study On College Assurance PlanDocument10 pagesA Case Study On College Assurance PlanNikkaK.Ugsod50% (2)

- Question Bank of Financial Management - 2markDocument16 pagesQuestion Bank of Financial Management - 2marklakkuMSNo ratings yet

- Egg Osmosis PosterDocument2 pagesEgg Osmosis Posterapi-496477356No ratings yet

- Utah GFL Interview Answers TableDocument5 pagesUtah GFL Interview Answers TableKarsten WalkerNo ratings yet

- General ManagementDocument47 pagesGeneral ManagementRohit KerkarNo ratings yet

- Chapter-1-Operations and Management: There Are Four Main Reasons Why We Study OM 1)Document3 pagesChapter-1-Operations and Management: There Are Four Main Reasons Why We Study OM 1)Karthik SaiNo ratings yet

- Experimental Nucleonics PDFDocument1 pageExperimental Nucleonics PDFEricNo ratings yet

![The Seven Follies of Science [2nd ed.]

A popular account of the most famous scientific

impossibilities and the attempts which have been made to

solve them.](https://imgv2-1-f.scribdassets.com/img/word_document/187420329/149x198/356a2b3c08/1579720183?v=1)