Measuring the Cost Effectiveness of Confluent Cloud

White Paper

Overview

Introduction
Demonstrating cost effectiveness
Confluent’s approach
Customer example: Anonymized TCO
Key Takeaways and FAQs
Conclusion
About Confluent

2020 Confluent, Inc. | confluent.io


1. Demonstrating cost effectiveness

Introduction

At Confluent, we believe the event streaming platform will be the single most strategic data platform in every modern company. To help make this happen, we built Confluent Cloud, the industry’s only fully managed, cloud-native event streaming platform powered by Apache Kafka. To demonstrate the business value of this solution, and of event streaming in general, we offer a free-of-charge analysis of your costs of running Apache Kafka® and the potential ROI of using a fully managed service. Customers using Confluent Cloud have seen up to a 60% reduction in the TCO of Apache Kafka, and this white paper shows how.

Many vendors claim that their software reduces total cost of ownership (TCO) or improves return on investment (ROI). But how are these claims formulated? What’s the process of measuring them? What are the assumptions? How robust are the estimates?

This white paper answers these questions by outlining the business value assessment model we use and our approach, walking through a customer example, and sharing lessons learned along the way. Our intention is to be as open and transparent as possible about our discovery, the information we use, and the assumptions we make. We strive to reach consensus with our customers when calculating business value, because a wildly exaggerated and groundless TCO or ROI is pointless for everyone involved. Worse, it can set dangerously flawed expectations.

The intended audience for this white paper includes all the stakeholders involved in making decisions about implementing and operating Kafka in an organization. This broadly includes the following three groups:

• Hands-on technology teams – This group is often tasked with creating a business case and includes DevOps, data engineers, architects, and InfoSec.

• Business-tech teams – This group includes the economic buyer, such as enterprise architects, the VP of Engineering, BU heads, the CTO, and the CIO.

• Pure business teams – This group typically reviews business cases and ensures appropriate prioritization, and includes LOB heads, product owners, business decision-makers, buyers, procurement, and potentially the broader C-suite.

A focus on TCO, ROI, and overall cost effectiveness is particularly important in the current climate amid COVID-19. Global public safety measures and the economic downturn have simultaneously increased the importance of the digital side of the business and increased pressure on the budgets available to deliver those digital services.

Our model: TCO and ROI

A TCO exercise offers insight into business value by focusing on all the costs involved in developing and operating a solution.

The focus is on cost take-out across two or more options. This is slightly different from ROI, which models a financial return.

At Confluent, we use a mix of TCO and ROI. Why? A TCO model alone is often so heavily focused on cost take-out that it fails

to measure the true business value of a fully managed service.
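The difference between the two measures can be made concrete with a minimal sketch. It assumes undiscounted annual costs compared over the same horizon; the functions `tco` and `roi` and all figures are hypothetical illustrations, not a Confluent tool. TCO sums every cost of one option, and a TCO comparison reports the cost take-out between options; ROI instead relates a net return to the investment that produced it.

```python
from typing import Sequence


def tco(annual_costs: Sequence[float]) -> float:
    """Total cost of ownership: sum of all yearly costs of one option (undiscounted)."""
    return float(sum(annual_costs))


def roi(total_benefit: float, total_investment: float) -> float:
    """Return on investment as a ratio: (benefit - investment) / investment."""
    if total_investment <= 0:
        raise ValueError("investment must be positive")
    return (total_benefit - total_investment) / total_investment


# Hypothetical three-year costs for two options (USD per year).
baseline_tco = tco([100_000, 100_000, 100_000])  # e.g. a self-managed option
target_tco = tco([60_000, 60_000, 60_000])       # e.g. a fully managed option
print(baseline_tco - target_tco)                 # cost take-out: 120000.0

# ROI: a hypothetical project returning 500k over the horizon on a 200k investment.
print(roi(500_000, 200_000))                     # 1.5
```

A TCO comparison only captures the first number; benefits such as earlier revenue only show up once the return side is modeled as well.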

For example, a TCO might outline the reduced setup and operating costs of a fully managed service. Whilst this presents business value, many organizations choose a fully managed service for reasons beyond cost take-out alone. These can include accelerated time to market, increased developer velocity, greater business agility, and reduced overall risk. Perhaps most importantly, a fully managed service frees key resources from the operational burden of implementing and managing a solution.


Deploying Kafka presents challenges that aren’t the core problem most companies are trying to solve. It’s not where they

want their best people focused. With a fully managed service the customer can shift key resources to higher value tasks. This

refocus results in opportunity optimization and isn’t captured in TCO alone.

In order to capture the full business value of Confluent Cloud, we look at three value buckets:

• Increase speed to market: Deploy Kafka at scale within one week of starting with Confluent

• Reduce total cost of ownership: Operate more efficiently with lower infra cost, maintenance, and downtime risk

• Maximize return on investment: Deliver higher returns with your project by launching faster and reducing operational burden

1. Increase speed to market by up to 75%

Confluent Cloud:

• Increases developer productivity and agility

• Reduces the time it takes to bring something to market

2. Reduce total cost of ownership by up to 60%

Confluent Cloud helps you operate more efficiently with lower costs across infrastructure, maintenance, and risk.

This is broken down into two segments:

• Ongoing operating expenses – This includes infrastructure bills (compute, storage, and network), maintenance

and development costs, and other services you might consume to keep your Kafka cluster running

• Risk and indirect costs – This includes things like the cost of downtime, performance degradations, and security risks

3. Maximize return on investment

The above categories add up to a higher return on investment for your projects, especially when you consider the following:

• Reducing the operational burden allows engineers to focus on higher-value work related to the business use case.

• The value of the business use case that the Kafka project underpins.

• Confluent Cloud helps avoid the pain and complexity of integrating your growing engineering environment by serving as the universal streaming platform that democratizes data access across different engineering teams.

2. Our approach

Our approach to measuring business value includes a discovery exercise in which we ask questions around each of the value

buckets above. For example:

Speed to market

• How long would it take to develop the self-managed platform (including hiring and ramping up resources)?

• What is the financial benefit of getting to market sooner?

Total cost of ownership

• How many developers and operators are required?

• What is the fully loaded cost of a developer or operator?

• What is the estimated effort involved, including ongoing bug fixes, improvements, feature enhancements, scaling up and down, etc.?

• What are the planned infrastructure costs (compute, storage, and network)?


• What are the planned management costs such as systems integrators, training, or other services?

• What are the indirect costs such as downtime, performance degradations, and security risks?

Return on investment

• What are the details around the business use case which the Kafka project underpins?
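The TCO questions above translate naturally into a small set of inputs. The sketch below is a hypothetical structure, not a Confluent template: the `DiscoveryInputs` name, its fields, and the example figures are assumptions chosen only for illustration. It shows how headcount and fully loaded cost combine with infrastructure and third-party spend into one annual figure.

```python
from dataclasses import dataclass


@dataclass
class DiscoveryInputs:
    """Hypothetical answers to the TCO discovery questions (annual USD)."""
    engineer_ftes: float              # developers/operators working on Kafka
    fully_loaded_cost_per_fte: float
    compute: float
    storage: float
    network: float
    support_and_services: float       # systems integrators, training, support plans

    def annual_operating_cost(self) -> float:
        # Indirect/risk costs (downtime, security) are deliberately left out
        # here; they are usually modeled separately, if at all.
        people = self.engineer_ftes * self.fully_loaded_cost_per_fte
        infrastructure = self.compute + self.storage + self.network
        return people + infrastructure + self.support_and_services


# Illustrative values only; real figures come from, or are ratified by, the customer.
inputs = DiscoveryInputs(
    engineer_ftes=4,
    fully_loaded_cost_per_fte=150_000,
    compute=120_000,
    storage=40_000,
    network=20_000,
    support_and_services=50_000,
)
print(inputs.annual_operating_cost())  # 830000
```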

We often get asked to list discovery questions in advance, but this approach can skirt key value areas. While many details

above are straightforward, some areas are more nebulous, or have an indirect line to value. Calculating value requires

discussion and sometimes debate. Good discovery is a skill. It requires being inquisitive, asking the right questions, drilling into

relevant areas, backing out of others, and gaining overall consensus.

In our approach we aim to be as empirical as possible. In a sense, comparing Confluent Cloud, a fully managed service, with

a self-managed service or other option is similar to a scientific thought experiment, one that is imagined as a sequence of

events, then constructed and explained through narrative form.

1. The self-managed (baseline) represents the “control group.”

2. The target state (Confluent Cloud) represents the “experimental group,” which includes the “independent variable” – Confluent Cloud. We want to compare the two scenarios (baseline versus target) like-for-like, except for that single variable.

3. We assess value as the difference between the baseline and target state in terms of the three value buckets listed above

– these are our hypotheses.

4. We also assess soft, or intangible, benefits – i.e., elements of value which can be difficult to quantify. We also model

various scenarios, sensitivities, and risks which might impact the overall business case.

5. Finally, we aim to use proof points to support the value assessment assumptions and estimates. In scientific terms,

we aim to corroborate our findings with further evidence.
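Steps 1 to 3 amount to a like-for-like comparison, sketched below under stated assumptions: the cost categories and figures are hypothetical, and the `compare` helper is illustrative only. It refuses to compare scenarios whose categories differ (the like-for-like requirement) and reports the value in each category as baseline minus target.

```python
from typing import Dict


def compare(baseline: Dict[str, float], target: Dict[str, float]) -> Dict[str, float]:
    """Return baseline - target per category; both scenarios must be like-for-like."""
    if baseline.keys() != target.keys():
        mismatched = sorted(set(baseline) ^ set(target))
        raise ValueError(f"scenarios are not like-for-like; mismatched categories: {mismatched}")
    return {category: baseline[category] - target[category] for category in baseline}


# Hypothetical annual figures (USD). A positive difference favors the target state;
# a negative one is a new cost the target introduces.
baseline = {"infrastructure": 300_000, "operations": 500_000, "managed_service_spend": 0}
target = {"infrastructure": 0, "operations": 150_000, "managed_service_spend": 250_000}

differences = compare(baseline, target)
print(differences)
print(sum(differences.values()))
```

Keeping new costs (such as the managed-service subscription) as explicit negative differences makes it harder to overstate the savings.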

Of course, our overall approach cannot be fully scientific. This is an exercise in forecasting, which relies on assumptions and

estimates. There will be many potentially confounding factors outside of our control in real-world scenarios. We’re using

inductive, not deductive logic, which means we need to accept a business case for what it is – a bit of guesswork, until we

can prove the case post-implementation.

Because of this, we insist that the customer own the business case, and we establish this ground rule early in any engagement. We can help build and execute the model and provide guidance and advice, but ultimately all cost categories, estimates, and assumptions should be provided or ratified by the customer.

In the next section, we provide a real-world example of how this plays out.


3. Example of a cost-effectiveness assessment

This section walks through an example exercise to measure the cost effectiveness of Confluent Cloud. We start by looking at

the increase in speed to market.


Increase speed to market

Reducing the time it takes to bring something to market. With self-managed Kafka, you need to do the manual sizing,

provisioning, expansion, and maintenance of a Kafka cluster. With Confluent Cloud, we do all the work for you. Clusters

are provisioned instantly and maintenance is seamlessly managed for you, and you can start streaming data the day you

sign up.

Increasing developer productivity and agility. With a fully managed service, you don’t have to spend 6-9 months hiring people who know Kafka just to manage infrastructure, or bog down your developers with Kafka maintenance. As a result, you avoid the time and expense of hiring and ramping up new people, and the slowdowns caused by moving developers off critical projects. Of course, the project doesn’t end when the product launches. There will always be ongoing bug fixes, improvements, and feature enhancements, which typically require developers to invest more time in building new tools, new integrations, and so on. Confluent Cloud offers a suite of fully managed tools and connectors, so your best people remain focused on the critical projects and apps that drive competitive differentiation and revenue, not on maintaining Kafka.

Below is a typical view of how a new Kafka project timeline looks.

Traditional Kafka development

Project kickoff → 6-9 months to hire Kafka resources → 3 months to ramp up → 9-12 months to build the production-grade Kafka platform and develop the application

Go to market in 2 years

With Confluent Cloud

Project kickoff → start in 1 week → 3-6 months to focus on app development, not managing Kafka → launch your application in months and grow your business

Go to market in 6 months
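Adding up the phase durations in the traditional timeline shows where the two-year figure comes from. This back-of-the-envelope sketch uses only the month ranges stated in the timeline; the phase names are paraphrased.

```python
# (min_months, max_months) per phase, taken from the traditional Kafka timeline.
traditional_phases = {
    "hire Kafka resources": (6, 9),
    "ramp up": (3, 3),
    "build platform and develop application": (9, 12),
}

shortest = sum(low for low, _ in traditional_phases.values())
longest = sum(high for _, high in traditional_phases.values())
print(f"Traditional: {shortest}-{longest} months")  # Traditional: 18-24 months
```

The upper end of the 18-24 month range matches the two-year go-to-market estimate.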


With Confluent Cloud, the two-year project can potentially be reduced to six months. You don’t need to hire a full team,

and you can get your Kafka clusters up and running in a matter of weeks. You can then focus all your energy on building applications and delivering value from your project faster.

The following are real-world customer use cases:

• Next-gen cybersecurity company built their massive scale (GBps+) threat intelligence app in six months versus two years.

A 75% reduction.

• Leading financial institution launched their real-time market data service in three months versus one year with

alternatives. A 75% reduction.

• European web analytics company launched their machine learning application in one week. A 50% reduction.
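The percentage reductions quoted above follow from reduction = 1 − (new duration / old duration). The minimal sketch below reproduces the first two examples; the third example does not state its baseline duration, so it is not reproduced. The helper name is hypothetical.

```python
def time_to_market_reduction(old: float, new: float) -> float:
    """Fractional reduction in time to market; both durations in the same unit."""
    if old <= 0:
        raise ValueError("baseline duration must be positive")
    return 1 - new / old


print(time_to_market_reduction(old=24, new=6))  # two years vs six months: 0.75
print(time_to_market_reduction(old=12, new=3))  # one year vs three months: 0.75
```

A 75% reduction in elapsed time means delivering in a quarter of the original time.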

The next section focuses on TCO.


We first try to estimate the cost breakdown of a customer’s operating expenses. This typically includes three main components:

1. Infrastructure – this can be on premises or cloud provider bills.

2. Operational costs – in the form of full-time equivalent (FTE), including engineers maintaining and building out platform

capabilities for Kafka, e.g., SREs and DevOps.

3. Support and services – this includes third-party spend to cover things like professional services, training, and support plans, etc.

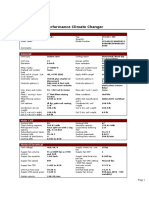

Annual Operating Costs

$ 2,000,000

$ 240,000 $ 1,875,645

$ 950,000 $-

$ 1,500,000

$ 1,000,000

$ 400,950

$ 500,000

$ 193,134

$ 73,561

$-

Inf - Inf - Inf - Operational Outage Cots Support Subtotal

Compute Storage Network Costs (not counted) Cost (CP)

We then look at cost take-out opportunities and ratify these with the customer.
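The chart's subtotal can be checked by simple addition. This sketch uses the self-managed figures from the chart; the assignment of the three infrastructure values to compute, storage, and network follows the chart's bar order and is an assumption, but the totals do not depend on it. Outage costs are excluded, as in the chart.

```python
# Annual self-managed costs from the example chart (USD); outage costs are not counted.
costs = {
    "infrastructure_compute": 400_950,
    "infrastructure_storage": 193_134,
    "infrastructure_network": 73_561,
    "operations": 950_000,
    "support": 240_000,
}

infrastructure = sum(v for k, v in costs.items() if k.startswith("infrastructure_"))
total = sum(costs.values())
print(infrastructure)  # 667645
print(total)           # 1857645
```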


Comparing annual operating costs: Self-Managed vs. Confluent Cloud

Self-Managed: infrastructure $667,645; operations $950,000; support & services $240,000; total $1,857,645

Confluent Cloud: Confluent spend $480,000; operations $200,000; infrastructure $0; support & services $0; total $680,000

In this example, Confluent Cloud completely removes the infrastructure and the support and services costs of the self-managed solution, and reduces operations costs by more than 60%. The overall savings amount to $1,177,645, or 63%, over one year.

When we model the above savings over three years, we get $3.5M+ (63%) in savings.
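The headline savings can be reproduced from the annual figures. In this sketch, the split of the $680,000 Confluent Cloud total into $480,000 of Confluent spend and $200,000 of remaining operations costs is read from the chart and should be treated as an assumption; only the total affects the result. The sketch also assumes flat costs in every year and no discounting.

```python
self_managed_annual = 667_645 + 950_000 + 240_000  # infrastructure + operations + support
confluent_cloud_annual = 480_000 + 200_000         # Confluent spend + remaining operations

annual_savings = self_managed_annual - confluent_cloud_annual
savings_pct = annual_savings / self_managed_annual
three_year_savings = 3 * annual_savings            # flat annual costs, no discounting

print(annual_savings)             # 1177645
print(round(savings_pct * 100))   # 63
print(three_year_savings)         # 3532935
```

The three-year figure differs by one dollar from the chart's $3,532,936, presumably because the underlying model carries fractional amounts that the chart rounds.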

Cumulative operating costs over three years

Year 1: Self-Managed TCO $1,857,645; Confluent Cloud TCO $680,000; cumulative savings $1,177,645

Year 2: Self-Managed TCO $3,715,291; Confluent Cloud TCO $1,360,000; cumulative savings $2,355,291

Year 3: Self-Managed TCO $5,572,936; Confluent Cloud TCO $2,040,000; cumulative savings $3,532,936

In this section we also look at risk costs, as identified by the following three categories:

1. Downtime – It takes significant investment and expertise to maintain a reliable platform with high availability. Confluent

Cloud comes with a 99.5% SLA for Basic clusters and a 99.95% uptime SLA for Standard and Dedicated clusters built in,

with no disruptions for upgrades and planned maintenance.


2. Performance degradation – If your organization experiences any downtime, it may result in significant performance

degradation, which can lead to prolonged periods of lost productivity. Confluent’s 24/7 expert-led support helps prevent

issues and enables fast recovery.

3. Security infringement – Your data is obviously important. It can be costly and risky to manage it all by yourself. Confluent

Cloud comes with enterprise-grade security and compliance to make sure your data is always safe.

The impact of risks, such as downtime, performance degradation, and security infringements, should not be understated.

Various studies have estimated that an hour of downtime costs an organization $100K on average. And a recent study by IBM

found that the average data breach costs an organization $3 million to $4 million, including detection and resolution costs, fines and reparations, and reputational harm to the business.

We can model these risk costs; however, many organizations choose not to quantify them directly. In general, we take

direction from the customer in terms of what they’re comfortable modeling.
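An uptime SLA bounds downtime rather than predicting it, but it gives a starting point for modeling downtime risk. The minimal sketch below converts an uptime percentage into the maximum downtime it permits and values that at a per-hour cost. It assumes the SLA is measured over a full year (real SLAs are often measured monthly) and uses the $100K-per-hour average cited above purely as a placeholder; the helper name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; ignores leap years


def max_downtime_hours(sla_uptime: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Largest downtime (in hours) that still meets an uptime SLA over the period."""
    if not 0 < sla_uptime <= 1:
        raise ValueError("sla_uptime must be a fraction, e.g. 0.9995 for 99.95%")
    return (1 - sla_uptime) * period_hours


# Hypothetical cost of one hour of downtime; substitute the customer's own estimate.
COST_PER_HOUR = 100_000

for tier, sla in [("99.5% SLA", 0.995), ("99.95% SLA", 0.9995)]:
    hours = max_downtime_hours(sla)
    exposure = hours * COST_PER_HOUR
    print(f"{tier}: up to {hours:.2f} h/year of downtime, worst-case cost ${exposure:,.0f}")
```

A 99.5% SLA permits up to 43.8 hours of downtime per year, while 99.95% permits 4.38 hours, so the choice of tier changes the worst-case exposure by a factor of ten.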

This is also the case when we review the overall ROI. We work with the customer to model business value or cost

effectiveness for their organization.

4. Key takeaways and questions asked

When undertaking a business value assessment we are often asked a common series of questions, so we’ve listed and

answered them below.

Value our customers see from Confluent Cloud

Moving to a fully managed Apache Kafka deployment for event streaming provides substantial value for any organization. Our customers have seen up to a 60% reduction in the TCO of Apache Kafka and up to a 75% reduction in time to market for their apps. The resulting cost savings and operational efficiencies can save a business millions of dollars and countless FTE hours.

When should we complete the analysis?

The obvious time to complete a business case assessment is prior to starting a project. Further, a business case analysis is

often required to justify budget allocation upfront.

In addition, there is a strong argument to continue the business case exercise in the form of ongoing benefits realization, or

to complete a “sanity check” part way through a program. Studies by McKinsey, Everest Group, and IDC suggest three out of

four digital transformation projects fail to deliver on their stated business value. By incrementally assessing value and costs, a

project can pause, or pivot, if it is not hitting business goals.

As a vendor that mostly relies on a subscription model, we encourage ongoing scrutiny of costs and value. Why? It makes

good business sense. Gartner found that nearly two-thirds of tech buyers would purchase more from existing providers if

they see value from their investments being clearly demonstrated.

How long does it take to complete a business case evaluation?

It depends. We can complete a high-level TCO exercise in about an hour. Whether or not you are interested in our product,

just understanding your own cost structure is a useful exercise and we can provide a number of cost-saving tips during

the process. Equally, we can go through a process that takes 2-3 hours, 2-3 days, or 2-3 weeks, depending on the level of detail

required.


What information is required?

The information required varies, but section two of this paper lists example questions. We collect as much information as possible about the case we’re assessing, plus any variables we think will be affected.

For the TCO, at a minimum, we require information on development cycles and timelines, infrastructure costs (compute, storage, and network), developer FTEs and DevOps or operations FTEs (number and fully loaded costs), and support and services details. Additional information may include the positive impact of business agility and the negative impact (associated costs) of security infringements, performance degradation, and downtime.

Modeling a range and adjusting numbers

Even accurate TCO calculations forecast the future and are therefore predictions at best. Any credible ROI calculation should include a range of outcomes with lower and upper bounds (a Monte Carlo simulation is one common way to generate such a range). We always

try to model a range with a conservative estimate in the middle.
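One way to produce such a range is a Monte Carlo simulation: sample each uncertain input many times and read percentiles off the resulting distribution of savings. The sketch below is illustrative only; the triangular distributions and their bounds are assumptions placed around the example’s point estimates, not figures from a real engagement, and the function name is hypothetical.

```python
import random
import statistics
from typing import List


def simulate_annual_savings(trials: int = 10_000, seed: int = 42) -> List[float]:
    """Monte Carlo sketch: sample uncertain annual costs and return simulated savings."""
    rng = random.Random(seed)  # seeded so results are reproducible
    results = []
    for _ in range(trials):
        # Hypothetical (low, high, most likely) ranges in USD around the example's estimates.
        infrastructure = rng.triangular(550_000, 800_000, 667_645)
        operations = rng.triangular(800_000, 1_100_000, 950_000)
        support = 240_000  # treated as a known contract value
        confluent_spend = rng.triangular(420_000, 560_000, 480_000)
        remaining_operations = rng.triangular(150_000, 300_000, 200_000)

        self_managed = infrastructure + operations + support
        fully_managed = confluent_spend + remaining_operations
        results.append(self_managed - fully_managed)
    return results


savings = simulate_annual_savings()
deciles = statistics.quantiles(savings, n=10)  # nine cut points: P10, P20, ..., P90
p10, p50, p90 = deciles[0], deciles[4], deciles[8]
print(f"P10 ${p10:,.0f} | P50 ${p50:,.0f} | P90 ${p90:,.0f}")
```

The P10 and P90 values give the lower and upper bounds of the range, and P50 marks its middle.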

In terms of accuracy, cash flows can be “NPV’d,” which means discounted to “net present value.” This adjusts the dollar

numbers to compare over time (i.e., cash today is worth more than cash tomorrow). NPV adjustments are more applicable to five-year business cases than to three-year business cases.

Since we mostly run three-year models, and accept that our estimates are often accurate only to within about 10%, we tend to simplify our models to

exclude NPV adjustments. While we acknowledge this approach – and sometimes use it for financial services customers – we

mostly avoid it to keep things simple.
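The effect of discounting on the three-year example can be checked with a short calculation. This sketch assumes savings arrive at the end of each year and uses hypothetical discount rates; at a 0% rate, the result equals the undiscounted three-year total.

```python
from typing import Sequence


def npv(cash_flows: Sequence[float], rate: float) -> float:
    """Net present value of end-of-year cash flows; cash_flows[0] arrives at the end of year 1."""
    return sum(cf / (1 + rate) ** year for year, cf in enumerate(cash_flows, start=1))


annual_savings = [1_177_645] * 3  # undiscounted annual savings from the example
for rate in (0.0, 0.05, 0.08):   # hypothetical discount rates
    print(f"rate {rate:.0%}: ${npv(annual_savings, rate):,.0f}")
```

Comparing the discounted totals with the undiscounted one shows how much precision an NPV adjustment would add relative to the model's overall margin of error.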

We also acknowledge that customer needs vary. We tend to weight a business case according to what’s important to a customer. For example, some customers prioritize cost savings over risk costs.

Credibility

We aim to maximize the credibility of the business case in the following three ways:

1. Being conservative, aligning with a theme of under-promising and over-delivering.

2. Acknowledging factors that cause uncertainty.

3. Clearly stating and ratifying assumptions and estimates, with the option to easily adjust these at any time in the process.

Initially, customers tend to be shy about sharing actual numbers. We recognize that we first need to earn their trust and

sometimes start by using placeholder numbers (estimates), in an effort to illustrate what the business case might look like. If

the customer likes the insight we provide, they’re often more inclined to share actual figures.

Charting the projections and using a narrative

A business case is generally an exercise in persuading someone that an investment is justified. And it’s been shown that people tend to be more convinced when a story is told using visuals such as graphics and images. For this reason, we tend to use charts to illustrate one-year and three-year benefits.

In addition to charts, a story requires a good narrative, which helps to explain the overall value proposition when discussing

and presenting the business case – especially to a nontechnical audience. As illustrated in the image below from

a Forbes article on data storytelling:

• Visuals (charts) help engage people

• Data helps convince people

• Narratives help take people along the journey


[Figure: Data storytelling. Narrative and data together explain; visuals and data together enlighten; narrative and visuals together engage.]

We know decisions are often based on emotion as well as logic. So, emotion also needs to be factored into the narrative.

Value can be intangible or indirect

Separating and quantifying value as a standalone TCO or ROI number has its limitations. To use an analogy for direct value,

it is a little like trying to place value on the foundation of a house, separately from the house. It doesn’t make total sense.

The foundation is valuable, of course. You can estimate its cost. But does it have a separate value to the overall house? How

valuable is the house without its foundation? We believe as businesses become more software-defined, their technology and

data platform foundations become fundamental, just like a foundation is to the house.

An example of indirect value leans on the analogy of paying for insurance. Much like insurance, when you invest in security,

you hope never to actually need it. We can clearly articulate the costs associated with security, but what is the value?

Is it cost effective? We only really know if something goes wrong. Otherwise, how can we quantify the business value

of a security infringement not happening?

The value of quantifying value

People assume the reason for completing a TCO or ROI exercise is to get to the output, the end result: TCO savings of 75%, say, or a 3x ROI. But this is only half the story. The real value comes from the process of analysis and collaboration during the exercise.

The process also includes completing discovery and agreeing on the assumptions and estimates. The collaboration includes communicating and sharing information, through which the vendor comes to understand the customer’s challenges and opportunities, and the customer comes to fully understand the vendor’s value proposition.

Helping our customers complete ROI and TCO models and demonstrating how we deliver business value helps our customers

make more informed decisions and strengthens our long-term partnership. If you’d like to learn more or discuss our approach

to business value and event streaming assessments, please contact bvc@confluent.io or visit http://cnfl.io/kafka-tco.

About Confluent

Confluent, founded by the original creators of Apache Kafka®, pioneered the enterprise-ready event streaming platform.

With Confluent, organizations benefit from the first event streaming platform built for the enterprise with the ease of

use, scalability, security and flexibility required by the most discerning global companies to run their business in real time.

Companies leading their respective industries have realized success with this new platform paradigm to transform their

architectures to streaming from batch processing, spanning on-premises and multi-cloud environments. Confluent is

headquartered in Mountain View and London with offices globally. To learn more, please visit www.confluent.io. Download

Confluent Platform and Confluent Cloud at:

www.confluent.io/download
