Generated

Uploaded by hemanth kumar

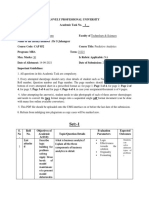

Explain in detail:

SST

SSR

SSE

Ans) SST

The sum of squares total, denoted SST, is the sum of the squared differences between the observed dependent variable and its mean. You can think of this as the dispersion of the observed variables around the mean, much like the variance in descriptive statistics.

The total SS tells you how much variation there is in the dependent variable. Total SS = $\sum_i (Y_i - \bar{Y})^2$, where $\bar{Y}$ is the mean of Y. Use this formula to find the actual number that represents a sum of squares. A diagram (like the regression line above) is optional and can supply a visual representation of what you're calculating.

It is a measure of the total variability of the dataset.
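As a minimal sketch of the formula above, the snippet below computes SST for a small set of made-up observations. The function name `total_sum_of_squares` and the data are illustrative assumptions, not part of the original notes. It also checks the variance analogy: dividing SST by n - 1 gives the sample variance.

```python
import statistics


def total_sum_of_squares(y):
    """Sum of squared differences between each observation and the mean."""
    mean_y = sum(y) / len(y)
    return sum((yi - mean_y) ** 2 for yi in y)


# Made-up observations of the dependent variable (illustration only).
y = [1.0, 2.0, 4.0, 5.0]

sst = total_sum_of_squares(y)            # mean is 3.0, so 4 + 1 + 1 + 4 = 10.0
sample_variance = sst / (len(y) - 1)     # SST divided by n - 1

print("SST:", sst)
print("SST / (n - 1):", sample_variance)
print("statistics.variance:", statistics.variance(y))
```

The last two printed values should agree up to floating-point rounding, which is why SST can be read as an unscaled variance.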

SSR

The second term is the sum of squares due to regression, or SSR. It is the sum of the squared differences between the predicted value and the mean of the dependent variable. Think of it as a measure that describes how well our line fits the data.

If this value of SSR is equal to the sum of squares total, it means our regression model captures all the observed variability and is perfect. Once again, we have to mention that another common notation is ESS, or explained sum of squares.

SSR = $\sum_i (\hat{Y}_i - \bar{Y})^2$ = SST - SSE, where $\hat{Y}_i$ is the predicted value and $\bar{Y}$ is the mean of Y. The regression sum of squares is interpreted [...]
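The following sketch illustrates SSR for a simple one-predictor least-squares line. The helper names (`fit_line`, `regression_sum_of_squares`, `total_sum_of_squares`) and both datasets are assumptions made up for illustration. The second dataset lies exactly on a line, so it shows the "perfect model" case in which SSR equals SST.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def total_sum_of_squares(y):
    mean_y = sum(y) / len(y)
    return sum((yi - mean_y) ** 2 for yi in y)


def regression_sum_of_squares(x, y):
    """Sum of squared differences between predictions and the mean of y."""
    slope, intercept = fit_line(x, y)
    mean_y = sum(y) / len(y)
    return sum((slope * xi + intercept - mean_y) ** 2 for xi in x)


# Made-up data (illustration only).
x = [1.0, 2.0, 3.0, 4.0]
y_noisy = [1.0, 2.0, 4.0, 5.0]   # points scattered around a line
y_exact = [3.0, 5.0, 7.0, 9.0]   # exactly y = 2x + 1

ssr_noisy = regression_sum_of_squares(x, y_noisy)
sst_noisy = total_sum_of_squares(y_noisy)
ssr_exact = regression_sum_of_squares(x, y_exact)
sst_exact = total_sum_of_squares(y_exact)

print("noisy data: SSR =", ssr_noisy, "SST =", sst_noisy)   # SSR is below SST
print("exact line: SSR =", ssr_exact, "SST =", sst_exact)   # SSR matches SST
```

For the noisy data the ratio SSR / SST is about 0.98, meaning the line accounts for most, but not all, of the observed variability.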

The error sum of squares is obtained by first computing the mean lifetime of each battery type. For each battery of a specified type, the mean is subtracted from each individual battery's lifetime and then squared. The sum of these squared terms for all battery types equals the SSE. SSE is a measure of sampling error.
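The battery procedure described above can be sketched as follows. The lifetimes, the type labels "A" and "B", and the function name `error_sum_of_squares` are hypothetical values chosen for illustration. The source does not give the actual data.

```python
def error_sum_of_squares(groups):
    """Within-group SSE: squared deviations from each group's own mean."""
    sse = 0.0
    for lifetimes in groups.values():
        group_mean = sum(lifetimes) / len(lifetimes)
        sse += sum((value - group_mean) ** 2 for value in lifetimes)
    return sse


# Hypothetical battery lifetimes per type (illustration only).
battery_lifetimes = {
    "A": [10.0, 12.0, 14.0],   # mean 12, squared deviations 4 + 0 + 4
    "B": [20.0, 22.0, 24.0],   # mean 22, squared deviations 4 + 0 + 4
}

sse_batteries = error_sum_of_squares(battery_lifetimes)
print("SSE:", sse_batteries)   # 16.0
```

Each deviation is measured from the mean of the battery's own type, not from the overall mean, so the large gap between the two types does not contribute to SSE.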

The last term is the sum of squares error, or SSE. The error is the difference between the observed value and the predicted value. We usually want to minimize the error. The smaller the error, the better the estimation power of the regression. Finally, I should add that it is also known as the residual sum of squares.
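A short sketch, again with made-up data and hypothetical helper names (`fit_line`, `sse_for_line`), shows the regression SSE, checks that SST = SSR + SSE holds for a least-squares line with an intercept, and compares the fitted line against a deliberately shifted one to illustrate that the least-squares fit has the smaller error.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def sse_for_line(x, y, slope, intercept):
    """Sum of squared differences between observed and predicted values."""
    return sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))


# Made-up data (illustration only).
x = [1.0, 2.0, 3.0, 4.0]
y = [1.0, 2.0, 4.0, 5.0]

slope, intercept = fit_line(x, y)
mean_y = sum(y) / len(y)

sst = sum((yi - mean_y) ** 2 for yi in y)
ssr = sum((slope * xi + intercept - mean_y) ** 2 for xi in x)
sse = sse_for_line(x, y, slope, intercept)

# The same slope with the intercept moved by 0.5: a worse line on purpose.
sse_shifted = sse_for_line(x, y, slope, intercept + 0.5)

print("SST:", sst, "SSR + SSE:", ssr + sse)
print("SSE (least squares):", sse, "SSE (shifted line):", sse_shifted)
```

The two values on the first printed line should agree up to rounding, and the shifted line should report a larger SSE than the fitted one.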
